Using Regular Expressions

Learning to use regular expressions

import math
import re

import requests
from bs4 import BeautifulSoup

# Fetch the campus-news list page that the later steps work with
newsurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
res = requests.get(newsurl)
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

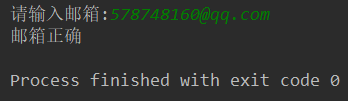

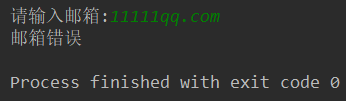

1. Use a regular expression to check whether an email address was entered correctly.

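# Read an address and test it against a simple email pattern
email = input("Enter an email address: ")
result = re.match(r'^(\w)+(\.\w+)*@(\w)+((\.\w{2,3}){1,3})$', email)
if result is not None:
    print("Valid email address")
else:
    print("Invalid email address")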
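The check above can also be packaged as a reusable function. The sketch below is minimal, and `is_valid_email` is a hypothetical helper name. It precompiles the same pattern and uses `re.fullmatch`, which makes the `^` and `$` anchors unnecessary. Like the original, it only accepts domain parts of 2 or 3 characters, so it is a classroom approximation and not a full email-address validator.

```python
import re

# Same pattern as above without ^ and $: fullmatch already anchors both ends.
EMAIL_RE = re.compile(r'(\w)+(\.\w+)*@(\w)+((\.\w{2,3}){1,3})')


def is_valid_email(address):
    """Return True if the whole string matches EMAIL_RE (hypothetical helper)."""
    return EMAIL_RE.fullmatch(address) is not None


for candidate in ["abc@gzcc.cn", "a.b@gzcc.edu.cn", "abc@gzcc", "abc.gzcc.cn"]:
    print(candidate, is_valid_email(candidate))
```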

2. Use a regular expression to find all the telephone numbers.

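import re

# Sample text: the footer of the school's website (kept in the original Chinese)
text = '''版权所有:广州商学院 地址:广州市黄埔区九龙大道206号
学校办公室:020-82876130 招生电话:020-82872773
粤公网安备 44011602000060号 粤ICP备15103669号'''

# Area code (3-4 digits), a hyphen, then the local number (6-8 digits);
# the two groups make findall return (area code, number) tuples
print(re.findall(r'(\d{3,4})-(\d{6,8})', text))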

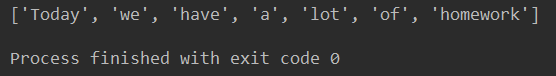
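The pattern in step 2 has two capture groups, so `re.findall` returns `(area code, number)` tuples. To get each full number as a single string, drop the groups. The sketch below is minimal and assumes a hypothetical helper `find_phone_numbers` and ASCII sample text. The lookarounds `(?<!\d)` and `(?!\d)` stop the pattern from matching a slice of a longer run of digits.

```python
import re

# 3-4 digit area code, a hyphen, then a 6-8 digit local number,
# with no further digit directly before or after the match.
PHONE_RE = re.compile(r'(?<!\d)\d{3,4}-\d{6,8}(?!\d)')


def find_phone_numbers(text):
    """Return every full phone number in text as a string (hypothetical helper)."""
    return PHONE_RE.findall(text)


sample = "Office: 020-82876130  Admissions: 020-82872773  Record no. 44011602000060"
print(find_phone_numbers(sample))
```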

3. Use a regular expression to split English text into words (hint: re.split('', news)).

string = "Today,we have a lot of homework"
# Split on runs of commas and/or whitespace, so ", " does not produce an empty string
print(re.split(r'[,\s]+', string))

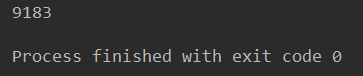
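The split in step 3 treats only commas and whitespace as separators, so other punctuation, such as a final period, stays attached to a word. Below are two minimal alternatives with hypothetical helper names. Both assume plain ASCII English text in which a word is a run of letters, optionally containing apostrophes. The first splits on everything else. The second matches the words directly.

```python
import re


def split_words(text):
    """Split on runs of characters that are not letters or apostrophes,
    dropping the empty strings re.split leaves at the ends."""
    return [w for w in re.split(r"[^A-Za-z']+", text) if w]


def find_words(text):
    """Same result by matching the words instead of the separators."""
    return re.findall(r"[A-Za-z']+", text)


sentence = "Today, we have a lot of homework. Don't panic!"
print(split_words(sentence))
print(find_words(sentence))
```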

4. Use a regular expression to get the news ID

newsurl = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"
# group(1) captures "_0404/9183"; the part after "/" is the news ID
newID = re.match(r'http://news\.gzcc\.cn/html/2018/xiaoyuanxinwen(.*)\.html', newsurl).group(1).split("/")[1]
print(newID)

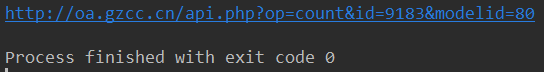
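The pattern in step 4 hard-codes the year `2018`, so it fails on articles from other years. The sketch below is a minimal, more general alternative. It assumes, as the example URL suggests, that every article URL ends in `/<digits>.html`. `extract_news_id` is a hypothetical helper name.

```python
import re

# The article ID is the run of digits just before ".html" at the end of the URL,
# regardless of the year or date directory.
NEWS_ID_RE = re.compile(r'/(\d+)\.html$')


def extract_news_id(news_url):
    """Return the numeric article ID as a string, or None if the URL does not fit."""
    match = NEWS_ID_RE.search(news_url)
    return match.group(1) if match else None


print(extract_news_id("http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"))
```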

5. Build the Request URL for the click count

# The click-count API takes the news ID as its id parameter
url = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newID)
print(url)
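Instead of formatting the query string by hand, you can build it from a dictionary of parameters with the standard library's `urllib.parse.urlencode`. The sketch below is minimal. `build_count_url` and `COUNT_API` are hypothetical names, and `modelid=80` is simply the value used in step 5. As another option, `requests.get` accepts the same dictionary through its `params` argument.

```python
from urllib.parse import urlencode

COUNT_API = "http://oa.gzcc.cn/api.php"


def build_count_url(news_id, model_id=80):
    """Build the click-count URL; urlencode escapes each value and joins them with '&'."""
    query = urlencode({"op": "count", "id": news_id, "modelid": model_id})
    return COUNT_API + "?" + query


print(build_count_url("9183"))
```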

6. Get the click count

newsurl = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"
newID = re.match(r'http://news\.gzcc\.cn/html/2018/xiaoyuanxinwen(.*)\.html', newsurl).group(1).split("/")[1]
url = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newID)
contact = requests.get(url)
# Keep the text after the last ".html" in the response, then strip the
# surrounding parenthesis, quote and semicolon characters to leave the number
count = contact.text.split(".html")[-1].lstrip("('").rstrip("');")
print(count)
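The chained `split`/`lstrip`/`rstrip` calls in step 6 depend on the exact characters around the number. A regular expression can extract the value directly instead. The sketch below is minimal. It assumes, as the string handling above implies, that the last `.html('<number>')` call in the API response carries the click count. `parse_click_count` is a hypothetical name, and the sample response is made up for illustration, not copied from the server.

```python
import re

# Every ".html('<digits>')" call in the response; the last one holds the click count,
# mirroring split(".html")[-1] in the code above.
COUNT_RE = re.compile(r"\.html\('(\d+)'\)")


def parse_click_count(response_text):
    """Return the last number passed to .html('...') as an int, or None if absent."""
    values = COUNT_RE.findall(response_text)
    return int(values[-1]) if values else None


sample = "$('#todaydowns').html('3');$('#hits').html('443');"  # hypothetical response
print(parse_click_count(sample))
```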

7. Wrap steps 4, 5 and 6 in a function def getClickCount(newsUrl):

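def getClickCount(newsurl):
    # Step 4: extract the news ID from the article URL
    newID = re.match(r'http://news\.gzcc\.cn/html/2018/xiaoyuanxinwen(.*)\.html', newsurl).group(1).split("/")[1]
    # Step 5: build the click-count request URL
    url = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newID)
    # Step 6: request it and cut the number out of the response
    contact = requests.get(url)
    count = contact.text.split(".html")[-1].lstrip("('").rstrip("');")
    return count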

8. Wrap the code that gets a news article's details in a function def getNewDetail(newsUrl):

from datetime import datetime

def getNewDetail(newsUrl):
    count = getClickCount(newsUrl)
    print("Click count: " + count)
    rq = requests.get(newsUrl)
    rq.encoding = "utf-8"
    soup = BeautifulSoup(rq.text, 'html.parser')
    title = soup.select(".show-title")[0].text
    content = soup.select("#content")[0].text
    info = soup.select(".show-info")[0].text

    def after(label):
        # Text between label and the next whitespace, or "" if the label is missing.
        # Slice past the label: str.lstrip(label) strips a set of characters, not a prefix.
        pos = info.find(label)
        if pos == -1:
            return ""
        rest = info[pos + len(label):].split()
        return rest[0] if rest else ""

    author = after('作者:')
    source = after('来源:')
    Auditing = after('审核:')
    photo = after('摄影:')
    print(author, source, Auditing, photo)
    # The publication time is the 19 characters after the label: YYYY-mm-dd HH:MM:SS
    time_label = '发布时间:'
    time_text = info[info.find(time_label) + len(time_label):][:19]
    da = datetime.strptime(time_text, "%Y-%m-%d %H:%M:%S")
    print(da)

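# Try the function on the first article of the list page fetched at the top
for news in soup.select("li"):
    if len(news.select('.news-list-info')) > 0:
        newsUrl = news.select('a')[0].attrs['href']
        print(newsUrl)
        getNewDetail(newsUrl)
        break  # stop after one article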

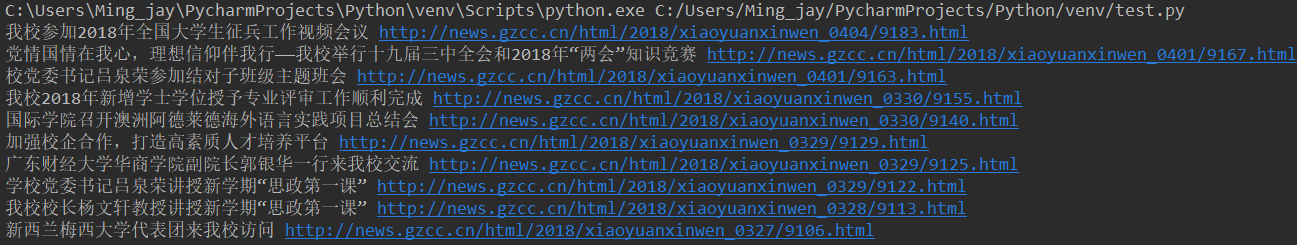
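Because this exercise is about regular expressions, the `.show-info` fields can also be pulled out with patterns instead of `find` and slicing. The sketch below is minimal. `parse_show_info` is a hypothetical helper, and the sample string is made up for illustration. It assumes each label is followed by a colon and then a value without spaces. It accepts both the ASCII colon used above and the full-width colon `：`, in case the live page uses the latter.

```python
import re
from datetime import datetime

# Labels as they appear in the .show-info text handled by getNewDetail.
FIELD_LABELS = ("作者", "来源", "审核", "摄影")
TIME_RE = re.compile(r"发布时间[:：](\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def parse_show_info(info):
    """Return a dict with the publication time and each labelled field.

    A field's value is the run of non-space characters after "label:";
    missing fields map to "" and a missing time maps to None.
    """
    result = {}
    match = TIME_RE.search(info)
    result["time"] = (
        datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S") if match else None
    )
    for label in FIELD_LABELS:
        match = re.search(re.escape(label) + r"[:：](\S+)", info)
        result[label] = match.group(1) if match else ""
    return result


sample = "发布时间:2018-04-04 11:35:20 作者:AuthorA 审核:EditorB 来源:OfficeC"  # hypothetical
print(parse_show_info(sample))
```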

9. Get all the news on one news list page, wrapped in a function def getListPage(pageUrl):

def getListPage(pageUrl):
    res = requests.get(pageUrl)  # returns a Response object
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    # Each article on a list page is an <li> that contains a .news-list-info element
    for news in soup.select("li"):
        if len(news.select('.news-list-info')) > 0:
            newsUrl = news.select('a')[0].attrs['href']
            print(newsUrl)
            getNewDetail(newsUrl)  # fetch and print the article's details

newsurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
getListPage(newsurl)
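`getListPage` prints each link as it goes. To check the link-selection logic offline, it can help to have a version that takes HTML text and returns a list. The sketch below is minimal and replaces BeautifulSoup with the standard library's `html.parser.HTMLParser`, so it needs no extra packages. The class and function names are hypothetical. Like `getListPage`, it assumes that an article is an `<li>` containing an element with class `news-list-info`, and that the article link is the first `<a href>` inside that `<li>`. Nested `<li>` elements are not handled.

```python
from html.parser import HTMLParser


class NewsLinkParser(HTMLParser):
    """Collect the first <a href> of each <li> that contains a .news-list-info element."""

    def __init__(self):
        super().__init__()
        self.links = []       # article URLs found so far
        self._current = None  # state of the <li> being parsed, or None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li":
            self._current = {"href": None, "has_info": False}
        elif self._current is not None:
            if tag == "a" and self._current["href"] is None and attrs.get("href"):
                self._current["href"] = attrs["href"]
            if "news-list-info" in (attrs.get("class") or "").split():
                self._current["has_info"] = True

    def handle_endtag(self, tag):
        if tag == "li" and self._current is not None:
            if self._current["has_info"] and self._current["href"]:
                self.links.append(self._current["href"])
            self._current = None


def extract_news_links(html):
    """Return the article URLs found in one list page's HTML."""
    parser = NewsLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links
```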

10. Get the total number of news articles, compute the total number of list pages, and wrap this in a function def getPageN():

def getPageN(newsurl):
    res = requests.get(newsurl)  # returns a Response object
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    # The first link in #pages holds the article total followed by "条";
    # with 10 articles per list page, round the quotient up
    total = int(soup.select("#pages")[0].select("a")[0].text.rsplit("条")[0])
    pageN = math.ceil(total / 10)
    return pageN
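The page count is a ceiling division: `total` articles at 10 per page need $\lceil total / 10 \rceil$ pages. For example, 1234 articles need 124 pages, with the last page holding 4. The sketch below is minimal and separates that arithmetic from the network call. `count_pages` is a hypothetical name. It assumes, as the `rsplit("条")` call implies, that the pager text contains the article total followed by `条`. It uses integer arithmetic, `(total + per_page - 1) // per_page`, which equals the ceiling without going through floats.

```python
import re


def count_pages(pages_text, per_page=10):
    """Turn pager text such as '1234条' into a number of list pages.

    per_page=10 matches the divisor used in getPageN.
    """
    match = re.search(r"(\d+)\s*条", pages_text)
    if match is None:
        raise ValueError("no article total found in %r" % pages_text)
    total = int(match.group(1))
    return (total + per_page - 1) // per_page  # ceiling division


print(count_pages("1234条"))
```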

11. Get the details of every article on every news list page.

newsurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen'
getListPage(newsurl)  # page 1 is the section index itself
n = getPageN(newsurl)
for i in range(2, n + 1):  # pages 2..n are named <i>.html
    print(i)
    newsurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
    getListPage(newsurl)

(230 screenshots omitted here.)
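Keeping URL generation separate from the network calls makes the page loop in step 11 easy to check without fetching anything. The sketch below is minimal. `list_page_urls` and `BASE_URL` are hypothetical names. The URL scheme is the one used in step 11: the section index is page 1, followed by `2.html`, `3.html`, and so on.

```python
BASE_URL = "http://news.gzcc.cn/html/xiaoyuanxinwen/"


def list_page_urls(page_count, base_url=BASE_URL):
    """Return the URLs of list pages 1..page_count.

    Page 1 is the section index; pages 2 and up follow the '<n>.html' pattern.
    """
    urls = [base_url]
    for n in range(2, page_count + 1):
        urls.append("{}{}.html".format(base_url, n))
    return urls


for url in list_page_urls(3):
    print(url)
```

Each URL can then be passed to `getListPage` in turn.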

浙公网安备 33010602011771号