Technical Plan: Deploying OpenStack Train with kolla-ansible

Simple architecture overview

Project goals

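1. Containerized deployment with Docker + Ansible + OpenStack Train

2. Use Keepalived to monitor the nova services so that, if a single server goes down, its connections are cut off quickly to reduce load on the platform

3. HAProxy + Keepalived for load balancing and high availability of the cluster

4. Deploy with the automated deployment tool Kolla

5. Deploy OpenStack from source (the source code of every component service is stored at the root of its container, which eases further development)

6. Host the Docker images in a private docker-registry, which serves as both an image backup and an image source

7. Use CentOS 7 (2009 release) as the operating system (do not use the minimal image CentOS-7-x86_64-Minimal-2009.iso)

8. Add the controller nodes' resources to the compute service; when setting nova quotas, reserve 6 cores and 12 GB of RAM so that the controller nodes keep running normally

9. The Freezer backup service is not included because the environment lacks storage resources

10. Deploy highly available MariaDB (two masters)

11. Two controller nodes keep the base services highly available

12. High availability for the Neutron services

13. Because Kolla supports adding nodes, this manual applies to production environments with two or more servers

14. Monitor the hosts with Grafana + Prometheus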

前期准备

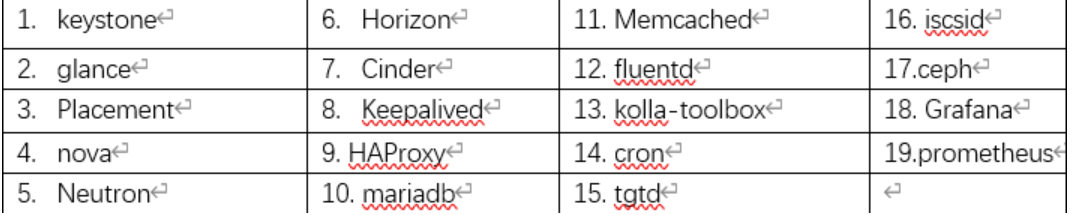

部署所需组件

部署

1、系统环境初始化

1.1 NIC configuration

eth0: /etc/sysconfig/network-scripts/ifcfg-eth0 (adjust the interface name to your environment)

TYPE=Ethernet

BOOTPROTO=static

NAME=eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=192.168.100.11

NETMASK=255.255.255.0

GATEWAY=192.168.100.1

DNS1=114.114.114.114

DNS2=223.5.5.5

eth1: /etc/sysconfig/network-scripts/ifcfg-eth1 (adjust the interface name to your environment)

TYPE=Ethernet

BOOTPROTO=none

NAME=eth1

DEVICE=eth1

ONBOOT=yes

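The first NIC has a static address and is connected to the network.

The second NIC is brought up without an IP address (BOOTPROTO=none).

Configure this on all nodes.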
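The two ifcfg files above differ only in their addressing. Here is a minimal sketch for generating them on each node instead of editing them by hand. It assumes a hypothetical helper named write_ifcfg and the DNS servers shown above:

```shell
# Hypothetical helper: render an ifcfg file for the two-NIC layout above.
# Usage: write_ifcfg DIR NAME [IP NETMASK GATEWAY]
#   with IP given -> static address (management NIC, e.g. eth0)
#   without IP    -> BOOTPROTO=none, link up only (external NIC, e.g. eth1)
write_ifcfg() {
    local dir=$1 name=$2 ip=${3:-} netmask=${4:-} gateway=${5:-}
    local file="$dir/ifcfg-$name"
    {
        echo "TYPE=Ethernet"
        if [ -n "$ip" ]; then
            echo "BOOTPROTO=static"
        else
            echo "BOOTPROTO=none"
        fi
        echo "NAME=$name"
        echo "DEVICE=$name"
        echo "ONBOOT=yes"
        if [ -n "$ip" ]; then
            echo "IPADDR=$ip"
            echo "NETMASK=$netmask"
            echo "GATEWAY=$gateway"
            # DNS servers copied from the example above
            echo "DNS1=114.114.114.114"
            echo "DNS2=223.5.5.5"
        fi
    } > "$file"
}
```

For example, on the node with address 192.168.100.11, run `write_ifcfg /etc/sysconfig/network-scripts eth0 192.168.100.11 255.255.255.0 192.168.100.1` and then `write_ifcfg /etc/sysconfig/network-scripts eth1`. After that, restart the network service with `systemctl restart network`.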

1.2 Set the hostname (run the matching command on each node) and configure hosts resolution

hostnamectl set-hostname openstack-con01 && bash

hostnamectl set-hostname openstack-con02 && bash

hostnamectl set-hostname openstack-con03 && bash

vim /etc/hosts # keep the default localhost lines, remove any other entries, then add the following

192.168.100.10 openstack-con01

192.168.100.11 openstack-con02

192.168.100.12 openstack-con03

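# or use (the first two lines restore the default localhost entries)

tee /etc/hosts <<-'EOF'

127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4

::1         localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.10 openstack-con01

192.168.100.11 openstack-con02

192.168.100.12 openstack-con03

EOF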
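The tee variant above replaces the whole file. If you would rather change only the cluster entries, here is a sketch of an idempotent alternative. The helper add_host_entry is hypothetical and not part of any tool:

```shell
# Hypothetical helper: map NAME to IP in a hosts file without duplicates.
# Any line that already lists NAME as a hostname or alias is dropped first,
# so re-running it never leaves stale or repeated entries.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    local tmp
    tmp=$(mktemp)
    # Keep every line whose name fields (2nd column onward) do not equal NAME.
    awk -v n="$name" '{ keep = 1; for (i = 2; i <= NF; i++) if ($i == n) keep = 0; if (keep) print }' "$file" > "$tmp"
    printf '%s %s\n' "$ip" "$name" >> "$tmp"
    # Write back through the existing file to keep its inode and permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Call it once per node name, for example `add_host_entry 192.168.100.10 openstack-con01`.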

1.3 Disable system security settings

# Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

# Disable NetworkManager

systemctl stop NetworkManager && systemctl disable NetworkManager

# Disable SELinux

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

1.4 Configure passwordless SSH login manually (run on all nodes)

ssh-keygen

ssh-copy-id -i /root/.ssh/id_rsa.pub openstack-con01

ssh-copy-id -i /root/.ssh/id_rsa.pub openstack-con02

ssh-copy-id -i /root/.ssh/id_rsa.pub openstack-con03
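The key generation and copy steps above can be wrapped in a single loop. This is a sketch: the function name and the SSH_KEY and DRY_RUN variables are hypothetical, and ssh-copy-id still prompts once for each node's password.

```shell
# Hypothetical helper: create a key pair if none exists, then copy the
# public key to every node given as an argument.
# SSH_KEY overrides the key path; DRY_RUN=1 prints commands instead of running them.
# Usage: distribute_ssh_key openstack-con01 openstack-con02 openstack-con03
distribute_ssh_key() {
    local key=${SSH_KEY:-$HOME/.ssh/id_rsa}
    local run="" host
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    if [ ! -f "$key" ]; then
        # -N "" sets an empty passphrase and -q suppresses output, avoiding prompts
        $run ssh-keygen -t rsa -N "" -q -f "$key"
    fi
    for host in "$@"; do
        $run ssh-copy-id -i "$key.pub" "$host"
    done
}
```

Use `DRY_RUN=1 distribute_ssh_key openstack-con01 openstack-con02 openstack-con03` to preview the commands before running them.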

1.5 Install base packages

yum install -y python-devel libffi-devel gcc openssl-devel libselinux-python git wget vim yum-utils

2. Install Docker (all nodes)

2.1 Docker is the foundation of this deployment, because every Kolla service runs in a container, so install it first.

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list docker-ce --showduplicates | sort -r

(Use a stable Docker release and pin the version explicitly.)

yum install -y docker-ce-19.03.13-3.el7 docker-ce-cli-19.03.13-3.el7 containerd.io

systemctl start docker

systemctl enable docker

docker version

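Enable Docker shared mounts. A shared mount lets the same directory or device be mounted at several paths while changes stay visible across all of them, similar to mount --shared. In Kolla-based OpenStack this is mainly needed so that Neutron's network namespaces remain valid across different containers.

mkdir -p /etc/systemd/system/docker.service.d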

tee /etc/systemd/system/docker.service.d/kolla.conf << 'EOF'

[Service]

MountFlags=shared

EOF

# Configure registry mirrors located in China

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://registry.docker-cn.com", "http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"]

}

EOF

# Reload systemd, restart Docker and enable it at boot

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

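# Check Docker information

docker info

2.2 Deploy a private Docker registry and import images

Note: in production, docker-registry or Harbor is recommended for storing Docker images. This assumes you already have the required images.

Steps:

Note: docker-registry listens on port 5000 by default. If it runs on a controller node, mapping it to host port 5000 takes a port HAProxy needs, so map it to a different port (4000 below).

- If you do not use a local Docker registry, skip 2.2 and 2.3 and configure a public registry to pull the images online.

# 1. Install the registry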

docker pull registry

# 2. Start it:

mkdir -p /my-registry/registry

docker run -d -ti --restart always --name registry -p 4000:5000 -v /my-registry/registry:/var/lib/registry registry

# 3. Test access to the registry

curl 127.0.0.1:4000/v2/_catalog

# 4. Web UI for browsing the registry

docker pull hyper/docker-registry-web

# Start it:

docker run -d --restart=always -p 4001:8080 --name registry-web --link registry -e REGISTRY_URL=http://192.168.100.12:4000/v2 -e REGISTRY_NAME=192.168.100.12:4000 hyper/docker-registry-web

# Verify in a browser:

192.168.100.12:4001

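2.3 Import images

# This overwrites the daemon.json written in 2.1, so the registry mirrors are repeated here

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://registry.docker-cn.com", "http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"],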

"insecure-registries": [

"192.168.100.12:4000"

],

"log-opts": {

"max-file": "5",

"max-size": "50m"

}

}

EOF

# Push the configuration to every node

scp /etc/docker/daemon.json openstack-con02:/etc/docker/daemon.json

......

# On all nodes, reload the configuration and restart Docker

systemctl daemon-reload && systemctl restart docker

# Import the images

## Upload the image package

ll koll-ansible_Train/

total 4

drwxr-xr-x 2 root root 42 Dec 5 08:39 config

drwxr-xr-x 2 root root 6 Dec 5 08:39 doc

drwxr-xr-x 2 root root 4096 Dec 5 09:17 docker_repo

drwxr-xr-x 3 root root 136 Dec 5 08:59 tools

cd /root/koll-ansible_Train/tools

bash import.sh

# Check on the node

docker images

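# Tag the images (replace the registry address 192.168.100.12:4000 with your own; it must match the address used for the push below)

# kolla-toolbox is excluded because the global sed substitution would also rewrite the "kolla" inside its name; tag it separately using its image ID from docker images

docker images | grep kolla | grep -v toolbox| sed 's/kolla/192.168.100.12:4000\/kolla/g' | awk '{print "docker tag"" " $3" "$1":"$2}'|sh

docker tag d2fea47ea65d 192.168.100.12:4000/kolla/centos-binary-kolla-toolbox:train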

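# Push to the private registry, either with the script in /root/koll-ansible_Train/tools or with a single command

## The command form is shown below (replace the registry address with your own)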

for i in $(docker images | grep 192.168.100.12:4000| awk 'BEGIN{OFS=":"}{print $1,$2}'); do docker push $i; done

## From another node, curl the registry to check that the images were pushed

curl 192.168.100.12:4000/v2/_catalog
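The sed-based tagging has to skip kolla-toolbox because a global substitution also rewrites the "kolla" inside that image's name. Below is a sketch that prefixes only the leading kolla/ namespace, so every image (toolbox included) can be tagged and pushed in one pass. The function names are hypothetical; the registry address is the one used in this guide.

```shell
# Hypothetical helper: compute the private-registry name of a Kolla image.
# Only the leading "kolla/" namespace is prefixed, so names that contain
# "kolla" elsewhere (e.g. centos-binary-kolla-toolbox) are not mangled.
# Usage: registry_name REPO:TAG REGISTRY
registry_name() {
    local image=$1 registry=$2
    case $image in
        kolla/*) printf '%s/%s\n' "$registry" "$image" ;;
        *) return 1 ;;
    esac
}

# Tag and push every local kolla/* image; DRY_RUN=1 only prints the commands.
# Usage: retag_and_push 192.168.100.12:4000
retag_and_push() {
    local registry=$1 run="" image target
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    for image in $(docker images --format '{{.Repository}}:{{.Tag}}' | grep '^kolla/'); do
        target=$(registry_name "$image" "$registry") || continue
        $run docker tag "$image" "$target"
        $run docker push "$target"
    done
}
```

`DRY_RUN=1 retag_and_push 192.168.100.12:4000` shows the tag and push commands before anything is changed.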

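3. Install pip (all nodes)

3.1 Install pip and configure the Aliyun pip mirror (all nodes)

# python-pip is provided by the EPEL repository on CentOS 7

yum -y install epel-release

yum -y install python-pip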

cd ~/

mkdir ~/.pip

cat > ~/.pip/pip.conf << EOF

[global]

trusted-host=mirrors.aliyun.com

index-url=https://mirrors.aliyun.com/pypi/simple/

EOF

# Upgrade pip to a pinned version

pip install -U pip==19.3.1

pip install -U setuptools -i https://mirrors.aliyun.com/pypi/simple/

# Force-reinstall the requests library; otherwise installing the Docker SDK later fails with: requests 2.20.0 has requirement idna<2.8,>=2.5, but you'll have idna 2.4 which is incompatible.

pip install --ignore-installed requests

########## Method 2:

yum install -y wget

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py

python2.7 get-pip.py

pip install --ignore-installed requests

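4. Install Ansible and kolla-ansible (deployment node)

kolla-ansible is built on Ansible, a Python-based automation tool, so Ansible must be installed.

## Install the EPEL repository, otherwise yum may not find the ansible package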

#yum install epel-release -y

## Install Ansible

#yum install ansible -y

## Installing Ansible with pip is recommended; pin the major version

pip install 'ansible<2.10'

# In repeated attempts, pbr had to be installed first, otherwise the next step failed

pip install pbr

# Install kolla-ansible; --ignore-installed is required, otherwise the installation may fail

pip install kolla-ansible==9.3.2 --ignore-installed PyYAML

# Create the kolla directory; many OpenStack configuration files are placed here during deployment

mkdir -p /etc/kolla

chown $USER:$USER /etc/kolla

# Copy the Ansible inventory files (all-in-one, multinode)

cp -v /usr/share/kolla-ansible/ansible/inventory/* /etc/kolla/.

# Copy globals.yml and passwords.yml into the directory

cp -rv /usr/share/kolla-ansible/etc_examples/kolla/* /etc/kolla/.

# Check the files under /etc/kolla

ls /etc/kolla

5. Configure Ansible

5.1 Edit the Ansible configuration file (controller)

cat << EOF | sed -i '/^\[defaults\]$/ r /dev/stdin' /etc/ansible/ansible.cfg

host_key_checking=False

pipelining=True

forks=100

EOF

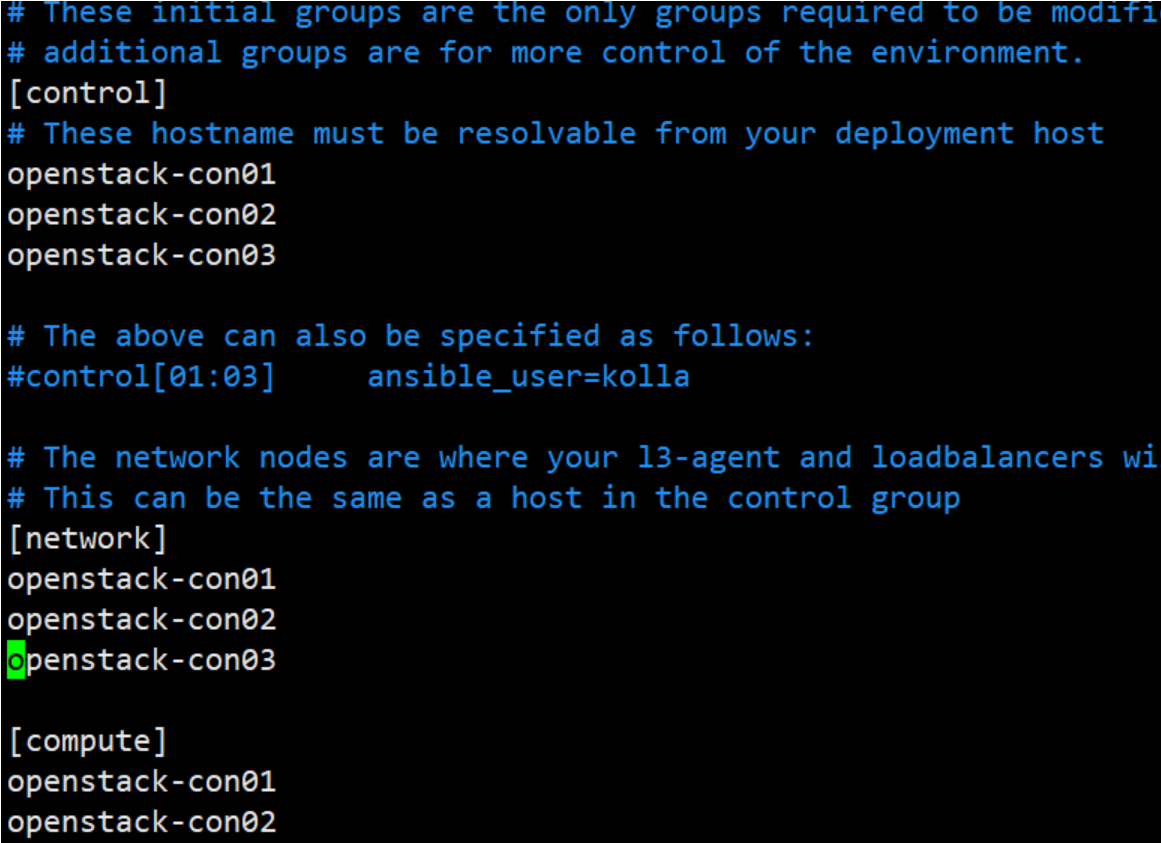
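The sed command above only works if /etc/ansible/ansible.cfg already exists and contains a [defaults] line. That is typically not the case when Ansible was installed with pip. Here is a hedged sketch of an idempotent alternative. ensure_ansible_defaults is a hypothetical name, and as a deliberate simplification it skips a key that already appears anywhere in the file.

```shell
# Hypothetical helper: make sure an ansible.cfg has a [defaults] section
# containing the given KEY=VALUE pairs, creating the file if necessary.
# Keys already present anywhere in the file are left untouched.
# Usage: ensure_ansible_defaults FILE key=value ...
ensure_ansible_defaults() {
    local file=$1; shift
    local kv key
    mkdir -p "$(dirname "$file")"
    [ -f "$file" ] || : > "$file"
    grep -q '^\[defaults\]$' "$file" || printf '[defaults]\n' >> "$file"
    for kv in "$@"; do
        key=${kv%%=*}
        # Skip keys that are already set (spaces around "=" allowed).
        grep -Eq "^${key}[[:space:]]*=" "$file" && continue
        # GNU sed: append the pair right after the [defaults] header.
        sed -i "/^\[defaults\]\$/a ${kv}" "$file"
    done
}
```

Usage: `ensure_ansible_defaults /etc/ansible/ansible.cfg host_key_checking=False pipelining=True forks=100`.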

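5.2 Configure the Ansible inventory (controller)

1. In the multinode inventory file, assign hosts to the control, compute, network, monitoring and other roles.

2. Check that the inventory is correct:

ansible -i /etc/kolla/multinode all -m ping

3. Generate the passwords used by the OpenStack components. This fills in /etc/kolla/passwords.yml, whose values are empty by default.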

kolla-genpwd

Note: if an error like the following appears:

[root@openstack-con01 kolla]# kolla-genpwd

Traceback (most recent call last):

File "/usr/bin/kolla-genpwd", line 6, in <module>

from kolla_ansible.cmd.genpwd import main

File "/usr/lib/python2.7/site-packages/kolla_ansible/cmd/genpwd.py", line 25, in <module>

from cryptography.hazmat.primitives import serialization

File "/usr/lib64/python2.7/site-packages/cryptography/hazmat/primitives/serialization/__init__.py", line 7, in <module>

from cryptography.hazmat.primitives.serialization.base import (

File "/usr/lib64/python2.7/site-packages/cryptography/hazmat/primitives/serialization/base.py", line 13, in <module>

from cryptography.hazmat.backends import _get_backend

try the following command to fix it:

pip install python-openstackclient --ignore-installed pyOpenSSL

4. Change keystone_admin_password to a custom password to make later Horizon logins easier; here it is set to 123.

sed -i 's#keystone_admin_password:.*#keystone_admin_password: 123#g' /etc/kolla/passwords.yml

cat /etc/kolla/passwords.yml | grep keystone_admin_password

Note: /etc/kolla/passwords.yml holds the passwords of all OpenStack component services.
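passwords.yml is a flat list of `key: value` lines, so the sed edit above generalizes to any key. Below is a minimal sketch with hypothetical helper names. VALUE must not contain `#` or `&`, because sed would interpret those characters.

```shell
# Hypothetical helpers for the flat "key: value" layout of passwords.yml.
# Usage: set_kolla_password KEY VALUE [FILE]
set_kolla_password() {
    local key=$1 value=$2 file=${3:-/etc/kolla/passwords.yml}
    # Anchor on the full key at line start so that, e.g., rbd_secret_uuid
    # does not also match cinder_rbd_secret_uuid.
    sed -i "s#^${key}:.*#${key}: ${value}#" "$file"
}

# Usage: get_kolla_password KEY [FILE]
get_kolla_password() {
    local key=$1 file=${2:-/etc/kolla/passwords.yml}
    awk -v k="${key}:" '$1 == k { print $2; exit }' "$file"
}
```

For example, `set_kolla_password keystone_admin_password 123` followed by `get_kolla_password keystone_admin_password` sets the value and then confirms it.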

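5. Edit the global configuration file globals.yml, which controls which components are installed and how they are configured. Because every option in the stock file is commented out, the command below simply rewrites the file with the settings used here; you can also edit the corresponding options one by one.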

cat > /etc/kolla/globals.yml <<EOF

---

# Kolla options

kolla_base_distro: "centos"

kolla_install_type: "binary"

openstack_release: "train"

kolla_internal_vip_address: "192.168.100.140"

kolla_internal_fqdn: "{{ kolla_internal_vip_address }}"

kolla_external_vip_address: "10.0.100.240"

kolla_external_fqdn: "{{ kolla_external_vip_address }}"

#Docker options

docker_registry: "192.168.100.12:4000"

# Messaging options

om_rpc_transport: "rabbit"

# Neutron - Networking Options

# These can be adjusted for even more customization. The default is the same as

# the 'network_interface'. These interfaces must contain an IP address.

network_interface: "eth0"

kolla_external_vip_interface: "eth1"

api_interface: "eth0"

storage_interface: "eth0" # storage access network

cluster_interface: "eth0" # storage replication network

tunnel_interface: "eth0" # tenant (overlay) network

network_address_family: "ipv4"

neutron_external_interface: "eth1" # external network

neutron_plugin_agent: "openvswitch"

neutron_enable_rolling_upgrade: "yes"

# keepalived options

keepalived_virtual_router_id: "66"

# TLS options

#kolla_enable_tls_internal: "yes"

# Region options

openstack_region_name: "RegionOne"

# OpenStack options

openstack_logging_debug: "False"

# glance, keystone, neutron, nova, heat, and horizon.

enable_openstack_core: "yes"

enable_haproxy: "yes"

enable_mariadb: "yes"

enable_memcached: "yes"

enable_ceph: "no"

enable_ceph_mds: "no"

enable_ceph_rgw: "no"

enable_ceph_nfs: "no"

enable_chrony: "yes"

enable_cinder: "yes"

enable_fluentd: "no"

#enable_grafana: "yes"

enable_nova_ssh: "yes"

#enable_prometheus: "yes"

#enable_redis: "yes"

#enable_etcd: "no"

enable_neutron_provider_networks: "yes" # without this, creating instances on a provider network fails and the logs show a port binding error

# Keystone - Identity Options

keystone_token_provider: 'fernet'

keystone_admin_user: "admin"

keystone_admin_project: "admin"

# Glance - Image Options

glance_backend_ceph: "yes"

# Cinder - Block Storage Options

cinder_backend_ceph: "yes"

cinder_volume_group: "cinder-volumes"

# Nova - Compute Options

nova_backend_ceph: "yes"

nova_console: "novnc"

nova_compute_virt_type: "qemu" # must be qemu when the nodes are themselves VMs; on physical machines the default is kvm

EOF
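Before running the prechecks, it is worth confirming that the management and external interfaces in globals.yml really differ. neutron_external_interface (eth1 here) is meant to be the NIC without an IP address. The sketch below assumes the simple one-option-per-line layout written above. The function names are hypothetical and are not kolla-ansible commands.

```shell
# Hypothetical helper: print the value of a top-level option in globals.yml,
# dropping surrounding quotes and any trailing "# comment".
# Usage: globals_value KEY [FILE]
globals_value() {
    local key=$1 file=${2:-/etc/kolla/globals.yml}
    sed -n "s/^${key}:[[:space:]]*//p" "$file" | head -n 1 |
        sed 's/[[:space:]]*#.*$//; s/^"//; s/"$//'
}

# Hypothetical check: both interfaces must be set and must differ.
# Usage: check_globals_interfaces [FILE]
check_globals_interfaces() {
    local file=${1:-/etc/kolla/globals.yml} mgmt ext
    mgmt=$(globals_value network_interface "$file")
    ext=$(globals_value neutron_external_interface "$file")
    if [ -z "$mgmt" ] || [ -z "$ext" ] || [ "$mgmt" = "$ext" ]; then
        echo "network_interface='$mgmt' neutron_external_interface='$ext': must be set and differ" >&2
        return 1
    fi
    echo "ok: $mgmt / $ext"
}
```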

6. Deploy Ceph as the backend storage (three nodes in this deployment)

6.1 Configure YUM on all Ceph nodes:

# Configure the Aliyun base and EPEL repositories

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# Install the YUM priorities plugin:

yum -y install yum-plugin-priorities.noarch

6.2 Configure the Ceph repository on all nodes:

cat << EOF | tee /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-nautilus/el7/\$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-nautilus/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

EOF

6.3 Install Ceph on all cluster and client nodes

1. Install on all nodes

yum -y install ceph

Check the version:

ceph -v

If an error says urllib3 cannot be installed, uninstall it and then reinstall (skip this if there is no error):

pip uninstall urllib3

2. Also install ceph-deploy on the controller node

yum -y install ceph-deploy

3. Deploy the MON nodes

Create a working directory and generate the configuration file

mkdir cluster

cd cluster

ceph-deploy new openstack-con01 openstack-con02 openstack-con03

Create the initial monitors and gather the keys

ceph-deploy mon create-initial

Copy ceph.client.admin.keyring to every node

ceph-deploy --overwrite-conf admin openstack-con01 openstack-con02 openstack-con03

Check whether the configuration succeeded:

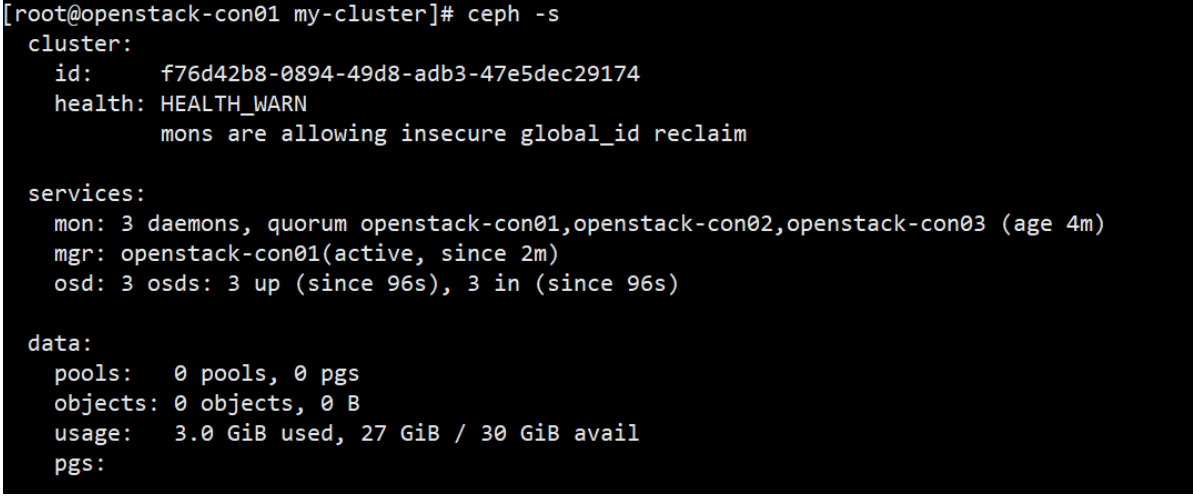

ceph -s

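The warning above means the monitors still allow insecure global_id reclaim, that is, the cluster is running in an insecure mode. Disabling that mode clears the warning.

Solution:

Disable the insecure mode: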

ceph config set mon auth_allow_insecure_global_id_reclaim false

4. Deploy mgr

ceph-deploy mgr create openstack-con01 openstack-con02 openstack-con03

5. Deploy OSDs

# List the available disks on the OSD nodes

ceph-deploy disk list openstack-con01 openstack-con02 openstack-con03

# Wipe the partitions on /dev/sdb of every OSD node (ceph-deploy 2.x syntax: host, then device)

ceph-deploy disk zap openstack-con01 /dev/sdb

ceph-deploy disk zap openstack-con02 /dev/sdb

ceph-deploy disk zap openstack-con03 /dev/sdb

# Create an OSD on each node's disk in turn

ceph-deploy osd create --data /dev/sdb openstack-con01

ceph-deploy osd create --data /dev/sdb openstack-con02

ceph-deploy osd create --data /dev/sdb openstack-con03
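The zap and create steps repeat for each host. The sketch below loops over the hosts using the ceph-deploy 2.x argument order (host, then device). create_osds and DRY_RUN are hypothetical names, and zap destroys all data on the device.

```shell
# Hypothetical wrapper: wipe DEVICE and create one OSD on it for each host.
# DRY_RUN=1 prints the ceph-deploy commands instead of running them.
# Usage: create_osds /dev/sdb openstack-con01 openstack-con02 openstack-con03
create_osds() {
    local dev=$1; shift
    local run="" host
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    for host in "$@"; do
        $run ceph-deploy disk zap "$host" "$dev"
        $run ceph-deploy osd create --data "$dev" "$host"
    done
}
```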

7. Prepare Ceph for integration with OpenStack

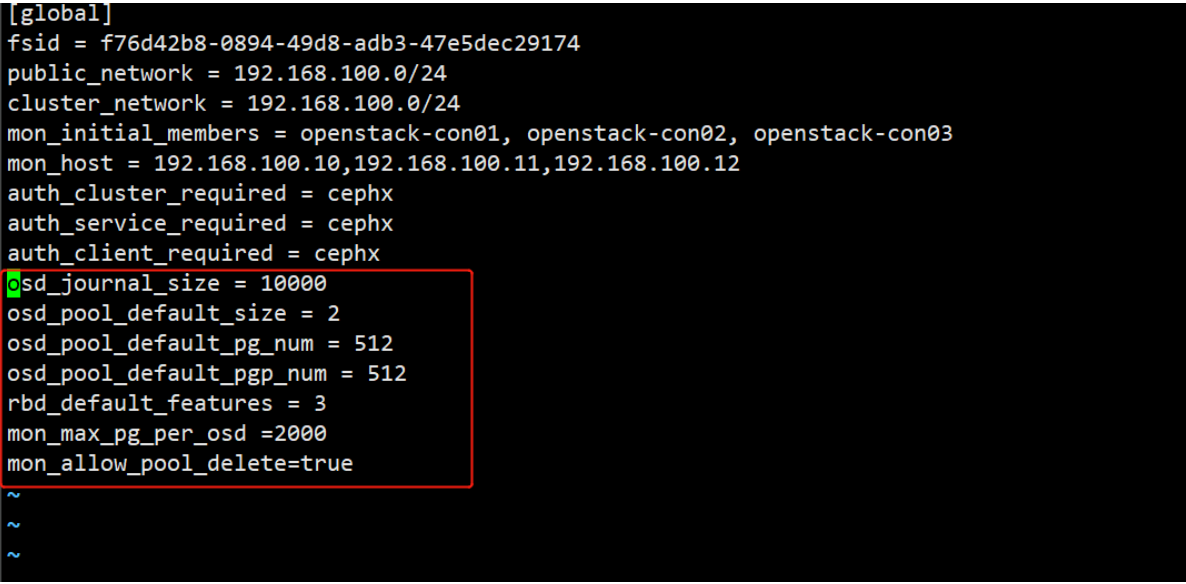

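7.1 Edit the Ceph configuration file

Add the following under [global] in ceph.conf in the ceph-deploy working directory (cluster/ceph.conf). ceph-deploy config push distributes that file and overwrites /etc/ceph/ceph.conf on each node, so edits made only to /etc/ceph/ceph.conf would be lost: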

osd_journal_size = 10000

osd_pool_default_size = 2

osd_pool_default_pg_num = 512

osd_pool_default_pgp_num = 512

rbd_default_features = 3

mon_max_pg_per_osd =2000

mon_allow_pool_delete=true

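The lab environment uses two replicas (for performance). In production, three replicas are recommended (a single replica only if resources are insufficient):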

osd_pool_default_size = 3

7.2 Push the configuration (run in the cluster working directory)

ceph-deploy --overwrite-conf config push openstack-con01 openstack-con02 openstack-con03

7.3 Restart the mon service on all Ceph nodes

# systemctl status ceph-mon@openstack-con01

systemctl restart ceph-mon@openstack-con01

systemctl restart ceph-mon@openstack-con02

systemctl restart ceph-mon@openstack-con03

7.4 Create and initialize the storage pools

ceph osd pool create glance-images 64

ceph osd pool create cinder-backups 32

ceph osd pool create cinder-volumes 64

ceph osd pool create nova-vms 32

rbd pool init glance-images

rbd pool init cinder-backups

rbd pool init cinder-volumes

rbd pool init nova-vms
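Keeping the pool names and PG counts in one list helps avoid mismatches with the pool names used later in the Ceph caps and in the Glance, Cinder and Nova configuration. Here is a sketch using the hypothetical helper create_rbd_pools:

```shell
# Hypothetical helper: create each pool from "name:pg_num" pairs and
# initialise it for RBD. DRY_RUN=1 prints the commands only.
# Usage: create_rbd_pools glance-images:64 cinder-backups:32 cinder-volumes:64 nova-vms:32
create_rbd_pools() {
    local run="" spec name pg
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    for spec in "$@"; do
        name=${spec%%:*}
        pg=${spec##*:}
        $run ceph osd pool create "$name" "$pg"
        $run rbd pool init "$name"
    done
}
```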

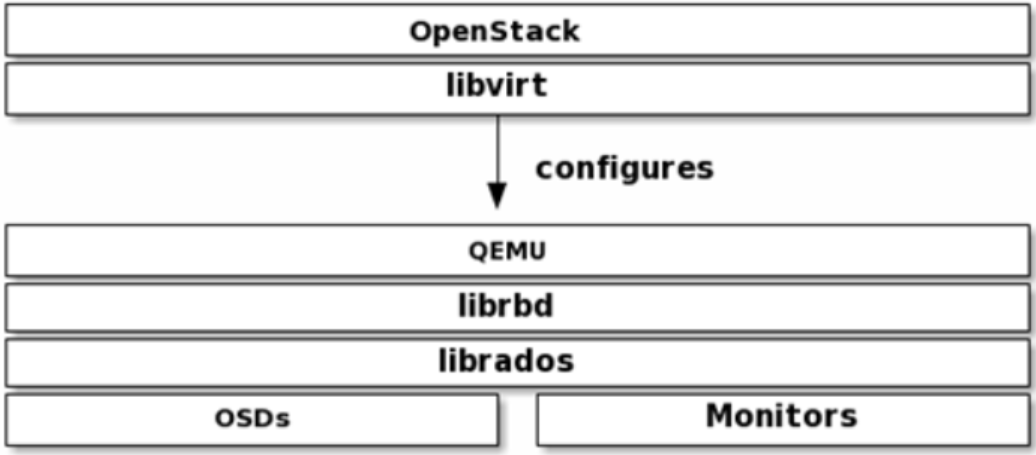

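Diagram: Ceph as the OpenStack storage backend

7.5 Glance (write the configuration file and create the Ceph user)

1. Create the directory

mkdir -p /etc/kolla/config/glance

2. Configure the RBD backend in glance-api.conf

Write the following to /etc/kolla/config/glance/glance-api.conf: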

cat > /etc/kolla/config/glance/glance-api.conf << EOF

[DEFAULT]

show_image_direct_url = True

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = glance-images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

EOF

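3. Generate ceph.client.glance.keyring and save it in /etc/kolla/config/glance

Run on the controller node:

ceph auth get-or-create client.glance mon 'allow rwx' osd 'allow class-read object_prefix rbd_children, allow rwx pool=glance-images' > /etc/kolla/config/glance/ceph.client.glance.keyring

# Kolla also expects the Ceph configuration file next to the keyring, as for Cinder and Nova

cp /etc/ceph/ceph.conf /etc/kolla/config/glance/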

7.6 Cinder (write the configuration files and create the Ceph users)

1. Create the directory

mkdir -p /etc/kolla/config/cinder

2. Write the following to /etc/kolla/config/cinder/cinder-volume.conf:

cat > /etc/kolla/config/cinder/cinder-volume.conf << EOF

[DEFAULT]

enabled_backends=rbd-1

[rbd-1]

rbd_ceph_conf=/etc/ceph/ceph.conf

rbd_user=cinder

rbd_pool=cinder-volumes

volume_backend_name=rbd-1

volume_driver=cinder.volume.drivers.rbd.RBDDriver

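# Take this UUID from cinder_rbd_secret_uuid in /etc/kolla/passwords.yml. Keep this note on its own line: an inline "#" comment would become part of the value

rbd_secret_uuid = 074bc021-434d-43fb-8cbd-f517ce21e537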

EOF
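Instead of pasting the UUID by hand, the file can be rendered from passwords.yml. This sketch rests on one assumption: the UUID Cinder should use is the cinder_rbd_secret_uuid key that kolla-genpwd fills in (the rbd_secret_uuid key in the same file is the one used for Nova). The helper name is hypothetical.

```shell
# Hypothetical helper: write cinder-volume.conf with rbd_secret_uuid taken
# from passwords.yml (assumed key: cinder_rbd_secret_uuid).
# Usage: render_cinder_volume_conf [PASSWORDS_FILE] [OUTPUT_FILE]
render_cinder_volume_conf() {
    local passwords=${1:-/etc/kolla/passwords.yml}
    local out=${2:-/etc/kolla/config/cinder/cinder-volume.conf}
    local uuid
    uuid=$(awk '$1 == "cinder_rbd_secret_uuid:" { print $2; exit }' "$passwords")
    if [ -z "$uuid" ]; then
        echo "cinder_rbd_secret_uuid not found in $passwords" >&2
        return 1
    fi
    # Same options as the manual example, without the inline comment.
    cat > "$out" << EOF
[DEFAULT]
enabled_backends=rbd-1

[rbd-1]
rbd_ceph_conf=/etc/ceph/ceph.conf
rbd_user=cinder
rbd_pool=cinder-volumes
volume_backend_name=rbd-1
volume_driver=cinder.volume.drivers.rbd.RBDDriver
rbd_secret_uuid=$uuid
EOF
}
```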

3. Write the following to /etc/kolla/config/cinder/cinder-backup.conf:

cat > /etc/kolla/config/cinder/cinder-backup.conf << EOF

[DEFAULT]

backup_ceph_conf=/etc/ceph/ceph.conf

backup_ceph_user=cinder-backup

backup_ceph_chunk_size = 134217728

backup_ceph_pool=cinder-backups

backup_driver = cinder.backup.drivers.ceph

backup_ceph_stripe_unit = 0

backup_ceph_stripe_count = 0

restore_discard_excess_bytes = true

EOF

- Copy the Ceph configuration file (/etc/ceph/ceph.conf) to /etc/kolla/config/cinder

cp /etc/ceph/ceph.conf /etc/kolla/config/cinder

- Generate the ceph.client.cinder.keyring file

Create the subdirectories under /etc/kolla/config/cinder/:

mkdir /etc/kolla/config/cinder/cinder-backup

mkdir /etc/kolla/config/cinder/cinder-volume

Run on the controller node:

ceph auth get-or-create client.cinder mon 'allow rwx' osd 'allow class-read object_prefix rbd_children, allow rwx pool=cinder-volumes, allow rwx pool=nova-vms ,allow rwx pool=glance-images' > /etc/kolla/config/cinder/cinder-volume/ceph.client.cinder.keyring

cp /etc/kolla/config/cinder/cinder-volume/ceph.client.cinder.keyring /etc/kolla/config/cinder/cinder-backup

Then, still on the controller, run:

ceph auth get-or-create client.cinder-backup mon 'allow rwx' osd 'allow class-read object_prefix rbd_children, allow rwx pool=cinder-backups' > /etc/kolla/config/cinder/cinder-backup/ceph.client.cinder-backup.keyring

7.7 Nova (write the configuration file and create the Ceph user)

- Create the directory

mkdir -p /etc/kolla/config/nova

- Write the following to /etc/kolla/config/nova/nova-compute.conf:

cat > /etc/kolla/config/nova/nova-compute.conf << EOF

[libvirt]

images_rbd_pool=nova-vms

images_type=rbd

images_rbd_ceph_conf=/etc/ceph/ceph.conf

rbd_user=nova

EOF

- Generate the ceph.client.nova.keyring file

Run on the controller node:

ceph auth get-or-create client.nova mon 'allow rwx' osd 'allow class-read object_prefix rbd_children, allow rwx pool=nova-vms' > /etc/kolla/config/nova/ceph.client.nova.keyring

Copy ceph.conf and the cinder client keyring to /etc/kolla/config/nova:

cp /etc/ceph/ceph.conf /etc/kolla/config/nova/

cp /etc/kolla/config/cinder/cinder-volume/ceph.client.cinder.keyring /etc/kolla/config/nova/
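Before deploying, a quick check that every Ceph-related file from the Glance, Cinder and Nova steps above exists and is non-empty can save a failed run. Here is a sketch. The file list mirrors this guide's layout, and the function name is hypothetical.

```shell
# Hypothetical pre-deploy check: report any expected file under the Kolla
# custom-config root that is missing or empty; returns 1 if any are.
# Usage: check_kolla_ceph_files [ROOT]   (ROOT defaults to /etc/kolla/config)
check_kolla_ceph_files() {
    local root=${1:-/etc/kolla/config} missing=0 f
    for f in \
        glance/glance-api.conf \
        glance/ceph.client.glance.keyring \
        cinder/ceph.conf \
        cinder/cinder-volume.conf \
        cinder/cinder-backup.conf \
        cinder/cinder-volume/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder.keyring \
        cinder/cinder-backup/ceph.client.cinder-backup.keyring \
        nova/nova-compute.conf \
        nova/ceph.conf \
        nova/ceph.client.nova.keyring \
        nova/ceph.client.cinder.keyring; do
        if [ ! -s "$root/$f" ]; then
            echo "missing or empty: $root/$f"
            missing=1
        fi
    done
    return $missing
}
```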

8 Platform deployment and environment initialization

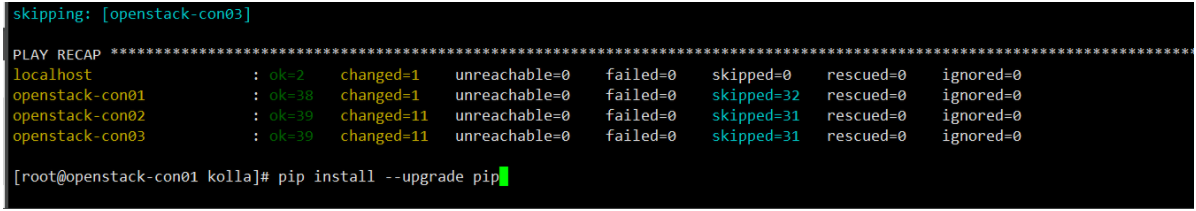

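8.1 Pre-configuration: install Docker and the Docker SDK, disable the firewall, configure time synchronization, etc.

This step configures the base environment on every node and downloads many RPM packages, so make sure all nodes have working network access.

kolla-ansible -i /etc/kolla/multinode bootstrap-servers -vvvv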

8.2 Run the prechecks; if no problems are reported, continue

kolla-ansible -i /etc/kolla/multinode prechecks -vvvv

8.3 Pull the images (this takes a while, about 15 minutes)

kolla-ansible -i /etc/kolla/multinode pull -vvvv

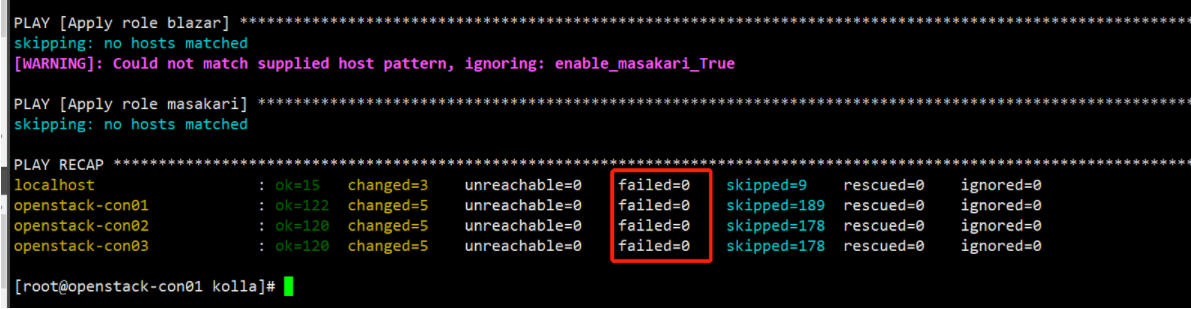

8.4 Deploy (about 15 minutes)

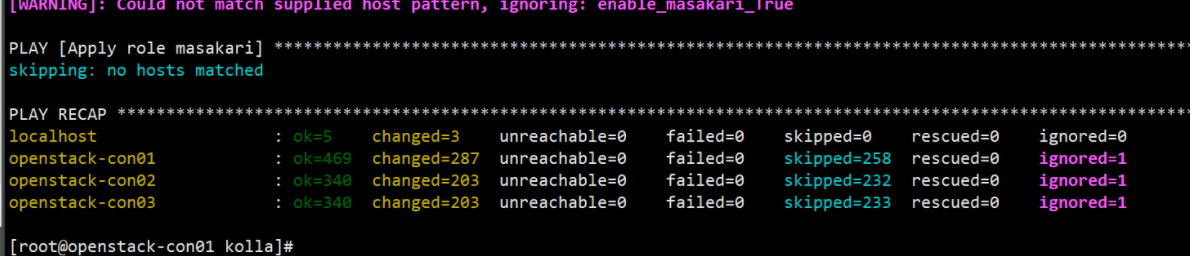

kolla-ansible -i /etc/kolla/multinode deploy -vvvv

8.5 Install the OpenStack CLI client (on the controller nodes)

yum install -y centos-release-openstack-train

yum install -y python-openstackclient

8.6 Generate the openrc file

kolla-ansible -i /etc/kolla/multinode post-deploy

Load the environment variables from the openrc file

. /etc/kolla/admin-openrc.sh
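The kolla-ansible stages in this section always run in the same order against the same inventory. Here is a sketch that chains them and stops at the first failure. kolla_deploy_all and DRY_RUN are hypothetical, and the -vvvv flag used above is omitted.

```shell
# Hypothetical wrapper: run the kolla-ansible stages of this section in order
# against one inventory and stop at the first failing stage.
# DRY_RUN=1 prints the commands instead of running them.
# Usage: kolla_deploy_all /etc/kolla/multinode
kolla_deploy_all() {
    local inventory=${1:-/etc/kolla/multinode} run="" stage
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    for stage in bootstrap-servers prechecks pull deploy post-deploy; do
        $run kolla-ansible -i "$inventory" "$stage" || {
            echo "kolla-ansible $stage failed" >&2
            return 1
        }
    done
}
```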

9 Platform initialization

# init-runonce creates a demo environment automatically; creating the resources manually is recommended

source /etc/kolla/admin-openrc.sh

/usr/share/kolla-ansible/init-runonce

10 Dashboard

http://192.168.100.140

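# Log in as admin with the keystone_admin_password value (in this example run: yP2p1EaAThI9pA2asJ5tAh3JJ34MQNGCXfQyNasf)

# The value can be looked up as OS_PASSWORD in /etc/kolla/admin-openrc.sh

11 Using OpenStack

# Show OpenStack service status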

openstack service list

openstack compute service list

openstack volume service list

openstack network agent list

openstack hypervisor list
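To spot failed services quickly in the listings above, you can filter the compute service list for anything that is not up. Here is a sketch. down_services is hypothetical, and it relies on the `-f value -c` output options of the openstack client.

```shell
# Hypothetical filter: read "Binary Host State" lines, e.g. from
#   openstack compute service list -f value -c Binary -c Host -c State
# print every service whose state is not "up", and return 1 if any exist.
down_services() {
    awk '$3 != "up" { print; bad = 1 } END { exit bad }'
}
```

Usage: `openstack compute service list -f value -c Binary -c Host -c State | down_services`.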

# Reference manual

https://docs.openstack.org/python-openstackclient/train/cli/command-list.html

# Set the root password inside an image, and the default CirrOS login

virt-customize -a CentOS-7-x86_64-GenericCloud-2009.qcow2 --root-password password:abcd1234

cirros / gocubsgo

# Upload images

openstack image create cirros-0.5.2-x86_64 --file /tmp/cirros-0.5.2-x86_64-disk.img --disk-format qcow2 --container-format bare --public

openstack image create centos-7-x86_64 --file /tmp/CentOS-7-x86_64-GenericCloud-2009.qcow2 --disk-format qcow2 --container-format bare --public

# Create flavors

openstack flavor create --id 0 --vcpus 1 --ram 256 --disk 1 m1.nano

openstack flavor create --id 1 --vcpus 1 --ram 2048 --disk 20 m1.small

# Create the provider network

openstack network create --share --external --provider-physical-network physnet1 --provider-network-type flat provider

openstack subnet create --network provider --allocation-pool start=192.168.100.221,end=192.168.100.230 --dns-nameserver 114.114.114.114 --gateway 192.168.100.1 --subnet-range 192.168.100.0/24 provider

# Create the self-service network

openstack network create selfservice

openstack subnet create --network selfservice --dns-nameserver 114.114.114.114 --gateway 192.168.240.1 --subnet-range 192.168.240.0/24 selfservice

# Create a virtual router

openstack router create router

# Connect the internal and external networks

openstack router add subnet router selfservice

openstack router set router --external-gateway provider

openstack port list

# Create an SSH key pair

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

# Create security group rules

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

# List what has been created

openstack flavor list

openstack image list

openstack security group list

openstack port list

openstack network list

# Create instances (replace the net-id values with your own network IDs from openstack network list)

openstack server create --flavor m1.nano --image cirros-0.5.2-x86_64 --nic net-id=fe172dec-0522-472a-aed4-da70f6c269a6 --security-group default --key-name mykey provider-instance-01

openstack server create --flavor m1.nano --image cirros-0.5.2-x86_64 --nic net-id=c30c5057-607d-4736-acc9-31927cc9a22c --security-group default --key-name mykey selfservice-instance-01

# Assign a floating IP for external access (use an address reported by openstack floating ip list)

openstack floating ip create provider

openstack floating ip list

openstack server add floating ip selfservice-instance-01 192.168.100.227

openstack server list

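# Port mapping for a private cloud: DNAT port 1022 on the host's eth0 to port 22 of an internal address

# Add the rule

iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 1022 -j DNAT --to 192.168.122.231:22

# Delete the rule

iptables -t nat -D PREROUTING -i eth0 -p tcp --dport 1022 -j DNAT --to 192.168.122.231:22

# Notes

1. CirrOS 0.5 needs more than 128 MB of RAM.

2. A CentOS 7 instance fails with: ERROR nova.compute.manager [instance: e950095b-aa1e-47e5-bbb8-9942715eb1c3] 2021-10-15T02:17:33.897332Z qemu-kvm: cannot set up guest memory 'pc.ram': Cannot allocate memory

The host does not have enough memory. Temporary workaround (lasts until the next reboot): echo 1 > /proc/sys/vm/overcommit_memory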