SuperPixel

Gonzalez R. C. and Woods R. E. Digital Image Processing (Forth Edition).

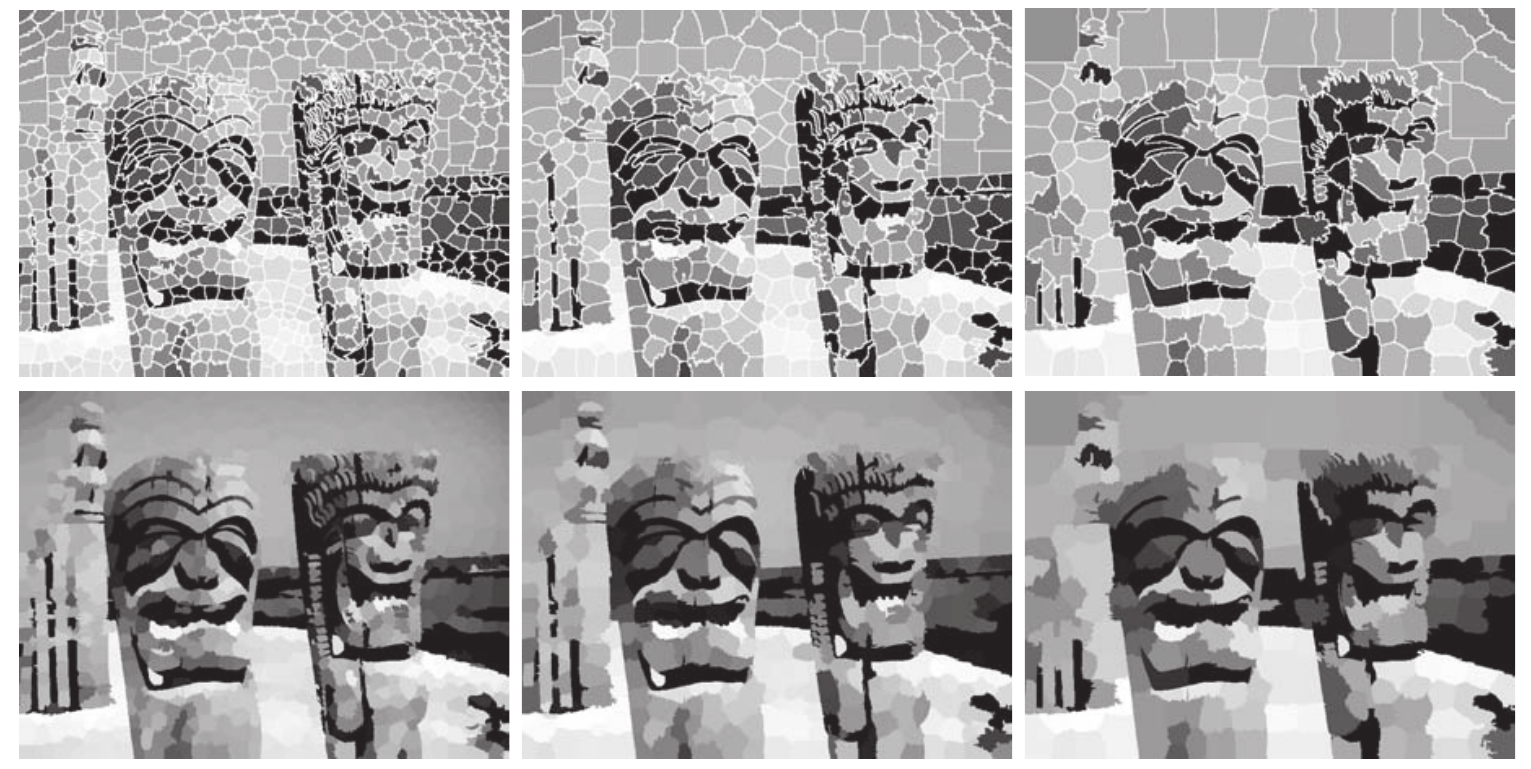

单个像素的意义其实很小, 于是有了superpixel的概念, 即一簇pixels的集合(且这堆pixels共用一个值), 这会导致图片有非常有趣的艺术风格(下图便是取不同的superpixel大小形成的效果, 有种抽象画的感觉?):

经过superpixel的预处理后, 图片可以变得更加容易提取edge, region, 毕竟superpixel已经率先提取过一次了.

SLIC Superpixel algorithm

SLIC (simple linear iterative clustering) 算法是基于k-means的一种聚类算法.

Given: 需要superpixels的个数\(n_{sp}\); 图片\(f(x, y) = (r, g, b), x = 1,2,\cdots M, y = 1, 2, \cdots, N\);

-

根据图片以及其位置信息生成数据:

\[\bm{z} = [r, g, b, x, y]^T, \]其中\(r, g, b\)是颜色编码, \(x, y\)是位置信息.

-

令\(n_{tp} = MN\)表示pixels的个数, 并计算网格大小:

\[s = [n_{tp} / n_{sp}]^{1/2}. \] -

将图片均匀分割为大小\(s\)的网格, 初始化superpixels的中心:

\[\bm{m}_i = [r_i, g_i, b_i, x_i, y_i]^T, i=1,2,\cdots, n_{sp}, \]为网格的中心. 或者, 为了防止噪声的影响, 选择中心\(3 \times 3\)领域内梯度最小的点.

-

将图片的每个pixel的类别标记为\(L(p) = -1\), 距离\(d(p) = \infty\);

-

重复下列步骤直到收敛:

-

对于每个像素点\(p\), 计算其与\(2s \times 2s\)邻域内的中心点\(\bm{m}_i\)之间的距离\(D_i(p)\), 倘若\(D_i(p) < d(p)\):

\[d(p) = D_i, L(p) = i. \] -

令\(C_i\)表示\(L(p) = i\)的像素点的集合, 更新superpixels的中心:

\[\bm{m}_i = \frac{1}{|C_i|} \sum_{\bm{z} \in C_i} \bm{z}, i=1, 2, \cdots, n_{sp}. \]

-

-

将以\(\bm{m}_i\)为中心的区域中的点的(r, g, b)设定为与\(\bm{m}_i\)一致.

距离函数的选择

倘若\(D\)采用的是和普通K-means一样的\(\|\cdot\|_2\)显然是不合适的, 因为\((r, g, b)\)和\((x, y)\)显然不是一个尺度的. 故采用如下的距离函数:

其中\(d_{cm}, d_{sm}\)分别是\(d_c, d_s\)可能取到的最大值, 相当于标准化了.

代码

import numpy as np

def _generate_data(img):

img = img.astype(np.float64)

if len(img.shape) == 2:

img = img[..., None]

M, N = img.shape[0], img.shape[1]

loc = np.stack(np.meshgrid(range(M), range(N), indexing='ij'), axis=-1)

classes = -np.ones((M, N))

distances = np.ones((M, N)) * np.float('inf')

data = np.concatenate((img, loc), axis=-1)

return data, classes, distances

def _generate_means(data, size: int):

M, N = data.shape[0], data.shape[1]

x_splits = np.arange(0, M + size, size)

y_splits = np.arange(0, N + size, size)

means = []

for i in range(len(x_splits) - 1):

for j in range(len(y_splits) - 1):

r1, r2 = x_splits[i:i+2]

c1, c2 = y_splits[j:j+2]

region = data[r1:r2, c1:c2]

means.append(region.mean(axis=(0, 1)))

return np.array(means)

def _unit_step(data, means, classes, distances, size, dis_fn):

M, N = data.shape[0], data.shape[1]

size = 2 * size

for i, m in enumerate(means):

# ..., x, y

x, y = np.round(m[-2:])

x, y = int(x), int(y)

xl, xr = max(0, x - size), min(x + size, M)

yb, yt = max(0, y - size), min(y + size, N)

p = data[xl:xr, yb:yt]

_dis = dis_fn(p, m)

indices = _dis < distances[xl:xr, yb:yt]

distances[xl:xr, yb:yt][indices] = _dis[indices]

classes[xl:xr, yb:yt][indices] = i

# update

for i in range(len(means)):

x_indices, y_indices = np.where(classes == i)

if len(x_indices) == 0:

continue

means[i] = data[x_indices, y_indices].mean(axis=0)

def slic(img, size, max_iters=10, compactness=10):

data, classes, distances = _generate_data(img)

means = _generate_means(data, size)

dsm = size

dcm = (img.max(axis=(0, 1)) - img.min(axis=(0, 1))) * compactness

dsc = np.concatenate((dcm, [dsm] * 2))

def dis_func(p, m):

_dis = ((p - m) / dsc) ** 2

return _dis.sum(axis=-1)

for _ in range(max_iters):

_unit_step(data, means, classes, distances, size, dis_func)

new_img = np.zeros_like(img, dtype=np.float)

for i, m in enumerate(means):

x_indices, y_indices = np.where(classes == i)

if len(x_indices) == 0:

continue

new_img[x_indices, y_indices] = m[:-2]

return new_img.astype(img.dtype)

from skimage import io, segmentation, filters

from freeplot.base import FreePlot

img = io.imread(r"Lenna.png")

ours = slic(img, size=50, compactness=0.5)

def mask2img(mask, img):

new_img = img.astype(np.float)

masks = np.unique(mask)

for m in masks:

x, y = np.where(mask == m)

mcolor = new_img[x, y].mean(axis=0)

new_img[x, y] = mcolor

return new_img.astype(img.dtype)

mask = segmentation.slic(img)

yours = mask2img(mask, img)

fp = FreePlot((1, 3), (10.3, 5), titles=('Lenna', 'ours', 'skimage.segmentation.slic'))

fp.imageplot(img, index=(0, 0))

fp.imageplot(ours, index=(0, 1))

fp.imageplot(yours, index=(0, 2))

fp.set_title()

fp.show()

skimage上实现的代码还有强制连通性, 我想这个是为什么它看起来这么流畅的原因. Compactness 越大, 聚类越倾向于空间信息, 所以越容易出现块状结构.