Data Augmentation

Heuristics-driven

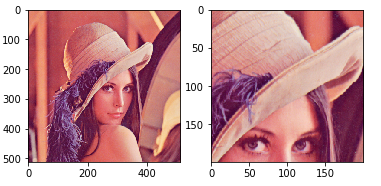

Pad and Crop

即:

import torchvision.transforms as T

T.Compose([

T.Pad(4, padding_mode='reflect'),

T.RandomCrop(32), # for CIFAR-10 and CIFAR-100

T.RandomHorizontalFlip(),

T.ToTensor()

])

注: T.RandomCrop(200)

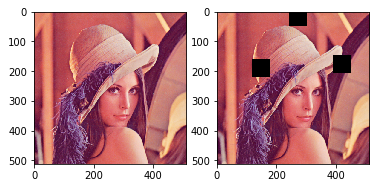

Cutout

形象点说, 就是给图片挖几个洞(不过作者的解释挺有趣的, 输入层的连续dropout, 这个想法有趣).

# uoguelph-mlrg cutout

# https://github.com/uoguelph-mlrg/Cutout/blob/master/util/cutout.py

# 原文代码

import torch

import numpy as np

class Cutout(object):

"""Randomly mask out one or more patches from an image.

Args:

n_holes (int): Number of patches to cut out of each image.

length (int): The length (in pixels) of each square patch.

"""

def __init__(self, n_holes, length):

self.n_holes = n_holes

self.length = length

def __call__(self, img):

"""

Args:

img (Tensor): Tensor image of size (C, H, W).

Returns:

Tensor: Image with n_holes of dimension length x length cut out of it.

"""

h = img.size(1)

w = img.size(2)

mask = np.ones((h, w), np.float32)

for n in range(self.n_holes):

y = np.random.randint(h)

x = np.random.randint(w)

y1 = np.clip(y - self.length // 2, 0, h)

y2 = np.clip(y + self.length // 2, 0, h)

x1 = np.clip(x - self.length // 2, 0, w)

x2 = np.clip(x + self.length // 2, 0, w)

mask[y1: y2, x1: x2] = 0.

mask = torch.from_numpy(mask)

mask = mask.expand_as(img)

img = img * mask

return img

MixUp

MixUp 就是将两个图片按照一定比例相加

\[\tilde{x} = \lambda x_A + (1 - \lambda) x_B,

\]

\(\lambda \sim \mathrm{Beta}(\alpha, \alpha), \alpha \in (0, +\infty)\), 同时

\[\tilde{y} = \lambda y_A + (1 - \lambda) y_B.

\]

CutMix

CutMix 就是将一个图片裁剪掉一块, 然后用另一张图片的对应位置填补:

\[\tilde{x} = M \circ x_A + (1 - M) \circ x_B,

\]

其中\(M \in \{0, 1\}^{H \times W}\) 是掩码矩阵, 按照如下方式生成:

\[r_x \sim U[0, W], \: r_y = U[0, H], \\

r_w = W\sqrt{1 - \lambda} , \: r_h = H\sqrt{1 - \lambda},

\]

得到一个bounding box, 在其中的\(M_{ij}= 0\), 否则为\(1\), \(\lambda\)控制框的大小的:

\[ \frac{r_w r_h}{HW} = 1 - \lambda.

\]

此时, 标签需要发生相应变化:

\[\tilde{y} = \lambda y_{A} + (1 - \lambda)y_B,

\]

这里标签是one-hot形式(或者概率向量).

AugMix

Data-driven

AutoAugment

根据数据集选择合理的augmentations策略.

RandAugment

从\(K\)中augmentations中随机选择\(N\)(replace=True), 每个的magnitude都是\(M\).

其中\(M, N\)是通过网格搜索找到的(太粗暴了吧)

DeepAugment

假设\(f(\cdot;\theta)\)是一个Image-to-Image的网络, 则DeepAugment是指对\(\theta\)添加扰动, 比如扰动权重, 改变激活函数等等.

和这个思想类似的还有AdversarialAugment.