TriggerBN ++

motivation

用两个BN(一个用于干净样本, 一个用于对抗样本), 结果当使用的时候, 精度能够上升, 而使用的时候, 也有相当的鲁棒性. 原文采用的是

来训练(这里输出的是概率向量而非logits), 试试看别的组合方式, 比如

settings

| Attribute | Value |

|---|---|

| attack | pgd-linf |

| batch_size | 128 |

| beta1 | 0.9 |

| beta2 | 0.999 |

| dataset | cifar10 |

| description | AT=0.5=default-sgd-0.1=pgd-linf-0.0314-0.25-10=128=default |

| epochs | 100 |

| epsilon | 0.03137254901960784 |

| learning_policy | [50, 75] x 0.1 |

| leverage | 0.5 |

| loss | cross_entropy |

| lr | 0.1 |

| model | resnet32 |

| momentum | 0.9 |

| optimizer | sgd |

| progress | False |

| resume | False |

| seed | 1 |

| stats_log | False |

| steps | 10 |

| stepsize | 0.25 |

| transform | default |

| weight_decay | 0.0005 |

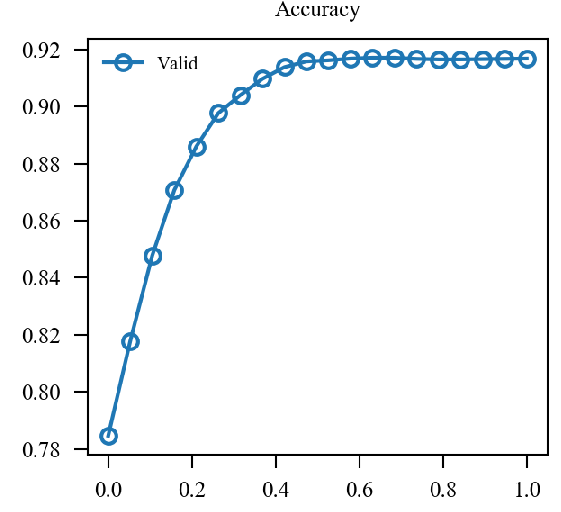

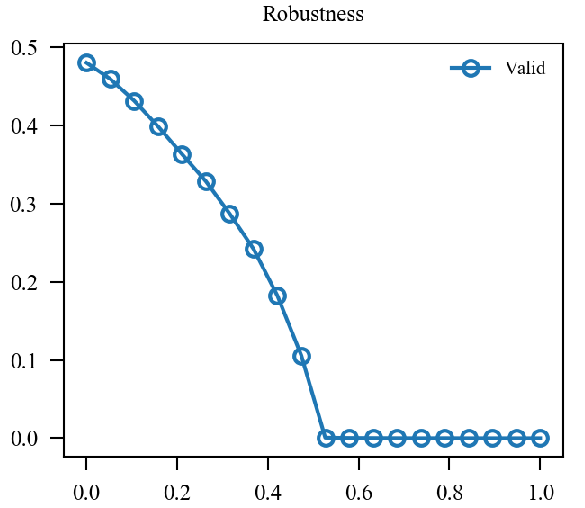

results

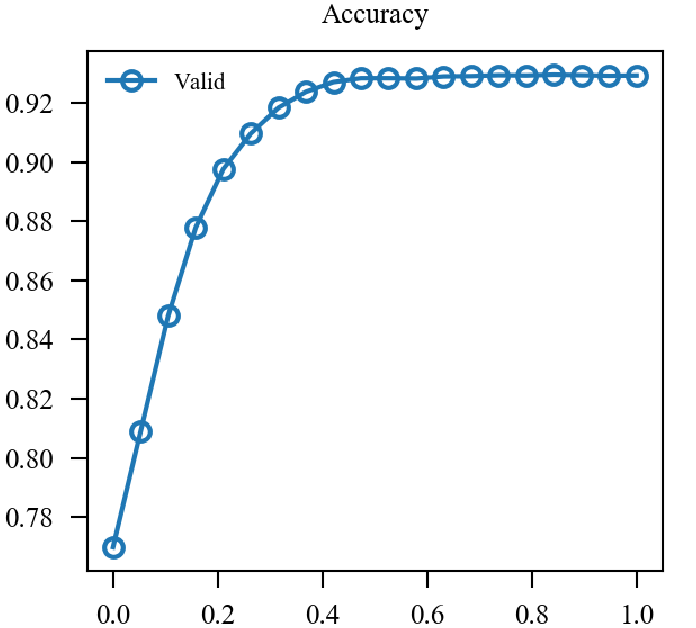

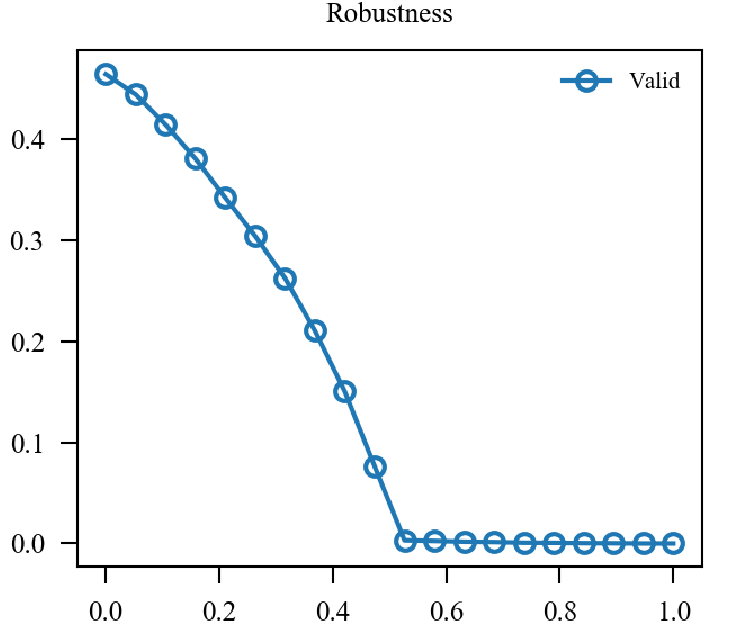

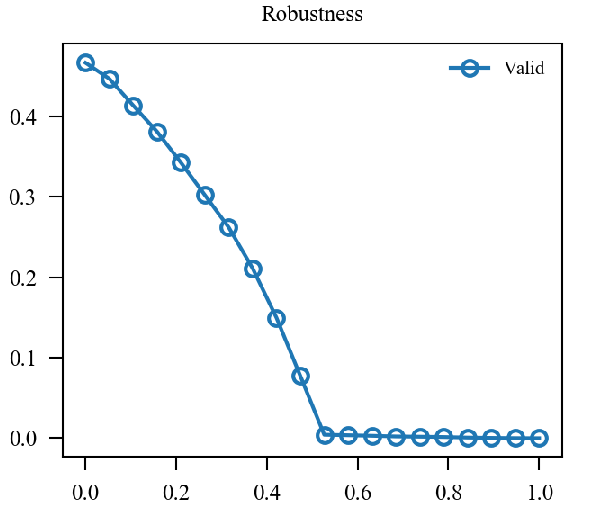

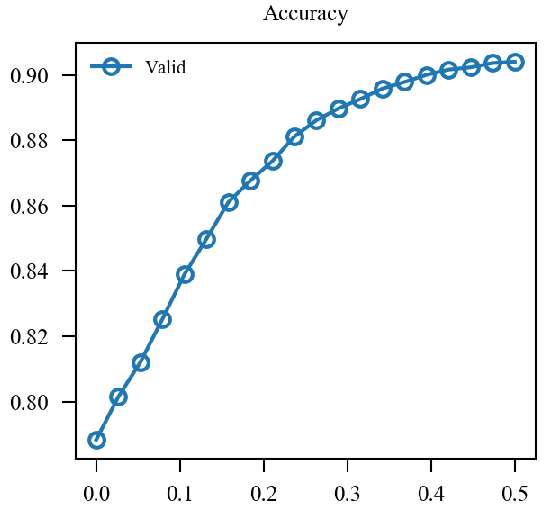

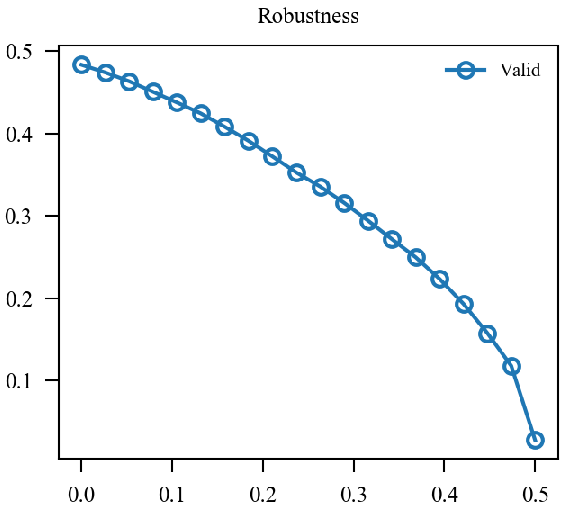

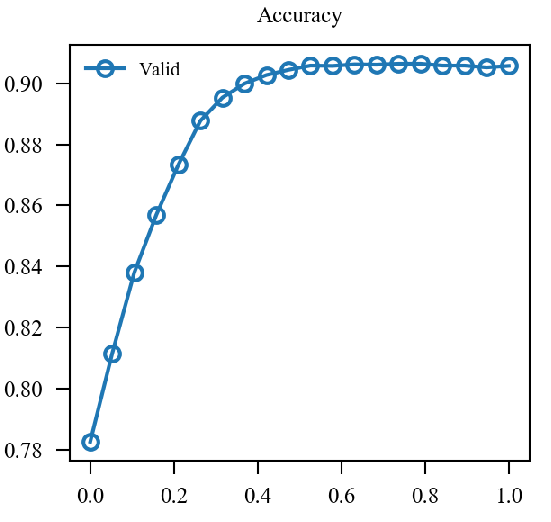

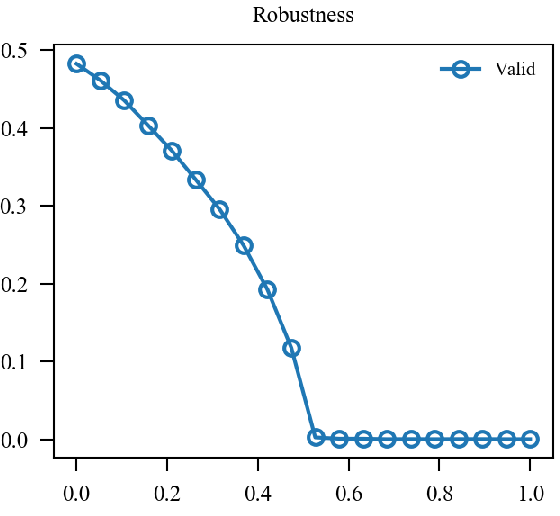

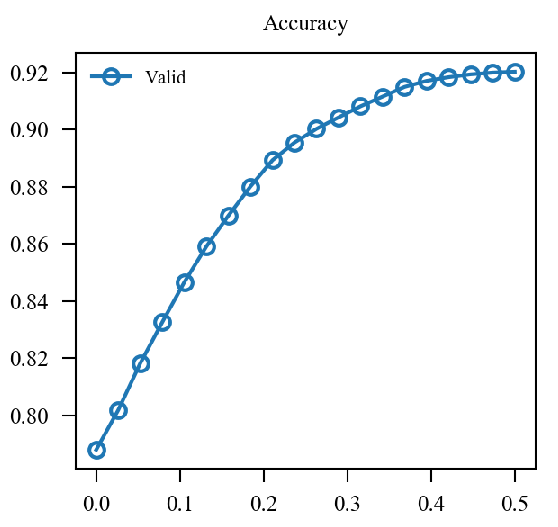

x轴为从变化到.

| Accuracy | Robustness | |

|---|---|---|

|

|

|

|

|

|

| 48.350 |  |

|

| 48.270 |  |

|

| 48.310 |  |

|

| 47.960 |  |

|

似乎原来的形式情况更好一点.

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 记一次.NET内存居高不下排查解决与启示

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY

· 【自荐】一款简洁、开源的在线白板工具 Drawnix