Web Scraper: Qiushibaike (Regular Expressions)

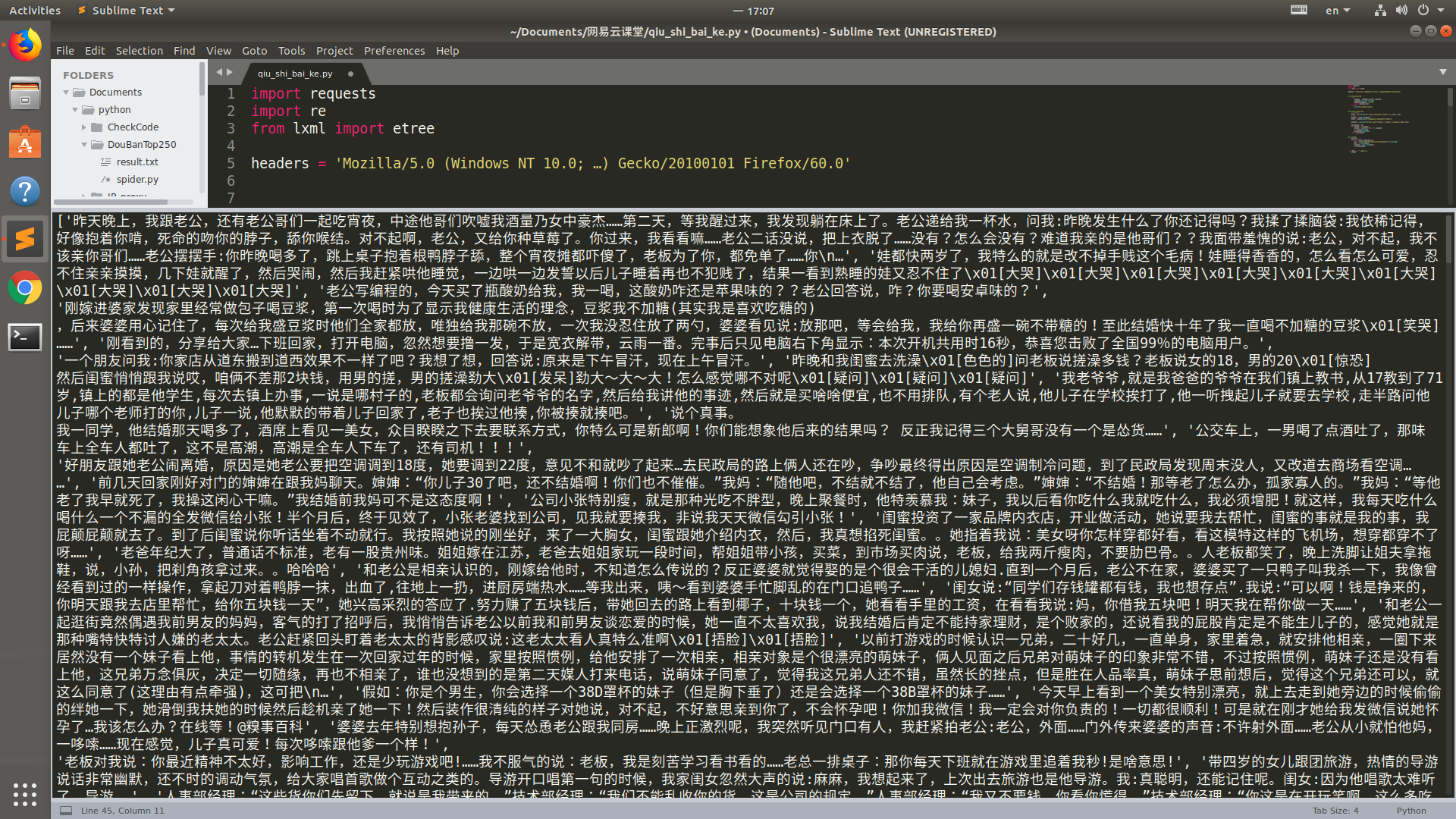

    import re

    import requests
    from lxml import etree

    # requests expects headers as a dict, not a bare User-Agent string.
    # The '…' is elided in the original; substitute the full string before running.
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; …) Gecko/20100101 Firefox/60.0'
    }


    def get_html(url):
        """Fetch a page and return its text, or None if the request fails."""
        try:
            # headers must be passed by keyword; the second positional
            # argument of requests.get() is params
            response = requests.get(url, headers=headers)
            response.raise_for_status()
            response.encoding = 'utf-8'
            return response.text
        except requests.RequestException:
            print('get_html() failed')
            return None


    def parse_html(html):
        # Regular expression
        hrefs = re.findall(r'<a class="contentHerf" href=(.*?)', html, re.S)
        # XPath (overwrites the regex result above)
        element = etree.HTML(html)
        hrefs = element.xpath('//a[@class="contentHerf"]/@href')

        contents = re.findall(r'<div class="content".*?<span>(.*?)</span>', html, re.S)

        new_content = []
        for content in contents:
            # Strip any remaining tags, then surrounding whitespace
            content = re.sub('<.*?>', '', content)
            x = content.strip()
            new_content.append(x)
        return new_content


    def main():
        page_num = 13
        for i in range(1, page_num + 1):
            url = 'https://www.qiushibaike.com/text/page/{}/'.format(i)
            html = get_html(url)
            if html is None:
                continue  # skip pages that could not be fetched
            contents = parse_html(html)
            print(contents)


    if __name__ == '__main__':
        main()

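    hrefs = re.findall(r'<a class="contentHerf" href=(.*?)', html, re.S)

The regular expression for the links looks fine to me, but it doesn't match anything. Odd. I'll leave it for now, since the XPath version does match.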
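A possible explanation, sketched under assumptions: a lazy group such as `(.*?)` at the very end of a pattern is satisfied by zero characters, so even when the pattern matches, the capture is an empty string. The pattern also requires `class` to sit directly before `href`, which the live page may not do (XPath does not care about attribute order, which would explain why it works). The markup in `SAMPLE_HTML` below is hypothetical, not copied from the site, and `extract_hrefs` is an illustrative helper rather than part of the original script.

```python
import re

# Hypothetical markup, modelled on the class name used in the scraper above.
# The real page may order or quote its attributes differently.
SAMPLE_HTML = '''
<a class="contentHerf" href="/article/1001" target="_blank">first</a>
<a href="/article/1002" target="_blank" class="contentHerf">second</a>
'''

# Original pattern: the lazy group is the last thing in the pattern,
# so it is satisfied by matching zero characters.
ORIGINAL = r'<a class="contentHerf" href=(.*?)'

# Closing the group with a quote forces it to consume the URL,
# but class must still come immediately before href.
QUOTED = r'<a class="contentHerf" href="(.*?)"'

# Order-tolerant variant: find each <a ...> tag carrying the class,
# then pull href out of that tag's attribute text.
TAG = re.compile(r'<a\b[^>]*\bclass="contentHerf"[^>]*>')
HREF = re.compile(r'\bhref="([^"]*)"')


def extract_hrefs(html):
    """Return href values of <a class="contentHerf"> tags, in any attribute order."""
    hrefs = []
    for tag in TAG.findall(html):
        match = HREF.search(tag)
        if match:
            hrefs.append(match.group(1))
    return hrefs


original_result = re.findall(ORIGINAL, SAMPLE_HTML, re.S)
quoted_result = re.findall(QUOTED, SAMPLE_HTML, re.S)
tolerant_result = extract_hrefs(SAMPLE_HTML)

print(original_result)  # a single empty string: the group captured nothing
print(quoted_result)    # only the link whose class precedes href
print(tolerant_result)  # both links
```

For real pages an HTML parser, as in the XPath line of the script, is usually the more robust choice; the regex variants here are only meant to show where the original pattern goes wrong.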

Output