Data Simulation

1. Generating Log Data

1) Prepare the data. Download link: https://pan.baidu.com/s/1aaf2-Fuchi3v-0cJqLw4EQ (extraction code: sbim)

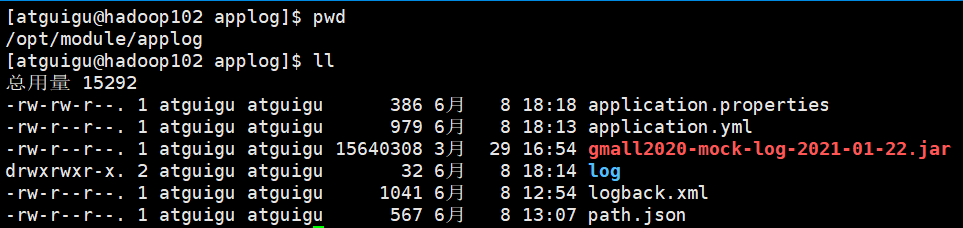

2) Create an applog directory under /opt/module/ and upload application.properties, application.yml, gmall2020-mock-log-2021-01-22.jar, path.json, and logback.xml to it

3) Run the jar (this generates the data for 2021-06-08, the first day)

java -jar gmall2020-mock-log-2021-01-22.jar

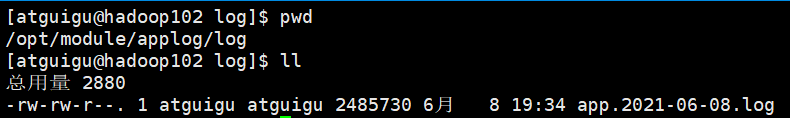

4) Inspect the generated data (copy one of the timestamps and check which date it falls on)

tail -f log/app.2021-06-08.log

date -d @1623060886
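`date -d @...` expects epoch seconds. If the value copied from the log is a 13-digit millisecond timestamp (an assumption about the log format, not something this section states), the last three digits have to be dropped first. A minimal sketch, assuming GNU `date`; the helper name `ts_to_date` and the sample value are made up for illustration:

```shell
#!/bin/sh
# ts_to_date: print the calendar date (YYYY-MM-DD) for an epoch timestamp.
# Accepts seconds (10 digits) or milliseconds (13 digits). Assumes GNU date.
ts_to_date() {
    ts=$1
    # Drop the last three digits when the value looks like milliseconds.
    if [ "${#ts}" -ge 13 ]; then
        ts=${ts%???}
    fi
    date -d "@$ts" +%F
}

# Illustrative value only: a millisecond timestamp, printed in the local time zone.
ts_to_date 1623110400000
```

The printed date depends on the machine's time zone, so run it on the same host that produced the log.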

5) Distribute the applog directory

xsync /opt/module/applog/

6) Use Flume to collect this data into HDFS

vim /opt/module/flume/job/file_to_kafka.conf

    # 1. Name the agent's source and channel
    a1.sources = r1
    a1.channels = c1

    # 2. Describe the source
    a1.sources.r1.type = TAILDIR
    # File that records read positions so collection can resume after a restart
    a1.sources.r1.positionFile = /opt/module/flume/position.json
    # Define the monitored file groups
    a1.sources.r1.filegroups = f1
    # Files monitored by file group f1
    a1.sources.r1.filegroups.f1 = /opt/module/applog/log/app.*
    # Number of events the source collects per batch
    a1.sources.r1.batchSize = 100

    # 3. Describe the interceptor (filters out non-JSON data)
    # Interceptor name
    a1.sources.r1.interceptors = i1
    # Interceptor type
    a1.sources.r1.interceptors.i1.type = com.yuange.flume.interceptor.ETLInterceptor$Builder

    # 4. Describe the channel
    a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel
    # Kafka cluster addresses
    a1.channels.c1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092
    # Name of the topic to write to
    a1.channels.c1.kafka.topic = applog
    # Whether to write data to Kafka in Flume Event format
    a1.channels.c1.parseAsFlumeEvent = false

    # 5. Bind source -> channel
    a1.sources.r1.channels = c1
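The source of `ETLInterceptor` is not shown in this section; the config comment only says it filters out non-JSON data. As a rough shell approximation of that idea (not the interceptor's actual logic, which presumably parses the JSON in Java), the sketch below keeps only lines that start with `{` and end with `}`. The function names are hypothetical:

```shell
#!/bin/sh
# is_json_like: succeed when a line starts with '{' and ends with '}'.
# This is a crude shape check, not a real JSON parse.
is_json_like() {
    case $1 in
        "{"*"}") return 0 ;;
        *) return 1 ;;
    esac
}

# keep_json_lines: read lines on stdin and print only those that pass the check.
keep_json_lines() {
    while IFS= read -r line; do
        if is_json_like "$line"; then
            printf '%s\n' "$line"
        fi
    done
}
```

For example, `keep_json_lines < /opt/module/applog/log/app.2021-06-08.log | head` gives a quick look at the lines such a filter would let through.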

vim /opt/module/flume/job/kafka-to-hdfs.conf

    # 1. Name the agent's source, channel, and sink
    a1.sources = r1
    a1.channels = c1
    a1.sinks = k1

    # 2. Describe the source
    a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource
    # Kafka cluster addresses
    a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092
    # Topic to read from
    a1.sources.r1.kafka.topics = applog
    # Consumer group id
    a1.sources.r1.kafka.consumer.group.id = g1
    # Messages the source pulls from Kafka per batch: batchSize <= transaction capacity <= channel capacity
    a1.sources.r1.batchSize = 100
    # Where to start when this consumer group reads the topic for the first time
    a1.sources.r1.kafka.consumer.auto.offset.reset = earliest

    # 3. Describe the interceptor
    a1.sources.r1.interceptors = i1
    a1.sources.r1.interceptors.i1.type = com.yuange.flume.interceptor.MyTimestampInterceptor$Builder

    # 4. Describe the channel
    a1.channels.c1.type = file
    # Local disk directory where event data is stored
    a1.channels.c1.dataDirs = /opt/module/flume/datas
    # Directory for the checkpoint of the channel's in-memory event pointers
    a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint
    # Interval between checkpoint writes (milliseconds)
    a1.channels.c1.checkpointInterval = 5000
    # Channel capacity
    a1.channels.c1.capacity = 1000000

    # 5. Describe the sink
    a1.sinks.k1.type = hdfs
    # Directory the data is stored in
    a1.sinks.k1.hdfs.path = hdfs://hadoop102:8020/origin_data/gmall/log/topic_log/%Y-%m-%d
    # File name prefix
    a1.sinks.k1.hdfs.filePrefix = log-
    # Time interval for rolling to a new file (seconds)
    a1.sinks.k1.hdfs.rollInterval = 30
    # File size that triggers a roll (bytes)
    a1.sinks.k1.hdfs.rollSize = 132120576
    # Number of events after which to roll to a new file (0 disables it; usually disabled)
    a1.sinks.k1.hdfs.rollCount = 0
    # Events the sink takes from the channel per batch
    a1.sinks.k1.hdfs.batchSize = 100
    # Compression codec used when writing to HDFS
    a1.sinks.k1.hdfs.codeC = lzop
    # File type: SequenceFile (serialized file), DataStream (plain text), CompressedStream (compressed file)
    a1.sinks.k1.hdfs.fileType = CompressedStream
    #a1.sinks.k1.hdfs.fileType = DataStream

    # 6. Bind source -> channel -> sink
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
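The config comment notes that batchSize must not exceed the transaction capacity, which in turn must not exceed the channel capacity. The transaction capacity is not set in this file, so the sketch below only checks the outer relation, batchSize against capacity. It assumes one `key = value` pair per line; `get_prop` and `check_batch_fits` are hypothetical helper names, not part of Flume:

```shell
#!/bin/sh
# get_prop FILE KEY: print the value of "KEY = value" from a properties file.
get_prop() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }                       # skip comment lines
        index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)        # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)        # trim the value
            if (k == key) { print v; exit }
        }' "$1"
}

# check_batch_fits FILE: succeed when the source batch size is not larger
# than the channel capacity (agent a1, source r1, channel c1 as in the config).
check_batch_fits() {
    batch=$(get_prop "$1" a1.sources.r1.batchSize)
    cap=$(get_prop "$1" a1.channels.c1.capacity)
    [ -n "$batch" ] && [ -n "$cap" ] && [ "$batch" -le "$cap" ]
}
```

Usage: `check_batch_fits /opt/module/flume/job/kafka-to-hdfs.conf && echo ok`.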

vim /home/atguigu/bin/flume-first.sh

    #!/bin/bash
    # 1. Check that an argument was passed
    if [ $# -lt 1 ]
    then
        echo "An argument is required..."
        exit
    fi

    # 2. Dispatch on the argument
    case $1 in
    "start")
        for host in hadoop102 hadoop103
        do
            echo "--------------------$host: starting Flume collection---------------------------"
            ssh $host "nohup /opt/module/flume/bin/flume-ng agent -n a1 -c /opt/module/flume/conf/ -f /opt/module/flume/job/file_to_kafka.conf >/opt/module/flume/logs.txt 2>&1 &"
        done
    ;;
    "stop")
        for host in hadoop102 hadoop103
        do
            echo "--------------------$host: stopping Flume collection-----------------------"
            ssh $host "ps -ef |grep file_to_kafka |grep -v grep |awk '{print \$2}'| xargs -n1 kill"
        done
    ;;
    *)
        echo "Invalid argument....."
    ;;
    esac

vim /home/atguigu/bin/flume-second.sh

    #!/bin/bash
    if [ $# -lt 1 ]
    then
        echo "Wrong number of arguments!!!"
        exit
    fi

    case $1 in
    "start")
        for i in hadoop104
        do
            echo " --------Starting consumer Flume on $i-------"
            ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf /opt/module/flume/conf/ --conf-file /opt/module/flume/job/kafka-to-hdfs.conf --name a1 >/opt/module/flume/logs.txt 2>&1 &"
        done
    ;;
    "stop")
        for i in hadoop104
        do
            echo " --------Stopping consumer Flume on $i-------"
            ssh $i "ps -ef | grep kafka-to-hdfs | grep -v grep |awk '{print \$2}' | xargs -n1 kill"
        done
    ;;
    *)
        echo "Invalid argument format!!!"
        exit
    ;;
    esac
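Both stop branches find the agent's PID with the same pipeline: filter `ps -ef` by the config file name, drop the `grep` process itself, and print the second column. Inside the `ssh "..."` string the `$2` is written as `\$2` so that it reaches awk on the remote host instead of being expanded locally. The sketch below runs that pipeline on canned `ps`-style text so it can be tried without a running agent; `pids_for` and the sample process lines are made up for illustration:

```shell
#!/bin/sh
# pids_for PATTERN: read `ps -ef`-style lines on stdin and print the PID
# column (field 2) of lines that mention PATTERN, ignoring grep itself.
pids_for() {
    grep -- "$1" | grep -v grep | awk '{print $2}'
}

# Canned sample input: one matching agent, the grep process, one unrelated job.
sample='atguigu   4321     1  0 10:00 ?     00:00:05 java -f /opt/module/flume/job/file_to_kafka.conf
atguigu   4400  4399  0 10:01 pts/0 00:00:00 grep file_to_kafka
atguigu   4500     1  0 10:02 ?     00:00:01 java -jar other.jar'

printf '%s\n' "$sample" | pids_for file_to_kafka
```

Only the agent's PID comes out; the grep line and the unrelated process are dropped.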

7) Start the Flume collection scripts (distribute them first)

flume-first.sh start

flume-second.sh start

8) Write scripts that generate data in one step

(1) Create lg.sh in the bin directory under the home directory

vim lg.sh

    #!/bin/bash
    # When invoked, runs the log generator on hadoop102 and hadoop103
    for i in hadoop102 hadoop103
    do
        ssh $i "cd /opt/module/applog ; java -jar gmall2020-mock-log-2021-01-22.jar > /dev/null 2>&1 &"
    done

(2) Create makeLogData.sh in the bin directory under the home directory

vim makeLogData.sh

    #!/bin/bash
    # Usage: makeLogData.sh <start date> <number of days to generate, default 10>
    if [ -n "$1" ]
    then
        startdate=$1
    else
        # The start date is required; there is no default
        echo "Please pass a start date!"
        exit
    fi

    if [ -n "$2" ] ;then
        days=$2
    else
        days=10
    fi

    # Regenerate application.properties for the given mock date and distribute it
    function createLogConfs()
    {
        logContent="logging.config=./logback.xml\n
        mock.date=$1\n
        mock.type=log\n
        mock.startup.count=200\n
        mock.max.mid=500000\n
        mock.max.uid=500\n
        mock.max.sku-id=35\n
        mock.page.during-time-ms=20000\n
        mock.error.rate=3\n
        mock.log.sleep=10\n
        mock.detail.source-type-rate=40:25:15:20\n
        mock.if_get_coupon_rate=75\n
        mock.max.coupon-id=3\n
        mock.search.keyword=图书,小米,iphone11,电视,口红,ps5,苹果手机,小米盒子\n"
        echo -e $logContent> /opt/module/applog/application.properties
        xsync /opt/module/applog/application.properties
    }

    for((i=0;i<$days;i++))
    do
        do_date=`date -d "$startdate $i day" +'%F'`
        echo ------------------Generating data for date: $do_date--------------------
        createLogConfs $do_date
        lg.sh
        sleep 5s
    done

    echo "All done!"
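makeLogData.sh rebuilds application.properties for each date by echoing an escaped string. A here-document is one alternative way to write the same kind of file and avoids the `\n` escapes; this is a swapped-in technique for illustration, not what the script above does. The sketch writes only a few of the keys, and the function name `write_log_conf` is hypothetical:

```shell
#!/bin/sh
# write_log_conf DATE FILE: write a trimmed-down application.properties
# for the given mock date. Only a subset of the keys is shown.
write_log_conf() {
    cat > "$2" <<EOF
logging.config=./logback.xml
mock.date=$1
mock.type=log
mock.startup.count=200
EOF
}

# Example: write to a temporary file and show the result.
tmp=$(mktemp)
write_log_conf 2021-06-09 "$tmp"
cat "$tmp"
```

Because the here-document delimiter is unquoted, `$1` is expanded when the file is written, which is what puts the date into `mock.date`.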

9) Make the scripts executable and distribute them

chmod +x lg.sh makeLogData.sh

xsync lg.sh makeLogData.sh

10) Run the data-generation script (simulates the daily data for 2021-06-09 through 2021-06-10)

makeLogData.sh 2021-06-09 2

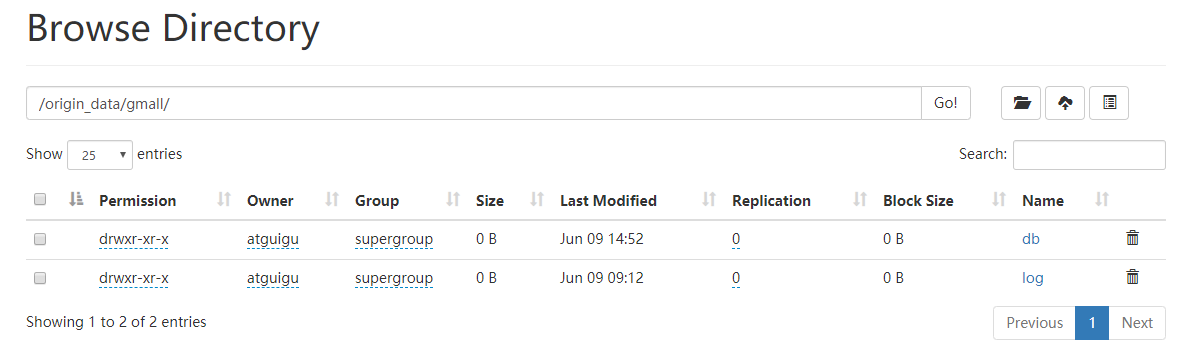

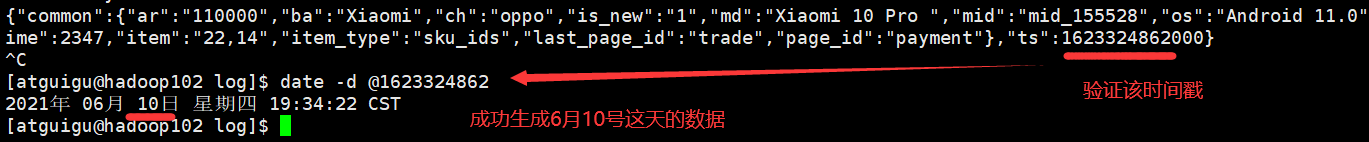

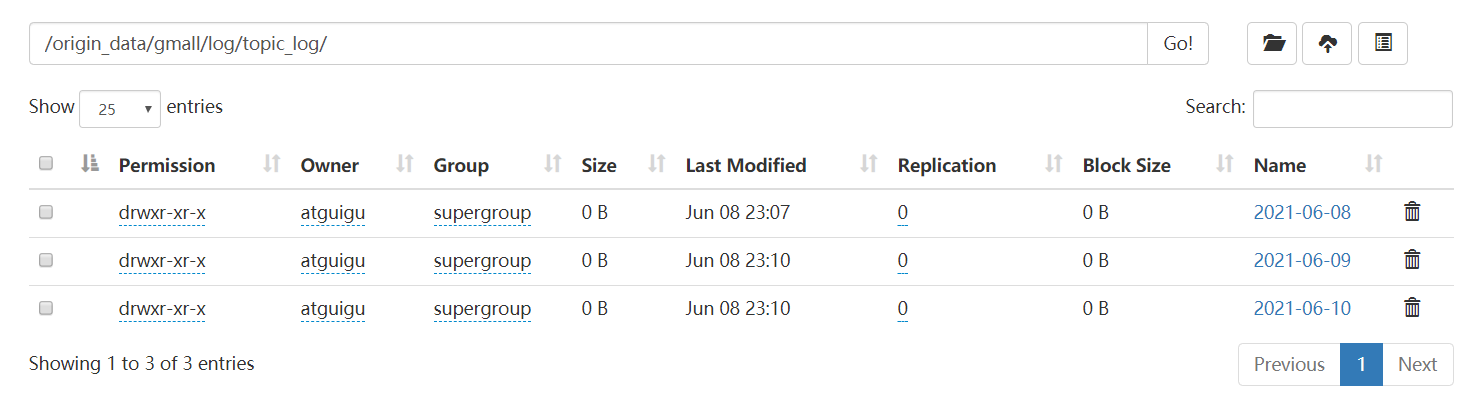

11) Verify the data

tail -f app.2021-06-08.log

date -d @1623324862

2. Generating Business Data

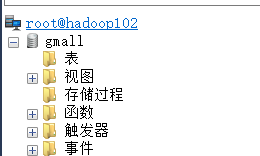

1) Connect to MySQL and create the gmall database (choose utf8 as the character set and a general collation such as utf8_general_ci)

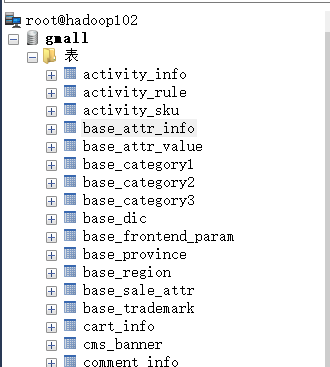

2) Once the database exists, run the gmall SQL script. After it finishes, refresh the database and the tables should be there

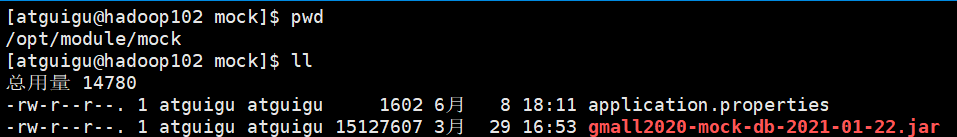

3) Create a mock directory under /opt/module/, then upload application.properties and gmall2020-mock-db-2021-01-22.jar to it

mkdir /opt/module/mock

vim application.properties

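    logging.level.root=info
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.datasource.url=jdbc:mysql://hadoop102:3306/gmall?characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8
    spring.datasource.username=root
    spring.datasource.password=root123
    logging.pattern.console=%m%n
    mybatis-plus.global-config.db-config.field-strategy=not_null
    # Business date
    mock.date=2021-06-08
    # When generating data for the first time, set the two reset options below to 1
    # Whether to reset
    mock.clear=0
    # Whether to reset users
    mock.clear.user=0
    # Number of new users to generate
    mock.user.count=100
    # Percentage of male users
    mock.user.male-rate=20
    # Probability that user data changes
    mock.user.update-rate:20
    # Percentage of favorites that are cancelled
    mock.favor.cancel-rate=10
    # Number of favorites
    mock.favor.count=100
    # Probability that each user adds items to the cart
    mock.cart.user-rate=50
    # Maximum number of distinct products a user adds to the cart at a time
    mock.cart.max-sku-count=8
    # Maximum quantity per product
    mock.cart.max-sku-num=3
    # Cart source: user search, product promotion, smart recommendation, promotional activity
    mock.cart.source-type-rate=60:20:10:10
    # Percentage of users who place an order
    mock.order.user-rate=50
    # Percentage of cart items the user buys
    mock.order.sku-rate=50
    # Whether to take part in activities
    mock.order.join-activity=1
    # Whether to use coupons
    mock.order.use-coupon=1
    # Number of users who receive coupons
    mock.coupon.user-count=100
    # Payment percentage
    mock.payment.rate=70
    # Payment method: Alipay : WeChat : UnionPay
    mock.payment.payment-type=30:60:10
    # Review ratio: good : average : bad : automatic
    mock.comment.appraise-rate=30:10:10:50
    # Refund reason ratio: quality issue, description does not match the product, out of stock, wrong size, ordered by mistake, changed mind, other
    mock.refund.reason-rate=30:10:20:5:15:5:5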

4) Run the jar to generate data into MySQL (on the first run, set mock.clear=1 and mock.clear.user=1 in the configuration file)

java -jar gmall2020-mock-db-2021-01-22.jar
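For the first run, the two reset switches can be flipped by hand in vim, or with a small helper like the one below. This is a minimal sketch assuming GNU `sed -i` and `key=value` lines; `set_prop` is a hypothetical name, and the helper is only suitable for simple values such as `0` and `1` (values containing `/` or `&` would need escaping):

```shell
#!/bin/sh
# set_prop FILE KEY VALUE: rewrite the line "KEY=..." in place.
# Assumes GNU sed (-i without a suffix argument).
set_prop() {
    # Escape dots in the key so they match literally, which keeps
    # "mock.clear" from matching anything but that exact key.
    key=$(printf '%s' "$2" | sed 's/\./\\./g')
    sed -i "s/^${key}=.*/$2=$3/" "$1"
}

# Example on a scratch copy rather than the real file.
tmp=$(mktemp)
printf '%s\n' 'mock.date=2021-06-08' 'mock.clear=0' 'mock.clear.user=0' > "$tmp"
set_prop "$tmp" mock.clear 1
set_prop "$tmp" mock.clear.user 1
cat "$tmp"
```

After the first run, the same helper can set both keys back to 0 so later runs do not wipe the data.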

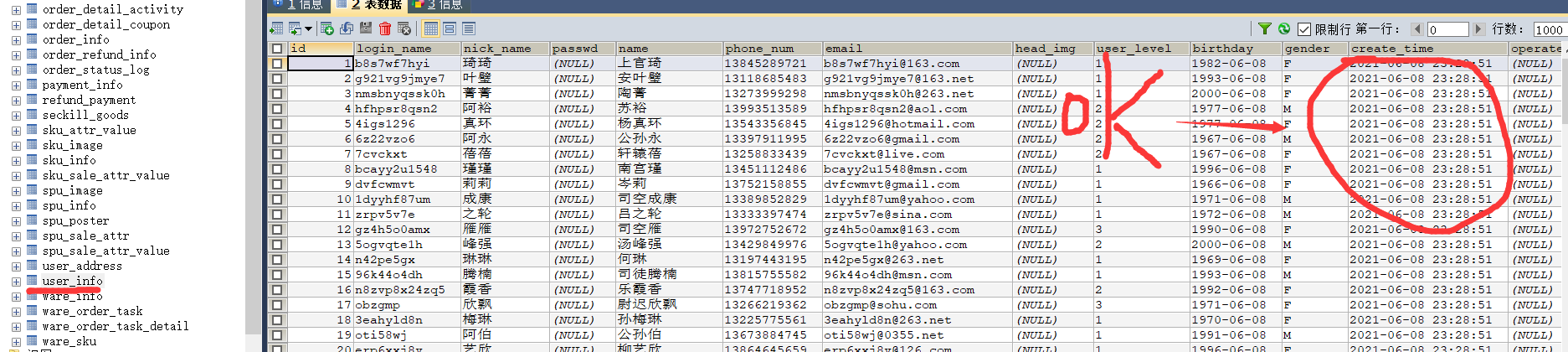

5) Check the gmall database

6) Install Sqoop

(1) Download link: http://mirrors.hust.edu.cn/apache/sqoop/1.4.6/

(2) Upload the package sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz to /opt/software on hadoop102

(3) Extract the Sqoop package to the target directory, for example:

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C /opt/module/

(4) Go to /opt/module/ and rename sqoop-1.4.6.bin__hadoop-2.0.4-alpha

mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha/ sqoop

(5) Go to /opt/module/sqoop/conf and rename the configuration template

mv sqoop-env-template.sh sqoop-env.sh

(6) Edit the configuration file

vim sqoop-env.sh

    # Add the following
    export HADOOP_COMMON_HOME=/opt/module/hadoop-3.1.3
    export HADOOP_MAPRED_HOME=/opt/module/hadoop-3.1.3
    export HIVE_HOME=/opt/module/hive
    export ZOOKEEPER_HOME=/opt/module/zookeeper
    export ZOOCFGDIR=/opt/module/zookeeper/conf

(7) Copy the JDBC driver into Sqoop's lib directory

cp /opt/software/mysql-connector-java-5.1.27-bin.jar /opt/module/sqoop/lib/

(8) Verify that Sqoop is configured correctly by running one of its commands

bin/sqoop help

(9) Test whether Sqoop can connect to the database

bin/sqoop list-databases --connect jdbc:mysql://hadoop102:3306/ --username root --password root123

7) Write scripts that generate data in one step

(1) Create makeDBData.sh in the bin directory under the user's home directory

vim makeDBData.sh

    #!/bin/bash
    if [ -n "$1" ]
    then
        startdate=$1
    else
        # The start date is required; there is no default
        echo "Please pass a start date!"
        exit
    fi

    if [ -n "$2" ] ;then
        days=$2
    else
        days=10
    fi

    # Generate application.properties dynamically for the given mock date
    function createconfs()
    {
        content="
        logging.level.root=info\n
        spring.datasource.driver-class-name=com.mysql.jdbc.Driver\n
        spring.datasource.url=jdbc:mysql://hadoop102:3306/gmall?characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8\n
        spring.datasource.username=root\n
        spring.datasource.password=root123\n
        logging.pattern.console=%m%n\n
        mybatis-plus.global-config.db-config.field-strategy=not_null\n
        mock.date=$1\n
        mock.clear=0\n
        mock.clear.user=0\n
        mock.user.count=100\n
        mock.user.male-rate=20\n
        mock.user.update-rate:20\n
        mock.favor.cancel-rate=10\n
        mock.favor.count=100\n
        mock.cart.user-rate=50\n
        mock.cart.max-sku-count=8\n
        mock.cart.max-sku-num=3\n
        mock.cart.source-type-rate=60:20:10:10\n
        mock.order.user-rate=50\n
        mock.order.sku-rate=50\n
        mock.order.join-activity=1\n
        mock.order.use-coupon=1\n
        mock.coupon.user-count=100\n
        mock.payment.rate=70\n
        mock.payment.payment-type=30:60:10\n
        mock.comment.appraise-rate=30:10:10:50\n
        mock.refund.reason-rate=30:10:20:5:15:5:5\n
        "
        echo -e $content> /opt/module/mock/application.properties
    }

    for((i=0;i<$days;i++))
    do
        do_date=`date -d "$startdate $i day" +'%F'`
        echo ------------------Generating data for date: $do_date--------------------
        createconfs $do_date
        cd /opt/module/mock
        java -jar gmall2020-mock-db-2021-01-22.jar
        mysql_sqoop_hdfs_everyday.sh all $do_date
    done

    echo "All done!"
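The loop in makeDBData.sh walks forward one day at a time using GNU date's relative syntax, `date -d "<start> <i> day"`. Pulled out on its own, the date arithmetic can be sketched as below; `list_dates` is a hypothetical helper name, and GNU `date` is assumed:

```shell
#!/bin/sh
# list_dates START DAYS: print DAYS consecutive dates (YYYY-MM-DD),
# beginning at START. Assumes GNU date.
list_dates() {
    i=0
    while [ "$i" -lt "$2" ]; do
        date -d "$1 $i day" +%F
        i=$((i + 1))
    done
}

# The two days simulated in this walkthrough.
list_dates 2021-06-09 2
```

Running it with `2021-06-09 2` lists 2021-06-09 and 2021-06-10, the same dates the script loops over; month boundaries are handled by `date` itself.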

(2) In the same bin directory, create mysql_sqoop_hdfs_init.sh

vim /home/atguigu/bin/mysql_sqoop_hdfs_init.sh

    #!/bin/bash
    APP=gmall
    sqoop=/opt/module/sqoop/bin/sqoop

    # The date argument is required; the script exits if it is missing
    if [ -n "$2" ] ;then
        do_date=$2
    else
        echo "Please pass a date argument"
        exit
    fi

    # import_data <table> <query>: full import of one table, followed by LZO indexing
    import_data(){
        $sqoop import \
        --connect jdbc:mysql://hadoop102:3306/$APP \
        --username root \
        --password root123 \
        --target-dir /origin_data/$APP/db/$1/$do_date \
        --delete-target-dir \
        --query "$2 where \$CONDITIONS" \
        --num-mappers 1 \
        --fields-terminated-by '\t' \
        --compress \
        --compression-codec lzop \
        --null-string '\\N' \
        --null-non-string '\\N'

        hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /origin_data/$APP/db/$1/$do_date
    }

    import_order_info(){
        import_data order_info "select id, total_amount, order_status, user_id, payment_way, delivery_address, out_trade_no, create_time, operate_time, expire_time, tracking_no, province_id, activity_reduce_amount, coupon_reduce_amount, original_total_amount, feight_fee, feight_fee_reduce from order_info"
    }

    import_coupon_use(){
        import_data coupon_use "select id, coupon_id, user_id, order_id, coupon_status, get_time, using_time, used_time, expire_time from coupon_use"
    }

    import_order_status_log(){
        import_data order_status_log "select id, order_id, order_status, operate_time from order_status_log"
    }

    import_user_info(){
        import_data "user_info" "select id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time from user_info"
    }

    import_order_detail(){
        import_data order_detail "select id, order_id, sku_id, sku_name, order_price, sku_num, create_time, source_type, source_id, split_total_amount, split_activity_amount, split_coupon_amount from order_detail"
    }

    import_payment_info(){
        import_data "payment_info" "select id, out_trade_no, order_id, user_id, payment_type, trade_no, total_amount, subject, payment_status, create_time, callback_time from payment_info"
    }

    import_comment_info(){
        import_data comment_info "select id, user_id, sku_id, spu_id, order_id, appraise, create_time from comment_info"
    }

    import_order_refund_info(){
        import_data order_refund_info "select id, user_id, order_id, sku_id, refund_type, refund_num, refund_amount, refund_reason_type, refund_status, create_time from order_refund_info"
    }

    import_sku_info(){
        import_data sku_info "select id, spu_id, price, sku_name, sku_desc, weight, tm_id, category3_id, is_sale, create_time from sku_info"
    }

    import_base_category1(){
        import_data "base_category1" "select id, name from base_category1"
    }

    import_base_category2(){
        import_data "base_category2" "select id, name, category1_id from base_category2"
    }

    import_base_category3(){
        import_data "base_category3" "select id, name, category2_id from base_category3"
    }

    import_base_province(){
        import_data base_province "select id, name, region_id, area_code, iso_code, iso_3166_2 from base_province"
    }

    import_base_region(){
        import_data base_region "select id, region_name from base_region"
    }

    import_base_trademark(){
        import_data base_trademark "select id, tm_name from base_trademark"
    }

    import_spu_info(){
        import_data spu_info "select id, spu_name, category3_id, tm_id from spu_info"
    }

    import_favor_info(){
        import_data favor_info "select id, user_id, sku_id, spu_id, is_cancel, create_time, cancel_time from favor_info"
    }

    import_cart_info(){
        import_data cart_info "select id, user_id, sku_id, cart_price, sku_num, sku_name, create_time, operate_time, is_ordered, order_time, source_type, source_id from cart_info"
    }

    import_coupon_info(){
        import_data coupon_info "select id, coupon_name, coupon_type, condition_amount, condition_num, activity_id, benefit_amount, benefit_discount, create_time, range_type, limit_num, taken_count, start_time, end_time, operate_time, expire_time from coupon_info"
    }

    import_activity_info(){
        import_data activity_info "select id, activity_name, activity_type, start_time, end_time, create_time from activity_info"
    }

    import_activity_rule(){
        import_data activity_rule "select id, activity_id, activity_type, condition_amount, condition_num, benefit_amount, benefit_discount, benefit_level from activity_rule"
    }

    import_base_dic(){
        import_data base_dic "select dic_code, dic_name, parent_code, create_time, operate_time from base_dic"
    }

    import_order_detail_activity(){
        import_data order_detail_activity "select id, order_id, order_detail_id, activity_id, activity_rule_id, sku_id, create_time from order_detail_activity"
    }

    import_order_detail_coupon(){
        import_data order_detail_coupon "select id, order_id, order_detail_id, coupon_id, coupon_use_id, sku_id, create_time from order_detail_coupon"
    }

    import_refund_payment(){
        import_data refund_payment "select id, out_trade_no, order_id, sku_id, payment_type, trade_no, total_amount, subject, refund_status, create_time, callback_time from refund_payment"
    }

    import_sku_attr_value(){
        import_data sku_attr_value "select id, attr_id, value_id, sku_id, attr_name, value_name from sku_attr_value"
    }

    import_sku_sale_attr_value(){
        import_data sku_sale_attr_value "select id, sku_id, spu_id, sale_attr_value_id, sale_attr_id, sale_attr_name, sale_attr_value_name from sku_sale_attr_value"
    }

    # Dispatch on the table name, or import every table with "all"
    case $1 in
    "order_info") import_order_info ;;
    "base_category1") import_base_category1 ;;
    "base_category2") import_base_category2 ;;
    "base_category3") import_base_category3 ;;
    "order_detail") import_order_detail ;;
    "sku_info") import_sku_info ;;
    "user_info") import_user_info ;;
    "payment_info") import_payment_info ;;
    "base_province") import_base_province ;;
    "base_region") import_base_region ;;
    "base_trademark") import_base_trademark ;;
    "activity_info") import_activity_info ;;
    "cart_info") import_cart_info ;;
    "comment_info") import_comment_info ;;
    "coupon_info") import_coupon_info ;;
    "coupon_use") import_coupon_use ;;
    "favor_info") import_favor_info ;;
    "order_refund_info") import_order_refund_info ;;
    "order_status_log") import_order_status_log ;;
    "spu_info") import_spu_info ;;
    "activity_rule") import_activity_rule ;;
    "base_dic") import_base_dic ;;
    "order_detail_activity") import_order_detail_activity ;;
    "order_detail_coupon") import_order_detail_coupon ;;
    "refund_payment") import_refund_payment ;;
    "sku_attr_value") import_sku_attr_value ;;
    "sku_sale_attr_value") import_sku_sale_attr_value ;;
    "all")
        import_base_category1
        import_base_category2
        import_base_category3
        import_order_info
        import_order_detail
        import_sku_info
        import_user_info
        import_payment_info
        import_base_region
        import_base_province
        import_base_trademark
        import_activity_info
        import_cart_info
        import_comment_info
        import_coupon_use
        import_coupon_info
        import_favor_info
        import_order_refund_info
        import_order_status_log
        import_spu_info
        import_activity_rule
        import_base_dic
        import_order_detail_activity
        import_order_detail_coupon
        import_refund_payment
        import_sku_attr_value
        import_sku_sale_attr_value
    ;;
    esac

(3) In the same bin directory, create mysql_sqoop_hdfs_everyday.sh

vim /home/atguigu/bin/mysql_sqoop_hdfs_everyday.sh

    #!/bin/bash
    APP=gmall
    sqoop=/opt/module/sqoop/bin/sqoop

    # Use the date passed as the second argument; otherwise default to yesterday
    if [ -n "$2" ] ;then
        do_date=$2
    else
        do_date=`date -d '-1 day' +%F`
    fi

    # import_data <table> <query with a where clause>: import one table, followed by LZO indexing
    import_data(){
        $sqoop import \
        --connect jdbc:mysql://hadoop102:3306/$APP \
        --username root \
        --password root123 \
        --target-dir /origin_data/$APP/db/$1/$do_date \
        --delete-target-dir \
        --query "$2 and \$CONDITIONS" \
        --num-mappers 1 \
        --fields-terminated-by '\t' \
        --compress \
        --compression-codec lzop \
        --null-string '\\N' \
        --null-non-string '\\N'

        hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /origin_data/$APP/db/$1/$do_date
    }

    import_order_info(){
        import_data order_info "select id, total_amount, order_status, user_id, payment_way, delivery_address, out_trade_no, create_time, operate_time, expire_time, tracking_no, province_id, activity_reduce_amount, coupon_reduce_amount, original_total_amount, feight_fee, feight_fee_reduce from order_info where (date_format(create_time,'%Y-%m-%d')='$do_date' or date_format(operate_time,'%Y-%m-%d')='$do_date')"
    }

    import_coupon_use(){
        import_data coupon_use "select id, coupon_id, user_id, order_id, coupon_status, get_time, using_time, used_time, expire_time from coupon_use where (date_format(get_time,'%Y-%m-%d')='$do_date' or date_format(using_time,'%Y-%m-%d')='$do_date' or date_format(used_time,'%Y-%m-%d')='$do_date' or date_format(expire_time,'%Y-%m-%d')='$do_date')"
    }

    import_order_status_log(){
        import_data order_status_log "select id, order_id, order_status, operate_time from order_status_log where date_format(operate_time,'%Y-%m-%d')='$do_date'"
    }

    import_user_info(){
        import_data "user_info" "select id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time from user_info where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date' or DATE_FORMAT(operate_time,'%Y-%m-%d')='$do_date')"
    }

    import_order_detail(){
        import_data order_detail "select id, order_id, sku_id, sku_name, order_price, sku_num, create_time, source_type, source_id, split_total_amount, split_activity_amount, split_coupon_amount from order_detail where DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'"
    }

    import_payment_info(){
        import_data "payment_info" "select id, out_trade_no, order_id, user_id, payment_type, trade_no, total_amount, subject, payment_status, create_time, callback_time from payment_info where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date' or DATE_FORMAT(callback_time,'%Y-%m-%d')='$do_date')"
    }

    import_comment_info(){
        import_data comment_info "select id, user_id, sku_id, spu_id, order_id, appraise, create_time from comment_info where date_format(create_time,'%Y-%m-%d')='$do_date'"
    }

    import_order_refund_info(){
        import_data order_refund_info "select id, user_id, order_id, sku_id, refund_type, refund_num, refund_amount, refund_reason_type, refund_status, create_time from order_refund_info where date_format(create_time,'%Y-%m-%d')='$do_date'"
    }

    import_sku_info(){
        import_data sku_info "select id, spu_id, price, sku_name, sku_desc, weight, tm_id, category3_id, is_sale, create_time from sku_info where 1=1"
    }

    import_base_category1(){
        import_data "base_category1" "select id, name from base_category1 where 1=1"
    }

    import_base_category2(){
        import_data "base_category2" "select id, name, category1_id from base_category2 where 1=1"
    }

    import_base_category3(){
        import_data "base_category3" "select id, name, category2_id from base_category3 where 1=1"
    }

    import_base_province(){
        import_data base_province "select id, name, region_id, area_code, iso_code, iso_3166_2 from base_province where 1=1"
    }

    import_base_region(){
        import_data base_region "select id, region_name from base_region where 1=1"
    }

    import_base_trademark(){
        import_data base_trademark "select id, tm_name from base_trademark where 1=1"
    }

    import_spu_info(){
        import_data spu_info "select id, spu_name, category3_id, tm_id from spu_info where 1=1"
    }

    import_favor_info(){
        import_data favor_info "select id, user_id, sku_id, spu_id, is_cancel, create_time, cancel_time from favor_info where 1=1"
    }

    import_cart_info(){
        import_data cart_info "select id, user_id, sku_id, cart_price, sku_num, sku_name, create_time, operate_time, is_ordered, order_time, source_type, source_id from cart_info where 1=1"
    }

    import_coupon_info(){
        import_data coupon_info "select id, coupon_name, coupon_type, condition_amount, condition_num, activity_id, benefit_amount, benefit_discount, create_time, range_type, limit_num, taken_count, start_time, end_time, operate_time, expire_time from coupon_info where 1=1"
    }

    import_activity_info(){
        import_data activity_info "select id, activity_name, activity_type, start_time, end_time, create_time from activity_info where 1=1"
    }

    import_activity_rule(){
        import_data activity_rule "select id, activity_id, activity_type, condition_amount, condition_num, benefit_amount, benefit_discount, benefit_level from activity_rule where 1=1"
    }

    import_base_dic(){
        import_data base_dic "select dic_code, dic_name, parent_code, create_time, operate_time from base_dic where 1=1"
    }

    import_order_detail_activity(){
        import_data order_detail_activity "select id, order_id, order_detail_id, activity_id, activity_rule_id, sku_id, create_time from order_detail_activity where date_format(create_time,'%Y-%m-%d')='$do_date'"
    }

    import_order_detail_coupon(){
        import_data order_detail_coupon "select id, order_id, order_detail_id, coupon_id, coupon_use_id, sku_id, create_time from order_detail_coupon where date_format(create_time,'%Y-%m-%d')='$do_date'"
    }

    import_refund_payment(){
        import_data refund_payment "select id, out_trade_no, order_id, sku_id, payment_type, trade_no, total_amount, subject, refund_status, create_time, callback_time from refund_payment where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date' or DATE_FORMAT(callback_time,'%Y-%m-%d')='$do_date')"
    }

    import_sku_attr_value(){
        import_data sku_attr_value "select id, attr_id, value_id, sku_id, attr_name, value_name from sku_attr_value where 1=1"
    }

    import_sku_sale_attr_value(){
        import_data sku_sale_attr_value "select id, sku_id, spu_id, sale_attr_value_id, sale_attr_id, sale_attr_name, sale_attr_value_name from sku_sale_attr_value where 1=1"
    }

    # Dispatch on the table name, or run the daily set of imports with "all"
    case $1 in
    "order_info") import_order_info ;;
    "base_category1") import_base_category1 ;;
    "base_category2") import_base_category2 ;;
    "base_category3") import_base_category3 ;;
    "order_detail") import_order_detail ;;
    "sku_info") import_sku_info ;;
    "user_info") import_user_info ;;
    "payment_info") import_payment_info ;;
    "base_province") import_base_province ;;
    "activity_info") import_activity_info ;;
    "cart_info") import_cart_info ;;
    "comment_info") import_comment_info ;;
    "coupon_info") import_coupon_info ;;
    "coupon_use") import_coupon_use ;;
    "favor_info") import_favor_info ;;
    "order_refund_info") import_order_refund_info ;;
    "order_status_log") import_order_status_log ;;
    "spu_info") import_spu_info ;;
    "activity_rule") import_activity_rule ;;
    "base_dic") import_base_dic ;;
    "order_detail_activity") import_order_detail_activity ;;
    "order_detail_coupon") import_order_detail_coupon ;;
    "refund_payment") import_refund_payment ;;
    "sku_attr_value") import_sku_attr_value ;;
    "sku_sale_attr_value") import_sku_sale_attr_value ;;
    "all")
        import_base_category1
        import_base_category2
        import_base_category3
        import_order_info
        import_order_detail
        import_sku_info
        import_user_info
        import_payment_info
        import_base_trademark
        import_activity_info
        import_cart_info
        import_comment_info
        import_coupon_use
        import_coupon_info
        import_favor_info
        import_order_refund_info
        import_order_status_log
        import_spu_info
        import_activity_rule
        import_base_dic
        import_order_detail_activity
        import_order_detail_coupon
        import_refund_payment
        import_sku_attr_value
        import_sku_sale_attr_value
    ;;
    esac
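The daily script differs from the first-day script mainly in its where clauses: tables that change over time are filtered to rows whose timestamp columns fall on `$do_date`, while tables imported in full use `where 1=1` so that `and $CONDITIONS` can still be appended. The filter strings all follow one pattern, which the sketch below builds from a date and a list of column names. `daily_filter` is a hypothetical helper for illustration and is not part of the script:

```shell
#!/bin/sh
# daily_filter DATE COL...: build the date filter used in the daily queries,
# e.g. (date_format(c1,'%Y-%m-%d')='DATE' or date_format(c2,'%Y-%m-%d')='DATE').
daily_filter() {
    day=$1
    shift
    out=
    for col in "$@"; do
        cond="date_format($col,'%Y-%m-%d')='$day'"
        if [ -z "$out" ]; then
            out=$cond
        else
            out="$out or $cond"
        fi
    done
    printf '(%s)\n' "$out"
}

# The filter for a table tracked by create_time and operate_time.
daily_filter 2021-06-09 create_time operate_time
```

With `create_time` and `operate_time` this reproduces the condition used for order_info on 2021-06-09.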

(4) Make the scripts executable

chmod +x makeDBData.sh mysql_sqoop_hdfs_init.sh mysql_sqoop_hdfs_everyday.sh

8) Run the scripts (simulating the existing historical data)

(1) 2021-06-08: first-day data (uploaded to HDFS)

mysql_sqoop_hdfs_init.sh all 2021-06-08
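For each table, this run passes Sqoop a target directory built from the table name and the date, plus a free-form query ending in `where $CONDITIONS`. Sqoop requires the `$CONDITIONS` token in a `--query` import, which is why the script writes it as `\$CONDITIONS`, so the shell leaves it alone. The dry-run sketch below only prints those two values and does not call Sqoop; `show_import` is a hypothetical helper, with the `/origin_data/gmall/db` prefix taken from the script:

```shell
#!/bin/sh
# show_import TABLE SQL DATE: print the target dir and query that the
# first-day import would hand to sqoop for one table (dry run only).
show_import() {
    printf 'target-dir: /origin_data/gmall/db/%s/%s\n' "$1" "$3"
    # \$ keeps the dollar sign literal, so sqoop (not the shell) sees $CONDITIONS.
    printf 'query: %s\n' "$2 where \$CONDITIONS"
}

show_import base_region "select id, region_name from base_region" 2021-06-08
```

The same check works for any table in the script by passing its name and select statement.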

(2) 2021-06-09 through 2021-06-10: daily sync

makeDBData.sh 2021-06-09 2