Common Anti-Scraping Measures: IP-Based Blocking

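When running a crawler you will often see the following: the crawler starts out fine and scrapes data normally, but within the time it takes to drink a cup of tea errors begin to appear, such as a 403 Forbidden response. If you open the page in a browser at that point, you may find the data missing because the page no longer displays it, see a message saying that your IP is making requests too frequently, or get a CAPTCHA to solve. After a while, access may go back to normal.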

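These symptoms appear because the site has anti-scraping measures in place. For example, the server may count the requests each IP makes per unit of time; once the count exceeds a set threshold, it refuses service outright and returns an error. This is known as an IP ban, and the crawler can no longer fetch data through normal means.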
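The server-side check described above can be sketched as a sliding-window counter per IP. This is only an illustration of the idea, not how any particular site implements it: the class name `IpRateLimiter`, the threshold, and the window length are all assumptions.

```python
import time
from collections import defaultdict, deque


class IpRateLimiter:
    """Reject an IP once it exceeds `max_requests` within `window_seconds`."""

    def __init__(self, max_requests=5, window_seconds=1.0, clock=time.monotonic):
        self.max_requests = max_requests      # assumed threshold
        self.window_seconds = window_seconds  # assumed "unit of time"
        self.clock = clock                    # injectable so tests need not sleep
        self.hits = defaultdict(deque)        # ip -> timestamps of recent requests

    def status_for(self, ip):
        """Return the HTTP status a server might send: 200 or 403."""
        now = self.clock()
        recent = self.hits[ip]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return 403  # over the threshold: the IP is refused for now
        recent.append(now)
        return 200
```

Because the counter is keyed by IP, requests arriving from a different address start with a clean window, which is why routing traffic through proxies gets around this kind of ban.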

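At this point we need a proxy to disguise our own IP: the proxy's IP sends the request for the data we want and passes the response back to the crawler. What follows is a brief introduction to how proxy IPs work and how to use them.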

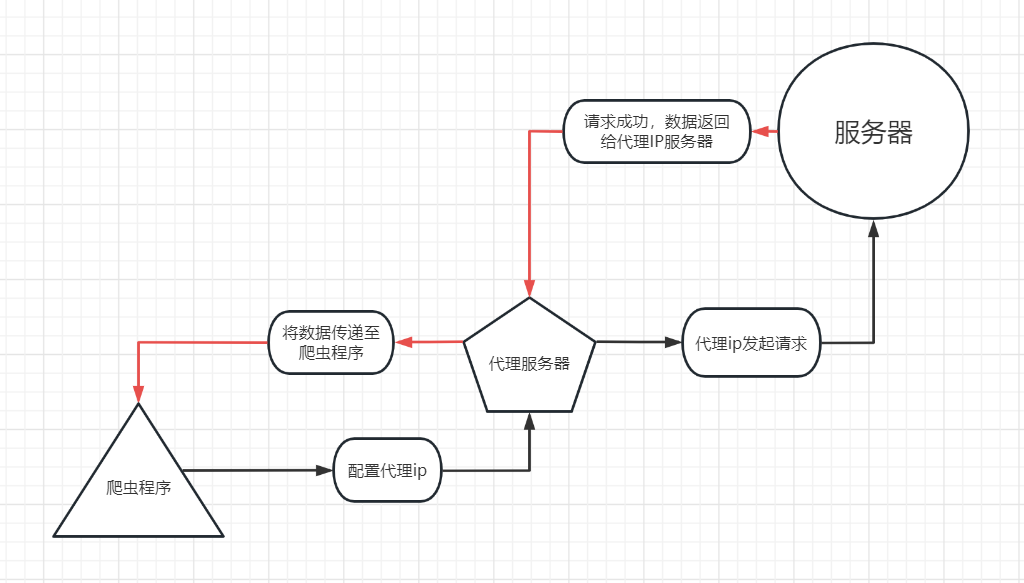

I. How an IP proxy works

Logic diagram

II. Setting up a proxy

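Before using a proxy IP, some preparation is needed: find a proxy IP provider. There are many proxy service sites online, such as 快代理 (Kuaidaili) and 流冠代理. Some of them offer free proxies, but the quality of those is often disappointing. You could also scrape the major free-proxy sites to build an IP pool, but such a pool tends not to work well in practice, so a paid proxy is the more reliable option.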

A proxy is simply an IP address combined with a port, in the format ip:port.
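As a small illustration of the ip:port format, the helper below turns such a string into the mapping that requests accepts for its proxies parameter. The function name `build_proxies` is hypothetical and the validation is deliberately minimal.

```python
def build_proxies(proxy, scheme="http"):
    """Turn 'ip:port' into a proxies mapping for requests."""
    host, sep, port = proxy.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected 'ip:port', got {proxy!r}")
    proxy_url = f"{scheme}://{host}:{port}"
    # The dictionary key is the scheme of the *target* URL; both kinds of
    # target are routed through the same proxy here.
    return {"http": proxy_url, "https": proxy_url}
```

For example, `build_proxies("183.162.226.249:25020")` yields a dictionary whose "http" and "https" entries both point at `http://183.162.226.249:25020`.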

- Proxy settings in requests

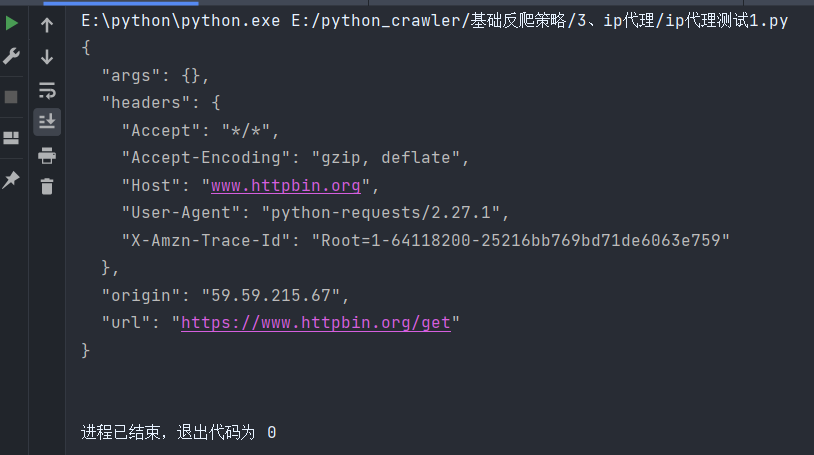

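requests is the library most commonly used in crawlers, and setting a proxy with it is very simple: just pass the proxies parameter. This section uses 流冠代理 and generates the IP through its API link, as shown in the figure: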

The code is as follows:

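    import requests

    # The proxy is an ip:port pair generated through the provider's API link
    proxy = '183.162.226.249:25020'
    proxies = {
        'http': 'http://' + proxy,
        'https': 'http://' + proxy,
    }
    try:
        response = requests.get('https://www.httpbin.org/get', proxies=proxies, timeout=5)
        print(response.text)
    # RequestException also covers read timeouts, which ConnectionError alone does not
    except requests.exceptions.RequestException as e:
        print('Error', e.args)

The result is as follows: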

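This simple example shows that the proxy IP has been configured successfully. However, each IP generated this way is only valid for three minutes; by the time you have copied it over and adjusted the program, most of that time is gone. So the next step is to build a basic proxy IP pool.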
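The expiry problem just described can be sketched as a pool that refetches its batch once it is older than the proxy lifetime. This is a minimal sketch under stated assumptions: `ExpiringProxyPool` and its `fetch` callable are hypothetical names, and `fetch` stands in for whatever call returns a list of ip:port strings from the provider's API.

```python
import time


class ExpiringProxyPool:
    """Hand out proxies and refetch them once the batch is older than `ttl`."""

    def __init__(self, fetch, ttl=180.0, clock=time.monotonic):
        self.fetch = fetch      # callable returning a list of 'ip:port' strings
        self.ttl = ttl          # 180 s, matching the three-minute lifetime
        self.clock = clock      # injectable so tests need not wait
        self.proxies = []
        self.fetched_at = None
        self.cursor = 0

    def _expired(self):
        return self.fetched_at is None or self.clock() - self.fetched_at >= self.ttl

    def get(self):
        """Return the next proxy, refreshing the batch first if needed."""
        if not self.proxies or self._expired():
            self.proxies = list(self.fetch())
            self.fetched_at = self.clock()
            self.cursor = 0
        if not self.proxies:
            raise RuntimeError("the proxy API returned no proxies")
        proxy = self.proxies[self.cursor % len(self.proxies)]
        self.cursor += 1
        return proxy

    def discard(self, proxy):
        """Drop a proxy that failed so it is not handed out again."""
        if proxy in self.proxies:
            self.proxies.remove(proxy)
```

Passing the fetch function in keeps the pool independent of any one provider and makes the refresh logic easy to exercise without network access.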

III. A paid IP proxy pool

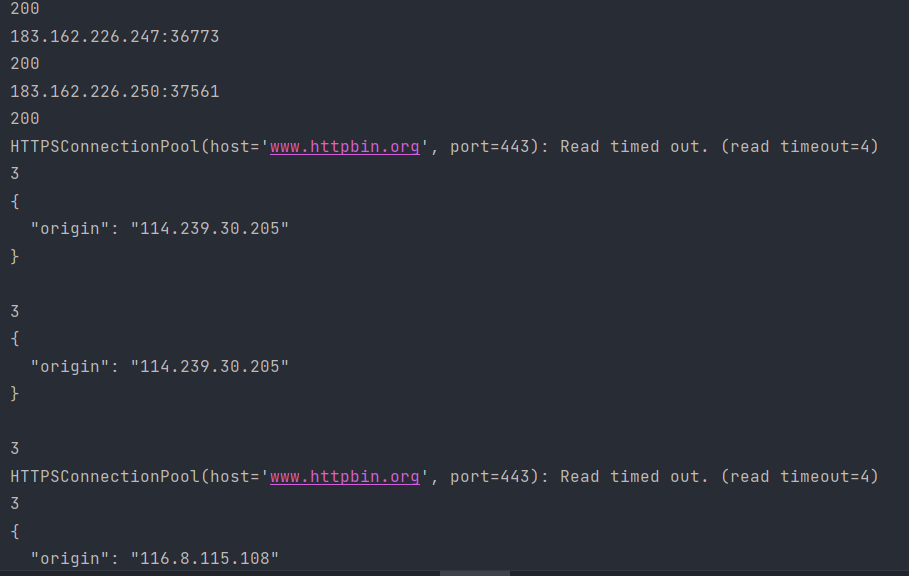

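1. A simple multithreaded proxy pool example

- A proxy pool whose entries are time-limited keeps a single banned proxy from causing data loss or stopping the crawler. The best way to build one is to combine the provider's IP API, some basic code logic, and a database used to filter the proxies, as in 崔神's Python3 crawler tutorial on maintaining an efficient proxy pool (Python3爬虫教程-高效代理池的维护).

- I built a simple paid proxy pool; its efficiency still needs work in places. The code is as follows: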

    import json
    import threading
    import time

    import requests
    from fake_useragent import UserAgent

    # Proxies that passed the test, shared between the two threads
    use_ip_list = []


    class IP_pool(object):
        def __init__(self):
            # Target site
            self.url = "https://httpbin.org/get"
            # API link of the paid proxy; each call returns five IPs valid for three minutes
            self.get_url = "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2HYJUsHY9eUYoxg%2BMnszqBry50mHL0pwmTLsDGPSw"
            # Proxies returned by the latest API call
            self.ip_list = []
            # Site used to test whether a proxy works
            self.test_url = "https://www.baidu.com"
            ua = UserAgent()
            self.headers = {"User-Agent": ua.random}
            # The class itself is the thread target, so this loop runs in its own
            # thread and refills the pool whenever the consumer has emptied it.
            while True:
                if len(use_ip_list) == 0:
                    self.get_ip()
                    # Brief pause so a failing API or a batch of dead proxies
                    # is not retried in a tight loop
                    time.sleep(2)
                else:
                    time.sleep(10)

        # Fetch proxies from the API
        def get_ip(self):
            try:
                get_data = requests.get(self.get_url, timeout=10).text
                self.ip_list.clear()
                # The API returns JSON whose "data" list holds five ip/port entries
                for data_ip in json.loads(get_data)["data"]:
                    self.ip_list.append(f'{data_ip["ip"]}:{data_ip["port"]}')
                self.test_ip()
            except Exception as e:
                print(e)
                print("Failed to fetch IPs!")

        # Test each proxy and keep the ones that work
        def test_ip(self):
            for i in self.ip_list:
                proxies = {
                    "http": "http://" + i,
                    "https": "http://" + i,
                }
                try:
                    response = requests.get(self.test_url, headers=self.headers, proxies=proxies, timeout=2)
                except requests.exceptions.RequestException:
                    # Timed out or unreachable: skip it and test the next proxy
                    continue
                if response.status_code == 200:
                    use_ip_list.append(i)
                    print(i, response.status_code)

    class parse_data():
        def __init__(self):
            # Give the pool thread a head start
            time.sleep(10)
            self.use_data()

        # Block until the pool thread has put at least one working proxy in the list
        def listen(self):
            while len(use_ip_list) == 0:
                print("wait")
                time.sleep(2)

        def use_data(self):
            headers = {"User-Agent": UserAgent().random}
            while True:
                self.listen()
                # Work on a snapshot, because the pool thread may still be appending
                for proxie in list(use_ip_list):
                    proxies = {
                        "http": "http://" + proxie,
                        "https": "http://" + proxie,
                    }
                    # Use each proxy for up to three requests
                    for _ in range(3):
                        time.sleep(1)
                        try:
                            response = requests.get('https://www.httpbin.org/ip', headers=headers, proxies=proxies, timeout=4)
                            print(response.text)
                        except Exception as e:
                            # Timed out or unusable: move on to the next proxy
                            print(e)
                            break
                # Every proxy has been used: empty the list so the pool thread refills it
                use_ip_list.clear()
                time.sleep(10)


    if __name__ == '__main__':
        t1 = threading.Thread(target=IP_pool)
        t2 = threading.Thread(target=parse_data)
        t1.start()
        t2.start()

- Proxies that time out or cannot be used are skipped, and the next proxy IP is used instead.
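The skip-and-move-on behaviour can also be isolated into a small helper. The sketch below is an assumption-laden illustration rather than part of the pool above: `fetch_with_rotation` and the `send` hook are hypothetical names, and `send_with_requests` shows one way the hook might wrap requests.

```python
def fetch_with_rotation(url, proxy_list, send):
    """Try each proxy in turn; return (proxy, result) for the first that works.

    `send(url, proxy)` performs the request and raises on failure.
    """
    last_error = None
    for proxy in proxy_list:
        try:
            return proxy, send(url, proxy)
        except Exception as exc:  # timeout, refused connection, bad status...
            last_error = exc      # remember why, then try the next proxy
    raise RuntimeError(f"all {len(proxy_list)} proxies failed") from last_error


def send_with_requests(url, proxy):
    """One possible `send` hook: a GET through the given 'ip:port' proxy."""
    import requests  # imported lazily so the helper above has no dependencies

    proxies = {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    response = requests.get(url, proxies=proxies, timeout=4)
    response.raise_for_status()  # treat 4xx/5xx (such as 403) as a failure
    return response.text
```

A call such as `fetch_with_rotation("https://www.httpbin.org/ip", use_ip_list, send_with_requests)` would then return the first proxy that answered together with the response body.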

This gives a simple implementation of a paid IP proxy pool. Next, we build an IP proxy pool together with Scrapy.

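2. A proxy pool example in the Scrapy framework

- Configure the IP proxy pool in a Scrapy middleware: each IP is replaced after 4 uses, and if an API link exceeds the maximum number of retries, switch to another API link.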

    import json
    import random

    import requests
    from scrapy import signals


    class IpTextSpiderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.
            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.
            # Must return an iterable of Request, or item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.
            # Should return either None or an iterable of Request or item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn’t have a response associated.
            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)

    class IpTextDownloaderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        def __init__(self):
            self.user_agent_list = [
                "Mozilla/5.0 (Linux; U; Android 2.3.6; en-us; Nexus S Build/GRK39F) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Avant Browser/1.2.789rel1 (http://www.avantbrowser.com)",
                "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/532.5 (KHTML, like Gecko) Chrome/4.0.249.0 Safari/532.5",
                "Mozilla/5.0 (Windows; U; Windows NT 5.2; en-US) AppleWebKit/532.9 (KHTML, like Gecko) Chrome/5.0.310.0 Safari/532.9",
                "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US) AppleWebKit/534.7 (KHTML, like Gecko) Chrome/7.0.514.0 Safari/534.7",
                "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-US) AppleWebKit/534.14 (KHTML, like Gecko) Chrome/9.0.601.0 Safari/534.14",
                "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.14 (KHTML, like Gecko) Chrome/10.0.601.0 Safari/534.14",
                "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.20 (KHTML, like Gecko) Chrome/11.0.672.2 Safari/534.20",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.27 (KHTML, like Gecko) Chrome/12.0.712.0 Safari/534.27",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.24 Safari/535.1",
                "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/535.2 (KHTML, like Gecko) Chrome/15.0.874.120 Safari/535.2",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.7 (KHTML, like Gecko) Chrome/16.0.912.36 Safari/535.7",
                "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre",
                "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.0.10) Gecko/2009042316 Firefox/3.0.10",
                "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-GB; rv:1.9.0.11) Gecko/2009060215 Firefox/3.0.11 (.NET CLR 3.5.30729)",
                "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6 GTB5",
                "Mozilla/5.0 (Windows; U; Windows NT 5.1; tr; rv:1.9.2.8) Gecko/20100722 Firefox/3.6.8 ( .NET CLR 3.5.30729; .NET4.0E)",
                "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
                "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
                "Mozilla/5.0 (Windows NT 5.1; rv:5.0) Gecko/20100101 Firefox/5.0",
                "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0a2) Gecko/20110622 Firefox/6.0a2",
                "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:7.0.1) Gecko/20100101 Firefox/7.0.1",
                "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:2.0b4pre) Gecko/20100815 Minefield/4.0b4pre",
                "Mozilla/4.0 (compatible; MSIE 5.5; Windows NT 5.0 )",
                "Mozilla/4.0 (compatible; MSIE 5.5; Windows 98; Win 9x 4.90)",
                "Mozilla/5.0 (Windows; U; Windows XP) Gecko MultiZilla/1.6.1.0a",
                "Mozilla/2.02E (Win95; U)",
                "Mozilla/3.01Gold (Win95; I)",
                "Mozilla/4.8 [en] (Windows NT 5.1; U)",
                "Mozilla/5.0 (Windows; U; Win98; en-US; rv:1.4) Gecko Netscape/7.1 (ax)",
                "HTC_Dream Mozilla/5.0 (Linux; U; Android 1.5; en-ca; Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (hp-tablet; Linux; hpwOS/3.0.2; U; de-DE) AppleWebKit/534.6 (KHTML, like Gecko) wOSBrowser/234.40.1 Safari/534.6 TouchPad/1.0",
                "Mozilla/5.0 (Linux; U; Android 1.5; en-us; sdk Build/CUPCAKE) AppleWebkit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 2.1; en-us; Nexus One Build/ERD62) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Nexus One Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 1.5; en-us; htc_bahamas Build/CRB17) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 2.1-update1; de-de; HTC Desire 1.19.161.5 Build/ERE27) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Sprint APA9292KT Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 1.5; de-ch; HTC Hero Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; ADR6300 Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 2.1; en-us; HTC Legend Build/cupcake) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 1.5; de-de; HTC Magic Build/PLAT-RC33) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1 FirePHP/0.3",
                "Mozilla/5.0 (Linux; U; Android 1.6; en-us; HTC_TATTOO_A3288 Build/DRC79) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 1.0; en-us; dream) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
                "Mozilla/5.0 (Linux; U; Android 1.5; en-us; T-Mobile G1 Build/CRB43) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari 525.20.1",
                "Mozilla/5.0 (Linux; U; Android 1.5; en-gb; T-Mobile_G2_Touch Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Droid Build/ESD20) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Droid Build/FRG22D) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Milestone Build/ SHOLS_U2_01.03.1) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.0.1; de-de; Milestone Build/SHOLS_U2_01.14.0) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 3.0; en-us; Xoom Build/HRI39) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
                "Mozilla/5.0 (Linux; U; Android 0.5; en-us) AppleWebKit/522 (KHTML, like Gecko) Safari/419.3",
                "Mozilla/5.0 (Linux; U; Android 1.1; en-gb; dream) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
                "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Droid Build/ESD20) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.1; en-us; Nexus One Build/ERD62) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Sprint APA9292KT Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-us; ADR6300 Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 2.2; en-ca; GT-P1000M Build/FROYO) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
                "Mozilla/5.0 (Linux; U; Android 3.0.1; fr-fr; A500 Build/HRI66) AppleWebKit/534.13 (KHTML, like Gecko) Version/4.0 Safari/534.13",
                "Mozilla/5.0 (Linux; U; Android 3.0; en-us; Xoom Build/HRI39) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
                "Mozilla/5.0 (Linux; U; Android 1.6; es-es; SonyEricssonX10i Build/R1FA016) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
                "Mozilla/5.0 (Linux; U; Android 1.6; en-us; SonyEricssonX10i Build/R1AA056) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
            ]
            self.get_url = "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7e14sbfWQuzlOvHP1IbxTPA2LMO00qT4O%2F0UCy4GxAzlw0QepTrRUrV%2FcsKzI2Sft4udNK9vBeAMBuiCewJ1molaFh8MJxFydXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D"
            self.ip_list = []  # Stores the proxy IPs
            self.result = 0  # Which IP in the batch is currently in use
            self.number = 0  # How many times the current IP has been used
            self.test_url = "https://www.baidu.com"
            # The header name must be "User-Agent" for the server to recognise it
            self.test_headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36'}
            # Fallback API links, used in order when the current one fails
            self.api_list = [
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7eOWHxckogBGUeCkpAfW2MwC6hSIUqvmJEuChn06y97xvK6RHiYy1dSqDX%2B368VRXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7e14sbfWQuzlOvHP1IHWQ4A%2BVINCFoKbhydhvTlIRNXszr%2FHaGHGv939i1Nxu8r0dtugDHco%2BmanfXPh0hXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7urq2dGXnKdp7EUQ4X9QpspzfvwQvOUpSUJb2phf%2B8byojswKKlj%2BTN0qRfulH7lBXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLiDdgWb%2BiP06kNQT1JBWiXSnS2Qt7WNIYYxM5GZIqxvz0lXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7e14sbfWQu0qT4NvKCov%2B%2FIpmBc0mnbaMh3yTQ0le4s8ucZtiCLiOpFnV%2F02ZNRefDtCwn5VVr8I%2F1tXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
                "https://api.hailiangip.com:8522/api/getIpEncrypt?dataType=0&encryptParam=i6OcePKr4Cq0wPH1UJ%2FCOyBYXf0wSdR0KIhVhoMMPHvy912xFHA3Hogn7b2rQpv2lrmj76kLuq7A2LMO0jJ8Cc1cDves0KxrtmVpdlMty0gWL55wWjkCGznQdo0KP0RoNG5vrTavLIdXwMMY2Pp7wRNtgRIJmPbHvs3ERyFHZ9FAgNS8WBDIMt0Jv%2FQlqwlcd4gkrYI6AFg%3D",
            ]
            self.api_number = 0

        def get_ip(self):
            try:
                temp_data = requests.get(self.get_url, timeout=10).text
                self.ip_list.clear()
                for data_ip in json.loads(temp_data)["data"]:
                    print(data_ip)
                    self.ip_list.append({
                        "ip": data_ip["ip"],
                        "port": data_ip["port"],
                    })
            except Exception:
                # The current API link failed: switch to the next one, if any is left
                if self.api_number < len(self.api_list):
                    self.get_url = self.api_list[self.api_number]
                    self.api_number += 1
                if self.api_number == len(self.api_list):
                    for _ in range(5):
                        print("Warning: this is already the last API link, remember to replace the API links!")
            # print(self.ip_list)

        def change_ip_data(self, request):
            scheme = request.url.split(":")[0]
            if scheme in ("http", "https"):
                current = self.ip_list[self.result - 1]
                # The proxy URL uses the same scheme as the request itself
                request.meta["proxy"] = f'{scheme}://{current["ip"]}:{current["port"]}'

        def test_ip(self):
            current = self.ip_list[self.result - 1]
            proxy_url = f'http://{current["ip"]}:{current["port"]}'
            # proxies must be a dict keyed by the scheme of the target URL, not a set
            requests.get(self.test_url, headers=self.test_headers,
                         proxies={"http": proxy_url, "https": proxy_url}, timeout=5)

        def ip_used(self, request):
            try:
                self.change_ip_data(request)
                self.test_ip()
            except Exception:
                if self.result == 0 or self.result == 5:
                    self.get_ip()
                    self.result = 1
                else:
                    self.result += 1
                self.ip_used(request)

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            request.headers["User-Agent"] = random.choice(self.user_agent_list)
            # Fetch a fresh batch at startup or once the fifth IP has been reached
            if self.result == 0 or self.result == 5:
                self.get_ip()
                self.result = 1
            if self.number == 3:
                # The current IP has reached its usage limit: move on to the next one
                self.result += 1
                self.number = 0
                self.change_ip_data(request)
            else:
                self.change_ip_data(request)
                self.number += 1
            # self.ip_used(request)

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Must either;
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.
            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)

That concludes building the IP proxy pool. When dealing with IP-based anti-scraping, take care not to waste proxy IPs and use the proxy pool sensibly.
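For Scrapy to run a downloader middleware such as IpTextDownloaderMiddleware, it has to be enabled in the project settings. The fragment below is a sketch: the package name `ip_text` is an assumption inferred from the class name, so substitute your own project's module path.

```python
# settings.py (fragment)
# "ip_text" is an assumed project package name; replace it with your own.
DOWNLOADER_MIDDLEWARES = {
    # 543 is below 750, the priority of Scrapy's built-in HttpProxyMiddleware,
    # so this middleware's process_request runs first.
    "ip_text.middlewares.IpTextDownloaderMiddleware": 543,
}

# Optional: let Scrapy retry requests that fail, for instance through a dead proxy.
RETRY_ENABLED = True
RETRY_TIMES = 3
```

Downloader middlewares with lower numbers have their process_request called earlier, which is why the priority matters when several middlewares touch the same request.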

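PS: there is one more way to obtain proxy IPs, namely ADSL dial-up. With this approach you get a new dynamic IP by restarting the optical modem, which forces a redial. The mainstream approach online today is dynamic dialing on a VPS, but the hard part is setting up the environment: a VPS host, a fixed-IP management server, and the local crawler have to work together. Both the VPS host and the fixed-IP management server can be rented online. Here is a brief introduction:

- VPS host: there are many cloud hosts available online, such as 云立方, 阿斯云, 阳光NET, and 无极网络. For personal dynamic dialing a basic configuration is enough; for large-scale crawling of ten million records or more, choose a higher configuration. I personally recommend the latter two, which are more affordable (70-88 per month). You can also pick a more stable provider such as 云立方, though it costs more (110 per month).

- Fixed-IP management server: the VPS changes its IP on a schedule and then sends a request to this server, which records the VPS's IP address. Alternatively, use a DDNS service: dynamic DNS maps a domain name to the IP, so the cloud host's IP after redialing can be obtained through the domain name.

- Local crawler: sends the dial command and fetches the IP on a schedule.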
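The bookkeeping that the fixed-IP management server performs in the three-part setup above can be sketched in memory. Everything here is an assumption made for illustration: the class name `DialupIpRegistry`, the `max_age` cutoff, and the idea of withdrawing an address just before redialing. A real deployment would expose these operations over HTTP and add authentication.

```python
import time


class DialupIpRegistry:
    """Remember the most recent address each VPS reported after redialing."""

    def __init__(self, max_age=120.0, clock=time.monotonic):
        self.max_age = max_age  # assumed: ignore reports older than this many seconds
        self.clock = clock      # injectable so tests need not wait
        self.reports = {}       # vps name -> ('ip:port', time of report)

    def report(self, vps_name, ip, port):
        """Called when a VPS has redialed and announces its new address."""
        self.reports[vps_name] = (f"{ip}:{port}", self.clock())

    def withdraw(self, vps_name):
        """Called just before a VPS redials, so its old IP is not handed out."""
        self.reports.pop(vps_name, None)

    def available(self):
        """Return the proxies the crawler may use right now."""
        now = self.clock()
        return [proxy for proxy, seen in self.reports.values()
                if now - seen < self.max_age]
```

The local crawler would poll `available()` on a schedule and use whatever addresses come back.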

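If you are interested in changing IPs through automatic ADSL redialing, see this blog post: 如何使用adsl自动拨号实现换代理(保姆级教程)? - 乐之之 - 博客园 (cnblogs.com)

That post uses a Python script to implement a simple version of automatic ADSL redialing for changing IPs. This is where the introduction to IP-based anti-scraping ends for now.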

