sqoop 的安装和使用

一、安装概述

安装sqoop,为实现将mysql、hive数据库之间数据传送功能。

二、下载及安装

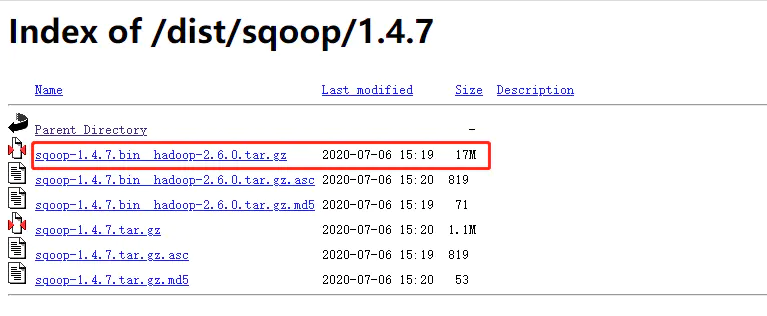

- 官网下载地址,http://archive.apache.org/dist/sqoop/,我选择的版本是1.4.7

将已下载的安装包上传到hadoop的根节点,并解压

将已下载的安装包上传到hadoop的根节点,并解压

[hadoop@hadoop01 ~]$ tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

名字太长了,看着不爽,改个名儿

[hadoop@hadoop01 ~]$ mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop-1.4.7

修改sqoop-env.sh,将#注释的环境变量解开并赋值

[hadoop@hadoop01 ~]$ cd sqoop-1.4.7/conf/ [hadoop@hadoop01 conf]$ mv sqoop-env-template.sh sqoop-env.sh [hadoop@hadoop01 conf]$ vim sqoop-env.sh

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/bigdata/hadoop-2.10.1

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/bigdata/hadoop-2.10.1

#set the path to where bin/hbase is available

export HBASE_HOME=/bigdata/hbase-2.3.7

#Set the path to where bin/hive is available

export HIVE_HOME=/bigdata/apache-hive-1.2.2-bin

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/bigdata/apache-hive-1.2.2-bin

将mysql的驱动包上传到sqoop的lib包下

将sqoop的环境变量添加到当前用户的环境变量下

[hadoop@hadoop01 conf]$ vim ~/.bashrc export PYTHON_HOME=/usr/local/python3 export PATH=$PYTHON_HOME/bin:$PYTHON_HOME/sbin:$PATH export HADOOP_HOME=/bigdata/hadoop-2.10.1 export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH export HIVE_HOME=/bigdata/apache-hive-1.2.2-bin export PATH=$HIVE_HOME/bin:$PATH export HBASE_HOME=/bigdata/hbase-2.3.7 export PATH=$HBASE_HOME/bin:$HBASE_HOME/sbin:$PATH export MAVEN__HOME=/bigdata/apache-maven-3.3.9 export PATH=$MAVEN_HOME/bin:$MAVEN_HOME/sbin:$PATH export SPARK_HOME=/bigdata/spark-3.2.0-bin-hadoop3.2 export PATH=$SPARK_HOME/bin:$SPARK_HOME/sbin:$PATH export ZOOKEEPER_HOME=/bigdata/apache-hive-1.2.2-bin export PATH=$ZOOKEEPER_HOME/bin:$PATH export SQOOP_HOME=/bigdata/sqoop export PATH=$SQOOP_HOME/bin:$PATH [hadoop@hadoop01 conf]$ source ~/.bashrc

验证sqoop安装结果

[hadoop@hadoop01 conf]$ sqoop version Warning: /home/hadoop/sqoop-1.4.7/../hbase does not exist! HBase imports will fail. Please set $HBASE_HOME to the root of your HBase installation. Warning: /home/hadoop/sqoop-1.4.7/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /home/hadoop/sqoop-1.4.7/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /home/hadoop/sqoop-1.4.7/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 21/12/06 11:50:20 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Sqoop 1.4.7 git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8 Compiled by maugli on Thu Dec 21 15:59:58 STD 2017 You have new mail in /var/spool/mail/root [hadoop@hadoop01 conf]$

三:sqoop 迁移

显示链接到的数据库

sqoop list-databases --connect jdbc:mysql://192.168。50.129:3306/ --username root -P

两种导入形式,我们选择增量,定期导入新数据

- sqoop全量导入

#!/bin/bash array=(user_profile user_basic news_user_channel news_channel user_follow user_blacklist user_search news_collection news_article_basic news_article_content news_read news_article_statistic user_material) for table_name in ${array[@]}; do sqoop import \ --connect jdbc:mysql://192.168.19.137/toutiao \ --username root \ --password password \ --table $table_name \ --m 5 \ --hive-home /root/bigdata/hive \ --hive-import \ --create-hive-table \ --hive-drop-import-delims \ --warehouse-dir /user/hive/warehouse/toutiao.db \ --hive-table toutiao.$table_name done

-

sqoop增量导入

-

append:即通过指定一个递增的列,如:--incremental append --check-column num_iid --last-value 0

-

incremental: 时间戳,比如:

--incremental lastmodified \ --check-column column \ --merge-key key \ --last-value '2012-02-01 11:0:00'

-

就是只导入check-column的列比'2012-02-01 11:0:00'更大的数据,按照key合并

-

- 导入最终结果两种形式,选择后者

- 直接sqoop导入到hive(–incremental lastmodified模式不支持导入Hive )

- sqoop导入到hdfs,然后建立hive表关联

- --target-dir /user/hive/warehouse/toutiao.db/

四:sqoop迁移案例

- 避坑指南:

- 导入数据到hive中,需要在创建HIVE表加入 row format delimited fields terminated by ','

hadoop数据在hive中查询就全是NULL,原因: sqoop 导出的 hdfs 分片数据,都是使用逗号 , 分割的,由于 hive 默认的分隔符是 /u0001(Ctrl+A),为了平滑迁移,需要在创建表格时指定数据的分割符号。

- 1、user_profile表:

- 使用lastmodified模式

- mysql数据库中更新时候update_time会修改最近时间,按照user_id合并(增量导入进去会有新的重复的数据,需要合并)

- 指定last_time时间

- 2、user_basic表:

- 使用lastmodified模式

- last_login作为更新时间

- 指定last_time时间,按照user_id合并

Mysql导入对应hive类型:

MySQL(bigint) --> Hive(bigint) MySQL(tinyint) --> Hive(tinyint) MySQL(int) --> Hive(int) MySQL(double) --> Hive(double) MySQL(bit) --> Hive(boolean) MySQL(varchar) --> Hive(string) MySQL(decimal) --> Hive(double) MySQL(date/timestamp) --> Hive(string)

在hive中建立表

create table user_profile( user_id BIGINT comment "userID", gender BOOLEAN comment "gender", birthday STRING comment "birthday", real_name STRING comment "real_name", create_time STRING comment "create_time", update_time STRING comment "update_time", register_media_time STRING comment "register_media_time", id_number STRING comment "id_number", id_card_front STRING comment "id_card_front", id_card_back STRING comment "id_card_back", id_card_handheld STRING comment "id_card_handheld", area STRING comment "area", company STRING comment "company", career STRING comment "career") COMMENT "toutiao user profile" row format delimited fields terminated by ',' LOCATION '/user/hive/warehouse/toutiao.db/user_profile'; create table user_basic( user_id BIGINT comment "user_id", mobile STRING comment "mobile", password STRING comment "password", profile_photo STRING comment "profile_photo", last_login STRING comment "last_login", is_media BOOLEAN comment "is_media", article_count BIGINT comment "article_count", following_count BIGINT comment "following_count", fans_count BIGINT comment "fans_count", like_count BIGINT comment "like_count", read_count BIGINT comment "read_count", introduction STRING comment "introduction", certificate STRING comment "certificate", is_verified BOOLEAN comment "is_verified") COMMENT "toutiao user basic" row format delimited fields terminated by ',' LOCATION '/user/hive/warehouse/toutiao.db/user_basic'; create table news_article_basic( article_id BIGINT comment "article_id", user_id BIGINT comment "user_id", channel_id BIGINT comment "channel_id", title STRING comment "title", status BIGINT comment "status", update_time STRING comment "update_time") COMMENT "toutiao news_article_basic" row format delimited fields terminated by ',' LOCATION '/user/hive/warehouse/toutiao.db/news_article_basic'; create table news_channel( channel_id BIGINT comment "channel_id", channel_name STRING comment "channel_name", create_time STRING comment "create_time", update_time STRING comment "update_time", sequence BIGINT comment "sequence", is_visible BOOLEAN comment "is_visible", is_default BOOLEAN comment "is_default") COMMENT "toutiao news_channel" row format delimited fields terminated by ',' LOCATION '/user/hive/warehouse/toutiao.db/news_channel'; create table news_article_content( article_id BIGINT comment "article_id", content STRING comment "content") COMMENT "toutiao news_article_content" row format delimited fields terminated by ',' LOCATION '/user/hive/warehouse/toutiao.db/news_article_content';

导入脚本,创建一个脚本文件执行import_incremental.sh脚本:

- -m:指定同时导入的线程数量

- 连接地址以及账号密码,表、目录

- 指定要导入的时间

#!/usr/bin/env bash # ## user_profile #sqoop import \ # --connect jdbc:mysql://192.168.19.137/toutiao \ # --username root \ # --password password \ # --table user_profile \ # --m 4 \ # --target-dir /user/hive/warehouse/toutiao.db/user_profile \ # --incremental lastmodified \ # --check-column update_time \ # --merge-key user_id \ # --last-value "2018-01-01 00:00:00" # # # # 多个文章相似导入 time=`date +"%Y-%m-%d" -d "-1day"` declare -A check check=([user_profile]=update_time [user_basic]=last_login [news_channel]=update_time) declare -A merge merge=([user_profile]=user_id [user_basic]=user_id [news_channel]=channel_id) for k in ${!check[@]} do sqoop import \ --connect jdbc:mysql://192.168.19.137/toutiao \ --username root \ --password password \ --table $k \ --m 4 \ --target-dir /user/hive/warehouse/toutiao.db/$k \ --incremental lastmodified \ --check-column ${check[$k]} \ --merge-key ${merge[$k]} \ --last-value ${time} done # news_article_basic sqoop import \ --connect jdbc:mysql://192.168.19.137/toutiao?tinyInt1isBit=false \ --username root \ --password password \ --m 4 \ --query 'select article_id, user_id, channel_id, REPLACE(REPLACE(REPLACE(title, CHAR(13),""),CHAR(10),""), ",", " ") title, status, update_time from news_article_basic WHERE $CONDITIONS' \ --split-by user_id \ --target-dir /user/hive/warehouse/toutiao.db/news_article_basic \ --incremental lastmodified \ --check-column update_time \ --merge-key article_id \ --last-value ${time} # 全量导入表 sqoop import \ --connect jdbc:mysql://192.168.19.137/toutiao \ --username root \ --password password \ --table news_article_content \ --m 4 \ --hive-home /root/bigdata/hive \ --hive-import \ --hive-drop-import-delims \ --hive-table toutiao.news_article_content \ --hive-overwrite

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· winform 绘制太阳,地球,月球 运作规律

· 超详细:普通电脑也行Windows部署deepseek R1训练数据并当服务器共享给他人

· 上周热点回顾(3.3-3.9)

· AI 智能体引爆开源社区「GitHub 热点速览」

· 写一个简单的SQL生成工具