机器学习应用篇——工业蒸汽数据分析

一、导包

点击查看代码

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns#画图seaborn封装了matp;otlib

from sklearn.linear_model import LinearRegression,Lasso,Ridge,ElasticNet

#从线性模型引用线性回归,螺丝,岭回归,弹性网络

from sklearn.neighbors import KNeighborsRegressor #K近邻回归

from sklearn.ensemble import GradientBoostingRegressor,RandomForestRegressor,AdaBoostRegressor,ExtraTreesRegressor

#集成算法导入加强决策树,随机森林,adaboost等算法

from xgboost import XGBRegressor

from lightgbm import LGBMRegressor

#支持向量机(support vector mechine)

from sklearn.svm import SVR

#添加评价方法

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler,StandardScaler,PolynomialFeatures

#正则化,PolynomialFeatures将属性变多,

import warnings

warnings.filterwarnings("ignore")

二、数据聚合

train = pd.read_csv('./5zhengqi_train.txt',sep='\t')

test = pd.read_csv('./5zhengqi_test.txt',sep='\t')

#导入数据

train['origin'] = 'train'

test['origin'] = 'test'

#给train ,test原始数据上各增加一列新名叫train,test的数据列

data_all = pd.concat([train,test])

#融合

print(data_all.shape)

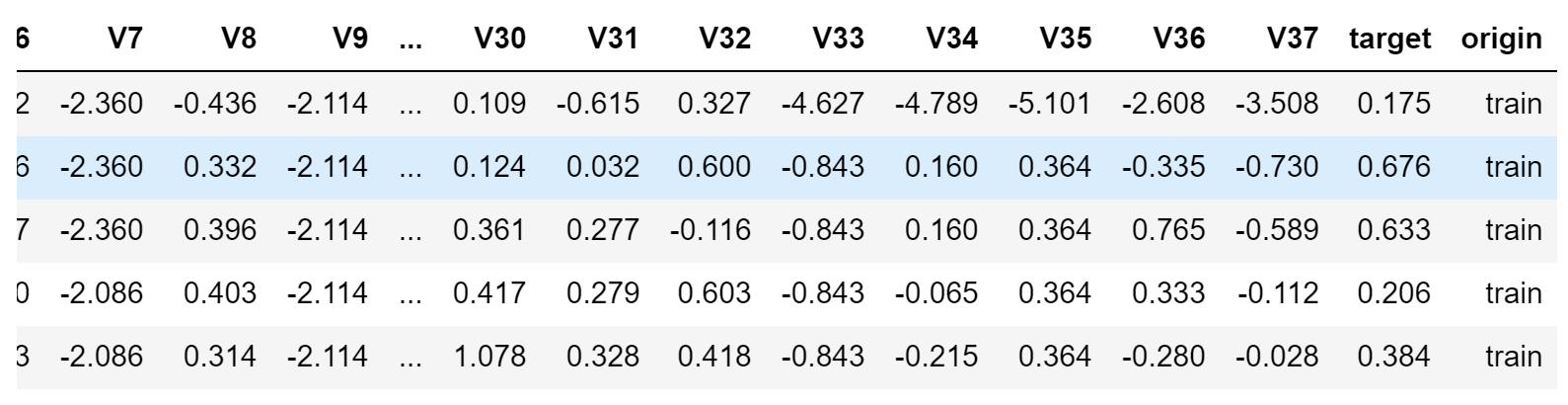

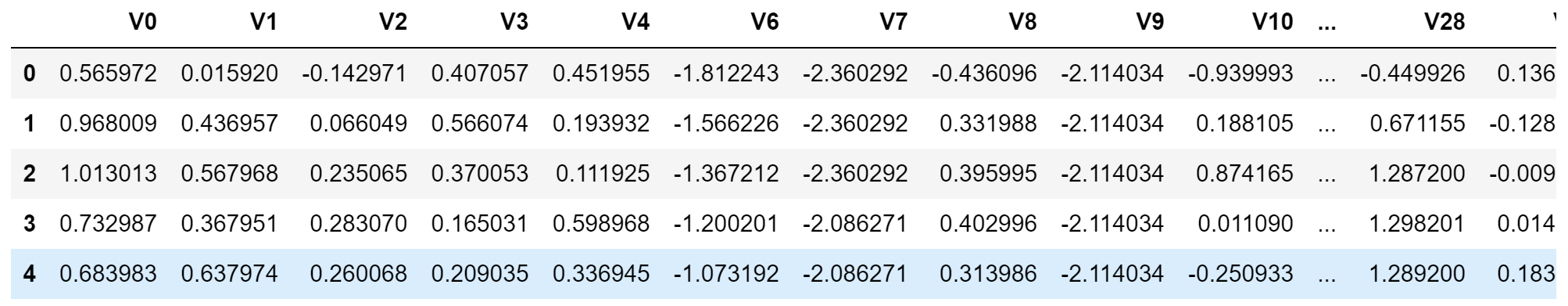

data_all.head()

#执行data_all的前5行数据

train.shape

#共2888个数据,39列

test.shape

#38个特征

三、特征探索

#共有38个特征,将一些不重要的删除

#特征分布情况,训练集与测试集特征分布不均匀的,删除

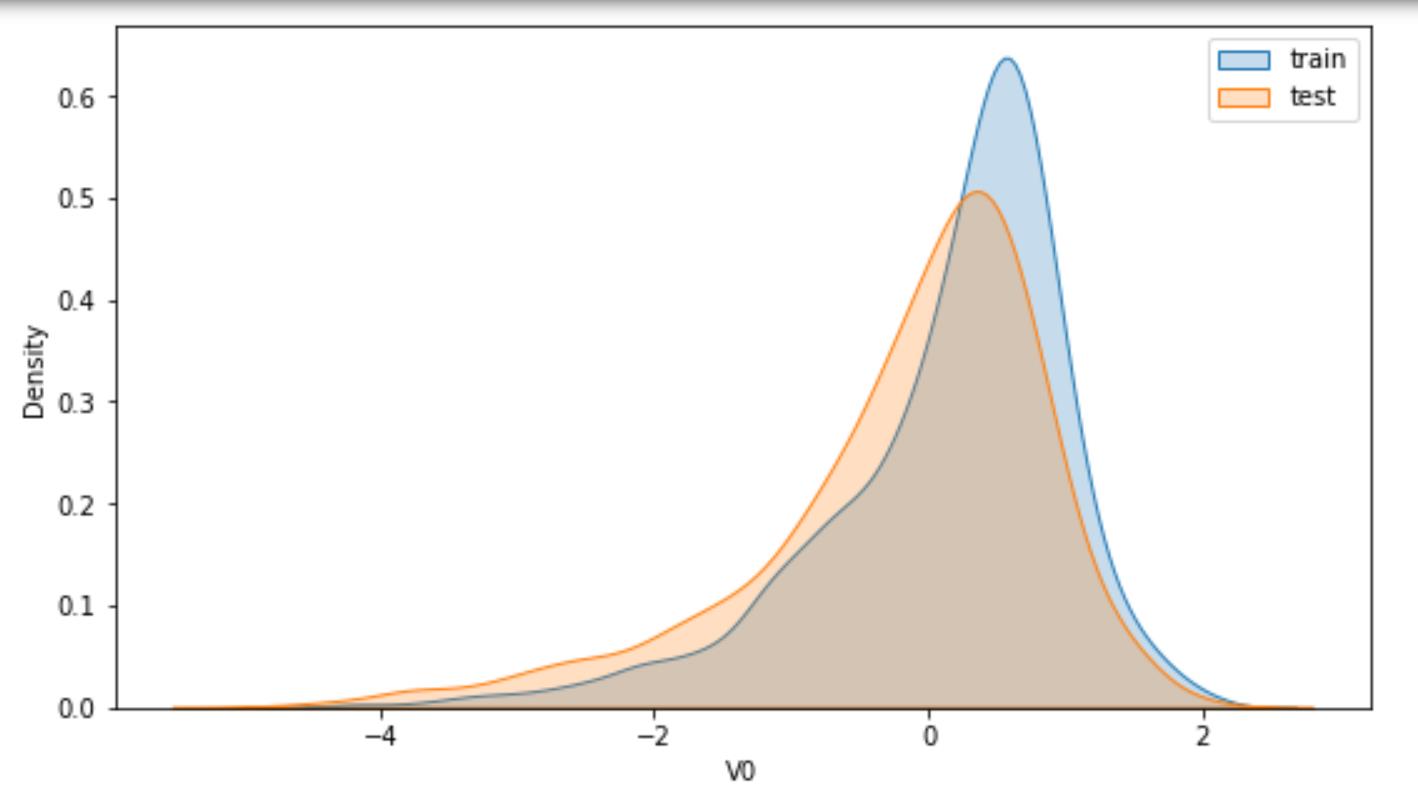

#绘制密度图sns.kdeplot()

plt.figure(figsize=(9,38*6))#设置画布尺寸

for i,col in enumerate(data_all.columns[:-2]):#枚举

cond = data_all['origin'] == 'train'

train_col = data_all[col][cond]#训练数据

cond = data_all['origin'] == 'test'

test_col = data_all[col][cond]#测试数据

axes = plt.subplot(38,1,i+1)#添加多张子图

ax = sns.kdeplot(train_col,shade = True)

sns.kdeplot(test_col,shade = True,ax = ax)

plt.legend(['train','test'])#添加图例

plt.xlabel(col)#添加横坐标是标签

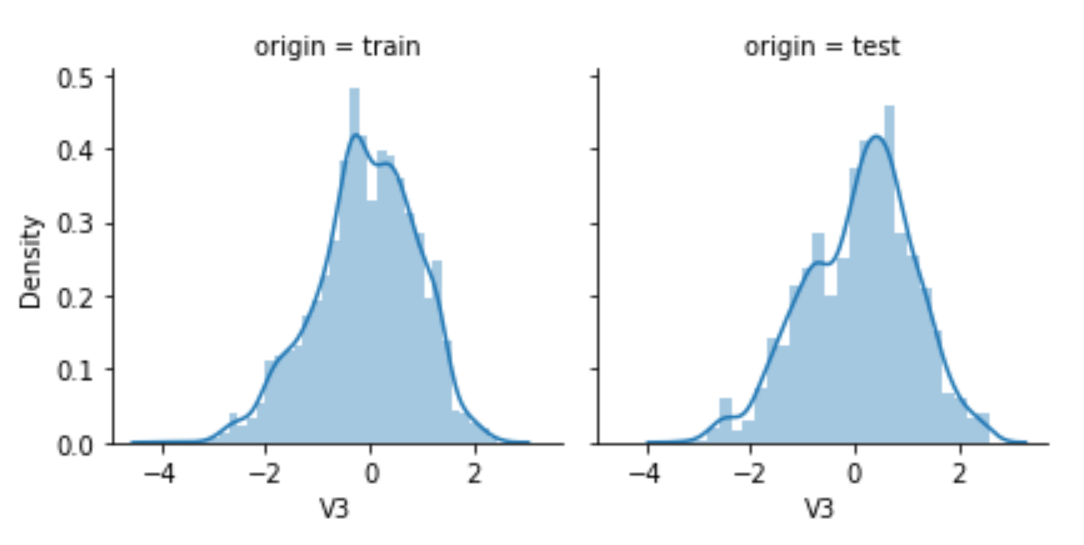

plt.figure(figsize=(9,6))

for col in data_all.columns[:-2]:

g = sns.FacetGrid(data_all,col = 'origin')#grid是网格

g.map(sns.distplot,col)#distribute分布图(方法,字符串)

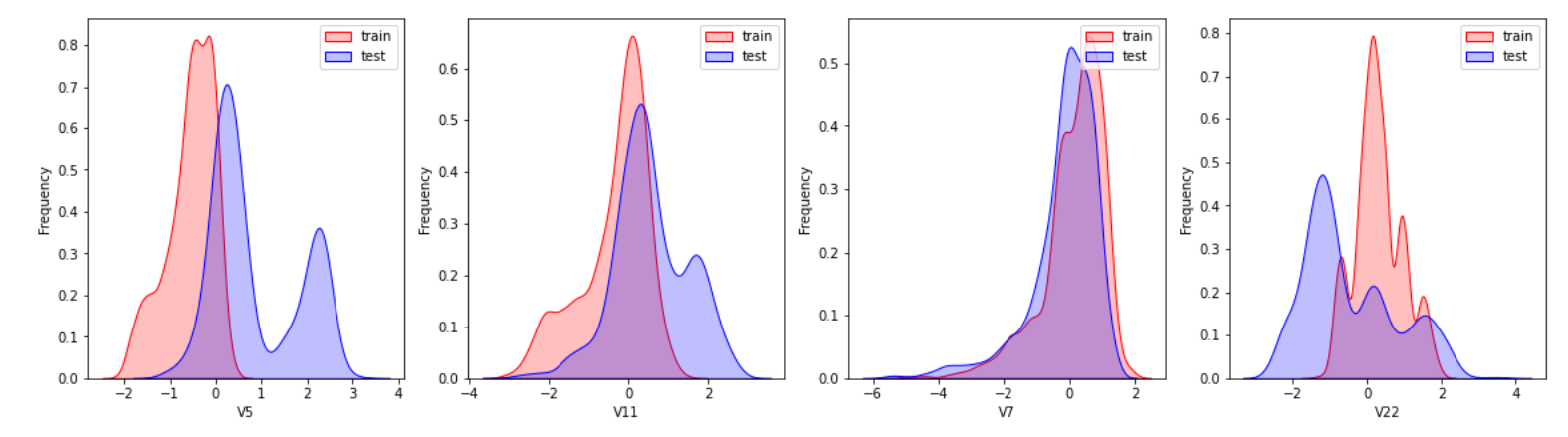

#%%查看v5,v7,v11,v22,的数据分布

drop_col = 6

drop_row = 1

plt.figure(figsize=(5*drop_col,5*drop_row))

i=1

for col in ["V5","V11","V7","V22"]:

ax =plt.subplot(drop_row,drop_col,i)

cond = data_all['origin'] == 'train'

train_col = data_all[col][cond]#训练数据

cond = data_all['origin'] == 'test'

test_col = data_all[col][cond]#测试数据

ax = sns.kdeplot(train_col, color="Red", shade=True)

ax = sns.kdeplot(test_col, color="Blue", shade=True)

ax.set_xlabel(col)

ax.set_ylabel("Frequency")

ax = ax.legend(["train","test"])

i+=1

drop_lables = ['V11','V17','V22','V5']

#标签出拟合模型不好的数据

#删除不好的数据,之前是40列,删除4列,余下36列

data_all.drop(drop_lables,axis=1,inplace=True)

data_all.shape

#1,求融合数据的协方差

cov = data_all.cov()

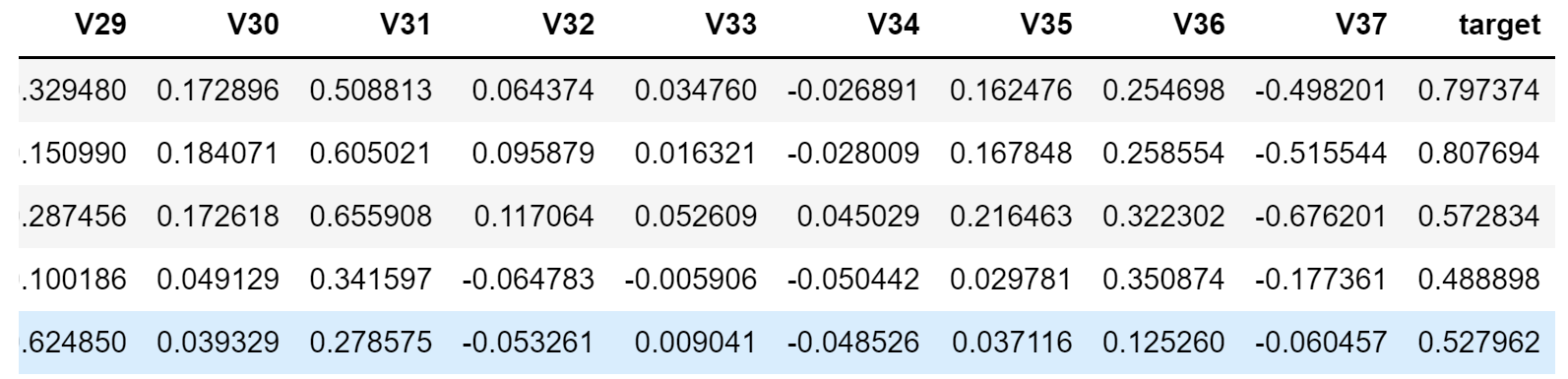

cov.head()

#2.求相关性系数(此处是融合数据)

corr = data_all.corr()

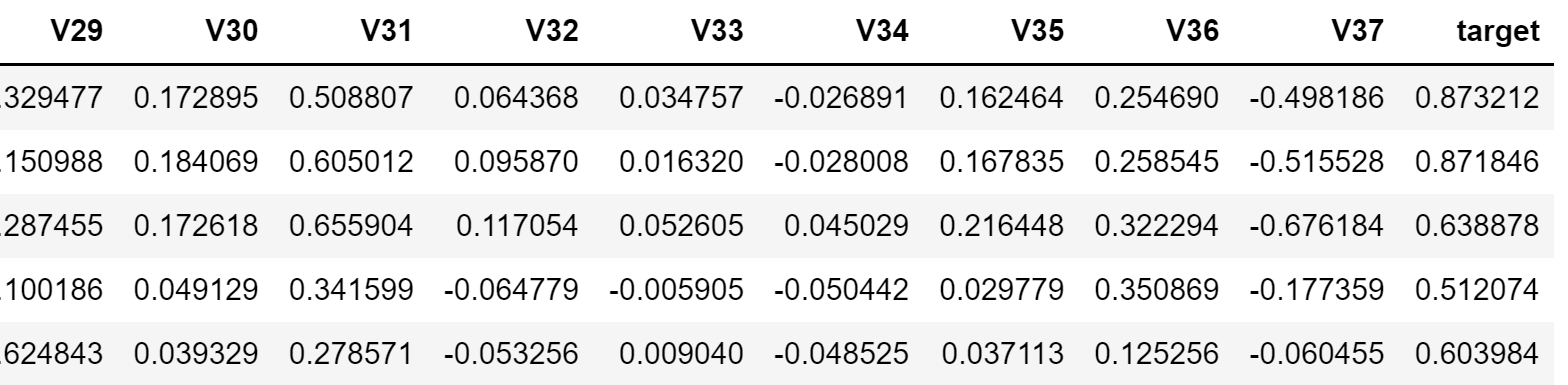

corr.head()

相关性系数=协方差/(属性1的标准差*属性2的标准差)

#选取训练数据的协方差

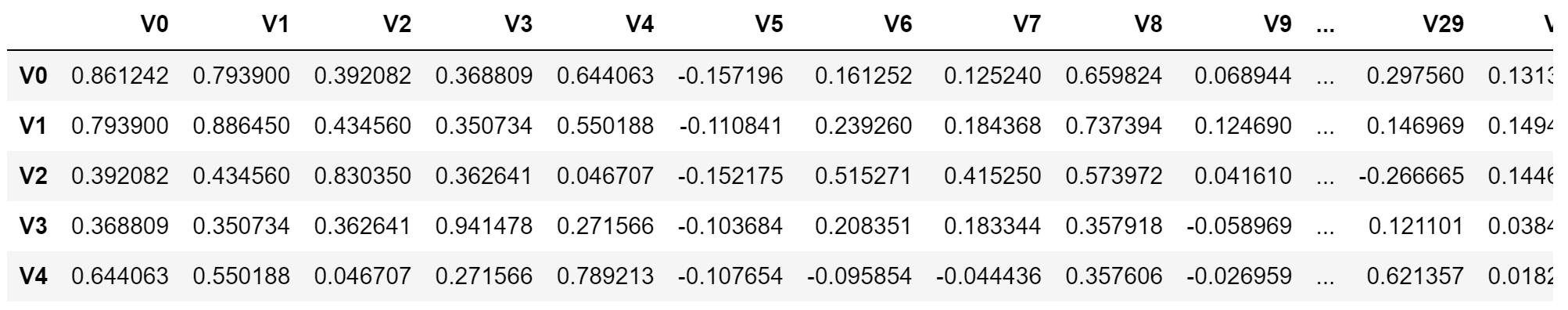

train.cov().head()

#协方差是两个属性之间的关系,如果两个属性一样:方差

#方差是协方差的一种特殊形式

#标准差(Standard Deviation)是方差的算术平方根,

#如:圆是椭圆的一种特殊形式

#导数是偏导数的特殊形式

#例如:如何计算相关系数?

#v1 与v0的协方差是0.793900,分母是v1的标准差(v1的方差0.886450开根号)*v0的标准差(v0的方差0.861242开根号)

0.793900/(0.886450**0.5*0.861242**0.5)

#计算训练数据的相关系数

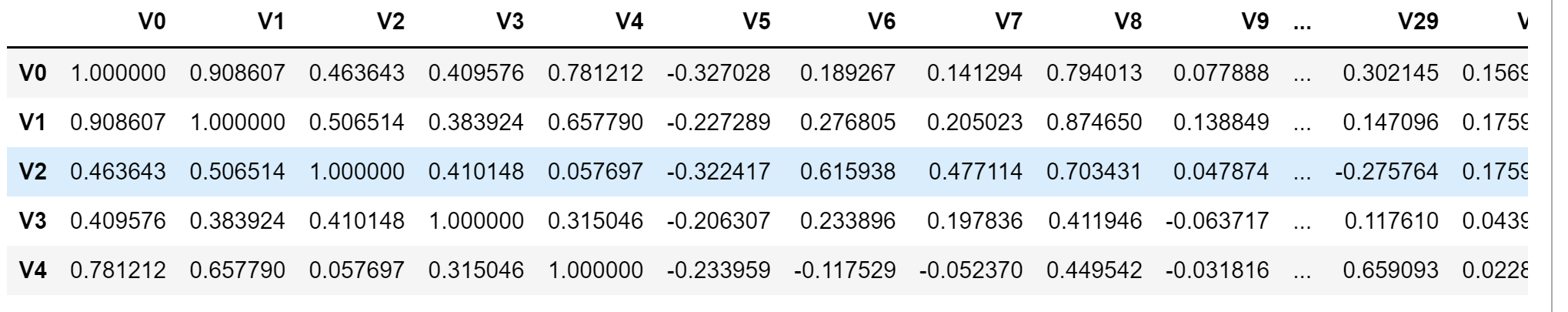

train.corr().head()

#通过相关性系数找到7个相关性不大的属性

cond = corr.loc['target'].abs()<0.1#上述数据加绝对值且小于0.1表示相关不大

drop_lables = corr.loc['target'].index[cond]

drop_lables

#Index(['V14', 'V21', 'V25', 'V26', 'V32', 'V33', 'V34'], dtype='object')这些图需要剔除

#对比查看属性分布,分布不好的删除,'V14', 'V21'需要删除,其他保留

drop_lables = ['V14', 'V21']

data_all.drop(drop_lables,axis = 1,inplace=True)

data_all.shape#上面36-2=34

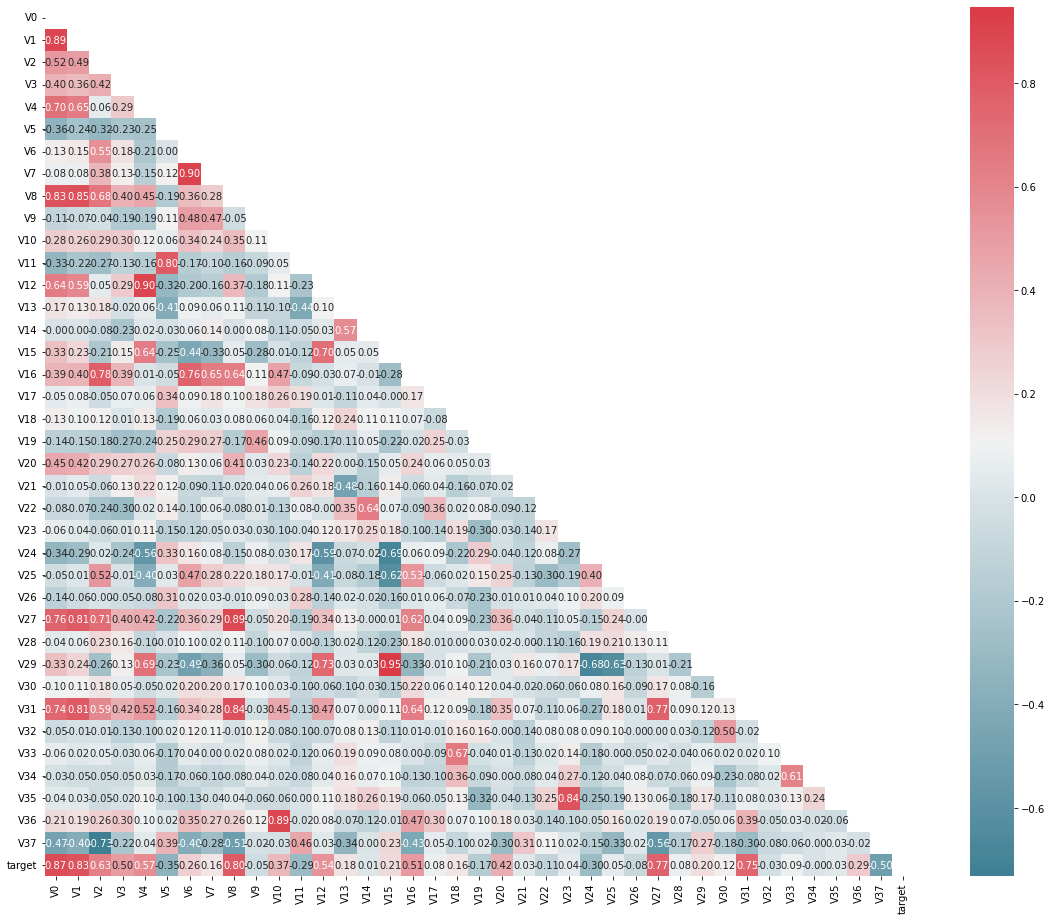

将相关性不大大的数据剔除后画热力图

# 找出相关程度

plt.figure(figsize=(20, 16)) # 指定绘图对象宽度和高度

mcorr = train.corr(method="spearman") # (默认方法)相关系数矩阵,即给出了任意两个变量之间的相关系数

mask = np.zeros_like(mcorr, dtype=np.bool) # 构造与mcorr同维数矩阵 为bool型

mask[np.triu_indices_from(mask)] = True # 上三角为True,【】内是索引

# np.triu_indices_from(mask)是上三角数据的索引

#颜色

cmap = sns.diverging_palette(220, 10, as_cmap=True) # 返回matplotlib colormap对象

g = sns.heatmap(mcorr, mask=mask, cmap=cmap, square=True, annot=True, fmt='0.2f') # 热力图(看两两相似度)

#annot注释

plt.show()

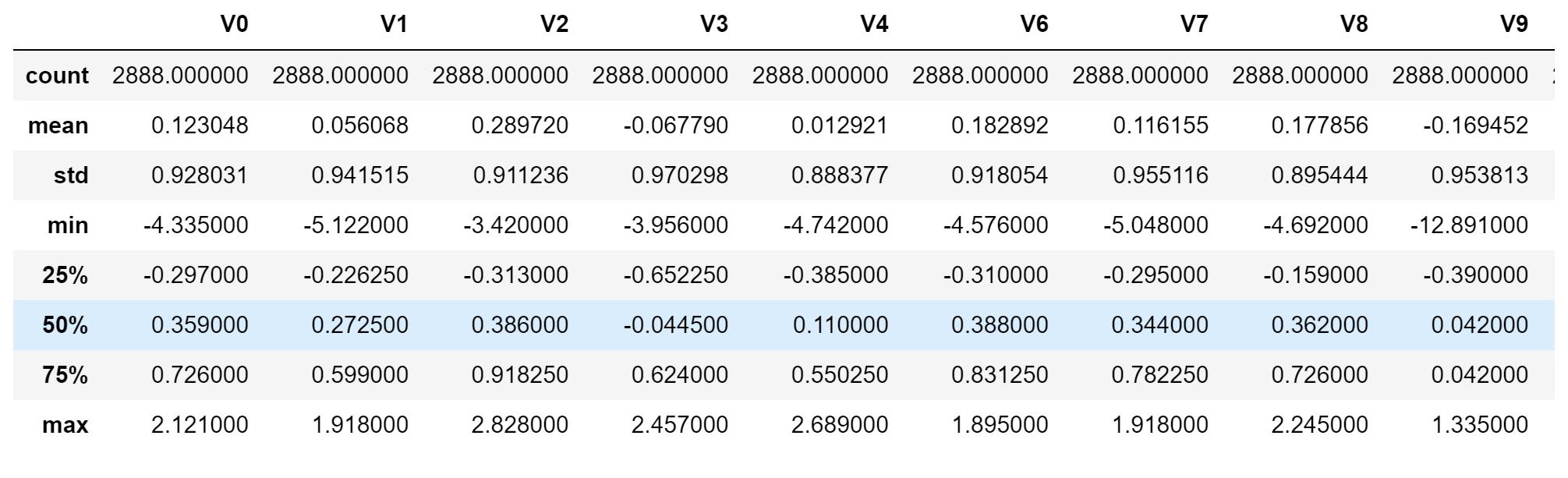

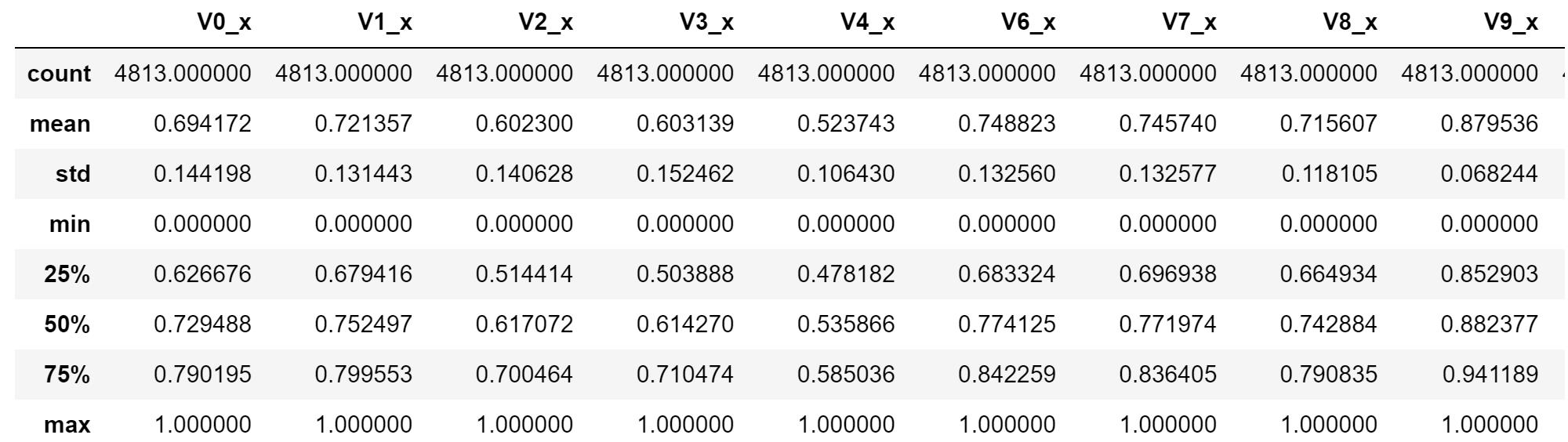

归一化于标准化是处理数据的两个不同的方式

1.标准化

将数据变换为均值为0,标准差为1的分布,切记:并非一定是正态的

data_all[data_all['origin'] == 'train'].describe()#训练数据 的数据情况

data_all[data_all['origin'] == 'test'].describe()#测试数据 的数据情况

#导包声明

#StandardScaler.fit_transform通过去除均值并缩放到单位方差来标准化特征。(归一化操作)

from sklearn.preprocessing import StandardScaler

stand = StandardScaler()

data = data_all.iloc[: , :-2]

#去掉target,origin列

data2 = stand.fit_transform(data)

data2#一维数据

#将一维转化为表格

clos = data_all.columns

data_all_std = pd.DataFrame(data2,columns=clos[:-2])

#注意columns去除最后两列

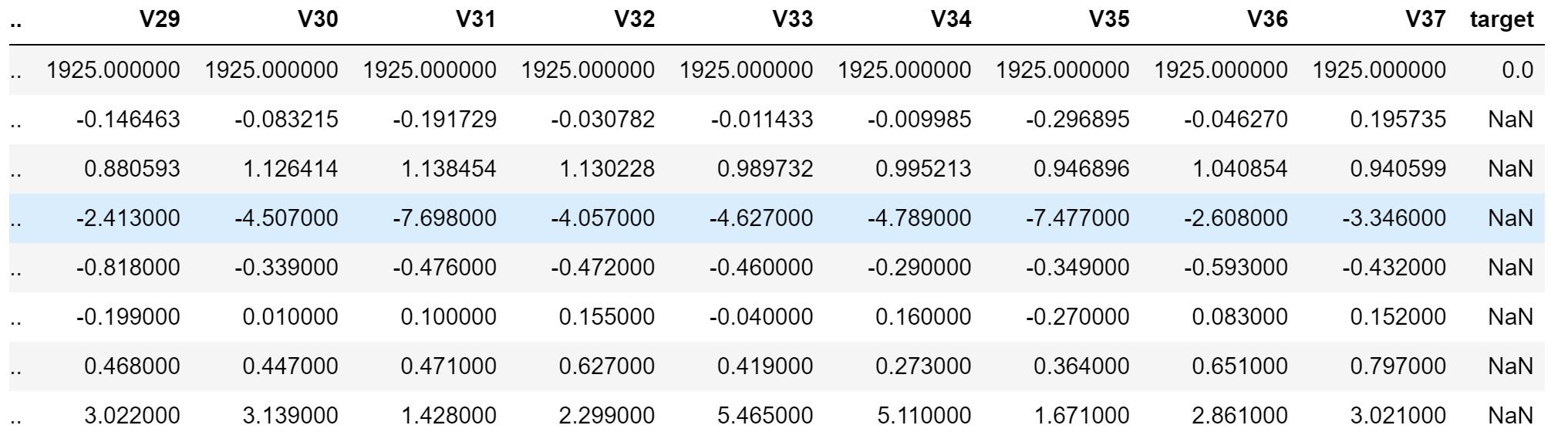

data_all_std

data_all_std = pd.merge(data_all_std,data_all.iloc[:,-2:],right_index=True,left_index==True)

#将标准化数据与元数据切除origin,target数据 融合

data_all_std.head()

使用不同算法预测异常值

#1.回归预测异常值

from sklearn.linear_model import RidgeCV

ridge = RidgeCV(alphas =[0.0001,0.001,0.01,0.1,0.2,0.5,1,2,3,4,5,10,20,30,50])

cond = data_all['origin'] == 'train'#条件是data_all中的origin作为训练数据

X_train = data_all[cond].iloc[:,:-2]

y_train = data_all[cond]['target']#此处是真实值y_train

ridge.fit(X_train,y_train)#经过模型训练,算法拟合数据和目标值的时候,不可能100%拟合

y_ = ridge.predict(X_train)

#预测值y_ 肯定会和真实值由一定偏差,偏差特别大,当成差异值

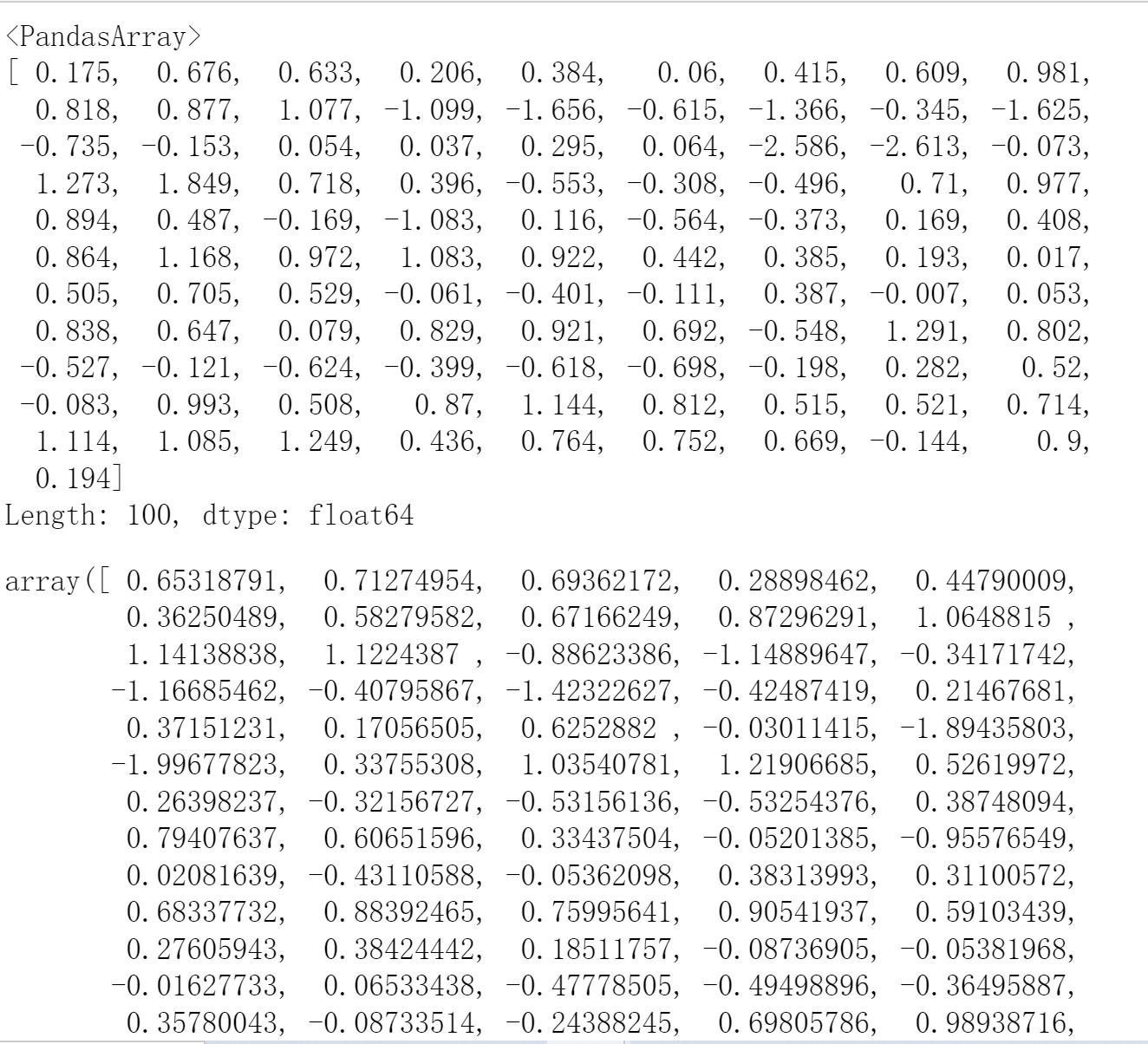

display(y_train[:100].array)

display(y_[:100])

#2.寻找cond异常值:让真实值与预测值比较即可

#此处可以>任何值,此处 y_train.std()约等于1,所以此式子也是利用正态分布找差异

cond = (y_train - y_).abs() > y_train.std()*0.8

cond.sum()

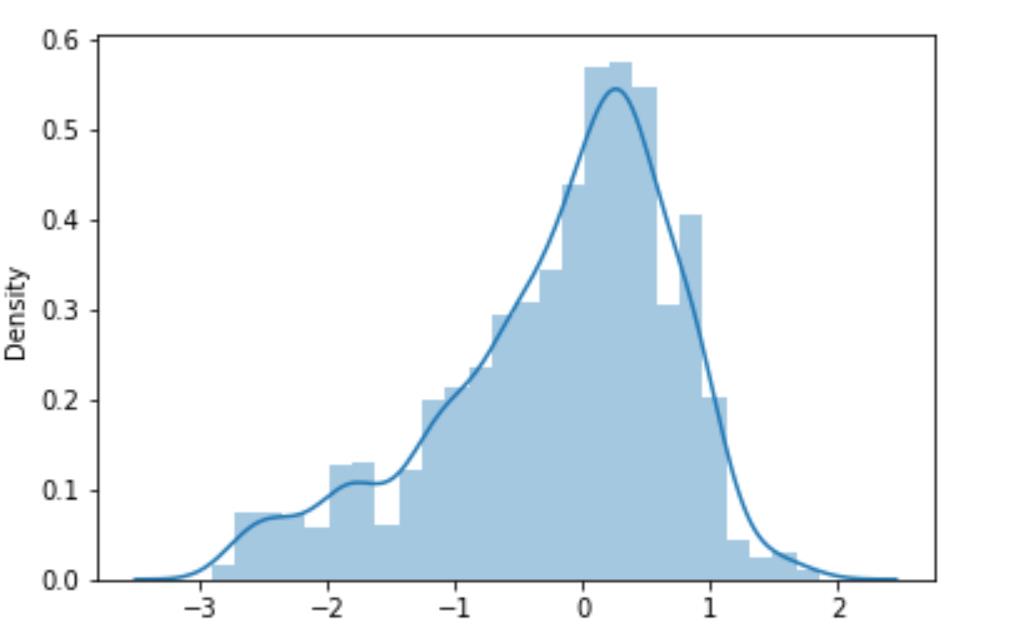

#3.画图

plt.figure(figsize = (12,6))

axes = plt.subplot(1,3,1)#1行3列第一个画布

axes.scatter(y_train,y_)#横坐标是y_train,纵坐标y_预测值

axes.scatter(y_[cond],y_train[cond],c = 'red',s = 20)#将差异值挑出来,大小20.颜色红色

axes = plt.subplot(1,3,2)

axes.scatter(y_train,y_train-y_)

axes.scatter(y_train[cond],(y_train-y_)[cond],c = 'red',s =20)#[]内是条件、索引

axes = plt.subplot(1,3,3)

# _= axes.hist(y_train,bins=50)#柱状图,bins挑宽度,但无法区分出异常值

(y_train-y_).plot.hist(bins = 50,ax=axes)#画出柱状图,同时区分出 差异值的柱状图

(y_train-y_).loc[cond].plot.hist(bins = 50,ax = axes,color = 'r')

#4.将异常值点过滤

cond#异常值是条件为false的值

rop_index = cond[cond].index#将cond转换为true且找出索引

print(data_all.shape)#所有数据的样式

data_all.drop(drop_index,axis=0,inplace=True)#在所有数据的样式上删除差异值的索引

data_all.shape#显示删除后所有数据的样式

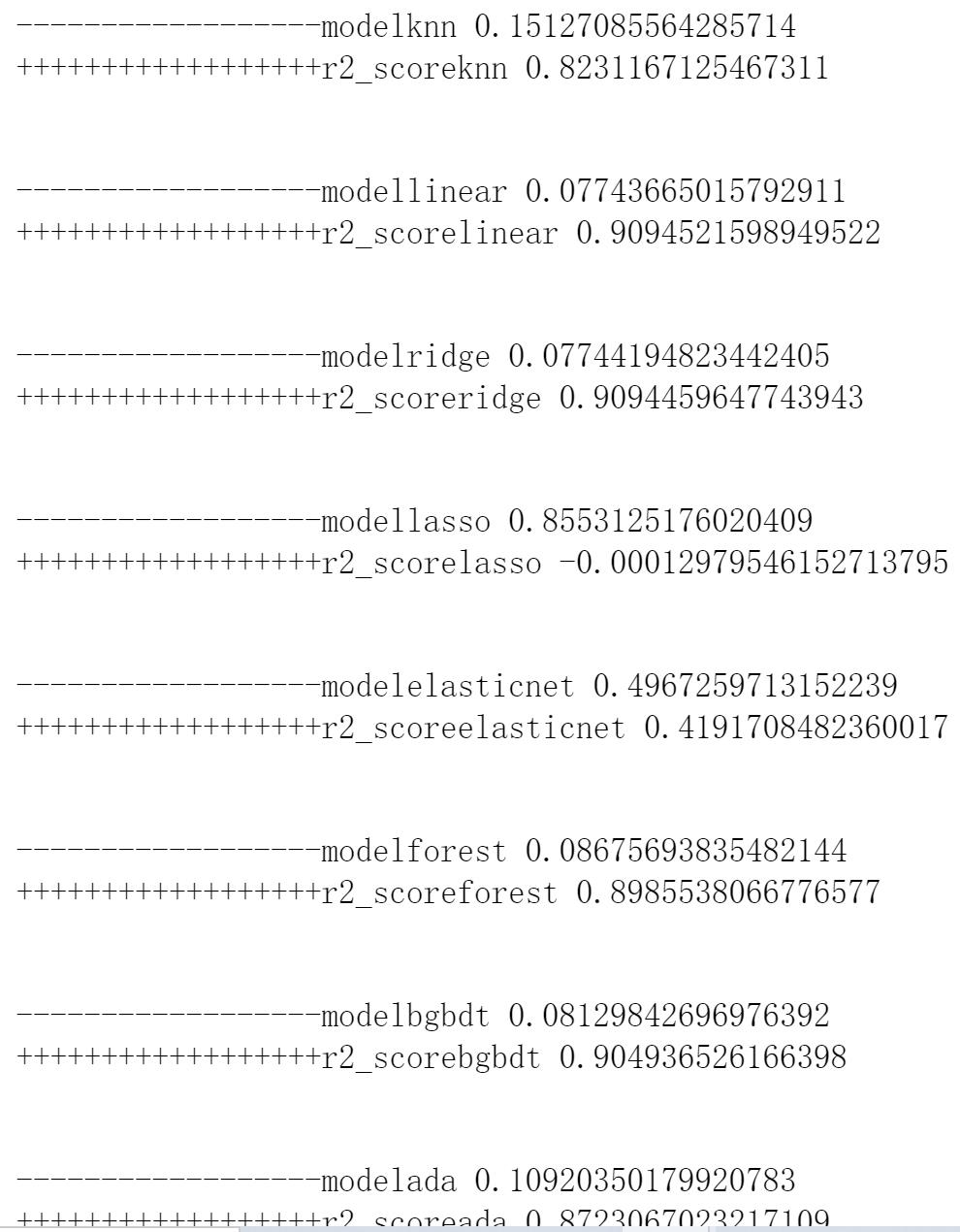

#5.测试各个算法的使用情况

def detect_model(estimators,data):

for key,estimator in estimators.items():

estimator.fit(data[0],data[2])#第一个数据索引,数据

y_ =estimator.predict(data[1])#第二个数据

mse = mean_squared_error(data[3],y_)#均方误差

print('------------------model%s'%(key),mse)#算法

r2 = estimator.score(data[1],data[3])#测试数据

print('++++++++++++++++++r2_score%s'%(key),r2)

print('\n')

cond = data_all['origin'] == 'train'#选择训练数据

X = data_all[cond].iloc[:,:-2]

y = data_all[cond]['target']#目标值

data = train_test_split(X,y,test_size=0.2)#返回数据

estimators = {}

estimators['knn'] = KNeighborsRegressor()

estimators['linear'] = LinearRegression()

estimators['ridge'] = Ridge()

estimators['lasso'] = Lasso()

estimators['elasticnet'] = ElasticNet()

estimators['forest'] = RandomForestRegressor()

estimators['bgbdt'] = GradientBoostingRegressor()

estimators['ada'] = AdaBoostRegressor()

estimators['extreme'] = ExtraTreesRegressor()

estimators['svm_rbf'] = SVR(kernel='rbf')

estimators['svm_poly'] = SVR(kernel='poly')

# estimators['light'] = LGBMRegressor()

estimators['xgb'] = XGBRegressor()

detect_model(estimators,data)#将data ,estimators放在函数中

对于我们的测试数据而言,KNN,Lasso,ElasticNet,SVM_poly都不太好

#6.保留以下算法

estimators = {}

# estimators['linear'] = LinearRegression()

# estimators['ridge'] = Ridge()

estimators['forest'] = RandomForestRegressor()

estimators['bgbdt'] = GradientBoostingRegressor()

estimators['ada'] = AdaBoostRegressor()

estimators['extreme'] = ExtraTreesRegressor()

estimators['svm_rbf'] = SVR(kernel='rbf')

estimators['light'] = LGBMRegressor()

estimators['xgb'] = XGBRegressor()

#7.数据:重新选择训练集,测试集

cond = data_all['origin'] == 'train'

X_train = data_all[cond].iloc[:,:-2]

y_train = data_all[cond]['target']

cond = data_all['origin'] =='test'

X_test = data_all[cond].iloc[:,:-2]

#8.一个算法预测结果,将结果合并

y_pred = []#将各个算法的测试结果放在列表中

for key,model in estimators.items():

model.fit(X_train,y_train)

y_ = model.predict(X_test)

y_pred.append(y_)

y_ = np.mean(y_pred,axis=0)#多个算法求均值

pd.Series(y_).to_csv('./ensemble.txt',index = False)#保存数据文件

#9.预测的结果作为新特征,让我们的算法学习,寻找数据和目标值之间的关系

#y_是预测值,和真实值之间的差距,将预测值当作新的训练特征,让算法进行再学习

for key,model in estimators.items():

model.fit(X_train,y_train)

y_ = model.predict(X_train)

X_train[key] = y_

y_ = model.predict(X_test)

X_test[key] = y_

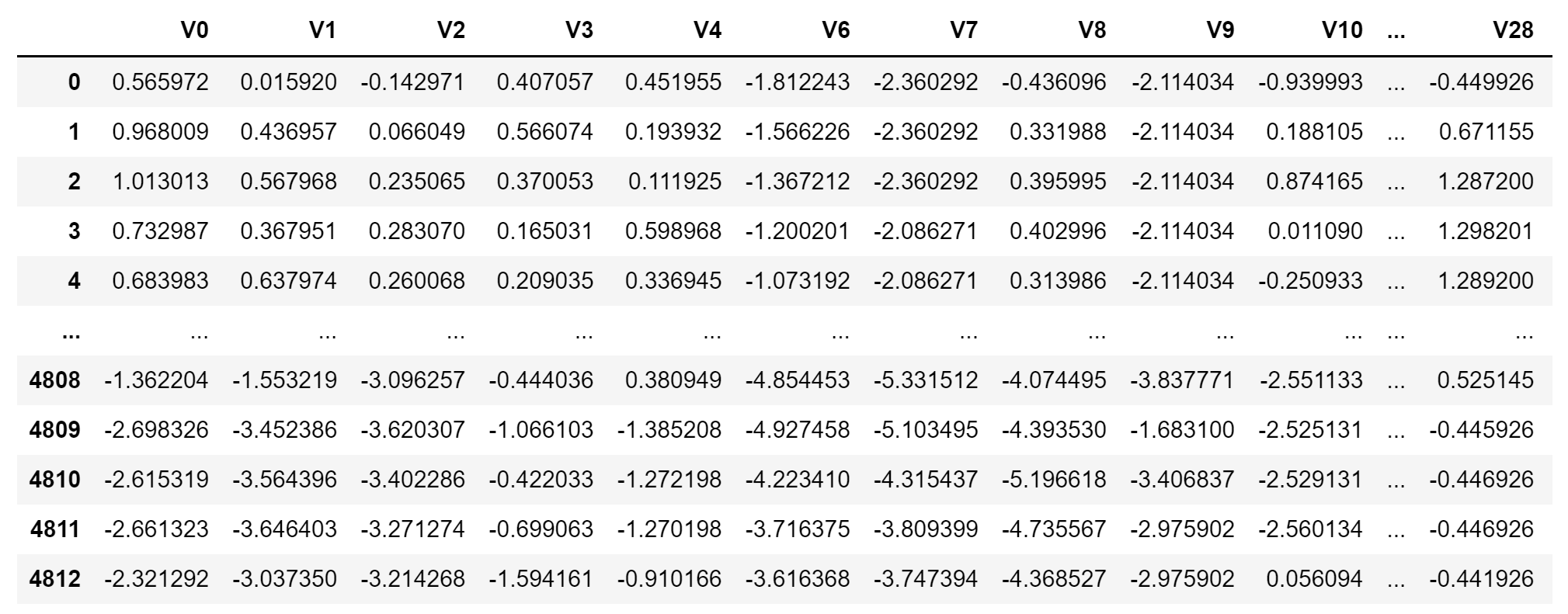

X_train.head()

X_test.head()

#10.反复调整预测数据

y_pred = []#将各个算法的测试结果放在列表中

for key,model in estimators.items():

model.fit(X_train,y_train)

y_ = model.predict(X_test)

y_pred.append(y_)

y_ = np.mean(y_pred,axis=0)

sns.distplot(y_)#预测结果也属于正态分布

y_ += np.random.randn(1925)*0.1#继续调整预测值,更加属于正态分布

pd.Series(y_).to_csv('./ensemble2.txt',index = False)

2.归一化

minmaxscaler = MinMaxScaler()#声明函数

data3 = minmaxscaler.fit_transform(data_all_std)

data3#是一个numpy

# 归一化操作

data_all_norm = pd.DataFrame(data3,columns=data_all_std.columns)

data_all_std

data_all_norm = pd.merge(data_all_norm,data_all_std,left_index = True,right_index = True)#集联两个数据

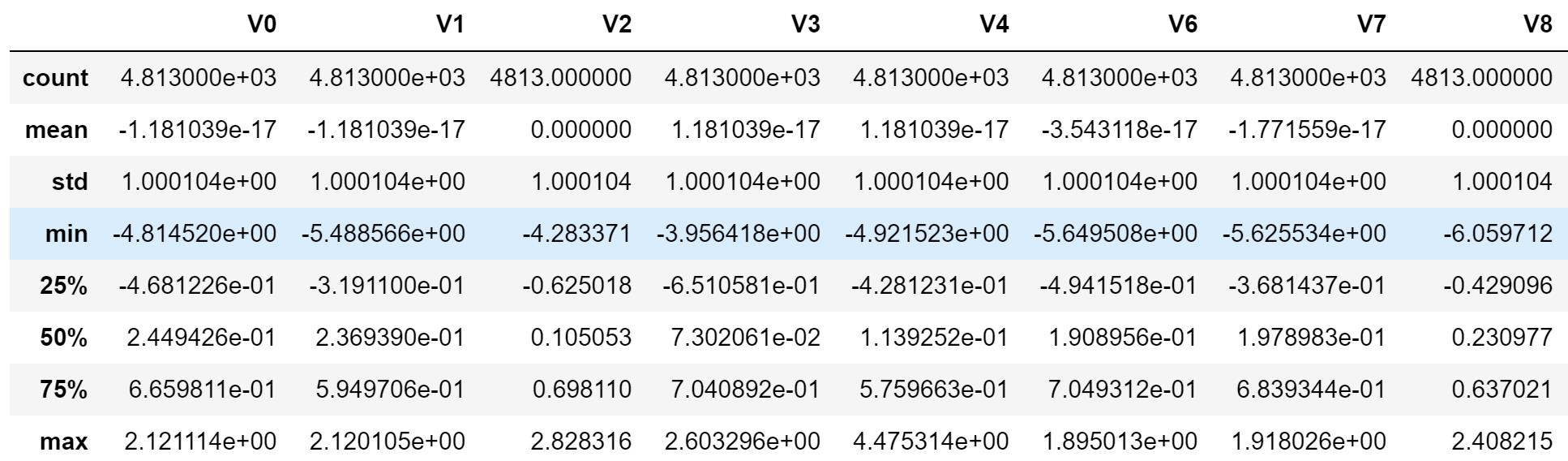

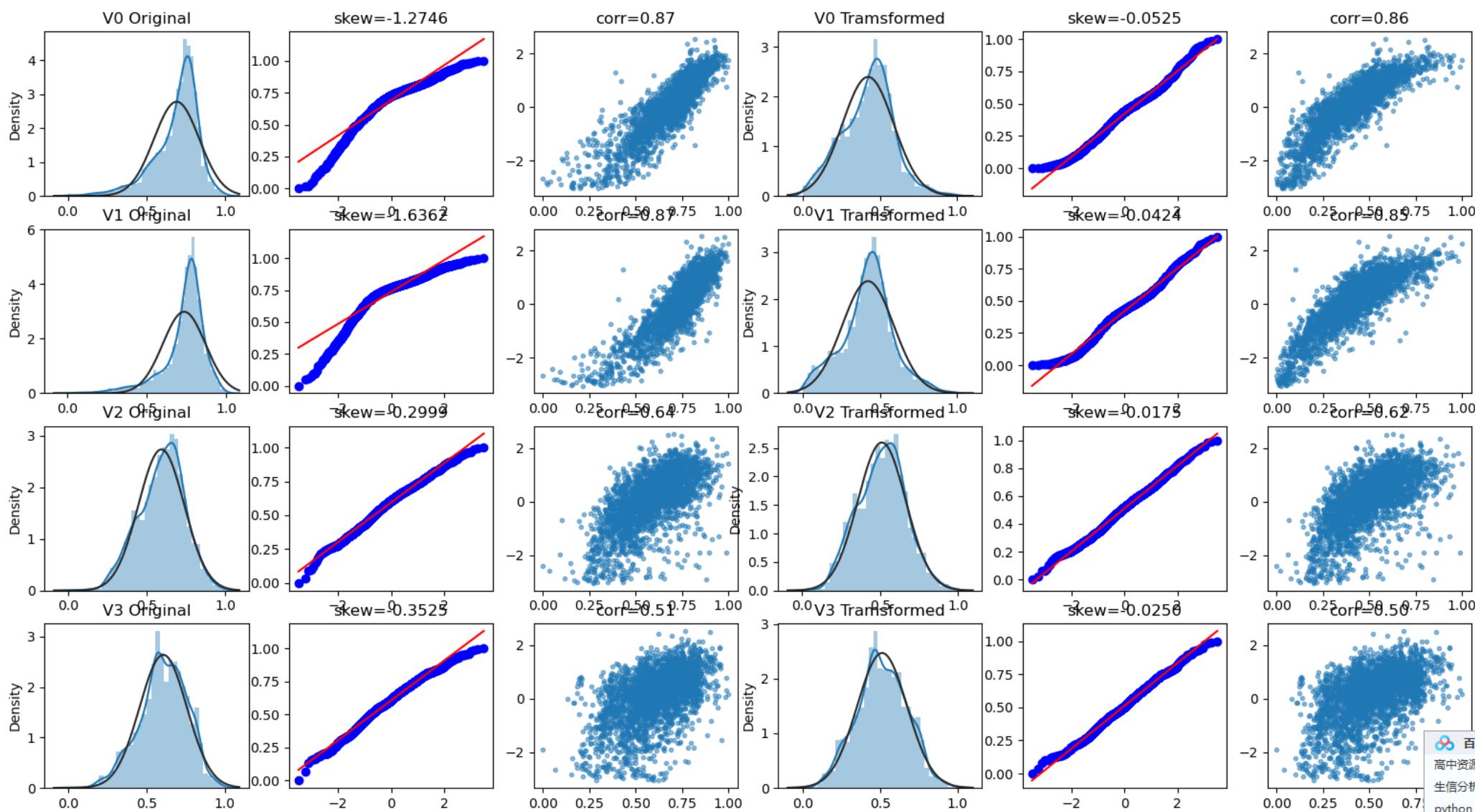

data_all_norm.describe()#归一化

data_all_std.describe()#标准化

此处需要继续优化

#%%查看v0-v3四个特征的箱盒图,查看其分布是否符合正态分布

#Check effect of Box-Cox transforms on distributions of continuous variables

# 检验Box-Cox变换对连续变量分布的影响

#pip install scipy

from scipy import stats

fcols = 6

frows = len(data_all_norm.columns)

plt.figure(figsize=(4*fcols,4*frows))

i=0

for col in data_all_norm.columns:

dat = data_all_norm[[col,]].dropna()#data_all_norm归一化之后的深度数据

#第一个图:这条线就是数据分布dist,distribution(分布)

i+=1

plt.subplot(frows,fcols,i)

sns.distplot(dat[col] , fit=stats.norm);#fit画出正态分布的标准的黑线

plt.title(col+' Original')

plt.xlabel('')

#第二个图,skew统计分析中的一个属性

#skewness 偏斜系数,对正态分布的度量

i+=1

plt.subplot(frows,fcols,i)

_=stats.probplot(dat[col], plot=plt)#画图,偏斜度

plt.title('skew='+'{:.4f}'.format(stats.skew(dat[col])))

plt.xlabel('')

plt.ylabel('')

#第三个:散点图,

i+=1

plt.subplot(frows,fcols,i)

# plt.plot(dat var, dat['target'],'.',alpha=0.5)

plt.scatter(dat[col],dat['target'],alpha = 0.5)#属性于目标值的坐标图

plt.title('corr='+'{:.2f}'.format(np.corrcoef(dat[col], dat['target'])[0][1]))#相关性系数

#对数据进行进一步stats.boxcox处理

# 数据分布图

i+=1

plt.subplot(frows,fcols,i)

trans_var, lambda_var = stats.boxcox(dat[col].dropna()+1)

trans_var = scale_minmax(trans_var)#转化后的数据是缩放的数据

sns.distplot(trans_var , fit=stats.norm);

plt.title(var+' Tramsformed')

plt.xlabel('')

#偏斜度

i+=1

plt.subplot(frows,fcols,i)

_=stats.probplot(trans_var, plot=plt)

plt.title('skew='+'{:.4f}'.format(stats.skew(trans_var)))

plt.xlabel('')

plt.ylabel('')

i+=1

plt.subplot(frows,fcols,i)

plt.plot(trans_var, dat['target'],'.',alpha=0.5)

plt.title('corr='+'{:.2f}'.format(nabsp.corrcoef(trans_var,dat['target'])[0][1]))

四、特征优化

#将数据进行BOX-COX转换

#统计建模中常用的数据变换

#数据更加正正态化,标准化

for col in data_all_norm.columns[:-2]:

boxcox ,maxlog = stats.boxcox(data_all_norm[col] + 1)

#stats.boxcox返回两个值

data_all_norm[col] = scale_minmax(boxcox)

过滤异常值

ridge = RidgeCV(alphas =[0.0001,0.001,0.01,0.1,0.2,0.5,1,2,3,4,5,10,20,30,50])

cond = data_all_norm['origin'] == 'train'#条件是data_all中的origin作为训练数据

X_train = data_all_norm[cond].iloc[:,:-2]

y_train = data_all_norm[cond]['target']#此处是真实值y_train

ridge.fit(X_train,y_train)#经过模型训练,算法拟合数据和目标值的时候,不可能100%拟合

y_ = ridge.predict(X_train)

#预测值y_ 坑定会和真实值由一定偏差,偏差特别大,当成差异值

cond = abs(y_-y_train)>y_train.std()

print(cond.sum())

#画图

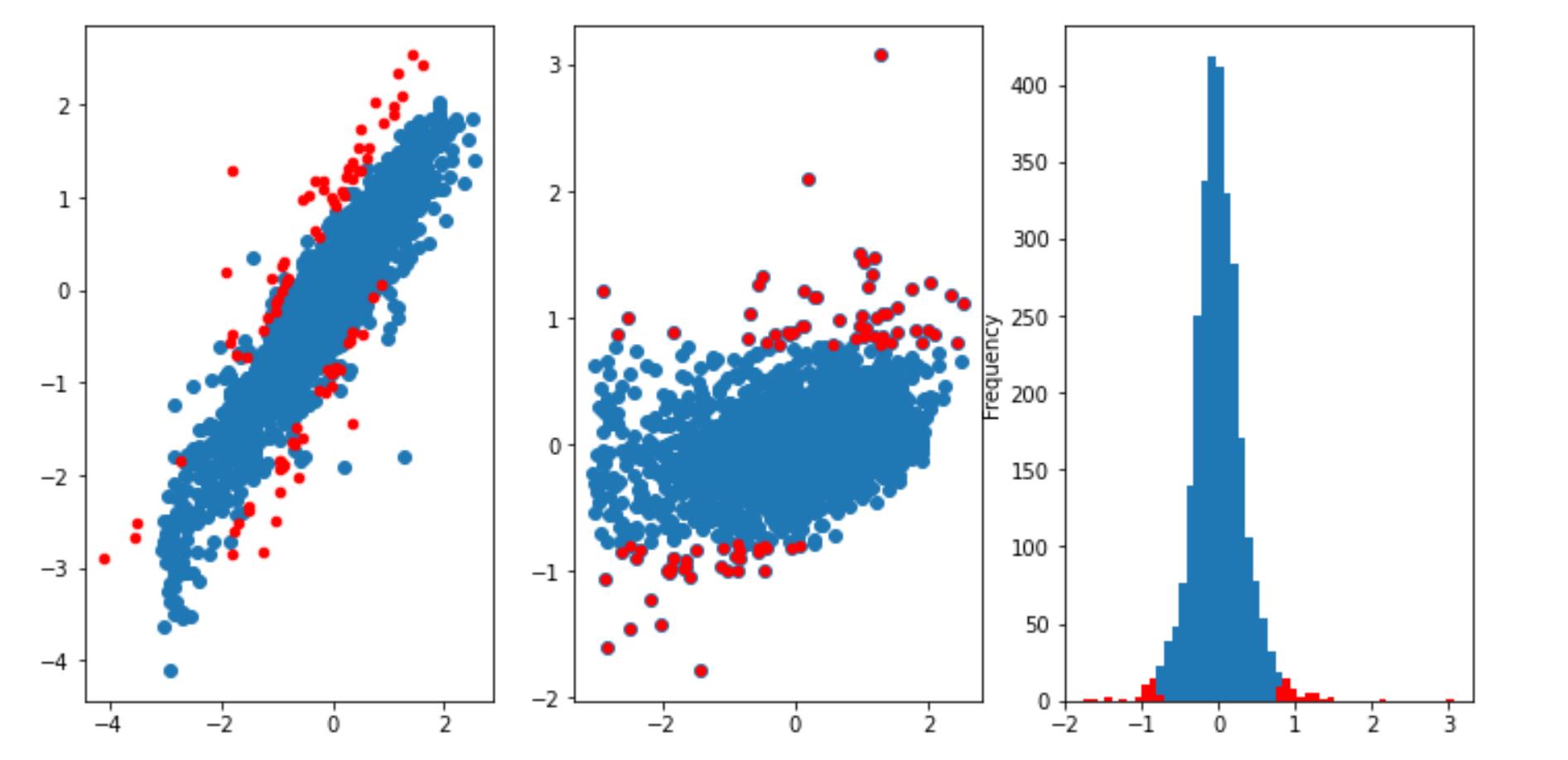

plt.figure(figsize = (12,6))

axes = plt.subplot(1,3,1)#1行3列第一个画布

axes.scatter(y_train,y_)#横坐标是y_train,纵坐标y_预测值

axes.scatter(y_[cond],y_train[cond],c = 'red',s = 20)#将差异值挑出来,大小20.颜色红色

axes = plt.subplot(1,3,2)

axes.scatter(y_train,y_train-y_)

axes.scatter(y_train[cond],(y_train-y_)[cond],c = 'red',s =20)#[]内是条件、索引

axes = plt.subplot(1,3,3)

# _= axes.hist(y_train,bins=50)#柱状图,bins挑宽度,但无法区分出异常值

(y_train-y_).plot.hist(bins = 50,ax=axes)#画出柱状图,同时区分出 差异值的柱状图

(y_train-y_).loc[cond].plot.hist(bins = 50,ax = axes,color = 'r')

index = cond[cond]index

data_all_norm.drop(index,axis = 0,inplace = T)

#删除过后差异值,记下来训练数据

data_all_norm['origin'] == 'train'

X_train = data_all_norm[cond].iloc[:,:-2]

y_train = data_all_norm[cond]['target']

cond = data_all_norm['origin'] == 'test'

X_test =data_all_norm[cond].iloc[:,:-2]

#使用算法

estimators = {}

# estimators['linear'] = LinearRegression()

# estimators['ridge'] = Ridge()

estimators['forest'] = RandomForestRegressor()

estimators['bgbdt'] = GradientBoostingRegressor()

estimators['ada'] = AdaBoostRegressor()

estimators['extreme'] = ExtraTreesRegressor()

estimators['svm_rbf'] = SVR(kernel='rbf')

estimators['light'] = LGBMRegressor()

estimators['xgb'] = XGBRegressor()

#一个算法预测结果,将结果合并

y_pred = []#将各个算法的测试结果放在列表中

for key,model in estimators.items():

model.fit(X_train,y_train)

y_ = model.predict(X_test)

y_pred.append(y_)

y_ = np.mean(y_pred,axis=0)

pd.Series(y_).to_csv('./norm.txt',index = False)

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 【自荐】一款简洁、开源的在线白板工具 Drawnix

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本