LLM Training and Fine-Tuning: LLaMA-Factory

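LLaMA-Factory is a training framework that integrates the mainstream efficient training and fine-tuning techniques and supports the major open-source models. It is feature-rich and adapts well to different setups.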

Installing LLaMA Factory

conda create -n llamafactory python=3.8.0
conda activate llamafactory
git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git
cd LLaMA-Factory
pip install -e ".[torch,metrics]"

To enable quantized LoRA (QLoRA) on Windows, install a prebuilt bitsandbytes library. The prebuilt wheels support CUDA 11.1 to 12.2, so pick the one that matches your CUDA version.

https://github.com/jllllll/bitsandbytes-windows-webui/releases/tag/wheels

pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.41.2.post2-py3-none-win_amd64.whl

To enable FlashAttention-2 on Windows, install a prebuilt flash-attn library. The prebuilt builds support CUDA 12.1 to 12.2, so choose the one that matches your CUDA version.

https://github.com/bdashore3/flash-attention/releases
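Before downloading either wheel, it can help to check which CUDA version you are on. The Python sketch below compares a version string against the ranges quoted above: CUDA 11.1 to 12.2 for bitsandbytes and 12.1 to 12.2 for flash-attn.

It assumes that the version PyTorch reports through `torch.version.cuda` is the one that matters. That value is only a proxy for your installed CUDA toolkit. The helper names are hypothetical.

```python
import re
from typing import Optional, Tuple

Version = Tuple[int, int]

# Inclusive CUDA ranges quoted in the text for the prebuilt Windows wheels.
BNB_RANGE: Tuple[Version, Version] = ((11, 1), (12, 2))         # bitsandbytes (QLoRA)
FLASH_ATTN_RANGE: Tuple[Version, Version] = ((12, 1), (12, 2))  # flash-attn (FlashAttention-2)


def parse_cuda_version(version: str) -> Version:
    """Turn a string such as '12.1' into the tuple (12, 1)."""
    match = re.match(r"(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized CUDA version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def in_range(version: str, bounds: Tuple[Version, Version]) -> bool:
    """Return True if the version lies inside the inclusive (low, high) bounds."""
    low, high = bounds
    return low <= parse_cuda_version(version) <= high


def detect_cuda_version() -> Optional[str]:
    """CUDA version PyTorch was built with, or None if torch is missing or CPU-only."""
    try:
        import torch
    except ImportError:
        return None
    return torch.version.cuda


if __name__ == "__main__":
    cuda = detect_cuda_version()
    if cuda is None:
        print("No CUDA-enabled PyTorch build found.")
    else:
        print(f"PyTorch reports CUDA {cuda}")
        print("within bitsandbytes wheel range:", in_range(cuda, BNB_RANGE))
        print("within flash-attn wheel range:", in_range(cuda, FLASH_ATTN_RANGE))
```

Comparing `(major, minor)` tuples avoids the pitfalls of comparing version strings directly, where "12.10" would sort before "12.2".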

Data Preparation

The /data directory of LLaMA-Factory contains example datasets in the Alpaca-style format:

[
  {
    "instruction": "hello",
    "input": "",
    "output": "Hi, I can sing, dance and play basketball."
  },
  ...
  {
    "instruction": "",
    "input": "",
    "output": ""
  }
]
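A quick way to catch formatting mistakes before training is to load the file and check every record. This minimal sketch mirrors the example above: each record must be an object with string `instruction`, `input`, and `output` fields.

It is an illustrative check, and the function name is hypothetical. It may be stricter than what LLaMA-Factory itself enforces.

```python
import json
from typing import Any, List

# Keys taken from the example records above.
REQUIRED_KEYS = ("instruction", "input", "output")


def validate_alpaca_records(records: Any) -> List[str]:
    """Return a list of problems found; an empty list means the data looks valid."""
    if not isinstance(records, list):
        return ["top level must be a JSON array"]
    problems: List[str] = []
    for i, record in enumerate(records):
        if not isinstance(record, dict):
            problems.append(f"record {i}: expected an object")
            continue
        for key in REQUIRED_KEYS:
            if key not in record:
                problems.append(f"record {i}: missing {key!r}")
            elif not isinstance(record[key], str):
                problems.append(f"record {i}: {key!r} must be a string")
    return problems


if __name__ == "__main__":
    sample = json.loads('[{"instruction": "hello", "input": "", "output": "Hi!"}]')
    print(validate_alpaca_records(sample))  # -> []
```

To check a real file, pass it the result of `json.load` on the dataset file and print any problems it reports.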

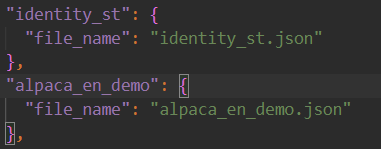

To use your own dataset, add an entry for it to dataset_info.json in the /data directory.
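The registration step can be scripted. The sketch below assumes the `file_name`/`columns` entry layout described in LLaMA-Factory's data/README.md. In that layout, `prompt`, `query`, and `response` map to the `instruction`, `input`, and `output` keys.

The `columns` mapping is written out explicitly here for clarity. `register_dataset` and `my_dataset` are hypothetical names. Check the README of your installed version before relying on this layout.

```python
import json
from pathlib import Path
from typing import Dict, List


def register_dataset(data_dir: Path, name: str, records: List[Dict[str, str]]) -> Path:
    """Write records to <data_dir>/<name>.json and add an entry to dataset_info.json."""
    data_dir = Path(data_dir)
    data_file = data_dir / f"{name}.json"
    data_file.write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")

    # Load the existing registry (if any) so other datasets are preserved.
    info_path = data_dir / "dataset_info.json"
    info = json.loads(info_path.read_text(encoding="utf-8")) if info_path.exists() else {}

    # Assumed entry layout: file name plus a mapping from LLaMA-Factory's
    # column roles to the Alpaca-style keys used in the records.
    info[name] = {
        "file_name": data_file.name,
        "columns": {"prompt": "instruction", "query": "input", "response": "output"},
    }
    info_path.write_text(json.dumps(info, ensure_ascii=False, indent=2), encoding="utf-8")
    return data_file


if __name__ == "__main__":
    import tempfile

    # Demo in a throwaway directory; point data_dir at LLaMA-Factory's data/ for real use.
    with tempfile.TemporaryDirectory() as tmp:
        register_dataset(Path(tmp), "my_dataset",
                         [{"instruction": "hello", "input": "", "output": "Hi!"}])
        print((Path(tmp) / "dataset_info.json").read_text(encoding="utf-8"))
```

After registering, the dataset name (here `my_dataset`) is what goes into the `dataset` field of the training YAML.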

Quick Start

The following three commands run LoRA fine-tuning, inference, and merging, respectively, for the Llama3-8B-Instruct model:

llamafactory-cli train examples/lora_single_gpu/llama3_lora_sft.yaml
llamafactory-cli chat examples/inference/llama3_lora_sft.yaml
llamafactory-cli export examples/merge_lora/llama3_lora_sft.yaml

The parameters for each command are set in its YAML file. Edit that file to change them.
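To illustrate editing those YAML files, the sketch below merges overrides into a base config and renders flat `key: value` YAML using only the standard library. The keys in the example are modeled on LLaMA-Factory's SFT examples but are assumptions. Compare them against the actual file in examples/ before use.

One design detail: PyYAML's default loader reads `1e-05` as a string rather than a number, because its float pattern requires a dot in the mantissa. The sketch therefore writes such floats as `1.0e-05`.

```python
import json
from pathlib import Path
from typing import Any, Dict


def to_yaml_scalar(value: Any) -> str:
    """Format a bool, int, finite float, or string as a YAML scalar."""
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return str(value)
    if isinstance(value, float):
        text = repr(value)
        mantissa, sep, exponent = text.partition("e")
        if sep and "." not in mantissa:
            # PyYAML reads '1e-05' as a string; '1.0e-05' is parsed as a float.
            text = f"{mantissa}.0e{exponent}"
        return text
    # A JSON string literal is also a valid YAML double-quoted scalar.
    return json.dumps(str(value))


def render_config(base: Dict[str, Any], overrides: Dict[str, Any]) -> str:
    """Merge overrides into base (overrides win) and render flat key: value YAML."""
    merged = {**base, **overrides}
    return "".join(f"{key}: {to_yaml_scalar(value)}\n" for key, value in merged.items())


def write_config(path: Path, base: Dict[str, Any], overrides: Dict[str, Any]) -> Path:
    """Write the rendered config to path and return the path."""
    path = Path(path)
    path.write_text(render_config(base, overrides), encoding="utf-8")
    return path


if __name__ == "__main__":
    # Hypothetical base values modeled on LLaMA-Factory's LoRA SFT example;
    # check them against examples/lora_single_gpu/llama3_lora_sft.yaml.
    base = {
        "model_name_or_path": "meta-llama/Meta-Llama-3-8B-Instruct",
        "stage": "sft",
        "do_train": True,
        "finetuning_type": "lora",
        "template": "llama3",
        "output_dir": "saves/llama3-8b/lora/sft",
    }
    overrides = {"dataset": "my_dataset", "learning_rate": 1e-5, "num_train_epochs": 3.0}
    print(render_config(base, overrides), end="")
```

The rendered file could then be saved with `write_config` and passed to the training command, e.g. `llamafactory-cli train my_lora_sft.yaml`. The file name here is hypothetical.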

For advanced usage, see examples/README.md.

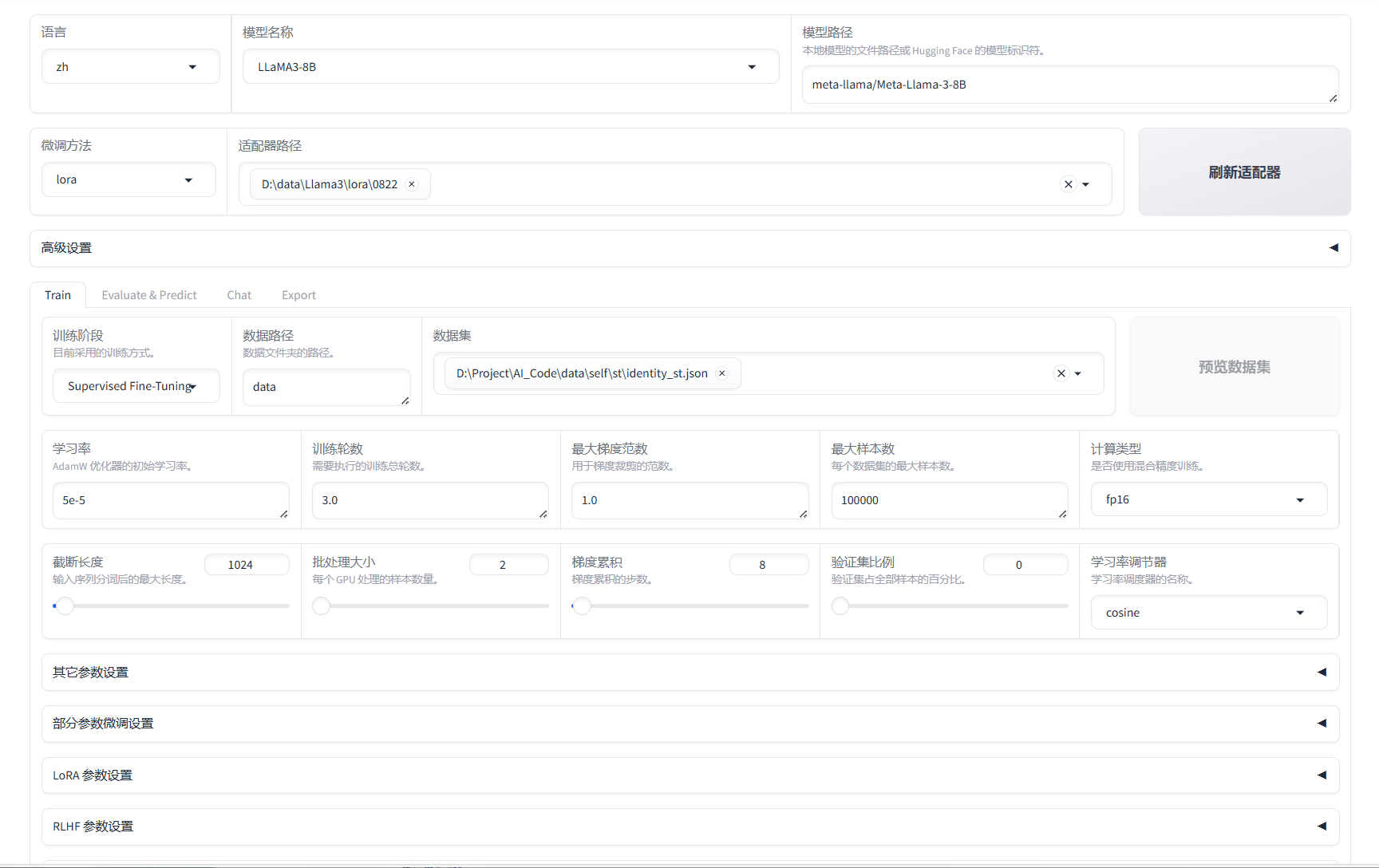

LLaMA Board: fine-tuning through a visual web UI (powered by Gradio)

llamafactory-cli webui

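Once it starts, open the local address printed in the terminal in your browser. Gradio's default is http://127.0.0.1:7860.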
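If you launch the web UI from a script, a small standard-library helper can poll the local address until the server answers. The default URL below assumes Gradio's default port 7860, so use whatever address your terminal actually prints. The function name is hypothetical.

```python
import time
import urllib.request


def wait_for_webui(url: str = "http://127.0.0.1:7860", timeout: float = 60.0,
                   interval: float = 1.0) -> bool:
    """Poll url until it returns HTTP 200, or give up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, refused connections, and socket timeouts
            # are all OSError subclasses: the server is not ready yet.
            pass
        time.sleep(interval)
    return False


if __name__ == "__main__":
    # Assumes the web UI was already started, e.g. with `llamafactory-cli webui`.
    print("web UI reachable:", wait_for_webui(timeout=2.0))
```

A typical pattern is to start `llamafactory-cli webui` with `subprocess.Popen` and call `wait_for_webui()` before opening the browser. Polling tolerates slow model and library imports at startup without hard-coding a sleep.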
