flink HelloWorld 之词频统计

最近也在跟着学习flink,也是费了一点功夫才把开发环境都搭建了起来,做了一个简单的词频统计的demo…

准备工作

首先我们需要搭建需要的flink开发环境,我这里使用的是IDEA作为我的开发工具,所以我已经新建好了一个项目,需要添加下面的依赖,这样才可以方便我们进行项目的开发,下面的有一些是需要提前搭建好flink和Kafka环境,如果不搭建当然也可以使用一些其他作为我们的source

<properties>

<project.build.sourceEncoding>UTF-8</project.build.soureEncoding>

<flink.version>1.12.1</flink.version>

<scala.binary.version>2.12</scala.binary.version>

<target.java.version>1.8</target.java.version>

<maven.compiler.source>${target.java.version}</maven.compiler.source>

<maven.compiler.target>${target.java.version}</maven.compiler.target>

<log4j.version>2.12.1</log4j.version>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>${log4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j.version}</version>

</dependency>

</dependencies>

</dependencyManagement>

获取上下文

在运行一个flink项目前我们需要获取环境的上下文,而获取上下文是这个flink项目的基础,我们可以通过下面这些方式进行获取上下文(来自官网)

getExecutionEnvironment();

createLocalEnvironment();

createRemoteEnvironment(String host, int port, String... jarFiles);

Source

获取到上下文后,我们需要获取一个Source,而获取一个Source的方式也有很多,我们可以使用readTextFile从文本文件中获取到数据,readFile可以读取一个文件,这里我们可以获取到这个Source的并行度是多少,一般Source的并行度是和你的机器的核数有关,当然你也可以通过SetParallelism来设置全局的并行度。

// 对于env设置的并行度 是一个全局的概念

env.setParallelism(5);

DataStreamSource<Long> source = env.fromParallelCollection(

new NumberSequenceIterator(1, 10), Long.class

);

System.out.println("source:" + source.getParallelism());

// 运行一个流作业

env.execute("StreamingWcApp");

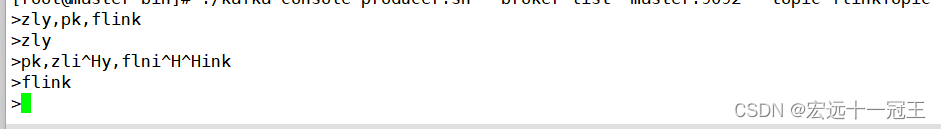

我们也可以对接一下kafka生成者的数据,这里的主题是我提前已经创建好的了。

public static void test05(StreamExecutionEnvironment env ) {

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "192.168.246.132:9092");

properties.setProperty("group.id", "test");

DataStream<String> stream = env

.addSource(new FlinkKafkaConsumer<>("flinkTopic", new SimpleStringSchema(), properties));

System.out.println(stream.getParallelism());

stream.print();

}

flatMap算子

我们是想对一个输入进来的单词进行词频的统计,首先当然我们需要将单词进行一个分割的操作,flatMap可以实现一对一操作,也可以实现一对多的操作,这里代码的意思就是实现一个对String的分割,最近通过一个Collector来进行收集

public static void flatMap (StreamExecutionEnvironment env) {

DataStreamSource<String> source = env.socketTextStream("192.168.246.132", 9527);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String value, Collector<String> out) throws Exception {

String[] splits = value.split(",");

for (String word : splits) {

out.collect(word);

}

}

});

}

filter算子

filter算子是用来实现过滤掉一些不需要的数据流,下面我们在上面的例子的基础上过滤掉一些我们不需要的数据,在flink里面在使用一个算子都会需要实现一个Function对象

public static void flatMap (StreamExecutionEnvironment env) {

DataStreamSource<String> source = env.socketTextStream("192.168.246.132", 9527);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String value, Collector<String> out) throws Exception {

String[] splits = value.split(",");

for (String word : splits) {

out.collect(word);

}

}

}).filter(new FilterFunction<String>() {

@Override

public boolean filter(String value) throws Exception {

return value.equals("zhanglianyong");

}

}).print();

}

map算子

在对单词进行分割后,我们是不是还需要对单词进行初始化个数,即将全部的单词词频设置成1,这里需要记得这里面做的是1对1的处理,这里需要用到的类型是Tuple类型,我们这里需要是Tuple2<String, Integer>类型,然后初始化词频数为1,这里如何访问Tuple类型数据呢,比如value.f0是第一个值,value.f2是第二个值

public static SingleOutputStreamOperator<Tuple2<String, Integer>> map(SingleOutputStreamOperator<String> flatMapStream) {

SingleOutputStreamOperator<Tuple2<String, Integer>> mapStream = flatMapStream.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String value) throws Exception {

return new Tuple2<>(value, 1);

}

});

return mapStream;

}

keyBy算子

统计词频是不是还需要对单词进行分组,就比如我们SQL语句中的group by, 需要将相同的单词合并在一起,这里keyBy只是指定如何进行分组

public static KeyedStream<Tuple2<String, Integer>, String> keyBy( SingleOutputStreamOperator<Tuple2<String, Integer>> mapStream) {

KeyedStream<Tuple2<String, Integer>, String> keyByStream = mapStream.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> value) throws Exception {

return value.f0;

}

});

return keyByStream;

}

reduce算子

这个reduce算子是需要在keyBy算子的基础上才能使用的,如果没有keyBy算子会报错,reduce算子是对我们之前分组好的数据进行统计和汇总,这里也可以使用一个sum来替代。

public static SingleOutputStreamOperator<Tuple2<String, Integer>> reduce(KeyedStream<Tuple2<String, Integer>, String> keyByStream) {

SingleOutputStreamOperator<Tuple2<String, Integer>> reduceStream = keyByStream.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> value1, Tuple2<String, Integer> value2) throws Exception {

return Tuple2.of(value1.f0, value1.f1 + value2.f1);

}

});

return reduceStream;

}

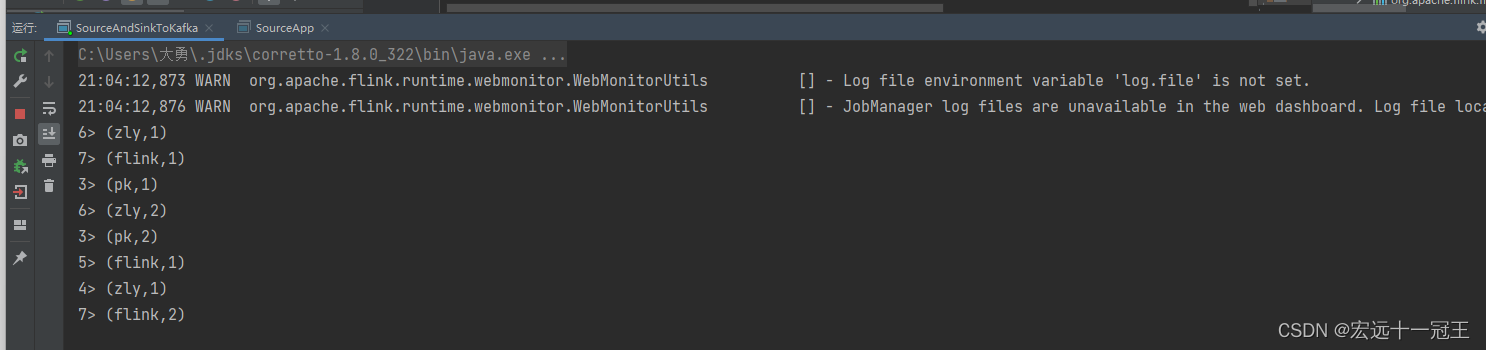

最后

从上面到下面就是整个统计词频的全部算子,我们可以将全部代码整合到一个代码文件中,然后运行来看一下我们的结果是否有问题。

package com.imooc.flink.wordCount;

import com.twitter.chill.Tuple2IntIntSerializer;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.common.serialization.SerializationSchema;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.shaded.netty4.io.netty.buffer.ByteBuf;

import org.apache.flink.shaded.netty4.io.netty.buffer.Unpooled;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;

import org.apache.flink.streaming.connectors.kafka.KafkaSerializationSchema;

import org.apache.flink.streaming.util.serialization.KeyedSerializationSchema;

import org.apache.flink.util.Collector;

import org.apache.kafka.common.protocol.types.Field;

import java.io.ByteArrayInputStream;

import java.io.DataInputStream;

import java.io.InputStream;

import java.io.ObjectInputStream;

import java.nio.Buffer;

import java.util.Properties;

import java.util.stream.Collectors;

/**

* @author zhanglianyong

* 2022/8/1418:38

*/

public class SourceAndSinkToKafka {

public static void main(String[] args) throws Exception{

// 创建上下文

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// connect to Kafka, 获取Source

DataStream<String> source = getSource(env);

// 将输入进行的单词进行分割,成一个个单词

SingleOutputStreamOperator<String> flatMapStream = flatMap(source);

SingleOutputStreamOperator<Tuple2<String, Integer>> mapStream = map(flatMapStream);

KeyedStream<Tuple2<String, Integer>, String> keyByStream = keyBy(mapStream);

SingleOutputStreamOperator<Tuple2<String, Integer>> reduceStream = reduce(keyByStream);

// 将结果流向Kafka,

reduceStream.print();

env.execute("SourceAndSinkToKafkaApp");

}

public static DataStream<String> getSource (StreamExecutionEnvironment env) {

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "192.168.246.132:9092");

properties.setProperty("group.id", "test");

DataStream<String> stream = env

.addSource(new FlinkKafkaConsumer<>("flinkTopic", new SimpleStringSchema(), properties));

return stream;

}

public static SingleOutputStreamOperator<String> flatMap(DataStream<String> source) {

SingleOutputStreamOperator<String> flatMapStream = source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String value, Collector<String> out) throws Exception {

String[] splits = value.split(",");

for (String word : splits) {

out.collect(word);

}

}

});

return flatMapStream;

}

public static SingleOutputStreamOperator<Tuple2<String, Integer>> map(SingleOutputStreamOperator<String> flatMapStream) {

SingleOutputStreamOperator<Tuple2<String, Integer>> mapStream = flatMapStream.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String value) throws Exception {

return new Tuple2<>(value, 1);

}

});

return mapStream;

}

public static KeyedStream<Tuple2<String, Integer>, String> keyBy( SingleOutputStreamOperator<Tuple2<String, Integer>> mapStream) {

KeyedStream<Tuple2<String, Integer>, String> keyByStream = mapStream.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> value) throws Exception {

return value.f0;

}

});

return keyByStream;

}

public static SingleOutputStreamOperator<Tuple2<String, Integer>> reduce(KeyedStream<Tuple2<String, Integer>, String> keyByStream) {

SingleOutputStreamOperator<Tuple2<String, Integer>> reduceStream = keyByStream.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> value1, Tuple2<String, Integer> value2) throws Exception {

return Tuple2.of(value1.f0, value1.f1 + value2.f1);

}

});

return reduceStream;

}

}

从这里我们也可以但是整个是一个流式作业,是一直都在运行的。

到这里,整个Hello World就结束啦…

浙公网安备 33010602011771号

浙公网安备 33010602011771号