Python Machine Vision: Face Detection (2) Face Detection on a Video Stream Read from the Camera

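This example builds on the previous article, [Python Machine Vision: Face Detection (1) Four Approaches to Face Detection](https://www.cnblogs.com/LearningC/p/17184329.html "Python Machine Vision: Face Detection (1) Four Approaches to Face Detection"). The content is fairly simple, so the text is kept short.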

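Prerequisites

1. You should know the basic operations for opening and closing a camera.

2. You should know how to display camera video frames with PyQt.

3. You should be familiar with threads in PyQt.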
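For the first prerequisite, the open/read/release cycle can be sketched as below. This is only an illustration under stated assumptions: `read_frames` and `FakeCapture` are hypothetical names that do not appear in the article, and the fake object stands in for `cv2.VideoCapture(0)` so that the sketch runs without a camera. The point is that every `read()` result is checked and the device is always released.

```python
from typing import Any, Iterator


def read_frames(cap: Any, max_frames: int) -> Iterator[Any]:
    """Yield up to max_frames frames from a VideoCapture-like object.

    `cap` only needs isOpened(), read() and release(), which is the
    subset of cv2.VideoCapture used here.
    """
    try:
        if not cap.isOpened():
            return  # camera missing or busy
        for _ in range(max_frames):
            ok, frame = cap.read()
            if not ok:  # read() reports failure through its first value
                break
            yield frame
    finally:
        cap.release()  # always free the device


class FakeCapture:
    """Hypothetical stand-in for a camera (assumption, not part of OpenCV)."""

    def __init__(self, frames):
        self._frames = list(frames)
        self.released = False

    def isOpened(self):
        return True

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None

    def release(self):
        self.released = True


fake = FakeCapture(["frame-0", "frame-1"])
frames = list(read_frames(fake, max_frames=5))
print(frames, fake.released)
```

With a real camera, `read_frames(cv2.VideoCapture(0), 100)` would be the equivalent call.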

Window code:

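    class CameraWindow(QMainWindow, MainWindow):

        def __init__(self):
            super(CameraWindow, self).__init__()
            self.setupUi(self)
            # No reader thread exists until the camera is opened
            self.reader = None
            # Events
            self.btnOpen.clicked.connect(self.openCamera)
            self.btnClose.clicked.connect(self.closeCamera)

        def closeEvent(self, event):
            # Stop the camera when the window is closed
            self.closeCamera()
            event.accept()

        def openCamera(self):
            # Ignore repeated clicks while a reader thread is already running
            if self.reader is not None and self.reader.isRunning():
                return
            self.reader = ImageThread()
            self.reader.image_read.connect(self.dispaly)
            self.reader.start()

        def dispaly(self, image):
            # image is an RGB NumPy array; strides[0] is the number of bytes per row
            showImage = QImage(image.data, image.shape[1], image.shape[0], image.strides[0], QImage.Format_RGB888)
            self.label.setPixmap(QPixmap.fromImage(showImage))

        def closeCamera(self):
            print('windows closed.')
            # The camera may never have been opened
            if self.reader is not None:
                self.reader.cancel()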

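Interestingly, the camera picture is displayed with a Label control. I had expected a Picture or Image control, as in C# or Java. The code is:

    def dispaly(self, image):
        # Pass bytesPerLine (image.strides[0]) so rows are not assumed to be 32-bit aligned
        showImage = QImage(image.data, image.shape[1], image.shape[0], image.strides[0], QImage.Format_RGB888)
        self.label.setPixmap(QPixmap.fromImage(showImage))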
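The values handed to `QImage` can be derived from the NumPy array itself. The sketch below uses only NumPy and a hypothetical helper, `qimage_args`, which is not part of the article or of PyQt. It shows why the row stride matters: when the frame width is not a multiple of 4, the packed row length is not 32-bit aligned, which is the case where omitting `bytesPerLine` tends to produce a skewed picture.

```python
import numpy as np


def qimage_args(image: np.ndarray):
    """Return (buffer, width, height, bytes_per_line) for an RGB888 frame."""
    if image.ndim != 3 or image.shape[2] != 3 or image.dtype != np.uint8:
        raise ValueError("expected an H x W x 3 uint8 array")
    # Sliced or reversed views may not be packed; copy into a contiguous buffer if needed
    image = np.ascontiguousarray(image)
    height, width, _ = image.shape
    bytes_per_line = image.strides[0]  # equals 3 * width once the array is contiguous
    return image, width, height, bytes_per_line


frame = np.zeros((480, 641, 3), dtype=np.uint8)  # width is not a multiple of 4
buf, w, h, bpl = qimage_args(frame)
print(w, h, bpl)  # 641 480 1923
```

In the window class this would correspond to `QImage(buf.data, w, h, bpl, QImage.Format_RGB888)`. The array has to stay alive until `QPixmap.fromImage` has copied the pixels, because `QImage` does not copy the buffer it is given.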

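closeEvent is the event handler that QMainWindow calls when the window is about to close. It is a virtual method inherited from QWidget rather than a slot, and overriding it here makes sure the camera is closed together with the window.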
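Closing the window therefore has to stop the reader thread cleanly. One common way to do that is a cooperative stop: the window only sets a flag, the loop notices it and exits, and the resource is released inside the thread. The sketch below illustrates the pattern with the standard library `threading` module instead of `QThread`, purely so that it runs without Qt. The `Worker` class and its attributes are hypothetical; with `QThread`, `join()` corresponds to `wait()`.

```python
import threading
import time


class Worker(threading.Thread):
    """Loop until asked to stop; clean up inside the thread itself."""

    def __init__(self):
        super().__init__()
        self._stop_requested = threading.Event()
        self.iterations = 0
        self.cleaned_up = False

    def run(self):
        try:
            while not self._stop_requested.is_set():
                self.iterations += 1   # stands in for read + detect + emit
                time.sleep(0.001)
        finally:
            self.cleaned_up = True     # stands in for cap.release()

    def cancel(self):
        # Only signal the loop; resources are released by run() itself
        self._stop_requested.set()


worker = Worker()
worker.start()
time.sleep(0.02)
worker.cancel()
worker.join(timeout=2)                 # QThread equivalent: wait()
print(worker.is_alive(), worker.cleaned_up)
```

Releasing the camera from the GUI thread while the worker may still be inside `read()` is the race this pattern avoids.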

The thread that opens the camera and reads the video stream:

    class ImageThread(QThread):
        # Emits each processed RGB frame as a NumPy array
        image_read = pyqtSignal(np.ndarray)

        def __init__(self):
            super(ImageThread, self).__init__()
            self.stop = False
            # Build the Haar detector once rather than on every frame
            self.detector = cv2.CascadeClassifier('./cascades/haarcascade_frontalface_default.xml')

        def run(self):
            self.cap = cv2.VideoCapture(0)
            while not self.stop:
                start_time = time.time()
                ret, frame = self.cap.read()
                if not ret:
                    # No frame available (camera missing or disconnected)
                    break
                # OpenCV delivers BGR; Qt expects RGB
                frame_fixed = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                # Flip around the y axis (mirror view)
                frame = cv2.flip(frame_fixed, 1)
                # Guard against a zero elapsed time
                fps = 1 / max(time.time() - start_time, 1e-6)
                cv2.putText(frame, "fps:" + str(int(fps)), (25, 70), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2, cv2.LINE_AA)
                frame = self.face_detector(frame)
                self.image_read.emit(frame)
            # Release the camera in the thread that opened it
            self.cap.release()

        def cancel(self):
            # Ask the loop to exit, then wait for run() to finish
            self.stop = True
            self.wait()

        def face_detector(self, img):
            # Convert to grayscale; the frame is already RGB at this point
            img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
            # Detection parameters:
            #   scaleFactor: step between image pyramid levels, e.g. 1.2
            #   minSize: smallest face size, e.g. (45, 45)
            #   maxSize: largest face size, e.g. (80, 80)
            detections = self.detector.detectMultiScale(img_gray, scaleFactor=1.1, minNeighbors=8)  # , minSize=(150, 150), maxSize=(200, 200)
            # Draw a rectangle around each detection
            color = (0, 255, 0)
            thickness = 2
            for (x, y, w, h) in detections:
                cv2.rectangle(img, (x, y), (x + w, y + h), color, thickness)
            return img
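The `scaleFactor`, `minSize` and `maxSize` arguments of `detectMultiScale` all control which window sizes are searched. The following is a simplified illustration, not OpenCV's actual implementation: `window_sizes` is a hypothetical helper, and the 24-pixel base window is an assumption about the cascade's training size. It only shows how a smaller `scaleFactor` produces more search scales, and therefore more work per frame.

```python
def window_sizes(image_size, base=24, scale_factor=1.1, min_size=0, max_size=None):
    """List the square window sizes a cascade would scan (simplified model)."""
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    limit = min(image_size)  # a window cannot exceed the shorter image side
    if max_size is not None:
        limit = min(limit, max_size)
    sizes = []
    scale = 1.0
    while True:
        size = int(round(base * scale))
        if size > limit:
            break
        if size >= min_size:
            sizes.append(size)
        scale *= scale_factor
    return sizes


fine = window_sizes((480, 640), scale_factor=1.1)
coarse = window_sizes((480, 640), scale_factor=1.2)
bounded = window_sizes((480, 640), scale_factor=1.1, min_size=150, max_size=200)
print(len(fine), len(coarse), bounded)
```

Restricting the range with `minSize` and `maxSize`, as in the commented-out arguments above, leaves only a handful of scales, which is one way to raise the frame rate when the expected face size is known.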

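If you are unfamiliar with basic QThread usage, it is worth reading up on it first. The model file haarcascade_frontalface_default.xml can be found on GitHub (it ships in the OpenCV repository under data/haarcascades).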
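A wrong path to the cascade file is easy to miss, so it can help to resolve the path explicitly before building the classifier. The sketch below is one possible approach under assumptions: `find_cascade` and `default_search_dirs` are hypothetical helpers, and `cv2.data.haarcascades` is only consulted if the installed OpenCV build provides it (pip builds of opencv-python generally do). The demo uses a temporary directory in place of `./cascades` so that it runs anywhere.

```python
import os
import tempfile


def find_cascade(filename, search_dirs):
    """Return the first existing path to `filename` in search_dirs, else None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, filename)
        if os.path.isfile(candidate):
            return candidate
    return None


def default_search_dirs():
    """Project-local folder first, then the data bundled with OpenCV (if any)."""
    dirs = ["./cascades"]
    try:
        import cv2  # optional: only used to locate the bundled cascades
        dirs.append(cv2.data.haarcascades)
    except (ImportError, AttributeError):
        pass
    return dirs


# Demo with a temporary directory standing in for ./cascades
with tempfile.TemporaryDirectory() as tmp:
    name = "haarcascade_frontalface_default.xml"
    open(os.path.join(tmp, name), "w").close()
    found = find_cascade(name, ["/nonexistent-dir", tmp])
    missing = find_cascade("no_such_file.xml", [tmp])
    found_ok = found == os.path.join(tmp, name)

print(found_ok, missing)
```

After loading, `CascadeClassifier.empty()` can be checked as well; it returns True when the file could not be loaded.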

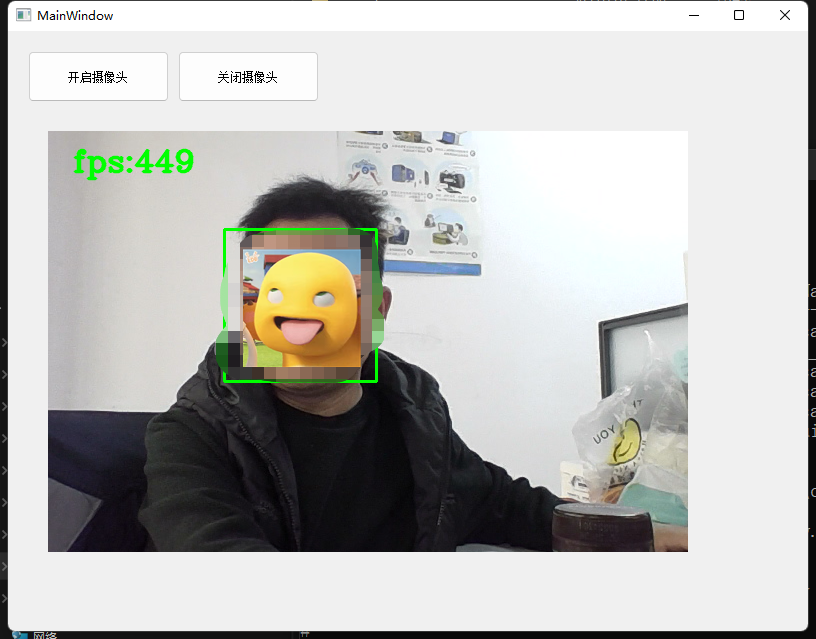

最后效果如下:

The complete code follows.

UI code (camera_ui.py, generated by pyuic5):

    # -*- coding: utf-8 -*-

    # Form implementation generated from reading ui file 'camera.ui'
    #
    # Created by: PyQt5 UI code generator 5.15.4
    #
    # WARNING: Any manual changes made to this file will be lost when pyuic5 is
    # run again. Do not edit this file unless you know what you are doing.

    from PyQt5 import QtCore, QtGui, QtWidgets


    class Ui_MainWindow(object):
        def setupUi(self, MainWindow):
            MainWindow.setObjectName("MainWindow")
            MainWindow.resize(800, 600)
            self.centralwidget = QtWidgets.QWidget(MainWindow)
            self.centralwidget.setObjectName("centralwidget")
            self.btnOpen = QtWidgets.QPushButton(self.centralwidget)
            self.btnOpen.setGeometry(QtCore.QRect(20, 20, 141, 51))
            self.btnOpen.setObjectName("btnOpen")
            self.btnClose = QtWidgets.QPushButton(self.centralwidget)
            self.btnClose.setGeometry(QtCore.QRect(170, 20, 141, 51))
            self.btnClose.setObjectName("btnClose")
            self.label = QtWidgets.QLabel(self.centralwidget)
            self.label.setGeometry(QtCore.QRect(40, 100, 721, 421))
            self.label.setText("")
            self.label.setObjectName("label")
            MainWindow.setCentralWidget(self.centralwidget)

            self.retranslateUi(MainWindow)
            QtCore.QMetaObject.connectSlotsByName(MainWindow)

        def retranslateUi(self, MainWindow):
            _translate = QtCore.QCoreApplication.translate
            MainWindow.setWindowTitle(_translate("MainWindow", "MainWindow"))
            self.btnOpen.setText(_translate("MainWindow", "开启摄像头"))  # "Open camera"
            self.btnClose.setText(_translate("MainWindow", "关闭摄像头"))  # "Close camera"

Complete window code:

    from PyQt5.QtWidgets import *
    from PyQt5 import QtCore
    from PyQt5.QtCore import *
    from PyQt5.QtGui import *
    import sys
    from camera_ui import Ui_MainWindow as MainWindow
    import cv2
    import numpy as np
    import time


    class ImageThread(QThread):
        # Emits each processed RGB frame as a NumPy array
        image_read = pyqtSignal(np.ndarray)

        def __init__(self):
            super(ImageThread, self).__init__()
            self.stop = False
            # Build the Haar detector once rather than on every frame
            self.detector = cv2.CascadeClassifier('./cascades/haarcascade_frontalface_default.xml')

        def run(self):
            self.cap = cv2.VideoCapture(0)
            while not self.stop:
                start_time = time.time()
                ret, frame = self.cap.read()
                if not ret:
                    # No frame available (camera missing or disconnected)
                    break
                # OpenCV delivers BGR; Qt expects RGB
                frame_fixed = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                # Flip around the y axis (mirror view)
                frame = cv2.flip(frame_fixed, 1)
                # Guard against a zero elapsed time
                fps = 1 / max(time.time() - start_time, 1e-6)
                cv2.putText(frame, "fps:" + str(int(fps)), (25, 70), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2, cv2.LINE_AA)
                frame = self.face_detector(frame)
                self.image_read.emit(frame)
            # Release the camera in the thread that opened it
            self.cap.release()

        def cancel(self):
            # Ask the loop to exit, then wait for run() to finish
            self.stop = True
            self.wait()

        def face_detector(self, img):
            # Convert to grayscale; the frame is already RGB at this point
            img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
            # Detection parameters:
            #   scaleFactor: step between image pyramid levels, e.g. 1.2
            #   minSize: smallest face size, e.g. (45, 45)
            #   maxSize: largest face size, e.g. (80, 80)
            detections = self.detector.detectMultiScale(img_gray, scaleFactor=1.1, minNeighbors=8)  # , minSize=(150, 150), maxSize=(200, 200)
            # Draw a rectangle around each detection
            color = (0, 255, 0)
            thickness = 2
            for (x, y, w, h) in detections:
                cv2.rectangle(img, (x, y), (x + w, y + h), color, thickness)
            return img


    class CameraWindow(QMainWindow, MainWindow):

        def __init__(self):
            super(CameraWindow, self).__init__()
            self.setupUi(self)
            # No reader thread exists until the camera is opened
            self.reader = None
            # Events
            self.btnOpen.clicked.connect(self.openCamera)
            self.btnClose.clicked.connect(self.closeCamera)

        def closeEvent(self, event):
            # Stop the camera when the window is closed
            self.closeCamera()
            event.accept()

        def openCamera(self):
            # Ignore repeated clicks while a reader thread is already running
            if self.reader is not None and self.reader.isRunning():
                return
            self.reader = ImageThread()
            self.reader.image_read.connect(self.dispaly)
            self.reader.start()

        def dispaly(self, image):
            # image is an RGB NumPy array; strides[0] is the number of bytes per row
            showImage = QImage(image.data, image.shape[1], image.shape[0], image.strides[0], QImage.Format_RGB888)
            self.label.setPixmap(QPixmap.fromImage(showImage))

        def closeCamera(self):
            print('windows closed.')
            # The camera may never have been opened
            if self.reader is not None:
                self.reader.cancel()


    if __name__ == "__main__":
        QtCore.QCoreApplication.setAttribute(QtCore.Qt.AA_EnableHighDpiScaling)
        app = QApplication(sys.argv)
        c = CameraWindow()
        c.show()
        sys.exit(app.exec_())

