Operating System Notes

Key words

Context Switch Time

Main topics:

Communication between a Device and the CPU

• Method One: Polling or Hand-shaking

• Method Two: Interrupts

• Method Three: DMA – Direct Memory Access

Memory Management

A memory management unit (MMU) only sees:

• A stream of addresses + read requests, OR

• An address + data and write requests

A memory management unit (MMU) is a computer hardware unit having all memory references passed through itself, primarily performing the translation of logical memory addresses to physical addresses. It is usually implemented as part of the CPU.
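The translation step can be illustrated with a minimal sketch. It assumes a paged scheme with 4 KiB pages; `PAGE_SIZE`, `page_table` and `translate` are hypothetical names chosen for illustration, and real MMUs do this in hardware.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 2, 2: 7}

def translate(virtual_address: int) -> int:
    """Map a logical address to a physical address via the page table."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        raise LookupError(f"page fault: page {page} is not mapped")
    return page_table[page] * PAGE_SIZE + offset

physical = translate(4100)  # page 1, offset 4 -> frame 2, offset 4
```

The offset within the page is unchanged; only the page number is replaced by a frame number.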

Memory Management with Bitmaps

Each bit in the bitmap represents a block of memory

If allocation units are too small, the bitmap becomes large.

Searching the bitmap may be slow, since each bit may have to be checked.
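A rough sketch of why the search can be slow: to allocate `units` blocks, the allocator scans for a run of that many consecutive free bits. The function names and the convention that 0 means free are assumptions for illustration.

```python
def find_free_run(bitmap: list[int], units: int) -> int:
    """Return the index of the first run of `units` free (0) bits, or -1."""
    run_start, run_length = 0, 0
    for i, bit in enumerate(bitmap):
        if bit:                 # block in use: any run in progress is broken
            run_length = 0
            continue
        if run_length == 0:
            run_start = i       # a new run of free blocks starts here
        run_length += 1
        if run_length == units:
            return run_start
    return -1

def allocate(bitmap: list[int], units: int) -> int:
    """Mark the first suitable run as used and return its start index."""
    start = find_free_run(bitmap, units)
    if start >= 0:
        bitmap[start:start + units] = [1] * units
    return start

bitmap = [1, 1, 0, 1, 0, 0, 0, 1]
start = allocate(bitmap, 3)
```

In the worst case every bit is inspected once, which is the linear cost the note refers to.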

Memory Management with Linked Lists

Allocation Algorithm:

First fit

Advantages

• Easy to implement

• Very fast

Disadvantages

• Can lead to memory fragmentation

Next fit

Advantages

• Attempts to reduce memory fragmentation

Disadvantages

• Provides slightly worse performance than First Fit

Best fit

Disadvantages

• Slow because the whole list must be searched each time

• Also produces fragmentation with lots of small holes

First fit tends to produce larger holes on average

Worst fit

Search the whole list for the biggest hole

• Idea is to reduce fragmentation by leaving large holes

Disadvantages

• Need to search the whole list (although can be optimized)
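The four strategies differ only in which hole they pick. The sketch below assumes the free list is a Python list of `(start, size)` tuples and returns the index of the chosen hole; the function names and the `last` parameter are illustrative, not taken from any particular OS.

```python
def first_fit(holes, size):
    """Pick the first hole that is large enough."""
    for i, (_, hole_size) in enumerate(holes):
        if hole_size >= size:
            return i
    return None

def next_fit(holes, size, last=0):
    """Like first fit, but start searching where the previous search ended."""
    n = len(holes)
    for step in range(n):
        i = (last + step) % n
        if holes[i][1] >= size:
            return i
    return None

def best_fit(holes, size):
    """Pick the smallest hole that is large enough (scans the whole list)."""
    fits = [i for i, (_, s) in enumerate(holes) if s >= size]
    return min(fits, key=lambda i: holes[i][1], default=None)

def worst_fit(holes, size):
    """Pick the largest hole (scans the whole list)."""
    fits = [i for i, (_, s) in enumerate(holes) if s >= size]
    return max(fits, key=lambda i: holes[i][1], default=None)

holes = [(0, 5), (10, 12), (30, 3), (40, 8)]
choices = (first_fit(holes, 6), next_fit(holes, 6, last=2),
           best_fit(holes, 6), worst_fit(holes, 6))
```

For a request of 6 units, first fit and worst fit both choose the 12-unit hole, while best fit and next fit (starting from index 2) choose the 8-unit hole.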

Files are collections of related information defined by their creator. They commonly represent programs and data.

Files can be accessed in two ways:

• Sequential access:

  • Read all bytes/records from the beginning

  • Cannot jump around, but can rewind/back up

  • Was convenient when the medium was magnetic tape

• Random access:

  • Bytes/records can be read in any order

  • Essential for database systems

  • Can seek/read or read/seek
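The two access modes can be contrasted with a small sketch. It assumes fixed-length 4-byte records in a temporary file; `RECORD_SIZE` and the record contents are made up for illustration.

```python
import os
import tempfile

RECORD_SIZE = 4  # assumed fixed record length in bytes

# Create a small file holding four records.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(b"rec0rec1rec2rec3")
    path = f.name

with open(path, "rb") as f:
    # Sequential access: read records in order from the beginning.
    sequential = [f.read(RECORD_SIZE) for _ in range(4)]
    # Random access: seek straight to record 2, then read it.
    f.seek(2 * RECORD_SIZE)
    random_record = f.read(RECORD_SIZE)
    # Rewind, the one "jump" sequential media also allow.
    f.seek(0)
    first_again = f.read(RECORD_SIZE)

os.remove(path)
```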

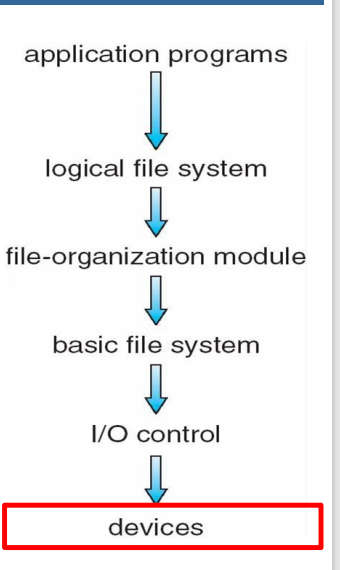

A virtual file system (VFS) is software that forms an interface between an OS kernel and a more concrete file system. It sits in front of:

• different file system types

• network file systems

It then dispatches each operation to the appropriate file system implementation routines.
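The dispatch idea can be sketched as a lookup from mount point to file system implementation. The classes `LocalFS`, `NetworkFS` and `VFS`, and the longest-prefix rule, are assumptions for illustration rather than how any specific kernel implements it.

```python
class LocalFS:
    def read(self, path):
        return f"local:{path}"

class NetworkFS:
    def read(self, path):
        return f"network:{path}"

class VFS:
    """One uniform read() call, routed to the file system that owns the path."""
    def __init__(self):
        self.mounts = {}

    def mount(self, prefix, fs):
        self.mounts[prefix] = fs

    def read(self, path):
        # Choose the longest mount prefix that matches the path.
        prefix = max((p for p in self.mounts if path.startswith(p)), key=len)
        return self.mounts[prefix].read(path)

vfs = VFS()
vfs.mount("/", LocalFS())
vfs.mount("/mnt/nfs", NetworkFS())
results = (vfs.read("/etc/hosts"), vfs.read("/mnt/nfs/data.txt"))
```

The caller never needs to know which implementation served the request.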

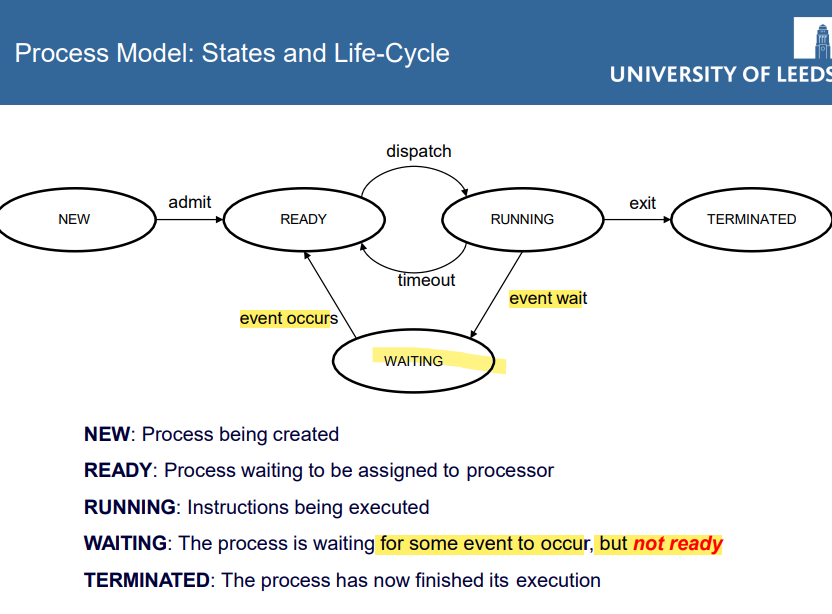

A process is a run-time instance of a computer program that is being executed. It contains the program code and its current activity. Depending on the operating system, a process may be made up of multiple threads of execution that execute instructions concurrently (to be discussed later).

• Process isolation is a set of different hardware (e.g. lookup cache) and software (e.g. exception handlers) technologies designed to protect each process from other processes on the operating system. It does so by preventing process A from writing to process B (to be discussed in “Virtual Memory”).

The dispatcher gives a process control over the CPU after it has been selected by the scheduler. Its function involves the following:

• Switching context (e.g. switching from process A to B)

• Switching from kernel mode to user mode

• Jumping to the proper location in the user program to restart that program

Process Control Block (PCB)

• Data structure used by the OS to manage the execution of a process; PCB0, PCB1, …

• Describes the process for the scheduler; includes accounting and priority

• Indicates current process state

• Records state of registers, heap, stack, etc.

Context Switch

• Stopping one process and starting another

• Register values and other state information of current process stored in PCBx

• Register values and other state information of new process loaded from PCBy

• Must be as efficient as possible. Context switching is pure overhead, no real work is done
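The save-and-restore steps above can be sketched as follows. This is a toy model: the `PCB` fields, the `cpu_registers` dictionary and the register names are assumptions, and a real kernel does this in privileged, architecture-specific code.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    pid: int
    state: str = "ready"
    registers: dict = field(default_factory=dict)

# Stand-in for the CPU's register file (hypothetical).
cpu_registers = {"pc": 0, "sp": 0}

def context_switch(current: PCB, new: PCB) -> None:
    """Save the running process's registers, then load the next process's."""
    current.registers = dict(cpu_registers)   # store state in PCBx
    current.state = "ready"
    cpu_registers.clear()
    cpu_registers.update(new.registers)       # load state from PCBy
    new.state = "running"

pcb_a = PCB(pid=0, state="running")
pcb_b = PCB(pid=1, registers={"pc": 200, "sp": 800})
cpu_registers.update(pc=100, sp=900)          # process A is mid-execution
context_switch(pcb_a, pcb_b)
```

Nothing in `context_switch` advances either process, which is why the time spent in it counts as overhead.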

Process Termination

Termination criteria examples

• Normal completion: Process indicates it has finished executing final statement

• Bounds violation (Seg Fault): Process attempts access to memory which it is not allowed to

• Memory exceeded: Process requires more memory than system has available

• Arithmetic error: Divide by zero, etc.

• OS or operator termination: OS or user terminates process

Process returns status code to parent indicating reason for termination

• UNIX: exit()

• Windows: ExitProcess()
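A short sketch of a parent reading a child's status code, using Python's standard `subprocess` module. The exit value 3 is arbitrary and chosen only for illustration.

```python
import subprocess
import sys

# The child terminates itself with status 3; 0 would mean normal completion.
child = subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"])
status = child.returncode

# A child that runs to completion reports status 0.
ok = subprocess.run([sys.executable, "-c", "pass"]).returncode
```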

Thread:

Multiple concurrent paths of execution within a process

• Lightweight process – a simplified form of process

• Unit of dispatching (execution)

• Threads in a process share address space

Primary Benefits

• Responsiveness: applications can continue executing while parts are blocked

• Resource sharing: Threads share code and data

• Economy: Cheaper to create threads than processes

• Multiprocessor utilisation: Threads can utilise parallel execution

Separates resource ownership and scheduling

Applications that benefit from multi-threading

• Web servers: Each request handled in a separate thread

• Heavy I/O operations or long computation: Interactive during execution

• Any application with a GUI

User Level Threads:

Benefits

• Cheap to create

• Cheap context switches

• Thread yields don’t result in process context switch

• Application-specific scheduling

Drawbacks (single point of…)

• Blocking system calls can block entire process

• Errors propagate to whole process

• No clock interrupts, scheduler runs at same level as thread

• No benefits from multi-processor systems

Kernel Level Threads

Benefits

• Errors stop threads, not processes

• Kernel has full control of thread scheduling

• May give more time to processes with more threads

• Kernel handles blocking system calls

Drawbacks

• Operations much slower than user level threads

• Significant overhead for kernel: it must manage and schedule processes and threads

Shared Memory

Benefits

• Speed: No requirement for context switching to kernel for communication

• Easy access: Processes write to shared memory as normal, no distinction between shared memory and own memory

Drawbacks

• No protection: Up to processes to write data in a controlled way and all processes using memory need to be trusted

• Synchronisation: Requires mutual exclusion and protected critical regions (future lectures)

• Limited modularity: Must have pointer or ID to shared memory before it can be grafted. All sharing processes must agree on shared definitions

Race Conditions

Cause non-deterministic results (x = 0 or 1?)

• Actual result of execution depends on scheduling

• Execution may fail sporadically and with strange, unreproducible output

Solving requires mutual exclusion

• Ensure only one process or thread at a time can operate on a shared variable or file

• Access to shared resources must be synchronised to avoid inconsistencies

Operations are not atomic and context switches can happen at any time

• An update such as x = x + 1 is not a single CPU instruction
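A minimal sketch of protecting a shared counter with mutual exclusion, using Python's `threading.Lock`. The variable names and the thread and iteration counts are arbitrary; without the lock, the read-modify-write on `shared["x"]` could interleave between threads and lose updates.

```python
import threading

shared = {"x": 0}
lock = threading.Lock()

def increment(times: int) -> None:
    for _ in range(times):
        with lock:              # critical section: one thread at a time
            shared["x"] += 1    # read, add, write back: not atomic by itself

threads = [threading.Thread(target=increment, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

total = shared["x"]
```

With the lock in place the result no longer depends on how the scheduler interleaves the threads.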

• Mutual exclusion: No two processes or threads can be in their related critical sections at the same time

• Progress: Progress cannot be delayed by another process or thread not in a critical section

• Bounded waiting: No process or thread should wait forever to enter its critical section (or deadlock possible)

Hardware Solutions

Requires CPU instruction set support

• Can disable interrupts, avoids pre-emption

Atomic Instructions

• TSL (test and set lock), may only lock a single processor

• SWAP, compare and swap registers atomically

Sleep and wakeup

• Avoids spin locking, but inadequate for mutual exclusion

Semaphores

• Access through two atomic operations

  • Wait locks entry to a critical region

  • Signal releases the lock

• Count accesses to a finite resource

  • S initialised to number of resources
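A counting semaphore can be sketched with Python's `threading.Semaphore`, where `acquire()` plays the role of Wait and `release()` the role of Signal. The resource count of 2, the worker count and the `stats` bookkeeping are assumptions added so the effect can be observed.

```python
import threading
import time

S = threading.Semaphore(2)          # S initialised to number of resources
stats = {"in_use": 0, "peak": 0}
stats_lock = threading.Lock()

def worker() -> None:
    S.acquire()                      # Wait: blocks while no resource is free
    try:
        with stats_lock:
            stats["in_use"] += 1
            stats["peak"] = max(stats["peak"], stats["in_use"])
        time.sleep(0.01)             # pretend to use the resource
        with stats_lock:
            stats["in_use"] -= 1
    finally:
        S.release()                  # Signal: hand the resource back

threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

However the six workers are scheduled, `stats["peak"]` should never exceed the two resources the semaphore was initialised with.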

Characteristics of Deadlock

Mutual Exclusion

• Only one process at a time can use a resource

Hold and Wait

• A process holding at least one resource and waiting for another to be released

No Preemption

• Resources can only be released voluntarily (no enforcement)

Circular Wait

• A set of processes {P0, P1, P2, ..., PN} must exist such that every P[i] is waiting for P[(i + 1) mod (N + 1)]
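Circular wait can be checked by following the "is waiting for" relation and looking for a cycle. The sketch assumes each process waits for at most one other process, represented as a dictionary; the function name is hypothetical.

```python
def has_circular_wait(waits_for: dict) -> bool:
    """Return True if following the waits-for edges ever revisits a process."""
    for start in waits_for:
        seen = set()
        node = start
        while node in waits_for:      # stop when a process waits for nobody
            if node in seen:
                return True
            seen.add(node)
            node = waits_for[node]
    return False

deadlocked = has_circular_wait({"P0": "P1", "P1": "P2", "P2": "P0"})
chain_only = has_circular_wait({"P0": "P1", "P1": "P2"})
```

The first graph closes the loop from P2 back to P0; the second is a plain chain, so P2 can finish and release what the others need.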

Dealing with Deadlocks

Deal with deadlock in three ways

• Use a protocol to prevent or avoid deadlock

• Detect it and recover

• Ignore it (most operating systems do this; it is left up to the programmer)

Prevention

• Requires OS to know what will be requested in advance

Avoidance

• Possible low device utilisation

Detection

• Recovery is a problem

Benefits of Virtual Memory

1. Freeing applications from managing a shared memory space

2. No longer constrained by limits of physical memory

3. Each program takes less memory while running

4. More programs run at the same time

5. More programs running = Better CPU utilization

6. Less I/O needed to load or swap programs into memory

7. Increased security due to memory isolation

Benefits of Virtualisation

• Freedom of choice for operating system (i.e. testing environment)

• Consolidates server and infrastructure

• Saves time and money

• Makes it easier to manage and secure desktop environments

• Isolates “unsafe” programs into a VM

• Can copy VMs from one machine to another

• Can set up copies of the same OS/software setup quickly

Examples: KVM, Xen, VMware, VirtualBox, etc.

Types of attack

Security Violation Categories

• Breach of confidentiality: Unauthorized reading of data

• Breach of integrity: Unauthorized modification of data

• Breach of availability: Unauthorized destruction of data or services

• Theft of service: Unauthorized use of resources

• Denial of service (DoS): Prevention of legitimate use

Security Violation Methods

• Masquerading (breach authentication): Pretending to be an authorized user to escalate privileges

• Replay attack: As is or with message modification

• Man-in-the-middle attack: Intruder sits in data flow, masquerading as sender to receiver and vice versa

• Session hijacking: Intercept an already-established security session to bypass authentication

Access control is centered around two important concepts:

• Authentication

• Authorisation

Asymmetric Encryption for Secure Communication

• public key – published key used to encrypt data

• private key – key known only to individual user used to decrypt data
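The public/private split can be illustrated with a toy RSA key pair. RSA is an assumption here (the notes do not name a scheme), and the primes are far too small for real use; this only shows that what the public key encrypts, the private key decrypts. Requires Python 3.8+ for the modular inverse via `pow`.

```python
# Toy parameters: real keys use primes hundreds of digits long.
p, q = 61, 53
n = p * q                      # modulus, part of both keys
phi = (p - 1) * (q - 1)
e = 17                         # public exponent: (e, n) is published
d = pow(e, -1, phi)            # private exponent: (d, n) is kept secret

def encrypt(message: int) -> int:
    """Anyone can do this with the public key."""
    return pow(message, e, n)

def decrypt(ciphertext: int) -> int:
    """Only the private-key holder can do this."""
    return pow(ciphertext, d, n)

ciphertext = encrypt(65)
plaintext = decrypt(ciphertext)
```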

Secure Hash Algorithms

Guiding principle – principle of least privilege

Domain Structure Access Matrix

RAID

• Redundant Array of Independent (Inexpensive) Disks

• Developed to avoid the “pending I/O” crisis

• Attempts to improve disk performance and/or reliability

• Multiple disks in an array can be accessed simultaneously

• Performs accesses in parallel to increase throughput

• Additional drives can be used to improve data integrity
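One way an additional drive can improve data integrity is XOR parity, as used by parity-based RAID levels. The sketch below assumes three data disks holding equal-sized blocks plus one parity disk; the block contents are made up.

```python
def parity(blocks):
    """XOR equal-length blocks together byte by byte."""
    result = bytes(len(blocks[0]))          # start from all zero bytes
    for block in blocks:
        result = bytes(a ^ b for a, b in zip(result, block))
    return result

data = [b"AAAA", b"BBBB", b"CCCC"]          # blocks on three data disks
parity_block = parity(data)                 # stored on the extra disk

# Disk 1 fails: rebuild its block from the survivors plus parity.
recovered = parity([data[0], data[2], parity_block])
```

XOR-ing the surviving blocks with the parity block cancels them out and leaves the missing block.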

Distributed File Systems…

• Don’t move data to workers… move workers to the data!

  • Store data on the local disks of nodes in the cluster

  • Start up the workers (programs) on the node that has the data local

• Why?

  • Not enough RAM to hold all the data in memory

  • Disk access is slow, but disk throughput is reasonable

• A distributed file system is the answer

  • GFS (Google File System) for Google’s MapReduce

  • HDFS (Hadoop Distributed File System) for Hadoop’s MapReduce

Methods for Mitigating Long-Tails

An application job consists of many tasks which may be executed in parallel over a large number of servers.

• Method One (Task Cloning): For each task, execute many copies of it over different servers, and use the results from the quickest server, thereby reducing the tail of the job execution – but costly

• Method Two (Kill and Re-try): When the system detects that the execution of a task is too slow, it kills the task and restarts it, possibly on a different server, in the hope that the re-try will be quicker – but this relies on luck

• Method Three (Speculation): When the system detects that the execution of a task is too slow, it starts the same task on another server, and uses the results from the quicker server, thereby possibly reducing the tail – but this does not always work

• Method Four: An AI-based method
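Method Three (Speculation) can be sketched with Python's `concurrent.futures`. Threads and `time.sleep` stand in for servers and task run times; `run_task`, `run_with_speculation` and the `threshold` parameter are hypothetical names, and a real scheduler would also cancel the losing copy.

```python
import concurrent.futures as cf
import time

def run_task(server: str, delay: float) -> str:
    """Stand-in for a task; returns the name of the server that finished it."""
    time.sleep(delay)
    return server

def run_with_speculation(primary_delay, backup_delay, threshold):
    pool = cf.ThreadPoolExecutor(max_workers=2)
    futures = [pool.submit(run_task, "primary", primary_delay)]
    # Give the primary copy `threshold` seconds before calling it too slow.
    done, _ = cf.wait(futures, timeout=threshold)
    if not done:
        # Too slow: start the same task elsewhere and take whichever ends first.
        futures.append(pool.submit(run_task, "backup", backup_delay))
        done, _ = cf.wait(futures, return_when=cf.FIRST_COMPLETED)
    pool.shutdown(wait=False)
    return next(iter(done)).result()

slow_primary = run_with_speculation(0.5, 0.01, threshold=0.05)
fast_primary = run_with_speculation(0.01, 0.01, threshold=0.2)
```

If the backup turns out to be just as slow as the primary, nothing is gained, which matches the caveat in the list above.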

Part I: Mobile OSs

Part II: Real-Time OSs

Part III: HPC and Cloud OSs