Using TensorFlow

• Setting up your TensorFlow development environment

TensorFlow pip packages:

tensorflow - Current release for CPU-only (recommended for beginners)

tensorflow-gpu - Current release with GPU support (Ubuntu and Windows)

tf-nightly - Nightly build for CPU-only (unstable)

tf-nightly-gpu - Nightly build with GPU support (unstable, Ubuntu and Windows)
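To see which of these packages, if any, is already installed for the current interpreter, a check along the following lines may help. It is only a sketch: it assumes Python 3.8 or later (for `importlib.metadata`), and the helper name `installed_tensorflow_packages` is made up for illustration.

```python
from importlib import metadata

# The four pip package names listed above.
TF_PACKAGES = ("tensorflow", "tensorflow-gpu", "tf-nightly", "tf-nightly-gpu")


def installed_tensorflow_packages():
    """Map each package name to its installed version, or None if absent."""
    found = {}
    for name in TF_PACKAGES:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, version in installed_tensorflow_packages().items():
        print(name, "->", version or "not installed")
```

Running it inside and outside a virtual environment should make it clear which interpreter actually has TensorFlow available.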

Creating a Python virtual environment

C:\Users\67001>pip install virtualenv

# Create a virtual environment (named TestEnv here)

D:\Program Files\Anaconda3\virtual_Env>virtualenv TestEnv

Using base prefix 'd:\\program files\\anaconda3'

No LICENSE.txt / LICENSE found in source

New python executable in D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts\python.exe

Installing setuptools, pip, wheel...

done.

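virtualenv -p python2 envname # If several Python versions are installed (e.g. Python 2 and Python 3), specify which one to use for the virtual environment

# Enter the virtual environment directory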

D:\Program Files\Anaconda3\virtual_Env>cd TestEnv

# Enter the Scripts folder, which holds the activation script

D:\Program Files\Anaconda3\virtual_Env\TestEnv>cd Scripts

# Activate the virtual environment

D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>activate

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>

deactivate # Exit the virtual environment

Install TensorFlow inside the virtual environment:
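Once the prompt shows `(TestEnv)`, it can be worth confirming from Python itself that the interpreter really belongs to the virtual environment. The following is a minimal sketch; the function name `in_virtual_environment` is an illustrative choice, and the check relies on how `virtualenv` and the standard `venv` module set `sys.prefix`.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; newer virtualenv and the
    # standard venv module make sys.base_prefix differ from sys.prefix.
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside a virtual environment:", in_virtual_environment())
```

After `deactivate`, the same script run with the base interpreter should report `False`.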

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>pip install tensorflow

Alternatively, install from the Tsinghua mirror, which is faster from within mainland China:

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>pip install -i https://pypi.tuna.tsinghua.edu.cn/simple/ --upgrade tensorflow
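The same install can also be driven from Python, which guarantees that pip runs under the interpreter of the active environment. This is a sketch rather than a recommended workflow; the helper names `pip_install_command` and `pip_install` are hypothetical, while `-i` and `--upgrade` are the pip options used in the command above.

```python
import subprocess
import sys

# Mirror URL taken from the command above.
TSINGHUA_INDEX = "https://pypi.tuna.tsinghua.edu.cn/simple/"


def pip_install_command(package, index_url=None, upgrade=False):
    """Build the argument list for installing `package` with this interpreter's pip."""
    cmd = [sys.executable, "-m", "pip", "install"]
    if index_url:
        cmd += ["-i", index_url]
    if upgrade:
        cmd.append("--upgrade")
    cmd.append(package)
    return cmd


def pip_install(package, index_url=None, upgrade=False):
    """Run the install; raises CalledProcessError if pip exits with an error."""
    subprocess.run(pip_install_command(package, index_url, upgrade), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so nothing is installed by accident.
    print(" ".join(pip_install_command("tensorflow", TSINGHUA_INDEX, upgrade=True)))
```

Using `sys.executable -m pip` avoids the case where a `pip` found on `PATH` belongs to a different Python installation.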

• “Hello TensorFlow”

>>> import tensorflow as tf

# Define a constant op named hello

>>> hello = tf.constant("Hello TensorFlow")

# Create a session

>>> sess = tf.Session()

2019-07-24 15:31:55.832973: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

# Run the constant op hello and print the result to standard output

>>> print(sess.run(hello))

b'Hello TensorFlow'
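The log line printed when the session is created is marked `I` (INFO): it is informational and does not change the result. If it is distracting, TensorFlow's native logging can be quieted with the `TF_CPP_MIN_LOG_LEVEL` environment variable, which must be set before TensorFlow is imported. The sketch below assumes that approach; the helper name `set_tf_log_level` is made up for illustration.

```python
import os


def set_tf_log_level(level=1):
    """Set TF_CPP_MIN_LOG_LEVEL; call this before `import tensorflow`.

    0 shows everything, 1 hides INFO, 2 also hides WARNING, 3 also hides ERROR.
    """
    if level not in (0, 1, 2, 3):
        raise ValueError("level must be 0, 1, 2 or 3")
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = str(level)
    return os.environ["TF_CPP_MIN_LOG_LEVEL"]


if __name__ == "__main__":
    set_tf_log_level(1)
    # import tensorflow as tf   # import only after the variable is set
    print("TF_CPP_MIN_LOG_LEVEL =", os.environ["TF_CPP_MIN_LOG_LEVEL"])
```

Level 1 is enough to hide the AVX2 notice while keeping warnings and errors visible.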

CPUs that support the AVX2 instruction set

• Intel
  • Haswell processor, Q2 2013
  • Haswell E processor, Q3 2014
  • Broadwell processor, Q4 2014
  • Broadwell E processor, Q3 2016
  • Skylake processor, Q3 2015
  • Kaby Lake processor, Q3 2016 (ULV mobile) / Q1 2017 (desktop/mobile)
  • Skylake-X processor, Q2 2017
  • Coffee Lake processor, Q4 2017
  • Cannon Lake processor, expected in 2018
  • Cascade Lake processor, expected in 2018
  • Ice Lake processor, expected in 2018

• AMD
  • Excavator processor and newer, Q2 2015
  • Zen processor, Q1 2017
  • Zen+ processor, Q2 2018
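Rather than looking a processor up in the list, the CPU can be asked directly. The sketch below is Linux-only and rests on an assumption not covered by the Windows session in this post: that `/proc/cpuinfo` exposes a `flags` line listing `avx2` on x86 machines. The function name `has_avx2` is illustrative.

```python
def has_avx2(cpuinfo_text):
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:   # available on Linux only
            print("AVX2 supported:", has_avx2(f.read()))
    except OSError:
        print("/proc/cpuinfo is not available on this platform")
```

On Windows, a vendor CPU utility or the processor's specification page would be needed instead.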

• Using TensorFlow in an interactive environment

pip install jupyter

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>pip install ipykernel

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>python -m ipykernel install --user --name=TestEnv

Installed kernelspec TestEnv in C:\Users\67001\AppData\Roaming\jupyter\kernels\testenv

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>jupyter kernelspec list

Available kernels:

  testenv    C:\Users\67001\AppData\Roaming\jupyter\kernels\testenv

  python3    d:\program files\anaconda3\virtual_env\testenv\share\jupyter\kernels\python3

(TestEnv) D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts>jupyter notebook
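Registering a kernel essentially writes a small `kernel.json` file into the kernelspec directory, telling Jupyter which interpreter to launch. The sketch below builds a dictionary of roughly that shape; the exact fields written by a given `ipykernel` version may differ, and the helper name `kernel_spec` is hypothetical. The interpreter path is the one reported when the virtual environment was created.

```python
import json


def kernel_spec(python_executable, display_name):
    """Build a dict shaped like the kernel.json of a Python kernelspec."""
    return {
        "argv": [python_executable, "-m", "ipykernel_launcher",
                 "-f", "{connection_file}"],
        "display_name": display_name,
        "language": "python",
    }


if __name__ == "__main__":
    # Interpreter path as printed by virtualenv earlier in this post.
    exe = r"D:\Program Files\Anaconda3\virtual_Env\TestEnv\Scripts\python.exe"
    print(json.dumps(kernel_spec(exe, "TestEnv"), indent=2))
```

Because `argv` points at the environment's own `python.exe`, notebooks that select the TestEnv kernel import the TensorFlow installed in that environment.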

Multilayer perceptron model example

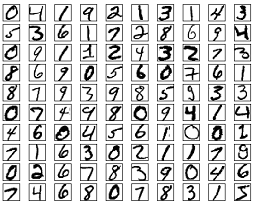

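The MNIST image dataset represents each image as a rank-2 array of shape [28, 28], where each element corresponds to one pixel.

All images in the dataset are 256-level grayscale: a pixel value of 0 means white (background) and 255 means black (foreground).

Because every image is 28x28 pixels, the [28, 28] rank-2 array can be "flattened" into a rank-1 array of shape [784] so that it can be stored contiguously. Its 784 elements together form a 784-dimensional vector.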

More info: http://yann.lecun.com/exdb/mnist/
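The flattening step can be illustrated without any library. The sketch below uses a synthetic image rather than real MNIST data, and the helper name `flatten` is illustrative; it shows that the pixel at row r, column c ends up at index r * 28 + c of the 784-element vector.

```python
ROWS, COLS = 28, 28


def flatten(image):
    """Flatten a 28x28 nested list into a 784-element list, row by row."""
    if len(image) != ROWS or any(len(row) != COLS for row in image):
        raise ValueError("expected a 28x28 image")
    return [pixel for row in image for pixel in row]


if __name__ == "__main__":
    # Synthetic image: all background (0) except one foreground pixel (255).
    image = [[0] * COLS for _ in range(ROWS)]
    image[3][5] = 255
    vector = flatten(image)
    print(len(vector))         # 784
    print(vector.index(255))   # 3 * 28 + 5 = 89
```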

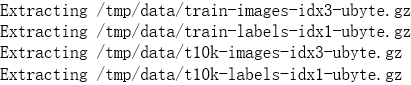

from __future__ import print_function

# Import the MNIST dataset
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

import tensorflow as tf
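Passing `one_hot=True` makes the loader return each label as a 10-element vector with a single 1 at the index of the digit, which matches the 10-unit output layer used later. A plain-Python sketch of that encoding follows; the helper names `one_hot` and `from_one_hot` are made up for illustration and are not part of the loader.

```python
def one_hot(label, num_classes=10):
    """Encode an integer class label as a one-hot list of floats."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vector = [0.0] * num_classes
    vector[label] = 1.0
    return vector


def from_one_hot(vector):
    """Recover the class label: the index of the largest entry."""
    return max(range(len(vector)), key=vector.__getitem__)


if __name__ == "__main__":
    encoded = one_hot(3)
    print(encoded)
    print(from_one_hot(encoded))
```

Decoding with the index of the largest entry is the same idea as the `tf.argmax(Y, 1)` call used when computing accuracy.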

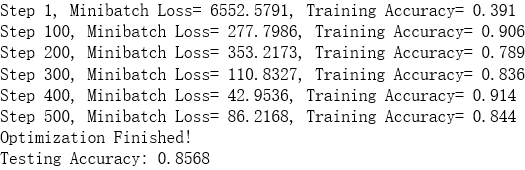

# Hyperparameters
learning_rate = 0.1
num_steps = 500
batch_size = 128
display_step = 100

# Network parameters
n_hidden_1 = 256   # number of neurons in the first hidden layer
n_hidden_2 = 256   # number of neurons in the second hidden layer
num_input = 784    # MNIST input data (image size: 28*28)
num_classes = 10   # MNIST handwritten digit classes (0-9)

# Training data fed into the dataflow graph
X = tf.placeholder("float", [None, num_input])
Y = tf.placeholder("float", [None, num_classes])

# Weights and biases
weights = {
    'h1': tf.Variable(tf.random_normal([num_input, n_hidden_1])),
    'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_hidden_2, num_classes]))
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),
    'out': tf.Variable(tf.random_normal([num_classes]))
}

# Define the neural network
def neural_net(x):
    # First hidden layer (256 neurons); no activation is applied, so it is linear
    layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['b1'])
    # Second hidden layer (256 neurons)
    layer_2 = tf.add(tf.matmul(layer_1, weights['h2']), biases['b2'])
    # Output layer
    out_layer = tf.matmul(layer_2, weights['out']) + biases['out']
    return out_layer

# Build the model
logits = neural_net(X)

# Define the loss function and optimizer
loss_op = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
    logits=logits, labels=Y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
train_op = optimizer.minimize(loss_op)

# Define the prediction accuracy
correct_pred = tf.equal(tf.argmax(logits, 1), tf.argmax(Y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

# Initialize all variables (assign their initial values)
init = tf.global_variables_initializer()

# Start training
with tf.Session() as sess:
    # Run the initializer
    sess.run(init)

    for step in range(1, num_steps+1):
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        # Run the training op, which performs forward and backward propagation
        sess.run(train_op, feed_dict={X: batch_x, Y: batch_y})
        if step % display_step == 0 or step == 1:
            # Compute the loss and accuracy on the current minibatch
            loss, acc = sess.run([loss_op, accuracy],
                                 feed_dict={X: batch_x, Y: batch_y})
            print("Step " + str(step) + ", Minibatch Loss= " + \
                  "{:.4f}".format(loss) + ", Training Accuracy= " + \
                  "{:.3f}".format(acc))

    print("Optimization Finished!")

    # Compute the accuracy on the test data
    print("Testing Accuracy:", \
        sess.run(accuracy, feed_dict={X: mnist.test.images,
                                      Y: mnist.test.labels}))
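To make the loss and accuracy definitions concrete, the sketch below recomputes them in plain Python for a toy batch. It is not TensorFlow's implementation: it uses 3 classes instead of 10, invented logits, and illustrative helper names, and only mirrors the idea of averaging the softmax cross-entropy over the batch and comparing the argmax of the logits with the argmax of the one-hot label.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one row of logits."""
    shift = max(logits)
    log_total = math.log(sum(math.exp(z - shift) for z in logits))
    return [z - shift - log_total for z in logits]


def cross_entropy(logits, one_hot_label):
    """Cross-entropy between softmax(logits) and a one-hot label."""
    return -sum(y * lp for y, lp in zip(one_hot_label, log_softmax(logits)))


def argmax(values):
    """Index of the largest entry (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def batch_loss_and_accuracy(batch_logits, batch_labels):
    """Mean loss, and fraction of rows whose argmax matches the label."""
    pairs = list(zip(batch_logits, batch_labels))
    losses = [cross_entropy(z, y) for z, y in pairs]
    hits = [argmax(z) == argmax(y) for z, y in pairs]
    return sum(losses) / len(losses), sum(hits) / len(hits)


if __name__ == "__main__":
    # Toy batch: the first row is classified correctly, the second is not.
    logits = [[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]]
    labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    loss, acc = batch_loss_and_accuracy(logits, labels)
    print("loss = {:.4f}, accuracy = {:.3f}".format(loss, acc))
```

A row of all-equal logits yields a uniform distribution, so its loss is log(3) here; with 10 classes it would be log(10), which is roughly the loss an uninformed classifier would show at the start of training.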

• Using TensorFlow in a container

VM vs Docker Container

Please never stop; become the person you want to be.

Thanks for reading. I am LXL.
