cs20_2-1

1. Agenda

-

Basic operations

-

Tensor types

-

Importing data

-

Lazy loading

2. Basic operations

-

tensorborad使用介绍

-

Constants, Sequences, Variables, Ops

-

constants:

- normal tensors: e.g. 1-dim,2-dim,more-dim

- special tensors, e.g. zeros(), zeros_like(), ones(), ones_like(), fill()

-

sequences:

-

Constants as sequences: e.g. lin_space(), range()

-

Randomly Generated Constants: e.g.

tf.random_normal tf.truncated_normal tf.random_uniform tf.random_shuffle tf.random_crop tf.multinomial tf.random_gamma- truncated_normal:

- 从截断的正态分布中输出随机值。 生成的值服从具有指定均值和标准方差的正态分布,如果生成的值落在(μ-2σ,μ+2σ)之外,则丢弃这个生成的值重新选择。

- 在正态分布的曲线中,横轴区间(μ-σ,μ+σ)内的面积为68.268949%。

横轴区间(μ-2σ,μ+2σ)内的面积为95.449974%。

横轴区间(μ-3σ,μ+3σ)内的面积为99.730020%。

X落在(μ-3σ,μ+3σ)以外的概率小于千分之三,在实际问题中常认为相应的事件是不会发生的,基本上可以把区间(μ-3σ,μ+3σ)看作是随机变量X实际可能的取值区间,这称之为正态分布的“3σ”原则。 - 在tf.truncated_normal中如果x的取值在区间(μ-2σ,μ+2σ)之外则重新进行选择。这样保证了生成的值都在均值附近。(it doesn’t create any values more than two standard deviations away from its mean.)

- tf.set_random_seed(seed)

- truncated_normal:

-

-

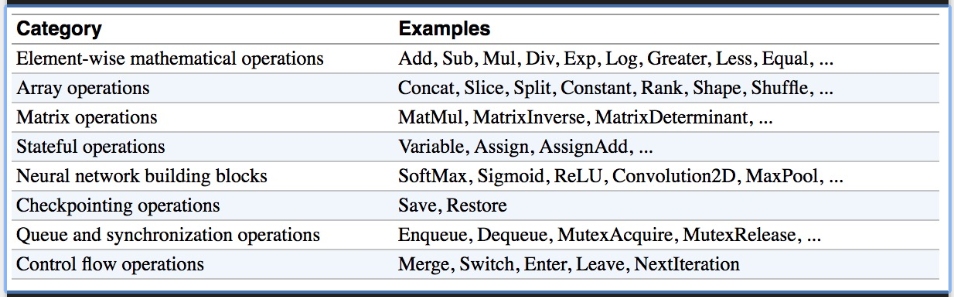

Operations

-

3. Tensor types

-

TensorFlow takes Python natives types: boolean, numeric (int, float), strings

-

tensor包括:0-dim(scalar), 1-dim(vector), 2-dim(matrix), more-dim.

Single values will be converted to 0-d tensors (or scalars), lists of values will be converted to 1-d tensors (vectors), lists of lists of values will be converted to 2-d tensors (matrices), and so on.

-

e.g.

t_0 = 19 # scalars are treated like 0-d tensors tf.zeros_like(t_0) # ==> 0 tf.ones_like(t_0) # ==> 1 t_1 = [b"apple", b"peach", b"grape"] # 1-d arrays are treated like 1-d tensors tf.zeros_like(t_1) # ==> [b'' b'' b''] tf.ones_like(t_1) # ==> TypeError: Expected string, got 1 of type 'int' instead. t_2 = [[True, False, False], [False, False, True], [False, True, False]] # 2-d arrays are treated like 2-d tensors tf.zeros_like(t_2) # ==> 3x3 tensor, all elements are False tf.ones_like(t_2) # ==> 3x3 tensor, all elements are True -

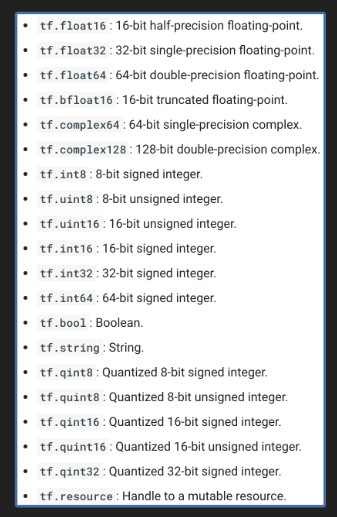

TensorFlow Data Types

-

TF vs NP Data Types

TensorFlow integrates seamlessly with NumPy tf.int32 == np.int32 # ⇒ True Can pass numpy types to TensorFlow ops tf.ones([2, 2], np.float32) # ⇒ [[1.0 1.0], [1.0 1.0]] For tf.Session.run(fetches): if the requested fetch is a Tensor , output will be a NumPy ndarray. sess = tf.Session() a = tf.zeros([2, 3], np.int32) print(type(a)) # ⇒ <class 'tensorflow.python.framework.ops.Tensor'> a = sess.run(a) print(type(a)) # ⇒ <class 'numpy.ndarray'> -

Use TF DType when possible

- Python native types: TensorFlow has to infer Python type(转化有计算成本)

- NumPy arrays: NumPy is not GPU compatible

-

What’s wrong with constants?、

- Constants are stored in the graph definition(导致graph太大,且不灵活)

- This makes loading graphs expensive when constants are big

- Only use constants for primitive types.

- Use variables or readers for more data that requires more memory

4. Importing data

-

Variables:tf.Variable, tf.get_variable

-

tf.Variable

tf.Variable holds several ops: x = tf.Variable(...) x.initializer # init op x.value() # read op x.assign(...) # write op x.assign_add(...) # and more -

tf.get_variable:

-

The easiest way is initializing all variables at once:

- Initializer is an op. You need to execute it within the context of a session

sess.run(tf.global_variables_initializer())

-

Initialize only a subset of variables:

sess.run(tf.variables_initializer([a, b]))仅仅Init了a,b这两个op variables

-

Initialize a single variable:

- sess.run(W.initializer)

-

Eval() a variable (效果类似于W.value()和sess.run(W))

-

tf.Variable.assign(), tf.assign_add(), tf.assign_sub()

-

Each session maintains its own copy of variables

-

Control Dependencies

# defines which ops should be run first # your graph g have 5 ops: a, b, c, d, e g = tf.get_default_graph() with g.control_dependencies([a, b, c]): # 'd' and 'e' will only run after 'a', 'b', and 'c' have executed. d = ... e = …

-

-

Placeholder:

-

Why placeholders?

We, or our clients, can later supply their own data when they need to execute the computation.

-

a = tf.placeholder(dtype, shape=None, name=None) sess.run(c, feed_dict={a: [1, 2, 3]})) # the tensor a is the key, not the string ‘a’ # shape=None means that tensor of any shape will be accepted as value for placeholder. # shape=None is easy to construct graphs, but nightmarish for debugging # shape=None also breaks all following shape inference, which makes many ops not work because they expect certain rank. # The session will look at the graph, trying to think: hmm, how can I get the value of a, then it computes all the nodes that leads to a. -

What if want to feed multiple data points in?

# You have to do it one at a time with tf.Session() as sess: for a_value in list_of_values_for_a: print(sess.run(c, {a: a_value})) -

You can feed_dict any feedable tensor.Placeholder is just a way to indicate that something must be fed:

tf.Graph.is_feedable(tensor) # True if and only if tensor is feedable. -

Feeding values to TF ops :

# create operations, tensors, etc (using the default graph) a = tf.add(2, 5) b = tf.multiply(a, 3) with tf.Session() as sess: # compute the value of b given a is 15 sess.run(b, feed_dict={a: 15}) # >> 4

-

5. Lazy loading

-

The trap of lazy loading*

-

What’s lazy loading?

- Defer creating/initializing an object until it is needed

- Lazy loading Example: (见cs_2_1的代码test_26,test_27)

-

Both give the same value of z, What’s the problem? (见cs_2_1的代码test_26,test_27)

-

normal loding: Node “Add” added once to the graph definition

-

lazy loding: Node “Add” added 10 times to the graph definition Or as many times as you want to compute z

-

Imagine you want to compute an op, thousands, or millions of times!

- Your graph gets bloated Slow to load Expensive to pass around, this is one of the most common TF non-bug bugs I’ve seen on GitHub.

- 所以要禁止定义匿名op节点

-

Solution:

-

Separate definition of ops from computing/running ops

-

Use Python property to ensure function is also loaded once the first time it is called

-

-

浙公网安备 33010602011771号

浙公网安备 33010602011771号