Elasticsearch Analyzers (Part 5)

Official introduction

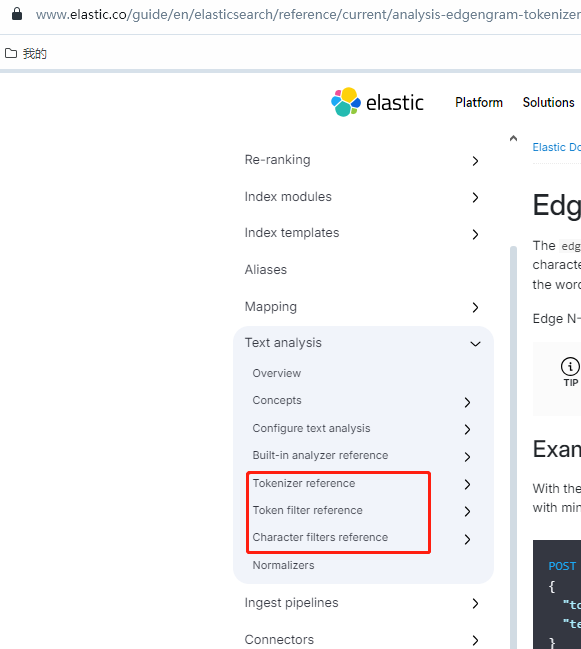

An analyzer, whether built-in or custom, is just a package that contains three lower-level building blocks: character filters, tokenizers, and token filters.

CharFilter

Character filters preprocess the stream of characters before it is passed to the tokenizer.

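A character filter receives the original text as a stream of characters. It can transform the stream by adding, removing, or changing characters. For example, a character filter could convert Hindu-Arabic numerals (٠١٢٣٤٥٦٧٨٩) into their Arabic-Latin equivalents (0123456789). It could also strip HTML elements such as <b> from the stream.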

Built-in character filters: HTML Strip Character Filter, Mapping Character Filter, and Pattern Replace Character Filter.

HTML Strip Character Filter

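Strips HTML elements from the text and replaces HTML entities with their decoded values (for example, &amp; becomes &). For example: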

GET /_analyze { "tokenizer": "keyword", "char_filter": [ "html_strip" ], "text": "<p>I'm so <b>happy</b>!</p>" }

Result:

[ \nI'm so happy!\n ]
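To make the behaviour concrete, here is a small Python sketch that approximates html_strip locally with the standard-library html.parser. The set of tags turned into newlines (BLOCK_TAGS) is an assumption for illustration. Lucene's HTMLStripCharFilter has its own rules and also keeps track of offset corrections, which this sketch ignores.

```python
from html.parser import HTMLParser

# Assumption: a short list of block-level tags that become newlines.
# Lucene's HTMLStripCharFilter uses its own, longer list.
BLOCK_TAGS = {"p", "div", "br", "li", "h1", "h2", "h3", "tr"}


class _Stripper(HTMLParser):
    def __init__(self):
        super().__init__(convert_charrefs=True)  # decodes entities such as &amp;
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        self.parts.append(data)  # plain text is kept as-is


def html_strip(text: str) -> str:
    """Drop tags, keep the text; block-level tags become newlines."""
    parser = _Stripper()
    parser.feed(text)
    parser.close()  # flush any buffered trailing text
    return "".join(parser.parts)


print(repr(html_strip("<p>I'm so <b>happy</b>!</p>")))  # prints "\nI'm so happy!\n"
```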

Mapping Character Filter

Replaces arbitrary characters (or strings of characters) according to a list of key => value mappings.

GET /_analyze { "tokenizer": "keyword", "char_filter": [ { "type": "mapping", "mappings": [ "٠ => 0", "١ => 1", "٢ => 2", "٣ => 3", "٤ => 4", "٥ => 5", "٦ => 6", "٧ => 7", "٨ => 8", "٩ => 9" ] } ], "text": "My license plate is ٢٥٠١٥" }

Result:

[ My license plate is 25015 ]
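A rough Python sketch of the mapping filter follows. It assumes greedy matching, which the Elasticsearch documentation describes as "the longest pattern matching at a given point wins". parse_mappings and mapping_char_filter are hypothetical helper names, and offset tracking is ignored.

```python
def parse_mappings(rules):
    """Turn Elasticsearch-style "key => value" rules into a dict."""
    table = {}
    for rule in rules:
        key, value = rule.split("=>", 1)
        table[key.strip()] = value.strip()
    return table


def mapping_char_filter(text, table):
    """Replace keys with values, preferring the longest key at each position."""
    longest = max(map(len, table), default=0)
    out, i = [], 0
    while i < len(text):
        for size in range(min(longest, len(text) - i), 0, -1):
            chunk = text[i:i + size]
            if chunk in table:
                out.append(table[chunk])
                i += size
                break
        else:
            out.append(text[i])  # no key starts here: keep the character as-is
            i += 1
    return "".join(out)


rules = ["٠ => 0", "١ => 1", "٢ => 2", "٣ => 3", "٤ => 4",
         "٥ => 5", "٦ => 6", "٧ => 7", "٨ => 8", "٩ => 9"]
print(mapping_char_filter("My license plate is ٢٥٠١٥", parse_mappings(rules)))
# My license plate is 25015
```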

Pattern Replace Character Filter

Replaces characters based on a regular expression.

Example 1

PUT my-index-000001
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": { "tokenizer": "standard", "char_filter": [ "my_char_filter" ] }
      },
      "char_filter": {
        "my_char_filter": { "type": "pattern_replace", "pattern": "(\\d+)-(?=\\d)", "replacement": "$1_" }
      }
    }
  }
}
POST my-index-000001/_analyze
{ "analyzer": "my_analyzer", "text": "My credit card is 123-456-789" }

Result:

[ My, credit, card, is, 123_456_789 ]
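The same transformation can be reproduced locally with Python's re module, which helps when checking the regex. Java's $1 back-reference is written \1 in Python. The \w+ split below only approximates the standard tokenizer for this input. Both function names are hypothetical.

```python
import re

# Same regex as "my_char_filter": a run of digits followed by "-" and another digit.
PATTERN = re.compile(r"(\d+)-(?=\d)")


def pattern_replace(text):
    # Java's "$1_" replacement is written r"\1_" in Python.
    return PATTERN.sub(r"\1_", text)


def rough_standard_tokenize(text):
    # Approximation only: \w+ keeps "123_456_789" as one token, as the standard tokenizer does here.
    return re.findall(r"\w+", text)


filtered = pattern_replace("My credit card is 123-456-789")
print(filtered)                           # My credit card is 123_456_789
print(rough_standard_tokenize(filtered))  # ['My', 'credit', 'card', 'is', '123_456_789']
```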

Example 2

PUT my-index-000001
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": { "tokenizer": "standard", "char_filter": [ "my_char_filter" ], "filter": [ "lowercase" ] }
      },
      "char_filter": {
        "my_char_filter": { "type": "pattern_replace", "pattern": "(?<=\\p{Lower})(?=\\p{Upper})", "replacement": " " }
      }
    }
  },
  "mappings": {
    "properties": { "text": { "type": "text", "analyzer": "my_analyzer" } }
  }
}
POST my-index-000001/_analyze
{ "analyzer": "my_analyzer", "text": "The fooBarBaz method" }

Result:

[ the, foo, bar, baz, method ]
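Here is a Python sketch of Example 2's pipeline: the char filter runs first, then a tokenizer, then lowercasing. One assumption: Java's \p{Lower} and \p{Upper} match ASCII letters only by default, so the sketch uses [a-z] and [A-Z]. The \w+ tokenizer is again only a rough stand-in for standard, and the names are hypothetical.

```python
import re

# Empty match between a lowercase and an uppercase letter (a camelCase boundary).
CAMEL_BOUNDARY = re.compile(r"(?<=[a-z])(?=[A-Z])")


def split_camel_case(text):
    # Insert a space at every camelCase boundary (the char filter step).
    return CAMEL_BOUNDARY.sub(" ", text)


def analyze(text):
    # char filter -> rough tokenizer -> lowercase token filter
    return [t.lower() for t in re.findall(r"\w+", split_camel_case(text))]


print(split_camel_case("The fooBarBaz method"))  # The foo Bar Baz method
print(analyze("The fooBarBaz method"))           # ['the', 'foo', 'bar', 'baz', 'method']
```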

tokenizer

The tokenizer is the part of an analyzer that splits text into initial tokens.

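A tokenizer receives a stream of characters, breaks it up into individual tokens (usually individual words), and outputs a stream of tokens. For example, the whitespace tokenizer converts the text "Quick brown fox!" into [Quick, brown, fox!].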

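The tokenizer is also responsible for recording the order or position of each term. It records the start and end character offsets of the original word that each term represents as well.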
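Below is a minimal Python sketch of that bookkeeping, using a whitespace split. The Token class and whitespace_tokenize are hypothetical names, not an Elasticsearch API. Each token carries its position and its start/end offsets into the original text. As in the _analyze output, end_offset points one past the last character.

```python
import re
from dataclasses import dataclass


@dataclass
class Token:
    term: str
    position: int      # order of the token in the stream
    start_offset: int  # index of the first character in the original text
    end_offset: int    # index one past the last character


def whitespace_tokenize(text):
    # Every run of non-whitespace characters becomes one token.
    return [Token(m.group(), pos, m.start(), m.end())
            for pos, m in enumerate(re.finditer(r"\S+", text))]


for tok in whitespace_tokenize("Quick brown fox!"):
    print(tok)
```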

An analyzer must have exactly one tokenizer.

Built-in tokenizers reference: https://www.elastic.co/guide/en/elasticsearch/reference/current/analysis-tokenizers.html

For example:

POST _analyze { "tokenizer": "lowercase", "text": "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone." }

Result:

[ the, quick, brown, foxes, jumped, over, the, lazy, dog, s, bone ]
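The lowercase tokenizer splits on every non-letter and then lowercases each token. That is why "2" disappears and "dog's" becomes dog and s. The following is a rough Python approximation. The regex [^\W\d_]+ stands in for Java's Character.isLetter and may differ on unusual Unicode characters. lowercase_tokenize is a hypothetical name.

```python
import re


def lowercase_tokenize(text):
    # [^\W\d_]+ = runs of word characters that are neither digits nor "_", i.e. letters.
    return [m.group().lower() for m in re.finditer(r"[^\W\d_]+", text)]


print(lowercase_tokenize("The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."))
```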

token filters

Token filters can process these tokens further, for example by removing stop words or changing case.

A token filter receives the token stream and may add, remove, or change tokens.

Case conversion is one example: an uppercase token filter would convert the following tokens to uppercase:

[ the, quick, brown, foxes, jumped, over, the, lazy, dog, s, bone ]

Token filters are not allowed to change the position or character offsets of each token.

An analyzer may have zero or more token filters, which are applied in order.
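The Python sketch below illustrates both rules with two hypothetical filters working on (term, position) pairs. The stop filter drops tokens without renumbering the survivors, and the uppercase filter rewrites terms only. The filters run in list order, so reversing them changes the result. The one-word stop list is an assumption for the example.

```python
def stop_filter(tokens, stopwords=frozenset({"the"})):
    # Removes tokens but leaves the positions of the remaining tokens untouched.
    return [(term, pos) for term, pos in tokens if term not in stopwords]


def uppercase_filter(tokens):
    # Changes the term text only; the position is carried over as-is.
    return [(term.upper(), pos) for term, pos in tokens]


def apply_filters(tokens, filters):
    for f in filters:  # filters run in the order they are listed
        tokens = f(tokens)
    return tokens


terms = "the quick brown foxes jumped over the lazy dog s bone".split()
tokens = [(term, pos) for pos, term in enumerate(terms)]
print(apply_filters(tokens, [stop_filter, uppercase_filter]))  # "the" removed, gaps at 0 and 6
print(apply_filters(tokens, [uppercase_filter, stop_filter]))  # "THE" no longer matches the stop list
```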

token graph

https://www.elastic.co/guide/en/elasticsearch/reference/current/token-graphs.html

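When a tokenizer converts text into a stream of tokens, it also records each token's position and the number of positions the token spans (positionLength).

For example, quick brown fox is converted into [quick, brown, fox], at positions 0, 1, and 2.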

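A token filter can also add new tokens on top of existing ones. A new token gets the same position and positionLength as the token it is added alongside. For example, fast can be added as a synonym of quick.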
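Here is a minimal Python sketch of that synonym injection. It assumes tokens are (term, position, position_length) tuples and uses a hypothetical synonyms dict. Each added token simply copies the position and length of the original.

```python
def add_synonyms(tokens, synonyms):
    """Emit each token, followed by its synonyms at the same position and length."""
    out = []
    for term, pos, length in tokens:
        out.append((term, pos, length))
        for syn in synonyms.get(term, []):
            out.append((syn, pos, length))  # stacked on top of the original token
    return out


tokens = [("quick", 0, 1), ("brown", 1, 1), ("fox", 2, 1)]
print(add_synonyms(tokens, {"quick": ["fast"]}))
```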

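Token filters can also add tokens that span multiple positions. For example, domain name system is fragile is tokenized into [domain, name, system, is, fragile]. Adding dns, the abbreviation of domain name system, lets the text also be matched through dns.

Here dns has position 0 but a positionLength of 3.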

At search time, use the same analyzer in a match or match_phrase query.

A search for either domain name system is fragile or dns is fragile will then find documents containing either phrase.
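Conceptually, each token is an edge from position to position + positionLength. A phrase matches if it traces a consecutive path through that graph. The Python sketch below models that idea only: Lucene turns the graph into queries rather than walking it like this. build_graph and phrase_matches are hypothetical names.

```python
from collections import defaultdict


def build_graph(tokens):
    """tokens: (term, position, position_length) tuples -> {start: [(term, end)]}."""
    edges = defaultdict(list)
    for term, pos, length in tokens:
        edges[pos].append((term, pos + length))  # each token is an edge pos -> pos + length
    return edges


def phrase_matches(edges, phrase):
    """True if the phrase can be read along consecutive edges from some start position."""
    def walk(node, i):
        if i == len(phrase):
            return True
        return any(walk(end, i + 1)
                   for term, end in edges.get(node, [])
                   if term == phrase[i])

    return any(walk(start, 0) for start in list(edges))


tokens = [("domain", 0, 1), ("name", 1, 1), ("system", 2, 1),
          ("is", 3, 1), ("fragile", 4, 1), ("dns", 0, 3)]
graph = build_graph(tokens)
print(phrase_matches(graph, ["dns", "is", "fragile"]))  # True
```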

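Note that two token filters, synonym and word_delimiter, can add tokens that span multiple positions but only record a default positionLength of 1. This produces an invalid token graph. Their graph-aware counterparts, synonym_graph and word_delimiter_graph, record positionLength correctly.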

Custom analyzers

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/analysis-custom-analyzer.html

For a Chinese/English search configuration, see https://www.cnblogs.com/LQBlog/p/10449637.html

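Synonym filters can be used, for example, so that a search for 土豆 (potato) also returns documents containing 马铃薯 (another word for potato).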

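The analysis process, step by step

1. Character filters (char_filter): remove or replace special characters in the raw text.

2. Tokenizer: split the text into tokens.

3. Token filters (filter): operate on the tokens, for example uppercasing them or replacing tokens.

4. The end result is a token graph.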
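The steps above can be sketched in Python as plain function composition. make_analyzer and the toy filters are hypothetical and ignore positions and offsets. They only show the order in which the three kinds of components run.

```python
import re


def make_analyzer(char_filters, tokenizer, token_filters):
    def analyze(text):
        for cf in char_filters:   # 1. character filters, in order
            text = cf(text)
        tokens = tokenizer(text)  # 2. exactly one tokenizer
        for tf in token_filters:  # 3. token filters, in order
            tokens = tf(tokens)
        return tokens
    return analyze


analyzer = make_analyzer(
    char_filters=[lambda s: re.sub(r"<[^>]+>", "", s)],  # crude tag stripping
    tokenizer=lambda s: s.split(),                       # whitespace split
    token_filters=[lambda ts: [t.lower() for t in ts]],  # lowercase
)
print(analyzer("<b>Quick</b> Brown fox!"))  # ['quick', 'brown', 'fox!']
```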

Thoughts on different tokenization algorithms

Common APIs

Testing an analyzer

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/test-analyzer.html

GET request: http://127.0.0.1:9200/_analyze

Request body:

{
  "analyzer": "standard", // the analyzer to use
  "text": "Set the shape to semi-transparent by calling set_trans(5)" // the full text to analyze
}

Result:

{
  "tokens": [
    {
      "token": "set",      // the term that gets indexed
      "start_offset": 0,   // start offset in the original text
      "end_offset": 3,     // end offset in the original text
      "type": "<ALPHANUM>",
      "position": 0        // position of the term in the token stream
    },
    { "token": "the", "start_offset": 4, "end_offset": 7, "type": "<ALPHANUM>", "position": 1 },
    { "token": "shape", "start_offset": 8, "end_offset": 13, "type": "<ALPHANUM>", "position": 2 },
    { "token": "to", "start_offset": 14, "end_offset": 16, "type": "<ALPHANUM>", "position": 3 },
    { "token": "semi", "start_offset": 17, "end_offset": 21, "type": "<ALPHANUM>", "position": 4 },
    { "token": "transparent", "start_offset": 22, "end_offset": 33, "type": "<ALPHANUM>", "position": 5 },
    { "token": "by", "start_offset": 34, "end_offset": 36, "type": "<ALPHANUM>", "position": 6 },
    { "token": "calling", "start_offset": 37, "end_offset": 44, "type": "<ALPHANUM>", "position": 7 },
    { "token": "set_trans", "start_offset": 45, "end_offset": 54, "type": "<ALPHANUM>", "position": 8 },
    { "token": "5", "start_offset": 55, "end_offset": 56, "type": "<NUM>", "position": 9 }
  ]
}
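The same request can be scripted, for instance with Python's urllib. This sketch assumes a cluster is reachable at the base URL (http://127.0.0.1:9200 in the example above). analyze and terms_by_position are hypothetical helpers. terms_by_position works on any saved _analyze response, so it can be tried without a cluster.

```python
import json
import urllib.request


def analyze(base_url, analyzer, text):
    """POST /_analyze and return the parsed JSON response (needs a reachable cluster)."""
    body = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/_analyze",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def terms_by_position(response):
    """Flatten an _analyze response into (position, token) pairs."""
    return [(t["position"], t["token"]) for t in response["tokens"]]


# Usage against a live cluster (not run here):
# terms_by_position(analyze("http://127.0.0.1:9200", "standard", "Set the shape"))
```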

Viewing the analyzed terms of a document

GET /${index}/${type}/${id}/_termvectors?fields=${fields_name}

${type} defaults to _doc (in 7.x, _doc is the only type).

Example

http://10.4.3.230:9200/assist_award_punishment/_doc/AP1816861322877568258/_termvectors?fields=acceptOrgPath

Response

{ "_index": "dev_assist_award_punishment_s0", "_type": "_doc", "_id": "AP1816861322877568258", "_version": 1, "found": true, "took": 0, "term_vectors": { "acceptOrgPath": { "field_statistics": { "sum_doc_freq": 64, "doc_count": 8, "sum_ttf": 64 }, "terms": { "10010000": { "term_freq": 1, "tokens": [ { "position": 0, "start_offset": 0, "end_offset": 8 } ] }, "10010001": { "term_freq": 1, "tokens": [ { "position": 1, "start_offset": 9, "end_offset": 17 } ] }, "10010010": { "term_freq": 1, "tokens": [ { "position": 2, "start_offset": 18, "end_offset": 26 } ] }, "10010400": { "term_freq": 1, "tokens": [ { "position": 4, "start_offset": 36, "end_offset": 44 } ] }, "10010401": { "term_freq": 1, "tokens": [ { "position": 5, "start_offset": 45, "end_offset": 53 } ] }, "10050120": { "term_freq": 1, "tokens": [ { "position": 3, "start_offset": 27, "end_offset": 35 } ] }, "10052279": { "term_freq": 1, "tokens": [ { "position": 6, "start_offset": 54, "end_offset": 62 } ] }, "a003": { "term_freq": 1, "tokens": [ { "position": 7, "start_offset": 63, "end_offset": 67 } ] } } } } }
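Note that the terms map in this response is sorted by term, not by position: 10050120 (position 3) is listed after 10010401 (position 5). A small Python sketch with a hypothetical helper name restores the original token order from the positions. It assumes positions were returned, which is the default.

```python
def terms_in_order(termvectors_response, field):
    """Sort the term-ordered `terms` map of a _termvectors response by token position."""
    terms = termvectors_response["term_vectors"][field]["terms"]
    pairs = [(tok["position"], term)
             for term, info in terms.items()
             for tok in info.get("tokens", [])]
    return [term for _, term in sorted(pairs)]


excerpt = {"term_vectors": {"acceptOrgPath": {"terms": {
    "10010000": {"term_freq": 1, "tokens": [{"position": 0, "start_offset": 0, "end_offset": 8}]},
    "10010400": {"term_freq": 1, "tokens": [{"position": 4, "start_offset": 36, "end_offset": 44}]},
    "10050120": {"term_freq": 1, "tokens": [{"position": 3, "start_offset": 27, "end_offset": 35}]},
}}}}
print(terms_in_order(excerpt, "acceptOrgPath"))  # ['10010000', '10050120', '10010400']
```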

Analyzing text with a specific analyzer of a specific index

GET /${index}/_analyze?analyzer=${analyzer_name}&text=2,3,4,5,100-100

Or:

GET http://10.4.3.230:9200/_analyze
{
  "analyzer": "standard", // the analyzer to use
  "text": "1232-2323-4335|大幅度发-123" // the full text to analyze
}

Response

{ "tokens": [ { "token": "1232", "start_offset": 0, "end_offset": 4, "type": "<NUM>", "position": 0 }, { "token": "2323", "start_offset": 5, "end_offset": 9, "type": "<NUM>", "position": 1 }, { "token": "4335", "start_offset": 10, "end_offset": 14, "type": "<NUM>", "position": 2 }, { "token": "大", "start_offset": 15, "end_offset": 16, "type": "<IDEOGRAPHIC>", "position": 3 }, { "token": "幅", "start_offset": 16, "end_offset": 17, "type": "<IDEOGRAPHIC>", "position": 4 }, { "token": "度", "start_offset": 17, "end_offset": 18, "type": "<IDEOGRAPHIC>", "position": 5 }, { "token": "发", "start_offset": 18, "end_offset": 19, "type": "<IDEOGRAPHIC>", "position": 6 }, { "token": "123", "start_offset": 20, "end_offset": 23, "type": "<NUM>", "position": 7 } ] }
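The standard tokenizer follows Unicode text segmentation rules (UAX #29). That is why digit runs become <NUM> tokens and each Chinese character becomes its own <IDEOGRAPHIC> token. Below is a rough Python imitation that reproduces this particular response. It approximates this kind of input only and does not implement UAX #29. It also skips the lowercasing that the standard analyzer applies. rough_standard is a hypothetical name.

```python
import re

# Alternation order matters: a CJK ideograph is matched singly before the letter rule.
TOKEN_RE = re.compile(
    r"(?P<NUM>\d+)|(?P<IDEOGRAPHIC>[\u4e00-\u9fff])|(?P<ALPHANUM>[^\W\d_]+)"
)


def rough_standard(text):
    out = []
    for pos, m in enumerate(TOKEN_RE.finditer(text)):
        out.append({
            "token": m.group(),
            "start_offset": m.start(),
            "end_offset": m.end(),
            "type": f"<{m.lastgroup}>",  # name of the alternative that matched
            "position": pos,
        })
    return out


for t in rough_standard("1232-2323-4335|大幅度发-123"):
    print(t)
```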

Viewing a query's execution plan

Use the following API to see how a query is parsed and rewritten (it does not return search hits):

GET http://127.0.0.1:9200/opcm3/_validate/query?explain
{
  "query": {
    "match_phrase": {
      "productName": { "query": "纯生" }
    }
  }
}

Response:
{
  "valid": true,
  "_shards": { "total": 1, "successful": 1, "failed": 0 },
  "explanations": [
    {
      "index": "opcm3",
      "valid": true,
      "explanation": "productName:\"(chun c) (sheng s)\""
    }
  ]
}

This can be read as (chun OR c) immediately followed by (sheng OR s). Each position in the phrase accepts either of its terms.
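Here is a Python sketch of that reading. It assumes the document's terms are available as a hypothetical {position: set of terms} map, and the pinyin terms below are made up for illustration. Each clause of the phrase must match at consecutive positions, and any one term of a clause is enough.

```python
def phrase_with_alternatives(doc_positions, clauses):
    """doc_positions: {position: set of terms indexed at that position}.
    clauses: one set of acceptable terms per phrase slot; slot i must match at start + i."""
    for start in doc_positions:
        if all(clause & doc_positions.get(start + i, set())
               for i, clause in enumerate(clauses)):
            return True
    return False


query = [{"chun", "c"}, {"sheng", "s"}]      # from the explanation above
doc = {0: {"chun", "c"}, 1: {"sheng", "s"}}  # hypothetical pinyin terms of a document
print(phrase_with_alternatives(doc, query))  # True
```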

Custom analyzers in practice

1. Delete the index if it exists

curl --location --request DELETE 'http://10.4.3.230:9200/lq_test_analysis_index'

2. Create an index and configure a custom analyzer

curl --location --request PUT 'http://10.4.3.230:9200/lq_test_analysis_index' \
  --header 'Content-Type: application/json' \
  --data '{ "settings": { "analysis": { "analyzer": { "my_stop_analyzer": { "type": "pattern", "pattern": "-" } } } } }'

3. Test the analyzer

curl --location --request GET 'http://10.4.3.230:9200/lq_test_analysis_index/_analyze' \
  --header 'Content-Type: application/json' \
  --data '{ "analyzer": "char_analyzer", "text": "李强" }'

Response

{ "tokens": [ { "token": "李", "start_offset": 0, "end_offset": 1, "type": "word", "position": 0 }, { "token": "强", "start_offset": 1, "end_offset": 2, "type": "word", "position": 1 } ] }

4. Index a test document for search testing

curl --location 'http://10.4.3.230:9200/lq_test_analysis_index/_doc/1' \
  --header 'Content-Type: application/json' \
  --data '{ "content": "李强" }'

5. Test the search

curl --location --request GET 'http://10.4.3.230:9200/lq_test_analysis_index/_search' \
  --header 'Content-Type: application/json' \
  --data '{ "query": { "match_phrase_prefix": { "content": "李" } } }'

Response

{ "took": 0, "timed_out": false, "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 }, "hits": { "total": { "value": 1, "relation": "eq" }, "max_score": 1.0925692, "hits": [ { "_index": "lq_test_analysis_index", "_type": "_doc", "_id": "1", "_score": 1.0925692, "_source": { "content": "李强" } } ] } }
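match_phrase_prefix treats every query term except the last as an exact phrase term and the last one as a prefix. Elasticsearch expands that prefix to at most max_expansions terms, 50 by default. Below is a simplified Python sketch over a document's terms in position order. phrase_prefix_matches is a hypothetical name, and the sketch ignores the expansion limit.

```python
def phrase_prefix_matches(doc_terms, query_terms):
    """doc_terms: a document's terms in position order; query_terms: at least one term.
    All query terms but the last must match exactly and consecutively; the last is a prefix."""
    *exact, prefix = query_terms
    n = len(query_terms)
    for start in range(len(doc_terms) - n + 1):
        window = doc_terms[start:start + n]
        if window[:-1] == exact and window[-1].startswith(prefix):
            return True
    return False


print(phrase_prefix_matches(["李", "强"], ["李"]))  # True
```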

Adding an analyzer to an existing index

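# Close the target index; it cannot be read from or written to while closed
POST /{index}/_close
# Add the new analyzer (ik_word) to the index settings
PUT /{index}/_settings
{ "settings": { "analysis": { "analyzer": { "ik_word": { "tokenizer": "ik_max_word" } } } } }
# Reopen the index
POST /{index}/_open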

Listing installed analysis plugins

GET /_cat/plugins?v

Response

name      component       version
es_node01 analysis-ik     7.10.1
es_node01 analysis-pinyin 7.10.1
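The ?v output is a whitespace-aligned table with a header row, so checking for a plugin from a script is easy. Here is a minimal Python sketch; parse_cat is a hypothetical helper, and it assumes no cell contains spaces.

```python
def parse_cat(text):
    """Parse `_cat/...?v` output: first line is the header, columns are whitespace-separated."""
    lines = [line.split() for line in text.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


SAMPLE = """name      component       version
es_node01 analysis-ik     7.10.1
es_node01 analysis-pinyin 7.10.1"""

installed = {row["component"] for row in parse_cat(SAMPLE)}
print("analysis-ik" in installed)  # True
```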

浙公网安备 33010602011771号
