sparksql指南:https://hudi.apache.org/cn/docs/0.7.0/quick-start-guide

flinksql指南:https://hudi.apache.org/cn/docs/0.9.0/flink-quick-start-guide

FSpark读hudi表

set runner.support.reading-hudi=true;

spark-shell客户端启动命令

spark-shell \ --jars /app/spark-3.2.1-bin-hadoop2.7/hudijars/hudi-spark3.2-bundle_2.12-0.12.0.jar \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf 'spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog' \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'

Scala终端

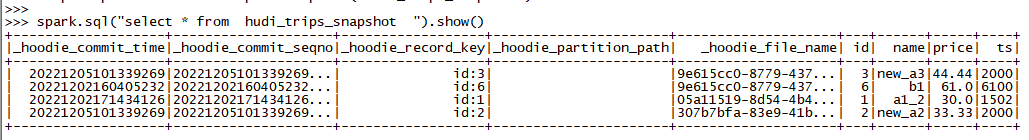

import org.apache.hudi.QuickstartUtils._ import scala.collection.JavaConversions._ import org.apache.spark.sql.SaveMode._ import org.apache.hudi.DataSourceReadOptions._ import org.apache.hudi.DataSourceWriteOptions._ import org.apache.hudi.config.HoodieWriteConfig._ import org.apache.hudi.common.model.HoodieRecord val tableName = "hudi_mor_tbl" val basePath="hdfs://test-cluster/user/hive/warehouse/sfsy/DM/dm_ezjc_gate/hudi_mor_tbl" val dataGen = new DataGenerator val roViewDF = spark. read. format("org.apache.hudi"). load(basePath + "/*") //load(basePath) 如果使用 "/partitionKey=partitionValue" 文件夹命名格式,Spark将自动识别分区信息 roViewDF.registerTempTable("hudi_ro_table") spark.sql("select _hoodie_commit_time, _hoodie_commit_seqno, _hoodie_record_key, _hoodie_partition_path, _hoodie_file_name, id, name, price, ts from hudi_ro_table where price < 40.0").show() spark.sql("select * from hudi_ro_table").show() val tripsSnapshotDF = spark. read. format("hudi"). load(basePath) tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot") spark.sql("select * from hudi_trips_snapshot ").show()

--******************* select * from dm_ezjc_gate.hudi_cow_pt_tbl; -- 一定要知道旧记录的时间戳,支持不同时间格式 select * from dm_ezjc_gate.hudi_cow_pt_tbl timestamp as of '20221202172406232' where id = 1; select * from dm_ezjc_gate.hudi_cow_pt_tbl timestamp as of '2022-12-02 17:24:06.232' where id = 1; //val tableName = "hudi_cow_pt_tbl" val basePath="hdfs://test-cluster/user/hive/warehouse/sfsy/DM/dm_ezjc_gate/hudi_cow_pt_tbl" // val dataGen = new DataGenerator val tripsSnapshotDF =spark.read. format("hudi"). //option("as.of.instant", "20221202172406232"). option("as.of.instant", "2022-12-02 17:24:06.232"). load(basePath) tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot") spark.sql("select * from hudi_trips_snapshot ").show()

Pyspark客户端

pyspark \ --jars /app/spark-3.2.1-bin-hadoop2.7/hudijars/hudi-spark3.2-bundle_2.12-0.12.0.jar \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf 'spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog' \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'

basePath = "hdfs://test-cluster/user/hive/warehouse/sfsy/DM/dm_ezjc_gate/hudi_mor_tbl" tripsSnapshotDF = spark. \ read. \ format("hudi"). \ load(basePath) # load(basePath) use "/partitionKey=partitionValue" folder structure for Spark auto partition discovery tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot") spark.sql("select * from hudi_trips_snapshot ").show()

spark-sql客户端启动命令

spark-sql \ --jars /app/spark-3.2.1-bin-hadoop2.7/hudijars/hudi-spark3.2-bundle_2.12-0.12.0.jar \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' \ --conf 'spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog'

无分区,示例【MOR】:创建、插入、更新、查询

create table dm_ezjc_gate.hudi_mor_tbl ( id int, name string, price double, ts bigint ) using hudi tblproperties ( type = 'mor', primaryKey = 'id', preCombineField = 'ts' ); insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1', 20, 1000; insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1', 30, 1000; insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a2', 30, 1500; insert into dm_ezjc_gate.hudi_mor_tbl select 3, 'a1', 20, 1000; insert into dm_ezjc_gate.hudi_mor_tbl select 6, 'b1', 60, 6000; insert into dm_ezjc_gate.hudi_mor_tbl select 6, 'b1', 61, 6100; select * from dm_ezjc_gate.hudi_mor_tbl;

| _hoodie_commit_time | _hoodie_commit_seqno | _hoodie_record_key | _hoodie_partition_path | _hoodie_file_name | id | name | price | ts |

| 20221202143737144 | 20221202143737144_0_2 | id:1 | 9d8d67bf-3133-4c06-91c9-84b01945a366-0 | 1 | a2 | 30 | 1500 | |

| 20221202143741904 | 20221202143741904_0_0 | id:3 | 2e4d1d10-01ef-43ef-a656-a4a62c4b1f97-0_0-164-5610_20221202143741904.parquet | 3 | a1 | 20 | 1000 | |

| 20221202155854682 | 20221202155854682_0_1 | id:6 | 2e4d1d10-01ef-43ef-a656-a4a62c4b1f97-0 | 2e4d1d10-01ef-43ef-a656-a4a62c4b1f97-0 | b1 | 61 | 6100 |

【示例】带分区【COW】:创建、插入、更新、查询

create table if not exists dm_ezjc_gate.hudi_cow_pt_tbl ( id bigint, name string, price double, ts bigint, dt string, hh string ) using hudi tblproperties ( type = 'cow', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh);

或者CTAS (Create Table As Select)

create table dm_ezjc_gate.hudi_cow_pt_tbl using hudi tblproperties ( type = 'cow', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh) as select 1 as id, 'a1' as name, 10 as price, 1000 as ts, '2021-12-01' as dt,'15' as hh;

两种插入方式,动态分区和静态分区

insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '10' as hh; insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1' as name, 20.6 as price, 1000 as ts, '2021-12-01' as dt, '15' as hh; insert into dm_ezjc_gate.hudi_cow_pt_tbl partition(dt = '2021-12-09', hh='11') select 2, 'a2', 33.3, 1000;

| _hoodie_commit_time | _hoodie_commit_seqno | _hoodie_record_key | _hoodie_partition_path | _hoodie_file_name | id | name | price | ts |

| 20221202154718368 | 20221202154718368_0_0 | id:1 | dt=2021-12-01/hh=15 | 2a6a823b-e0e3-4c40-9c5c-c25cd9427ca8-0_0-19-914_20221202154718368.parquet | 1 | a1 | 20.6 | 1000 |

| 20221202154545238 | 20221202154545238_0_0 | id:1 | dt=2021-12-09/hh=10 | 833adf43-85b1-4cc5-90e6-9b6736285be5-0_0-125-3698_20221202154545238.parquet | 1 | a1 | 20.6 | 1000 |

| 20221202154808206 | 20221202154808206_0_0 | id:2 | dt=2021-12-09/hh=11 | 6fc96437-877e-4955-b260-3a43a078ba88-0_0-61-1862_20221202154808206.parquet | 2 | a2 | 33.3 | 1000 |

COW和MOR两种模式的区别

-- cow支持单分区更新,不支持多分区

-- cow单分区内,更新数据对ts没有要求,直接用当前数据覆盖旧数据

-- cow多分区,select会异常

-- mor支持多分区写入,但不支持更新:

-- mor模式,不支持分区对数据进行更新,select时会导致报错

-- mor支持多分区写入,多分区写入可select查询

- mor模式,只支持非分区表的数据更新

- ts值一定要比旧数据大,才能完成mor

drop table if exists dm_ezjc_gate.hudi_cow_pt_tbl; create table if not exists dm_ezjc_gate.hudi_cow_pt_tbl ( id bigint, name string, price DECIMAL(3,1), ts bigint, dt string, hh string ) using hudi tblproperties ( type = 'cow', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh); create table dm_ezjc_gate.hudi_cow_pt_tbl using hudi tblproperties ( type = 'cow', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh) as select 1 as id, 'a1' as name, 10.6 as price, 1000 as ts, '2021-12-01' as dt, '15' as hh; insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '10' as hh; -- 更新数据对ts没有要求,直接用当前数据覆盖旧数据 insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1_1' as name, 20.6 as price, 1001 as ts, '2021-12-09' as dt, '10' as hh; -- 更新数据对ts没有要求,直接用当前数据覆盖旧数据 insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1_0' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '10' as hh; -- 更新数据对ts没有要求,直接用当前数据覆盖旧数据 insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 1 as id, 'a1_0_0' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '10' as hh; -- cow支持更新,不支持多分区 insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 2 as id, 'a2' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '11' as hh; select * from dm_ezjc_gate.hudi_cow_pt_tbl;

drop table if exists dm_ezjc_gate.hudi_mor_pt_tbl; create table if not exists dm_ezjc_gate.hudi_mor_pt_tbl ( id bigint, name string, price DECIMAL(3,1), ts bigint, dt string, hh string ) using hudi tblproperties ( type = 'mor', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh); create table dm_ezjc_gate.hudi_mor_pt_tbl using hudi tblproperties ( type = 'mor', primaryKey = 'id', preCombineField = 'ts' ) partitioned by (dt, hh) as select 1 as id, 'a1' as name, 10.6 as price, 1000 as ts, '2021-12-01' as dt, '15' as hh; -- mor支持多分区,但不支持更新: insert overwrite dm_ezjc_gate.hudi_mor_pt_tbl partition (dt, hh) select 1 as id, 'a1' as name, 20.6 as price, 1000 as ts, '2021-12-09' as dt, '10' as hh; -- mor模式,不支持分区对数据进行更新,select时会导致报错 insert into dm_ezjc_gate.hudi_mor_pt_tbl partition (dt, hh) select 1 as id, 'a1_6' as name, 20.6 as price, 1006 as ts, '2021-12-09' as dt, '10' as hh;

-- mor模式,只支持非分区表的数据更新 create table dm_ezjc_gate.hudi_mor_tbl ( id int, name string, price double, ts bigint ) using hudi tblproperties ( type = 'mor', primaryKey = 'id', preCombineField = 'ts' ); insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1', 20, 1000; select * from dm_ezjc_gate.hudi_mor_tbl; -- ts值一定要比旧数据大,才能完成mor insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1_1', 20, 1001; -- ts值一定要比旧数据大,才能完成mor insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1_1', 30, 1501; -- ts值一定要比旧数据大,才能完成mor insert into dm_ezjc_gate.hudi_mor_tbl select 1, 'a1_2', 30, 1502;

merge into

create table dm_ezjc_gate.merge_source (id int, name string, price double, ts bigint) using hudi tblproperties (primaryKey = 'id', preCombineField = 'ts'); insert into dm_ezjc_gate.merge_source values (1, "old_a1", 22.22, 900), (2, "new_a2", 33.33, 2000), (3, "new_a3", 44.44, 2000); merge into dm_ezjc_gate.hudi_mor_tbl as target using dm_ezjc_gate.merge_source as source on target.id = source.id when matched then update set * when not matched then insert * ;

增、删、改(根据条件)

create table dm_ezjc_gate.merge_source2 (id int, name string, flag string, dt string, hh string) using parquet; insert into dm_ezjc_gate.merge_source2 values (1, "new_a1", 'update', '2021-12-09', '10'), (2, "new_a2", 'delete', '2021-12-09', '11'), (3, "new_a3", 'insert', '2021-12-09', '12'); merge into dm_ezjc_gate.hudi_cow_pt_tbl as target using ( select id, name, '1000' as ts, flag, dt, hh from dm_ezjc_gate.merge_source2 ) source on target.id = source.id when matched and flag != 'delete' then update set id = source.id, name = source.name, ts = source.ts, dt = source.dt, hh = source.hh when matched and flag = 'delete' then delete when not matched then insert (id, name, price, ts, dt, hh) values(source.id, source.name, 66.3,source.ts, source.dt, source.hh) ;

历史版本查询

select * from dm_ezjc_gate.hudi_cow_pt_tbl; -- 一定要知道旧记录的时间戳,支持不同时间格式 select * from dm_ezjc_gate.hudi_cow_pt_tbl timestamp as of '20221202172406232' where id = 1; select * from dm_ezjc_gate.hudi_cow_pt_tbl timestamp as of '2022-12-02 17:24:06.232' where id = 1;

update更新

insert into dm_ezjc_gate.hudi_cow_pt_tbl partition (dt, hh) select 2 as id, 'a2' as name, 20.2 as price, 2000 as ts, '2021-12-09' as dt, '10' as hh; --更新数据 update dm_ezjc_gate.hudi_cow_pt_tbl set name = 'a2_2', ts = 1001 where id = 2; -- 支持其他条件 update dm_ezjc_gate.hudi_cow_pt_tbl set ts = 2001 where dt = '2021-12-09';

Overwrite

-- insert overwrite non-partitioned table insert overwrite hudi_mor_tbl select 99, 'a99', 20.0, 900; insert overwrite hudi_cow_nonpcf_tbl select 99, 'a99', 20.0; -- insert overwrite partitioned table with dynamic partition insert overwrite table hudi_cow_pt_tbl select 10, 'a10', 1100, '2021-12-09', '10'; -- insert overwrite partitioned table with static partition insert overwrite hudi_cow_pt_tbl partition(dt = '2021-12-09', hh='12') select 13, 'a13', 1100;

#!/bin/sh export HADOOP_USER_NAME=mario startDate=`date -d '181 days ago' '+%Y%m%d'` inc_day_1=`date -d '1 days ago' '+%Y%m%d'` today=`date -d '0 days ago' '+%Y%m%d'` inc_day=`date -d '1 days ago' '+%Y/%m/%d'` inc_day_2=`date -d '2 days ago' '+%Y%m%d'` inc_day_30=`date -d '30 days ago' '+%Y%m%d'` ########################################################################### # Copyright 2022 sf-express.com Inc. All rights reserved. # Purpose: 解析出xx数据并折算成rmb # Ename: tmp_dwd.dwd_ssss_hudi_info_02 # HISTORY # ########################################################################### # ID DATE MODIFY REASON # 1 2022-08-02 01396497 Create ########################################################################### spark-sql --master yarn --name tmp_dwd.xxxxxxx_kafka_hudi_tmp_03 \ --executor-memory 20g \ --num-executors 250 \ --executor-cores 10 \ --driver-cores 2 \ --driver-memory 8g \ --queue root.${queuename} \ --jars hdfs://sfbdp1/tmp/rt_dw/jars/hudi-spark-bundle_2.11-0.9.0-SNAPSHOT_c.jar \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' \ --conf spark.driver.maxResultSize=2g \ --conf spark.rdd.compress=true \ --conf spark.defalut.parallelism=2000 \ --conf spark.kryoserializer.buffer.max=512m \ --conf spark.sql.hive.convertMetastoreParquet=false \ --conf spark.task.maxFailures=4 \ --conf spark.sql.shuffle.partitions=2000 \ --conf spark.dynamicAllocation.maxExecutors=800 \ --conf yarn.nodemanager.vmem-check-enable=false \ --conf spark.yarn.driver.memoryOverhead=1024 \ --conf spark.yarn.executor.memoryOverhead=5120 \ --conf spark.yarn.max.executor.failures=100 \ --conf spark.shuffle.service.enabled=false \ --conf spark.dynamicAllocation.enabled=false \ --conf spark.memory.fraction=0.4 \ --conf spark.memory.storageFraction=0.5 \ --conf spark.speculation=false \ --conf spark.speculation.quantile=0.8 \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf "spark.executor.extraJavaOptions=-XX:+UseG1GC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+PrintGCApplicationStoppedTime -XX:+PrintGCApplicationConcurrentTime -XX:+PrintTenuringDistribution -XX:+HeapDumpOnOutOfMemoryError" \ -e " add jar hdfs://sfbdp1/tmp/udf/01368063/StringToJsonArray.jar; create temporary function string_to_jsonarray as 'com.sf.bdp.function.StringToJsonArray'; drop table if exists tmp_dwd.xxxxxxx_kafka_hudi_tmp_03; create table if not exists tmp_dwd.xxxxxxx_kafka_hudi_tmp_03 stored as parquet as select a.ssss_no, round(sum(if(a.feeTypeCode = '5', 0, a.feeAmt) * (case when a.currency_Code = 'CNY' then 1 else b.rate end)), 3) as all_fee_rmb, round(sum(if(a.feeTypeCode = '1', a.feeAmt, 0) * (case when a.currency_Code = 'CNY' then 1 else b.rate end)), 3) as freight_rmb, round(sum(if(a.feeTypeCode = '5', a.feeAmt, 0) * (case when a.currency_Code = 'CNY' then 1 else b.rate end)), 3) as cod_fee_rmb, round(sum(if(a.feeTypeCode in ('3','17','23','27'), a.feeAmt, 0)), 3) as cons_fee, round(sum(nvl(a.feeAmtInd,0)* (case when a.currency_Code = 'CNY' then 1 else b.rate end)), 3) as fee_ind_rmd, max(case when feeTypeCode='1' then case when settlementTypeCode='1' and paymentTypeCode='1' then '寄付现结' when settlementTypeCode='2' and paymentTypeCode='1' then '寄付月结' when settlementTypeCode='1' and paymentTypeCode='2' then '到付现结' when settlementTypeCode='2' and paymentTypeCode='2' then '到付月结' when settlementTypeCode='1' and paymentTypeCode='3' and paymentChangeTypeCode='1' then '寄付转第三方现结' when settlementTypeCode='2' and paymentTypeCode='3' and paymentChangeTypeCode='1' then '寄付转第三方月结' when settlementTypeCode='1' and paymentTypeCode='3' and paymentChangeTypeCode='2' then '到付转第三方现结' when settlementTypeCode='2' and paymentTypeCode='3' and paymentChangeTypeCode='2' then '到付转第三方月结' end end) as comb_payment_type_code from (select ssssno as ssss_no, upper(get_json_object(t.operationssssFee, '$.currencyCode')) as currency_Code, get_json_object(t.operationssssFee, '$.feeTypeCode') as feeTypeCode, get_json_object(t.operationssssFee, '$.feeAmt') as feeAmt, get_json_object(t.operationssssFee, '$.paymentChangeTypeCode') as paymentChangeTypeCode, get_json_object(t.operationssssFee, '$.settlementTypeCode') as settlementTypeCode, get_json_object(t.operationssssFee, '$.paymentTypeCode') as paymentTypeCode, get_json_object(t.operationssssFee, '$.feeAmtInd') as feeAmtInd, substr(consignedTm, 1, 7) as rate_month from tmp_dwd.xxxxxxx_kafka_hudi_tmp_01 lateral view explode(string_to_jsonarray(operationssssFeeList)) t as operationssssFee) a left join tmp_dwd.currency_rate_month_hudi_tmp b on a.currency_Code = b.currency_code and a.rate_month = b.rate_month group by a.ssss_no; "

flinksql指南:https://hudi.apache.org/cn/docs/0.9.0/flink-quick-start-guide

CREATE TABLE products ( id INT, name STRING, description STRING, PRIMARY KEY(id) NOT ENFORCED ) WITH ( 'connector' = 'mysql-cdc', 'datasource-id' = '2**5', 'database-name' = 'hudi', 'table-name' = 'products', 'scan.startup.mode' = 'INITIAL' ); CREATE TABLE hudi_products ( id INT, name STRING, description STRING, PRIMARY KEY(id) NOT ENFORCED ) WITH ( 'hive_sync.enable' = 'true', 'hive.cluster' = 'bdp', 'hive_sync.db' = 'dm_xx', 'hive_sync.mode' = 'jdbc', 'hive_sync.table' = 'dm_products', 'connector' = 'hudi', 'index.type' = 'BUCKET_INDEX', 'compaction.async.enabled'='true', 'compaction.schedule.enabled'='true', 'compaction.trigger.strategy'='num_or_time', 'compaction.delta_commits'='1', 'compaction.delta_seconds'='30', 'compaction.max_memory' = '500', 'compaction.tasks'='64', 'hoodie.logfile.max.size'='262144000', 'table.type' = 'MERGE_ON_READ' ); insert into hudi_products select * from products;

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· 一文读懂知识蒸馏

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下