Service

Service overview

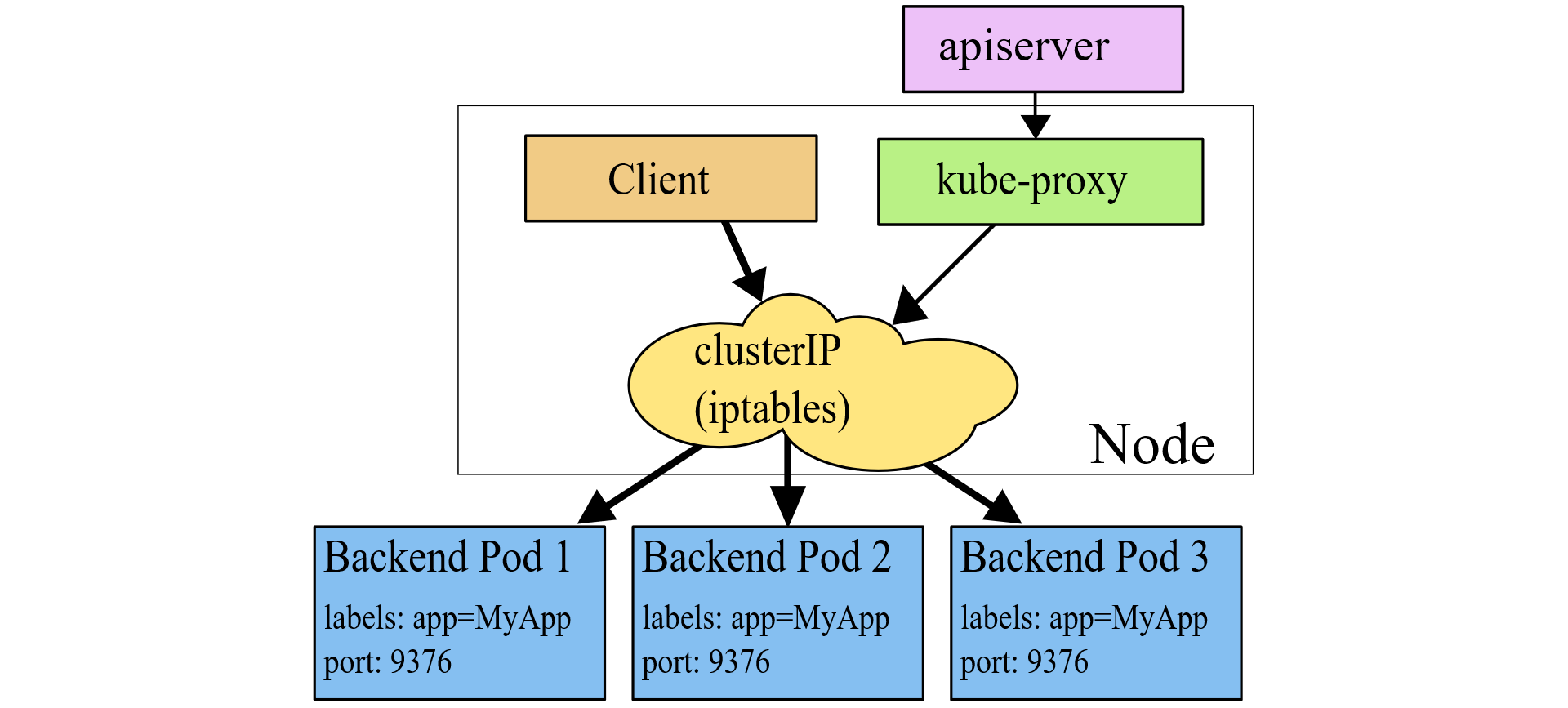

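A Service is a policy for accessing a logical group of Pods. It usually selects that group with a Label Selector.

A Service provides load balancing, with one limitation: it balances only at layer 4 (TCP/UDP) and has no layer-7 features. Sometimes we need richer matching rules to route requests (for example by HTTP host or path), and layer-4 load balancing cannot do that.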

service默认IP类型为主要分为:

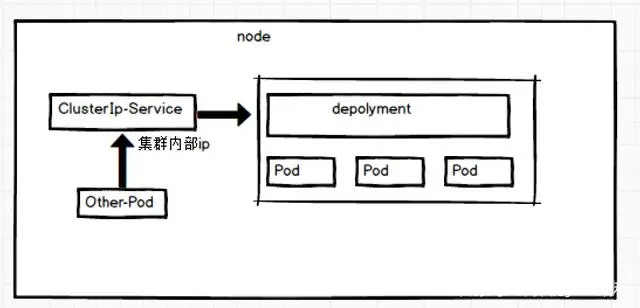

- ClusterIP:主要是为集群内部提供访问服务的 (默认类型)

- NodePort:可以被集群外部所访问,访问方式为 宿主机:端口号

- LoadBalancer:在NodePort的基础上,借助cloud provider(云提供商)创建一个外部负载均衡器并将请求转发到NodePort

- ExternalName: 把集群外部的访问引入到集群内部来,在集群内部直接使用,没有任何代理被创建
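The two types that are not demonstrated later in these notes can be sketched as follows. The Service names (web-lb, external-db), the selector app: web and the host db.example.com are hypothetical placeholders. A LoadBalancer Service only receives an external IP when the cluster has a cloud provider (or an equivalent controller) able to provision one.

```yaml
# Hypothetical LoadBalancer Service: the cloud provider provisions an external
# load balancer that forwards traffic to this Service's node ports.
apiVersion: v1
kind: Service
metadata:
  name: web-lb            # hypothetical name
  namespace: default
spec:
  type: LoadBalancer
  selector:
    app: web              # hypothetical Pod label
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
---
# Hypothetical ExternalName Service: cluster DNS answers lookups of
# external-db.default.svc.cluster.local with a CNAME to db.example.com.
# There is no selector, no ClusterIP and no proxying.
apiVersion: v1
kind: Service
metadata:
  name: external-db       # hypothetical name
  namespace: default
spec:
  type: ExternalName
  externalName: db.example.com   # hypothetical external host
```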

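Once a Service is created, Kubernetes automatically assigns it an available ClusterIP, and that ClusterIP does not change for the whole lifetime of the Service. The Service is linked to its backend Pods through its label selector, and kube-proxy watches the Service and its endpoints. When a backend Pod is recreated, it rejoins the Service automatically because it carries the matching labels, so clients never lose track of the backends.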

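ClusterIP

The default type. The Service is assigned a virtual IP that is reachable only from inside the cluster.

When a Service with a selector is created, an Endpoints object with the same name is created along with it. If the Pods define a readinessProbe, a Pod whose readiness check fails is removed from the ready addresses in the Endpoints list, so traffic is not sent to Pods that fail the health check.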
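As a minimal sketch, this is how the container section of the nginx-svc Deployment from the demo below could declare a readinessProbe. The probe path and the timing values are assumptions chosen for illustration, not values from the original setup.

```yaml
# Fragment of spec.template.spec for the nginx-svc Deployment (assumed values).
containers:
- name: nginx-svc
  image: nginx
  ports:
  - containerPort: 80
    protocol: TCP
  readinessProbe:
    httpGet:
      path: /               # nginx serves index.html at this path
      port: 80
    initialDelaySeconds: 5  # wait 5s after start before the first probe
    periodSeconds: 10       # probe every 10s
    failureThreshold: 3     # 3 consecutive failures mark the Pod NotReady
```

While the probe is failing, kubectl get endpoints nginx-svc -o yaml lists that Pod's IP under notReadyAddresses instead of addresses, and kube-proxy stops routing to it.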

ClusterIP demo

```shell
[root@master svc]# cat nginx-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-svc
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-svc
  template:
    metadata:
      labels:
        app: nginx-svc
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-svc
        ports:
        - containerPort: 80
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-svc
  type: ClusterIP
[root@master svc]# kubectl create -f nginx-svc.yaml
deployment.apps/nginx-svc created
service/nginx-svc created
[root@master svc]# kubectl get deploy nginx-svc -o wide
NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
nginx-svc 3/3 3 3 2m44s nginx-svc 10.0.0.80:5000/hpa-example:v1 app=nginx-svc
[root@master svc]# kubectl get pod --show-labels
NAME READY STATUS RESTARTS AGE LABELS
nginx-svc-6cf9585495-2tkp9 1/1 Running 0 5m51s app=nginx-svc,pod-template-hash=6cf9585495
nginx-svc-6cf9585495-62fps 1/1 Running 0 5m51s app=nginx-svc,pod-template-hash=6cf9585495
nginx-svc-6cf9585495-869zh 1/1 Running 0 5m51s app=nginx-svc,pod-template-hash=6cf9585495
[root@master svc]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-svc-6cf9585495-2tkp9 1/1 Running 0 2m52s 10.244.1.52 node1 <none> <none>
nginx-svc-6cf9585495-62fps 1/1 Running 0 2m52s 10.244.1.54 node1 <none> <none>
nginx-svc-6cf9585495-869zh 1/1 Running 0 2m52s 10.244.1.53 node1 <none> <none>
[root@master svc]# kubectl get svc nginx-svc -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
nginx-svc ClusterIP 10.107.38.65 <none> 80/TCP 3m26s app=nginx-svc
[root@master svc]# kubectl describe svc nginx-svc
Name: nginx-svc
Namespace: default
Labels: <none>
Annotations: <none>
Selector: app=nginx-svc
Type: ClusterIP
IP Families: <none>
IP: 10.107.38.65
IPs: 10.107.38.65
Port: <unset> 80/TCP
TargetPort: 80/TCP
Endpoints: 10.244.1.52:80,10.244.1.53:80,10.244.1.54:80
Session Affinity: None
Events: <none>
```

```shell
## Scale out the nginx-svc Deployment
[root@master svc]# kubectl scale deploy nginx-svc --replicas=4
deployment.apps/nginx-svc scaled
[root@master svc]# kubectl get deploy
NAME READY UP-TO-DATE AVAILABLE AGE
nfs-pvc 0/0 0 0 8d
[root@master svc]# kubectl get pod --show-labels
NAME READY STATUS RESTARTS AGE LABELS
nginx-svc-6cf9585495-2tkp9 1/1 Running 0 8m25s app=nginx-svc,pod-template-hash=6cf9585495
nginx-svc-6cf9585495-62fps 1/1 Running 0 8m25s app=nginx-svc,pod-template-hash=6cf9585495
nginx-svc-6cf9585495-869zh 1/1 Running 0 8m25s app=nginx-svc,pod-template-hash=6cf9585495
nginx-svc-6cf9585495-dqwv2 1/1 Running 0 24s app=nginx-svc,pod-template-hash=6cf9585495
[root@master svc]# kubectl describe svc nginx-svc
Name: nginx-svc
Namespace: default
Labels: <none>
Annotations: <none>
Selector: app=nginx-svc
Type: ClusterIP
IP Families: <none>
IP: 10.107.38.65
IPs: 10.107.38.65
Port: <unset> 80/TCP
TargetPort: 80/TCP
Endpoints: 10.244.1.52:80,10.244.1.53:80,10.244.1.54:80 + 1 more...
Session Affinity: None
Events: <none>
[root@master svc]# kubectl get endpoints nginx-svc
NAME ENDPOINTS AGE
nginx-svc 10.244.1.52:80,10.244.1.53:80,10.244.1.54:80 + 1 more... 10m
```

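Service discovery

In a Kubernetes cluster, components can communicate with each other through the names of the Services they define.

Create the Services and Deployments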

```shell
[root@master svc]# cat nginx-svc-01.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-svc-01
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-svc-01
  template:
    metadata:
      labels:
        app: nginx-svc-01
    spec:
      containers:
      - name: nginx-svc-01
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-01
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-svc-01
  type: ClusterIP
[root@master svc]# cat nginx-svc-02.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-svc-02
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-svc-02
  template:
    metadata:
      labels:
        app: nginx-svc-02
    spec:
      containers:
      - name: nginx-svc-02
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-02
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-svc-02
  type: ClusterIP
[root@master svc]#
[root@master svc]# kubectl delete -f nginx-svc-02.yaml
deployment.apps "nginx-svc-02" deleted
service "nginx-svc-02" deleted
[root@master svc]# kubectl delete -f nginx-svc-01.yaml
deployment.apps "nginx-svc-01" deleted
service "nginx-svc-01" deleted
[root@master svc]#
```

```shell
[root@master svc]# kubectl create -f nginx-svc-01.yaml
deployment.apps/nginx-svc-01 created
service/nginx-svc-01 created
[root@master svc]# kubectl create -f nginx-svc-02.yaml
deployment.apps/nginx-svc-02 created
service/nginx-svc-02 created
[root@master svc]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-svc-01-7d486684bf-rvjdz 1/1 Running 0 100s 10.244.1.73 node1 <none> <none>
nginx-svc-02-559dbd94d5-nf7wq 1/1 Running 0 98s 10.244.1.74 node1 <none> <none>
[root@master svc]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 37d
nginx-svc-01 ClusterIP 10.103.35.104 <none> 80/TCP 47s
nginx-svc-02 ClusterIP 10.108.241.163 <none> 80/TCP 45s
```

Modify index.html in each Pod for testing

```shell
[root@master svc]# kubectl exec -it nginx-svc-01-7d486684bf-rvjdz -- /bin/bash
root@nginx-svc-01-7d486684bf-rvjdz:/# echo "test------svc--01" > /usr/share/nginx/html/index.html
root@nginx-svc-01-7d486684bf-rvjdz:/# cat /usr/share/nginx/html/index.html
test------svc--01
root@nginx-svc-01-7d486684bf-rvjdz:/# exit
exit
[root@master svc]# kubectl exec -it nginx-svc-02-559dbd94d5-nf7wq -- /bin/bash
root@nginx-svc-02-559dbd94d5-nf7wq:/# echo "test------svc--02" > /usr/share/nginx/html/index.html
root@nginx-svc-02-559dbd94d5-nf7wq:/# cat /usr/share/nginx/html/index.html
test------svc--02
root@nginx-svc-02-559dbd94d5-nf7wq:/# exit
exit
[root@master svc]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-svc-01-7d486684bf-rvjdz 1/1 Running 0 5m 10.244.1.73 node1 <none> <none>
nginx-svc-02-559dbd94d5-nf7wq 1/1 Running 0 4m58s 10.244.1.74 node1 <none> <none>
[root@master svc]# curl 10.244.1.73
test------svc--01
[root@master svc]# curl 10.244.1.74
test------svc--02
```

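Pod IPs are not fixed, and a ClusterIP changes whenever its Service is deleted and recreated, but the Service name stays the same. Because manifests refer to a name rather than an IP, they can also be reused unchanged in another cluster. Components should therefore call each other by Service name: this avoids maintaining large numbers of IPs and keeps the service YAML templates simpler.

Test calling the Services by name from inside a Pod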

```shell
[root@master svc]# kubectl exec -it nginx-svc-01-7d486684bf-rvjdz -- /bin/bash
root@nginx-svc-01-7d486684bf-rvjdz:/# curl nginx-svc-01
test------svc--01
root@nginx-svc-01-7d486684bf-rvjdz:/# curl nginx-svc-02
test------svc--02
root@nginx-svc-01-7d486684bf-rvjdz:/# exit
exit
[root@master svc]# kubectl exec -it nginx-svc-02-559dbd94d5-nf7wq -- /bin/bash
root@nginx-svc-02-559dbd94d5-nf7wq:/# curl nginx-svc-01
test------svc--01
root@nginx-svc-02-559dbd94d5-nf7wq:/# curl nginx-svc-02
test------svc--02
```

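How service discovery is implemented:

CoreDNS is a DNS server written in Go and built as a chain of plugins. It is a CNCF project, designed to be fast and easy to extend.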

```shell
[root@master svc]# kubectl -n kube-system get po -o wide|grep dns
coredns-7f89b7bc75-flxwb 1/1 Running 5 29d 10.244.1.64 node1 <none> <none>
coredns-7f89b7bc75-l5qp4 1/1 Running 5 29d 10.244.1.63 node1 <none> <none>
# Check the Pod's DNS configuration
[root@master svc]# kubectl exec -it nginx-svc-02-559dbd94d5-nf7wq -- /bin/bash
root@nginx-svc-02-559dbd94d5-nf7wq:/# cat /etc/resolv.conf
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
root@nginx-svc-02-559dbd94d5-nf7wq:/# exit
exit
# 10.96.0.10 is the ClusterIP of the kube-dns Service, which fronts the CoreDNS Pods
[root@master svc]# kubectl -n kube-system get svc|grep dns
kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 37d
```

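When a Pod starts, the ClusterIP of the kube-dns Service is written into the Pod's /etc/resolv.conf as its nameserver, together with search domains for the Pod's namespace (default.svc.cluster.local above). That is why a short name such as nginx-svc-02 resolves inside the same namespace. To reach a Service in a different namespace by name, append the namespace: service_name.namespace (or the fully qualified service_name.namespace.svc.cluster.local).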
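For example, a Pod in another namespace could refer to nginx-svc-02 in default by either of the names below. The namespace test, the Pod name dns-client, the busybox image and the BACKEND_URL variables are hypothetical; the sketch also assumes the cluster domain is cluster.local, as in the resolv.conf shown above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-client          # hypothetical Pod name
  namespace: test           # hypothetical namespace; it must already exist
spec:
  containers:
  - name: client
    image: busybox          # assumption: any image with a shell and wget works
    command: ["sleep", "3600"]
    env:
    # service_name.namespace: completed to the full name via the
    # svc.cluster.local search domain in the Pod's resolv.conf
    - name: BACKEND_URL
      value: http://nginx-svc-02.default
    # Fully qualified form, which does not depend on search domains
    - name: BACKEND_URL_FQDN
      value: http://nginx-svc-02.default.svc.cluster.local
```

Once the Pod is running, kubectl -n test exec dns-client -- wget -qO- http://nginx-svc-02.default should return the test------svc--02 page. A bare nginx-svc-02 would fail here, because the first search domain for this Pod is test.svc.cluster.local.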

[root@master svc]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 37d

nginx-svc-01 ClusterIP 10.103.35.104 <none> 80/TCP 54m

nginx-svc-02 ClusterIP 10.108.241.163 <none> 80/TCP 54m

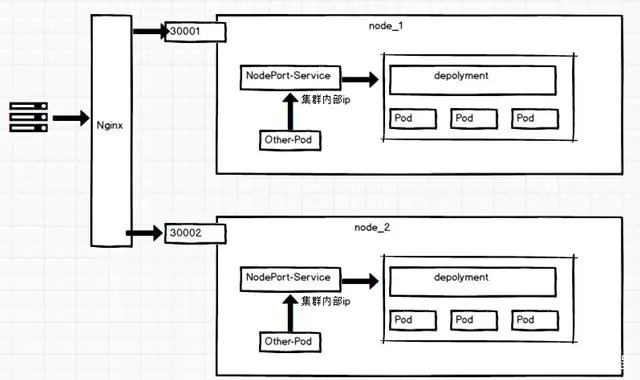

NodePort

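A ClusterIP is a virtual address that can only be reached from inside the Kubernetes cluster. One way to let clients outside the cluster reach an internal service is the NodePort type. By default the node port comes from the range 30000-32767; if you do not specify one, a port from that range is assigned at random.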
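When a fixed port is needed instead of a random one, it can be pinned with the nodePort field. This sketch reuses the nginx-node names from the demo below; 30080 is an arbitrary example value and must be free and inside the configured node port range.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-node
  namespace: default
spec:
  type: NodePort
  selector:
    app: nginx-node
  ports:
  - port: 80          # port on the ClusterIP
    targetPort: 80    # port on the container
    nodePort: 30080   # example value; omit it to get a random port from the range
    protocol: TCP
```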

To change the default port range, add the flag --service-node-port-range=10000-20000 to the kube-apiserver command in /etc/kubernetes/manifests/kube-apiserver.yaml.
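A sketch of where the flag goes, assuming a kubeadm-style static Pod manifest. Only the relevant part of the file is shown; every existing flag stays as it is. The kubelet watches the manifests directory and recreates the kube-apiserver Pod after the file is saved.

```yaml
# Fragment of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm layout).
spec:
  containers:
  - command:
    - kube-apiserver
    - --service-node-port-range=10000-20000   # new node port range
    # ...all existing kube-apiserver flags remain unchanged...
```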

NodePort demo

Create the Deployment and Service

```shell
[root@master svc]# cat nginx-nodeport.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-node
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-node
  template:
    metadata:
      labels:
        app: nginx-node
    spec:
      containers:
      - name: nginx-node
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-node
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-node
  type: NodePort
[root@master svc]# kubectl create -f nginx-nodeport.yaml
deployment.apps/nginx-node created
service/nginx-node created
```

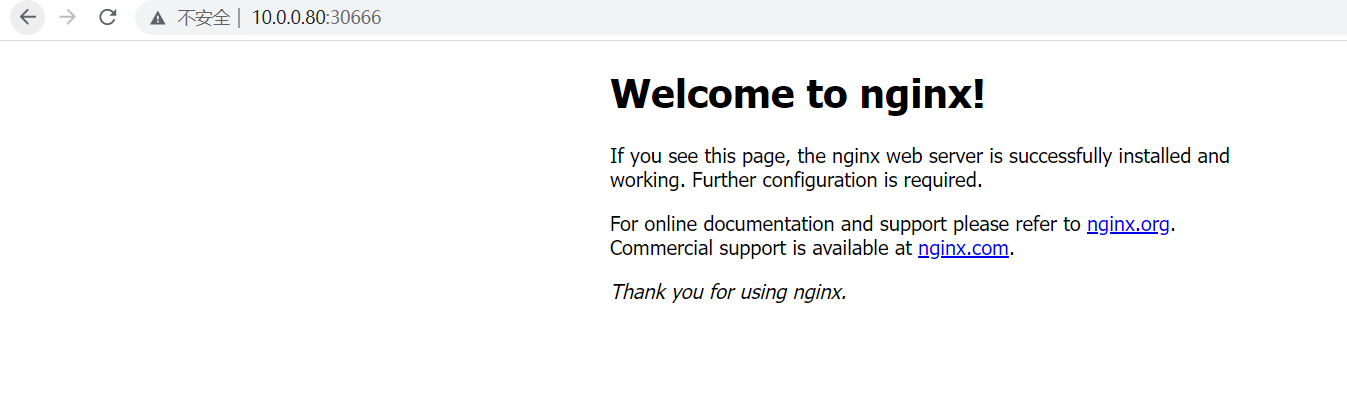

Check the Service and verify

```shell
[root@master svc]# kubectl get pod nginx-node-5d949765cc-67lbz -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-5d949765cc-67lbz 1/1 Running 0 43s 10.244.2.43 node2 <none> <none>
[root@master svc]# kubectl get svc|grep node
nginx-node NodePort 10.97.104.129 <none> 80:30666/TCP 13s
[root@master svc]# kubectl describe svc nginx-node
Name: nginx-node
Namespace: default
Labels: <none>
Annotations: <none>
Selector: app=nginx-node
Type: NodePort
IP Families: <none>
IP: 10.97.104.129
IPs: 10.97.104.129
Port: <unset> 80/TCP
TargetPort: 80/TCP
NodePort: <unset> 30666/TCP
Endpoints: 10.244.2.43:80
Session Affinity: None
External Traffic Policy: Cluster
Events: <none>
```

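Verify in a browser by opening http://<node IP>:30666. With External Traffic Policy: Cluster, the IP of any node works, not only node2 where the Pod runs.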

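Headless Service

Differences between a regular Service and a headless Service

- Service: an access policy for a group of Pods. It provides a ClusterIP for communication inside the cluster, plus load balancing and service discovery.

- Headless Service: a Service whose clusterIP is None. It needs no ClusterIP; its DNS name resolves directly to the IPs of the individual Pods. Headless Services are commonly used for stateful deployments with StatefulSets.

Note: a Service is given a ClusterIP by default so that clients can reach it through one address. A Service also lets Pods discover and access each other, and for that purpose they do not need a ClusterIP.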
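A minimal headless Service for the nginx-svc Pods from the ClusterIP demo could look like this; the name nginx-headless is an assumption. Setting clusterIP: None is what makes the Service headless.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless    # hypothetical name
  namespace: default
spec:
  clusterIP: None         # headless: no virtual IP, no kube-proxy rules
  selector:
    app: nginx-svc        # the Pods created in the ClusterIP demo
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
```

A DNS lookup of nginx-headless.default.svc.cluster.local from inside a Pod then returns one A record per ready Pod instead of a single ClusterIP.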

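Use cases for headless Services

- Projects or middleware with their own discovery mechanism, for example Kafka and ZooKeeper during leader election, where instances talk to each other directly by instance IP.

- Since no load balancing is needed, no ClusterIP is needed either. Without a ClusterIP, kube-proxy does not handle the Service, and Kubernetes does not set up any load balancing for it.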
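Headless Services are usually paired with a StatefulSet through its serviceName field, which gives every replica a stable DNS name of the form pod-name.service-name.namespace.svc.cluster.local. In this sketch the names web-headless and web and the label app: web are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-headless          # hypothetical name
  namespace: default
spec:
  clusterIP: None             # headless
  selector:
    app: web
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                   # hypothetical name
  namespace: default
spec:
  serviceName: web-headless   # must name the headless Service above
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```

The replicas are named web-0, web-1 and web-2, so a peer can address a specific instance as web-0.web-headless.default.svc.cluster.local, which is the kind of instance-to-instance addressing that leader election relies on.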

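How Services work

kube-proxy runs on every node. It watches the API Server for changes to Service objects and their endpoints, and implements forwarding by creating traffic routing rules on the node.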

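There are three proxy modes:

- Userspace: kube-proxy listens on a port in user space, all Service traffic is redirected to that port, and kube-proxy forwards it at the application layer. Every packet passes through user space, so performance is poor. iptables mode replaced it as the default in Kubernetes v1.2, and userspace mode was later deprecated and removed.

- iptables: the current default mode, implemented entirely with iptables. Service load balancing is done by iptables rules on each node. As the number of Services grows, performance drops significantly, because iptables matches rules linearly and rewrites the rule set in full on every update.

- IPVS: built on Netfilter like iptables, but it uses hash tables, so once the number of Services reaches a certain scale, hash lookups are clearly faster and Service performance improves. It was introduced in Kubernetes 1.8 and became stable (GA) in 1.11; the IPVS kernel modules must be loaded on the host.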
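As a sketch, IPVS mode is selected in the kube-proxy configuration. In a kubeadm cluster this configuration sits under the config.conf key of the kube-proxy ConfigMap in kube-system (kubectl -n kube-system edit configmap kube-proxy); other installers may store it elsewhere. Only the relevant fields are shown.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"        # an empty string means the platform default (iptables on Linux)
ipvs:
  scheduler: "rr"   # round robin; other IPVS schedulers such as "lc" also exist
```

The change only takes effect after the kube-proxy Pods restart (for example kubectl -n kube-system rollout restart daemonset kube-proxy), and only if the ip_vs kernel modules are available on every node.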

iptables mode diagram: