Part 3: Dubbo Service Export Source Code Analysis -- 3. Remote Export (Part 2)

Continuing from the previous part, this section analyzes the bind operation at step PTC2.2.1.1, Exchangers.bind(url, requestHandler), labeled BIND below:

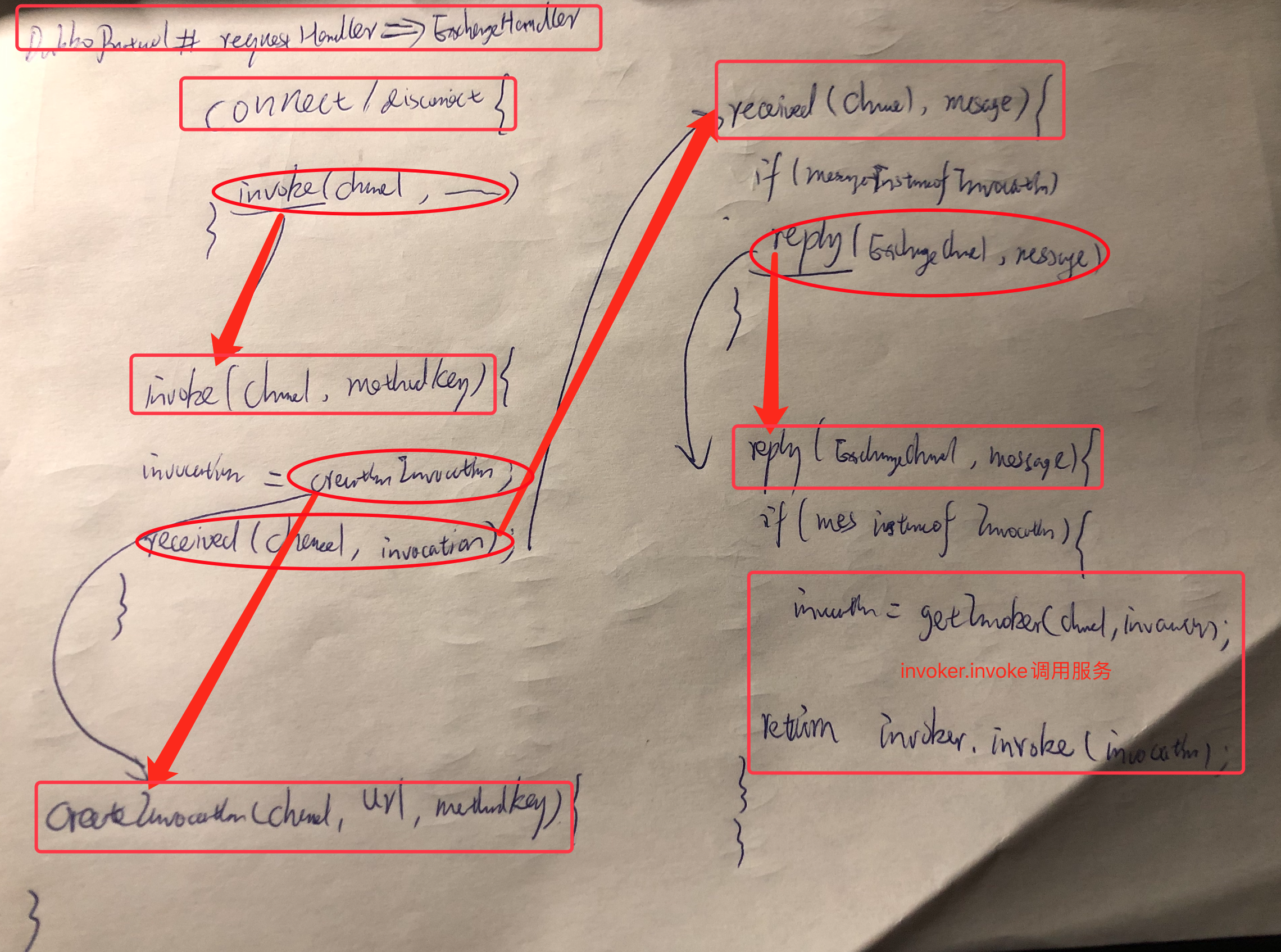

BIND1 ExchangeHandlerAdapter--handles client requests via invoker.invoke(invocation) (DubboProtocol$1)

requestHandler is a field of DubboProtocol.

DubboProtocol

//Handler used on the provider side to process incoming requests

private ExchangeHandler requestHandler = new ExchangeHandlerAdapter() {

//Handle an invocation request

public Object reply(ExchangeChannel channel, Object message) throws RemotingException {

//This handler only processes Invocation messages

if (message instanceof Invocation) { //1

Invocation inv = (Invocation) message;

/** ……………… Look up the invoker ……………… */

//Take the port from the channel and the service path from the invocation; the serviceKey is essentially path:port (group and version are added to the key when present). Then exporterMap.get(serviceKey).getInvoker() returns the invoker of the service

Invoker<?> invoker = getInvoker(channel, inv);

//For a callback invocation, handle the compatibility problem of a newer version calling an older version

if (Boolean.TRUE.toString().equals(inv.getAttachments().get(IS_CALLBACK_SERVICE_INVOKE))){ //2

String methodsStr = invoker.getUrl().getParameters().get("methods");

boolean hasMethod = false;

if (methodsStr == null || methodsStr.indexOf(",") == -1){//3

hasMethod = inv.getMethodName().equals(methodsStr);

} else {

String[] methods = methodsStr.split(",");

for (String method : methods){//4

if (inv.getMethodName().equals(method)){

hasMethod = true;

break;

}

} //4

}//3

if (!hasMethod){

logger.warn(new IllegalStateException("The methodName "+inv.getMethodName()+" not found in callback service interface ,invoke will be ignored. please update the api interface. url is:" + invoker.getUrl()) +" ,invocation is :"+inv );

return null;

}

} //2

/** ……………… RpcContext.getContext() returns the ThreadLocal context; store the remote client address in it ……………… */

RpcContext.getContext().setRemoteAddress(channel.getRemoteAddress());

/** ……………… invoker.invoke(invocation) calls the service ……………… */

return invoker.invoke(inv);

} //1

throw new RemotingException(channel, "Unsupported request: " + message == null ? null : (message.getClass().getName() + ": " + message) + ", channel: consumer: " + channel.getRemoteAddress() + " --> provider: " + channel.getLocalAddress());

}

//Receive a message

@Override

public void received(Channel channel, Object message) throws RemotingException {

if (message instanceof Invocation) {

reply((ExchangeChannel) channel, message);

} else {

super.received(channel, message);

}

}

@Override

public void connected(Channel channel) throws RemotingException {

//When a connection is established, invoke the method configured under the onConnect method key

invoke(channel, Constants.ON_CONNECT_KEY);

}

@Override

public void disconnected(Channel channel) throws RemotingException {

if(logger.isInfoEnabled()){

logger.info("disconected from "+ channel.getRemoteAddress() + ",url:" + channel.getUrl());

}

//When a connection is closed, invoke the method configured under the onDisconnect method key

invoke(channel, Constants.ON_DISCONNECT_KEY);

}

//Invoke the event method on the service

private void invoke(Channel channel, String methodKey) {

Invocation invocation = createInvocation(channel, channel.getUrl(), methodKey);//build the invocation

if (invocation != null) {

try {

received(channel, invocation);

} catch (Throwable t) {

logger.warn("Failed to invoke event method " + invocation.getMethodName() + "(), cause: " + t.getMessage(), t);

}

}

}

//Build the Invocation for the call from the client channel's URL

private Invocation createInvocation(Channel channel, URL url, String methodKey) {

String method = url.getParameter(methodKey);

if (method == null || method.length() == 0) {

return null;

}

RpcInvocation invocation = new RpcInvocation(method, new Class<?>[0], new Object[0]);

invocation.setAttachment(Constants.PATH_KEY, url.getPath());

invocation.setAttachment(Constants.GROUP_KEY, url.getParameter(Constants.GROUP_KEY));

invocation.setAttachment(Constants.INTERFACE_KEY, url.getParameter(Constants.INTERFACE_KEY));

invocation.setAttachment(Constants.VERSION_KEY, url.getParameter(Constants.VERSION_KEY));

if (url.getParameter(Constants.STUB_EVENT_KEY, false)){

invocation.setAttachment(Constants.STUB_EVENT_KEY, Boolean.TRUE.toString());

}

return invocation;

}

};

Looking closely at this ExchangeHandlerAdapter, it defines how the provider serves a request sent by a client: it obtains the matching invoker and calls the service through it.
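The lookup-then-invoke step in reply can be sketched without any Dubbo classes. This is a minimal illustration, not Dubbo's implementation: the class name RequestHandlerSketch, the EXPORTERS map and the use of Function as a stand-in for Invoker are all assumptions, and the key is simplified to path:port (the real serviceKey also carries group and version).

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

public class RequestHandlerSketch {
    // Hypothetical stand-in for DubboProtocol's exporterMap: serviceKey -> invoker.
    static final Map<String, Function<String, Object>> EXPORTERS = new ConcurrentHashMap<>();

    // Simplified key; the real key may also contain group and version.
    static String serviceKey(String path, int port) {
        return path + ":" + port;
    }

    // Mirrors the shape of reply(): find the invoker for the request, then invoke it.
    static Object reply(String path, int port, String argument) {
        String key = serviceKey(path, port);
        Function<String, Object> invoker = EXPORTERS.get(key);
        if (invoker == null) {
            throw new IllegalStateException("Not found exported service: " + key);
        }
        return invoker.apply(argument);
    }

    public static void main(String[] args) {
        EXPORTERS.put(serviceKey("com.example.DemoService", 20880), name -> "Hello " + name);
        System.out.println(reply("com.example.DemoService", 20880, "dubbo"));
    }
}
```

The point of the sketch is that the provider never creates a new invoker per request; it only resolves one that was registered at export time.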

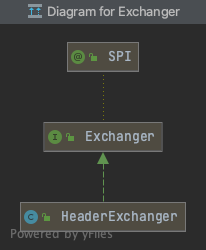

BIND2 Exchangers.bind: binding

Exchangers is the facade class of the exchange layer.

//PTC2.2.1.1 Exchangers.bind(url, requestHandler)

server = Exchangers.bind(url, requestHandler);

Exchangers

public static ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

if (url == null) {

throw new IllegalArgumentException("url == null");

}

if (handler == null) {

throw new IllegalArgumentException("handler == null");

}

url = url.addParameterIfAbsent(Constants.CODEC_KEY, "exchange");

//BIND2.1 getExchanger(url)

//BIND2.2 bind

return getExchanger(url).bind(url, handler);

}

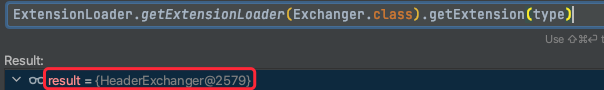

BIND2.1 getExchanger(url)-->HeaderExchanger

Exchangers

public static Exchanger getExchanger(URL url) {

//type is "header"

String type = url.getParameter(Constants.EXCHANGER_KEY, Constants.DEFAULT_EXCHANGER);

return getExchanger(type);

}

public static Exchanger getExchanger(String type) {

return ExtensionLoader.getExtensionLoader(Exchanger.class).getExtension(type);

}

This returns HeaderExchanger.

BIND2.2 HeaderExchanger.bind

public class HeaderExchanger implements Exchanger {

public static final String NAME = "header";

//Client side: connect

public ExchangeClient connect(URL url, ExchangeHandler handler) throws RemotingException {

return new HeaderExchangeClient(Transporters.connect(url, new DecodeHandler(new HeaderExchangeHandler(handler))));

}

//Server side: bind the URL

public ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

//BIND2.2.1 new DecodeHandler(new HeaderExchangeHandler(handler))

//BIND2.2.2 Transporters.bind(url,handler)

//BIND2.2.3 HeaderExchangeServer

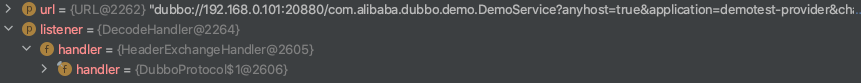

return new HeaderExchangeServer(Transporters.bind(url, new DecodeHandler(new HeaderExchangeHandler(handler))));

}

}

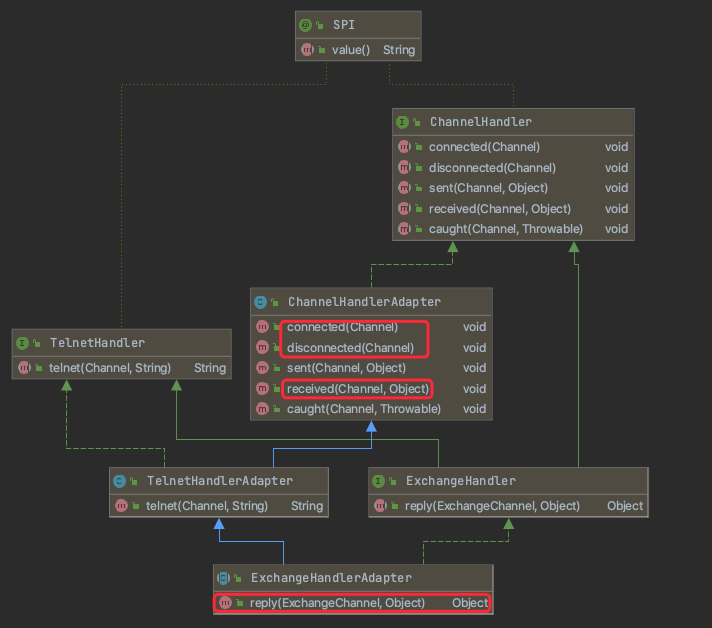

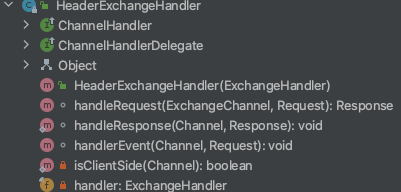

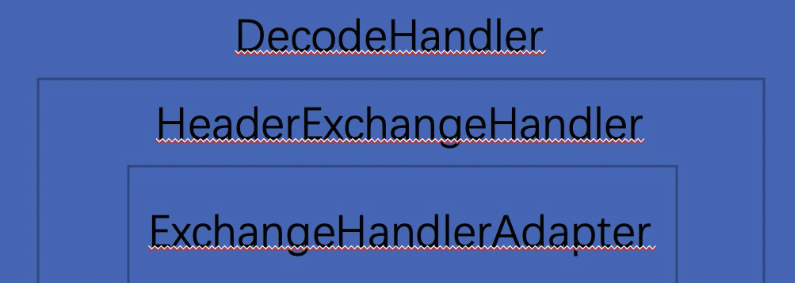

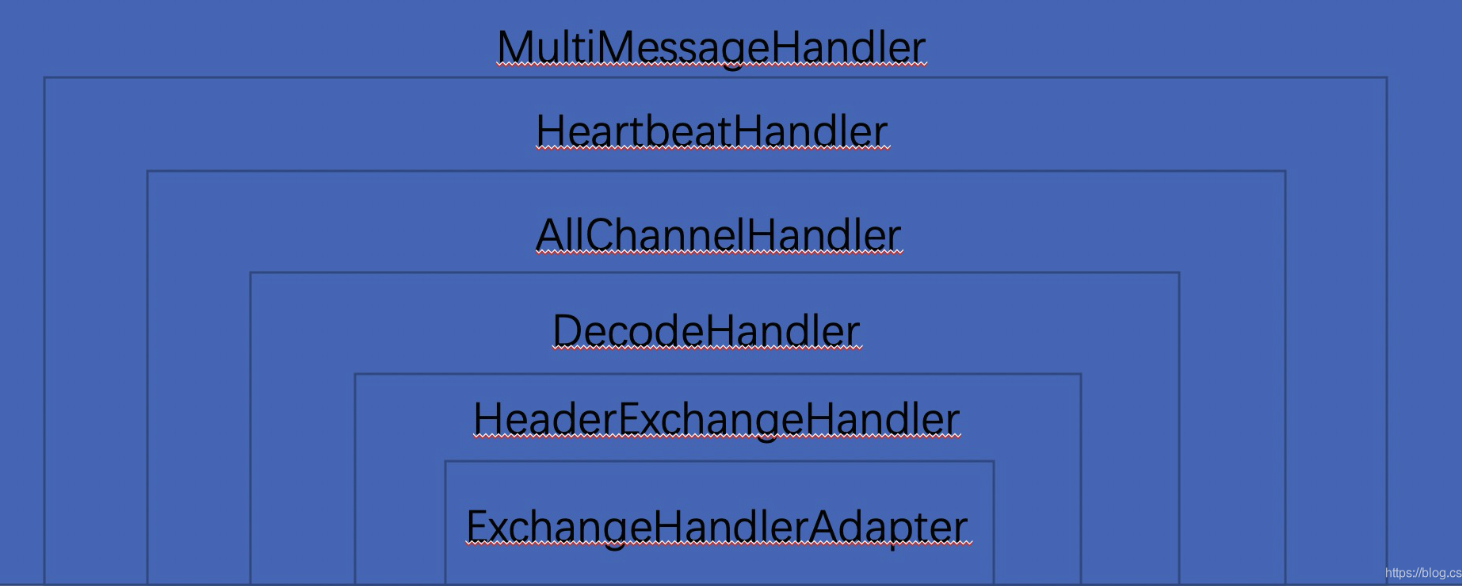

BIND2.2.1 new DecodeHandler(new HeaderExchangeHandler(handler))--the handler chain

This builds the handler chain with which the provider processes client requests.

public class HeaderExchangeHandler implements ChannelHandlerDelegate {

//Holds the anonymous ExchangeHandlerAdapter instance

private final ExchangeHandler handler;

public HeaderExchangeHandler(ExchangeHandler handler){

if (handler == null) {

throw new IllegalArgumentException("handler == null");

}

this.handler = handler;

}

//Using the handling of a received message as the example

public void received(Channel channel, Object message) throws RemotingException {

channel.setAttribute(KEY_READ_TIMESTAMP, System.currentTimeMillis());

ExchangeChannel exchangeChannel = HeaderExchangeChannel.getOrAddChannel(channel);

try {

if (message instanceof Request) {

// handle request.

Request request = (Request) message;

if (request.isEvent()) {

//the handlerXXX/handleXXX methods are defined in this class

handlerEvent(channel, request);

} else {

if (request.isTwoWay()) {

Response response = handleRequest(exchangeChannel, request);

channel.send(response);

} else {

/** ……………… chained call ……………… */

//Call the method of the anonymous ExchangeHandlerAdapter

handler.received(exchangeChannel, request.getData());

}

}

} else if (message instanceof Response) {

handleResponse(channel, (Response) message);

} else if (message instanceof String) {

if (isClientSide(channel)) {

Exception e = new Exception("Dubbo client can not supported string message: " + message + " in channel: " + channel + ", url: " + channel.getUrl());

logger.error(e.getMessage(), e);

} else {

String echo = handler.telnet(channel, (String) message);

if (echo != null && echo.length() > 0) {

channel.send(echo);

}

}

} else {

handler.received(exchangeChannel, message);

}

} finally {

HeaderExchangeChannel.removeChannelIfDisconnected(channel);

}

}

Inside these methods, the handlerXXX/handleXXX helpers call into the anonymous ExchangeHandlerAdapter that was passed in.

public class DecodeHandler extends AbstractChannelHandlerDelegate {

private static final Logger log = LoggerFactory.getLogger(DecodeHandler.class);

public DecodeHandler(ChannelHandler handler) {

super(handler);

}

//Using the handling of a received message as the example

public void received(Channel channel, Object message) throws RemotingException {

if (message instanceof Decodeable) {

decode(message);

}

if (message instanceof Request) {

//decode is performed by this handler

decode(((Request)message).getData());

}

if (message instanceof Response) {

decode( ((Response)message).getResult());

}

/** ……………… chained call ……………… */

//Call the wrapped HeaderExchangeHandler, which continues the handler chain

handler.received(channel, message);

}

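The process above shows that the ChannelHandlers wrap one another, forming the handler processing chain.

Role of each handler:

HeaderExchangeHandler mainly manages the connection and routes each message by type (request, response, telnet string).

DecodeHandler mainly decodes requests.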
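The wrapping described above is a plain decorator chain. The following minimal sketch shows the call order only; the Handler interface, the wrap helper and the TRACE list are hypothetical names introduced for illustration and do not exist in Dubbo.

```java
import java.util.ArrayList;
import java.util.List;

public class HandlerChainSketch {
    // Hypothetical, much reduced stand-in for Dubbo's ChannelHandler.
    interface Handler {
        void received(String message);
    }

    static final List<String> TRACE = new ArrayList<>();

    // Each wrapper records its name, then delegates to the handler it wraps.
    static Handler wrap(String name, Handler next) {
        return message -> {
            TRACE.add(name);
            next.received(message);
        };
    }

    // Builds DecodeHandler(HeaderExchangeHandler(requestHandler)) and sends one message through it.
    static List<String> run(String message) {
        TRACE.clear();
        Handler requestHandler = m -> TRACE.add("requestHandler:" + m);
        Handler chain = wrap("DecodeHandler", wrap("HeaderExchangeHandler", requestHandler));
        chain.received(message);
        return new ArrayList<>(TRACE);
    }

    public static void main(String[] args) {
        System.out.println(run("ping"));
    }
}
```

The outermost wrapper is constructed last but runs first, which is why DecodeHandler sees the message before HeaderExchangeHandler does.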

BIND2.2.2 Transporters.bind(url,handler)

Transporters is a facade class.

dubbo://192.168.0.101:20880/com.alibaba.dubbo.demo.DemoService?anyhost=true&application=demotest-provider&channel.readonly.sent=true&codec=dubbo&dubbo=2.5.3&heartbeat=60000&interface=com.alibaba.dubbo.demo.DemoService&methods=getPermissions&organization=dubbox&owner=programmer&pid=77229&side=provider&timestamp=1671442349364

Transporters

public static Server bind(URL url, ChannelHandler... handlers) throws RemotingException {

if (url == null) {

throw new IllegalArgumentException("url == null");

}

if (handlers == null || handlers.length == 0) {

throw new IllegalArgumentException("handlers == null");

}

ChannelHandler handler;

//The handlers form a processor chain; here length=1

if (handlers.length == 1) {

handler = handlers[0];

} else {

handler = new ChannelHandlerDispatcher(handlers);

}

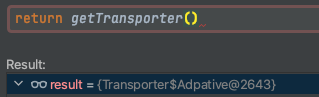

//BIND2.2.2.1 Transporter$Adpative.bind

return getTransporter().bind(url, handler);

}

public static Transporter getTransporter() {

return ExtensionLoader.getExtensionLoader(Transporter.class).getAdaptiveExtension();

}

//BIND2.2.2.1 Transporter$Adpative.bind

public class Transporter$Adpative implements com.alibaba.dubbo.remoting.Transporter {

public com.alibaba.dubbo.remoting.Server bind(com.alibaba.dubbo.common.URL arg0, com.alibaba.dubbo.remoting.ChannelHandler arg1) throws RemotingException {

if (arg0 == null) throw new IllegalArgumentException("url == null");

com.alibaba.dubbo.common.URL url = arg0;

//The Transporter defaults to netty

String extName = url.getParameter("server", url.getParameter("transporter", "netty"));

if(extName == null) throw new IllegalStateException("Fail to get extension(com.alibaba.dubbo.remoting.Transporter) name from url(" + url.toString() + ") use keys([server, transporter])");

com.alibaba.dubbo.remoting.Transporter extension = (com.alibaba.dubbo.remoting.Transporter)ExtensionLoader.getExtensionLoader(com.alibaba.dubbo.remoting.Transporter.class).getExtension(extName);

//BIND2.2.2.2 NettyTransporter.bind

//NettyTransporter

return extension.bind(arg0, arg1);

}
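The extension name lookup in the generated adaptive class is a nested default: the server parameter wins, then transporter, then the literal "netty". A minimal sketch of just that resolution, using a plain Map in place of Dubbo's URL (an assumption; Dubbo's URL.getParameter also falls back when the value is empty, which getOrDefault does not):

```java
import java.util.HashMap;
import java.util.Map;

public class TransporterNameSketch {
    // Mirrors url.getParameter("server", url.getParameter("transporter", "netty")).
    static String resolve(Map<String, String> urlParameters) {
        String fallback = urlParameters.getOrDefault("transporter", "netty");
        return urlParameters.getOrDefault("server", fallback);
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        System.out.println(resolve(params));   // no keys set
        params.put("transporter", "mina");
        System.out.println(resolve(params));   // transporter set
        params.put("server", "grizzly");
        System.out.println(resolve(params));   // server overrides transporter
    }
}
```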

//BIND2.2.2.2 NettyTransporter.bind-->creates the NettyServer

public class NettyTransporter implements Transporter {

public static final String NAME = "netty";

public Server bind(URL url, ChannelHandler listener) throws RemotingException {

//NETTY

//Create the NettyServer

return new NettyServer(url, listener);

}

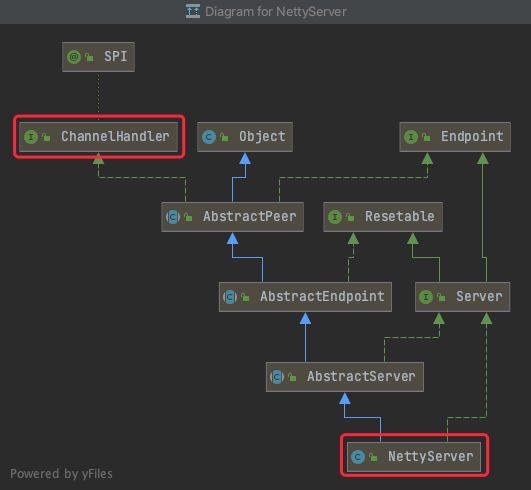

/* ……… NettyServer--starts the provider side ……… */

public class NettyServer extends AbstractServer implements Server {

//Cache of channels

private Map<String, Channel> channels; // <ip:port, channel>

private ServerBootstrap bootstrap;

private org.jboss.netty.channel.Channel channel;

public NettyServer(URL url, ChannelHandler handler) throws RemotingException{

//NS1 ChannelHandlers.wrap(handler

//NS2 super(url, ChannelHandlers

super(url, ChannelHandlers.wrap(handler, ExecutorUtil.setThreadName(url, SERVER_THREAD_POOL_NAME)));

}

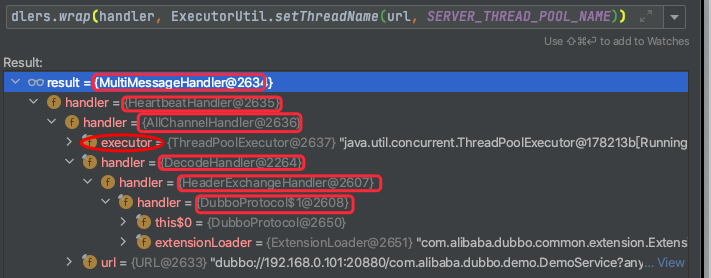

NS1 ChannelHandlers.wrap(handler---wrap the handler according to the configured thread model

This wraps the handler and adds processors such as HeartbeatHandler.

public class ChannelHandlers {

public static ChannelHandler wrap(ChannelHandler handler, URL url){

return ChannelHandlers.getInstance().wrapInternal(handler, url);

}

protected static ChannelHandlers getInstance() {

return INSTANCE;

}

protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {

//NS1.1 AllDispatcher--thread model

return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)

.getAdaptiveExtension().dispatch(handler, url)));

}

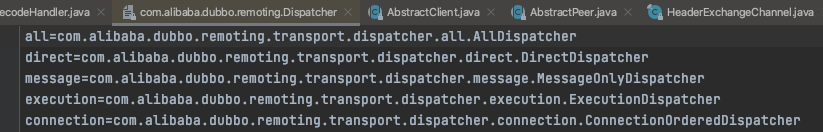

//NS1.1 AllDispatcher--thread model--handler chain processing flow on the provider side

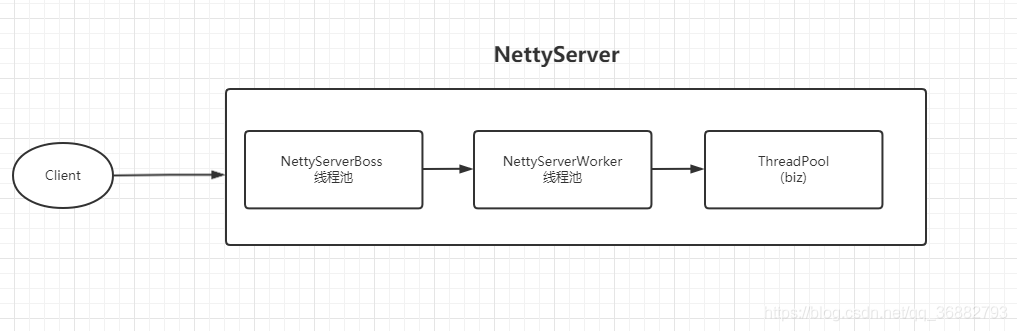

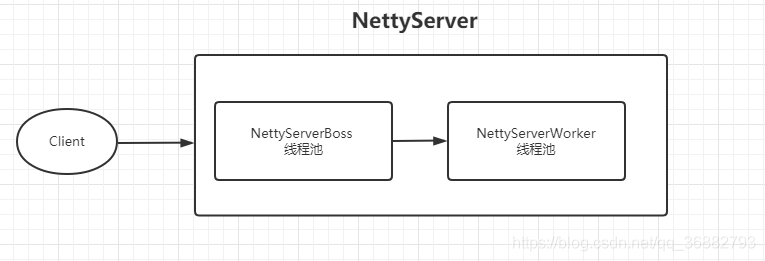

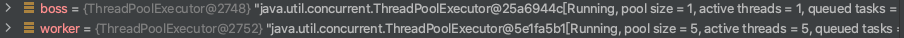

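Dubbo's default underlying network transport is Netty. On the provider side, NettyServer uses the reactor master/worker thread-pool model: the boss thread pool accepts client connection requests, and the subsequent read and write handling is performed by the worker threads. The boss and worker thread groups together are referred to as the I/O threads.

At call time, if the provider's logic completes quickly and does not issue new I/O requests, handling it directly on the I/O thread is faster, because that avoids thread-pool scheduling and context-switch overhead.

If the logic is slow, or needs to issue new I/O requests (a database query, for example), the I/O thread must dispatch the request to a separate thread pool; otherwise the I/O thread is blocked and cannot receive other requests.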
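The trade-off can be made concrete with a small sketch that runs the same task either on the calling thread (which plays the role of the I/O thread here) or on a separate pool. DispatchSketch, runDirect, runOnPool and the thread name business-1 are illustrative assumptions, not Dubbo APIs.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class DispatchSketch {
    // "direct" style: the task runs on whichever thread calls this method.
    static String runDirect(Callable<String> task) {
        try {
            return task.call();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    // "all" style: the task is handed to a business pool; the caller only waits here for the demo.
    static String runOnPool(ExecutorService pool, Callable<String> task) {
        try {
            return pool.submit(task).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    // Returns the name of the thread that executed the task when dispatched to a pool.
    static String poolThreadName() {
        ExecutorService pool = Executors.newFixedThreadPool(1, r -> new Thread(r, "business-1"));
        try {
            Callable<String> whoRuns = () -> Thread.currentThread().getName();
            return runOnPool(pool, whoRuns);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("direct: " + runDirect(() -> Thread.currentThread().getName()));
        System.out.println("pooled: " + poolThreadName());
    }
}
```

In a real server the I/O thread would not block on get(); it would return to the event loop immediately, which is exactly what keeps it free to accept other requests.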

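In Dubbo, the extension interface for the thread model is Dispatcher (org.apache.dubbo.remoting.Dispatcher in Apache Dubbo; com.alibaba.dubbo.remoting.Dispatcher in the 2.5.x code shown here). all is the default thread model, which means Netty events are handled by AllChannelHandler.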

@SPI(AllDispatcher.NAME)

public interface Dispatcher {

/**

* Dispatch messages to the thread pool.

*/

@Adaptive({Constants.DISPATCHER_KEY, "dispather", "channel.handler"}) // the last two keys are kept for compatibility with older configuration

ChannelHandler dispatch(ChannelHandler handler, URL url);

}

/**

* Default thread pool configuration

*

* @author chao.liuc

*/

public class AllDispatcher implements Dispatcher {

public static final String NAME = "all";

public ChannelHandler dispatch(ChannelHandler handler, URL url) {

//Return AllChannelHandler, the handler that corresponds to the AllDispatcher thread model

return new AllChannelHandler(handler, url);

}

}

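Dubbo supports the following thread models:

Taking AllDispatcher and DirectDispatcher as examples:

1 AllDispatcher: all messages are dispatched to the business thread pool, including requests, responses, connect events, disconnect events, and heartbeats.

For the AllDispatcher thread model, the matching handler, AllChannelHandler, is created. The source shows that connected, disconnected, received and similar operations are all dispatched to the business thread pool through getExecutorService().execute.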

public class AllChannelHandler extends WrappedChannelHandler {

public AllChannelHandler(ChannelHandler handler, URL url) {

//NS1.1.1 AllChannelHandler (thread-model handler, business thread pool)

super(handler, url);

}

@Override

public void connected(Channel channel) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

//Create a ChannelEventRunnable task and hand it to the business thread pool

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CONNECTED));

} catch (Throwable t) {

throw new ExecutionException("connect event", channel, getClass() + " error when process connected event .", t);

}

}

@Override

public void received(Channel channel, Object message) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

//Create a ChannelEventRunnable for the specific event and hand it to the business thread pool

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.RECEIVED, message));

} catch (Throwable t) {

//TODO A temporary solution to the problem that the exception information can not be sent to the opposite end after the thread pool is full. Need a refactoring

//fix The thread pool is full, refuses to call, does not return, and causes the consumer to wait for time out

if(message instanceof Request && t instanceof RejectedExecutionException){

Request request = (Request)message;

if(request.isTwoWay()){

String msg = "Server side(" + url.getIp() + "," + url.getPort() + ") threadpool is exhausted ,detail msg:" + t.getMessage();

Response response = new Response(request.getId(), request.getVersion());

response.setStatus(Response.SERVER_THREADPOOL_EXHAUSTED_ERROR);

response.setErrorMessage(msg);

channel.send(response);

return;

}

}

throw new ExecutionException(message, channel, getClass() + " error when process received event .", t);

}

}

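//NS1.1.1 AllChannelHandler (thread-model handler, business thread pool)

The WrappedChannelHandler constructor stores the business thread pool used by the current ChannelHandler in the DataStore. AbstractServer#executor later holds this same business thread pool, which it reads back from the handler (see NS2).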

public WrappedChannelHandler(ChannelHandler handler, URL url) {

this.handler = handler;

this.url = url;

//Business thread pool--FixedThreadPool

executor = (ExecutorService) ExtensionLoader.getExtensionLoader(ThreadPool.class).getAdaptiveExtension().getExecutor(url);

String componentKey = Constants.EXECUTOR_SERVICE_COMPONENT_KEY;

if (Constants.CONSUMER_SIDE.equalsIgnoreCase(url.getParameter(Constants.SIDE_KEY))) {

componentKey = Constants.CONSUMER_SIDE;

}

//Store the thread pool in the DataStore

DataStore dataStore = ExtensionLoader.getExtensionLoader(DataStore.class).getDefaultExtension();

dataStore.put(componentKey, Integer.toString(url.getPort()), executor);

}

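Dubbo's thread pool is obtained through an adaptive extension. By default it creates a FixedThreadPool whose core and maximum thread counts are both 200. Under the AllDispatcher thread model, all messages are dispatched to this FixedThreadPool, including requests, responses, connect events, disconnect events, and heartbeats.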

@SPI("fixed")

public interface ThreadPool {

@Adaptive({Constants.THREADPOOL_KEY})

Executor getExecutor(URL url);

}

public class FixedThreadPool implements ThreadPool {

@Override

public Executor getExecutor(URL url) {

String name = url.getParameter(Constants.THREAD_NAME_KEY, Constants.DEFAULT_THREAD_NAME);//dubbo

int threads = url.getParameter(Constants.THREADS_KEY, Constants.DEFAULT_THREADS);//200

int queues = url.getParameter(Constants.QUEUES_KEY, Constants.DEFAULT_QUEUES);

return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,

queues == 0 ? new SynchronousQueue<Runnable>() :

(queues < 0 ? new LinkedBlockingQueue<Runnable>()

: new LinkedBlockingQueue<Runnable>(queues)),

new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));

}

}
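The queue selection in FixedThreadPool decides what happens once all threads are busy. The sketch below reproduces only that choice with JDK classes and shows the rejection that occurs with queues == 0; it uses the JDK's AbortPolicy as a stand-in for Dubbo's AbortPolicyWithReport, and the class and method names are assumptions made for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class FixedPoolSketch {
    // 0 -> direct hand-off, negative -> unbounded queue, positive -> bounded queue.
    static BlockingQueue<Runnable> queueFor(int queues) {
        if (queues == 0) {
            return new SynchronousQueue<>();
        }
        return queues < 0 ? new LinkedBlockingQueue<>() : new LinkedBlockingQueue<>(queues);
    }

    static ThreadPoolExecutor fixedPool(int threads, int queues) {
        return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,
                queueFor(queues), new ThreadPoolExecutor.AbortPolicy());
    }

    // With one thread and no queue, a second task is rejected while the first is still running.
    static boolean secondTaskRejected() {
        ThreadPoolExecutor pool = fixedPool(1, 0);
        CountDownLatch release = new CountDownLatch(1);
        try {
            pool.execute(() -> awaitQuietly(release));
            try {
                pool.execute(() -> { });
                return false;
            } catch (RejectedExecutionException expected) {
                return true;
            }
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("second task rejected: " + secondTaskRejected());
    }
}
```

This rejection is the situation AllChannelHandler#received handles when it catches RejectedExecutionException and answers with SERVER_THREADPOOL_EXHAUSTED_ERROR.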

2 DirectDispatcher: no message is dispatched to the business thread pool; everything is executed directly on the I/O thread.

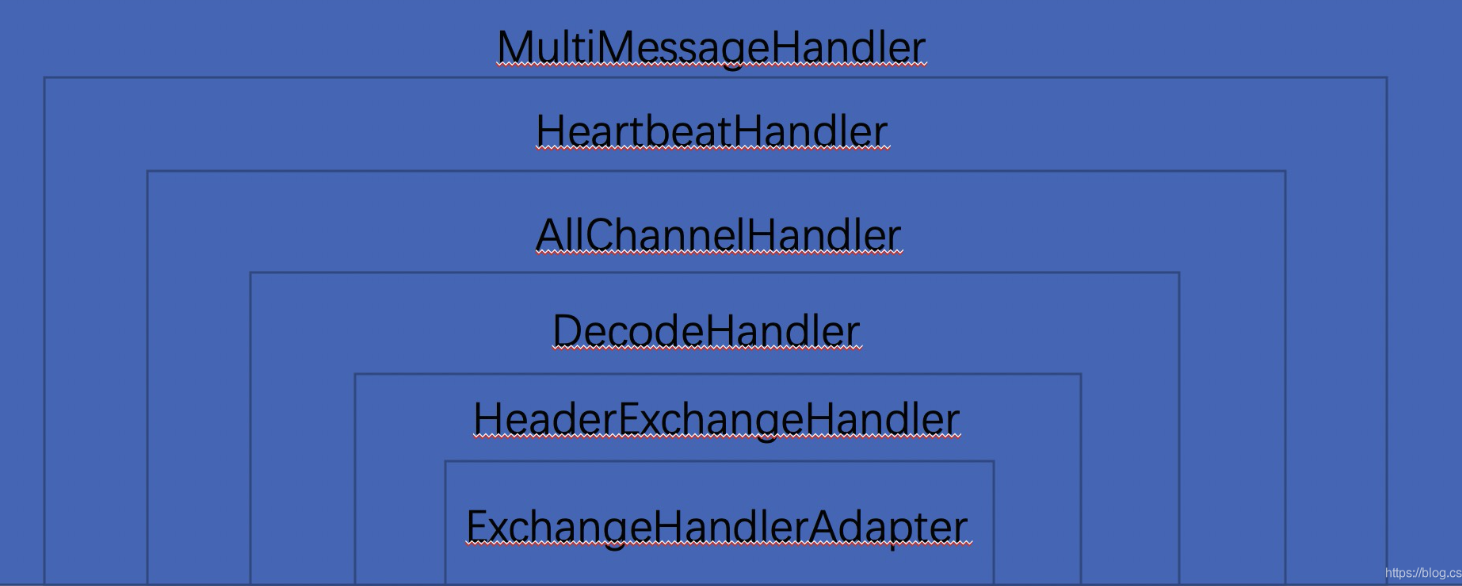

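The handler after wrapping is:

The handlers, from innermost to outermost:

HeaderExchangeHandler distinguishes message types (the provider side handles requests; the consumer side handles responses; Dubbo's QoS commands arrive as telnet messages) and calls the matching logic for each;

DecodeHandler mainly decodes requests.

AllChannelHandler---the handler that corresponds to the thread model

HeartbeatHandler is responsible for heartbeat detection: it handles heartbeat requests and responses directly on the I/O thread, updates the channel's read timestamp each time a message is received, and records the channel's write timestamp when data is sent;

MultiMessageHandler splits a MultiMessage, Dubbo's internal aggregate of several messages, and then processes the contained messages one by one;

When a consumer sends a request message, these handlers process it in the following order:

MultiMessageHandler#received -> HeartbeatHandler#received -> Handler#received of the configured Dubbo thread model -> DecodeHandler#received -> HeaderExchangeHandler#received -> DubboProtocol.requestHandler#received

1 MultiMessageHandler#received receives the message, checks whether it is a MultiMessage, and passes it on to HeartbeatHandler#received;

2 HeartbeatHandler#received handles heartbeat messages; anything that is not a heartbeat is passed through to the handler of the thread model (here AllChannelHandler, which corresponds to the AllDispatcher thread model);

3 Handler#received of the configured thread model wraps the message in a ChannelEventRunnable and submits it to the business thread pool, where the subsequent DecodeHandler#received runs.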
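The first two steps of this flow can be sketched as two small decorators: one that fans out an aggregate message and one that short-circuits heartbeats before they reach the business handler. The Handler interface, the HEARTBEAT marker string and the use of a List as the aggregate are assumptions for illustration; Dubbo uses MultiMessage and heartbeat Request/Response objects instead.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class InboundChainSketch {
    // Hypothetical, reduced stand-in for ChannelHandler#received.
    interface Handler {
        void received(Object message);
    }

    static final String HEARTBEAT = "HEARTBEAT"; // hypothetical heartbeat marker

    // Like MultiMessageHandler: split an aggregate and forward each part separately.
    static Handler multiMessage(Handler next) {
        return message -> {
            if (message instanceof List) {
                for (Object part : (List<?>) message) {
                    next.received(part);
                }
            } else {
                next.received(message);
            }
        };
    }

    // Like HeartbeatHandler: answer heartbeats here and pass everything else through.
    static Handler heartbeat(Handler next, List<Object> log) {
        return message -> {
            if (HEARTBEAT.equals(message)) {
                log.add("heartbeat handled on I/O thread");
                return;
            }
            next.received(message);
        };
    }

    static List<Object> process(Object message) {
        List<Object> log = new ArrayList<>();
        Handler business = m -> log.add("business:" + m);
        multiMessage(heartbeat(business, log)).received(message);
        return log;
    }

    public static void main(String[] args) {
        System.out.println(process(Arrays.asList("a", HEARTBEAT, "b")));
    }
}
```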

NS2 super(url, ChannelHandlers

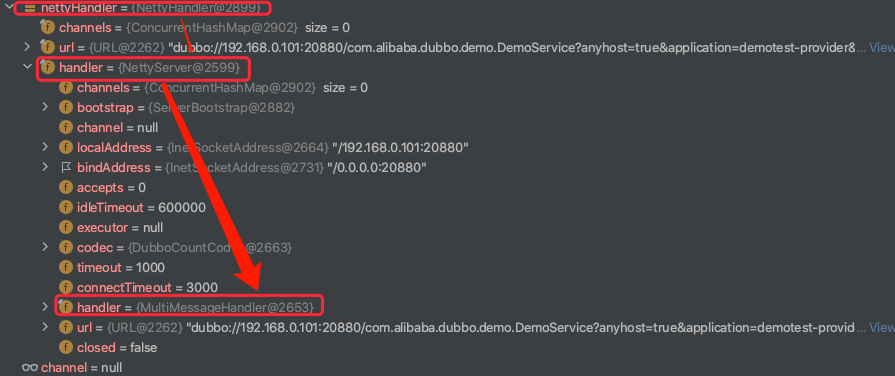

public abstract class AbstractServer extends AbstractEndpoint implements Server {

private InetSocketAddress localAddress;

private InetSocketAddress bindAddress;

private int accepts;

protected static final String SERVER_THREAD_POOL_NAME ="DubboServerHandler";

ExecutorService executor;

public AbstractServer(URL url, ChannelHandler handler) throws RemotingException {

super(url, handler);

///192.168.0.101:20880

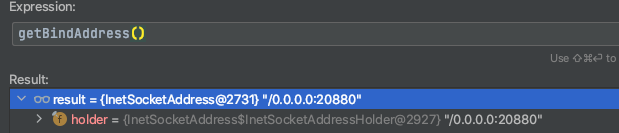

localAddress = getUrl().toInetSocketAddress();

//NetUtils.ANYHOST=0.0.0.0

String host = url.getParameter(Constants.ANYHOST_KEY, false)

|| NetUtils.isInvalidLocalHost(getUrl().getHost())

? NetUtils.ANYHOST : getUrl().getHost();

///0.0.0.0:20880

bindAddress = new InetSocketAddress(host, getUrl().getPort());

this.accepts = url.getParameter(Constants.ACCEPTS_KEY, Constants.DEFAULT_ACCEPTS);

this.idleTimeout = url.getParameter(Constants.IDLE_TIMEOUT_KEY, Constants.DEFAULT_IDLE_TIMEOUT);

try {

//NS2.1 doOpen: start listening on the configured port

doOpen();

if (logger.isInfoEnabled()) {

logger.info("Start " + getClass().getSimpleName() + " bind " + getBindAddress() + ", export " + getLocalAddress());

}

} catch (Throwable t) {

throw new RemotingException(url.toInetSocketAddress(), null, "Failed to bind " + getClass().getSimpleName()

+ " on " + getLocalAddress() + ", cause: " + t.getMessage(), t);

}

if (handler instanceof WrappedChannelHandler ){

//Get the thread pool that executes tasks from the handler

executor = ((WrappedChannelHandler)handler).getExecutor();

}

}
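The bind host selection in this constructor can be isolated into a small function. The sketch below approximates NetUtils.isInvalidLocalHost with a few string checks (null, empty, localhost, 0.0.0.0, a 127.x prefix); that approximation and the class name BindHostSketch are assumptions, not the library's exact logic.

```java
public class BindHostSketch {
    static final String ANYHOST = "0.0.0.0";

    // Rough approximation of an "invalid local host" check; the real one uses a regex for 127.x.x.x.
    static boolean looksInvalidLocalHost(String host) {
        return host == null
                || host.isEmpty()
                || "localhost".equalsIgnoreCase(host)
                || ANYHOST.equals(host)
                || host.startsWith("127.");
    }

    // Bind to all interfaces when anyhost=true or the URL host is unusable; otherwise bind to the URL host.
    static String bindHost(boolean anyhost, String urlHost) {
        return (anyhost || looksInvalidLocalHost(urlHost)) ? ANYHOST : urlHost;
    }

    public static void main(String[] args) {
        System.out.println(bindHost(true, "192.168.0.101"));
        System.out.println(bindHost(false, "192.168.0.101"));
        System.out.println(bindHost(false, "localhost"));
    }
}
```

With the sample URL in this article (anyhost=true), the exported address stays 192.168.0.101:20880 while the socket is bound on 0.0.0.0:20880.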

NS2.1 doOpen--start the Netty server--handler configuration

NettyServer

@Override

protected void doOpen() throws Throwable {

NettyHelper.setNettyLoggerFactory();

//boss and worker thread groups, created with Java's Executors framework

ExecutorService boss = Executors.newCachedThreadPool(new NamedThreadFactory("NettyServerBoss", true));

ExecutorService worker = Executors.newCachedThreadPool(new NamedThreadFactory("NettyServerWorker", true));

//NioServerSocketChannelFactory creates the server-side NioServerSocketChannel

//the boss group defaults to 1 thread; the worker count defaults to CPU cores + 1

ChannelFactory channelFactory = new NioServerSocketChannelFactory(boss, worker,

//the default number of I/O threads is CPU cores + 1

getUrl().getPositiveParameter(Constants.IO_THREADS_KEY, Constants.DEFAULT_IO_THREADS));

//Bootstrap class

bootstrap = new ServerBootstrap(channelFactory);

/** ……………… Netty handler ……………… */

//Netty handler; NettyServer is itself a ChannelHandler implementation

//Business handler; it forwards the various Netty events to NettyServer's methods

final NettyHandler nettyHandler = new NettyHandler(getUrl(), this);

//channels is a field of nettyHandler:

//private final Map<String, Channel> channels = new ConcurrentHashMap<String, Channel>(); // <ip:port, channel>

channels = nettyHandler.getChannels();

//Set the ChannelPipelineFactory on the Netty bootstrap; it creates a ChannelPipeline for each new Channel

bootstrap.setPipelineFactory(new ChannelPipelineFactory() {

public ChannelPipeline getPipeline() {

NettyCodecAdapter adapter = new NettyCodecAdapter(getCodec() ,getUrl(), NettyServer.this);

ChannelPipeline pipeline = Channels.pipeline();

/*int idleTimeout = getIdleTimeout();

if (idleTimeout > 10000) {

pipeline.addLast("timer", new IdleStateHandler(timer, idleTimeout / 1000, 0, 0));

}*/

//Add handlers to the pipeline

pipeline.addLast("decoder", adapter.getDecoder());

pipeline.addLast("encoder", adapter.getEncoder());

//Add the business handler to the pipeline

pipeline.addLast("handler", nettyHandler);

return pipeline;

}

});

// Bind on the server side and start the Netty service

channel = bootstrap.bind(getBindAddress());

}

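1 Note that when the Netty server starts, the configured thread groups are thread pools created with Java's Executors framework; they serve as the threads of Netty's reactor model.

2 The handler added to Netty's pipeline is NettyHandler, which wraps NettyServer and the layers of handlers inside it, as follows:

Three handlers are added to the channel:

1 decoder: adapter.getDecoder() is the decoder; when the server receives a message, this handler decodes the data;

2 encoder: adapter.getEncoder() is the encoder; when the server sends a message, this handler encodes the data;

3 handler: NettyHandler is the business handler, the one that actually processes the request. Data decoded by the decoder is handed to nettyHandler, and data that nettyHandler writes back to the channel is encoded by the encoder before it is written.

NettyHandler->NettyServer->MultiMessageHandler#received -> HeartbeatHandler#received -> Handler#received of the configured Dubbo thread model -> DecodeHandler#received -> HeaderExchangeHandler#received -> DubboProtocol.requestHandler#received

3 Finally, the server binds to 0.0.0.0:20880, opening port 20880 on the local machine.

The bind operation inside jboss Netty is not traced further here.

//NS2.1.1 NettyHandler is added to the pipeline (the provider's handler processing flow)

In doOpen, the wrapped nettyHandler is added to the pipeline: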

/** ……………… Netty handler ……………… */

//Netty handler; NettyServer is itself a ChannelHandler implementation

//Business handler; it forwards the various Netty events to NettyServer's methods

final NettyHandler nettyHandler = new NettyHandler(getUrl(), this);

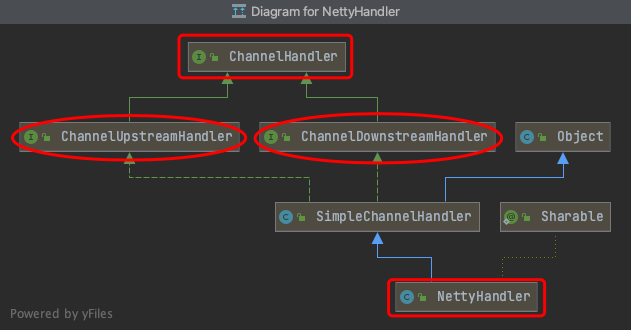

public class NettyHandler extends SimpleChannelHandler {

private final Map<String, Channel> channels = new ConcurrentHashMap<String, Channel>(); // <ip:port, channel>

private final URL url;//the provider's URL--dubbo://……………………

private final ChannelHandler handler; //this is the NettyServer

public Map<String, Channel> getChannels() {

return channels;

}

//Delegate the connect event to NettyServer.connected(channel)

@Override

public void channelConnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {

NettyChannel channel = NettyChannel.getOrAddChannel(ctx.getChannel(), url, handler);

try {

if (channel != null) {

channels.put(NetUtils.toAddressString((InetSocketAddress) ctx.getChannel().getRemoteAddress()), channel);

}

handler.connected(channel);

} finally {

NettyChannel.removeChannelIfDisconnected(ctx.getChannel());

}

}

//Delegate received messages to NettyServer.received(channel, message)

@Override

public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {

NettyChannel channel = NettyChannel.getOrAddChannel(ctx.getChannel(), url, handler);

try {

handler.received(channel, e.getMessage());

} finally {

NettyChannel.removeChannelIfDisconnected(ctx.getChannel());

}

}

//Delegate the write event to NettyServer.sent(channel, message)

@Override

public void writeRequested(ChannelHandlerContext ctx, MessageEvent e) throws Exception {

super.writeRequested(ctx, e);

NettyChannel channel = NettyChannel.getOrAddChannel(ctx.getChannel(), url, handler);

try {

handler.sent(channel, e.getMessage());

} finally {

NettyChannel.removeChannelIfDisconnected(ctx.getChannel());

}

}

可以发现,NettyHandler的作用,是将netty的网络事件,交给ChannelHandler处理,即传入的NettyHandler。

channelActive =》 ChannelHandler#connected : 客户端连接事件

channelInactive =》 ChannelHandler#disconnected : 客户端断连事件

channelRead =》 ChannelHandler#received : 服务端收到客户端消息(从管道中读取)

write =》 ChannelHandler#sent : 服务端向客户端发送消息(写入管道)

exceptionCaught =》 ChannelHandler#caught : 异常消息处理

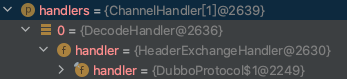

然后,nettyHandler的事件处理,交给NettyHandler的属性handler处理,其即是ChannelHandlers.wrap(handler)中,通过dubbo线程模型包装后的handler。NettyHandler内部handler如下:

NettyHandler->NettyServer->MultiMessageHandler#received -> HeartbeatHandler#received -> Dubbo线程模型指定的 Handler#received -> DecodeHandler#received -> HeaderExchangeHandler#received -> DubboProtocol.requestHandler#received

因此,provider端处理消息的流程如下:(省略了部分handler)

BIND2.2.3 HeaderExchangeServer

HeaderExchanger

//Server side: bind the URL

public ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

//BIND2.2.1 new DecodeHandler(new HeaderExchangeHandler(handler))

//BIND2.2.2 Transporters.bind(url,handler)

//BIND2.2.3 HeaderExchangeServer

return new HeaderExchangeServer(Transporters.bind(url, new DecodeHandler(new HeaderExchangeHandler(handler))));

}

Then the HeaderExchangeServer is constructed:

public HeaderExchangeServer(Server server) {

if (server == null) {

throw new IllegalArgumentException("server == null");

}

this.server = server;

this.heartbeat = server.getUrl().getParameter(Constants.HEARTBEAT_KEY, 0);

this.heartbeatTimeout = server.getUrl().getParameter(Constants.HEARTBEAT_TIMEOUT_KEY, heartbeat * 3);

if (heartbeatTimeout < heartbeat * 2) {

throw new IllegalStateException("heartbeatTimeout < heartbeatInterval * 2");

}

startHeatbeatTimer();

}
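The heartbeat settings validated in this constructor follow a simple rule: the timeout defaults to three times the heartbeat interval and must be at least twice the interval. A minimal sketch of that rule alone, with a nullable Integer standing in for "parameter not present in the URL" (an assumption; the class and method names are also hypothetical):

```java
public class HeartbeatConfigSketch {
    // configuredTimeout == null models a URL that has no heartbeat timeout parameter.
    static int resolveTimeout(int heartbeat, Integer configuredTimeout) {
        int timeout = configuredTimeout != null ? configuredTimeout : heartbeat * 3;
        if (timeout < heartbeat * 2) {
            throw new IllegalStateException("heartbeatTimeout < heartbeatInterval * 2");
        }
        return timeout;
    }

    public static void main(String[] args) {
        // heartbeat=60000 as in the sample provider URL of this article
        System.out.println(resolveTimeout(60000, null));
        System.out.println(resolveTimeout(60000, 120000));
    }
}
```

A heartbeat of 0, the default when the parameter is absent, yields a timeout of 0 and passes the check.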

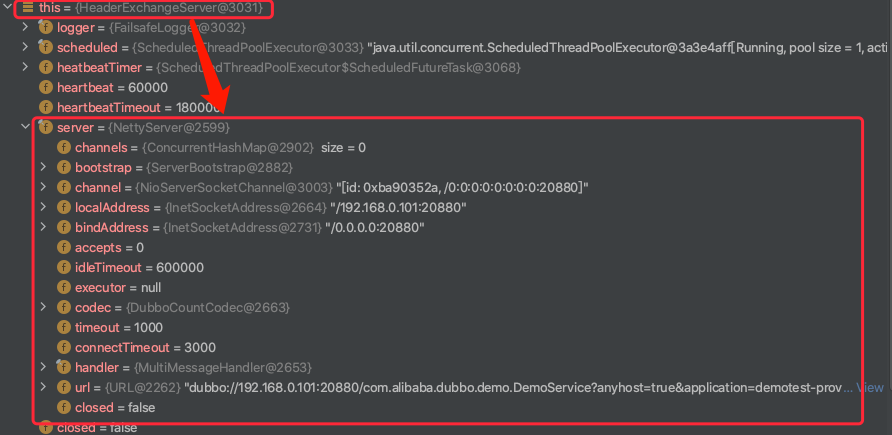

After initialization, the HeaderExchangeServer looks like this: