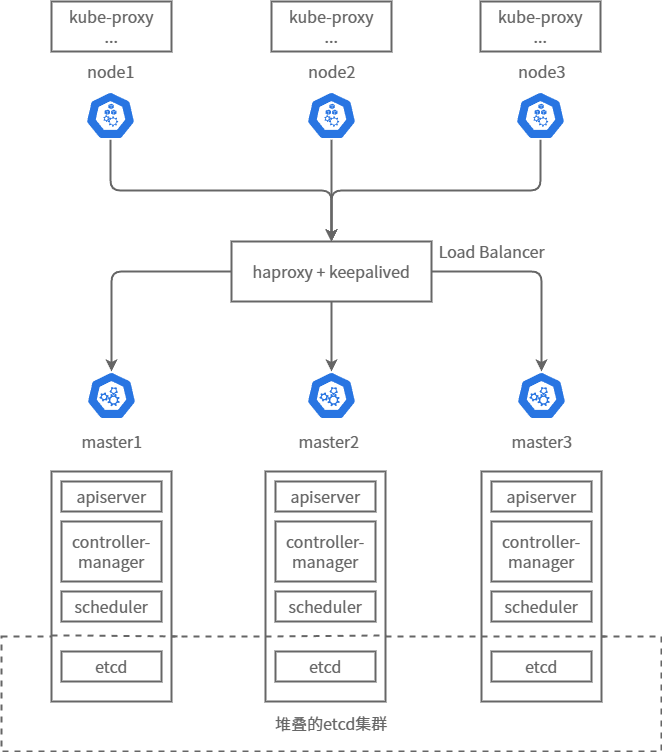

Kubernetes High-Availability Deployment

The deployment architecture is shown in the diagram above.

Machines

| Role | Count |
|---|---|
| haproxy + keepalived | 2 |
| control-plane | 3 (stacked etcd) |
| worker node | 3 |

haproxy

- Listens on the default kube-apiserver port, 6443

- Three backends, one per control-plane node

cat /etc/haproxy/haproxy.cfg

    global
        log /dev/log local0 warning
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        stats socket /var/lib/haproxy/stats

    defaults
        log global
        option httplog
        option dontlognull
        # timeouts without a unit are in milliseconds
        timeout connect 5000
        timeout client 50000
        timeout server 50000

    frontend kube-apiserver
        bind *:6443
        # plain TCP passthrough; TLS is handled by kube-apiserver itself
        mode tcp
        option tcplog
        default_backend kube-apiserver

    backend kube-apiserver
        mode tcp
        option tcplog
        option tcp-check
        balance roundrobin
        default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
        # the three control-plane nodes
        server kube-apiserver-1 192.168.31.45:6443 check
        server kube-apiserver-2 192.168.31.103:6443 check
        server kube-apiserver-3 192.168.31.141:6443 check
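After editing the file it can help to validate it before restarting the service. The following is a minimal sketch, not part of the original post: `reload_haproxy` is a hypothetical helper name, and it assumes haproxy runs as a systemd unit called `haproxy`. `haproxy -c -f <file>` only checks the configuration and exits non-zero on errors.

```shell
# Hypothetical helper: validate the config, restart haproxy only if it is valid.
# Assumes a systemd unit named "haproxy" (an assumption, not from the source).
reload_haproxy() {
  cfg="${1:-/etc/haproxy/haproxy.cfg}"
  if haproxy -c -f "$cfg"; then
    systemctl restart haproxy
  else
    echo "haproxy config check failed: $cfg" >&2
    return 1
  fi
}
```

Run `reload_haproxy` as root on each of the two load balancer machines.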

keepalived

- Checks that haproxy is alive

- Provides the virtual IP (VIP)

cat /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
        }
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    vrrp_script chk_haproxy {
        script "killall -0 haproxy"    # exits 0 while a haproxy process exists
        interval 2                     # run the check every 2 seconds
        weight 2                       # add 2 to the priority while the check succeeds
    }

    vrrp_instance haproxy-vip {
        state BACKUP
        priority 100
        interface ens33                # network interface that carries the VIP
        virtual_router_id 60
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        unicast_src_ip 192.168.31.16   # this machine's IP
        unicast_peer {
            192.168.31.75              # IP of the other load balancer machine
        }
        virtual_ipaddress {
            192.168.31.7/24            # virtual IP address
        }
        track_script {
            chk_haproxy
        }
    }
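The file above belongs to the load balancer at 192.168.31.16. The post does not show the second machine's file. A plausible sketch, assuming every other setting stays identical, swaps only the two unicast addresses:

```conf
# Assumed configuration fragment for the second load balancer (192.168.31.75).
# All other settings are assumed to match the first machine's file.
vrrp_instance haproxy-vip {
    unicast_src_ip 192.168.31.75   # this machine's IP
    unicast_peer {
        192.168.31.16              # the first load balancer
    }
}
```

The fragment shows only the lines that differ; the remaining `vrrp_instance` settings still have to be present in the real file.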

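Initialization

- Run the initialization on one of the control-plane machines

- --control-plane-endpoint: the address and port of the load balancer (here the keepalived virtual IP)

- --upload-certs: uploads the control-plane certificates to the cluster so the other control-plane nodes can fetch them automatically

- --pod-network-cidr: the subnet used for Pods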

kubeadm init --control-plane-endpoint "192.168.31.7:6443" --upload-certs --pod-network-cidr=192.169.0.0/16

Joining the cluster

- The default token is only valid for one day

Join as a control-plane node

    kubeadm join 192.168.31.7:6443 --token 3cvd8q.98unh7gjfiy1izh6 \
        --discovery-token-ca-cert-hash sha256:210ee3ad7c4f47e013732d61747ad9c9d86b8973ff7a125fb917c455905bdc67 \
        --control-plane --certificate-key c6f660d4d1f54d0a06f96ac7956307cf3ec50f7e64b5c6159902007e901488f0

Join as a worker node

    kubeadm join 192.168.31.7:6443 --token 3cvd8q.98unh7gjfiy1izh6 \
        --discovery-token-ca-cert-hash sha256:210ee3ad7c4f47e013732d61747ad9c9d86b8973ff7a125fb917c455905bdc67
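Because the token printed by `kubeadm init` expires after a day, and the certificate key uploaded by `--upload-certs` is kept for an even shorter time (two hours by default), nodes added later need fresh values. A minimal sketch: the function names are hypothetical, while the `kubeadm` subcommands are the standard ones.

```shell
# Hypothetical helpers; run them as root on an existing control-plane node.

# Print a fresh join command for a worker node (creates a new bootstrap token).
new_worker_join() {
  kubeadm token create --print-join-command
}

# Print a fresh join command for an additional control-plane node.
new_control_plane_join() {
  # Re-upload the control-plane certificates; the last output line is the new key.
  cert_key="$(kubeadm init phase upload-certs --upload-certs | tail -n 1)"
  echo "$(kubeadm token create --print-join-command) --control-plane --certificate-key ${cert_key}"
}
```

Copy the printed command to the joining machine and run it there.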

Configuring the kubectl client

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

calico

wget https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/tigera-operator.yaml

wget https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/custom-resources.yaml

- Edit custom-resources.yaml: set the IP pool CIDR and, if needed, the image registry.

cat custom-resources.yaml

    # This section includes base Calico installation configuration.
    # For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.Installation
    apiVersion: operator.tigera.io/v1
    kind: Installation
    metadata:
      name: default
    spec:
      # Configures Calico networking.
      # Uncomment to pull images through a registry mirror.
      #registry: m.daocloud.io
      calicoNetwork:
        ipPools:
        - name: default-ipv4-ippool
          blockSize: 26
          cidr: 192.169.0.0/16 # must match --pod-network-cidr
          encapsulation: VXLANCrossSubnet
          natOutgoing: Enabled
          nodeSelector: all()

    ---

    # This section configures the Calico API server.
    # For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.APIServer
    apiVersion: operator.tigera.io/v1
    kind: APIServer
    metadata:
      name: default
    spec: {}
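The post shows the edited file but not the commands that apply it. The following is a sketch under stated assumptions: both manifests downloaded above sit in the current directory, and `set_pod_cidr` and `install_calico` are hypothetical helper names. The `kubectl create -f` order (operator first, then custom resources) follows the usual Calico operator install procedure.

```shell
# Hypothetical helper: rewrite every "cidr:" line in a manifest to the given CIDR.
# Note: anything after "cidr:" on that line, including a trailing comment, is replaced.
set_pod_cidr() {
  file="$1"
  cidr="$2"
  sed "s#^\([[:space:]]*cidr:\).*#\1 ${cidr}#" "$file" > "${file}.tmp" \
    && mv "${file}.tmp" "$file"
}

# Hypothetical helper: install the operator first, then the custom resources.
install_calico() {
  kubectl create -f tigera-operator.yaml
  kubectl create -f custom-resources.yaml
}
```

For the cluster in this post, that would be `set_pod_cidr custom-resources.yaml 192.169.0.0/16` followed by `install_calico`.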

Troubleshooting

network is not ready: container runtime network not ready

How to inspect the problem

kubectl describe pod -n calico-system

More detail from the output:

"...NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized..."

Fix:

systemctl restart containerd

This article is from cnblogs (博客园), author: ヾ(o◕∀◕)ノヾ. When reposting, please credit the original link: https://www.cnblogs.com/Jupiter-blog/p/18658582
