Playing an H.264 stream with C# + FFmpeg + DirectX + DXVA2 hardware decoding

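This article assumes a fair amount of background knowledge, so the writing may feel a bit scattered. If something here makes you smile knowingly, get in touch right away and let's chat.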

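If you follow this article to build the player, you will end up with an efficient player with nearly zero CPU usage: the CPU only receives network data and uploads it to video memory, so its load is barely noticeable. The code and references are lean: there are no other managed or unmanaged DLL dependencies and no plugins to install, so your program runs fully portable. And if hardware decoding is unavailable, switching to software decoding happens automatically.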

First, prepare Visual Studio, MSYS2, the FFmpeg source code, and the DirectX 9 SDK. We build FFmpeg ourselves from modified source, and we trim it down when configuring the build.

Please use the FFmpeg 4.2.1 source so that you stay in sync with me and everything lines up; the download is ffmpeg-4.2.1.tar.gz.

Once MSYS2 is installed you don't need MinGW or anything else; only install make (see the image below; our toolchain is MSVC, not mingw-gcc).

A newer Visual Studio is better. Install the C++ and C# workloads (see the image below); you shouldn't need to enable anything else.

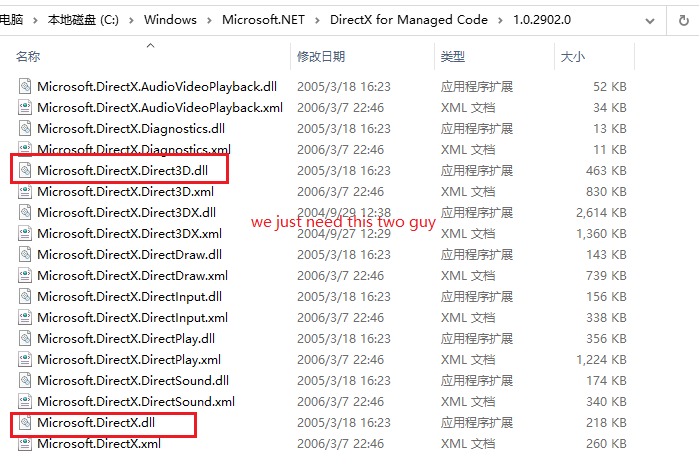

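Strictly speaking, the DirectX 9 SDK is not required (if you are an expert, you can emit `calli` directly with C#'s ILGenerator, or write unsafe code that calls through raw function pointers; at the end of the article I'll reveal how to call C++ and even COM components from C#). I used DirectX for Managed Code, where Microsoft has already wrapped the DirectX calls for us (see the image below).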

Step two is to modify and build the FFmpeg source. The change touches only a dozen or so lines in a single file, and it is purely additive.

The file to modify is libavutil/hwcontext_dxva2.c. I'll paste the modified part first and then explain it.

```c
/* New: create a D3D9 device bound to the caller's window so that the
 * decoded surfaces can be presented on screen. */
static int dxva2_device_create9_extend(AVHWDeviceContext *ctx, UINT adapter, HWND hWnd)
{
    DXVA2DevicePriv *priv = ctx->user_opaque;
    D3DPRESENT_PARAMETERS d3dpp = {0};
    D3DDISPLAYMODE d3ddm;
    D3DCAPS9 caps;
    DWORD behaviorFlags = D3DCREATE_MULTITHREADED | D3DCREATE_FPU_PRESERVE;
    HRESULT hr;
    pDirect3DCreate9 *createD3D = (pDirect3DCreate9 *)dlsym(priv->d3dlib, "Direct3DCreate9");
    if (!createD3D) {
        av_log(ctx, AV_LOG_ERROR, "Failed to locate Direct3DCreate9\n");
        return AVERROR_UNKNOWN;
    }

    priv->d3d9 = createD3D(D3D_SDK_VERSION);
    if (!priv->d3d9) {
        av_log(ctx, AV_LOG_ERROR, "Failed to create IDirect3D object\n");
        return AVERROR_UNKNOWN;
    }

    IDirect3D9_GetAdapterDisplayMode(priv->d3d9, adapter, &d3ddm);

    if (!hWnd)
        hWnd = GetDesktopWindow();

    d3dpp.BackBufferFormat = d3ddm.Format;
    d3dpp.Windowed         = TRUE;                  // windowed (not full-screen) presentation
    d3dpp.hDeviceWindow    = hWnd;                  // handle of the window to present into
    d3dpp.SwapEffect       = D3DSWAPEFFECT_DISCARD; // swap chain: discard the back buffer after use
    d3dpp.Flags            = D3DPRESENTFLAG_VIDEO;  // extra hint: the device displays video

    // Use hardware vertex processing if the adapter supports hardware T&L;
    // otherwise (or if the caps query fails) fall back to software processing,
    // since CreateDevice needs a vertex-processing flag.
    if (SUCCEEDED(IDirect3D9_GetDeviceCaps(priv->d3d9, adapter, D3DDEVTYPE_HAL, &caps)) &&
        (caps.DevCaps & D3DDEVCAPS_HWTRANSFORMANDLIGHT))
        behaviorFlags |= D3DCREATE_HARDWARE_VERTEXPROCESSING;
    else
        behaviorFlags |= D3DCREATE_SOFTWARE_VERTEXPROCESSING;

    hr = IDirect3D9_CreateDevice(priv->d3d9, adapter, D3DDEVTYPE_HAL, hWnd,
                                 behaviorFlags, &d3dpp, &priv->d3d9device);
    if (FAILED(hr)) {
        av_log(ctx, AV_LOG_ERROR, "Failed to create Direct3D device\n");
        return AVERROR_UNKNOWN;
    }

    return 0;
}

static int dxva2_device_create(AVHWDeviceContext *ctx, const char *device,
                               AVDictionary *opts, int flags)
{
    AVDXVA2DeviceContext *hwctx = ctx->hwctx;
    DXVA2DevicePriv *priv;
    pCreateDeviceManager9 *createDeviceManager = NULL;
    unsigned resetToken = 0;
    UINT adapter = D3DADAPTER_DEFAULT;
    HRESULT hr;
    int err;
    AVDictionaryEntry *t = NULL;   // added
    HWND hWnd = NULL;              // added

    if (device)
        adapter = atoi(device);

    priv = av_mallocz(sizeof(*priv));
    if (!priv)
        return AVERROR(ENOMEM);

    ctx->user_opaque = priv;
    ctx->free        = dxva2_device_free;

    priv->device_handle = INVALID_HANDLE_VALUE;

    priv->d3dlib = dlopen("d3d9.dll", 0);
    if (!priv->d3dlib) {
        av_log(ctx, AV_LOG_ERROR, "Failed to load D3D9 library\n");
        return AVERROR_UNKNOWN;
    }
    priv->dxva2lib = dlopen("dxva2.dll", 0);
    if (!priv->dxva2lib) {
        av_log(ctx, AV_LOG_ERROR, "Failed to load DXVA2 library\n");
        return AVERROR_UNKNOWN;
    }

    createDeviceManager = (pCreateDeviceManager9 *)dlsym(priv->dxva2lib,
                                                         "DXVA2CreateDirect3DDeviceManager9");
    if (!createDeviceManager) {
        av_log(ctx, AV_LOG_ERROR, "Failed to locate DXVA2CreateDirect3DDeviceManager9\n");
        return AVERROR_UNKNOWN;
    }

    // added: read the window handle from the "hWnd" option (decimal string)
    t = av_dict_get(opts, "hWnd", NULL, 0);
    if (t)
        hWnd = (HWND)(intptr_t)atoi(t->value);

    if (hWnd) {
        // added: window-bound device that can present decoded surfaces
        if ((err = dxva2_device_create9_extend(ctx, adapter, hWnd)) < 0)
            return err;
    } else {
        // original FFmpeg path, unchanged
        if (dxva2_device_create9ex(ctx, adapter) < 0) {
            // Retry with "classic" d3d9
            err = dxva2_device_create9(ctx, adapter);
            if (err < 0)
                return err;
        }
    }

    hr = createDeviceManager(&resetToken, &hwctx->devmgr);
    if (FAILED(hr)) {
        av_log(ctx, AV_LOG_ERROR, "Failed to create Direct3D device manager\n");
        return AVERROR_UNKNOWN;
    }

    hr = IDirect3DDeviceManager9_ResetDevice(hwctx->devmgr, priv->d3d9device, resetToken);
    if (FAILED(hr)) {
        av_log(ctx, AV_LOG_ERROR, "Failed to bind Direct3D device to device manager\n");
        return AVERROR_UNKNOWN;
    }

    hr = IDirect3DDeviceManager9_OpenDeviceHandle(hwctx->devmgr, &priv->device_handle);
    if (FAILED(hr)) {
        av_log(ctx, AV_LOG_ERROR, "Failed to open device handle\n");
        return AVERROR_UNKNOWN;
    }

    return 0;
}
```

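The function dxva2_device_create9_extend is my addition, and it is called at the appropriate point in dxva2_device_create (a function from FFmpeg's original flow; my change does not affect any existing functionality). In short, stock FFmpeg can already decode in hardware through DXVA2, but it cannot use the decoded surfaces for on-screen playback, because it creates the device without a target window or the related presentation parameters. As my implementation shows, once a window handle is passed in, the device is created in a completely different way. If someone uses our build without passing a window handle, the original creation path runs, so it is 100% backward compatible.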

The original file (a different version; for reference only)

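(PS: a word about another approach you'll find online, which I also used two years ago because I didn't have time to study the FFmpeg source in detail. It creates the device and surfaces outside FFmpeg and then finds a way to hand them to FFmpeg to replace its own. The advantage is that you don't have to modify and build FFmpeg yourself; the drawback is that you have to manage the device and surfaces yourself. As for binary compatibility, neither approach is great.)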

Once the code change is done, we build with MSYS2.

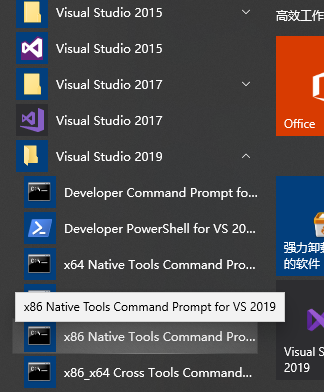

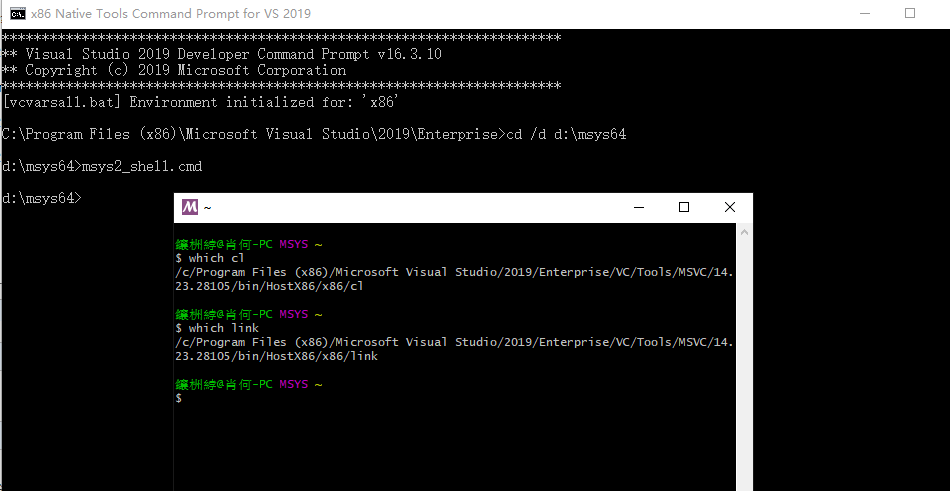

First, make MSVC the compiler. This is done simply by working from a Visual Studio command prompt, as shown below.

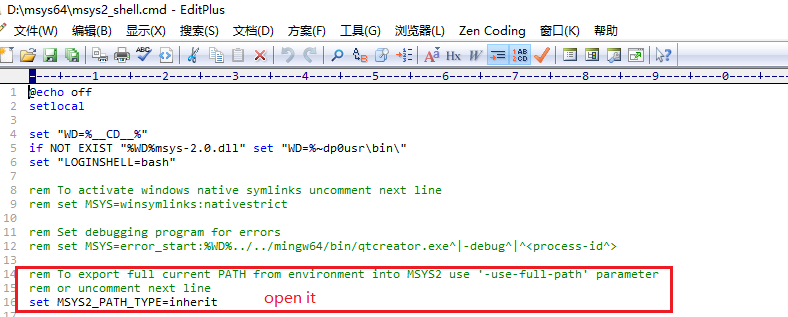

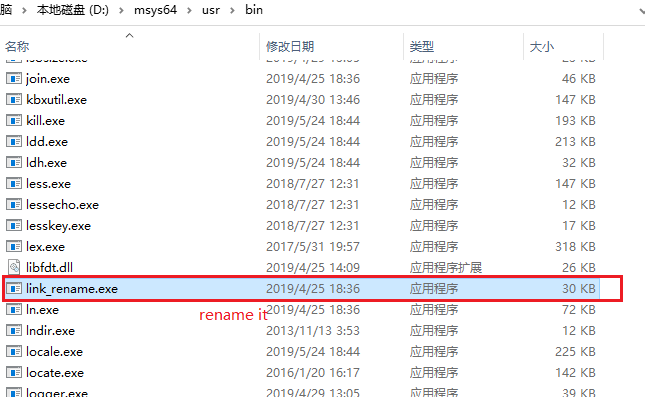

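Next, make MSYS2 inherit the environment variables (otherwise make cannot find cl/link), for example by uncommenting `set MSYS2_PATH_TYPE=inherit` in msys2_shell.cmd and launching MSYS2 from that Visual Studio prompt.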

Open MSYS and check that the variables are correct (for example, `which cl` should point to the MSVC compiler).

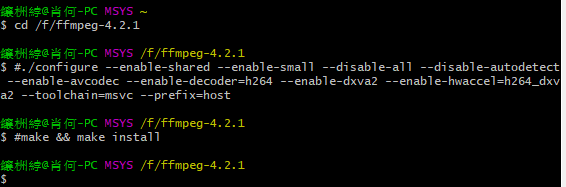

Build FFmpeg:

```sh
./configure --enable-shared --enable-small --disable-all --disable-autodetect --enable-avcodec --enable-decoder=h264 --enable-dxva2 --enable-hwaccel=h264_dxva2 --toolchain=msvc --prefix=host

make && make install
```

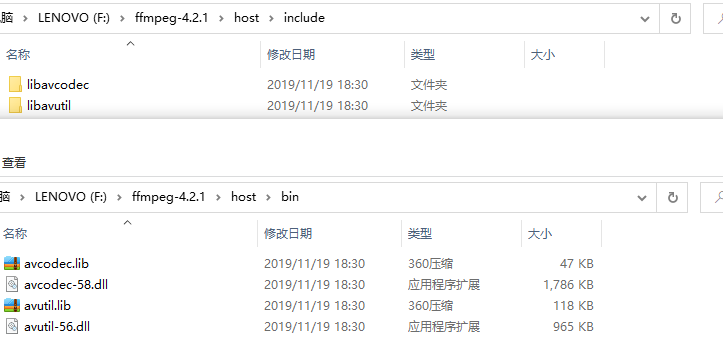

After the build, the headers and DLLs are in the host folder (the resulting DLLs are also clean: they do not depend on msvc**.dll).

Using our build output from C# requires P/Invoke and unsafe code.

Below is a utility class I wrote for FFmpeg; I'll explain it briefly afterwards.

```csharp
using System;
using System.Runtime.InteropServices;

namespace MultiPlayer
{
    public enum AVCodecID
    {
        AV_CODEC_ID_NONE,

        /* video codecs */
        AV_CODEC_ID_MPEG1VIDEO,
        AV_CODEC_ID_MPEG2VIDEO, ///< preferred ID for MPEG-1/2 video decoding
        AV_CODEC_ID_H261,
        AV_CODEC_ID_H263,
        AV_CODEC_ID_RV10,
        AV_CODEC_ID_RV20,
        AV_CODEC_ID_MJPEG,
        AV_CODEC_ID_MJPEGB,
        AV_CODEC_ID_LJPEG,
        AV_CODEC_ID_SP5X,
        AV_CODEC_ID_JPEGLS,
        AV_CODEC_ID_MPEG4,
        AV_CODEC_ID_RAWVIDEO,
        AV_CODEC_ID_MSMPEG4V1,
        AV_CODEC_ID_MSMPEG4V2,
        AV_CODEC_ID_MSMPEG4V3,
        AV_CODEC_ID_WMV1,
        AV_CODEC_ID_WMV2,
        AV_CODEC_ID_H263P,
        AV_CODEC_ID_H263I,
        AV_CODEC_ID_FLV1,
        AV_CODEC_ID_SVQ1,
        AV_CODEC_ID_SVQ3,
        AV_CODEC_ID_DVVIDEO,
        AV_CODEC_ID_HUFFYUV,
        AV_CODEC_ID_CYUV,
        AV_CODEC_ID_H264,
        AV_CODEC_ID_INDEO3,
        AV_CODEC_ID_VP3,
        AV_CODEC_ID_THEORA,
        AV_CODEC_ID_ASV1,
        AV_CODEC_ID_ASV2,
        AV_CODEC_ID_FFV1,
        AV_CODEC_ID_4XM,
        AV_CODEC_ID_VCR1,
        AV_CODEC_ID_CLJR,
        AV_CODEC_ID_MDEC,
        AV_CODEC_ID_ROQ,
        AV_CODEC_ID_INTERPLAY_VIDEO,
        AV_CODEC_ID_XAN_WC3,
        AV_CODEC_ID_XAN_WC4,
        AV_CODEC_ID_RPZA,
        AV_CODEC_ID_CINEPAK,
        AV_CODEC_ID_WS_VQA,
        AV_CODEC_ID_MSRLE,
        AV_CODEC_ID_MSVIDEO1,
        AV_CODEC_ID_IDCIN,
        AV_CODEC_ID_8BPS,
        AV_CODEC_ID_SMC,
        AV_CODEC_ID_FLIC,
        AV_CODEC_ID_TRUEMOTION1,
        AV_CODEC_ID_VMDVIDEO,
        AV_CODEC_ID_MSZH,
        AV_CODEC_ID_ZLIB,
        AV_CODEC_ID_QTRLE,
        AV_CODEC_ID_TSCC,
        AV_CODEC_ID_ULTI,
        AV_CODEC_ID_QDRAW,
        AV_CODEC_ID_VIXL,
        AV_CODEC_ID_QPEG,
        AV_CODEC_ID_PNG,
        AV_CODEC_ID_PPM,
        AV_CODEC_ID_PBM,
        AV_CODEC_ID_PGM,
        AV_CODEC_ID_PGMYUV,
        AV_CODEC_ID_PAM,
        AV_CODEC_ID_FFVHUFF,
        AV_CODEC_ID_RV30,
        AV_CODEC_ID_RV40,
        AV_CODEC_ID_VC1,
        AV_CODEC_ID_WMV3,
        AV_CODEC_ID_LOCO,
        AV_CODEC_ID_WNV1,
        AV_CODEC_ID_AASC,
        AV_CODEC_ID_INDEO2,
        AV_CODEC_ID_FRAPS,
        AV_CODEC_ID_TRUEMOTION2,
        AV_CODEC_ID_BMP,
        AV_CODEC_ID_CSCD,
        AV_CODEC_ID_MMVIDEO,
        AV_CODEC_ID_ZMBV,
        AV_CODEC_ID_AVS,
        AV_CODEC_ID_SMACKVIDEO,
        AV_CODEC_ID_NUV,
        AV_CODEC_ID_KMVC,
        AV_CODEC_ID_FLASHSV,
        AV_CODEC_ID_CAVS,
        AV_CODEC_ID_JPEG2000,
        AV_CODEC_ID_VMNC,
        AV_CODEC_ID_VP5,
        AV_CODEC_ID_VP6,
        AV_CODEC_ID_VP6F,
        AV_CODEC_ID_TARGA,
        AV_CODEC_ID_DSICINVIDEO,
        AV_CODEC_ID_TIERTEXSEQVIDEO,
        AV_CODEC_ID_TIFF,
        AV_CODEC_ID_GIF,
        AV_CODEC_ID_DXA,
        AV_CODEC_ID_DNXHD,
        AV_CODEC_ID_THP,
        AV_CODEC_ID_SGI,
        AV_CODEC_ID_C93,
        AV_CODEC_ID_BETHSOFTVID,
        AV_CODEC_ID_PTX,
        AV_CODEC_ID_TXD,
        AV_CODEC_ID_VP6A,
        AV_CODEC_ID_AMV,
        AV_CODEC_ID_VB,
        AV_CODEC_ID_PCX,
        AV_CODEC_ID_SUNRAST,
        AV_CODEC_ID_INDEO4,
        AV_CODEC_ID_INDEO5,
        AV_CODEC_ID_MIMIC,
        AV_CODEC_ID_RL2,
        AV_CODEC_ID_ESCAPE124,
        AV_CODEC_ID_DIRAC,
        AV_CODEC_ID_BFI,
        AV_CODEC_ID_CMV,
        AV_CODEC_ID_MOTIONPIXELS,
        AV_CODEC_ID_TGV,
        AV_CODEC_ID_TGQ,
        AV_CODEC_ID_TQI,
        AV_CODEC_ID_AURA,
        AV_CODEC_ID_AURA2,
        AV_CODEC_ID_V210X,
        AV_CODEC_ID_TMV,
        AV_CODEC_ID_V210,
        AV_CODEC_ID_DPX,
        AV_CODEC_ID_MAD,
        AV_CODEC_ID_FRWU,
        AV_CODEC_ID_FLASHSV2,
        AV_CODEC_ID_CDGRAPHICS,
        AV_CODEC_ID_R210,
        AV_CODEC_ID_ANM,
        AV_CODEC_ID_BINKVIDEO,
        AV_CODEC_ID_IFF_ILBM,
        //#define AV_CODEC_ID_IFF_BYTERUN1 AV_CODEC_ID_IFF_ILBM
        AV_CODEC_ID_KGV1,
        AV_CODEC_ID_YOP,
        AV_CODEC_ID_VP8,
        AV_CODEC_ID_PICTOR,
        AV_CODEC_ID_ANSI,
        AV_CODEC_ID_A64_MULTI,
        AV_CODEC_ID_A64_MULTI5,
        AV_CODEC_ID_R10K,
        AV_CODEC_ID_MXPEG,
        AV_CODEC_ID_LAGARITH,
        AV_CODEC_ID_PRORES,
        AV_CODEC_ID_JV,
        AV_CODEC_ID_DFA,
        AV_CODEC_ID_WMV3IMAGE,
        AV_CODEC_ID_VC1IMAGE,
        AV_CODEC_ID_UTVIDEO,
        AV_CODEC_ID_BMV_VIDEO,
        AV_CODEC_ID_VBLE,
        AV_CODEC_ID_DXTORY,
        AV_CODEC_ID_V410,
        AV_CODEC_ID_XWD,
        AV_CODEC_ID_CDXL,
        AV_CODEC_ID_XBM,
        AV_CODEC_ID_ZEROCODEC,
        AV_CODEC_ID_MSS1,
        AV_CODEC_ID_MSA1,
        AV_CODEC_ID_TSCC2,
        AV_CODEC_ID_MTS2,
        AV_CODEC_ID_CLLC,
        AV_CODEC_ID_MSS2,
        AV_CODEC_ID_VP9,
        AV_CODEC_ID_AIC,
        AV_CODEC_ID_ESCAPE130,
        AV_CODEC_ID_G2M,
        AV_CODEC_ID_WEBP,
        AV_CODEC_ID_HNM4_VIDEO,
        AV_CODEC_ID_HEVC,
        //#define AV_CODEC_ID_H265 AV_CODEC_ID_HEVC
        AV_CODEC_ID_FIC,
        AV_CODEC_ID_ALIAS_PIX,
        AV_CODEC_ID_BRENDER_PIX,
        AV_CODEC_ID_PAF_VIDEO,
        AV_CODEC_ID_EXR,
        AV_CODEC_ID_VP7,
        AV_CODEC_ID_SANM,
        AV_CODEC_ID_SGIRLE,
        AV_CODEC_ID_MVC1,
        AV_CODEC_ID_MVC2,
        AV_CODEC_ID_HQX,
        AV_CODEC_ID_TDSC,
        AV_CODEC_ID_HQ_HQA,
        AV_CODEC_ID_HAP,
        AV_CODEC_ID_DDS,
        AV_CODEC_ID_DXV,
        AV_CODEC_ID_SCREENPRESSO,
        AV_CODEC_ID_RSCC,
        AV_CODEC_ID_AVS2,

        AV_CODEC_ID_Y41P = 0x8000,
        AV_CODEC_ID_AVRP,
        AV_CODEC_ID_012V,
        AV_CODEC_ID_AVUI,
        AV_CODEC_ID_AYUV,
        AV_CODEC_ID_TARGA_Y216,
        AV_CODEC_ID_V308,
        AV_CODEC_ID_V408,
        AV_CODEC_ID_YUV4,
        AV_CODEC_ID_AVRN,
        AV_CODEC_ID_CPIA,
        AV_CODEC_ID_XFACE,
        AV_CODEC_ID_SNOW,
        AV_CODEC_ID_SMVJPEG,
        AV_CODEC_ID_APNG,
        AV_CODEC_ID_DAALA,
        AV_CODEC_ID_CFHD,
        AV_CODEC_ID_TRUEMOTION2RT,
        AV_CODEC_ID_M101,
        AV_CODEC_ID_MAGICYUV,
        AV_CODEC_ID_SHEERVIDEO,
        AV_CODEC_ID_YLC,
        AV_CODEC_ID_PSD,
        AV_CODEC_ID_PIXLET,
        AV_CODEC_ID_SPEEDHQ,
        AV_CODEC_ID_FMVC,
        AV_CODEC_ID_SCPR,
        AV_CODEC_ID_CLEARVIDEO,
        AV_CODEC_ID_XPM,
        AV_CODEC_ID_AV1,
        AV_CODEC_ID_BITPACKED,
        AV_CODEC_ID_MSCC,
        AV_CODEC_ID_SRGC,
        AV_CODEC_ID_SVG,
        AV_CODEC_ID_GDV,
        AV_CODEC_ID_FITS,
        AV_CODEC_ID_IMM4,
        AV_CODEC_ID_PROSUMER,
        AV_CODEC_ID_MWSC,
        AV_CODEC_ID_WCMV,
        AV_CODEC_ID_RASC,
        AV_CODEC_ID_HYMT,
        AV_CODEC_ID_ARBC,
        AV_CODEC_ID_AGM,
        AV_CODEC_ID_LSCR,
        AV_CODEC_ID_VP4,

        /* various PCM "codecs" */
        AV_CODEC_ID_FIRST_AUDIO = 0x10000, ///< A dummy id pointing at the start of audio codecs
        AV_CODEC_ID_PCM_S16LE = 0x10000,
        AV_CODEC_ID_PCM_S16BE,
        AV_CODEC_ID_PCM_U16LE,
        AV_CODEC_ID_PCM_U16BE,
        AV_CODEC_ID_PCM_S8,
        AV_CODEC_ID_PCM_U8,
        AV_CODEC_ID_PCM_MULAW,
        AV_CODEC_ID_PCM_ALAW,
        AV_CODEC_ID_PCM_S32LE,
        AV_CODEC_ID_PCM_S32BE,
        AV_CODEC_ID_PCM_U32LE,
        AV_CODEC_ID_PCM_U32BE,
        AV_CODEC_ID_PCM_S24LE,
        AV_CODEC_ID_PCM_S24BE,
        AV_CODEC_ID_PCM_U24LE,
        AV_CODEC_ID_PCM_U24BE,
        AV_CODEC_ID_PCM_S24DAUD,
        AV_CODEC_ID_PCM_ZORK,
        AV_CODEC_ID_PCM_S16LE_PLANAR,
        AV_CODEC_ID_PCM_DVD,
        AV_CODEC_ID_PCM_F32BE,
        AV_CODEC_ID_PCM_F32LE,
        AV_CODEC_ID_PCM_F64BE,
        AV_CODEC_ID_PCM_F64LE,
        AV_CODEC_ID_PCM_BLURAY,
        AV_CODEC_ID_PCM_LXF,
        AV_CODEC_ID_S302M,
        AV_CODEC_ID_PCM_S8_PLANAR,
        AV_CODEC_ID_PCM_S24LE_PLANAR,
        AV_CODEC_ID_PCM_S32LE_PLANAR,
        AV_CODEC_ID_PCM_S16BE_PLANAR,

        AV_CODEC_ID_PCM_S64LE = 0x10800,
        AV_CODEC_ID_PCM_S64BE,
        AV_CODEC_ID_PCM_F16LE,
        AV_CODEC_ID_PCM_F24LE,
        AV_CODEC_ID_PCM_VIDC,

        /* various ADPCM codecs */
        AV_CODEC_ID_ADPCM_IMA_QT = 0x11000,
        AV_CODEC_ID_ADPCM_IMA_WAV,
        AV_CODEC_ID_ADPCM_IMA_DK3,
        AV_CODEC_ID_ADPCM_IMA_DK4,
        AV_CODEC_ID_ADPCM_IMA_WS,
        AV_CODEC_ID_ADPCM_IMA_SMJPEG,
        AV_CODEC_ID_ADPCM_MS,
        AV_CODEC_ID_ADPCM_4XM,
        AV_CODEC_ID_ADPCM_XA,
        AV_CODEC_ID_ADPCM_ADX,
        AV_CODEC_ID_ADPCM_EA,
        AV_CODEC_ID_ADPCM_G726,
        AV_CODEC_ID_ADPCM_CT,
        AV_CODEC_ID_ADPCM_SWF,
        AV_CODEC_ID_ADPCM_YAMAHA,
        AV_CODEC_ID_ADPCM_SBPRO_4,
        AV_CODEC_ID_ADPCM_SBPRO_3,
        AV_CODEC_ID_ADPCM_SBPRO_2,
        AV_CODEC_ID_ADPCM_THP,
        AV_CODEC_ID_ADPCM_IMA_AMV,
        AV_CODEC_ID_ADPCM_EA_R1,
        AV_CODEC_ID_ADPCM_EA_R3,
        AV_CODEC_ID_ADPCM_EA_R2,
        AV_CODEC_ID_ADPCM_IMA_EA_SEAD,
        AV_CODEC_ID_ADPCM_IMA_EA_EACS,
        AV_CODEC_ID_ADPCM_EA_XAS,
        AV_CODEC_ID_ADPCM_EA_MAXIS_XA,
        AV_CODEC_ID_ADPCM_IMA_ISS,
        AV_CODEC_ID_ADPCM_G722,
        AV_CODEC_ID_ADPCM_IMA_APC,
        AV_CODEC_ID_ADPCM_VIMA,

        AV_CODEC_ID_ADPCM_AFC = 0x11800,
        AV_CODEC_ID_ADPCM_IMA_OKI,
        AV_CODEC_ID_ADPCM_DTK,
        AV_CODEC_ID_ADPCM_IMA_RAD,
        AV_CODEC_ID_ADPCM_G726LE,
        AV_CODEC_ID_ADPCM_THP_LE,
        AV_CODEC_ID_ADPCM_PSX,
        AV_CODEC_ID_ADPCM_AICA,
        AV_CODEC_ID_ADPCM_IMA_DAT4,
        AV_CODEC_ID_ADPCM_MTAF,
        AV_CODEC_ID_ADPCM_AGM,

        /* AMR */
        AV_CODEC_ID_AMR_NB = 0x12000,
        AV_CODEC_ID_AMR_WB,

        /* RealAudio codecs*/
        AV_CODEC_ID_RA_144 = 0x13000,
        AV_CODEC_ID_RA_288,

        /* various DPCM codecs */
        AV_CODEC_ID_ROQ_DPCM = 0x14000,
        AV_CODEC_ID_INTERPLAY_DPCM,
        AV_CODEC_ID_XAN_DPCM,
        AV_CODEC_ID_SOL_DPCM,

        AV_CODEC_ID_SDX2_DPCM = 0x14800,
        AV_CODEC_ID_GREMLIN_DPCM,

        /* audio codecs */
        AV_CODEC_ID_MP2 = 0x15000,
        AV_CODEC_ID_MP3, ///< preferred ID for decoding MPEG audio layer 1, 2 or 3
        AV_CODEC_ID_AAC,
        AV_CODEC_ID_AC3,
        AV_CODEC_ID_DTS,
        AV_CODEC_ID_VORBIS,
        AV_CODEC_ID_DVAUDIO,
        AV_CODEC_ID_WMAV1,
        AV_CODEC_ID_WMAV2,
        AV_CODEC_ID_MACE3,
        AV_CODEC_ID_MACE6,
        AV_CODEC_ID_VMDAUDIO,
        AV_CODEC_ID_FLAC,
        AV_CODEC_ID_MP3ADU,
        AV_CODEC_ID_MP3ON4,
        AV_CODEC_ID_SHORTEN,
        AV_CODEC_ID_ALAC,
        AV_CODEC_ID_WESTWOOD_SND1,
        AV_CODEC_ID_GSM, ///< as in Berlin toast format
        AV_CODEC_ID_QDM2,
        AV_CODEC_ID_COOK,
        AV_CODEC_ID_TRUESPEECH,
        AV_CODEC_ID_TTA,
        AV_CODEC_ID_SMACKAUDIO,
        AV_CODEC_ID_QCELP,
        AV_CODEC_ID_WAVPACK,
        AV_CODEC_ID_DSICINAUDIO,
        AV_CODEC_ID_IMC,
        AV_CODEC_ID_MUSEPACK7,
        AV_CODEC_ID_MLP,
        AV_CODEC_ID_GSM_MS, /* as found in WAV */
        AV_CODEC_ID_ATRAC3,
        AV_CODEC_ID_APE,
        AV_CODEC_ID_NELLYMOSER,
        AV_CODEC_ID_MUSEPACK8,
        AV_CODEC_ID_SPEEX,
        AV_CODEC_ID_WMAVOICE,
        AV_CODEC_ID_WMAPRO,
        AV_CODEC_ID_WMALOSSLESS,
        AV_CODEC_ID_ATRAC3P,
        AV_CODEC_ID_EAC3,
        AV_CODEC_ID_SIPR,
        AV_CODEC_ID_MP1,
        AV_CODEC_ID_TWINVQ,
        AV_CODEC_ID_TRUEHD,
        AV_CODEC_ID_MP4ALS,
        AV_CODEC_ID_ATRAC1,
        AV_CODEC_ID_BINKAUDIO_RDFT,
        AV_CODEC_ID_BINKAUDIO_DCT,
        AV_CODEC_ID_AAC_LATM,
        AV_CODEC_ID_QDMC,
        AV_CODEC_ID_CELT,
        AV_CODEC_ID_G723_1,
        AV_CODEC_ID_G729,
        AV_CODEC_ID_8SVX_EXP,
        AV_CODEC_ID_8SVX_FIB,
        AV_CODEC_ID_BMV_AUDIO,
        AV_CODEC_ID_RALF,
        AV_CODEC_ID_IAC,
        AV_CODEC_ID_ILBC,
        AV_CODEC_ID_OPUS,
        AV_CODEC_ID_COMFORT_NOISE,
        AV_CODEC_ID_TAK,
        AV_CODEC_ID_METASOUND,
        AV_CODEC_ID_PAF_AUDIO,
        AV_CODEC_ID_ON2AVC,
        AV_CODEC_ID_DSS_SP,
        AV_CODEC_ID_CODEC2,

        AV_CODEC_ID_FFWAVESYNTH = 0x15800,
        AV_CODEC_ID_SONIC,
        AV_CODEC_ID_SONIC_LS,
        AV_CODEC_ID_EVRC,
        AV_CODEC_ID_SMV,
        AV_CODEC_ID_DSD_LSBF,
        AV_CODEC_ID_DSD_MSBF,
        AV_CODEC_ID_DSD_LSBF_PLANAR,
        AV_CODEC_ID_DSD_MSBF_PLANAR,
        AV_CODEC_ID_4GV,
        AV_CODEC_ID_INTERPLAY_ACM,
        AV_CODEC_ID_XMA1,
        AV_CODEC_ID_XMA2,
        AV_CODEC_ID_DST,
        AV_CODEC_ID_ATRAC3AL,
        AV_CODEC_ID_ATRAC3PAL,
        AV_CODEC_ID_DOLBY_E,
        AV_CODEC_ID_APTX,
        AV_CODEC_ID_APTX_HD,
        AV_CODEC_ID_SBC,
        AV_CODEC_ID_ATRAC9,
        AV_CODEC_ID_HCOM,

        /* subtitle codecs */
        AV_CODEC_ID_FIRST_SUBTITLE = 0x17000, ///< A dummy ID pointing at the start of subtitle codecs.
        AV_CODEC_ID_DVD_SUBTITLE = 0x17000,
        AV_CODEC_ID_DVB_SUBTITLE,
        AV_CODEC_ID_TEXT, ///< raw UTF-8 text
        AV_CODEC_ID_XSUB,
        AV_CODEC_ID_SSA,
        AV_CODEC_ID_MOV_TEXT,
        AV_CODEC_ID_HDMV_PGS_SUBTITLE,
        AV_CODEC_ID_DVB_TELETEXT,
        AV_CODEC_ID_SRT,

        AV_CODEC_ID_MICRODVD = 0x17800,
        AV_CODEC_ID_EIA_608,
        AV_CODEC_ID_JACOSUB,
        AV_CODEC_ID_SAMI,
        AV_CODEC_ID_REALTEXT,
        AV_CODEC_ID_STL,
        AV_CODEC_ID_SUBVIEWER1,
        AV_CODEC_ID_SUBVIEWER,
        AV_CODEC_ID_SUBRIP,
        AV_CODEC_ID_WEBVTT,
        AV_CODEC_ID_MPL2,
        AV_CODEC_ID_VPLAYER,
        AV_CODEC_ID_PJS,
        AV_CODEC_ID_ASS,
        AV_CODEC_ID_HDMV_TEXT_SUBTITLE,
        AV_CODEC_ID_TTML,
        AV_CODEC_ID_ARIB_CAPTION,

        /* other specific kind of codecs (generally used for attachments) */
        AV_CODEC_ID_FIRST_UNKNOWN = 0x18000, ///< A dummy ID pointing at the start of various fake codecs.
        AV_CODEC_ID_TTF = 0x18000,

        AV_CODEC_ID_SCTE_35, ///< Contain timestamp estimated through PCR of program stream.
        AV_CODEC_ID_BINTEXT = 0x18800,
        AV_CODEC_ID_XBIN,
        AV_CODEC_ID_IDF,
        AV_CODEC_ID_OTF,
        AV_CODEC_ID_SMPTE_KLV,
        AV_CODEC_ID_DVD_NAV,
        AV_CODEC_ID_TIMED_ID3,
        AV_CODEC_ID_BIN_DATA,

        AV_CODEC_ID_PROBE = 0x19000, ///< codec_id is not known (like AV_CODEC_ID_NONE) but lavf should attempt to identify it

        AV_CODEC_ID_MPEG2TS = 0x20000, /**< _FAKE_ codec to indicate a raw MPEG-2 TS
                                        * stream (only used by libavformat) */
        AV_CODEC_ID_MPEG4SYSTEMS = 0x20001, /**< _FAKE_ codec to indicate a MPEG-4 Systems
                                             * stream (only used by libavformat) */
        AV_CODEC_ID_FFMETADATA = 0x21000, ///< Dummy codec for streams containing only metadata information.
        AV_CODEC_ID_WRAPPED_AVFRAME = 0x21001, ///< Passthrough codec, AVFrames wrapped in AVPacket
    }

    public enum AVHWDeviceType
    {
        AV_HWDEVICE_TYPE_NONE,
        AV_HWDEVICE_TYPE_VDPAU,
        AV_HWDEVICE_TYPE_CUDA,
        AV_HWDEVICE_TYPE_VAAPI,
        AV_HWDEVICE_TYPE_DXVA2,
        AV_HWDEVICE_TYPE_QSV,
        AV_HWDEVICE_TYPE_VIDEOTOOLBOX,
        AV_HWDEVICE_TYPE_D3D11VA,
        AV_HWDEVICE_TYPE_DRM,
        AV_HWDEVICE_TYPE_OPENCL,
        AV_HWDEVICE_TYPE_MEDIACODEC,
    }

    public enum AVPixelFormat
    {
        AV_PIX_FMT_NONE = -1,
        AV_PIX_FMT_YUV420P, ///< planar YUV 4:2:0, 12bpp, (1 Cr & Cb sample per 2x2 Y samples)
        AV_PIX_FMT_YUYV422, ///< packed YUV 4:2:2, 16bpp, Y0 Cb Y1 Cr
        AV_PIX_FMT_RGB24, ///< packed RGB 8:8:8, 24bpp, RGBRGB...
        AV_PIX_FMT_BGR24, ///< packed RGB 8:8:8, 24bpp, BGRBGR...
        AV_PIX_FMT_YUV422P, ///< planar YUV 4:2:2, 16bpp, (1 Cr & Cb sample per 2x1 Y samples)
        AV_PIX_FMT_YUV444P, ///< planar YUV 4:4:4, 24bpp, (1 Cr & Cb sample per 1x1 Y samples)
        AV_PIX_FMT_YUV410P, ///< planar YUV 4:1:0, 9bpp, (1 Cr & Cb sample per 4x4 Y samples)
        AV_PIX_FMT_YUV411P, ///< planar YUV 4:1:1, 12bpp, (1 Cr & Cb sample per 4x1 Y samples)
        AV_PIX_FMT_GRAY8, ///< Y , 8bpp
        AV_PIX_FMT_MONOWHITE, ///< Y , 1bpp, 0 is white, 1 is black, in each byte pixels are ordered from the msb to the lsb
        AV_PIX_FMT_MONOBLACK, ///< Y , 1bpp, 0 is black, 1 is white, in each byte pixels are ordered from the msb to the lsb
        AV_PIX_FMT_PAL8, ///< 8 bits with AV_PIX_FMT_RGB32 palette
        AV_PIX_FMT_YUVJ420P, ///< planar YUV 4:2:0, 12bpp, full scale (JPEG), deprecated in favor of AV_PIX_FMT_YUV420P and setting color_range
        AV_PIX_FMT_YUVJ422P, ///< planar YUV 4:2:2, 16bpp, full scale (JPEG), deprecated in favor of AV_PIX_FMT_YUV422P and setting color_range
        AV_PIX_FMT_YUVJ444P, ///< planar YUV 4:4:4, 24bpp, full scale (JPEG), deprecated in favor of AV_PIX_FMT_YUV444P and setting color_range
        AV_PIX_FMT_UYVY422, ///< packed YUV 4:2:2, 16bpp, Cb Y0 Cr Y1
        AV_PIX_FMT_UYYVYY411, ///< packed YUV 4:1:1, 12bpp, Cb Y0 Y1 Cr Y2 Y3
        AV_PIX_FMT_BGR8, ///< packed RGB 3:3:2, 8bpp, (msb)2B 3G 3R(lsb)
        AV_PIX_FMT_BGR4, ///< packed RGB 1:2:1 bitstream, 4bpp, (msb)1B 2G 1R(lsb), a byte contains two pixels, the first pixel in the byte is the one composed by the 4 msb bits
        AV_PIX_FMT_BGR4_BYTE, ///< packed RGB 1:2:1, 8bpp, (msb)1B 2G 1R(lsb)
        AV_PIX_FMT_RGB8, ///< packed RGB 3:3:2, 8bpp, (msb)2R 3G 3B(lsb)
        AV_PIX_FMT_RGB4, ///< packed RGB 1:2:1 bitstream, 4bpp, (msb)1R 2G 1B(lsb), a byte contains two pixels, the first pixel in the byte is the one composed by the 4 msb bits
        AV_PIX_FMT_RGB4_BYTE, ///< packed RGB 1:2:1, 8bpp, (msb)1R 2G 1B(lsb)
        AV_PIX_FMT_NV12, ///< planar YUV 4:2:0, 12bpp, 1 plane for Y and 1 plane for the UV components, which are interleaved (first byte U and the following byte V)
        AV_PIX_FMT_NV21, ///< as above, but U and V bytes are swapped

        AV_PIX_FMT_ARGB, ///< packed ARGB 8:8:8:8, 32bpp, ARGBARGB...
        AV_PIX_FMT_RGBA, ///< packed RGBA 8:8:8:8, 32bpp, RGBARGBA...
        AV_PIX_FMT_ABGR, ///< packed ABGR 8:8:8:8, 32bpp, ABGRABGR...
        AV_PIX_FMT_BGRA, ///< packed BGRA 8:8:8:8, 32bpp, BGRABGRA...

        AV_PIX_FMT_GRAY16BE, ///< Y , 16bpp, big-endian
        AV_PIX_FMT_GRAY16LE, ///< Y , 16bpp, little-endian
        AV_PIX_FMT_YUV440P, ///< planar YUV 4:4:0 (1 Cr & Cb sample per 1x2 Y samples)
        AV_PIX_FMT_YUVJ440P, ///< planar YUV 4:4:0 full scale (JPEG), deprecated in favor of AV_PIX_FMT_YUV440P and setting color_range
        AV_PIX_FMT_YUVA420P, ///< planar YUV 4:2:0, 20bpp, (1 Cr & Cb sample per 2x2 Y & A samples)
        AV_PIX_FMT_RGB48BE, ///< packed RGB 16:16:16, 48bpp, 16R, 16G, 16B, the 2-byte value for each R/G/B component is stored as big-endian
        AV_PIX_FMT_RGB48LE, ///< packed RGB 16:16:16, 48bpp, 16R, 16G, 16B, the 2-byte value for each R/G/B component is stored as little-endian

        AV_PIX_FMT_RGB565BE, ///< packed RGB 5:6:5, 16bpp, (msb) 5R 6G 5B(lsb), big-endian
        AV_PIX_FMT_RGB565LE, ///< packed RGB 5:6:5, 16bpp, (msb) 5R 6G 5B(lsb), little-endian
        AV_PIX_FMT_RGB555BE, ///< packed RGB 5:5:5, 16bpp, (msb)1X 5R 5G 5B(lsb), big-endian , X=unused/undefined
        AV_PIX_FMT_RGB555LE, ///< packed RGB 5:5:5, 16bpp, (msb)1X 5R 5G 5B(lsb), little-endian, X=unused/undefined

        AV_PIX_FMT_BGR565BE, ///< packed BGR 5:6:5, 16bpp, (msb) 5B 6G 5R(lsb), big-endian
        AV_PIX_FMT_BGR565LE, ///< packed BGR 5:6:5, 16bpp, (msb) 5B 6G 5R(lsb), little-endian
        AV_PIX_FMT_BGR555BE, ///< packed BGR 5:5:5, 16bpp, (msb)1X 5B 5G 5R(lsb), big-endian , X=unused/undefined
        AV_PIX_FMT_BGR555LE, ///< packed BGR 5:5:5, 16bpp, (msb)1X 5B 5G 5R(lsb), little-endian, X=unused/undefined

        /** @name Deprecated pixel formats */
        /**@{*/
        AV_PIX_FMT_VAAPI_MOCO, ///< HW acceleration through VA API at motion compensation entry-point, Picture.data[3] contains a vaapi_render_state struct which contains macroblocks as well as various fields extracted from headers
        AV_PIX_FMT_VAAPI_IDCT, ///< HW acceleration through VA API at IDCT entry-point, Picture.data[3] contains a vaapi_render_state struct which contains fields extracted from headers
        AV_PIX_FMT_VAAPI_VLD, ///< HW decoding through VA API, Picture.data[3] contains a VASurfaceID
        /**@}*/
        AV_PIX_FMT_VAAPI = AV_PIX_FMT_VAAPI_VLD,

        AV_PIX_FMT_YUV420P16LE, ///< planar YUV 4:2:0, 24bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian
        AV_PIX_FMT_YUV420P16BE, ///< planar YUV 4:2:0, 24bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian
        AV_PIX_FMT_YUV422P16LE, ///< planar YUV 4:2:2, 32bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_YUV422P16BE, ///< planar YUV 4:2:2, 32bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian
        AV_PIX_FMT_YUV444P16LE, ///< planar YUV 4:4:4, 48bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian
        AV_PIX_FMT_YUV444P16BE, ///< planar YUV 4:4:4, 48bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian
        AV_PIX_FMT_DXVA2_VLD, ///< HW decoding through DXVA2, Picture.data[3] contains a LPDIRECT3DSURFACE9 pointer

        AV_PIX_FMT_RGB444LE, ///< packed RGB 4:4:4, 16bpp, (msb)4X 4R 4G 4B(lsb), little-endian, X=unused/undefined
        AV_PIX_FMT_RGB444BE, ///< packed RGB 4:4:4, 16bpp, (msb)4X 4R 4G 4B(lsb), big-endian, X=unused/undefined
        AV_PIX_FMT_BGR444LE, ///< packed BGR 4:4:4, 16bpp, (msb)4X 4B 4G 4R(lsb), little-endian, X=unused/undefined
        AV_PIX_FMT_BGR444BE, ///< packed BGR 4:4:4, 16bpp, (msb)4X 4B 4G 4R(lsb), big-endian, X=unused/undefined
        AV_PIX_FMT_YA8, ///< 8 bits gray, 8 bits alpha

        AV_PIX_FMT_Y400A = AV_PIX_FMT_YA8, ///< alias for AV_PIX_FMT_YA8
        AV_PIX_FMT_GRAY8A = AV_PIX_FMT_YA8, ///< alias for AV_PIX_FMT_YA8

        AV_PIX_FMT_BGR48BE, ///< packed RGB 16:16:16, 48bpp, 16B, 16G, 16R, the 2-byte value for each R/G/B component is stored as big-endian
        AV_PIX_FMT_BGR48LE, ///< packed RGB 16:16:16, 48bpp, 16B, 16G, 16R, the 2-byte value for each R/G/B component is stored as little-endian

        /**
         * The following 12 formats have the disadvantage of needing 1 format for each bit depth.
         * Notice that each 9/10 bits sample is stored in 16 bits with extra padding.
         * If you want to support multiple bit depths, then using AV_PIX_FMT_YUV420P16* with the bpp stored separately is better.
         */
        AV_PIX_FMT_YUV420P9BE, ///< planar YUV 4:2:0, 13.5bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian
        AV_PIX_FMT_YUV420P9LE, ///< planar YUV 4:2:0, 13.5bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian
        AV_PIX_FMT_YUV420P10BE,///< planar YUV 4:2:0, 15bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian
        AV_PIX_FMT_YUV420P10LE,///< planar YUV 4:2:0, 15bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian
        AV_PIX_FMT_YUV422P10BE,///< planar YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian
        AV_PIX_FMT_YUV422P10LE,///< planar YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_YUV444P9BE, ///< planar YUV 4:4:4, 27bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian
        AV_PIX_FMT_YUV444P9LE, ///< planar YUV 4:4:4, 27bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian
        AV_PIX_FMT_YUV444P10BE,///< planar YUV 4:4:4, 30bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian
        AV_PIX_FMT_YUV444P10LE,///< planar YUV 4:4:4, 30bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian
        AV_PIX_FMT_YUV422P9BE, ///< planar YUV 4:2:2, 18bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian
        AV_PIX_FMT_YUV422P9LE, ///< planar YUV 4:2:2, 18bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_GBRP, ///< planar GBR 4:4:4 24bpp
        AV_PIX_FMT_GBR24P = AV_PIX_FMT_GBRP, // alias for #AV_PIX_FMT_GBRP
        AV_PIX_FMT_GBRP9BE, ///< planar GBR 4:4:4 27bpp, big-endian
        AV_PIX_FMT_GBRP9LE, ///< planar GBR 4:4:4 27bpp, little-endian
        AV_PIX_FMT_GBRP10BE, ///< planar GBR 4:4:4 30bpp, big-endian
        AV_PIX_FMT_GBRP10LE, ///< planar GBR 4:4:4 30bpp, little-endian
        AV_PIX_FMT_GBRP16BE, ///< planar GBR 4:4:4 48bpp, big-endian
        AV_PIX_FMT_GBRP16LE, ///< planar GBR 4:4:4 48bpp, little-endian
        AV_PIX_FMT_YUVA422P, ///< planar YUV 4:2:2 24bpp, (1 Cr & Cb sample per 2x1 Y & A samples)
        AV_PIX_FMT_YUVA444P, ///< planar YUV 4:4:4 32bpp, (1 Cr & Cb sample per 1x1 Y & A samples)
        AV_PIX_FMT_YUVA420P9BE, ///< planar YUV 4:2:0 22.5bpp, (1 Cr & Cb sample per 2x2 Y & A samples), big-endian
        AV_PIX_FMT_YUVA420P9LE, ///< planar YUV 4:2:0 22.5bpp, (1 Cr & Cb sample per 2x2 Y & A samples), little-endian
        AV_PIX_FMT_YUVA422P9BE, ///< planar YUV 4:2:2 27bpp, (1 Cr & Cb sample per 2x1 Y & A samples), big-endian
        AV_PIX_FMT_YUVA422P9LE, ///< planar YUV 4:2:2 27bpp, (1 Cr & Cb sample per 2x1 Y & A samples), little-endian
        AV_PIX_FMT_YUVA444P9BE, ///< planar YUV 4:4:4 36bpp, (1 Cr & Cb sample per 1x1 Y & A samples), big-endian
        AV_PIX_FMT_YUVA444P9LE, ///< planar YUV 4:4:4 36bpp, (1 Cr & Cb sample per 1x1 Y & A samples), little-endian
        AV_PIX_FMT_YUVA420P10BE, ///< planar YUV 4:2:0 25bpp, (1 Cr & Cb sample per 2x2 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA420P10LE, ///< planar YUV 4:2:0 25bpp, (1 Cr & Cb sample per 2x2 Y & A samples, little-endian)
        AV_PIX_FMT_YUVA422P10BE, ///< planar YUV 4:2:2 30bpp, (1 Cr & Cb sample per 2x1 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA422P10LE, ///< planar YUV 4:2:2 30bpp, (1 Cr & Cb sample per 2x1 Y & A samples, little-endian)
        AV_PIX_FMT_YUVA444P10BE, ///< planar YUV 4:4:4 40bpp, (1 Cr & Cb sample per 1x1 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA444P10LE, ///< planar YUV 4:4:4 40bpp, (1 Cr & Cb sample per 1x1 Y & A samples, little-endian)
        AV_PIX_FMT_YUVA420P16BE, ///< planar YUV 4:2:0 40bpp, (1 Cr & Cb sample per 2x2 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA420P16LE, ///< planar YUV 4:2:0 40bpp, (1 Cr & Cb sample per 2x2 Y & A samples, little-endian)
        AV_PIX_FMT_YUVA422P16BE, ///< planar YUV 4:2:2 48bpp, (1 Cr & Cb sample per 2x1 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA422P16LE, ///< planar YUV 4:2:2 48bpp, (1 Cr & Cb sample per 2x1 Y & A samples, little-endian)
        AV_PIX_FMT_YUVA444P16BE, ///< planar YUV 4:4:4 64bpp, (1 Cr & Cb sample per 1x1 Y & A samples, big-endian)
        AV_PIX_FMT_YUVA444P16LE, ///< planar YUV 4:4:4 64bpp, (1 Cr & Cb sample per 1x1 Y & A samples, little-endian)

        AV_PIX_FMT_VDPAU, ///< HW acceleration through VDPAU, Picture.data[3] contains a VdpVideoSurface

        AV_PIX_FMT_XYZ12LE, ///< packed XYZ 4:4:4, 36 bpp, (msb) 12X, 12Y, 12Z (lsb), the 2-byte value for each X/Y/Z is stored as little-endian, the 4 lower bits are set to 0
        AV_PIX_FMT_XYZ12BE, ///< packed XYZ 4:4:4, 36 bpp, (msb) 12X, 12Y, 12Z (lsb), the 2-byte value for each X/Y/Z is stored as big-endian, the 4 lower bits are set to 0
        AV_PIX_FMT_NV16, ///< interleaved chroma YUV 4:2:2, 16bpp, (1 Cr & Cb sample per 2x1 Y samples)
        AV_PIX_FMT_NV20LE, ///< interleaved chroma YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_NV20BE, ///< interleaved chroma YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

        AV_PIX_FMT_RGBA64BE, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian
        AV_PIX_FMT_RGBA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian
        AV_PIX_FMT_BGRA64BE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian
        AV_PIX_FMT_BGRA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

        AV_PIX_FMT_YVYU422, ///< packed YUV 4:2:2, 16bpp, Y0 Cr Y1 Cb

        AV_PIX_FMT_YA16BE, ///< 16 bits gray, 16 bits alpha (big-endian)
        AV_PIX_FMT_YA16LE, ///< 16 bits gray, 16 bits alpha (little-endian)

        AV_PIX_FMT_GBRAP, ///< planar GBRA 4:4:4:4 32bpp
        AV_PIX_FMT_GBRAP16BE, ///< planar GBRA 4:4:4:4 64bpp, big-endian
        AV_PIX_FMT_GBRAP16LE, ///< planar GBRA 4:4:4:4 64bpp, little-endian
        /**
         * HW acceleration through QSV, data[3] contains a pointer to the
         * mfxFrameSurface1 structure.
         */
        AV_PIX_FMT_QSV,
        /**
         * HW acceleration though MMAL, data[3] contains a pointer to the
         * MMAL_BUFFER_HEADER_T structure.
         */
        AV_PIX_FMT_MMAL,

        AV_PIX_FMT_D3D11VA_VLD, ///< HW decoding through Direct3D11 via old API, Picture.data[3] contains a ID3D11VideoDecoderOutputView pointer

        /**
         * HW acceleration through CUDA. data[i] contain CUdeviceptr pointers
         * exactly as for system memory frames.
         */
        AV_PIX_FMT_CUDA,

        AV_PIX_FMT_0RGB, ///< packed RGB 8:8:8, 32bpp, XRGBXRGB... X=unused/undefined
        AV_PIX_FMT_RGB0, ///< packed RGB 8:8:8, 32bpp, RGBXRGBX... X=unused/undefined
        AV_PIX_FMT_0BGR, ///< packed BGR 8:8:8, 32bpp, XBGRXBGR... X=unused/undefined
        AV_PIX_FMT_BGR0, ///< packed BGR 8:8:8, 32bpp, BGRXBGRX... X=unused/undefined

        AV_PIX_FMT_YUV420P12BE, ///< planar YUV 4:2:0,18bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian
        AV_PIX_FMT_YUV420P12LE, ///< planar YUV 4:2:0,18bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian
        AV_PIX_FMT_YUV420P14BE, ///< planar YUV 4:2:0,21bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian
        AV_PIX_FMT_YUV420P14LE, ///< planar YUV 4:2:0,21bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian
        AV_PIX_FMT_YUV422P12BE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian
        AV_PIX_FMT_YUV422P12LE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_YUV422P14BE, ///< planar YUV 4:2:2,28bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian
        AV_PIX_FMT_YUV422P14LE, ///< planar YUV 4:2:2,28bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian
        AV_PIX_FMT_YUV444P12BE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian
        AV_PIX_FMT_YUV444P12LE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian
        AV_PIX_FMT_YUV444P14BE, ///< planar YUV 4:4:4,42bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian
        AV_PIX_FMT_YUV444P14LE, ///< planar YUV 4:4:4,42bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian
        AV_PIX_FMT_GBRP12BE, ///< planar GBR 4:4:4 36bpp, big-endian
        AV_PIX_FMT_GBRP12LE, ///< planar GBR 4:4:4 36bpp, little-endian
        AV_PIX_FMT_GBRP14BE, ///< planar GBR 4:4:4 42bpp, big-endian
        AV_PIX_FMT_GBRP14LE, ///< planar GBR 4:4:4 42bpp, little-endian
        AV_PIX_FMT_YUVJ411P, ///< planar YUV 4:1:1, 12bpp, (1 Cr & Cb sample per 4x1 Y samples) full scale (JPEG), deprecated in favor of AV_PIX_FMT_YUV411P and setting color_range

        AV_PIX_FMT_BAYER_BGGR8, ///< bayer, BGBG..(odd line), GRGR..(even line), 8-bit samples */
        AV_PIX_FMT_BAYER_RGGB8, ///< bayer, RGRG..(odd line), GBGB..(even line), 8-bit samples */
        AV_PIX_FMT_BAYER_GBRG8, ///< bayer, GBGB..(odd line), RGRG..(even line), 8-bit samples */
        AV_PIX_FMT_BAYER_GRBG8, ///< bayer, GRGR..(odd line), BGBG..(even line), 8-bit samples */
        AV_PIX_FMT_BAYER_BGGR16LE, ///< bayer, BGBG..(odd line), GRGR..(even line), 16-bit samples, little-endian */
        AV_PIX_FMT_BAYER_BGGR16BE, ///< bayer, BGBG..(odd line), GRGR..(even line), 16-bit samples, big-endian */
        AV_PIX_FMT_BAYER_RGGB16LE, ///< bayer, RGRG..(odd line), GBGB..(even line), 16-bit samples, little-endian */
        AV_PIX_FMT_BAYER_RGGB16BE, ///< bayer, RGRG..(odd line), GBGB..(even line), 16-bit samples, big-endian */
        AV_PIX_FMT_BAYER_GBRG16LE, ///< bayer, GBGB..(odd line), RGRG..(even line), 16-bit samples, little-endian */
        AV_PIX_FMT_BAYER_GBRG16BE, ///< bayer, GBGB..(odd line), RGRG..(even line), 16-bit samples, big-endian */
        AV_PIX_FMT_BAYER_GRBG16LE, ///< bayer, GRGR..(odd line), BGBG..(even line), 16-bit samples, little-endian */
        AV_PIX_FMT_BAYER_GRBG16BE, ///< bayer, GRGR..(odd line), BGBG..(even line), 16-bit samples, big-endian */

        AV_PIX_FMT_XVMC,///< XVideo Motion Acceleration via common packet passing

        AV_PIX_FMT_YUV440P10LE, ///< planar YUV 4:4:0,20bpp, (1 Cr & Cb sample per 1x2 Y samples), little-endian
        AV_PIX_FMT_YUV440P10BE, ///< planar YUV 4:4:0,20bpp, (1 Cr & Cb sample per 1x2 Y samples), big-endian
        AV_PIX_FMT_YUV440P12LE, ///< planar YUV 4:4:0,24bpp, (1 Cr & Cb sample per 1x2 Y samples), little-endian
        AV_PIX_FMT_YUV440P12BE, ///< planar YUV 4:4:0,24bpp, (1 Cr & Cb sample per 1x2 Y samples), big-endian
        AV_PIX_FMT_AYUV64LE, ///< packed AYUV 4:4:4,64bpp (1 Cr & Cb sample per 1x1 Y & A samples), little-endian
        AV_PIX_FMT_AYUV64BE, ///< packed AYUV 4:4:4,64bpp (1 Cr & Cb sample per 1x1 Y & A samples), big-endian

        AV_PIX_FMT_VIDEOTOOLBOX, ///< hardware decoding through Videotoolbox

        AV_PIX_FMT_P010LE, ///< like NV12, with 10bpp per component, data in the high bits, zeros in the low bits, little-endian
        AV_PIX_FMT_P010BE, ///< like NV12, with 10bpp per component, data in the high bits, zeros in the low bits, big-endian

        AV_PIX_FMT_GBRAP12BE, ///< planar GBR 4:4:4:4 48bpp, big-endian
        AV_PIX_FMT_GBRAP12LE, ///< planar GBR 4:4:4:4 48bpp, little-endian

        AV_PIX_FMT_GBRAP10BE, ///< planar GBR 4:4:4:4 40bpp, big-endian
        AV_PIX_FMT_GBRAP10LE, ///< planar GBR 4:4:4:4 40bpp, little-endian

        AV_PIX_FMT_MEDIACODEC, ///< hardware decoding through MediaCodec

        AV_PIX_FMT_GRAY12BE, ///< Y , 12bpp, big-endian
        AV_PIX_FMT_GRAY12LE, ///< Y , 12bpp, little-endian
        AV_PIX_FMT_GRAY10BE, ///< Y , 10bpp, big-endian
        AV_PIX_FMT_GRAY10LE, ///< Y , 10bpp, little-endian

        AV_PIX_FMT_P016LE, ///< like NV12, with 16bpp per component, little-endian
        AV_PIX_FMT_P016BE, ///< like NV12, with 16bpp per component, big-endian

        /**
         * Hardware surfaces for Direct3D11.
         *
         * This is preferred over the legacy AV_PIX_FMT_D3D11VA_VLD. The new D3D11
         * hwaccel API and filtering support AV_PIX_FMT_D3D11 only.
         *
         * data[0] contains a ID3D11Texture2D pointer, and data[1] contains the
         * texture array index of the frame as intptr_t if the ID3D11Texture2D is
         * an array texture (or always 0 if it's a normal texture).
         */
        AV_PIX_FMT_D3D11,

        AV_PIX_FMT_GRAY9BE, ///< Y , 9bpp, big-endian
        AV_PIX_FMT_GRAY9LE, ///< Y , 9bpp, little-endian

        AV_PIX_FMT_GBRPF32BE, ///< IEEE-754 single precision planar GBR 4:4:4, 96bpp, big-endian
        AV_PIX_FMT_GBRPF32LE, ///< IEEE-754 single precision planar GBR 4:4:4, 96bpp, little-endian
        AV_PIX_FMT_GBRAPF32BE, ///< IEEE-754 single precision planar GBRA 4:4:4:4, 128bpp, big-endian
        AV_PIX_FMT_GBRAPF32LE, ///< IEEE-754 single precision planar GBRA 4:4:4:4, 128bpp, little-endian

        /**
         * DRM-managed buffers exposed through PRIME buffer sharing.
         *
         * data[0] points to an AVDRMFrameDescriptor.
         */
        AV_PIX_FMT_DRM_PRIME,
        /**
         * Hardware surfaces for OpenCL.
         *
         * data[i] contain 2D image objects (typed in C as cl_mem, used
         * in OpenCL as image2d_t) for each plane of the surface.
         */
        AV_PIX_FMT_OPENCL,

        AV_PIX_FMT_GRAY14BE, ///< Y , 14bpp, big-endian
        AV_PIX_FMT_GRAY14LE, ///< Y , 14bpp, little-endian

        AV_PIX_FMT_GRAYF32BE, ///< IEEE-754 single precision Y, 32bpp, big-endian
        AV_PIX_FMT_GRAYF32LE, ///< IEEE-754 single precision Y, 32bpp, little-endian

        AV_PIX_FMT_YUVA422P12BE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), 12b alpha, big-endian
        AV_PIX_FMT_YUVA422P12LE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), 12b alpha, little-endian
        AV_PIX_FMT_YUVA444P12BE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), 12b alpha, big-endian
        AV_PIX_FMT_YUVA444P12LE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), 12b alpha, little-endian

        AV_PIX_FMT_NV24, ///< planar YUV 4:4:4, 24bpp, 1 plane for Y and 1 plane for the UV components, which are interleaved (first byte U and the following byte V)
        AV_PIX_FMT_NV42, ///< as above, but U and V bytes are swapped

        AV_PIX_FMT_NB ///< number of pixel formats, DO NOT USE THIS if you want to link with shared libav* because the number of formats might differ between versions
    }

    /// <summary>
    /// The first part of FFmpeg's AVFrame struct; the full struct is too long, so I don't port all of it
    /// </summary>
```
[StructLayout(LayoutKind.Sequential, Pack = 1, Size = 408)] public struct AVFrame { //#define AV_NUM_DATA_POINTERS 8 // uint8_t* data[AV_NUM_DATA_POINTERS]; public IntPtr data1;// 一般是y分量 public IntPtr data2;// 一般是v分量 public IntPtr data3;// 一般是u分量 public IntPtr data4;// 一般是surface(dxva2硬解时) public IntPtr data5; public IntPtr data6; public IntPtr data7; public IntPtr data8; public int linesize1;// y分量每行长度(stride) public int linesize2;// v分量每行长度(stride) public int linesize3;// u分量每行长度(stride) public int linesize4; public int linesize5; public int linesize6; public int linesize7; public int linesize8; //uint8_t **extended_data; IntPtr extended_data; public int width; public int height; public int nb_samples; public AVPixelFormat format; } [StructLayout(LayoutKind.Sequential, Pack = 1, Size = 128)] public struct AVCodec { } [StructLayout(LayoutKind.Sequential, Pack = 1, Size = 72)] public unsafe struct AVPacket { fixed byte frontUnused[24]; // 前部无关数据 public void* data; public int size; } [StructLayout(LayoutKind.Sequential, Pack = 1, Size = 12)] public struct AVBufferRef { } [StructLayout(LayoutKind.Sequential, Pack = 1, Size = 904)] public unsafe struct AVCodecContext { fixed byte frontUnused[880]; // 前部无关数据 public AVBufferRef* hw_frames_ctx; } [StructLayout(LayoutKind.Sequential)] public struct AVDictionary { } public unsafe static class FFHelper { const string avcodec = "avcodec-58"; const string avutil = "avutil-56"; const CallingConvention callingConvention = CallingConvention.Cdecl; [DllImport(avcodec, CallingConvention = callingConvention)] public extern static void avcodec_register_all(); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static AVCodec* avcodec_find_decoder(AVCodecID id); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static AVPacket* av_packet_alloc(); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static void av_init_packet(AVPacket* pkt); //[DllImport(avcodec, 
CallingConvention = callingConvention)] //public extern static void av_packet_unref(AVPacket* pkt); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static void av_packet_free(AVPacket** pkt); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static AVCodecContext* avcodec_alloc_context3(AVCodec* codec); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static int avcodec_open2(AVCodecContext* avctx, AVCodec* codec, AVDictionary** options); //[DllImport(avcodec, CallingConvention = callingConvention)] //public extern static int avcodec_decode_video2(IntPtr avctx, IntPtr picture, ref int got_picture_ptr, IntPtr avpkt); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static void avcodec_free_context(AVCodecContext** avctx); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static int avcodec_send_packet(AVCodecContext* avctx, AVPacket* pkt); [DllImport(avcodec, CallingConvention = callingConvention)] public extern static int avcodec_receive_frame(AVCodecContext* avctx, AVFrame* frame); [DllImport(avutil, CallingConvention = callingConvention)] public extern static int av_hwdevice_ctx_create(AVBufferRef** device_ctx, AVHWDeviceType type, string device, AVDictionary* opts, int flags); [DllImport(avutil, CallingConvention = callingConvention)] public extern static AVBufferRef* av_buffer_ref(AVBufferRef* buf); [DllImport(avutil, CallingConvention = callingConvention)] public extern static void av_buffer_unref(AVBufferRef** buf); [DllImport(avutil, CallingConvention = callingConvention)] public extern static AVFrame* av_frame_alloc(); [DllImport(avutil, CallingConvention = callingConvention)] public extern static void av_frame_free(AVFrame** frame); [DllImport(avutil, CallingConvention = callingConvention)] public extern static void av_log_set_level(int level); [DllImport(avutil, CallingConvention = callingConvention)] public extern static int 
av_dict_set_int(AVDictionary** pm, string key, long value, int flags); [DllImport(avutil, CallingConvention = callingConvention)] public extern static void av_dict_free(AVDictionary** m); } }

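The code above touches on a few points worth knowing if you are a C# developer who needs to interoperate with native code:

- Using structs

Structs work essentially the same way in C# as in C/C++: a group of variables laid out contiguously in memory. As in C/C++, you may not know a struct's details, or may want to sidestep them because a struct can be complex and full of irrelevant fields. If you only know its total size, you can declare a struct of that size with ***Pack=1, Size=***. Sizes and offsets are platform-specific. AVBufferRef, for example, is 12 bytes in a 32-bit build (as declared above) but 24 bytes in a 64-bit one, so the process bitness must match the numbers you hard-code.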
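The following C sketch makes the "skip the leading bytes" idea concrete. It is my own illustration, not FFmpeg code. `packet_prefix` is a hypothetical mirror of the first fields of FFmpeg 4.2's AVPacket (`buf`, `pts`, `dts`, `data`, `size`). `packet_view` reaches `data` through an opaque byte array, which is what the C# `fixed byte frontUnused[24]` does. Computing the padding with `offsetof`, instead of hard-coding 24, keeps the two layouts in agreement whatever the ABI's alignment rules are. The C# side has to hard-code the number for the platform it targets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical mirror of the leading fields of FFmpeg 4.2's AVPacket,
 * used only to obtain offsets; this is not the real FFmpeg header. */
struct packet_prefix {
    void    *buf;   /* AVBufferRef *buf */
    int64_t  pts;
    int64_t  dts;
    uint8_t *data;
    int      size;
};

/* Partial view: everything before `data` is an opaque byte array,
 * the C counterpart of `fixed byte frontUnused[24]` in the C# struct. */
struct packet_view {
    unsigned char front_unused[offsetof(struct packet_prefix, data)];
    uint8_t *data;
    int      size;
};

/* The view is only valid if both layouts agree on where data and size live. */
_Static_assert(offsetof(struct packet_view, data) == offsetof(struct packet_prefix, data),
               "data offset mismatch");
_Static_assert(offsetof(struct packet_view, size) == offsetof(struct packet_prefix, size),
               "size offset mismatch");

/* Read `size` from raw packet memory using only the view's offset,
 * which is effectively what the C# code does through its padded struct. */
int read_size_via_view(const void *pkt)
{
    int size;
    assert(pkt != NULL);
    memcpy(&size, (const unsigned char *)pkt + offsetof(struct packet_view, size), sizeof size);
    return size;
}
```

On a 64-bit build `data` sits at offset 24. With MSVC it is also at 24 in a 32-bit build, because `int64_t` is 8-byte aligned there, which matches the C# declaration above.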

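- Using pointers

In C# there are two ways to store a memory address (a pointer). One is the IntPtr type from the interop layer, which you can think of as `void*`. The other is a typed struct pointer inside an unsafe context. Pointers to C++ classes are not discussed here.

- Using unsafe and fixed

In short, unsafe is what lets you use pointers at all, and fixed serves two purposes here:

- As a statement, e.g. `fixed (AVFrame** LPframe = &frame)`, it pins a variable that lives inside a managed object. The GC then cannot move it during compaction while native code holds its address.
- As a fixed-size buffer inside an unsafe struct, e.g. `fixed byte frontUnused[880];`, it reserves a run of bytes. This is how we explicitly skip the irrelevant leading fields of a struct (see the AVPacket and AVCodecContext definitions above).

Both forms appear again in the code below.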
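The code passes `AVFrame**` (obtained via `fixed (AVFrame** LPframe = &frame)`) rather than `AVFrame*` for a reason. `av_frame_free`, `av_packet_free`, `avcodec_free_context` and `av_buffer_unref` all take the address of your pointer and set it to NULL after freeing. Here is a minimal C sketch of that convention, using a hypothetical `toy_frame` type (not an FFmpeg API), showing why a second free of the same variable is harmless:

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for an FFmpeg object such as AVFrame. */
typedef struct toy_frame {
    int width, height;
} toy_frame;

static int toy_free_calls = 0; /* number of real frees, for demonstration */

toy_frame *toy_frame_alloc(void)
{
    return calloc(1, sizeof(toy_frame));
}

/* Same convention as av_frame_free(AVFrame **): free the object and clear
 * the caller's pointer, so calling it again on the same variable is a no-op. */
void toy_frame_free(toy_frame **pp)
{
    assert(pp != NULL);
    if (*pp == NULL)
        return;
    free(*pp);
    toy_free_calls++;
    *pp = NULL;
}

int toy_free_count(void)
{
    return toy_free_calls;
}
```

This only protects the one variable whose address you pass. Two threads freeing through the same field still need the lock that the player uses.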

Now let's write the decoding and playback part, i.e. our actual application:

```csharp
using Microsoft.DirectX;
using Microsoft.DirectX.Direct3D;
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using System.Text;
using System.Windows.Forms;
using static MultiPlayer.FFHelper;

namespace MultiPlayer
{
    public unsafe partial class FFPlayer : UserControl
    {
        [DllImport("msvcrt", EntryPoint = "memcpy", CallingConvention = CallingConvention.Cdecl, SetLastError = false)]
        static extern void memcpy(IntPtr dest, IntPtr src, int count); // C memcpy, used to copy memory between the decoder and DirectX

        private const int NalBufferSize = 1024 * 1024; // capacity of the preallocated NAL buffer (nalData)

        private IntPtr contentPanelHandle; // handle of the control we render into; rendering may run on a non-UI thread, so the handle is saved up front to avoid CLR cross-thread errors

        private int lastIWidth, lastIHeight; // size of the last decoded picture; a change triggers re-initialization of the rendering context
        private Rectangle lastCBounds; // last client area of the control (client coordinates)
        private Rectangle lastVRect; // size of the last decoded picture, as a rectangle
        private Device device; // only used for software decoding: the IDirect3DDevice9 object used to draw YUV
        private Surface surface; // only used for software decoding: the IDirect3DSurface9 object that receives the decoded YUV data
        AVPixelFormat lastFmt; // pixel format of the last decoded picture; in theory it never changes

        AVCodec* codec; // FFmpeg decoder
        AVCodecContext* ctx; // FFmpeg decoding context
        AVBufferRef* hw_ctx; // FFmpeg hardware acceleration context, an extension of ctx
        AVPacket* avpkt; // FFmpeg packet used to hand data to the decoder
        IntPtr nalData; // preallocated memory that serves as avpkt's actual data buffer
        AVFrame* frame; // FFmpeg decoded frame, used to return the decoded picture

        private volatile bool _released = false; // release flag; with the lock it prevents releasing twice (the native code is C/C++, and a double free across threads crashes the process)
        private object _codecLocker = new object(); // lock for mutual exclusion between threads

        static FFPlayer()
        {
            avcodec_register_all(); // register FFmpeg's decoders in the static constructor
        }

        public FFPlayer()
        {
            InitializeComponent();

            // These objects are created only once
            frame = av_frame_alloc();
            avpkt = av_packet_alloc();
            av_init_packet(avpkt);
            nalData = Marshal.AllocHGlobal(NalBufferSize);
            codec = avcodec_find_decoder(AVCodecID.AV_CODEC_ID_H264);
            avpkt->data = (void*)nalData;
        }

        ~FFPlayer()
        {
            // These objects are freed only once
            if (null != frame)
                fixed (AVFrame** LPframe = &frame)
                    av_frame_free(LPframe);
            if (null != avpkt)
                fixed (AVPacket** LPpkt = &avpkt)
                    av_packet_free(LPpkt);
            if (default != nalData)
                Marshal.FreeHGlobal(nalData);
        }

        // Release resources.
        // This means "change" or "reset" more than "terminate": it is mostly called when the control is resized
        // or when network packet loss breaks hardware decoding.
        private void Releases()
        {
            // These objects are created and destroyed many times
            lock (_codecLocker)
            {
                if (_released) return;
                if (null != ctx)
                    fixed (AVCodecContext** LPctx = &ctx)
                        avcodec_free_context(LPctx);
                if (null != hw_ctx)
                    fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                        av_buffer_unref(LPhw_ctx);
                // Dispose the software-rendering surface and device explicitly rather than waiting for the GC finalizer
                surface?.Dispose();
                surface = null;
                device?.Dispose();
                device = null;
                lastFmt = AVPixelFormat.AV_PIX_FMT_NONE;
                _released = true;
            }
        }

        // Save the control handle in the Load event
        private void FFPlayer_Load(object sender, EventArgs e)
        {
            contentPanelHandle = Handle; // this can also be the handle of the child control you actually render into
            lastCBounds = ClientRectangle; // likewise, the area need not be this control's own client area
        }

        // Decoding entry point, called from outside with one complete (already split) NAL unit
        public void H264Received(byte[] nal)
        {
            // Drop empty NALs and NALs larger than the preallocated buffer instead of overrunning nalData
            if (nal == null || nal.Length == 0 || nal.Length > NalBufferSize) return;
            lock (_codecLocker)
            {
                // If the control was resized, reset first
                // (in DirectX a size change is a big deal that cannot be worked around, so we tear down and rebuild)
                // If the display control is not this control itself, adjust this check
                if (!ClientRectangle.Equals(lastCBounds))
                {
                    lastCBounds = ClientRectangle;
                    Releases();
                }

                if (null == ctx)
                {
                    // Create a decoder context when the first data to decode arrives
                    ctx = avcodec_alloc_context3(codec);
                    if (null == ctx)
                    {
                        return;
                    }
                    // Pass the control handle to the hardware acceleration context as an option
                    AVDictionary* dic = null;
                    av_dict_set_int(&dic, "hWnd", contentPanelHandle.ToInt64(), 0);
                    fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                    {
                        if (av_hwdevice_ctx_create(LPhw_ctx, AVHWDeviceType.AV_HWDEVICE_TYPE_DXVA2,
                            null, dic, 0) >= 0)
                        {
                            ctx->hw_frames_ctx = av_buffer_ref(hw_ctx);
                        }
                    }
                    av_dict_free(&dic);
                    if (avcodec_open2(ctx, codec, null) < 0)
                    {
                        fixed (AVCodecContext** LPctx = &ctx)
                            avcodec_free_context(LPctx);
                        fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                            av_buffer_unref(LPhw_ctx);
                        return;
                    }
                }
                _released = false;

                // Start decoding
                Marshal.Copy(nal, 0, nalData, nal.Length);
                avpkt->size = nal.Length;
                if (avcodec_send_packet(ctx, avpkt) < 0)
                {
                    Releases(); return; // usually caused by network packet loss leaving the NAL data discontinuous; nothing to do but tear down and rebuild
                }
            receive_frame:
                int err = avcodec_receive_frame(ctx, frame);
                if (err == -11) return; // AVERROR(EAGAIN): the decoder needs more input before it can output a frame
                if (err < 0)
                {
                    Releases(); return; // as above; errors here are rare, but when one happens we tear down and rebuild
                }

                // Try to present one frame
                AVFrame s_frame = *frame;
                // I want acceleration no matter what, and YV12 is the format graphics cards support most widely, so only DXVA2 and
                // 4:2:0 planar output are handled. If your H.264 decodes to something else, convert it to RGB (which means building and using swscale)
                if (s_frame.format != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD && s_frame.format != AVPixelFormat.AV_PIX_FMT_YUV420P && s_frame.format != AVPixelFormat.AV_PIX_FMT_YUVJ420P) return;
                try
                {
                    int width = s_frame.width;
                    int height = s_frame.height;
                    if (lastIWidth != width || lastIHeight != height || lastFmt != s_frame.format) // true on the first render attempt, since a stream's size and format normally never change
                    {
                        if (s_frame.format != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD)
                        {
                            // If hardware decoding did not succeed (e.g. the H.264 stream is Baseline profile, which newer FFmpeg does not decode via DXVA2),
                            // we still render the YUV with DirectX, which at least skips the YUV-to-RGB conversion and saves a little CPU
                            PresentParameters pp = new PresentParameters();
                            pp.Windowed = true;
                            pp.SwapEffect = SwapEffect.Discard;
                            pp.BackBufferCount = 0;
                            pp.DeviceWindowHandle = contentPanelHandle;
                            pp.BackBufferFormat = Manager.Adapters.Default.CurrentDisplayMode.Format;
                            pp.EnableAutoDepthStencil = false;
                            pp.PresentFlag = PresentFlag.Video;
                            pp.FullScreenRefreshRateInHz = 0; // D3DPRESENT_RATE_DEFAULT
                            pp.PresentationInterval = 0; // D3DPRESENT_INTERVAL_DEFAULT
                            Caps caps = Manager.GetDeviceCaps(Manager.Adapters.Default.Adapter, DeviceType.Hardware);
                            CreateFlags behaviorFlas = CreateFlags.MultiThreaded | CreateFlags.FpuPreserve;
                            if (caps.DeviceCaps.SupportsHardwareTransformAndLight)
                            {
                                behaviorFlas |= CreateFlags.HardwareVertexProcessing;
                            }
                            else
                            {
                                behaviorFlas |= CreateFlags.SoftwareVertexProcessing;
                            }
                            device = new Device(Manager.Adapters.Default.Adapter, DeviceType.Hardware, contentPanelHandle, behaviorFlas, pp);
                            //(Format)842094158; // NV12
                            surface = device.CreateOffscreenPlainSurface(width, height, (Format)842094169, Pool.Default); // YV12, the format graphics cards support most widely
                        }
                        lastIWidth = width;
                        lastIHeight = height;
                        lastVRect = new Rectangle(0, 0, lastIWidth, lastIHeight);
                        lastFmt = s_frame.format;
                    }
                    if (lastFmt != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD)
                    {
                        // Without hardware decoding we also have to copy the YUV data into the surface.
                        // FFmpeg outputs I420 rather than YV12, while cards usually support YV12, so the U and V planes are swapped below
                        int stride;
                        var gs = surface.LockRectangle(LockFlags.DoNotWait, out stride);
                        if (gs == null) return;
                        for (int i = 0; i < lastIHeight; i++)
                        {
                            memcpy(gs.InternalData + i * stride, s_frame.data1 + i * s_frame.linesize1, lastIWidth);
                        }
                        for (int i = 0; i < lastIHeight / 2; i++)
                        {
                            memcpy(gs.InternalData + stride * lastIHeight + i * stride / 2, s_frame.data3 + i * s_frame.linesize3, lastIWidth / 2);
                        }
                        for (int i = 0; i < lastIHeight / 2; i++)
                        {
                            memcpy(gs.InternalData + stride * lastIHeight + stride * lastIHeight / 4 + i * stride / 2, s_frame.data2 + i * s_frame.linesize2, lastIWidth / 2);
                        }
                        surface.UnlockRectangle();
                    }

                    // The next part is the brain-teaser. For DXVA2 output, the picture data itself is a surface already bound to a device,
                    // so we can use it directly; for YUV from FFmpeg's software H.264 decoder, we use the device and surface created above
                    Surface _surface = lastFmt == AVPixelFormat.AV_PIX_FMT_DXVA2_VLD ? new Surface(s_frame.data4) : surface;
                    if (lastFmt == AVPixelFormat.AV_PIX_FMT_DXVA2_VLD)
                        GC.SuppressFinalize(_surface); // The crux: the wrapper does not own FFmpeg's surface, so its finalizer must not release it. Without this line the program crashes after a while; it cost me several days
                    Device _device = lastFmt == AVPixelFormat.AV_PIX_FMT_DXVA2_VLD ? _surface.Device : device;
                    _device.Clear(ClearFlags.Target, Color.Black, 1, 0);
                    _device.BeginScene();
                    Surface backBuffer = _device.GetBackBuffer(0, 0, BackBufferType.Mono);
                    _device.StretchRectangle(_surface, lastVRect, backBuffer, lastCBounds, TextureFilter.Linear);
                    _device.EndScene();
                    _device.Present();
                    backBuffer.Dispose();
                }
                catch (DirectXException ex)
                {
                    StringBuilder msg = new StringBuilder();
                    msg.Append("*************************************** \n");
                    msg.AppendFormat(" Time of exception: {0} \n", DateTime.Now);
                    msg.AppendFormat(" Exception instance that caused the current exception: {0} \n", ex.InnerException);
                    msg.AppendFormat(" Name of the application or object that caused the error: {0} \n", ex.Source);
                    msg.AppendFormat(" Method that threw the exception: {0} \n", ex.TargetSite);
                    msg.AppendFormat(" Stack trace: {0} \n", ex.StackTrace);
                    msg.AppendFormat(" Message: {0} \n", ex.Message);
                    msg.Append("***************************************");
                    Console.WriteLine(msg);
                    Releases();
                    return;
                }
                goto receive_frame; // try to get a second picture (in practice there is none, since we pass one NAL per call; the code could be changed)
            }
        }

        // Called from outside to stop decoding and explicitly release resources
        public void Stop()
        {
            Releases();
        }
    }
}
```
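The two magic numbers in `CreateOffscreenPlainSurface` are FOURCC codes. The three copy loops turn FFmpeg's I420 layout (Y, U, V planes) into a YV12 surface (Y, then V, then U). The C sketch below reproduces both. `make_fourcc` mirrors the bit layout of the Windows MAKEFOURCC macro. `copy_i420_to_yv12` is a hypothetical helper that, like the C# loops, assumes three things about the locked surface:

- the chroma planes use half the Y pitch;
- they start right after the Y plane;
- width and height are even.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Same bit layout as the Windows MAKEFOURCC macro: first char in the low byte. */
#define make_fourcc(a, b, c, d) \
    ((uint32_t)(uint8_t)(a) | ((uint32_t)(uint8_t)(b) << 8) | \
     ((uint32_t)(uint8_t)(c) << 16) | ((uint32_t)(uint8_t)(d) << 24))

/* Copy one I420 frame (FFmpeg YUV420P: data[0]=Y, data[1]=U, data[2]=V) into a
 * YV12 buffer laid out like a locked D3D9 surface: the Y plane (pitch bytes per
 * row), then V, then U (pitch/2 bytes per row each).
 * Assumes even width/height and pitch >= width. */
void copy_i420_to_yv12(uint8_t *dst, int pitch,
                       const uint8_t *const src[3], const int linesize[3],
                       int width, int height)
{
    uint8_t *dst_v = dst + pitch * height;
    uint8_t *dst_u = dst_v + (pitch / 2) * (height / 2);
    assert(width % 2 == 0 && height % 2 == 0 && pitch >= width);

    for (int i = 0; i < height; i++)
        memcpy(dst + i * pitch, src[0] + i * linesize[0], width);
    for (int i = 0; i < height / 2; i++) {
        memcpy(dst_v + i * (pitch / 2), src[2] + i * linesize[2], width / 2); /* V plane first */
        memcpy(dst_u + i * (pitch / 2), src[1] + i * linesize[1], width / 2); /* then U */
    }
}
```

`make_fourcc('Y','V','1','2')` evaluates to 842094169 and `make_fourcc('N','V','1','2')` to 842094158, the two values used in the C# code.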

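Below I walk through the three most important parts of the code.

- Initializing FFmpeg

This happens in the static constructor and the instance constructor. I allocate AVPacket and AVFrame once and reuse them instead of making them local variables. A lot of code online, including FFmpeg's official code, allocates these two objects locally. I'm not convinced that is necessary, and I'll reconsider if my program ever fails because of it.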
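Allocating once and reusing means every incoming NAL is copied into the same preallocated buffer (nalData, 1 MB). The length must therefore be checked against that capacity before the copy. Here is a minimal C sketch of the pattern. The `nal_buffer` type is hypothetical and stands in for nalData plus avpkt->size:

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical reusable input buffer, allocated once like nalData. */
typedef struct nal_buffer {
    unsigned char *data;
    size_t capacity;
    size_t size;      /* plays the role of avpkt->size */
} nal_buffer;

int nal_buffer_init(nal_buffer *b, size_t capacity)
{
    b->data = malloc(capacity);
    b->capacity = b->data ? capacity : 0;
    b->size = 0;
    return b->data ? 0 : -1;
}

/* Copy one NAL into the buffer; reject it instead of overrunning the allocation. */
int nal_buffer_load(nal_buffer *b, const unsigned char *nal, size_t len)
{
    assert(b != NULL && b->data != NULL);
    if (len == 0 || len > b->capacity)
        return -1;
    memcpy(b->data, nal, len);
    b->size = len;
    return 0;
}

void nal_buffer_free(nal_buffer *b)
{
    free(b->data);
    b->data = NULL;
    b->capacity = b->size = 0;
}
```

Rejecting an oversized NAL means a corrupted stream downstream, which the player then handles by tearing down and rebuilding. Copying past the end of the buffer would corrupt the process instead.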

- Feeding received data into FFmpeg for decoding and displaying the decoded picture

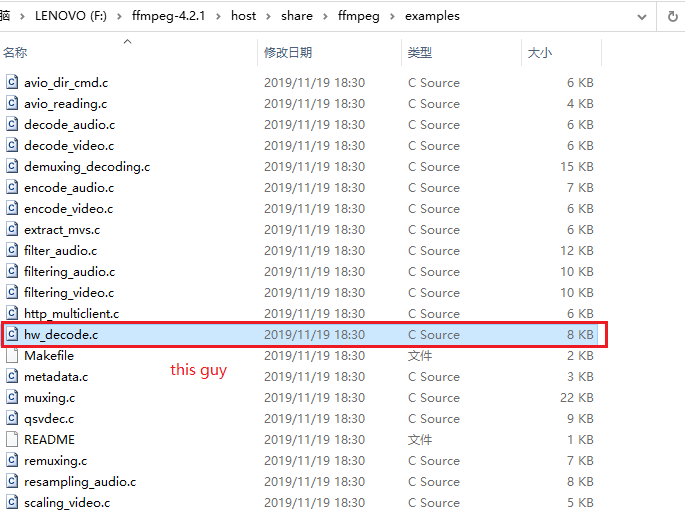

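One thing worth mentioning here is get_format. FFmpeg has an official example that uses it (figure below).

That example has a get_format step (see lines 215 and 63), which I did not use. Here is why:

get_format is how FFmpeg lets you choose among several candidate output pixel formats, where each hardware format corresponds to a hardware decoder. It also has a built-in retry mechanism: if the format you chose first does not work (for example, its initialization fails), FFmpeg calls get_format a second (third, ...) time so you can choose again. In our case we have already decided to use the DXVA2 hardware decoder. If DXVA2 initialization fails, FFmpeg automatically falls back to its built-in H.264 software decoder, so there is no need to do this ourselves.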

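- Handling errors that occur during decoding and playback

- Hardware decoding not supported

The code already handles part of this. Whether a stream is Baseline profile can only be determined after its SPS/PPS have been decoded, so this error may surface late. Don't worry: if it fails at that point, FFmpeg falls back to software decoding automatically.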
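Whether a stream is Baseline is only known once the SPS arrives. As a sketch (not FFmpeg code), the helper below reads `profile_idc` and `constraint_set1_flag` from an SPS NAL unit. It assumes the start code has already been stripped, so the NAL unit starts at its one-byte header. Per the H.264 spec, `profile_idc == 66` with `constraint_set1_flag == 1` signals Constrained Baseline. My understanding is that FFmpeg's DXVA2 hwaccel accepts Constrained Baseline but not plain Baseline. Treat that as an assumption and check `libavcodec/dxva2.c` in your FFmpeg version. For a valid (non-zero) `profile_idc`, emulation-prevention bytes cannot appear within the bytes read here.

```c
#include <assert.h>
#include <stddef.h>

enum h264_profile_kind {
    H264_NOT_SPS = -1,
    H264_BASELINE = 0,             /* profile_idc 66, constraint_set1_flag 0 */
    H264_CONSTRAINED_BASELINE = 1, /* profile_idc 66, constraint_set1_flag 1 */
    H264_OTHER_PROFILE = 2,
};

/* `nal` points at the NAL header byte of one NAL unit (start code already removed). */
enum h264_profile_kind classify_sps(const unsigned char *nal, size_t len)
{
    assert(nal != NULL || len == 0);
    if (len < 4 || (nal[0] & 0x1F) != 7)  /* nal_unit_type 7 = SPS */
        return H264_NOT_SPS;
    if (nal[1] != 66)                      /* profile_idc */
        return H264_OTHER_PROFILE;
    /* constraint_set0_flag is bit 7 of nal[2], constraint_set1_flag is bit 6 */
    return (nal[2] & 0x40) ? H264_CONSTRAINED_BASELINE : H264_BASELINE;
}
```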

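- Control resizing

A Direct3D 9 device's back-buffer size is fixed when the device is created. Changing it requires resetting the device, and the DXVA2 device here is created inside FFmpeg. So we simply tear everything down and rebuild it whenever the control is resized. Otherwise the picture would be scaled in unexpected ways.

- Hardware decoder errors caused by network packet loss

See the code.

- Other low-level DirectX exceptions

The rendering code is wrapped in a try-catch that catches DirectXException. In C/C++, a call returns an HRESULT, which we check with the FAILED macro. The managed wrapper does that step for us and throws a DirectXException instead. We catch it and handle the error, in practice again by tearing down and rebuilding.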
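For readers coming from C#, this is what the C/C++ side of that check looks like. An HRESULT is a 32-bit value whose sign bit marks failure, so `FAILED(hr)` is just `hr < 0`. Success codes such as `S_FALSE` (1) still count as success. The sketch redefines the macros locally under hypothetical `MY_` names so it compiles without the Windows SDK; their definitions follow `winerror.h`. `check_hresult` is a hypothetical helper standing in for the point where the managed wrapper would throw.

```c
#include <assert.h>
#include <stdint.h>

typedef int32_t HRESULT_T; /* HRESULT is a 32-bit signed LONG in the Windows SDK */

#define MY_SUCCEEDED(hr) ((HRESULT_T)(hr) >= 0)
#define MY_FAILED(hr)    ((HRESULT_T)(hr) < 0)

/* Same composition as MAKE_HRESULT in winerror.h: severity bit, facility, code. */
#define MY_MAKE_HRESULT(sev, fac, code) \
    ((HRESULT_T)(((uint32_t)(sev) << 31) | ((uint32_t)(fac) << 16) | (uint32_t)(code)))

#define MY_S_OK    ((HRESULT_T)0x00000000)
#define MY_S_FALSE ((HRESULT_T)0x00000001)
#define MY_E_FAIL  ((HRESULT_T)0x80004005)

/* Direct3D errors use facility 0x876; D3DERR_WASSTILLDRAWING is code 540. It is what a
 * lock with D3DLOCK_DONOTWAIT (LockFlags.DoNotWait) returns while the GPU is still busy. */
#define MY_D3DERR_WASSTILLDRAWING MY_MAKE_HRESULT(1, 0x876, 540)

/* Returns 0 on success and -1 on failure, i.e. where the managed wrapper would throw. */
int check_hresult(HRESULT_T hr)
{
    return MY_FAILED(hr) ? -1 : 0;
}
```

Because the player locks its surface with DoNotWait, a busy GPU surfaces as such a failure HRESULT, and therefore as a DirectXException in the managed code.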

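Bonus: how C# wraps DirectX calls

The way we used DirectX above looks like neither a COM component nor a P/Invoke into a C DLL. Does DirectX really have managed code?

No. The C# DLL of course ends up calling the system's d3d9.dll. But it is worth a closer look, because there is a hidden side quest here.

First get ildasm and Visual Studio ready. In Visual Studio, create a C++ project (any type), add a .cpp file, and enter the following code: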

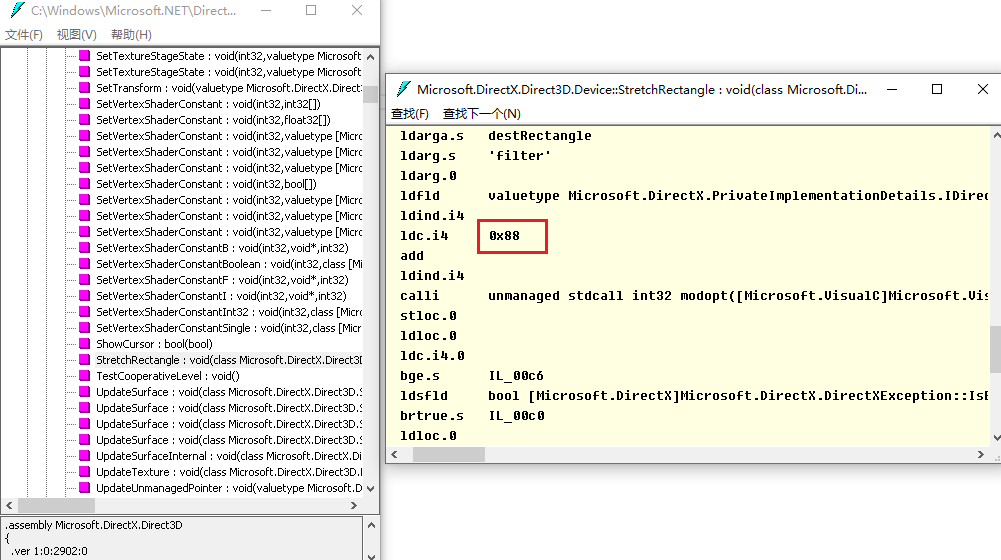

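If you run it, the output is 136 (0x88). Next, use ildasm to find the code of StretchRectangle.

You will find a +0x88 there as well, and the mechanism becomes clear. C# can call through a raw memory address with the calli CLR instruction. Thanks to COM's convention of a fixed function-table (vtable) layout, the header file tells us each method's offset within the object's function table. 136 is 34 × 4: StretchRect is entry 34 (counting from zero) of the IDirect3DDevice9 vtable, and each entry takes 4 bytes in a 32-bit build. The call itself is just a call through that function pointer with the object pointer as the first argument. On x86, COM methods use __stdcall rather than the C++ __thiscall convention. For details, look up d3d9.h and articles on calli.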
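A minimal C model of the idea follows; the types are hypothetical, not taken from d3d9.h. A COM object begins with a pointer to a table of function pointers. Calling method number *n* means loading the pointer stored at offset n × sizeof(pointer) in that table and calling it with the object as the first argument. For StretchRect at index 34 (after IUnknown's three methods and 31 device methods), that gives 136 in a 32-bit build and 272 in a 64-bit build. To keep it short, `toy_device` has only four slots and uses the default C calling convention rather than __stdcall.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct toy_device toy_device;

/* Hypothetical interface laid out in COM order: IUnknown's three methods first. */
typedef struct toy_device_vtbl {
    long (*QueryInterface)(toy_device *self, const void *iid, void **out);
    unsigned long (*AddRef)(toy_device *self);
    unsigned long (*Release)(toy_device *self);
    long (*StretchLike)(toy_device *self, int arg); /* slot 3 */
} toy_device_vtbl;

struct toy_device {
    const toy_device_vtbl *lpVtbl; /* first member, as in every COM object */
    int refs;
};

static long toy_qi(toy_device *self, const void *iid, void **out)
{
    (void)self; (void)iid;
    *out = NULL;
    return -1; /* "no such interface" */
}
static unsigned long toy_addref(toy_device *self) { return (unsigned long)++self->refs; }
static unsigned long toy_release(toy_device *self) { return (unsigned long)--self->refs; }
static long toy_stretch(toy_device *self, int arg) { return self->refs * 100L + arg; }

static const toy_device_vtbl toy_vtbl = { toy_qi, toy_addref, toy_release, toy_stretch };

toy_device make_toy_device(void)
{
    toy_device d = { &toy_vtbl, 1 };
    return d;
}

/* Byte offset of vtable slot `index`: the kind of constant (+0x88) seen in the IL. */
size_t vtable_offset(size_t index)
{
    return index * sizeof(void (*)(void));
}

/* Call slot `index` purely by address, the way calli does: fetch the function
 * pointer stored at that offset and pass the object as the first argument. */
long call_slot_int(toy_device *dev, size_t index, int arg)
{
    long (*fn)(toy_device *, int);
    assert(dev != NULL && dev->lpVtbl != NULL);
    memcpy(&fn, (const char *)dev->lpVtbl + vtable_offset(index), sizeof fn);
    return fn(dev, arg);
}
```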

At 2020-12-2 20:11

Of course, you can also replace the old official library with SharpDX:

```csharp
using SharpDX;
using SharpDX.Direct3D9;
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using System.Text;
using System.Windows.Forms;
using static WindowsFormsApp1.FFHelper;

namespace WindowsFormsApp1
{
    public unsafe partial class FFH264Player : UserControl
    {
        [DllImport("msvcrt", EntryPoint = "memcpy", CallingConvention = CallingConvention.Cdecl, SetLastError = false)]
        static extern void memcpy(IntPtr dest, IntPtr src, int count); // C memcpy, used to copy memory between the decoder and DirectX

        static Direct3D d3d;

        private const int NalBufferSize = 1024 * 1024; // capacity of the preallocated NAL buffer (nalData)

        private IntPtr contentPanelHandle; // handle of the control we render into; rendering may run on a non-UI thread, so the handle is saved up front to avoid CLR cross-thread errors

        private int lastIWidth, lastIHeight; // size of the last decoded picture; a change triggers re-initialization of the rendering context
        private System.Drawing.Rectangle lastCBounds; // last client area of the control (client coordinates)
        private System.Drawing.Rectangle lastVRect; // size of the last decoded picture, as a rectangle
        private Device device; // only used for software decoding: the IDirect3DDevice9 object used to draw YUV
        private Surface surface; // only used for software decoding: the IDirect3DSurface9 object that receives the decoded YUV data
        AVPixelFormat lastFmt; // pixel format of the last decoded picture; in theory it never changes

        AVCodec* codec; // FFmpeg decoder
        AVCodecContext* ctx; // FFmpeg decoding context
        AVBufferRef* hw_ctx; // FFmpeg hardware acceleration context, an extension of ctx
        AVPacket* avpkt; // FFmpeg packet used to hand data to the decoder
        IntPtr nalData; // preallocated memory that serves as avpkt's actual data buffer
        AVFrame* frame; // FFmpeg decoded frame, used to return the decoded picture

        private volatile bool _released = false; // release flag; with the lock it prevents releasing twice (the native code is C/C++, and a double free across threads crashes the process)
        private object _codecLocker = new object(); // lock for mutual exclusion between threads

        static FFH264Player()
        {
            avcodec_register_all(); // register FFmpeg's decoders in the static constructor
            d3d = new Direct3D();
        }

        public FFH264Player()
        {
            InitializeComponent();

            // These objects are created only once
            frame = av_frame_alloc();
            avpkt = av_packet_alloc();
            av_init_packet(avpkt);
            nalData = Marshal.AllocHGlobal(NalBufferSize);
            codec = avcodec_find_decoder(AVCodecID.AV_CODEC_ID_H264);
            avpkt->data = (void*)nalData;
        }

        ~FFH264Player()
        {
            // These objects are freed only once
            if (null != frame)
                fixed (AVFrame** LPframe = &frame)
                    av_frame_free(LPframe);
            if (null != avpkt)
                fixed (AVPacket** LPpkt = &avpkt)
                    av_packet_free(LPpkt);
            if (default != nalData)
                Marshal.FreeHGlobal(nalData);
        }

        // Release resources.
        // This means "change" or "reset" more than "terminate": it is mostly called when the control is resized
        // or when network packet loss breaks hardware decoding.
        private void Releases()
        {
            // These objects are created and destroyed many times
            lock (_codecLocker)
            {
                if (_released) return;
                if (null != ctx)
                    fixed (AVCodecContext** LPctx = &ctx)
                        avcodec_free_context(LPctx);
                if (null != hw_ctx)
                    fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                        av_buffer_unref(LPhw_ctx);
                // Dispose the software-rendering surface and device explicitly rather than waiting for the GC finalizer
                surface?.Dispose();
                surface = null;
                device?.Dispose();
                device = null;
                lastFmt = AVPixelFormat.AV_PIX_FMT_NONE;
                _released = true;
            }
        }

        // Save the control handle in the Load event
        private void FFPlayer_Load(object sender, EventArgs e)
        {
            contentPanelHandle = Handle; // this can also be the handle of the child control you actually render into
            lastCBounds = ClientRectangle; // likewise, the area need not be this control's own client area
        }

        // Decoding entry point, called from outside with one complete (already split) NAL unit
        public void H264Received(byte[] nal)
        {
            // Drop empty NALs and NALs larger than the preallocated buffer instead of overrunning nalData
            if (nal == null || nal.Length == 0 || nal.Length > NalBufferSize) return;
            lock (_codecLocker)
            {
                // If the control was resized, reset first
                // (in DirectX a size change is a big deal that cannot be worked around, so we tear down and rebuild)
                // If the display control is not this control itself, adjust this check
                if (!ClientRectangle.Equals(lastCBounds))
                {
                    lastCBounds = ClientRectangle;
                    Releases();
                }

                if (null == ctx)
                {
                    // Create a decoder context when the first data to decode arrives
                    ctx = avcodec_alloc_context3(codec);
                    if (null == ctx)
                    {
                        return;
                    }
                    // Pass the control handle to the hardware acceleration context as an option
                    AVDictionary* dic = null;
                    av_dict_set_int(&dic, "hWnd", contentPanelHandle.ToInt64(), 0);
                    fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                    {
                        if (av_hwdevice_ctx_create(LPhw_ctx, AVHWDeviceType.AV_HWDEVICE_TYPE_DXVA2,
                            null, dic, 0) >= 0)
                        {
                            ctx->hw_device_ctx = av_buffer_ref(hw_ctx);
                        }
                    }
                    av_dict_free(&dic);
                    if (avcodec_open2(ctx, codec, null) < 0)
                    {
                        fixed (AVCodecContext** LPctx = &ctx)
                            avcodec_free_context(LPctx);
                        if (null != hw_ctx)
                            fixed (AVBufferRef** LPhw_ctx = &hw_ctx)
                                av_buffer_unref(LPhw_ctx);
                        return;
                    }
                }
                _released = false;

                // Start decoding
                Marshal.Copy(nal, 0, nalData, nal.Length);
                avpkt->size = nal.Length;
                if (avcodec_send_packet(ctx, avpkt) < 0)
                {
                    Releases(); return; // usually caused by network packet loss leaving the NAL data discontinuous; nothing to do but tear down and rebuild
                }
            receive_frame:
                int err = avcodec_receive_frame(ctx, frame);
                if (err == -11) return; // AVERROR(EAGAIN): the decoder needs more input before it can output a frame
                if (err < 0)
                {
                    Releases(); return; // as above; errors here are rare, but when one happens we tear down and rebuild
                }

                // Try to present one frame
                AVFrame s_frame = *frame;
                // I want acceleration no matter what, and YV12 is the format graphics cards support most widely, so only DXVA2 and
                // 4:2:0 planar output are handled. If your H.264 decodes to something else, convert it to RGB (which means building and using swscale)
                if (s_frame.format != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD && s_frame.format != AVPixelFormat.AV_PIX_FMT_YUV420P && s_frame.format != AVPixelFormat.AV_PIX_FMT_YUVJ420P) return;
                try
                {
                    int width = s_frame.width;
                    int height = s_frame.height;
                    if (lastIWidth != width || lastIHeight != height || lastFmt != s_frame.format) // true on the first render attempt, since a stream's size and format normally never change
                    {
                        if (s_frame.format != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD)
                        {
                            // If hardware decoding did not succeed (e.g. the H.264 stream is Baseline profile, which newer FFmpeg does not decode via DXVA2),
                            // we still render the YUV with DirectX, which at least skips the YUV-to-RGB conversion and saves a little CPU
                            PresentParameters pp = new PresentParameters();
                            pp.Windowed = true;
                            pp.SwapEffect = SwapEffect.Discard;
                            pp.BackBufferCount = 0;
                            pp.DeviceWindowHandle = contentPanelHandle;
                            pp.BackBufferFormat = d3d.Adapters[0].CurrentDisplayMode.Format; // Manager.Adapters.Default.CurrentDisplayMode.Format;
                            pp.EnableAutoDepthStencil = false;
                            pp.PresentFlags = PresentFlags.Video;
                            pp.FullScreenRefreshRateInHz = 0; // D3DPRESENT_RATE_DEFAULT
                            pp.PresentationInterval = 0; // D3DPRESENT_INTERVAL_DEFAULT
                            Capabilities caps = d3d.GetDeviceCaps(d3d.Adapters[0].Adapter, DeviceType.Hardware);
                            CreateFlags behaviorFlas = CreateFlags.Multithreaded | CreateFlags.FpuPreserve;
                            if ((caps.DeviceCaps & DeviceCaps.HWTransformAndLight) != 0)
                            {
                                behaviorFlas |= CreateFlags.HardwareVertexProcessing;
                            }
                            else
                            {
                                behaviorFlas |= CreateFlags.SoftwareVertexProcessing;
                            }
                            device = new Device(d3d, d3d.Adapters[0].Adapter, DeviceType.Hardware, contentPanelHandle, behaviorFlas, pp);
                            //(Format)842094158; // NV12
                            surface = Surface.CreateOffscreenPlain(device, width, height, (Format)842094169, Pool.Default); // YV12, the format graphics cards support most widely
                        }
                        lastIWidth = width;
                        lastIHeight = height;
                        lastVRect = new System.Drawing.Rectangle(0, 0, lastIWidth, lastIHeight);
                        lastFmt = s_frame.format;
                    }
                    if (lastFmt != AVPixelFormat.AV_PIX_FMT_DXVA2_VLD)
                    {
                        // Without hardware decoding we also have to copy the YUV data into the surface.
                        // FFmpeg outputs I420 rather than YV12, while cards usually support YV12, so the U and V planes are swapped below
                        var gs = surface.LockRectangle(LockFlags.DoNotWait);
                        int stride = gs.Pitch;
                        for (int i = 0; i < lastIHeight; i++)
                        {
                            memcpy(gs.DataPointer + i * stride, s_frame.data1 + i * s_frame.linesize1, lastIWidth);
                        }
                        for (int i = 0; i < lastIHeight / 2; i++)
                        {
                            memcpy(gs.DataPointer + stride * lastIHeight + i * stride / 2, s_frame.data3 + i * s_frame.linesize3, lastIWidth / 2);
                        }
                        for (int i = 0; i < lastIHeight / 2; i++)
                        {
                            memcpy(gs.DataPointer + stride * lastIHeight + stride * lastIHeight / 4 + i * stride / 2, s_frame.data2 + i * s_frame.linesize2, lastIWidth / 2);
                        }
                        surface.UnlockRectangle();
                    }

                    // The next part is the brain-teaser. For DXVA2 output, the picture data itself is a surface,
                    // so we can use it directly; for YUV from FFmpeg's software H.264 decoder, we use the device and surface created above
                    Surface _surface = lastFmt == AVPixelFormat.AV_PIX_FMT_DXVA2_VLD ? new Surface(s_frame.data4) : surface;
                    Device _device = lastFmt == AVPixelFormat.AV_PIX_FMT_DXVA2_VLD ? _surface.Device : device;
                    _device.Clear(ClearFlags.Target, SharpDX.Color.Black, 1, 0);
                    _device.BeginScene();
                    Surface backBuffer = _device.GetBackBuffer(0, 0);
                    // Fit the picture into the control, keeping its aspect ratio, and center it
                    Size dst = lastCBounds.Size;
                    GetScale(lastVRect.Size, ref dst);
                    System.Drawing.Rectangle dstRect = lastCBounds;
                    dstRect.X += (lastCBounds.Width - dst.Width) / 2;
                    dstRect.Y += (lastCBounds.Height - dst.Height) / 2;
                    dstRect.Size = dst;
                    _device.StretchRectangle(_surface, new SharpDX.RectangleF(0, 0, lastVRect.Width, lastVRect.Height), backBuffer, new SharpDX.RectangleF(dstRect.X, dstRect.Y, dstRect.Width, dstRect.Height), TextureFilter.Linear);
                    _device.EndScene();
                    _device.Present();
                    backBuffer.Dispose();
                }
                catch (SharpDXException ex)
                {
                    StringBuilder msg = new StringBuilder();
                    msg.Append("*************************************** \n");
                    msg.AppendFormat(" Time of exception: {0} \n", DateTime.Now);
                    msg.AppendFormat(" Exception instance that caused the current exception: {0} \n", ex.InnerException);
                    msg.AppendFormat(" Name of the application or object that caused the error: {0} \n", ex.Source);
                    msg.AppendFormat(" Method that threw the exception: {0} \n", ex.TargetSite);
                    msg.AppendFormat(" Stack trace: {0} \n", ex.StackTrace);
                    msg.AppendFormat(" Message: {0} \n", ex.Message);
                    msg.Append("***************************************");
                    Console.WriteLine(msg);
                    Releases();
                    return;
                }
                goto receive_frame; // try to get a second picture (in practice there is none, since we pass one NAL per call; the code could be changed)
            }
        }

        private void GetScale(Size src, ref Size dst)
        {
            int width = 0, height = 0;
            // Scale proportionally: shrink to fit if the picture is larger than dst, otherwise keep its native size
            int sourWidth = src.Width;
            int sourHeight = src.Height;
            if (sourHeight > dst.Height || sourWidth > dst.Width)
            {
                if ((sourWidth * dst.Height) > (sourHeight * dst.Width))
                {
                    width = dst.Width;
                    height = dst.Width * sourHeight / sourWidth;
                }
                else
                {
                    height = dst.Height;
                    width = sourWidth * dst.Height / sourHeight;
                }
            }
            else
            {
                width = sourWidth;
                height = sourHeight;
            }
            dst.Width = width;
            dst.Height = height;
        }

        // Called from outside to stop decoding and explicitly release resources
        public void Stop()
        {
            Releases();
        }
    }
}
```
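`GetScale` plus the centering code computes a letterboxed destination rectangle. The picture is shrunk (never enlarged) to fit the control while keeping its aspect ratio, then centered. Here is the same integer arithmetic in C as a hypothetical `fit_center` helper, handy for checking the numbers by hand. It uses 64-bit intermediates for the products. For ordinary video sizes the results are the same as the C# code's.

```c
#include <assert.h>

typedef struct rect { int x, y, w, h; } rect;

/* Same arithmetic as GetScale + centering in the C# code above: shrink
 * (src_w x src_h) to fit inside `area` keeping the aspect ratio, leave it
 * unscaled if it already fits, then center it within `area`. */
rect fit_center(int src_w, int src_h, rect area)
{
    rect r = area;
    int w, h;
    assert(src_w > 0 && src_h > 0);
    if (src_h > area.h || src_w > area.w) {
        if ((long long)src_w * area.h > (long long)src_h * area.w) {
            w = area.w;                                           /* width-limited */
            h = (int)((long long)area.w * src_h / src_w);
        } else {
            h = area.h;                                           /* height-limited */
            w = (int)((long long)src_w * area.h / src_h);
        }
    } else {
        w = src_w;
        h = src_h;
    }
    r.x += (area.w - w) / 2;
    r.y += (area.h - h) / 2;
    r.w = w;
    r.h = h;
    return r;
}
```

For example, a 1920x1080 picture in an 800x600 control becomes 800x450 at (0, 75). A 640x360 picture stays at its native size and is placed at (80, 120).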
