Please credit the source when reposting: http://www.cnblogs.com/Joanna-Yan/p/7814644.html

Previous article: Netty (1): A First Netty Program

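Main topics:

- Basics of TCP packet sticking/splitting

- An example that ignores TCP packet sticking/splitting

- Using Netty to solve the half-packet read problem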

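1. TCP packet sticking/splitting

TCP is a "stream" protocol. A stream is a sequence of bytes with no boundaries. TCP knows nothing about the meaning of the business data above it. It divides the data into packets according to the actual state of its buffers. As a result, a packet that the application considers complete may be split by TCP into several packets, and several small packets may also be combined into one larger packet. This is the TCP packet sticking and splitting problem.

1.1 The TCP packet sticking/splitting problem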

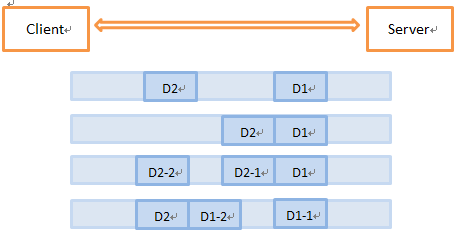

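The figure above illustrates TCP packet sticking and splitting.

Suppose the client sends two packets, D1 and D2, to the server. The number of bytes the server gets from each read is not fixed, so four cases are possible:

- The server reads the two separate packets D1 and D2 in two reads. There is no sticking or splitting;

- The server receives both packets in a single read, with D1 and D2 stuck together. This is called TCP packet sticking;

- The server reads the data in two reads. The first read returns all of D1 plus part of D2, and the second returns the rest of D2. This is called TCP packet splitting;

- The server reads the data in two reads. The first read returns part of D1 (D1_1), and the second returns the rest of D1 (D1_2) together with all of D2. This is also TCP packet splitting.

If the server's TCP receive window is very small while D1 and D2 are relatively large, a fifth case is likely. The server then needs several reads to receive D1 and D2 completely, with several splits along the way.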
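The cases differ only in where the receiver's reads happen to cut the byte stream. The sketch below is a plain-Java simulation, not Netty code. The class `ReadSimulator` and its `reads` method are hypothetical names. The two packets are assumed to be the ASCII strings "AAAA" (D1) and "BBBB" (D2). Each read size stands for the number of bytes one `read()` call happens to return.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: replays one byte stream as a sequence of reads of the given sizes.
class ReadSimulator {

    static List<String> reads(byte[] stream, int... readSizes) {
        List<String> chunks = new ArrayList<>();
        int offset = 0;
        for (int size : readSizes) {
            // One read returns at most `size` bytes, starting where the previous read stopped
            int end = Math.min(offset + size, stream.length);
            chunks.add(new String(stream, offset, end - offset, StandardCharsets.US_ASCII));
            offset = end;
        }
        return chunks;
    }

    public static void main(String[] args) {
        // D1 = "AAAA" and D2 = "BBBB", written back to back into one TCP stream
        byte[] stream = "AAAABBBB".getBytes(StandardCharsets.US_ASCII);
        System.out.println(reads(stream, 4, 4));    // case 1, no sticking/splitting: [AAAA, BBBB]
        System.out.println(reads(stream, 8));       // case 2, sticking: [AAAABBBB]
        System.out.println(reads(stream, 6, 2));    // case 3, D2 split: [AAAABB, BB]
        System.out.println(reads(stream, 2, 6));    // case 4, D1 split: [AA, AABBBB]
        System.out.println(reads(stream, 3, 3, 2)); // case 5, several splits: [AAA, ABB, BB]
    }
}
```

The bytes and their order are identical in every case. Only the chunk boundaries change, which is why the receiver cannot rely on them.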

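1.2 Causes of TCP packet sticking/splitting

There are three main causes:

- The number of bytes the application writes in one write call is larger than the socket send buffer;

- TCP segments the data according to the MSS (maximum segment size);

- IP fragmentation occurs when the payload of an Ethernet frame is larger than the MTU.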
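A little arithmetic makes the MSS point concrete. Assume a standard Ethernet MTU of 1500 bytes, and IPv4 and TCP headers of 20 bytes each with no options. The MSS is then 1500 - 20 - 20 = 1460 bytes. A single 4000-byte write could therefore be sent as three segments of 1460, 1460 and 1080 bytes.

The hypothetical helper below only does this arithmetic. A real TCP stack also takes the congestion window, the peer's receive window and Nagle's algorithm into account, so actual segment sizes can differ.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that only does the MSS arithmetic; it does not model a real TCP stack.
class MssSegmenter {

    static final int ETHERNET_MTU = 1500;
    static final int IPV4_HEADER = 20; // minimum IPv4 header, no options
    static final int TCP_HEADER = 20;  // minimum TCP header, no options

    // Largest TCP payload that fits into one IP packet of the given MTU
    static int mssFor(int mtu) {
        return mtu - IPV4_HEADER - TCP_HEADER;
    }

    // Sizes of the segments that a single write of writeSize bytes would be cut into
    static List<Integer> segment(int writeSize, int mss) {
        List<Integer> sizes = new ArrayList<>();
        for (int remaining = writeSize; remaining > 0; remaining -= mss) {
            sizes.add(Math.min(remaining, mss));
        }
        return sizes;
    }

    public static void main(String[] args) {
        int mss = mssFor(ETHERNET_MTU);
        System.out.println(mss);                // 1460
        System.out.println(segment(4000, mss)); // [1460, 1460, 1080]
    }
}
```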

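1.3 Strategies for solving packet sticking

TCP cannot understand the business data of the layers above it. It therefore cannot guarantee that packets will not be split and recombined. The problem can only be solved through the design of the upper-layer application protocol. The mainstream industry solutions can be summarized as follows:

- Fixed-length messages. For example, every message is exactly 200 bytes, and a shorter message is padded with spaces;

- Append a carriage return and line feed to the end of each packet as a delimiter, as the FTP protocol does;

- Split each message into a header and a body, where the header contains a field giving the total length of the message (or the length of the body). A common design makes the first header field an int32 that holds the total message length;

- More complex application-layer protocols.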
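Here is a minimal sketch of the first strategy. The plain-Java class below (the name `FixedLengthFramer` is hypothetical, and this is not Netty's implementation) works in two directions:

- On the sending side, it pads every message with spaces to a fixed frame length.
- On the receiving side, it keeps leftover bytes between reads until a whole frame is available.

The sketch assumes ASCII messages and uses 20-byte frames instead of 200 to keep the demo short. In Netty, `FixedLengthFrameDecoder` covers the decoding half.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical fixed-length framer (sketch only, not Netty's FixedLengthFrameDecoder).
class FixedLengthFramer {

    private final int frameLength;
    // Bytes received so far that do not yet make up a whole frame
    private byte[] pending = new byte[0];

    FixedLengthFramer(int frameLength) {
        this.frameLength = frameLength;
    }

    // Sender side: copy the message into a frame of exactly frameLength bytes, padded with spaces
    static byte[] encode(String msg, int frameLength) {
        byte[] body = msg.getBytes(StandardCharsets.US_ASCII);
        if (body.length > frameLength) {
            throw new IllegalArgumentException("message longer than " + frameLength + " bytes");
        }
        byte[] frame = new byte[frameLength];
        Arrays.fill(frame, (byte) ' ');
        System.arraycopy(body, 0, frame, 0, body.length);
        return frame;
    }

    // Receiver side: append the bytes of one read and return every complete frame, padding removed
    List<String> feed(byte[] chunk) {
        byte[] buf = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, buf, pending.length, chunk.length);
        List<String> messages = new ArrayList<>();
        int offset = 0;
        while (buf.length - offset >= frameLength) {
            int end = offset + frameLength;
            while (end > offset && buf[end - 1] == ' ') {
                end--; // strip the trailing space padding
            }
            messages.add(new String(buf, offset, end - offset, StandardCharsets.US_ASCII));
            offset += frameLength;
        }
        // Keep the incomplete tail for the next read
        pending = Arrays.copyOfRange(buf, offset, buf.length);
        return messages;
    }

    public static void main(String[] args) {
        byte[] stream = new byte[40];
        System.arraycopy(encode("HELLO", 20), 0, stream, 0, 20);
        System.arraycopy(encode("QUERY TIME ORDER", 20), 0, stream, 20, 20);
        FixedLengthFramer framer = new FixedLengthFramer(20);
        // The first read cuts the second frame in half
        System.out.println(framer.feed(Arrays.copyOfRange(stream, 0, 25)));  // [HELLO]
        System.out.println(framer.feed(Arrays.copyOfRange(stream, 25, 40))); // [QUERY TIME ORDER]
    }
}
```

The decoder removes padding by stripping trailing spaces. As a result, a message that genuinely ends in spaces would lose them. This is a trade-off inherent to space padding rather than something specific to this sketch.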

Below, a practical example shows how Netty's half-packet decoders solve the TCP packet sticking/splitting problem.

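2. An example that fails because TCP packet sticking is ignored

In the earlier time server example, we stressed several times that half-packet reads were not handled. Functional tests often pass anyway. Under load, or once large messages are sent, packet sticking/splitting appears. If the code does not handle it, decoding goes out of alignment or fails outright, and the program stops working correctly.

Below, we take the example from Netty (1): A First Netty Program and reproduce the failure. We then show how to use Netty's half-packet decoders correctly to solve the TCP packet sticking/splitting problem.

2.1 Modifying TimeServer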

```java
package joanna.yan.netty;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class TimeServer {

    public static void main(String[] args) throws Exception {
        int port = 9090;
        if (args != null && args.length > 0) {
            try {
                port = Integer.valueOf(args[0]);
            } catch (Exception e) {
                // Fall back to the default port
            }
        }
        new TimeServer().bind(port);
    }

    public void bind(int port) throws Exception {
        /*
         * Configure the server-side NIO thread groups. Each group holds a set of NIO threads
         * dedicated to handling network events; in effect they are Reactor thread groups.
         * Two groups are created: one accepts client connections,
         * the other performs reads and writes on the accepted SocketChannels.
         */
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            // ServerBootstrap is Netty's helper class for starting an NIO server; it reduces server-side complexity.
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
                .channel(NioServerSocketChannel.class)
                .option(ChannelOption.SO_BACKLOG, 1024)
                /*
                 * Bind the I/O event handler ChildChannelHandler. It plays the role of the handler
                 * in the Reactor pattern and processes network I/O events such as logging and message encoding/decoding.
                 */
                .childHandler(new ChildChannelHandler());
            /*
             * Bind the port and wait synchronously for success: bind() starts the bind and sync() blocks
             * until it completes. Netty then returns a ChannelFuture, which is similar to
             * java.util.concurrent.Future and is mainly used for notifications about asynchronous operations.
             */
            ChannelFuture f = b.bind(port).sync();
            // Block until the server's listening channel is closed; only then does main() exit.
            f.channel().closeFuture().sync();
        } finally {
            // Shut down gracefully and release the thread pool resources
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }

    private class ChildChannelHandler extends ChannelInitializer<SocketChannel> {
        @Override
        protected void initChannel(SocketChannel arg0) throws Exception {
            // arg0.pipeline().addLast(new TimeServerHandler());
            // Simulate the TCP packet sticking/splitting failure
            arg0.pipeline().addLast(new TimeServerHandler1());
        }
    }
}
```

```java
package joanna.yan.netty;

import java.util.Date;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

/**
 * Handles network read and write events.
 * Simulates the TCP packet sticking/splitting failure.
 * @author Joanna.Yan
 * @date 2017-11-08 18:54:35
 */
public class TimeServerHandler1 extends ChannelInboundHandlerAdapter {

    private int counter;

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        ByteBuf buf = (ByteBuf) msg;
        byte[] req = new byte[buf.readableBytes()];
        buf.readBytes(req);
        // Treats every read as exactly one request and strips only the trailing line separator
        String body = new String(req, "UTF-8").substring(0, req.length - System.getProperty("line.separator").length());
        System.out.println("The time server receive order : " + body + " ;the counter is :" + ++counter);
        String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body)
                ? new Date(System.currentTimeMillis()).toString() : "BAD ORDER";
        currentTime = currentTime + System.getProperty("line.separator");
        ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());
        // write() alone only queues the response; writeAndFlush() actually sends it
        ctx.writeAndFlush(resp);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        ctx.close();
    }
}
```

2.2 Modifying TimeClient

```java
package joanna.yan.netty;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class TimeClient {

    public static void main(String[] args) throws Exception {
        int port = 9090;
        if (args != null && args.length > 0) {
            try {
                port = Integer.valueOf(args[0]);
            } catch (Exception e) {
                // Fall back to the default port
            }
        }
        new TimeClient().connect(port, "127.0.0.1");
    }

    public void connect(int port, String host) throws Exception {
        // Configure the client-side NIO thread group
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap b = new Bootstrap();
            b.group(group)
                .channel(NioSocketChannel.class)
                .option(ChannelOption.TCP_NODELAY, true)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) throws Exception {
                        // ch.pipeline().addLast(new TimeClientHandler());
                        // Simulate the TCP packet sticking/splitting failure
                        ch.pipeline().addLast(new TimeClientHandler1());
                    }
                });
            // Start the asynchronous connect operation
            ChannelFuture f = b.connect(host, port).sync();
            // Wait until the client connection is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down gracefully and release the NIO thread group
            group.shutdownGracefully();
        }
    }
}
```

```java
package joanna.yan.netty;

import java.util.logging.Logger;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

/**
 * Simulates the TCP packet sticking/splitting failure.
 * @author Joanna.Yan
 * @date 2017-11-10 14:18:51
 */
public class TimeClientHandler1 extends ChannelInboundHandlerAdapter {

    private static final Logger logger = Logger.getLogger(TimeClientHandler1.class.getName());

    private int counter;

    private byte[] req;

    public TimeClientHandler1() {
        req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        // Send 100 requests back to back as soon as the connection is up
        ByteBuf message = null;
        for (int i = 0; i < 100; i++) {
            message = Unpooled.buffer(req.length);
            message.writeBytes(req);
            ctx.writeAndFlush(message);
        }
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        // Treats every read as exactly one response
        ByteBuf buf = (ByteBuf) msg;
        byte[] req = new byte[buf.readableBytes()];
        buf.readBytes(req);
        String body = new String(req, "UTF-8");
        System.out.println("Now is :" + body + " ;the counter is :" + ++counter);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        // Release resources
        logger.warning("Unexpected exception from downstream : " + cause.getMessage());
        ctx.close();
    }
}
```

2.3 Results

Run the server and then the client. The results are as follows.

Server output:

The time server receive order : QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QU ;the counter is :1 The time server receive order : TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER QUER TIME ORDER ;the counter is :2

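The server output shows that it received only two messages, which together contain exactly 100 requests. We expected 100 separate messages, each containing one "QUERY TIME ORDER" command. This shows that TCP packet sticking occurred.

Client output: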

```
Now is : BAD ORDER
BAD ORDER
; the counter is : 1
```

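By design, the client should have received 100 messages carrying the current system time, but it actually received only one. The reason is straightforward. The server received only 2 request messages, so it sent only 2 responses. Because the requests did not match the query command, both responses were "BAD ORDER". Yet the client received a single message containing both "BAD ORDER" responses. This shows that the server's responses were also stuck together.

Because the program above does not handle TCP packet sticking/splitting, it stops working correctly as soon as sticking occurs.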
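The failure can be reproduced without any networking. In the hypothetical simulation below, `naive` follows the logic of TimeServerHandler1: one read equals one request. `framed` instead buffers across reads and only interprets complete lines.

Two assumptions are made for illustration. First, the 1700-byte request stream is split into reads of 1024 and 676 bytes. Second, "\n" replaces the platform line separator so that the result does not depend on the OS.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical simulation of the server side of the example, without any networking.
class NaiveVsFramed {

    static final String REQUEST = "QUERY TIME ORDER";
    // Assumption: "\n" instead of System.getProperty("line.separator"), so the result is OS-independent
    static final String SEP = "\n";

    // Like TimeServerHandler1: treats every read as exactly one request and strips one trailing separator.
    static List<String> naive(List<String> reads) {
        List<String> replies = new ArrayList<>();
        for (String chunk : reads) {
            String body = chunk.substring(0, chunk.length() - SEP.length());
            replies.add(REQUEST.equalsIgnoreCase(body) ? "OK" : "BAD ORDER"); // "OK" stands in for the time
        }
        return replies;
    }

    // Line-aware: buffers across reads and only interprets complete lines.
    static List<String> framed(List<String> reads) {
        List<String> replies = new ArrayList<>();
        StringBuilder pending = new StringBuilder();
        for (String chunk : reads) {
            pending.append(chunk);
            int idx;
            while ((idx = pending.indexOf(SEP)) >= 0) {
                String body = pending.substring(0, idx);
                pending.delete(0, idx + SEP.length());
                replies.add(REQUEST.equalsIgnoreCase(body) ? "OK" : "BAD ORDER");
            }
        }
        return replies;
    }

    public static void main(String[] args) {
        StringBuilder stream = new StringBuilder();
        for (int i = 0; i < 100; i++) {
            stream.append(REQUEST).append(SEP); // 100 x 17 = 1700 bytes
        }
        // Hypothetical split: the stream arrives in two reads of 1024 and 676 bytes
        List<String> reads = Arrays.asList(stream.substring(0, 1024), stream.substring(1024));
        System.out.println(naive(reads));         // [BAD ORDER, BAD ORDER]
        System.out.println(framed(reads).size()); // 100
    }
}
```

Whatever the split points are, `framed` always finds 100 requests, while `naive` produces one reply per read.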

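Next, we use Netty's LineBasedFrameDecoder and StringDecoder to solve the TCP packet sticking problem.

3. Solving TCP packet sticking with LineBasedFrameDecoder

Netty ships several decoders out of the box for the half-packet reads and writes caused by TCP packet sticking/splitting. Once you know how to use them, packet sticking becomes easy to deal with, to the point where you hardly need to think about it. This is a clear advantage over the JDK's native NIO API and many other NIO frameworks.

Now let's modify the time server.

3.1 TimeServer that handles TCP packet sticking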

```java
package joanna.yan.netty.sp;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.LineBasedFrameDecoder;
import io.netty.handler.codec.string.StringDecoder;

public class TimeServer {

    public static void main(String[] args) throws Exception {
        int port = 9090;
        if (args != null && args.length > 0) {
            try {
                port = Integer.valueOf(args[0]);
            } catch (Exception e) {
                // Fall back to the default port
            }
        }
        new TimeServer().bind(port);
    }

    public void bind(int port) throws Exception {
        // Configure the server-side NIO thread groups
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
                .channel(NioServerSocketChannel.class)
                .option(ChannelOption.SO_BACKLOG, 1024)
                .childHandler(new ChildChannelHandler());
            // Bind the port and wait synchronously for success
            ChannelFuture f = b.bind(port).sync();
            // Wait until the server's listening port is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down gracefully and release the thread pool resources
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }

    private class ChildChannelHandler extends ChannelInitializer<SocketChannel> {
        @Override
        protected void initChannel(SocketChannel arg0) throws Exception {
            // Split the inbound byte stream into lines of at most 1024 bytes
            arg0.pipeline().addLast(new LineBasedFrameDecoder(1024));
            // Turn each line into a String
            arg0.pipeline().addLast(new StringDecoder());
            arg0.pipeline().addLast(new TimeServerHandler());
        }
    }
}
```

```java
package joanna.yan.netty.sp;

import java.util.Date;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class TimeServerHandler extends ChannelInboundHandlerAdapter {

    private int counter;

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        // msg is already one complete line, decoded to a String, with the line separator stripped
        String body = (String) msg;
        System.out.println("The time server receive order : " + body + " ;the counter is : " + ++counter);
        String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body)
                ? new Date(System.currentTimeMillis()).toString() : "BAD ORDER";
        currentTime = currentTime + System.getProperty("line.separator");
        ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());
        ctx.writeAndFlush(resp);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        ctx.close();
    }
}
```

3.2 TimeClient that handles TCP packet sticking

```java
package joanna.yan.netty.sp;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.codec.LineBasedFrameDecoder;
import io.netty.handler.codec.string.StringDecoder;

public class TimeClient {

    public static void main(String[] args) throws Exception {
        int port = 9090;
        if (args != null && args.length > 0) {
            try {
                port = Integer.valueOf(args[0]);
            } catch (Exception e) {
                // Fall back to the default port
            }
        }
        new TimeClient().connect(port, "127.0.0.1");
    }

    public void connect(int port, String host) throws Exception {
        // Configure the client-side NIO thread group
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap b = new Bootstrap();
            b.group(group)
                .channel(NioSocketChannel.class)
                .option(ChannelOption.TCP_NODELAY, true)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) throws Exception {
                        // Add LineBasedFrameDecoder and StringDecoder directly in front of TimeClientHandler
                        ch.pipeline().addLast(new LineBasedFrameDecoder(1024));
                        ch.pipeline().addLast(new StringDecoder());
                        ch.pipeline().addLast(new TimeClientHandler());
                    }
                });
            // Start the asynchronous connect operation
            ChannelFuture f = b.connect(host, port).sync();
            // Wait until the client connection is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down gracefully and release the NIO thread group
            group.shutdownGracefully();
        }
    }
}
```

```java
package joanna.yan.netty.sp;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

import java.util.logging.Logger;

public class TimeClientHandler extends ChannelInboundHandlerAdapter {

    private static final Logger logger = Logger.getLogger(TimeClientHandler.class.getName());

    private int counter;

    private byte[] req;

    public TimeClientHandler() {
        req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        ByteBuf message = null;
        for (int i = 0; i < 100; i++) {
            message = Unpooled.buffer(req.length);
            message.writeBytes(req);
            ctx.writeAndFlush(message);
        }
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        // msg is already the response decoded into a String, which is much simpler than the previous version
        String body = (String) msg;
        System.out.println("Now is :" + body + " ;the counter is :" + ++counter);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        // Release resources
        logger.warning("Unexpected exception from downstream : " + cause.getMessage());
        ctx.close();
    }
}
```

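3.3 Running the time server that handles TCP packet sticking

To reproduce packet sticking and half-packet reads as closely as possible, we use a simple stress test. Once the connection is established, the client sends 100 messages to the server back to back. We then check the output of both sides.

Server output: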

```
The time server receive order : QUER TIME ORDER ;the counter is : 1
The time server receive order : QUER TIME ORDER ;the counter is : 2
The time server receive order : QUER TIME ORDER ;the counter is : 3
The time server receive order : QUER TIME ORDER ;the counter is : 4
The time server receive order : QUER TIME ORDER ;the counter is : 5
The time server receive order : QUER TIME ORDER ;the counter is : 6
The time server receive order : QUER TIME ORDER ;the counter is : 7
The time server receive order : QUER TIME ORDER ;the counter is : 8
The time server receive order : QUER TIME ORDER ;the counter is : 9
The time server receive order : QUER TIME ORDER ;the counter is : 10
The time server receive order : QUER TIME ORDER ;the counter is : 11
The time server receive order : QUER TIME ORDER ;the counter is : 12
The time server receive order : QUER TIME ORDER ;the counter is : 13
The time server receive order : QUER TIME ORDER ;the counter is : 14
The time server receive order : QUER TIME ORDER ;the counter is : 15
The time server receive order : QUER TIME ORDER ;the counter is : 16
The time server receive order : QUER TIME ORDER ;the counter is : 17
The time server receive order : QUER TIME ORDER ;the counter is : 18
The time server receive order : QUER TIME ORDER ;the counter is : 19
The time server receive order : QUER TIME ORDER ;the counter is : 20
The time server receive order : QUER TIME ORDER ;the counter is : 21
The time server receive order : QUER TIME ORDER ;the counter is : 22
The time server receive order : QUER TIME ORDER ;the counter is : 23
The time server receive order : QUER TIME ORDER ;the counter is : 24
The time server receive order : QUER TIME ORDER ;the counter is : 25
The time server receive order : QUER TIME ORDER ;the counter is : 26
The time server receive order : QUER TIME ORDER ;the counter is : 27
The time server receive order : QUER TIME ORDER ;the counter is : 28
The time server receive order : QUER TIME ORDER ;the counter is : 29
The time server receive order : QUER TIME ORDER ;the counter is : 30
The time server receive order : QUER TIME ORDER ;the counter is : 31
The time server receive order : QUER TIME ORDER ;the counter is : 32
The time server receive order : QUER TIME ORDER ;the counter is : 33
The time server receive order : QUER TIME ORDER ;the counter is : 34
The time server receive order : QUER TIME ORDER ;the counter is : 35
The time server receive order : QUER TIME ORDER ;the counter is : 36
The time server receive order : QUER TIME ORDER ;the counter is : 37
The time server receive order : QUER TIME ORDER ;the counter is : 38
The time server receive order : QUER TIME ORDER ;the counter is : 39
The time server receive order : QUER TIME ORDER ;the counter is : 40
The time server receive order : QUER TIME ORDER ;the counter is : 41
The time server receive order : QUER TIME ORDER ;the counter is : 42
The time server receive order : QUER TIME ORDER ;the counter is : 43
The time server receive order : QUER TIME ORDER ;the counter is : 44
The time server receive order : QUER TIME ORDER ;the counter is : 45
The time server receive order : QUER TIME ORDER ;the counter is : 46
The time server receive order : QUER TIME ORDER ;the counter is : 47
The time server receive order : QUER TIME ORDER ;the counter is : 48
The time server receive order : QUER TIME ORDER ;the counter is : 49
The time server receive order : QUER TIME ORDER ;the counter is : 50
The time server receive order : QUER TIME ORDER ;the counter is : 51
The time server receive order : QUER TIME ORDER ;the counter is : 52
The time server receive order : QUER TIME ORDER ;the counter is : 53
The time server receive order : QUER TIME ORDER ;the counter is : 54
The time server receive order : QUER TIME ORDER ;the counter is : 55
The time server receive order : QUER TIME ORDER ;the counter is : 56
The time server receive order : QUER TIME ORDER ;the counter is : 57
The time server receive order : QUER TIME ORDER ;the counter is : 58
The time server receive order : QUER TIME ORDER ;the counter is : 59
The time server receive order : QUER TIME ORDER ;the counter is : 60
The time server receive order : QUER TIME ORDER ;the counter is : 61
The time server receive order : QUER TIME ORDER ;the counter is : 62
The time server receive order : QUER TIME ORDER ;the counter is : 63
The time server receive order : QUER TIME ORDER ;the counter is : 64
The time server receive order : QUER TIME ORDER ;the counter is : 65
The time server receive order : QUER TIME ORDER ;the counter is : 66
The time server receive order : QUER TIME ORDER ;the counter is : 67
The time server receive order : QUER TIME ORDER ;the counter is : 68
The time server receive order : QUER TIME ORDER ;the counter is : 69
The time server receive order : QUER TIME ORDER ;the counter is : 70
The time server receive order : QUER TIME ORDER ;the counter is : 71
The time server receive order : QUER TIME ORDER ;the counter is : 72
The time server receive order : QUER TIME ORDER ;the counter is : 73
The time server receive order : QUER TIME ORDER ;the counter is : 74
The time server receive order : QUER TIME ORDER ;the counter is : 75
The time server receive order : QUER TIME ORDER ;the counter is : 76
The time server receive order : QUER TIME ORDER ;the counter is : 77
The time server receive order : QUER TIME ORDER ;the counter is : 78
The time server receive order : QUER TIME ORDER ;the counter is : 79
The time server receive order : QUER TIME ORDER ;the counter is : 80
The time server receive order : QUER TIME ORDER ;the counter is : 81
The time server receive order : QUER TIME ORDER ;the counter is : 82
The time server receive order : QUER TIME ORDER ;the counter is : 83
The time server receive order : QUER TIME ORDER ;the counter is : 84
The time server receive order : QUER TIME ORDER ;the counter is : 85
The time server receive order : QUER TIME ORDER ;the counter is : 86
The time server receive order : QUER TIME ORDER ;the counter is : 87
The time server receive order : QUER TIME ORDER ;the counter is : 88
The time server receive order : QUER TIME ORDER ;the counter is : 89
The time server receive order : QUER TIME ORDER ;the counter is : 90
The time server receive order : QUER TIME ORDER ;the counter is : 91
The time server receive order : QUER TIME ORDER ;the counter is : 92
The time server receive order : QUER TIME ORDER ;the counter is : 93
The time server receive order : QUER TIME ORDER ;the counter is : 94
The time server receive order : QUER TIME ORDER ;the counter is : 95
The time server receive order : QUER TIME ORDER ;the counter is : 96
The time server receive order : QUER TIME ORDER ;the counter is : 97
The time server receive order : QUER TIME ORDER ;the counter is : 98
The time server receive order : QUER TIME ORDER ;the counter is : 99
The time server receive order : QUER TIME ORDER ;the counter is : 100
```

Client output:

```
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :1
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :2
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :3
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :4
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :5
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :6
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :7
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :8
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :9
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :10
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :11
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :12
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :13
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :14
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :15
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :16
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :17
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :18
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :19
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :20
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :21
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :22
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :23
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :24
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :25
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :26
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :27
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :28
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :29
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :30
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :31
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :32
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :33
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :34
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :35
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :36
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :37
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :38
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :39
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :40
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :41
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :42
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :43
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :44
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :45
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :46
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :47
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :48
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :49
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :50
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :51
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :52
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :53
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :54
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :55
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :56
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :57
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :58
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :59
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :60
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :61
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :62
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :63
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :64
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :65
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :66
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :67
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :68
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :69
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :70
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :71
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :72
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :73
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :74
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :75
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :76
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :77
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :78
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :79
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :80
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :81
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :82
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :83
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :84
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :85
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :86
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :87
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :88
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :89
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :90
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :91
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :92
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :93
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :94
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :95
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :96
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :97
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :98
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :99
Now is :Wed Aug 30 16:38:34 CST 2017 ;the counter is :100
```

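The results are exactly as expected. LineBasedFrameDecoder and StringDecoder solve the half-packet read problem caused by TCP packet sticking. All the user has to do is add the half-packet decoding handlers to the ChannelPipeline. No extra code is needed, which makes them very easy to use.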

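3.4 How LineBasedFrameDecoder and StringDecoder work

LineBasedFrameDecoder scans the readable bytes of the ByteBuf in order, looking for "\n" or "\r\n". When it finds one, it takes that position as the end. The bytes from the reader index up to the end position form one line.

It is a decoder that uses the line break as the end-of-message marker, and it can emit each line either with or without the delimiter. It also supports a configurable maximum line length. If that many bytes have been read without finding a line break, it raises an exception (a TooLongFrameException) and discards the bytes read so far.

The maximum length is the constructor argument (1024 in the examples above). The overload LineBasedFrameDecoder(int maxLength, boolean stripDelimiter, boolean failFast) additionally controls two things: whether the delimiter is stripped, and whether the error is reported as soon as the limit is exceeded.

StringDecoder is very simple: it converts the received ByteBuf into a String and passes it on to the next handler. Together, LineBasedFrameDecoder and StringDecoder form a line-based text decoder designed to handle TCP packet sticking and splitting.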
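Here is a simplified plain-Java sketch of what the LineBasedFrameDecoder + StringDecoder pair does. The class `LineFramer` is hypothetical and is not Netty's source. It:

- keeps unterminated bytes between reads;
- splits on "\n" or "\r\n";
- optionally keeps the delimiter;
- enforces a maximum line length;
- decodes each complete line as UTF-8.

On an over-long line it simply throws an `IllegalStateException` and drops everything buffered. This is cruder than Netty's handling of `TooLongFrameException`.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for LineBasedFrameDecoder followed by StringDecoder (simplified, not Netty's code).
class LineFramer {

    private final int maxLength;          // longest allowed line, delimiter excluded
    private final boolean stripDelimiter; // drop "\n" / "\r\n" from the emitted lines?
    private byte[] pending = new byte[0]; // bytes of a line whose "\n" has not arrived yet

    LineFramer(int maxLength, boolean stripDelimiter) {
        this.maxLength = maxLength;
        this.stripDelimiter = stripDelimiter;
    }

    // Feed the bytes of one read; returns the lines completed by this read, decoded as UTF-8.
    List<String> feed(byte[] chunk) {
        byte[] buf = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, buf, pending.length, chunk.length);
        List<String> lines = new ArrayList<>();
        int start = 0;
        for (int i = 0; i < buf.length; i++) {
            if (buf[i] != '\n') {
                continue;
            }
            // A line ends at "\n" or "\r\n"
            int delimiterLength = (i > start && buf[i - 1] == '\r') ? 2 : 1;
            int contentEnd = i + 1 - delimiterLength;
            if (contentEnd - start > maxLength) {
                pending = new byte[0]; // simplification: drop everything buffered
                throw new IllegalStateException("line longer than " + maxLength + " bytes");
            }
            int end = stripDelimiter ? contentEnd : i + 1;
            // The "StringDecoder" step: bytes of one complete line -> String
            lines.add(new String(buf, start, end - start, StandardCharsets.UTF_8));
            start = i + 1;
        }
        pending = Arrays.copyOfRange(buf, start, buf.length);
        if (pending.length > maxLength) {
            pending = new byte[0]; // no line break within maxLength bytes: discard and report
            throw new IllegalStateException("no line break within " + maxLength + " bytes");
        }
        return lines;
    }

    public static void main(String[] args) {
        LineFramer framer = new LineFramer(1024, true);
        // One request plus the first part of a second one arrive in a single read
        System.out.println(framer.feed("QUERY TIME ORDER\r\nQUERY TI".getBytes(StandardCharsets.UTF_8))); // [QUERY TIME ORDER]
        System.out.println(framer.feed("ME ORDER\n".getBytes(StandardCharsets.UTF_8)));                   // [QUERY TIME ORDER]
    }
}
```

The sketch decodes a line only after the whole line has arrived. This also avoids splitting a multi-byte UTF-8 character across two reads, because the byte 0x0A never occurs inside a multi-byte UTF-8 sequence.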

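Question: what if the messages do not end with a line break? Or what if there is no carriage return/line feed at all, and messages are delimited by a length field in the header? Do we have to write our own half-packet decoder? No. Netty provides several decoders for TCP packet sticking/splitting to meet different needs, such as DelimiterBasedFrameDecoder, FixedLengthFrameDecoder and LengthFieldBasedFrameDecoder.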
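Here is a minimal sketch of the length-field case, under two assumptions:

- Each message is prefixed with a 4-byte big-endian int32 that holds the body length, excluding the header itself.
- The receiver waits until both the header and the whole body have arrived.

`LengthFieldFramer` is a hypothetical name. In Netty the same job is done by `LengthFieldPrepender` on the sending side and `LengthFieldBasedFrameDecoder` on the receiving side.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical length-prefixed framer (sketch only, not Netty's LengthFieldBasedFrameDecoder).
class LengthFieldFramer {

    private static final int HEADER = 4; // int32 body length, big-endian
    private final int maxBodyLength;
    private byte[] pending = new byte[0]; // bytes of an incomplete header or body

    LengthFieldFramer(int maxBodyLength) {
        this.maxBodyLength = maxBodyLength;
    }

    // Sender side: 4-byte length header followed by the UTF-8 body
    static byte[] encode(String msg) {
        byte[] body = msg.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(HEADER + body.length).putInt(body.length).put(body).array();
    }

    // Receiver side: append the bytes of one read and return every complete message
    List<String> feed(byte[] chunk) {
        byte[] buf = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, buf, pending.length, chunk.length);
        List<String> messages = new ArrayList<>();
        int offset = 0;
        while (buf.length - offset >= HEADER) {
            int bodyLength = ByteBuffer.wrap(buf, offset, HEADER).getInt();
            if (bodyLength < 0 || bodyLength > maxBodyLength) {
                throw new IllegalStateException("bad length field: " + bodyLength);
            }
            if (buf.length - offset - HEADER < bodyLength) {
                break; // body not complete yet, wait for the next read
            }
            messages.add(new String(buf, offset + HEADER, bodyLength, StandardCharsets.UTF_8));
            offset += HEADER + bodyLength;
        }
        pending = Arrays.copyOfRange(buf, offset, buf.length);
        return messages;
    }

    public static void main(String[] args) {
        byte[] first = encode("QUERY TIME ORDER"); // 4 + 16 = 20 bytes
        byte[] second = encode("BAD ORDER");       // 4 + 9 = 13 bytes
        byte[] stream = Arrays.copyOf(first, first.length + second.length);
        System.arraycopy(second, 0, stream, first.length, second.length);
        LengthFieldFramer framer = new LengthFieldFramer(1024);
        // Three reads: part of a header, then message 1 plus 2 header bytes of message 2, then the rest
        System.out.println(framer.feed(Arrays.copyOfRange(stream, 0, 3)));   // []
        System.out.println(framer.feed(Arrays.copyOfRange(stream, 3, 22)));  // [QUERY TIME ORDER]
        System.out.println(framer.feed(Arrays.copyOfRange(stream, 22, 33))); // [BAD ORDER]
    }
}
```

The `maxBodyLength` check matters in practice. Without it, a corrupted or malicious length field could make the receiver buffer an arbitrarily large amount of data.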


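Author: Joanna.Yan

Source: http://www.cnblogs.com/Joanna-Yan/

The copyright of this article belongs to the author. Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be given in a prominent place on the page. Otherwise, the author reserves the right to pursue legal liability.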