0x00 Configuring multiple topics in Filebeat

filebeat.prospectors:
- input_type: log
  encoding: GB2312
  # fields_under_root: true
  fields:  # add custom fields
    serverip: 192.168.1.10
    logtopic: wap
  enabled: true
  paths:
    - /app/wap/logs/catalina.out
  multiline.pattern: '^\['  # group Java stack traces: a new event starts at a line beginning with '['
  multiline.negate: true
  multiline.match: after
  tail_files: false
- input_type: log
  encoding: GB2312
  # fields_under_root: true
  fields:  # add custom fields
    serverip: 192.168.1.10
    logtopic: api
  enabled: true
  paths:
    - /app/api/logs/catalina.out
  multiline.pattern: '^\['  # group Java stack traces: a new event starts at a line beginning with '['
  multiline.negate: true
  multiline.match: after
  tail_files: false

#----------------------------- Kafka output --------------------------------
output.kafka:
  enabled: true
  hosts: ["192.168.16.222:9092","192.168.16.237:9092","192.168.16.238:9092"]
  topic: 'elk-%{[fields.logtopic]}'  # resolved from the logtopic value under fields
  partition.hash:
    reachable_only: true
  compression: gzip
  max_message_bytes: 1000000
  required_acks: 1

logging.to_files: true
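The combination of multiline.negate: true and multiline.match: after can be hard to picture, so here is a minimal Python sketch of the grouping it is meant to produce. It is only a model of the behavior, not Filebeat's implementation; the function name group_multiline and the sample log lines are made up for illustration.

```python
import re

def group_multiline(lines, pattern=r"^\["):
    """Model of multiline.negate: true + multiline.match: after.

    A line matching `pattern` starts a new event; every other line is
    appended to the event before it.
    """
    start = re.compile(pattern)
    events = []
    for line in lines:
        if start.search(line) or not events:
            events.append(line)          # beginning of a new event
        else:
            events[-1] += "\n" + line    # continuation, e.g. a stack-trace line
    return events

# Hypothetical catalina.out excerpt (not taken from a real log).
sample = [
    "[2018-01-01 12:00:00] [ERROR] main com.example.Foo 42:boom",
    "java.lang.NullPointerException",
    "    at com.example.Foo.bar(Foo.java:42)",
    "[2018-01-01 12:00:01] [INFO] main com.example.Foo 50:ok",
]
events = group_multiline(sample)
for event in events:
    print(repr(event))
```

In this model the exception and its stack-trace line stay attached to the ERROR line, so the stack trace travels to Kafka as one message.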

0x01 Verify that the output reaches Kafka

$ bin/kafka-topics.sh --list --zookeeper kafka-01:2181,kafka-02:2181,kafka-03:2181

elk-wap

elk-api
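The two topic names listed here follow from the topic setting 'elk-%{[fields.logtopic]}' in the Filebeat output. A rough Python sketch of that substitution, assuming events carry the fields block configured above (the helper resolve_topic is hypothetical and only mimics the format string; it is not Filebeat code):

```python
import re

def resolve_topic(template, event):
    """Replace each %{[a.b]} placeholder with the value at event["a"]["b"]."""
    def lookup(match):
        value = event
        for key in match.group(1).split("."):
            value = value[key]
        return str(value)
    return re.sub(r"%\{\[([^\]]+)\]\}", lookup, template)

# Events as the two prospectors would tag them.
wap_event = {"fields": {"serverip": "192.168.1.10", "logtopic": "wap"}}
api_event = {"fields": {"serverip": "192.168.1.10", "logtopic": "api"}}

topics = [resolve_topic("elk-%{[fields.logtopic]}", e) for e in (wap_event, api_event)]
print(topics)
```

Adding a third prospector with a different logtopic value would therefore produce a third elk-* topic without touching the output section.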

0x02 Configure the Logstash cluster

input {
  kafka {
    bootstrap_servers => "kafka-01:9092,kafka-02:9092,kafka-03:9092"
    topics_pattern => "elk-.*"
    consumer_threads => 5
    decorate_events => true
    codec => "json"
    auto_offset_reset => "latest"
    group_id => "logstash1"  # must be identical on every node of the Logstash cluster
  }
}

filter {
  ruby {
    code => "event.timestamp.time.localtime"
  }
  mutate {
    remove_field => ["beat"]
  }
  grok {
    match => {
      "message" => "\[(?<time>\d+-\d+-\d+\s\d+:\d+:\d+)\] \[(?<level>\w+)\] (?<thread>[\w|-]+) (?<class>[\w|\.]+) (?<lineNum>\d+):(?<msg>.+)"
    }
  }
}

output {
  elasticsearch {
    hosts => ["192.168.16.221:9200","192.168.16.251:9200","192.168.16.252:9200"]
    # index => "%{[fields][logtopic]}"  # takes the name straight from the event; the index name then lacks the "elk-" prefix
    index => "%{[@metadata][topic]}-%{+YYYY-MM-dd}"
  }
  stdout {
    codec => rubydebug
  }
}
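To see which fields the grok pattern in the filter section extracts, it can be tried outside Logstash. The sketch below is a Python approximation: Python's re module writes named groups as (?P<name>...) rather than grok's (?<name>...), so the pattern is translated accordingly, and the sample log line is invented for illustration.

```python
import re

# The grok expression from the filter block, with named groups in Python syntax.
LOG_PATTERN = re.compile(
    r"\[(?P<time>\d+-\d+-\d+\s\d+:\d+:\d+)\] "
    r"\[(?P<level>\w+)\] "
    r"(?P<thread>[\w|-]+) "
    r"(?P<class>[\w|\.]+) "
    r"(?P<lineNum>\d+):(?P<msg>.+)"
)

# Hypothetical log line in the layout the pattern expects.
line = "[2018-01-01 12:00:00] [ERROR] http-nio-8080-exec-1 com.example.Foo 42:something failed"

match = LOG_PATTERN.search(line)
parsed = match.groupdict() if match else {}
for name, value in parsed.items():
    print(f"{name}: {value}")
```

A line that does not follow this layout yields no match here; in Logstash such an event would normally be tagged _grokparsefailure and keep only its original message field.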

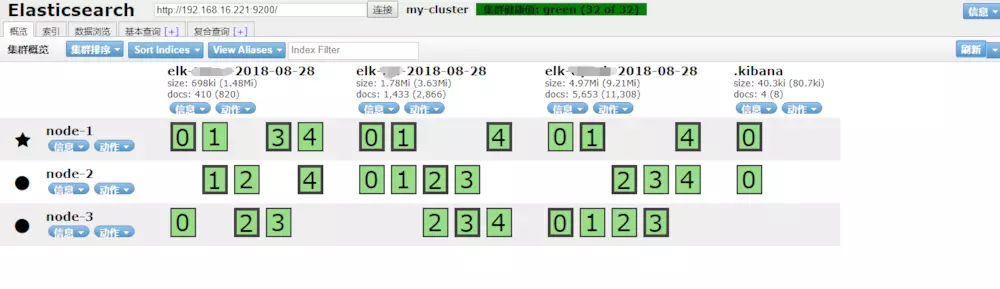

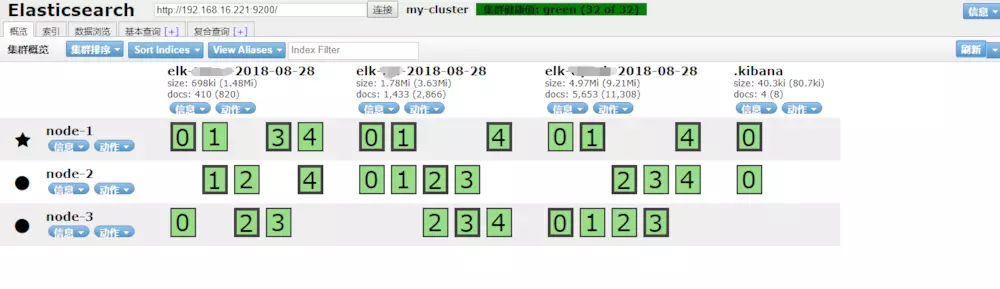

0x03 Check that the indices were created in Elasticsearch
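The index names to look for come from the index setting "%{[@metadata][topic]}-%{+YYYY-MM-dd}" in the Logstash output. As a rough guide, the following Python sketch builds the name that should appear for a given topic and event time. It assumes the date part is formatted from the event's @timestamp in UTC, which is how Logstash date references usually behave; the helper index_name and the example timestamp are made up.

```python
from datetime import datetime, timezone

def index_name(topic, timestamp):
    """Build '<topic>-YYYY-MM-dd' using the UTC date of the event timestamp."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{topic}-{utc:%Y-%m-%d}"

# Hypothetical event time; any timezone-aware datetime works.
event_time = datetime(2018, 1, 1, 12, 0, tzinfo=timezone.utc)
names = [index_name(topic, event_time) for topic in ("elk-wap", "elk-api")]
print(names)
```

With one index per topic per day, a pattern such as elk-wap-* would select all indices for the wap logs.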

0x04 Logstash cluster setup

# Multiple instances on one machine: use the same config file and change only the data path at startup

./bin/logstash -f test.conf --path.data=/usr/local/logdata/

# Multiple machines: use the same group_id in the Logstash config file on every machine
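Sharing one group_id works because Kafka divides a topic's partitions among the consumers of the same group, so each message is handled by only one Logstash instance. The Python sketch below is a deliberately simplified round-robin model of that idea; Kafka's real assignment strategies are more involved, and the partition count and instance names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over the consumers of one group, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(partitions):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment

# Hypothetical setup: topic elk-wap with 6 partitions, 3 Logstash instances
# that all use group_id "logstash1".
partitions = [f"elk-wap-{n}" for n in range(6)]
instances = ["logstash-a", "logstash-b", "logstash-c"]

assignment = assign_partitions(partitions, instances)
for instance, owned in assignment.items():
    print(instance, owned)
```

In this model each partition has exactly one owner. Instances with different group_id values would each receive every message, which would duplicate documents in Elasticsearch.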

