Deploying and Installing kafka-manager, a Kafka Cluster Management Tool

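I. Introduction to kafka-manager

To simplify the work of developers and service engineers who maintain Kafka clusters, Yahoo built a web-based tool called Kafka Manager. It makes it easy to spot topics that are unevenly distributed across the cluster, or partitions that are unevenly spread over the brokers. It supports managing multiple clusters, preferred replica election, replica reassignment, and topic creation. It is also a convenient way to get a quick overview of a cluster. Its features are: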

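1. Manage multiple Kafka clusters

2. Easily inspect cluster state (topics, brokers, replica distribution, partition distribution)

3. Run preferred replica election

4. Reassign partitions based on the current partition state

5. Create a topic with optional topic configs (the configs differ between 0.8.1.1 and 0.8.2)

6. Delete a topic (supported only on 0.8.2 and later, and only if delete.topic.enable=true is set in the broker config)

7. The topic list indicates which topics are marked for deletion (0.8.2 and later only)

8. Add partitions to an existing topic

9. Update the config of an existing topic

10. Batch-run partition reassignment across multiple topics

11. Batch-run partition reassignment across multiple topics (with the option to choose which brokers the partitions go to)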
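Item 6 depends on a broker-side setting, so it can be worth checking it before relying on topic deletion in the UI. The following is a minimal sketch of such a check; the function name `delete_topic_enabled` is an illustrative assumption and is not part of Kafka or kafka-manager.

```shell
# Hypothetical helper: succeeds only if the given broker properties file
# contains an uncommented "delete.topic.enable=true" line.
delete_topic_enabled() {
  props_file="$1"
  grep -Eq '^[[:space:]]*delete\.topic\.enable[[:space:]]*=[[:space:]]*true[[:space:]]*$' "$props_file"
}
```

It could be called as `delete_topic_enabled config/server.properties && echo "topic deletion is enabled"`, where the path to the broker's server.properties is an assumption that depends on your Kafka installation.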

kafka-manager project page: https://github.com/yahoo/kafka-manager

II. Installation

1. Requirements

1. JDK 8 installed: jdk-1.8.0_60

2. A Kafka cluster

Servers: 10.0.0.50:12181 10.0.0.60:12181 10.0.0.70:12181

Software: kafka_2.8.0-0.8.1.1 zookeeper-3.3.6

3. Operating system: Linux kafka50 2.6.32-642.el6.x86_64 #1 SMP Tue May 10 17:27:01 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux
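Before installing, the requirements above can be verified from the shell. The sketch below is one possible way to do it, not part of kafka-manager: `java_major` and `zk_ok` are hypothetical helper names, and `zk_ok` assumes the `nc` (netcat) utility is installed. It relies on ZooKeeper's `ruok` four-letter command, to which a healthy server replies `imok`.

```shell
# Hypothetical helper: map a Java version string to its major version.
# Handles the legacy "1.x" scheme (1.8.0_60) and the newer one (11.0.2).
java_major() {
  case "$1" in
    1.*) echo "$1" | cut -d. -f2 ;;
    *)   echo "$1" | cut -d. -f1 ;;
  esac
}

# Hypothetical helper: ask one ZooKeeper node whether it is healthy.
# Sends the "ruok" four-letter command and expects the reply "imok".
# Assumes nc (netcat) is available; -w 2 gives up after two seconds.
zk_ok() {
  [ "$(echo ruok | nc -w 2 "$1" "$2" 2>/dev/null)" = "imok" ]
}
```

For example, `java_major 1.8.0_60` prints `8`, and `zk_ok 10.0.0.50 12181 && echo "ZooKeeper is up"` checks the first node of the cluster listed above.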

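2. Download and install kafka-manager

2.1. Download kafka-manager

Viewing and managing Kafka purely from the command line is inconvenient, so we can use Kafka-manager, which Yahoo has open-sourced. Its GitHub address is: https://github.com/yahoo/kafka-manager

You can fetch it with Git or download it directly from the project's Releases page. Here we download version 1.3.3.7 from Releases.

After the download finishes, extract the archive.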

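Note: the download above is source code, which must be compiled before it can be deployed. If that is too much trouble, you can download the prebuilt kafka-manager-1.3.3.7.zip from the address below. Link: https://pan.baidu.com/s/1qYifoa4 Password: el4o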
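For readers who prefer to build from source, the steps can be sketched roughly as follows. This is a sketch under assumptions rather than a verified procedure for this exact release: it assumes the repository has a tag named after the version, that it ships an `sbt` launcher script in its root, and that the `dist` task writes the zip under `target/universal/`, as the project README describes. The function names are hypothetical.

```shell
# Where the "dist" task is expected to leave the deployable archive,
# relative to the source checkout (assumption based on the project README).
dist_zip_path() {
  echo "target/universal/kafka-manager-$1.zip"
}

# Sketch of the build. The body runs in a subshell, so the caller's working
# directory is left unchanged. Nothing runs until the function is called.
build_kafka_manager() (
  version="$1"
  git clone https://github.com/yahoo/kafka-manager.git &&
  cd kafka-manager &&
  git checkout "$version" &&
  ./sbt clean dist &&
  ls -l "$(dist_zip_path "$version")"
)
```

Calling `build_kafka_manager 1.3.3.7` would then leave the archive inside the `kafka-manager` checkout. The first build downloads many dependencies and can take a long time.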

2.2. Extract

unzip kafka-manager-1.3.3.7.zip -d /data/

cd /data/kafka-manager-1.3.3.7

2.3. Edit the configuration file conf/application.conf

[root@kafka50 conf]# pwd

/data/kafka-manager-1.3.3.7/conf

[root@kafka50 conf]# ls

application.conf consumer.properties logback.xml logger.xml nohup.out routes

Edit the configuration file application.conf. Comment out the existing zkhosts line and add a new one below it:

#kafka-manager.zkhosts="localhost:2181"

kafka-manager.zkhosts="10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181"
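The same edit can be scripted instead of done by hand. The function below is a minimal sketch with a hypothetical name, `set_zkhosts`. It comments out every line that begins with `kafka-manager.zkhosts=` and appends a new setting, keeping a backup copy of the original file, and it assumes the file ends with a newline.

```shell
# Hypothetical helper: comment out the active kafka-manager.zkhosts line(s)
# in a config file and append a new one pointing at the given hosts.
# The original file is preserved as <file>.bak.
set_zkhosts() {
  conf_file="$1"
  zk_hosts="$2"
  cp "$conf_file" "$conf_file.bak" &&
  sed 's/^kafka-manager\.zkhosts=/#&/' "$conf_file.bak" > "$conf_file" &&
  printf 'kafka-manager.zkhosts="%s"\n' "$zk_hosts" >> "$conf_file"
}
```

For this installation it would be called as `set_zkhosts /data/kafka-manager-1.3.3.7/conf/application.conf "10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181"`.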

2.4. Start

bin/kafka-manager

kafka-manager listens on port 9000 by default. Use -Dhttp.port to choose a different port and -Dconfig.file=conf/application.conf to specify the configuration file:

nohup bin/kafka-manager -Dconfig.file=conf/application.conf -Dhttp.port=8080 &

Startup log:
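The background start can be wrapped in a small function so that the output goes to a known log file and the process ID is reported. This is only a sketch: `start_kafka_manager` is a hypothetical name, and the flags are the same `-Dconfig.file` and `-Dhttp.port` options used above.

```shell
# Hypothetical wrapper: start kafka-manager in the background.
#   $1 = installation directory, $2 = HTTP port, $3 = log file
# A relative log path is resolved against the installation directory.
# The body runs in a subshell, so the caller's directory is unchanged.
# Prints the PID of the background process.
start_kafka_manager() (
  cd "$1" || exit 1
  nohup bin/kafka-manager -Dconfig.file=conf/application.conf \
    -Dhttp.port="$2" >> "$3" 2>&1 &
  echo $!
)
```

For example, `start_kafka_manager /data/kafka-manager-1.3.3.7 8080 nohup.out` starts the service on port 8080 and prints its PID, which can later be passed to `kill` to stop it.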

[root@kafka50 kafka-manager-1.3.3.7]# /data/kafka-manager-1.3.3.7/bin/kafka-manager 17:41:03,313 |-INFO in ch.qos.logback.classic.LoggerContext[default] - Could NOT find resource [logback.groovy] 17:41:03,313 |-INFO in ch.qos.logback.classic.LoggerContext[default] - Could NOT find resource [logback-test.xml] 17:41:03,313 |-INFO in ch.qos.logback.classic.LoggerContext[default] - Found resource [logback.xml] at [file:/data/kafka-manager-1.3.3.7/conf/logback.xml] 17:41:03,785 |-INFO in ch.qos.logback.classic.joran.action.ConfigurationAction - debug attribute not set 17:41:03,787 |-INFO in ch.qos.logback.core.joran.action.ConversionRuleAction - registering conversion word coloredLevel with class [play.api.Logger$ColoredLevel] 17:41:03,787 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - About to instantiate appender of type [ch.qos.logback.core.rolling.RollingFileAppender] 17:41:03,795 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - Naming appender as [FILE] 17:41:03,899 |-INFO in ch.qos.logback.core.joran.action.NestedComplexPropertyIA - Assuming default type [ch.qos.logback.classic.encoder.PatternLayoutEncoder] for [encoder] property 17:41:04,002 |-ERROR in ch.qos.logback.core.joran.spi.Interpreter@18:27 - no applicable action for [totalSizeCap], current ElementPath is [[configuration][appender][rollingPolicy][totalSizeCap]] 17:41:04,017 |-INFO in c.q.l.core.rolling.TimeBasedRollingPolicy - No compression will be used 17:41:04,020 |-INFO in c.q.l.core.rolling.TimeBasedRollingPolicy - Will use the pattern application.home_IS_UNDEFINED/logs/application.%d{yyyy-MM-dd}.log for the active file 17:41:04,029 |-INFO in c.q.l.core.rolling.DefaultTimeBasedFileNamingAndTriggeringPolicy - The date pattern is 'yyyy-MM-dd' from file name pattern 'application.home_IS_UNDEFINED/logs/application.%d{yyyy-MM-dd}.log'. 17:41:04,029 |-INFO in c.q.l.core.rolling.DefaultTimeBasedFileNamingAndTriggeringPolicy - Roll-over at midnight. 
17:41:04,053 |-INFO in c.q.l.core.rolling.DefaultTimeBasedFileNamingAndTriggeringPolicy - Setting initial period to Fri Jan 05 17:41:04 CST 2018 17:41:04,059 |-INFO in ch.qos.logback.core.rolling.RollingFileAppender[FILE] - Active log file name: application.home_IS_UNDEFINED/logs/application.log 17:41:04,059 |-INFO in ch.qos.logback.core.rolling.RollingFileAppender[FILE] - File property is set to [application.home_IS_UNDEFINED/logs/application.log] 17:41:04,063 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - About to instantiate appender of type [ch.qos.logback.core.ConsoleAppender] 17:41:04,065 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - Naming appender as [STDOUT] 17:41:04,076 |-INFO in ch.qos.logback.core.joran.action.NestedComplexPropertyIA - Assuming default type [ch.qos.logback.classic.encoder.PatternLayoutEncoder] for [encoder] property 17:41:04,083 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - About to instantiate appender of type [ch.qos.logback.classic.AsyncAppender] 17:41:04,085 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - Naming appender as [ASYNCFILE] 17:41:04,085 |-INFO in ch.qos.logback.core.joran.action.AppenderRefAction - Attaching appender named [FILE] to ch.qos.logback.classic.AsyncAppender[ASYNCFILE] 17:41:04,085 |-INFO in ch.qos.logback.classic.AsyncAppender[ASYNCFILE] - Attaching appender named [FILE] to AsyncAppender. 
17:41:04,086 |-INFO in ch.qos.logback.classic.AsyncAppender[ASYNCFILE] - Setting discardingThreshold to 51 17:41:04,089 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - About to instantiate appender of type [ch.qos.logback.classic.AsyncAppender] 17:41:04,090 |-INFO in ch.qos.logback.core.joran.action.AppenderAction - Naming appender as [ASYNCSTDOUT] 17:41:04,090 |-INFO in ch.qos.logback.core.joran.action.AppenderRefAction - Attaching appender named [STDOUT] to ch.qos.logback.classic.AsyncAppender[ASYNCSTDOUT] 17:41:04,090 |-INFO in ch.qos.logback.classic.AsyncAppender[ASYNCSTDOUT] - Attaching appender named [STDOUT] to AsyncAppender. 17:41:04,090 |-INFO in ch.qos.logback.classic.AsyncAppender[ASYNCSTDOUT] - Setting discardingThreshold to 51 17:41:04,094 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [play] to INFO 17:41:04,094 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [application] to INFO 17:41:04,094 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [kafka.manager] to INFO 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [com.avaje.ebean.config.PropertyMapLoader] to OFF 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [com.avaje.ebeaninternal.server.core.XmlConfigLoader] to OFF 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [com.avaje.ebeaninternal.server.lib.BackgroundThread] to OFF 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [com.gargoylesoftware.htmlunit.javascript] to OFF 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.LoggerAction - Setting level of logger [org.apache.zookeeper] to INFO 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.RootLoggerAction - Setting level of ROOT logger to WARN 17:41:04,096 |-INFO in 
ch.qos.logback.core.joran.action.AppenderRefAction - Attaching appender named [ASYNCFILE] to Logger[ROOT] 17:41:04,096 |-INFO in ch.qos.logback.core.joran.action.AppenderRefAction - Attaching appender named [ASYNCSTDOUT] to Logger[ROOT] 17:41:04,096 |-INFO in ch.qos.logback.classic.joran.action.ConfigurationAction - End of configuration. 17:41:04,097 |-INFO in ch.qos.logback.classic.joran.JoranConfigurator@67c99d6b - Registering current configuration as safe fallback point [warn] o.a.c.r.ExponentialBackoffRetry - maxRetries too large (100). Pinning to 29 [info] k.m.a.KafkaManagerActor - Starting curator... [info] o.a.z.ZooKeeper - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT [info] o.a.z.ZooKeeper - Client environment:host.name=kafka50 [info] o.a.z.ZooKeeper - Client environment:java.version=1.8.0_60 [info] o.a.z.ZooKeeper - Client environment:java.vendor=Oracle Corporation [info] o.a.z.ZooKeeper - Client environment:java.home=/application/jdk1.8.0_60/jre [info] o.a.z.ZooKeeper - Client 
environment:java.class.path=/data/kafka-manager-1.3.3.7/lib/../conf/:/data/kafka-manager-1.3.3.7/lib/kafka-manager.kafka-manager-1.3.3.7-sans-externalized.jar:/data/kafka-manager-1.3.3.7/lib/org.scala-lang.scala-library-2.11.8.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.twirl-api_2.11-1.1.1.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.commons.commons-lang3-3.4.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-server_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.build-link-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-exceptions-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/org.javassist.javassist-3.19.0-GA.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-iteratees_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/org.scala-stm.scala-stm_2.11-0.7.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.config-1.3.0.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-json_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-functional_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-datacommons_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/joda-time.joda-time-2.8.1.jar:/data/kafka-manager-1.3.3.7/lib/org.joda.joda-convert-1.7.jar:/data/kafka-manager-1.3.3.7/lib/com.fasterxml.jackson.datatype.jackson-datatype-jdk8-2.5.4.jar:/data/kafka-manager-1.3.3.7/lib/com.fasterxml.jackson.datatype.jackson-datatype-jsr310-2.5.4.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-netty-utils-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/org.slf4j.jul-to-slf4j-1.7.12.jar:/data/kafka-manager-1.3.3.7/lib/org.slf4j.jcl-over-slf4j-1.7.12.jar:/data/kafka-manager-1.3.3.7/lib/ch.qos.logback.logback-core-1.1.3.jar:/data/kafka-manager-1.3.3.7/lib/ch.qos.logback.logback-classic-1.1.3.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.akka.akka-actor_2.11-2.3.14.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.akka.akka-slf4j_
2.11-2.3.14.jar:/data/kafka-manager-1.3.3.7/lib/commons-codec.commons-codec-1.10.jar:/data/kafka-manager-1.3.3.7/lib/xerces.xercesImpl-2.11.0.jar:/data/kafka-manager-1.3.3.7/lib/xml-apis.xml-apis-1.4.01.jar:/data/kafka-manager-1.3.3.7/lib/javax.transaction.jta-1.1.jar:/data/kafka-manager-1.3.3.7/lib/com.google.inject.guice-4.0.jar:/data/kafka-manager-1.3.3.7/lib/javax.inject.javax.inject-1.jar:/data/kafka-manager-1.3.3.7/lib/aopalliance.aopalliance-1.0.jar:/data/kafka-manager-1.3.3.7/lib/com.google.guava.guava-16.0.1.jar:/data/kafka-manager-1.3.3.7/lib/com.google.inject.extensions.guice-assistedinject-4.0.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.play.play-netty-server_2.11-2.4.6.jar:/data/kafka-manager-1.3.3.7/lib/io.netty.netty-3.10.4.Final.jar:/data/kafka-manager-1.3.3.7/lib/com.typesafe.netty.netty-http-pipelining-1.1.4.jar:/data/kafka-manager-1.3.3.7/lib/com.google.code.findbugs.jsr305-2.0.1.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.webjars-play_2.11-2.4.0-2.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.requirejs-2.1.20.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.webjars-locator-0.28.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.webjars-locator-core-0.27.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.commons.commons-compress-1.9.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.npm.validate.js-0.8.0.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.bootstrap-3.3.5.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.jquery-2.1.4.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.backbonejs-1.2.3.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.underscorejs-1.8.3.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.dustjs-linkedin-2.6.1-1.jar:/data/kafka-manager-1.3.3.7/lib/org.webjars.json-20121008-1.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.curator.curator-framework-2.10.0.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.curator.curator-client-2.10.0.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.zookeeper.zookeeper-3.4.6.jar:/data/kafka-manager-1.3.3.7/
lib/jline.jline-0.9.94.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.curator.curator-recipes-2.10.0.jar:/data/kafka-manager-1.3.3.7/lib/org.json4s.json4s-jackson_2.11-3.4.0.jar:/data/kafka-manager-1.3.3.7/lib/org.json4s.json4s-core_2.11-3.4.0.jar:/data/kafka-manager-1.3.3.7/lib/org.json4s.json4s-ast_2.11-3.4.0.jar:/data/kafka-manager-1.3.3.7/lib/org.json4s.json4s-scalap_2.11-3.4.0.jar:/data/kafka-manager-1.3.3.7/lib/com.thoughtworks.paranamer.paranamer-2.8.jar:/data/kafka-manager-1.3.3.7/lib/org.scala-lang.modules.scala-xml_2.11-1.0.5.jar:/data/kafka-manager-1.3.3.7/lib/com.fasterxml.jackson.core.jackson-databind-2.6.7.jar:/data/kafka-manager-1.3.3.7/lib/com.fasterxml.jackson.core.jackson-annotations-2.6.0.jar:/data/kafka-manager-1.3.3.7/lib/com.fasterxml.jackson.core.jackson-core-2.6.7.jar:/data/kafka-manager-1.3.3.7/lib/org.json4s.json4s-scalaz_2.11-3.4.0.jar:/data/kafka-manager-1.3.3.7/lib/org.scalaz.scalaz-core_2.11-7.2.4.jar:/data/kafka-manager-1.3.3.7/lib/org.slf4j.log4j-over-slf4j-1.7.12.jar:/data/kafka-manager-1.3.3.7/lib/com.adrianhurt.play-bootstrap3_2.11-0.4.5-P24.jar:/data/kafka-manager-1.3.3.7/lib/org.clapper.grizzled-slf4j_2.11-1.0.2.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.kafka.kafka_2.11-0.10.0.1.jar:/data/kafka-manager-1.3.3.7/lib/com.101tec.zkclient-0.8.jar:/data/kafka-manager-1.3.3.7/lib/com.yammer.metrics.metrics-core-2.2.0.jar:/data/kafka-manager-1.3.3.7/lib/org.scala-lang.modules.scala-parser-combinators_2.11-1.0.4.jar:/data/kafka-manager-1.3.3.7/lib/net.sf.jopt-simple.jopt-simple-4.9.jar:/data/kafka-manager-1.3.3.7/lib/org.apache.kafka.kafka-clients-0.10.0.1.jar:/data/kafka-manager-1.3.3.7/lib/net.jpountz.lz4.lz4-1.3.0.jar:/data/kafka-manager-1.3.3.7/lib/org.xerial.snappy.snappy-java-1.1.2.6.jar:/data/kafka-manager-1.3.3.7/lib/org.slf4j.slf4j-api-1.7.21.jar:/data/kafka-manager-1.3.3.7/lib/com.beachape.enumeratum_2.11-1.4.4.jar:/data/kafka-manager-1.3.3.7/lib/com.beachape.enumeratum-macros_2.11-1.4.4.jar:/data/kafka-manager-1.3.3.7/lib/
org.scala-lang.scala-reflect-2.11.8.jar:/data/kafka-manager-1.3.3.7/lib/kafka-manager.kafka-manager-1.3.3.7-assets.jar [info] o.a.z.ZooKeeper - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib [info] o.a.z.ZooKeeper - Client environment:java.io.tmpdir=/tmp [info] o.a.z.ZooKeeper - Client environment:java.compiler=<NA> [info] o.a.z.ZooKeeper - Client environment:os.name=Linux [info] o.a.z.ZooKeeper - Client environment:os.arch=amd64 [info] o.a.z.ZooKeeper - Client environment:os.version=2.6.32-642.el6.x86_64 [info] o.a.z.ZooKeeper - Client environment:user.name=root [info] o.a.z.ZooKeeper - Client environment:user.home=/root [info] o.a.z.ZooKeeper - Client environment:user.dir=/data/kafka-manager-1.3.3.7 [info] o.a.z.ZooKeeper - Initiating client connection, connectString=10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@b6c3e2e [info] o.a.z.ClientCnxn - Opening socket connection to server 10.0.0.50/10.0.0.50:12181. Will not attempt to authenticate using SASL (unknown error) [info] k.m.a.KafkaManagerActor - zk=10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181 [info] k.m.a.KafkaManagerActor - baseZkPath=/kafka-manager [info] o.a.z.ClientCnxn - Socket connection established to 10.0.0.50/10.0.0.50:12181, initiating session [warn] o.a.z.ClientCnxnSocket - Connected to an old server; r-o mode will be unavailable [info] o.a.z.ClientCnxn - Session establishment complete on server 10.0.0.50/10.0.0.50:12181, sessionid = 0x160c59c8a510001, negotiated timeout = 40000 [info] k.m.a.KafkaManagerActor - Started actor akka://kafka-manager-system/user/kafka-manager [info] k.m.a.KafkaManagerActor - Starting delete clusters path cache... [info] k.m.a.DeleteClusterActor - Started actor akka://kafka-manager-system/user/kafka-manager/delete-cluster [info] k.m.a.DeleteClusterActor - Starting delete clusters path cache... 
[info] k.m.a.DeleteClusterActor - Adding kafka manager path cache listener... [info] k.m.a.DeleteClusterActor - Scheduling updater for 10 seconds [info] k.m.a.KafkaManagerActor - Starting kafka manager path cache... [info] k.m.a.KafkaManagerActor - Adding kafka manager path cache listener... [info] k.m.a.KafkaManagerActor - Updating internal state... [info] play.api.Play - Application started (Prod) [info] p.c.s.NettyServer - Listening for HTTP on /0:0:0:0:0:0:0:0:9000 [info] k.m.a.KafkaManagerActor - Adding new cluster manager for cluster : kafka-cluster-1 [warn] o.a.c.r.ExponentialBackoffRetry - maxRetries too large (100). Pinning to 29 [info] k.m.a.c.ClusterManagerActor - Starting curator... [info] o.a.z.ZooKeeper - Initiating client connection, connectString=10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@7621f705 [info] o.a.z.ClientCnxn - Opening socket connection to server 10.0.0.50/10.0.0.50:12181. Will not attempt to authenticate using SASL (unknown error) [info] o.a.z.ClientCnxn - Socket connection established to 10.0.0.50/10.0.0.50:12181, initiating session [warn] o.a.c.r.ExponentialBackoffRetry - maxRetries too large (100). Pinning to 29 [info] k.m.a.c.ClusterManagerActor - Starting shared curator... [info] o.a.z.ZooKeeper - Initiating client connection, connectString=10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@34545ff9 [info] o.a.z.ClientCnxn - Opening socket connection to server 10.0.0.70/10.0.0.70:12181. 
Will not attempt to authenticate using SASL (unknown error) [info] o.a.z.ClientCnxn - Socket connection established to 10.0.0.70/10.0.0.70:12181, initiating session [warn] o.a.z.ClientCnxnSocket - Connected to an old server; r-o mode will be unavailable [info] o.a.z.ClientCnxn - Session establishment complete on server 10.0.0.50/10.0.0.50:12181, sessionid = 0x160c59c8a510002, negotiated timeout = 40000 [warn] o.a.z.ClientCnxnSocket - Connected to an old server; r-o mode will be unavailable [info] o.a.z.ClientCnxn - Session establishment complete on server 10.0.0.70/10.0.0.70:12181, sessionid = 0x360c59eb4ac0002, negotiated timeout = 40000 [info] k.m.a.c.KafkaCommandActor - Started actor akka://kafka-manager-system/user/kafka-manager/kafka-cluster-1/kafka-command [info] k.m.a.c.KafkaAdminClientActor - KafkaAdminClientActorConfig(ClusterContext(ClusterFeatures(Set()),ClusterConfig(kafka-cluster-1,CuratorConfig(10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181,100,100,1000),true,0.8.1.1,false,None,None,false,false,false,false,false,false,Some(ClusterTuning(Some(30),Some(2),Some(100),Some(2),Some(100),Some(2),Some(100),Some(30),Some(5),Some(2),Some(1000),Some(2),Some(1000),Some(2),Some(1000))))),LongRunningPoolConfig(2,1000),akka://kafka-manager-system/user/kafka-manager/kafka-cluster-1/kafka-state) [info] k.m.a.c.BrokerViewCacheActor - Started actor akka://kafka-manager-system/user/kafka-manager/kafka-cluster-1/broker-view [info] k.m.a.c.BrokerViewCacheActor - Scheduling updater for 30 seconds [info] k.m.a.c.BrokerViewCacheActor - Updating broker view... [info] k.m.a.c.ClusterManagerActor - Started actor akka://kafka-manager-system/user/kafka-manager/kafka-cluster-1 [info] k.m.a.c.ClusterManagerActor - Starting cluster manager topics path cache... 
[info] k.m.a.c.KafkaStateActor - KafkaStateActorConfig(org.apache.curator.framework.imps.CuratorFrameworkImpl@62d9cd2d,pinned-dispatcher,ClusterContext(ClusterFeatures(Set()),ClusterConfig(kafka-cluster-1,CuratorConfig(10.0.0.50:12181,10.0.0.60:12181,10.0.0.70:12181,100,100,1000),true,0.8.1.1,false,None,None,false,false,false,false,false,false,Some(ClusterTuning(Some(30),Some(2),Some(100),Some(2),Some(100),Some(2),Some(100),Some(30),Some(5),Some(2),Some(1000),Some(2),Some(1000),Some(2),Some(1000))))),LongRunningPoolConfig(2,1000),LongRunningPoolConfig(2,1000),5,10000,None) [info] k.m.a.c.KafkaStateActor - Started actor akka://kafka-manager-system/user/kafka-manager/kafka-cluster-1/kafka-state [info] k.m.a.c.KafkaStateActor - Starting topics tree cache... [info] k.m.a.c.KafkaStateActor - Starting topics config path cache... [info] k.m.a.c.KafkaStateActor - Starting brokers path cache... [info] k.m.a.c.KafkaStateActor - Starting admin path cache... [info] k.m.a.c.KafkaStateActor - Starting delete topics path cache... [info] k.m.a.c.KafkaStateActor - Adding topics tree cache listener... [info] k.m.a.c.KafkaStateActor - Adding admin path cache listener... [info] k.m.a.c.KafkaStateActor - Starting offset cache... [info] k.m.a.c.OffsetCachePassive - Starting consumers path children cache... [info] k.m.a.c.OffsetCachePassive - Adding consumers path children cache listener... [info] k.m.a.KafkaManagerActor - Updating internal state... [info] k.m.a.KafkaManagerActor - Updating internal state... [info] k.m.a.KafkaManagerActor - Updating internal state... [info] k.m.a.c.BrokerViewCacheActor - Updating broker view... [info] k.m.a.KafkaManagerActor - Updating internal state... ## If it is not started in the background, it keeps running in the foreground until interrupted ^C[info] k.m.a.KafkaManagerActor - Shutting down kafka manager [info] k.m.a.DeleteClusterActor - Stopped actor akka://kafka-manager-system/user/kafka-manager/delete-cluster [info] k.m.a.DeleteClusterActor - Removing delete clusters path cache listener... 
[info] k.m.a.DeleteClusterActor - Shutting down delete clusters path cache... [info] k.m.a.c.KafkaCommandActor - Shutting down long running executor... [info] k.m.a.c.KafkaAdminClientActor - Closing admin client...

Once kafka-manager has started, check that its port is listening. Startup takes a while, so the port may not open immediately.
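Because the port comes up with some delay, a script that starts kafka-manager may want to poll for it rather than check once. The function below is a minimal sketch of such a loop. `wait_for_port` is a hypothetical name, and it assumes an `nc` (netcat) build that supports the `-z` option.

```shell
# Hypothetical helper: poll until host:port accepts TCP connections or the
# timeout (in seconds, default 60) expires.
# Returns 0 once the port is open, 1 if the timeout is reached first.
wait_for_port() {
  host="$1"; port="$2"; timeout="${3:-60}"
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    if nc -z -w 1 "$host" "$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 1
}
```

For example, `wait_for_port 127.0.0.1 9000 120 && echo "kafka-manager is listening"` waits up to two minutes for the default port.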

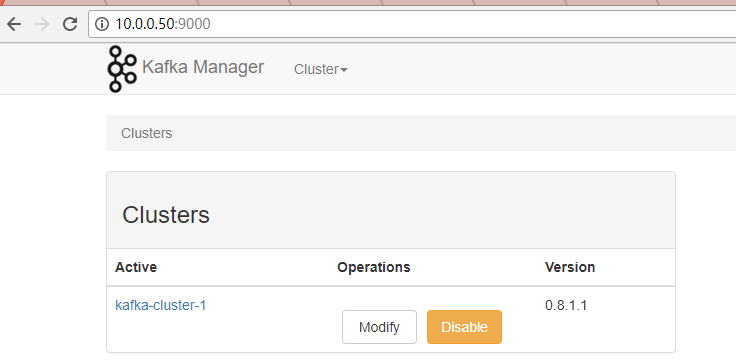

3. Access from a browser

Open the server's IP address and port (IP:port) in a browser.

III. Testing kafka-manager

1. Create a cluster

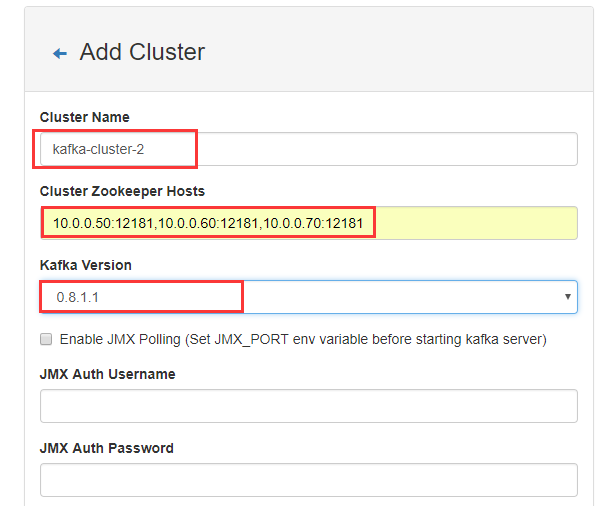

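Click [Cluster] > [Add Cluster] to open the cluster configuration page shown below:

Enter a name for the cluster (for example Kafka-Cluster-1) and the ZooKeeper server address (for example localhost:2181), then select the closest Kafka version (for example 0.8.1.1).

Note: if JMX_PORT has not been configured in Kafka, do not tick the first checkbox, Enable JMX Polling. If it is ticked, kafka-manager may fail to start.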

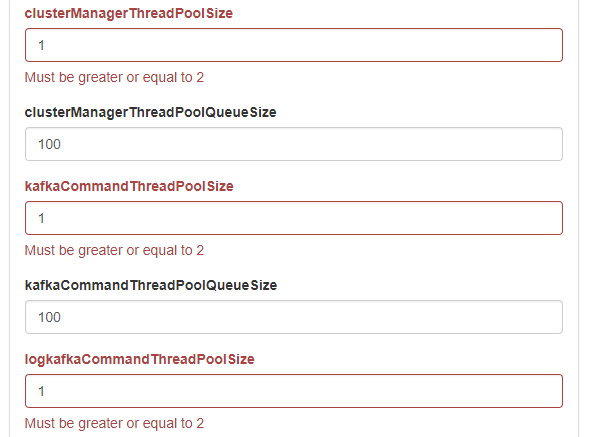

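Configure the remaining broker settings as needed. With the defaults, clicking [Save] reports errors for several settings whose default value is 1; they must be set to a value >= 2. The messages look like this.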

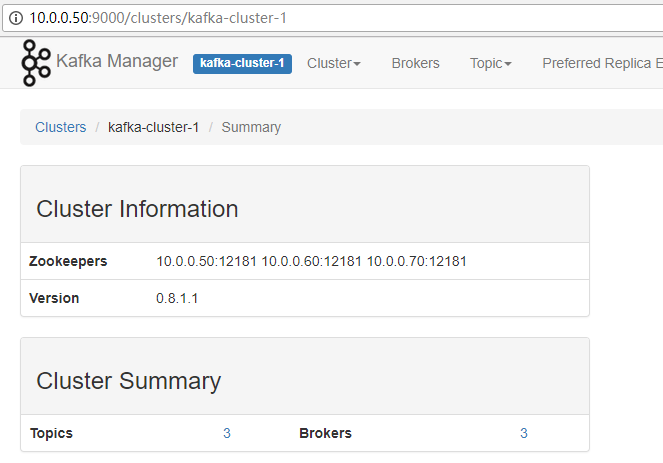

After the cluster has been created, the interface looks like this:

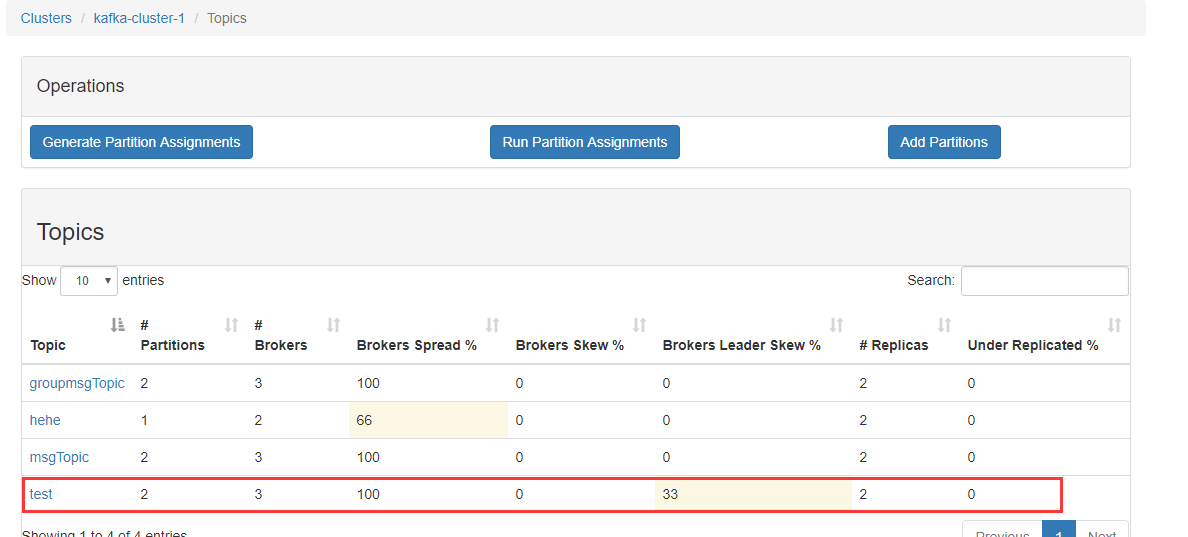

TOPIC list

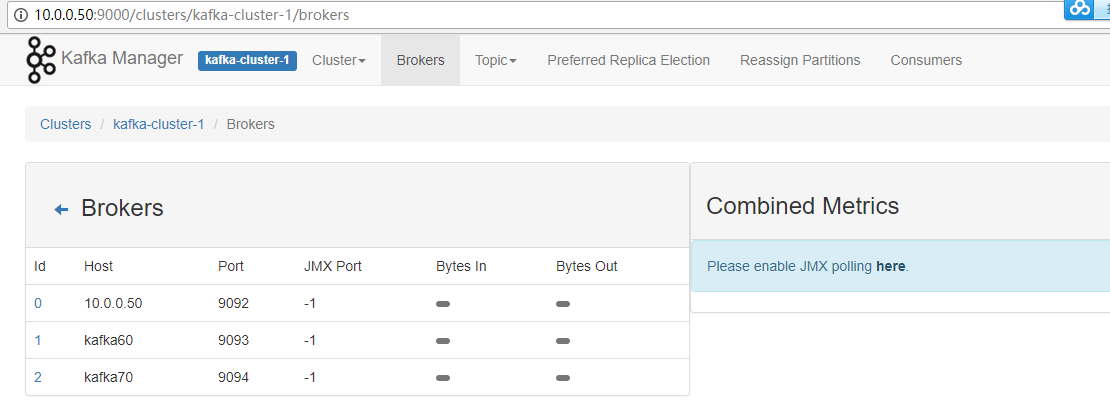

broker

IV. Managing Kafka with kafka-manager

4.1. Create a topic

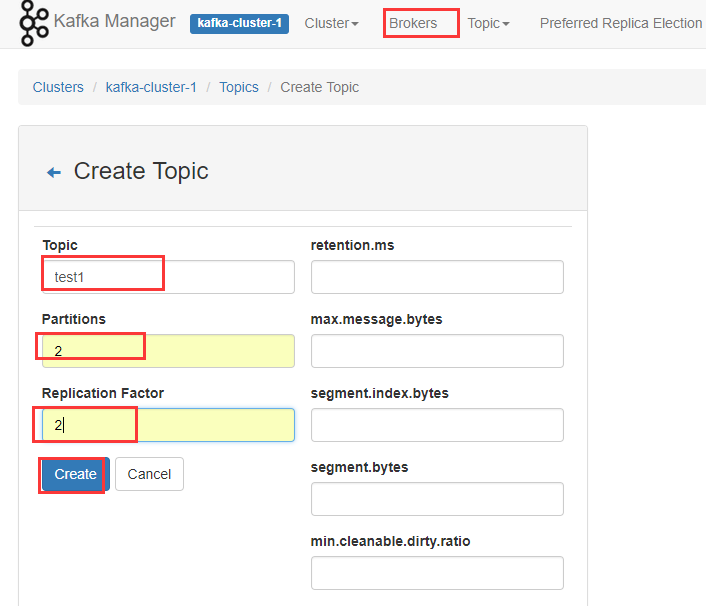

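Click [Topic] > [Create] to create and configure a topic, as shown below.

Next, let's walk through it with a diagram.

In the Kafka cluster shown in the figure above there are two servers, and each server holds two partitions. P0 and P3 may belong to the same topic or to two different topics. If Partitions and Replication Factor are both set to 2, the topic is distributed just as the cluster in the figure shows: P0 and P3 are two partitions of the same topic, and P1 and P2 are likewise two partitions of the same topic. One of Server1 and Server2 acts as the leader and handles reads and writes, while the other stays in sync through replication. If Partitions and Replication Factor are both set to 1, the algorithm creates only a single partition on one of the servers. It could be any one of P0 to P3 (the partition is newly created; it is not picked from four that already exist).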

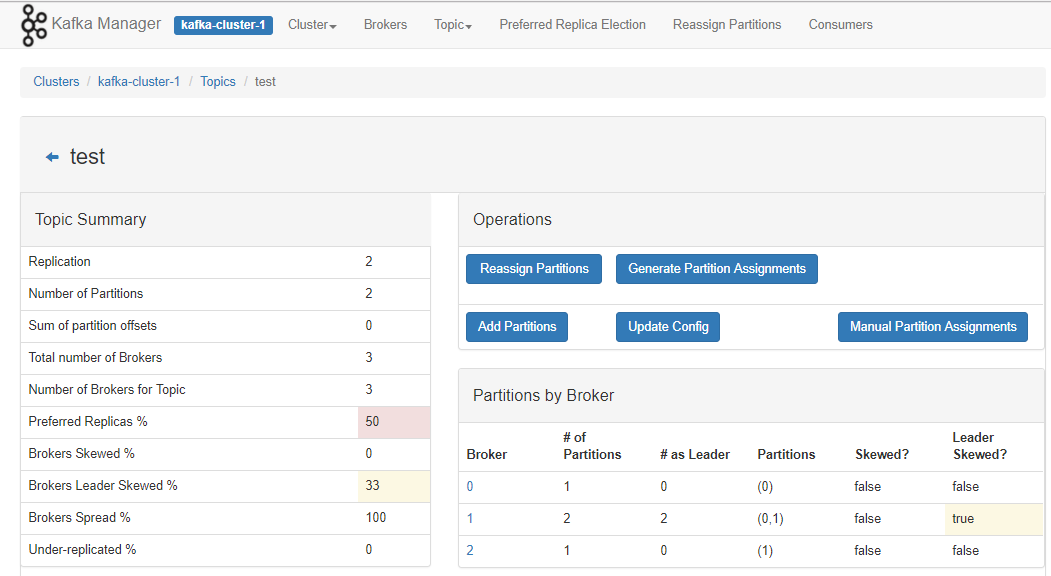

Here we set both to 2, click [Create], and then open the newly created topic, which is displayed as follows.
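To make the two settings concrete: a topic with 2 partitions and a replication factor of 2 requires 2 × 2 = 4 partition replicas across the cluster. The sketch below computes that number and prints, without running it, a roughly equivalent `kafka-topics.sh` command line as shipped with Kafka 0.8.1.1. The function names and the topic name `test-topic` are illustrative assumptions.

```shell
# Total number of partition replicas the cluster must host for one topic:
# partitions multiplied by replication factor.
total_replicas() {
  echo $(( $1 * $2 ))
}

# Print (do not run) a kafka-topics.sh command line that would create the
# same topic from the shell. $1 = ZooKeeper address, $2 = topic name,
# $3 = partitions, $4 = replication factor.
create_topic_cmd() {
  echo "bin/kafka-topics.sh --create --zookeeper $1 --topic $2 --partitions $3 --replication-factor $4"
}
```

Here `total_replicas 2 2` prints `4`, and `create_topic_cmd 10.0.0.50:12181 test-topic 2 2` prints the command to run from the Kafka installation directory. The replication factor cannot exceed the number of brokers, which is why a value of 2 needs at least two servers.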

4.2. View a topic

Click a topic name under [Topic] to view that topic.
