Building a Kafka Cluster with Docker Swarm

This deployment sets up a cluster of 3 ZooKeeper nodes, 3 Kafka brokers, and 1 Kafka management console.

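0. Create a dedicated network and label the nodes

# Create the overlay network
docker network create --driver overlay kafka

# Label each node (--label-add takes key=value with a single "=";
# the "==" operator is only used in placement constraints)
docker node update --label-add kafka.replica=1 node1
docker node update --label-add kafka.replica=2 node2
docker node update --label-add kafka.replica=3 node3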

1. Prepare the docker-compose files

- Multi-node: docker-compose-kafka-cluster1.yml

version: '3'
services:
  zoo1:
    image: zookeeper:3.6.1
    hostname: zoo1
    ports:
      - 2181:2181
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper1_data:/data
      - zookeeper1_datalog:/datalog
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
  zoo2:
    image: zookeeper:3.6.1
    hostname: zoo2
    ports:
      - 2182:2181
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper2_data:/data
      - zookeeper2_datalog:/datalog
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==2
  zoo3:
    image: zookeeper:3.6.1
    hostname: zoo3
    ports:
      - 2183:2181
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper3_data:/data
      - zookeeper3_datalog:/datalog
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==3
  kafka1:
    hostname: kafka1
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "19092:9092"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka1_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: _{HOSTNAME_COMMAND}:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:19092
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
  kafka2:
    hostname: kafka2
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "19093:9093"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka2_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: _{HOSTNAME_COMMAND}:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:19093
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9093
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==2
  kafka3:
    hostname: kafka3
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "19094:9094"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka3_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: _{HOSTNAME_COMMAND}:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:19094
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9094
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==3
  kafka-console:
    image: redpandadata/console
    ports:
      - "19095:8080"
    environment:
      KAFKA_BROKERS: "kafka1:9092,kafka2:9093,kafka3:9094"
    networks:
      - kafka
    depends_on:
      - "kafka1"
      - "kafka2"
      - "kafka3"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
volumes:
  zookeeper1_data:
    driver: local
  zookeeper1_datalog:
    driver: local
  zookeeper2_data:
    driver: local
  zookeeper2_datalog:
    driver: local
  zookeeper3_data:
    driver: local
  zookeeper3_datalog:
    driver: local
  kafka1_data:
    driver: local
  kafka2_data:
    driver: local
  kafka3_data:
    driver: local
networks:
  kafka:
    external: true

- docker-compose-kafka2-cluster2.yml

version: '3.3'
services:
  zoo1:
    image: zookeeper:3.6.1
    hostname: zoo1
    ports:
      - "2181:2181"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper1_data:/data
      - zookeeper1_datalog:/datalog
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
      # Note: the official zookeeper image reads ZOO_* variables; the
      # ZOOKEEPER_* entries in this file appear to be ignored by it.
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==1 # node to deploy on
  zoo2:
    image: zookeeper:3.6.1
    hostname: zoo2
    ports:
      - "2182:2181"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper2_data:/data
      - zookeeper2_datalog:/datalog
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
      ZOOKEEPER_CLIENT_PORT: 2182
      ZOOKEEPER_TICK_TIME: 2000
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==2 # node to deploy on
  zoo3:
    image: zookeeper:3.6.1
    hostname: zoo3
    ports:
      - "2183:2181"
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper3_data:/data
      - zookeeper3_datalog:/datalog
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
      ZOOKEEPER_CLIENT_PORT: 2183
      ZOOKEEPER_TICK_TIME: 2000
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==3 # node to deploy on
  kafka1:
    image: wurstmeister/kafka:2.13-2.7.0
    hostname: kafka1
    ports:
      - "9093:9093"
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    environment:
      - TZ=Asia/Shanghai
      - KAFKA_ZOOKEEPER_CONNECT=zoo1:2181,zoo2:2181,zoo3:2181/kafka
      - KAFKA_BROKER_ID=1
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      - KAFKA_ADVERTISED_LISTENERS=INSIDE://:9092,OUTSIDE://172.18.2.115:9093
      - KAFKA_LISTENERS=INSIDE://:9092,OUTSIDE://:9093
      - KAFKA_INTER_BROKER_LISTENER_NAME=INSIDE
      - ALLOW_PLAINTEXT_LISTENER=yes
    volumes:
      - kafka1_data:/kafka
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==1
  kafka2:
    image: wurstmeister/kafka:2.13-2.7.0
    hostname: kafka2
    ports:
      - "9094:9094"
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    environment:
      - TZ=Asia/Shanghai
      - KAFKA_ZOOKEEPER_CONNECT=zoo1:2181,zoo2:2181,zoo3:2181/kafka
      - KAFKA_BROKER_ID=2
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      - KAFKA_ADVERTISED_LISTENERS=INSIDE://:9092,OUTSIDE://172.18.2.116:9094
      - KAFKA_LISTENERS=INSIDE://:9092,OUTSIDE://:9094
      - KAFKA_INTER_BROKER_LISTENER_NAME=INSIDE
      - ALLOW_PLAINTEXT_LISTENER=yes
    volumes:
      - kafka2_data:/kafka
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==2
  kafka3:
    image: wurstmeister/kafka:2.13-2.7.0
    hostname: kafka3
    ports:
      - "9095:9095"
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    environment:
      - TZ=Asia/Shanghai
      - KAFKA_ZOOKEEPER_CONNECT=zoo1:2181,zoo2:2181,zoo3:2181/kafka
      - KAFKA_BROKER_ID=3
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      - KAFKA_ADVERTISED_LISTENERS=INSIDE://:9092,OUTSIDE://172.18.2.117:9095
      - KAFKA_LISTENERS=INSIDE://:9092,OUTSIDE://:9095
      - KAFKA_INTER_BROKER_LISTENER_NAME=INSIDE
      - ALLOW_PLAINTEXT_LISTENER=yes
    volumes:
      - kafka3_data:/kafka
    networks:
      - kafka
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits: # upper limit on resource usage
          cpus: "0.50"
          memory: 1G
        reservations: # resources reserved for the service
          cpus: "0.25"
          memory: 1G
      placement:
        constraints:
          - node.labels.kafka.replica==3
  kafka-console:
    image: redpandadata/console
    ports:
      - "9090:8080"
    environment:
      KAFKA_BROKERS: "kafka1:9093,kafka2:9094,kafka3:9095"
    networks:
      - kafka
    depends_on:
      - "kafka1"
      - "kafka2"
      - "kafka3"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
volumes:
  zookeeper1_data:
    driver: local
  zookeeper1_datalog:
    driver: local
  zookeeper2_data:
    driver: local
  zookeeper2_datalog:
    driver: local
  zookeeper3_data:
    driver: local
  zookeeper3_datalog:
    driver: local
  kafka1_data:
    driver: local
  kafka2_data:
    driver: local
  kafka3_data:
    driver: local
networks:
  kafka:
    external: true

- Slimmed-down docker-compose file that no longer publishes the ZooKeeper ports

version: '3'
services:
  zoo1:
    image: zookeeper:3.6.1
    hostname: zoo1
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper_1_data:/data
      - zookeeper_1_datalog:/datalog
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
  zoo2:
    image: zookeeper:3.6.1
    hostname: zoo2
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper_2_data:/data
      - zookeeper_2_datalog:/datalog
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==2
  zoo3:
    image: zookeeper:3.6.1
    hostname: zoo3
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - zookeeper_3_data:/data
      - zookeeper_3_datalog:/datalog
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - kafka
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==3
  kafka1:
    hostname: kafka1
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "9093:9092"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka_1_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:9093
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_HEAP_OPTS: "-Xmx4G -Xms4G"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
  kafka2:
    hostname: kafka2
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "9094:9092"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka_2_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:9094
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_HEAP_OPTS: "-Xmx4G -Xms4G"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==2
  kafka3:
    hostname: kafka3
    image: wurstmeister/kafka:2.13-2.7.0
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    ports:
      - "9095:9092"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - kafka_3_data:/kafka
    networks:
      - kafka
    environment:
      HOSTNAME_COMMAND: "docker info -f '{{`{{.Swarm.NodeAddr}}`}}'"
      TZ: "Asia/Shanghai"
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://_{HOSTNAME_COMMAND}:9095
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_HEAP_OPTS: "-Xmx4G -Xms4G"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==3
  kafka-console:
    image: kafka-console:latest
    ports:
      - "9091:8080"
    environment:
      KAFKA_BROKERS: "kafka1:9092,kafka2:9092,kafka3:9092"
    networks:
      - kafka
    depends_on:
      - "kafka1"
      - "kafka2"
      - "kafka3"
    deploy:
      placement:
        constraints:
          - node.labels.kafka.replica==1
volumes:
  zookeeper_1_data:
    driver: local
  zookeeper_1_datalog:
    driver: local
  zookeeper_2_data:
    driver: local
  zookeeper_2_datalog:
    driver: local
  zookeeper_3_data:
    driver: local
  zookeeper_3_datalog:
    driver: local
  kafka_1_data:
    driver: local
  kafka_2_data:
    driver: local
  kafka_3_data:
    driver: local
networks:
  kafka:
    external: true
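Every ZooKeeper service in the compose files carries the same ZOO_SERVERS string, and a typo in one copy is easy to miss. The following is a minimal Python sketch, not part of the original setup, that builds that string for an ensemble named zoo1..zooN. The function name `zoo_servers` and its parameters are hypothetical helpers introduced only for illustration; the default ports are the ones used in the compose files above.

```python
def zoo_servers(count, client_port=2181, peer_port=2888, election_port=3888):
    """Build the ZOO_SERVERS value for services named zoo1..zoo<count>.

    Each entry follows the pattern used in the compose files:
    server.<id>=<host>:<peer_port>:<election_port>;<client_port>
    """
    return " ".join(
        f"server.{i}=zoo{i}:{peer_port}:{election_port};{client_port}"
        for i in range(1, count + 1)
    )


if __name__ == "__main__":
    # Print the value to paste into every zooN service's environment.
    print(zoo_servers(3))
```

Generating the value once and pasting it into each service keeps the three definitions identical, which is what the ensemble needs in order to form a quorum.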

2. Deploy the services

Deploy the services with docker stack deploy; the -c option specifies the compose file.

Any one of the docker-compose files above will do.

$ docker stack deploy -c docker-compose-kafka-cluster1.yml kafka

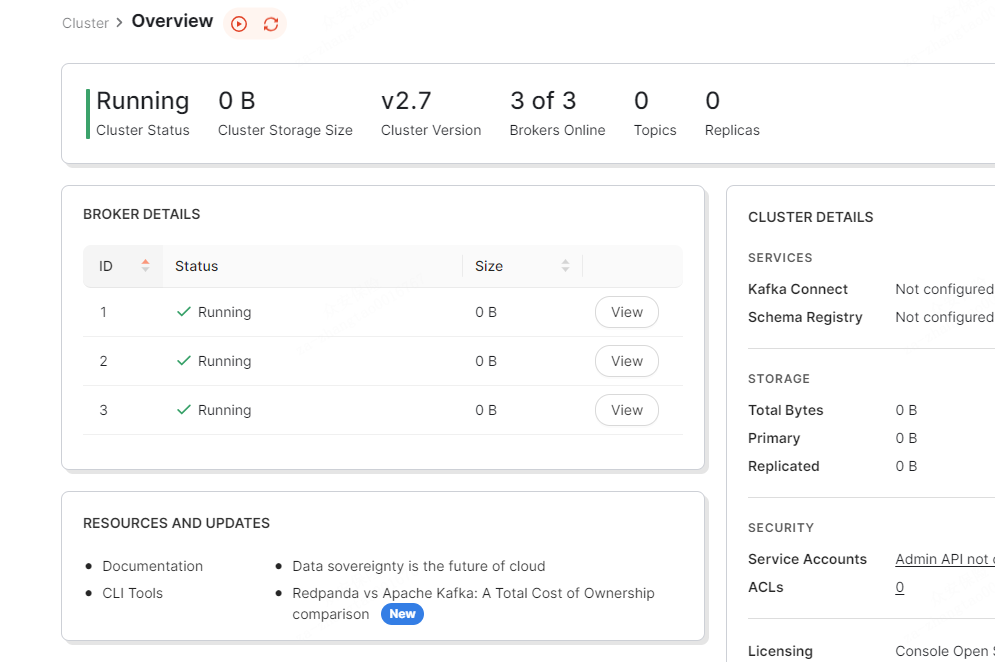

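Verify Kafka: in a browser, open the Kafka console at port 19095 on the IP of any node. All three Kafka brokers should be listed.

Create a topic; if it is replicated to all Kafka brokers, the cluster has been set up successfully.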
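Before opening the console, a quick TCP-level check of the published ports can rule out basic networking problems. The sketch below uses only the Python standard library; `port_is_open` and `check_endpoints` are hypothetical helper names, and the address 127.0.0.1 assumes the script runs on one of the swarm nodes. The ports are those published by docker-compose-kafka-cluster1.yml. A successful connection only shows that the port accepts connections, not that topics replicate correctly.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_endpoints(endpoints, timeout=2.0):
    """Map 'host:port' -> bool for each (host, port) pair."""
    return {f"{h}:{p}": port_is_open(h, p, timeout) for h, p in endpoints}


if __name__ == "__main__":
    # Assumption: run on a swarm node; replace with a node IP otherwise.
    node_ip = "127.0.0.1"
    # 19092-19094 are the brokers, 19095 is the console (first compose file).
    targets = [(node_ip, port) for port in (19092, 19093, 19094, 19095)]
    for endpoint, ok in check_endpoints(targets).items():
        print(endpoint, "open" if ok else "closed")
```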

3. List the services

$ docker service ls
ID             NAME                  MODE         REPLICAS   IMAGE                           PORTS
1b8pwy2draz5   kafka_kafka1          replicated   1/1        wurstmeister/kafka:2.13-2.7.0   *:19092->9092/tcp
4rw6uoiten6d   kafka_kafka2          replicated   1/1        wurstmeister/kafka:2.13-2.7.0   *:19093->9093/tcp
jlj9hpaiw6oc   kafka_kafka3          replicated   1/1        wurstmeister/kafka:2.13-2.7.0   *:19094->9094/tcp
bwgnebm01eit   kafka_kafka-console   replicated   1/1        redpandadata/console:latest     *:19095->8080/tcp
ze8fuxcyifvs   kafka_zoo1            replicated   1/1        zookeeper:3.6.1                 *:2181->2181/tcp
ov3i2zyi0j7g   kafka_zoo2            replicated   1/1        zookeeper:3.6.1                 *:2182->2181/tcp
v36w99yjlyar   kafka_zoo3            replicated   1/1        zookeeper:3.6.1                 *:2183->2181/tcp
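When the stack has many services, scanning the REPLICAS column by eye gets tedious. As a rough sketch, the Python below parses text in the layout printed by docker service ls and reports services whose running count is below the desired count. The names `parse_replicas`, `not_ready`, and `SAMPLE` are hypothetical and introduced only for this illustration; the sample rows are copied from the listing above.

```python
SAMPLE = """\
ID NAME MODE REPLICAS IMAGE PORTS
1b8pwy2draz5 kafka_kafka1 replicated 1/1 wurstmeister/kafka:2.13-2.7.0 *:19092->9092/tcp
ze8fuxcyifvs kafka_zoo1 replicated 1/1 zookeeper:3.6.1 *:2181->2181/tcp
"""


def parse_replicas(service_ls_output):
    """Map service name -> (running, desired) from `docker service ls` text."""
    result = {}
    # The first line is the column header, so skip it.
    for line in service_ls_output.strip().splitlines()[1:]:
        fields = line.split()
        if len(fields) < 4:
            continue  # ignore blank or malformed lines
        name, replicas = fields[1], fields[3]
        running, desired = replicas.split("/")
        result[name] = (int(running), int(desired))
    return result


def not_ready(service_ls_output):
    """Return the sorted names of services with fewer running than desired tasks."""
    parsed = parse_replicas(service_ls_output)
    return sorted(name for name, (run, want) in parsed.items() if run < want)


if __name__ == "__main__":
    pending = not_ready(SAMPLE)
    print("all services ready" if not pending else f"not ready: {pending}")
```

In practice the input would come from the command's real output, for example by piping it into the script; a service stuck at 0/1 often points to a placement constraint that no node satisfies.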

4. Troubleshooting common problems
