throughput

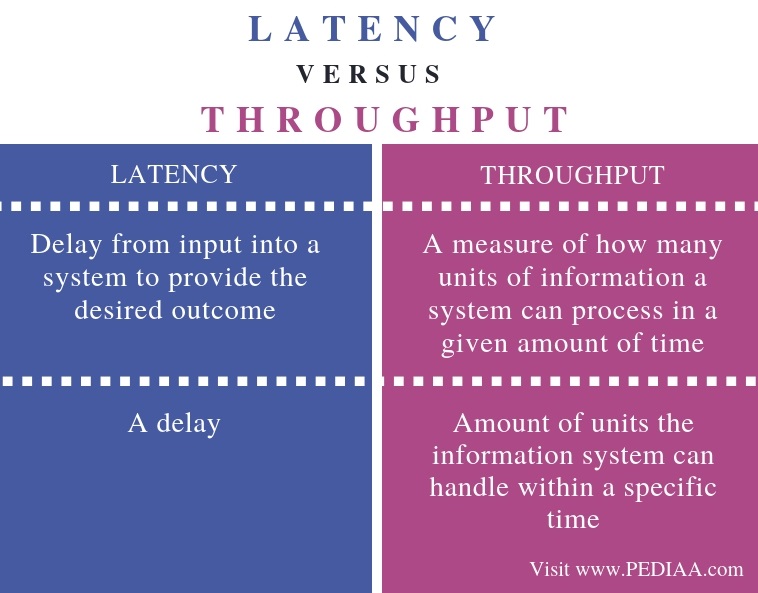

The main difference between latency and throughput is that latency is the delay between an input and the resulting outcome, while throughput is how much data can be transmitted from one place to another in a given time.

Latency and throughput are two common terms used when working with computer resources such as disk storage, or when sending data from a source to a destination in a computer network. Both relate to the time taken to process or transmit data.

Key Areas Covered

1. What is Latency

-Definition, Functionality

2. What is Throughput

-Definition, Functionality

3. Difference Between Latency and Throughput

-Comparison of key differences

Key Terms

Latency, Throughput

What is Latency

Latency refers to a delay. The delay can occur when transmitting or processing data. Network latency and disk latency are two types of latency. Network latency is the time taken to send data from the source to the destination over a network. For example, assume a computer in one network connects to a computer in another network that is far away. As there are many hops between the source and the destination, and each hop adds some delay, there will be noticeable latency when establishing the connection.
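The effect of hops can be sketched with a deliberately simplified model in which the one-way latency of a path is the sum of the delays added at each hop. The function name `path_latency_ms` and the per-hop values are hypothetical and chosen only for illustration; they are not measurements of any real network.

```python
def path_latency_ms(hop_delays_ms):
    """Sum per-hop delays (in ms) into a one-way latency for the whole path."""
    if any(delay < 0 for delay in hop_delays_ms):
        raise ValueError("hop delays must be non-negative")
    return sum(hop_delays_ms)


# Hypothetical per-hop delays in milliseconds (assumed values, not measurements).
nearby_path = [0.5, 2.0]                     # two hops to a nearby host
distant_path = [0.5, 2.0, 12.0, 35.0, 1.5]   # five hops to a far-away host

print("nearby path latency (ms):", path_latency_ms(nearby_path))
print("distant path latency (ms):", path_latency_ms(distant_path))
```

Under this model, every additional hop can only increase the total, which is why a distant destination tends to show higher latency than a nearby one.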

Disk latency is another type of latency. It is the time between requesting data from a storage device and starting to receive that data. Rotational latency and seek time are two factors associated with disk latency. Unlike a traditional Hard Disk Drive (HDD), a Solid State Drive (SSD) has no rotating platters or moving heads, so it avoids both rotational latency and seek time. Therefore, SSDs generally have lower latency.
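As a rough worked example, the average rotational latency of an HDD is commonly estimated as the time for half a revolution of the platter. The sketch below applies that estimate and adds a seek time to it. The function names and the 9 ms seek time are assumptions for illustration, not figures from any particular drive.

```python
def average_rotational_latency_ms(rpm):
    """Average rotational latency: the time for half a revolution, in ms."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms in one minute
    return ms_per_revolution / 2.0


def hdd_access_latency_ms(rpm, avg_seek_ms):
    """Simplified HDD latency estimate: seek time plus rotational latency."""
    return avg_seek_ms + average_rotational_latency_ms(rpm)


# A 7200 RPM drive with an assumed (hypothetical) 9 ms average seek time.
print(round(average_rotational_latency_ms(7200), 2))           # about 4.17 ms
print(round(hdd_access_latency_ms(7200, avg_seek_ms=9.0), 2))  # about 13.17 ms
```

An SSD has neither term in this estimate, which is one way to see why its latency is lower.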

What is Throughput

Throughput refers to how much data is transferred from one location to another in a given time. It is used to measure the performance of hard disks, RAM and network connections. Moreover, system throughput or aggregate throughput is the sum of the data rates delivered to all terminals in a network. However, the actual data transfer speed can be reduced by factors such as connection speed and network traffic.
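The two definitions above can be written down directly: throughput is the amount of data divided by the time taken, and aggregate throughput is the sum of the per-terminal rates. This is a minimal sketch; the function names and the terminal rates are hypothetical.

```python
def throughput_bytes_per_s(bytes_transferred, seconds):
    """Throughput of a single transfer: data moved divided by elapsed time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return bytes_transferred / seconds


def aggregate_throughput(terminal_rates):
    """Aggregate (system) throughput: sum of the rates delivered to all terminals."""
    return sum(terminal_rates)


# 1,000,000 bytes moved in 2 seconds.
print(throughput_bytes_per_s(1_000_000, 2.0))

# Hypothetical data rates (in Mbit/s) delivered to three terminals.
print(aggregate_throughput([10.0, 20.0, 5.5]))
```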

In communication networks, network throughput refers to the rate of successful message delivery over a communication channel. Generally, throughput is measured in bits per second (bit/s or bps), and sometimes in data packets per second (pps) or data packets per time slot. Furthermore, the throughput of a communication system depends on the analog physical medium, the available processing power of the system components and end-user behavior.
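To illustrate the units, the sketch below computes bits per second and packets per second while counting only packets that were successfully delivered. The `(size_bytes, delivered)` packet representation and the function name `network_throughput` are assumptions made for this example.

```python
from typing import List, Tuple


def network_throughput(packets: List[Tuple[int, bool]], seconds: float) -> Tuple[float, float]:
    """Return (bits per second, packets per second) for delivered packets only."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # Keep the sizes of packets whose delivery flag is True; lost packets do not count.
    delivered_sizes = [size for size, delivered in packets if delivered]
    bps = sum(delivered_sizes) * 8 / seconds  # 8 bits per byte
    pps = len(delivered_sizes) / seconds
    return bps, pps


# Hypothetical traffic: four packets sent in 2 seconds, one of them lost.
packets = [(1500, True), (1500, True), (1500, False), (500, True)]
bps, pps = network_throughput(packets, seconds=2.0)
print(f"{bps} bit/s, {pps} pps")
```

The lost packet consumes channel time but contributes nothing to throughput, which is why throughput is defined in terms of successful delivery.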

Difference Between Latency and Throughput

Definition

Latency is the delay between an input into a system and the desired outcome. In contrast, throughput is a measure of how many units of information a system can process in a given amount of time.

Basis

Moreover, latency is a delay, whereas throughput is the number of units of information the system can handle within a specific time.
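One way to see the two measures side by side is a simplified model in which the time to complete a transfer is a fixed latency plus the data size divided by the throughput. This model and the values below are assumptions for illustration; real systems have additional effects that it ignores.

```python
def transfer_time_s(size_bytes, latency_s, throughput_bytes_per_s):
    """Simplified model: total time = fixed latency + size / throughput."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return latency_s + size_bytes / throughput_bytes_per_s


# Assumed link: 100 ms latency, 10 MB/s throughput (hypothetical values).
LATENCY_S = 0.1
THROUGHPUT = 10_000_000

small = transfer_time_s(1_000, LATENCY_S, THROUGHPUT)        # 1 KB request
large = transfer_time_s(100_000_000, LATENCY_S, THROUGHPUT)  # 100 MB file

print(f"1 KB:   {small:.4f} s")
print(f"100 MB: {large:.4f} s")
```

In this sketch the small request is dominated by latency, while the large transfer is dominated by throughput, so improving one measure does not automatically improve the other.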

Conclusion

In brief, latency and throughput are two terms used when processing data and sending it over a network. The main difference between latency and throughput is that latency is the delay between an input and the resulting outcome, while throughput is how much data can be transmitted from one place to another in a given time.


Image Courtesy:

1. “1449807” via Pxhere.

