ffmpeg gpu

ls .. | xargs -I @ ffmpeg -hwaccel cuvid -i '../@' -acodec copy -vcodec libx264 '@'
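The command above decodes on the GPU but still encodes with libx264 on the CPU. A rough sketch of the same batch job with GPU encoding follows. It assumes an ffmpeg build with NVIDIA support (the `h264_nvenc` encoder); the function name `transcode_all` is made up for illustration.

```shell
#!/bin/sh
# Sketch: re-encode every regular file in a source directory into the
# current directory, keeping the file name and copying the audio stream.
# Assumption: this ffmpeg build provides the h264_nvenc encoder.
transcode_all() {
    src_dir=$1
    for f in "$src_dir"/*; do
        [ -f "$f" ] || continue            # skip directories
        out=$(basename "$f")               # same name, written to the cwd
        ffmpeg -hwaccel cuda -i "$f" -c:a copy -c:v h264_nvenc "$out"
    done
}
```

Run it from an empty output directory as `transcode_all ..`, which mirrors the `ls .. | xargs` form.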

Duration: 00:45:30.22, start: 0.000000, bitrate: 2446 kb/s

Stream #0:0[0x1](und): Video: h264 (Constrained Baseline) (avc1 / 0x31637661), yuv420p(progressive), 1920x1080 [SAR 1:1 DAR 16:9], 2348 kb/s, 25 fps, 25 tbr, 12800 tbn (default)

Stream #0:1[0x2](und): Audio: aac (LC) (mp4a / 0x6134706D), 48000 Hz, stereo, fltp, 93 kb/s (default)

ffmpeg -i 12.mp4 -c:a copy -c:v hevc -b:v 1200k h.mp4
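A quick way to sanity-check a target bitrate is to estimate the output size from bitrate and duration. The sketch below uses the numbers above (1200 kb/s video target, 93 kb/s copied audio, 00:45:30 = 2730 s). It ignores container overhead, and `-b:v` is only an average target, so treat the result as approximate. The helper name `estimate_mb` is made up for illustration.

```shell
#!/bin/sh
# Estimate file size in MB (10^6 bytes) from bitrates in kb/s and a
# duration in seconds: (video + audio) * seconds / 8 gives kilobytes.
estimate_mb() {
    video_kbps=$1
    audio_kbps=$2
    seconds=$3
    echo $(( (video_kbps + audio_kbps) * seconds / 8 / 1000 ))
}

estimate_mb 1200 93 2730   # prints 441
```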

-r 25

-s 1920x1080

-ss 00:00:05 -to 00:00:10

-ss 00:00:10 -t 00:05:00

-vf "hqdn3d"

-vf "eq=brightness=0.1:saturation=1.5"

-f concat -safe 0 -i filelist.txt -c copy

filelist.txt:
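The list contents are missing from these notes. As a sketch: the concat demuxer reads one `file '<path>'` line per input, in playback order. The helper name `make_filelist` and the file names are placeholders, not from the original notes.

```shell
#!/bin/sh
# Print a concat-demuxer list: one "file '<path>'" line per argument.
# Limitation: paths containing a single quote would need extra escaping.
make_filelist() {
    for f in "$@"; do
        printf "file '%s'\n" "$f"
    done
}

# Placeholder inputs; redirect to filelist.txt for real use.
make_filelist part1.mp4 part2.mp4
```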

-map 0

-c copy

-vf "crop=400:300:100:100"

-c:v libvvenc

H.266/VVC

--enable-libvvenc

-f rawvideo -pixel_format yuv420p out.yuv

Every time you re-encode you lose quality, and re-encoding twice loses more than re-encoding once. There is no benefit to encoding from anything other than the original. If you tune for SSIM and then measure the SSIM, you get some idea of how much has been lost.
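One way to measure that loss is ffmpeg's `ssim` filter, which compares an encode against its source. This is a sketch: it assumes both files have the same resolution and frame count, and the function name `measure_ssim` is made up for illustration.

```shell
#!/bin/sh
# Compare a re-encoded file ($1) against the original ($2).
# The ssim filter writes its summary to ffmpeg's log output (stderr),
# so stderr is merged into stdout and filtered for the SSIM line.
measure_ssim() {
    ffmpeg -hide_banner -i "$1" -i "$2" -lavfi ssim -f null - 2>&1 | grep SSIM
}
```

Usage would be `measure_ssim h.mp4 12.mp4`. A value closer to 1 means less was lost.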

#git clone https://code.videolan.org/videolan/x264.git

#git clone https://bitbucket.org/multicoreware/x265_git.git

#git clone https://github.com/fraunhoferhhi/vvenc

#git clone https://code.videolan.org/videolan/dav1d.git

#git clone https://github.com/mstorsjo/fdk-aac

#curl -O -L http://downloads.sourceforge.net/project/lame/lame/3.100/lame-3.100.tar.gz && tar xzf lame-3.100.tar.gz && rm lame-3.100.tar.gz

#curl -O -L https://ffmpeg.org/releases/ffmpeg-snapshot.tar.bz2 && tar xjf ffmpeg-snapshot.tar.bz2 && rm ffmpeg-snapshot.tar.bz2

#curl -O -L https://ffmpeg.org/releases/ffmpeg-7.0.2.tar.gz && tar xzf ffmpeg-7.0.2.tar.gz && rm ffmpeg-7.0.2.tar.gz

export d=$PWD

mkdir -p ffmpeg_build/lib/pkgconfig && mkdir ffmpeg_build/bin

cd vvenc && mkdir build && cd build

cmake -DCMAKE_BUILD_TYPE=Debug -DCMAKE_INSTALL_PREFIX="$d/ffmpeg_build" -DENABLE_SHARED:bool=on ..

make -j 8

make install

cd ../..

cd x264

PKG_CONFIG_PATH="$d/ffmpeg_build/lib/pkgconfig" ./configure --prefix="$d/ffmpeg_build" --bindir="$d/ffmpeg_build/bin" --enable-shared --enable-debug

make -j 8

make install

cd ..

cd x265_git && mkdir builds && cd builds

cmake -DCMAKE_BUILD_TYPE=Debug -DCMAKE_INSTALL_PREFIX="$d/ffmpeg_build" -DENABLE_SHARED:bool=on ../source

make -j 8

make install

cd ../..

cd fdk-aac

autoreconf -fiv

./configure --prefix="$d/ffmpeg_build" --enable-debug

make -j 8

make install

cd ..

tar xjf ffmpeg-snapshot.tar.bz2

cd ffmpeg

PATH="$d/ffmpeg_build/bin:$PATH" PKG_CONFIG_PATH="$d/ffmpeg_build/lib/pkgconfig" ./configure \

--prefix="$d/ffmpeg_build" \

--pkg-config-flags="--static" \

--extra-cflags="-I$d/ffmpeg_build/include" \

--extra-ldflags="-L$d/ffmpeg_build/lib" \

--extra-libs=-lpthread \

--extra-libs=-lm \

--bindir="$d/ffmpeg_build/bin" \

--enable-gpl \

--enable-libx264 \

--enable-libx265 \

--enable-libvvenc \

--enable-nonfree \

--enable-libfdk_aac \

--enable-debug \

--disable-stripping \

--enable-shared

make -j 8

make install

cd ..

brew install libxml2 ffmpeg nasm # macOS-only; if on Linux, use your native package manager. Package names may differ.

git clone https://github.com/fraunhoferhhi/vvenc

git clone https://github.com/fraunhoferhhi/vvdec

git clone https://github.com/mstorsjo/fdk-aac

cd vvenc && mkdir build && cd build

cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=/usr/local ..

sudo cmake --build . --target install -j "$(nproc 2>/dev/null || sysctl -n hw.ncpu)"

cd ../../

cd vvdec && mkdir build && cd build

cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=/usr/local ..

sudo cmake --build . --target install -j "$(nproc 2>/dev/null || sysctl -n hw.ncpu)"

cd ../../

cd fdk-aac && ./autogen.sh && ./configure

make -j

sudo make install

cd ../

git clone --depth=1 https://github.com/MartinEesmaa/FFmpeg-VVC

cd FFmpeg-VVC

export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig

./configure --enable-libfdk-aac --enable-libvvenc --enable-libvvdec --enable-static --enable-pic --enable-libxml2 --pkg-config-flags="--static" --enable-sdl2

make -j

vvencapp -i input.y4m --preset slow --qpa on --qp 20 -c yuv420_10 -o output.266

./x264 input_1920x1080.yuv -o a.264

本文内容包括:

- 在Linux环境下安装FFmpeg

- 通过命令行实现视频格式识别和转码

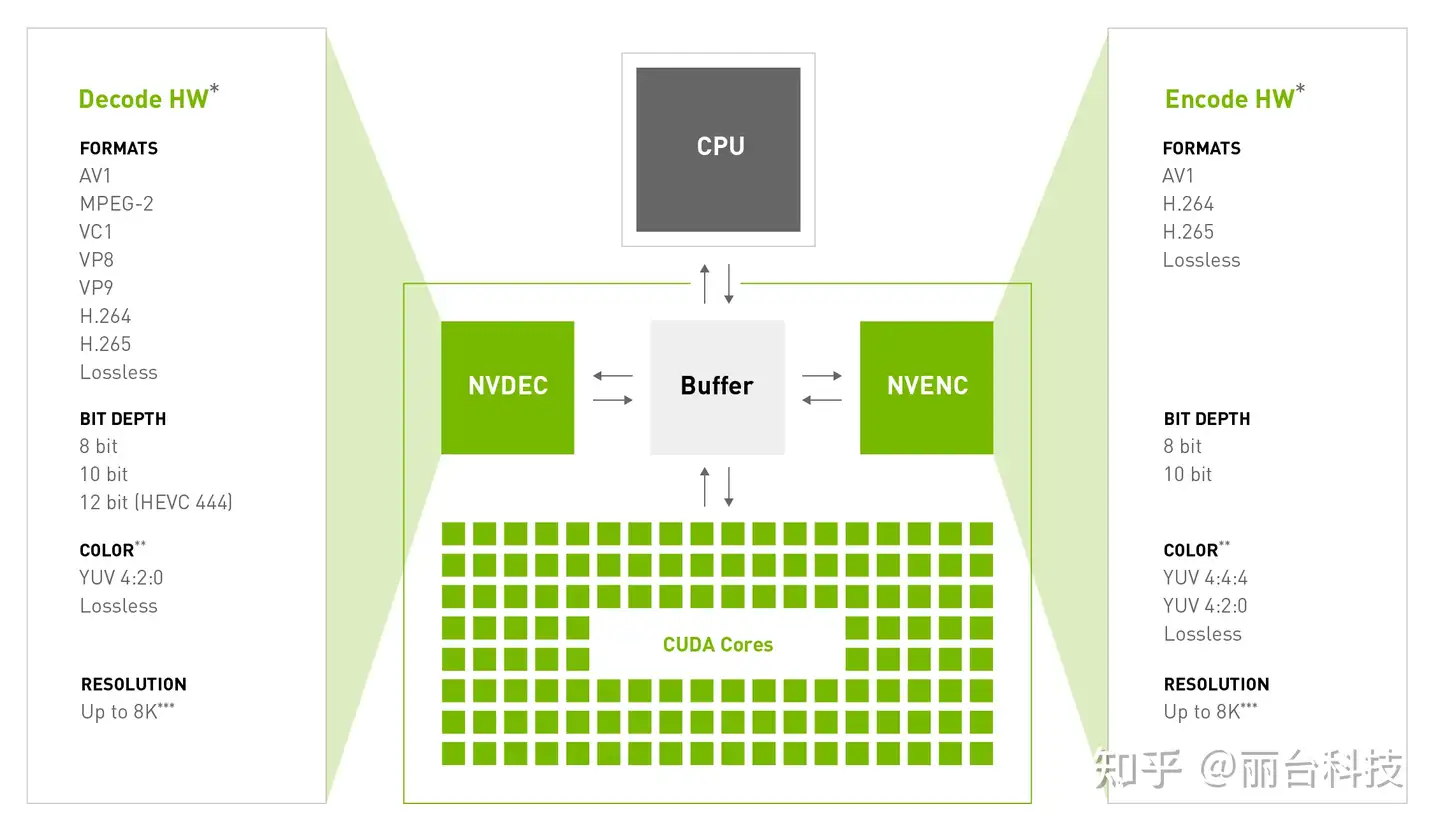

- 有Nvidia显卡的情况下,在Linux下使用GPU进行视频转码加速的方法

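Compiling and installing FFmpeg

Release packages for Ubuntu/Debian can be downloaded from the FFmpeg website at https://ffmpeg.org/download.html; on other Linux distributions you have to compile it yourself. You also have to compile FFmpeg yourself if you want GPU hardware acceleration, so this section describes how to build and install FFmpeg from source (on RHEL/CentOS).

Install the build tools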

yum install autoconf automake bzip2 cmake freetype-devel gcc gcc-c++ git libtool make mercurial pkgconfig zlib-devel

Preparation

Create an ffmpeg_sources directory under $HOME
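No command is given for this step. A minimal sketch, assuming the same `~/ffmpeg_sources` path that the later `cd` commands use:

```shell
#!/bin/sh
# Create the source directory; -p makes this safe to re-run.
mkdir -p "$HOME/ffmpeg_sources"
```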

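Compile and install the dependency libraries

Almost all of the libraries in this section are required, so installing all of them is recommended.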

nasm

An assembler, needed when compiling some of the dependency libraries.

cd ~/ffmpeg_sources

curl -O -L http://www.nasm.us/pub/nasm/releasebuilds/2.13.02/nasm-2.13.02.tar.bz2

tar xjvf nasm-2.13.02.tar.bz2

cd nasm-2.13.02

./autogen.sh

./configure --prefix="$HOME/ffmpeg_build" --bindir="$HOME/bin"

make

make install

yasm

An assembler, needed when compiling some of the dependency libraries.

cd ~/ffmpeg_sources

curl -O -L http://www.tortall.net/projects/yasm/releases/yasm-1.3.0.tar.gz

tar xzvf yasm-1.3.0.tar.gz

cd yasm-1.3.0

./configure --prefix="$HOME/ffmpeg_build" --bindir="$HOME/bin"

make

make install

libx264

H.264 video encoder. It is needed to output H.264-encoded video, so it is effectively essential.

cd ~/ffmpeg_sources

git clone --depth 1 http://git.videolan.org/git/x264

cd x264

PKG_CONFIG_PATH="$HOME/ffmpeg_build/lib/pkgconfig" ./configure --prefix="$HOME/ffmpeg_build" --bindir="$HOME/bin" --enable-static

make

make install

libx265

H.265/HEVC video encoder.

If you do not need this encoder, skip this step and remove --enable-libx265 from the ffmpeg configure command.

cd ~/ffmpeg_sources

git clone https://bitbucket.org/multicoreware/x265_git.git

cd ~/ffmpeg_sources/x265_git/build/linux

cmake -G "Unix Makefiles" -DCMAKE_INSTALL_PREFIX="$HOME/ffmpeg_build" -DENABLE_SHARED:bool=off ../../source

make

make install

libfdk_aac

AAC audio encoder; essential.

cd ~/ffmpeg_sources

git clone --depth 1 --branch v0.1.6 https://github.com/mstorsjo/fdk-aac.git

cd fdk-aac

autoreconf -fiv

./configure --prefix="$HOME/ffmpeg_build" --disable-shared

make

make install

libmp3lame

MP3 audio encoder; essential.

cd ~/ffmpeg_sources

curl -O -L http://downloads.sourceforge.net/project/lame/lame/3.100/lame-3.100.tar.gz

tar xzvf lame-3.100.tar.gz

cd lame-3.100

./configure --prefix="$HOME/ffmpeg_build" --bindir="$HOME/bin" --disable-shared --enable-nasm

make

make install

libopus

Opus audio encoder.

If you do not need this encoder, skip this step and remove --enable-libopus from the ffmpeg configure command.

cd ~/ffmpeg_sources

curl -O -L https://archive.mozilla.org/pub/opus/opus-1.2.1.tar.gz

tar xzvf opus-1.2.1.tar.gz

cd opus-1.2.1

./configure --prefix="$HOME/ffmpeg_build" --disable-shared

make

make install

libogg

Required by libvorbis.

cd ~/ffmpeg_sources

curl -O -L http://downloads.xiph.org/releases/ogg/libogg-1.3.3.tar.gz

tar xzvf libogg-1.3.3.tar.gz

cd libogg-1.3.3

./configure --prefix="$HOME/ffmpeg_build" --disable-shared

make

make install

libvorbis

Vorbis audio encoder.

If you do not need this encoder, skip this step and remove --enable-libvorbis from the ffmpeg configure command.

cd ~/ffmpeg_sources

curl -O -L http://downloads.xiph.org/releases/vorbis/libvorbis-1.3.5.tar.gz

tar xzvf libvorbis-1.3.5.tar.gz

cd libvorbis-1.3.5

./configure --prefix="$HOME/ffmpeg_build" --with-ogg="$HOME/ffmpeg_build" --disable-shared

make

make install

libvpx

VP8/VP9 video encoder/decoder.

If you do not need this encoder/decoder, skip this step and remove --enable-libvpx from the ffmpeg configure command.

cd ~/ffmpeg_sources

git clone --depth 1 https://github.com/webmproject/libvpx.git

cd libvpx

./configure --prefix="$HOME/ffmpeg_build" --disable-examples --disable-unit-tests --enable-vp9-highbitdepth --as=yasm

make

make install

Compile and install ffmpeg 3.3.8

cd ~/ffmpeg_sources

curl -O -L https://ffmpeg.org/releases/ffmpeg-3.3.8.tar.bz2

tar xjvf ffmpeg-3.3.8.tar.bz2

cd ffmpeg-3.3.8

PATH="$HOME/bin:$PATH" PKG_CONFIG_PATH="$HOME/ffmpeg_build/lib/pkgconfig" ./configure \

--prefix="$HOME/ffmpeg_build" \

--pkg-config-flags="--static" \

--extra-cflags="-I$HOME/ffmpeg_build/include" \

--extra-ldflags="-L$HOME/ffmpeg_build/lib" \

--extra-libs=-lpthread \

--extra-libs=-lm \

--bindir="$HOME/bin" \

--enable-gpl \

--enable-libfdk_aac \

--enable-libfreetype \

--enable-libmp3lame \

--enable-libopus \

--enable-libvorbis \

--enable-libvpx \

--enable-libx264 \

--enable-libx265 \

--enable-nonfree

make

make install

hash -r

Verify the installation

ffmpeg -h
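`ffmpeg -h` only shows that the binary runs. To also confirm that a library enabled at configure time made it into the build, you can search the `ffmpeg -encoders` listing. This is a sketch; the helper name `has_encoder` is made up for illustration.

```shell
#!/bin/sh
# Succeeds if the encoder named in $1 appears in an `ffmpeg -encoders`
# listing read from standard input. Each entry line holds the capability
# flags, then the encoder name, then a description.
has_encoder() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Usage (requires the ffmpeg binary built above):
#   ffmpeg -hide_banner -encoders | has_encoder libx264 && echo "libx264 OK"
```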

Using FFmpeg

Identifying video information

Use the ffprobe command to identify a video and print its information.

ffprobe -v error -show_streams -print_format json <input>
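ffprobe reports rates such as r_frame_rate and avg_frame_rate as fractions; this article's sample output has an avg_frame_rate of 1834200/61127. A small sketch for turning such a value into a decimal follows. The helper name `frac_to_decimal` is made up for illustration.

```shell
#!/bin/sh
# Convert a fraction such as "1834200/61127" to a decimal with three
# places. ffprobe can report "0/0" for streams without a rate, so a
# zero denominator prints 0 instead of dividing.
frac_to_decimal() {
    echo "$1" | awk -F/ '{ if ($2 == 0) print "0"; else printf "%.3f\n", $1 / $2 }'
}

frac_to_decimal 1834200/61127   # prints 30.006
```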

To make it easy for programs to parse, the video information is output as JSON. Sample output:

{

"streams": [

{

"index": 0,

"codec_name": "h264",

"codec_long_name": "H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10",

"profile": "High",

"codec_type": "video",

"codec_time_base": "61127/3668400",

"codec_tag_string": "avc1",

"codec_tag": "0x31637661",

"width": 1920,

"height": 1080,

"coded_width": 1920,

"coded_height": 1080,

"has_b_frames": 0,

"sample_aspect_ratio": "0:1",

"display_aspect_ratio": "0:1",

"pix_fmt": "yuv420p",

"level": 40,

"color_range": "tv",

"color_space": "bt709",

"color_transfer": "bt709",

"color_primaries": "bt709",

"chroma_location": "left",

"refs": 1,

"is_avc": "true",

"nal_length_size": "4",

"r_frame_rate": "30/1",

"avg_frame_rate": "1834200/61127",

"time_base": "1/600",

"start_pts": 0,

"start_time": "0.000000",

"duration_ts": 61127,

"duration": "101.878333",

"bit_rate": "16279946",

"bits_per_raw_sample": "8",

"nb_frames": "3057",

"disposition": {

"default": 1,

"dub": 0,

"original": 0,

"comment": 0,

"lyrics": 0,

"karaoke": 0,

"forced": 0,

"hearing_impaired": 0,

"visual_impaired": 0,

"clean_effects": 0,

"attached_pic": 0,

"timed_thumbnails": 0

},

"tags": {

"rotate": "90",

"creation_time": "2018-08-09T09:13:33.000000Z",

"language": "und",

"handler_name": "Core Media Data Handler",

"encoder": "H.264"

},

"side_data_list": [

{

"side_data_type": "Display Matrix",

"displaymatrix": "\n00000000: 0 65536 0\n00000001: -65536 0 0\n00000002: 70778880 0 1073741824\n",

"rotation": -90

}

]

},

{