Installing a single-node Kafka on CentOS

1. Download

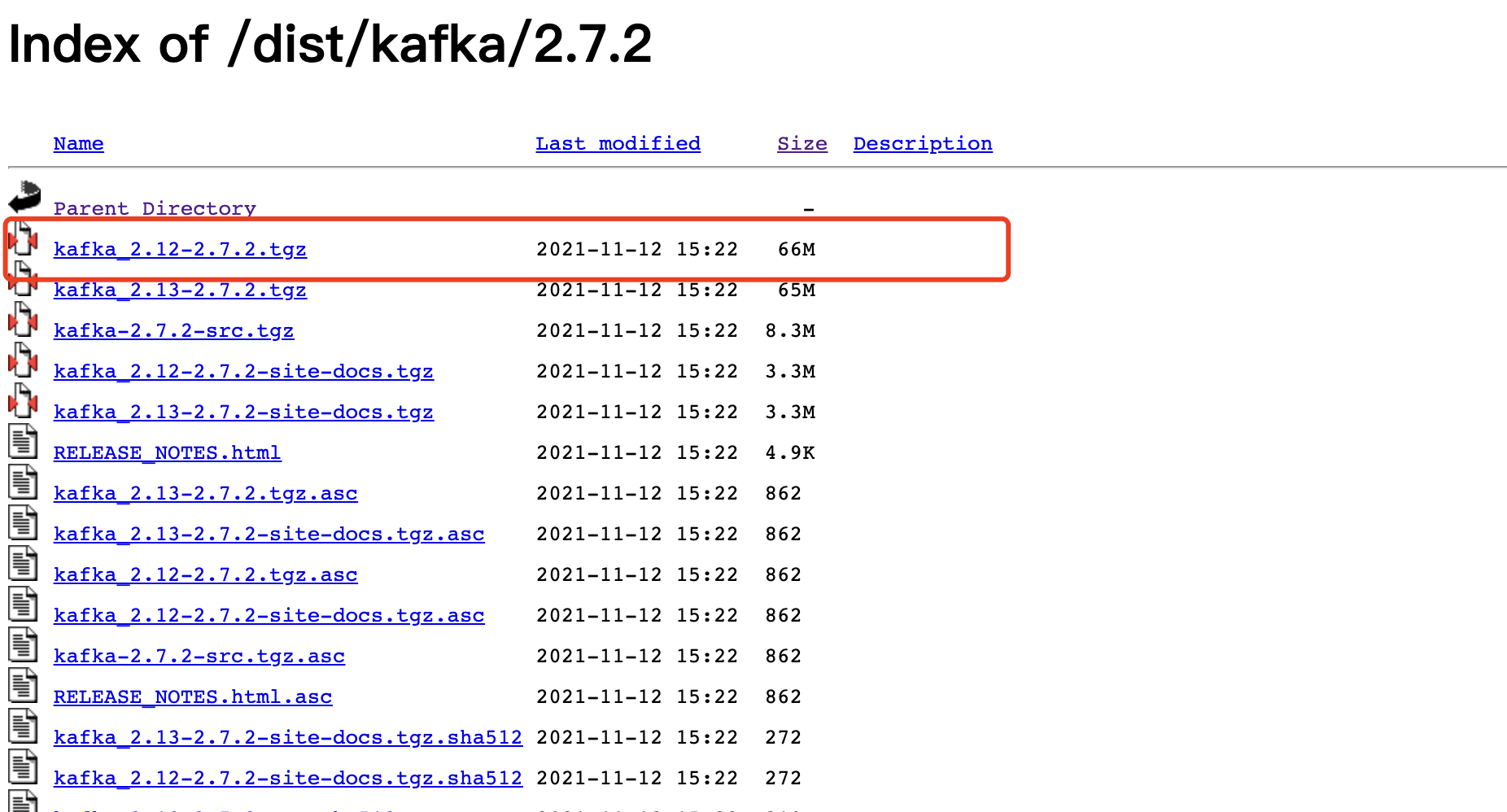

Download URL: http://archive.apache.org/dist/kafka/2.7.2/?C=S;O=D

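Kafka depends on a JDK and on ZooKeeper. The Kafka download already bundles ZooKeeper and its start scripts, so a single-node setup does not need a separate ZooKeeper installation. A JDK still has to be installed.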
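Both the broker and the bundled ZooKeeper run on the JVM, so it is worth confirming that a JDK is available before going further. Below is a minimal sketch. It assumes `java` is on the PATH and treats Java 8 as the minimum for Kafka 2.x. The helper names `parse_java_major` and `java_major_version` are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the major Java version from `java -version` output.

    Handles both the legacy "1.8.0_291" scheme and the newer "11.0.12" scheme.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    # Java 8 and earlier report "1.x"; the real major version is the second number.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def java_major_version() -> Optional[int]:
    """Return the installed Java major version, or None if java is not on PATH."""
    if shutil.which("java") is None:
        return None
    # `java -version` writes its banner to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return parse_java_major(result.stderr)


if __name__ == "__main__":
    major = java_major_version()
    if major is None:
        print("No JDK found; install one (e.g. OpenJDK) before starting Kafka")
    elif major < 8:  # assumption: Kafka 2.x needs Java 8 or newer
        print(f"Java {major} is too old for Kafka 2.x")
    else:
        print(f"Found Java {major}")
```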

2. Extract the package and create directories

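Extract: tar zxvf kafka_2.12-2.7.2.tgz

The rest of this article uses /opt/kafka-2.7.2 as the Kafka directory, so move the extracted directory there (mv kafka_2.12-2.7.2 /opt/kafka-2.7.2). Inside it, create a zk directory with data and logs subdirectories to hold ZooKeeper's data and logs (mkdir -p /opt/kafka-2.7.2/zk/data /opt/kafka-2.7.2/zk/logs).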
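The extract, rename, and mkdir steps can be scripted together. Below is a minimal sketch. It assumes the tarball has a single top-level directory (true for the official kafka_2.12-2.7.2.tgz) and uses the /opt/kafka-2.7.2 layout from this article. The function name `prepare_kafka_home` is hypothetical.

```python
import os
import tarfile


def prepare_kafka_home(tarball: str, install_dir: str, home_name: str = "kafka-2.7.2") -> str:
    """Extract the Kafka tarball, rename its top-level directory, and create zk/data and zk/logs.

    Returns the path of the resulting Kafka home directory.
    """
    with tarfile.open(tarball, "r:gz") as tar:
        # The archive has one top-level directory, e.g. "kafka_2.12-2.7.2/"
        top_level = tar.getnames()[0].split("/")[0]
        tar.extractall(install_dir)
    home = os.path.join(install_dir, home_name)
    os.rename(os.path.join(install_dir, top_level), home)
    # zk/data holds ZooKeeper snapshots (dataDir); zk/logs holds its transaction logs (dataLogDir)
    for sub in ("data", "logs"):
        os.makedirs(os.path.join(home, "zk", sub), exist_ok=True)
    return home
```

For example, `prepare_kafka_home("kafka_2.12-2.7.2.tgz", "/opt")` would leave the broker in /opt/kafka-2.7.2 with the two ZooKeeper directories in place.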

3. Configuration

Step 1: configure ZooKeeper

vi /opt/kafka-2.7.2/config/zookeeper.properties

```properties
dataDir=/opt/kafka-2.7.2/zk/data
dataLogDir=/opt/kafka-2.7.2/zk/logs
# the port at which the clients will connect
clientPort=2181
tickTime=2000
# max concurrent connections per client IP (0 = no limit); define it only once,
# because a later duplicate of a key overrides the earlier one
maxClientCnxns=100
# Disable the adminserver by default to avoid port conflicts.
# Set the port to something non-conflicting if choosing to enable this
admin.enableServer=false
# admin.serverPort=8080
```
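The stock zookeeper.properties shipped with Kafka already defines clientPort and maxClientCnxns (as 0, meaning no limit). If you add your own lines at the top, the same key can easily end up defined twice. When Java loads a .properties file, the last occurrence of a key wins. As a result, an earlier maxClientCnxns=100 would be silently replaced by a later maxClientCnxns=0.

Below is a minimal checker sketch. Its parser is deliberately simplified: it handles `key=value` lines and `#`/`!` comments only, and ignores `:` separators and line continuations. The name `find_duplicate_keys` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def find_duplicate_keys(text: str) -> Dict[str, List[str]]:
    """Return keys assigned more than once, with every value in file order."""
    values: Dict[str, List[str]] = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue  # blank line, comment, or not a key=value pair
        key, value = line.split("=", 1)
        values[key.strip()].append(value.strip())
    # Only keys seen more than once; the last value in each list is the one Java keeps
    return {k: v for k, v in values.items() if len(v) > 1}


if __name__ == "__main__":
    config = """\
clientPort=2181
maxClientCnxns=100
# the port at which the clients will connect
clientPort=2181
maxClientCnxns=0
"""
    print(find_duplicate_keys(config))
    # → {'clientPort': ['2181', '2181'], 'maxClientCnxns': ['100', '0']}
```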

Step 2: edit the Kafka configuration file

vi /opt/kafka-2.7.2/config/server.properties (the main changes are listed below)

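A pitfall worth noting: several blog posts I read during installation did not mention listeners and advertised.listeners. When I tested with Python, the connection worked and I could list the broker's topics, but producer.close() kept hanging. No error was raised and the program did eventually exit, but no data had been written. Adding listeners and advertised.listeners was not enough on its own. What fixed it was setting advertised.listeners to the server's public (external) IP.

This happens because a client uses the bootstrap address only for its first connection. The broker then returns the addresses from advertised.listeners in its metadata, and the producer sends data to those addresses. If they are internal IPs that a remote client cannot reach, the buffered message is never delivered, and close() blocks while waiting for it.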

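```properties
############################# Server Basics #############################
broker.id=0
# deprecated: only used when listeners is not set
port=9092
zookeeper.connect=localhost:2181
############################# Socket Server Settings #############################
# address the broker binds to (the server's internal IP)
listeners=PLAINTEXT://<internal-ip>:9092
# address returned to clients in metadata (the public IP that remote clients can reach)
advertised.listeners=PLAINTEXT://<public-ip>:9092
```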
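On a typical cloud VM, the public IP is NAT-ed and is not assigned to any local interface. That is why listeners binds to the internal IP while advertised.listeners carries the public one.

The hanging producer.close() described above is the usual symptom of advertising an address that remote clients cannot reach. The sketch below is a rough static check: it flags advertised entries whose host is a private or loopback IP literal. It does not resolve hostnames, and a private address is correct if every client lives on the same internal network. The function name `non_public_advertised` is hypothetical.

```python
import ipaddress
from typing import List


def non_public_advertised(listeners: str) -> List[str]:
    """Return entries of an advertised.listeners value whose host is a private/loopback IP.

    `listeners` is the comma-separated property value, e.g. "PLAINTEXT://10.0.0.5:9092".
    Hostnames that are not IP literals are skipped, not resolved.
    """
    flagged = []
    for entry in listeners.split(","):
        entry = entry.strip()
        if not entry:
            continue
        # "NAME://host:port": take the part after "://" and drop the port
        _, _, hostport = entry.partition("://")
        host = hostport.rsplit(":", 1)[0].strip("[]")  # strip brackets around IPv6 literals
        try:
            addr = ipaddress.ip_address(host)
        except ValueError:
            continue  # a hostname (or malformed entry), not checked here
        if addr.is_private or addr.is_loopback:
            flagged.append(entry)
    return flagged
```

Run it against the advertised.listeners value before starting the broker. A non-empty result means remote clients will be told to connect to an address they probably cannot reach.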

4. Start and stop Kafka

Start

Note: start ZooKeeper first, then Kafka. Run the commands from the bin directory (/opt/kafka-2.7.2/bin):

./zookeeper-server-start.sh ../config/zookeeper.properties

./kafka-server-start.sh ../config/server.properties

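After these two steps, you can write a small client program to check that data can be produced and consumed (see the Python examples in section 5).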

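The commands above run in the foreground and stop when the terminal session ends. To run them as background daemons, add -daemon: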

./zookeeper-server-start.sh -daemon ../config/zookeeper.properties

./kafka-server-start.sh -daemon ../config/server.properties
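With -daemon, each script returns immediately. To enforce the "ZooKeeper first" rule, it therefore helps to wait until port 2181 accepts connections before starting the broker.

Below is a minimal sketch. It assumes the default ports 2181 and 9092 and the /opt/kafka-2.7.2 layout. The function names and the `kafka_host` parameter are hypothetical. `kafka_host` must be the address in listeners, because a broker bound to an internal IP will not answer on localhost.

```python
import socket
import subprocess
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.5)  # not listening yet; retry shortly
    return False


def start_single_node(kafka_home: str, kafka_host: str) -> None:
    """Start ZooKeeper, wait for port 2181, then start Kafka and wait for port 9092."""
    zk_cmd = [f"{kafka_home}/bin/zookeeper-server-start.sh", "-daemon",
              f"{kafka_home}/config/zookeeper.properties"]
    kafka_cmd = [f"{kafka_home}/bin/kafka-server-start.sh", "-daemon",
                 f"{kafka_home}/config/server.properties"]

    subprocess.run(zk_cmd, check=True)  # returns at once because of -daemon
    if not wait_for_port("localhost", 2181):
        raise RuntimeError("ZooKeeper did not start listening on 2181")

    subprocess.run(kafka_cmd, check=True)
    if not wait_for_port(kafka_host, 9092, timeout=60.0):
        raise RuntimeError("Kafka did not start listening on 9092")
```

For example, `start_single_node("/opt/kafka-2.7.2", "10.0.0.5")` would start both services, where 10.0.0.5 stands in for your internal IP. An open port only shows that the process is listening. The Python produce/consume check remains the real test.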

Stop

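Running ps -ef|grep kafka shows two processes, ZooKeeper and the Kafka broker; stop them with kill -9 pid. Note that kill -9 gives the broker no chance to shut down cleanly. A gentler option is the stop scripts shipped in the same bin directory: run ./kafka-server-stop.sh first, then ./zookeeper-server-stop.sh.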
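Below is a graceful alternative to kill -9, sketched under a few assumptions. It swaps the ps/grep step for reading /proc/<pid>/cmdline, which is Linux-only but fine on CentOS. It identifies the processes by the main classes that kafka-run-class.sh launches. It sends SIGTERM so the JVMs can run their shutdown hooks, stopping Kafka before ZooKeeper. The names `find_pids` and `stop_single_node` are hypothetical.

```python
import os
import signal
import time
from typing import Dict, List

# Main classes passed to kafka-run-class.sh by the two start scripts
MAIN_CLASSES = {
    "kafka": "kafka.Kafka",
    "zookeeper": "org.apache.zookeeper.server.quorum.QuorumPeerMain",
}


def find_pids(proc_root: str = "/proc") -> Dict[str, List[int]]:
    """Find Kafka and ZooKeeper PIDs by reading <proc_root>/<pid>/cmdline."""
    found: Dict[str, List[int]] = {name: [] for name in MAIN_CLASSES}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/self
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as f:
                # Arguments are NUL-separated; compare whole arguments, not substrings,
                # so a classpath that merely mentions "kafka" does not match.
                args = [a.decode("utf-8", "replace") for a in f.read().split(b"\0")]
        except OSError:
            continue  # process exited or is not readable
        for name, main_class in MAIN_CLASSES.items():
            if main_class in args:
                found[name].append(int(entry))
    return found


def stop_single_node(timeout: float = 30.0) -> None:
    """SIGTERM Kafka, wait for it to exit, then do the same for ZooKeeper."""
    pids = find_pids()
    for name in ("kafka", "zookeeper"):
        for pid in pids[name]:
            os.kill(pid, signal.SIGTERM)  # graceful, unlike SIGKILL (kill -9)
        deadline = time.monotonic() + timeout
        while time.monotonic() < deadline and any(
                os.path.exists(f"/proc/{pid}") for pid in pids[name]):
            time.sleep(0.5)
```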

5. A quick produce/consume check with Python

pip3 install kafka-python

Producing data

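```python
from kafka import KafkaProducer

# Produce data
producer = KafkaProducer(bootstrap_servers='ip:9092')  # connect to Kafka (replace ip with the broker address)

msg = "Hello World1111".encode('utf-8')  # the message value must be bytes

future = producer.send('testa', msg)  # send to the topic "testa"
future.get(timeout=10)  # wait for the broker's acknowledgement; raises an error instead of failing silently

producer.close()
```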
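The producer above encodes the string by hand because send() expects bytes unless a serializer is configured. kafka-python's KafkaProducer accepts a `value_serializer` callable that performs this conversion on every send.

Below is a sketch of a JSON serializer for that parameter. The helper name `json_serializer` is hypothetical, and JSON is only one possible encoding. The kafka-python usage needs a reachable broker, so it is shown in comments.

```python
import json
from typing import Any


def json_serializer(value: Any) -> bytes:
    """Encode a Python object as UTF-8 JSON bytes for use as a Kafka message value."""
    # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) readable in the payload
    return json.dumps(value, ensure_ascii=False).encode("utf-8")


# Usage with kafka-python (requires a reachable broker):
# producer = KafkaProducer(bootstrap_servers='ip:9092', value_serializer=json_serializer)
# producer.send('testa', {"msg": "Hello World"}).get(timeout=10)
# producer.close()
```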

Consuming data

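```python
from kafka import KafkaConsumer

# By default only messages produced after the consumer starts are read;
# pass auto_offset_reset='earliest' to read from the beginning of the topic.
consumer = KafkaConsumer('testa', bootstrap_servers=['ip:9092'])

for i in consumer:
    print(i)  # each item is a ConsumerRecord (topic, partition, offset, key, value, ...)
```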

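When consuming from CKafka (Tencent Cloud's managed Kafka service), you also need to supply the SASL username and password: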

```python
from kafka import KafkaConsumer

consumer = KafkaConsumer('your-topic',  # the topic to read
                         bootstrap_servers=['ip:9092'],
                         sasl_mechanism="PLAIN",
                         auto_offset_reset='earliest',  # start from the oldest retained message
                         security_protocol='SASL_PLAINTEXT',
                         sasl_plain_username="username",  # fill in the username
                         sasl_plain_password="pwd",  # fill in the password
                         api_version=(0, 10, 0))  # pin the protocol version instead of auto-detecting

for message in consumer:
    print(message.value)
```
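Hard-coding the password in the script makes it easy to leak. Below is a minimal sketch that reads the same SASL settings from environment variables and builds the keyword arguments for KafkaConsumer. The variable names KAFKA_BOOTSTRAP, KAFKA_USERNAME and KAFKA_PASSWORD, and the function name `sasl_consumer_config`, are hypothetical choices. The other keys mirror the parameters used in the example above.

```python
import os
from typing import Any, Dict, Mapping, Optional


def sasl_consumer_config(env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Build KafkaConsumer keyword arguments for SASL/PLAIN from environment variables."""
    env = os.environ if env is None else env
    return {
        # comma-separated list, e.g. "host1:9092,host2:9092"
        "bootstrap_servers": env["KAFKA_BOOTSTRAP"].split(","),
        "security_protocol": "SASL_PLAINTEXT",
        "sasl_mechanism": "PLAIN",
        "sasl_plain_username": env["KAFKA_USERNAME"],
        "sasl_plain_password": env["KAFKA_PASSWORD"],
        "auto_offset_reset": "earliest",
        "api_version": (0, 10, 0),
    }


# Usage (requires kafka-python and a reachable CKafka instance):
# consumer = KafkaConsumer('your-topic', **sasl_consumer_config())
```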