OpenCV study notes

Image processing concepts

- Grayscale image: a single channel running from black to white, with values 0 ~ 255 for 8-bit images.

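- RGB: corresponds to the raw data output by the camera sensor, with three channels r, g, b. Note that OpenCV's `imread` returns the channels in B, G, R order.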

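- HSV: closer to how humans intuitively perceive color.

  - H is the hue, 0 ~ 360, and can be read as an angle. For 8-bit images OpenCV stores it as 0 ~ 179 (degrees divided by 2).

  - S is the saturation, in the range 0 ~ 1. The higher the value, the purer and more vivid the color and the stronger the visual effect; low values look washed out and gray.

  - V is the brightness of the pixel, also in the range 0 ~ 1. The larger the value, the brighter the pixel.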

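- RGB images match the hardware output, while HSV images better match human vision. A common workflow is therefore to convert the RGB image to HSV, do the processing in HSV space, and convert the result back to RGB.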
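To make the relationship between the two color spaces concrete, here is a minimal sketch of the per-pixel RGB to HSV conversion, using the ranges given in the notes (H in degrees, S and V in 0 ~ 1). The `Hsv` struct and the `rgb_to_hsv` name are assumptions made for this illustration; in OpenCV itself `cvtColor` with `COLOR_BGR2HSV` converts whole images.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper type for this sketch: H in degrees [0, 360), S and V in [0, 1].
struct Hsv { double h, s, v; };

// Convert one RGB pixel (each channel already scaled to [0, 1]) to HSV.
Hsv rgb_to_hsv(double r, double g, double b) {
    assert(r >= 0.0 && r <= 1.0 && g >= 0.0 && g <= 1.0 && b >= 0.0 && b <= 1.0);
    const double maxc = std::max({r, g, b});
    const double minc = std::min({r, g, b});
    const double delta = maxc - minc;

    Hsv out{0.0, 0.0, maxc};               // V is the largest channel
    if (maxc > 0.0) out.s = delta / maxc;  // S is the channel spread relative to V
    if (delta > 0.0) {                     // hue is undefined for grays; keep 0
        if (maxc == r)      out.h = 60.0 * std::fmod((g - b) / delta, 6.0);
        else if (maxc == g) out.h = 60.0 * ((b - r) / delta + 2.0);
        else                out.h = 60.0 * ((r - g) / delta + 4.0);
        if (out.h < 0.0) out.h += 360.0;   // wrap negative angles into [0, 360)
    }
    return out;
}
```

Calling `rgb_to_hsv(0.0, 1.0, 0.0)` gives a hue of 120 degrees with full saturation and value, while any gray input gives zero saturation.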

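- `__declspec(dllexport)`: Windows-specific. It marks functions, classes, or objects as exported from the DLL being built, so that other DLLs or exe files can use them. The importing side declares the same symbols with `__declspec(dllimport)`.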

- ROI (region of interest): the part of the image that is selected for processing.

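- Softmax: converts the raw outputs of a multi-class model into a probability distribution whose entries lie in [0, 1] and sum to 1.

\[ \mathrm{softmax}(x_i) = \frac{e^{x_i}}{\sum_j e^{x_j}} \]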
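The softmax definition can be sketched in a few lines of C++. The function name and the use of `std::vector<double>` are assumptions for this example. Subtracting the maximum before exponentiating is a common numerical-stability trick that leaves the result unchanged, because the factor cancels between numerator and denominator.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// softmax(x)_i = exp(x_i) / sum_j exp(x_j), computed in a numerically stable way.
std::vector<double> softmax(const std::vector<double>& x) {
    assert(!x.empty());
    const double max_x = *std::max_element(x.begin(), x.end());

    std::vector<double> p(x.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        p[i] = std::exp(x[i] - max_x);  // shift by the maximum to avoid overflow
        sum += p[i];
    }
    for (double& v : p) v /= sum;       // normalize so the entries sum to 1
    return p;
}
```

For example, `softmax({0.0, 0.0})` returns two entries of 0.5, and large inputs such as `{1000.0, 1000.0}` do not overflow.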

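- Serialization and deserialization

  - Serialization: object -> dictionary -> JSON, i.e. converting a model to JSON.

  - Deserialization: JSON -> dictionary -> object, i.e. converting JSON back into a model.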

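- NMS (non-maximum suppression)

  - Merges similar bounding boxes that cover the same object and keeps only the best one, typically the box with the highest confidence score.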
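As an illustration of the idea, here is a minimal sketch of greedy NMS based on intersection over union (IoU). The `Box` layout, the function names, and the choice of the score as the "best" criterion are assumptions for this example, not something the notes specify.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical box type: corner coordinates plus a confidence score.
struct Box { float x1, y1, x2, y2, score; };

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
    const float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) +
                      (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Returns the indices of the boxes that survive, highest score first.
std::vector<int> nms(const std::vector<Box>& boxes, float iou_threshold) {
    assert(iou_threshold >= 0.0f && iou_threshold <= 1.0f);
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return boxes[a].score > boxes[b].score; });

    std::vector<int> keep;
    std::vector<bool> suppressed(boxes.size(), false);
    for (std::size_t i = 0; i < order.size(); ++i) {
        const int cur = order[i];
        if (suppressed[cur]) continue;
        keep.push_back(cur);  // best remaining box is kept
        for (std::size_t j = i + 1; j < order.size(); ++j) {
            const int other = order[j];
            // Drop lower-scoring boxes that overlap the kept box too much.
            if (!suppressed[other] && iou(boxes[cur], boxes[other]) > iou_threshold)
                suppressed[other] = true;
        }
    }
    return keep;
}
```

In an OpenCV project the same job is normally done by `cv::dnn::NMSBoxes`; the hand-written version above is only meant to show what happens inside.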

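- `override`: marks a member function as overriding a virtual function of the base class. If the function does not actually override anything (for example because the signature differs), the compiler reports an error instead of silently creating a new member function.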
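A small sketch of what `override` protects against. The `Shape` and `Circle` types are made up for this example.

```cpp
#include <cassert>
#include <string>

struct Shape {
    virtual ~Shape() = default;
    virtual std::string name() const { return "shape"; }
};

struct Circle : Shape {
    // Matches Shape::name() exactly, so this really overrides it.
    std::string name() const override { return "circle"; }

    // Without `const` the signature differs from the base class. Marked
    // `override`, the line below would be a compile error rather than a
    // silently added, unrelated member function:
    // std::string name() override { return "circle"; }
};

// Calls through the base-class interface.
std::string describe(const Shape& s) { return s.name(); }

// Small self-check that dynamic dispatch reaches the overriding function.
void check_dispatch() {
    Circle c;
    const Shape& as_base = c;
    assert(as_base.name() == "circle");
}
```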

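- CUDA streams: by default a CUDA program issues all of its work on a single stream, which leaves room for improvement. With multiple streams on an NVIDIA GPU:

  - data copies and computation can run at the same time;

  - copies in both directions (GPU to CPU and CPU to GPU) can run at the same time, like traffic on a two-way expressway.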

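- Multi-stream computation: combined with multithreading, GPU utilization can exceed 50%.

  1. Split the data into many chunks and hand each chunk to one stream.

  2. Each stream performs three steps:

     - copy the data belonging to that stream from CPU memory to GPU memory;

     - run the computation on the GPU and keep the result in GPU memory;

     - copy that stream's result from GPU memory back to CPU memory.

  3. All streams are launched at the same time, and the GPU scheduler decides how to run them in parallel.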
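The first step of the multi-stream recipe, splitting the data into one chunk per stream, can be sketched in plain C++. The `Chunk` type and `split_into_chunks` name are assumptions for this illustration, and the CUDA side is deliberately left out: in a real program each chunk would be paired with its own `cudaStream_t`, with `cudaMemcpyAsync` for the two copies and a kernel launch on the same stream in between.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of the slice of the input handled by one stream.
struct Chunk { std::size_t offset, count; };

// Split `total` elements into `num_streams` nearly equal, contiguous chunks.
std::vector<Chunk> split_into_chunks(std::size_t total, std::size_t num_streams) {
    assert(num_streams > 0);
    std::vector<Chunk> chunks;
    const std::size_t base = total / num_streams;   // minimum size of each chunk
    const std::size_t extra = total % num_streams;  // first `extra` chunks get one more
    std::size_t offset = 0;
    for (std::size_t s = 0; s < num_streams; ++s) {
        const std::size_t count = base + (s < extra ? 1 : 0);
        chunks.push_back({offset, count});
        offset += count;
    }
    return chunks;
}
```

Each `Chunk` then tells a stream which `offset` to start copying from and how many elements to process.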

- In an OpenCV `Mat` the y axis points down: the origin is the top-left corner, so the row index grows downward.

- Histogram comparison can be used to measure how similar two images are.
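One common way to turn two histograms into a similarity score is their correlation coefficient. The sketch below assumes 8-bit grayscale pixels stored in a flat vector; the type alias and function names are made up for this example. OpenCV offers the same measure through `cv::compareHist` with `HISTCMP_CORREL`.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

using Histogram = std::array<double, 256>;

// Count how often each gray level occurs.
Histogram gray_histogram(const std::vector<std::uint8_t>& pixels) {
    assert(!pixels.empty());
    Histogram h{};
    for (std::uint8_t p : pixels) h[p] += 1.0;
    return h;
}

// Pearson correlation of two histograms: 1 means the same shape,
// values near 0 or below mean the distributions are unrelated.
double histogram_correlation(const Histogram& a, const Histogram& b) {
    double mean_a = 0.0, mean_b = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) { mean_a += a[i]; mean_b += b[i]; }
    mean_a /= a.size();
    mean_b /= b.size();

    double num = 0.0, var_a = 0.0, var_b = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double da = a[i] - mean_a;
        const double db = b[i] - mean_b;
        num += da * db;
        var_a += da * da;
        var_b += db * db;
    }
    const double denom = std::sqrt(var_a * var_b);
    // A perfectly flat histogram has no defined correlation; report 0 in this sketch.
    return denom > 0.0 ? num / denom : 0.0;
}
```

Because correlation ignores overall scale, two images with the same gray-level distribution but different sizes still score close to 1.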

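- SIFT: scale-invariant feature transform

  - SIFT features are interest points based on the local appearance of an object and are invariant to image scale and rotation. They also tolerate changes in illumination, noise, and small changes in viewpoint well.

  - Advantages: highly distinctive, plentiful (many features even from few objects), fast when optimized, and extensible.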

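- SURF: Speeded-Up Robust Features. It keeps the good properties of SIFT while addressing SIFT's high computational cost and long running time: both interest-point extraction and the feature descriptor are reworked, which makes it faster.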

- ORB: Oriented FAST and Rotated BRIEF, a feature detection and description algorithm

  - ORB = Oriented FAST (keypoints) + Rotated BRIEF (descriptors)

- Common gray-level transformations

  - Inversion, log transform, gamma transform, contrast stretching, gray-level slicing, bit-plane slicing
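Most of the transformations in this list are simple per-pixel formulas. The sketch below shows one plausible form of each for 8-bit values; the function names and the scaling constants (chosen so that the output stays in 0 ~ 255) are assumptions for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Inversion: s = 255 - r.
std::uint8_t invert(std::uint8_t v) { return static_cast<std::uint8_t>(255 - v); }

// Log transform: s = c * log(1 + r), with c chosen so that 255 maps to 255.
std::uint8_t log_transform(std::uint8_t v) {
    const double c = 255.0 / std::log(256.0);
    return static_cast<std::uint8_t>(std::lround(c * std::log(1.0 + v)));
}

// Gamma transform: s = 255 * (r / 255)^gamma.
std::uint8_t gamma_transform(std::uint8_t v, double gamma) {
    assert(gamma > 0.0);
    return static_cast<std::uint8_t>(std::lround(255.0 * std::pow(v / 255.0, gamma)));
}

// Contrast stretching: map [lo, hi] linearly onto [0, 255], clamping outside.
std::uint8_t stretch(std::uint8_t v, std::uint8_t lo, std::uint8_t hi) {
    assert(lo < hi);
    if (v <= lo) return 0;
    if (v >= hi) return 255;
    return static_cast<std::uint8_t>(std::lround(255.0 * (v - lo) / (hi - lo)));
}

// Bit-plane slicing: show bit `plane` (0 = least significant) as black or white.
std::uint8_t bit_plane(std::uint8_t v, int plane) {
    assert(plane >= 0 && plane < 8);
    return ((v >> plane) & 1) ? 255 : 0;
}
```

A gamma below 1 brightens dark regions and a gamma above 1 darkens them, while the log transform expands dark values and compresses bright ones.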

Mat下标访问

-

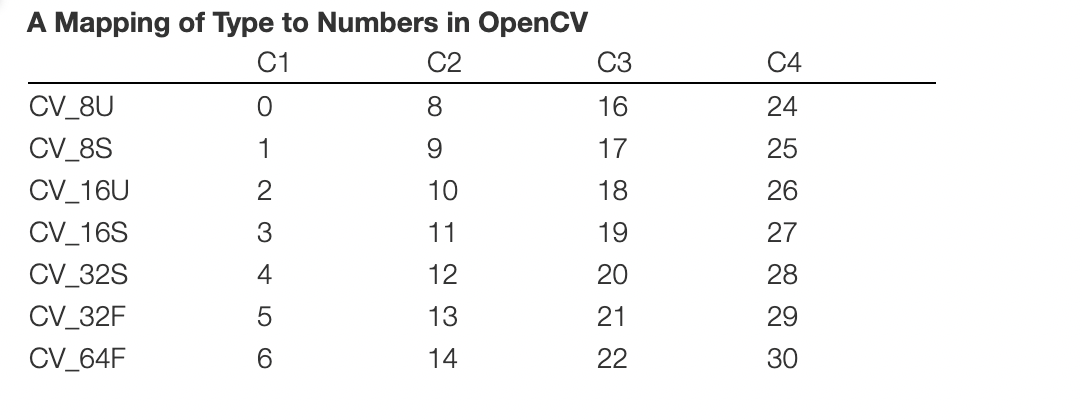

type对应
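The integer returned by `Mat::type()` packs the element depth and the channel count into one value. The sketch below mirrors OpenCV's `CV_MAKETYPE` layout (depth in the low 3 bits, channel count minus one in the bits above). The enum and function names are made up for this example so that it compiles without OpenCV.

```cpp
#include <cassert>

// Depth codes with the same numeric values OpenCV uses for CV_8U ... CV_64F.
enum Depth { k8U = 0, k8S = 1, k16U = 2, k16S = 3, k32S = 4, k32F = 5, k64F = 6 };

// Build a type code from a depth and a channel count, like CV_MAKETYPE.
inline int make_type(int depth, int channels) {
    assert(depth >= 0 && depth < 8 && channels >= 1);
    return depth + ((channels - 1) << 3);
}

// Recover the two parts from a type code.
inline int type_depth(int type) { return type & 7; }
inline int type_channels(int type) { return (type >> 3) + 1; }
```

With this layout `make_type(k8U, 3)` is 16, the value of `CV_8UC3`, and `make_type(k32F, 3)` is 21, the value of `CV_32FC3`.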

Environment setup

- PATH setup

  - Add `C:\Leon\opencv\build\x64\vc14\bin` to `PATH`; the actual path depends on where OpenCV is installed.

- Visual Studio Property Manager

  - VC++ Directories -> Include Directories:

    D:\Program Files (x86)\opencv\build\include
    D:\Program Files (x86)\opencv\build\include\opencv2

  - VC++ Directories -> Library Directories:

    D:\Program Files (x86)\opencv\build\x64\vc16\lib

  - Linker -> Input -> Additional Dependencies, add the required lib:

    - opencv_world480.lib -> release

    - opencv_world480d.lib -> debug

main

This part is mainly about understanding OpenCV's `Mat` class.


#include <iostream>

#include <stdio.h>

#include <ctime>

#include <sys/timeb.h>

#include <opencv2/opencv.hpp>

#include <opencv2/features2d.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <assert.h>

#include <xmmintrin.h>

#include <immintrin.h>

#include <intrin.h>

#include "clock_.h"

#include "QuickDemo.h"

using namespace cv;

using namespace std;

#define MAX_PATH_LEN 256

void mat_example() {
    Mat src1_gray = imread("E:\\project_file\\1.jpg", IMREAD_GRAYSCALE); // load as a single-channel image
    Mat src1_color = imread("E:\\project_file\\1.jpg"); // load as a 3-channel (BGR) image
    if (src1_gray.empty() || src1_color.empty()) return; // the file could not be read
    //imshow("src1_gray", src1_gray);
    //imshow("src1_color", src1_color);

    Mat src1_gray_1 = src1_gray; // shallow copy: shares the pixel buffer
    Mat src1_gray_2;
    src1_gray.copyTo(src1_gray_2); // deep copy: owns its own pixel buffer

    // Single-channel pixel traversal: set the pixels in the top-left quarter to 0
    //for (int i = 0; i < src1_gray.rows / 2; i++)
    //{
    //    for (int j = 0; j < src1_gray.cols / 2; j++)
    //    {
    //        src1_gray.at<uchar>(i, j) = 0; // at<uchar>(i, j) accesses row i, column j
    //    }
    //}
    //imshow("src1_gray 像素操作后", src1_gray);
    //imshow("src1_gray_1", src1_gray_1); // shallow copy shares memory, so it shows the change made to src1_gray
    //imshow("src1_gray_2", src1_gray_2); // deep copy holds its own data, so it is unaffected

    // Image addition and subtraction; multiplication and division work the same way
    //Mat mat_add = src1_gray + src1_gray_2;
    //imshow("图像相加", mat_add);
    //Mat mat_sub = src1_gray_2 - src1_gray;
    //imshow("图像相减", mat_sub);

    // Single channel to three channels; other conversions (e.g. three channels to one) are similar
    Mat mat_3chanel;
    cvtColor(src1_gray, mat_3chanel, COLOR_GRAY2BGR); // write into mat_3chanel so src1_gray stays single-channel
    imshow("单通道图像转换为三通道", mat_3chanel);

    // Data type conversion: uchar to float; other conversions (uchar to double, float to uchar) are similar
    Mat mat_float;
    src1_gray.convertTo(mat_float, CV_32F);

    // Image ROI (slicing)
    //Rect rec(0, 0, 300, 500);
    //Mat mat_color_roi = src1_color(rec);
    //imshow("图像ROI操作", mat_color_roi);

    // Image mask operation
    Mat mask;
    threshold(src1_gray_2, mask, 128, 255, THRESH_BINARY);
    imshow("mask", mask);
    Mat src_color_mask;
    src1_color.copyTo(src_color_mask, mask); // copy only the pixels where mask is non-zero
    imshow("mask操作", src_color_mask);
    waitKey();
}

void mat_my_test() {
    Mat src = imread("E:\\project_file\\1.jpg"); // load as a 3-channel (BGR) image
    Mat my, mycpy, cpy;
    src.copyTo(cpy); src.copyTo(my); src.copyTo(mycpy);
    auto p1 = my.data;
    auto p2 = mycpy.data;

    struct timeb timep;
    ftime(&timep);
    long long now = timep.time * 1000LL + timep.millitm, last = now;

    src += cpy; // essentially cv::add, which runs OpenCV's optimized (e.g. SIMD) CPU code
    //imshow("opencv+=", src);
    ftime(&timep); now = timep.time * 1000LL + timep.millitm;
    printf("opencv cp cost : %lld ms\n", now - last); last = now;

    for (int i = 0; i < my.rows; i++)
    {
        for (int j = 0; j < my.cols; j++)
        {
            my.at<Vec3b>(i, j) += mycpy.at<Vec3b>(i, j);
        }
    }
    //imshow("my +=", my);
    //waitKey();
    ftime(&timep); now = timep.time * 1000LL + timep.millitm;
    printf("my at function cpy cost : %lld ms\n", now - last); last = now;
    /*
    Recorded output:
    opencv cp cost : 1 ms
    my at function cpy cost : 79 ms
    */

    // Reload as a single-channel image
    src = imread("E:\\project_file\\1.jpg", IMREAD_GRAYSCALE);
    src.copyTo(cpy); src.copyTo(my); src.copyTo(mycpy);
    ftime(&timep); // restart the timer so that imread and copyTo are not counted
    now = timep.time * 1000LL + timep.millitm; last = now;

    src += cpy;
    ftime(&timep); now = timep.time * 1000LL + timep.millitm;
    printf("opencv 1 channel cp cost : %lld ms\n", now - last); last = now;

    for (int i = 0; i < my.rows; i++)
    {
        for (int j = 0; j < my.cols; j++)
        {
            // at<uchar>(i, j) accesses row i, column j; add with saturation to mirror src += cpy
            my.at<uchar>(i, j) = saturate_cast<uchar>(my.at<uchar>(i, j) + mycpy.at<uchar>(i, j));
        }
    }
    ftime(&timep); now = timep.time * 1000LL + timep.millitm;
    printf("my at 1 channel cp cost : %lld ms\n", now - last); last = now;
    /*
    Recorded output of the original run (that run did not restart the timer, so the
    first figure also covers imread and copyTo, and its manual loop only copied pixels):
    opencv 1 channel cp cost : 28 ms
    my at 1 channel cp cost : 85 ms
    */
}

void quikdemo_test() {

QuickDemo ep;

Mat img(imread("D:\\project_file\\i1.jpg"));

Mat img2(imread("D:\\project_file\\i2.jpg"));

ep.Sift(img, img2);

}

int main() {

return 0;

}

QuickDemo.h

//QuickDemo.h

#pragma once

#include <opencv2/opencv.hpp>

#include <iostream>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/dnn.hpp>

#include <windows.h>

#include "clock_.h"

using namespace cv;

using namespace cv::dnn;

using namespace std;

class QuickDemo{
public:
    // SIFT feature detection and matching
    void Sift(Mat &src1, Mat &src2);

    // 1-D hue histogram
    void Hue(Mat &src);

    // 2-D hue-saturation histogram
    void Hue_Saruration(Mat &src);

    // Watershed algorithm (has a bug)
    //void water_shed(Mat &src);

    // Find contours
    void find_contours(Mat &src);

    // Histogram equalization
    void equa_hist(Mat &src);

    // Hough transform
    void SHT(Mat &src);

    // Scharr edge detection
    void scharr_edge(Mat &src);

    // Laplacian edge detection
    void laplacian_edge(Mat &src);

    // Sobel edge detection
    void sobel_edge(Mat &src);

    // Advanced Canny edge detection
    void canny_edge_plus(Mat &src);

    // Canny edge detection
    void canny_edge(Mat &src);

    // Image pyramid: downsampling
    void pyr_down(Mat &img);

    // Image pyramid: upsampling
    void pyr_up(Mat &img);

    // Flood fill
    void flood_fill(Mat &img);

    // Generic morphology operation, selected by op
    void morpho(Mat &img, int op);

    // Morphological gradient
    void morpho_gradient(Mat &img);

    // Top hat
    void top_hat(Mat &img);

    // Black hat
    void black_hat(Mat &img);

    // Opening
    void op_opening();

    // Closing
    void op_closing();

    // Dilation
    void img_dilate();

    // Erosion
    void img_erode();

    // Reading and writing XML / YAML files
    void file_storage();

    // ROI_AddImage
    void ROI_AddImage();

    ~QuickDemo();

    // Color space conversion
    void colorSpace_Demo(Mat &image);

    // Creating images with Mat
    void matCreation_Demo(Mat &image);

    // Reading and writing pixels
    void pixelVisit_Demo(Mat &image);

    // Pixel arithmetic
    void operators_Demo(Mat &image);

    // Adjusting brightness with a trackbar
    void trackingBar_Demo(Mat &image);

    // Keyboard-driven image operations
    void key_Demo(Mat &image);

    // Built-in color maps
    void colorStyle_Demo(Mat &image);

    // Bitwise (logical) pixel operations
    void bitwise_Demo(Mat &image);

    // Splitting and merging channels
    void channels_Demo(Mat &image);

    // Color space conversion with inRange masking
    void inrange_Demo(Mat &image);

    // Pixel value statistics
    void pixelStatistic_Demo(Mat &image);

    // Drawing geometric shapes
    void drawing_Demo(Mat &image);

    // Drawing random geometric shapes
    void randomDrawing_Demo();

    // Filling and drawing polygons
    void polylineDrawing_Demo();

    // Mouse events and responses
    void mouseDrawing_Demo(Mat &image);

    // Pixel type conversion and normalization
    void norm_Demo(Mat &image);

    // Resizing and interpolation
    void resize_Demo(Mat &image);

    // Flipping
    void flip_Demo(Mat &image);

    // Rotation
    void rotate_Demo(Mat &image);

    // Video file / camera capture
    void video_Demo(Mat &image);

    // Video file / camera capture, with saving
    void video2_Demo(Mat &image);

    // Video file / camera capture, pulling an RTMP stream
    void video3_Demo(Mat &image);

    // Image histogram
    void histogram_Demo(Mat &image);

    // 2-D histogram
    void histogram2d_Demo(Mat &image);

    // Histogram equalization
    void histogramEq_Demo(Mat &image);

    // Image convolution (blur)
    void blur_Demo(Mat &image);

    // Gaussian blur
    void gaussianBlur_Demo(Mat &image);

    // Bilateral filter
    void bifilter_Demo(Mat &image);

    // Real-time face detection
    void faceDetection_Demo(Mat &image);

    void _array_sum_avx(double *a, double *b, double *re, int ssz);

    void _array_sum(double *a, double *b, double *re, int ssz);
};

QuickDemo.cpp


//QuickDemo.cpp

#include "QuickDemo.h"

void QuickDemo::Sift(Mat &imageL, Mat &imageR) {

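// Choose the feature extractor

// SIFT (part of the main features2d module since OpenCV 4.4.0)

cv::Ptr<cv::SIFT> sift = cv::SIFT::create();

// Before OpenCV 4.4.0, SIFT lived in opencv_contrib (needs <opencv2/xfeatures2d.hpp>):

//cv::Ptr<cv::xfeatures2d::SIFT> sift = cv::xfeatures2d::SIFT::create();

// ORB

//cv::Ptr<cv::ORB> sift = cv::ORB::create();

// SURF (opencv_contrib, needs <opencv2/xfeatures2d.hpp>)

//cv::Ptr<cv::xfeatures2d::SURF> surf = cv::xfeatures2d::SURF::create();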

//特征点

std::vector<cv::KeyPoint> keyPointL, keyPointR;

//单独提取特征点

sift->detect(imageL, keyPointL);

sift->detect(imageR, keyPointR);

////画特征点

//cv::Mat keyPointImageL;

//cv::Mat keyPointImageR;

//drawKeypoints(imageL, keyPointL, keyPointImageL, cv::Scalar::all(-1), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

//drawKeypoints(imageR, keyPointR, keyPointImageR, cv::Scalar::all(-1), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

////显示特征点

//cv::imshow("KeyPoints of imageL", keyPointImageL);

//cv::imshow("KeyPoints of imageR", keyPointImageR);

//特征点匹配

cv::Mat despL, despR;

//提取特征点并计算特征描述子

sift->detectAndCompute(imageL, cv::Mat(), keyPointL, despL);

sift->detectAndCompute(imageR, cv::Mat(), keyPointR, despR);

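// DMatch fields: query descriptor index, train descriptor index, train image index, and distance between descriptors.

// int queryIdx: index of the descriptor in the query image, which is also the index of the corresponding keypoint.

// int trainIdx: index of the descriptor in the train image, which is also the index of the corresponding keypoint.

// int imgIdx: only useful when the train set consists of several images.

// float distance: distance between the two matched descriptors (vectors), Euclidean for SIFT. The smaller it is, the more alike the two keypoints are.

std::vector<cv::DMatch> matches;

// The FLANN-based matcher needs CV_32F descriptors; ORB descriptors have a different type, so convert them first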

if (despL.type() != CV_32F || despR.type() != CV_32F)

{

despL.convertTo(despL, CV_32F);

despR.convertTo(despR, CV_32F);

}

cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("FlannBased");

matcher->match(despL, despR, matches);

// Find the largest descriptor distance among the matches

double maxDist = 0;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist > maxDist)

maxDist = dist;

}

// Keep only the good matches

std::vector< cv::DMatch > good_matches;

for (size_t i = 0; i < matches.size(); i++){

if (matches[i].distance < 0.5*maxDist){

good_matches.push_back(matches[i]);

}

}

cv::Mat imageOutput;

cv::drawMatches(imageL, keyPointL, imageR, keyPointR, good_matches, imageOutput);

cv::namedWindow("picture of matching");

cv::imshow("picture of matching", imageOutput);

//imwrite("D:\\project_file\\i3.jpg", imageOutput);

}

void QuickDemo::Hue(Mat &src) {

cvtColor(src, src, COLOR_BGR2GRAY);

imshow("src", src);

Mat dsth;

int dims = 1;

float hranges[] = { 0, 256 }; // the upper bound is exclusive, so 256 is needed to include the value 255

const float *ranges[] = { hranges };

int size = 256, channels = 0;

//计算直方图

calcHist(&src, 1, &channels, Mat(), dsth,

dims, &size, ranges);

int scale = 1;

Mat dst(size * scale, size, CV_8U, Scalar(0));

double mv = 0, mx = 0;

minMaxLoc(dsth, &mv, &mx, 0, 0);

//绘图

int hpt = saturate_cast<int>(0.9 * size);

for (int i = 0; i < 256; ++i) {

float binvalue = dsth.at<float>(i);

int realvalue = saturate_cast<int>(binvalue * hpt / mx);

rectangle(dst, Point(i * scale, size - 1),

Point((i + 1) * scale - 1,size - realvalue ),

Scalar(rand() & 255, rand() & 255, rand()&255 ));

}

imshow("Hue", dst);

}

void QuickDemo::Hue_Saruration(Mat &src) {

imshow("原图", src);

Mat hsv;

cvtColor(src, hsv, COLOR_BGR2HSV);

int huebinnum = 30, saturationbinnum = 32;

int hs_size[] = { huebinnum, saturationbinnum };

// Hue range: OpenCV stores 8-bit hue as 0 ~ 179

float hueRanges[] = { 0, 180 };

// Saturation range: from 0 (black, white, gray) to 255 (pure spectral color)

float saRanges[] = { 0, 256 };

const float *ranges[] = { hueRanges, saRanges };

MatND dst;

int channel[] = { 0, 1 };

calcHist(&hsv, 1, channel, Mat(), dst, 2, hs_size, ranges, true, false);

double mv = 0;

minMaxLoc(dst, 0, &mv, 0, 0);

int scale = 10;

Mat hs_img = Mat::zeros(saturationbinnum * scale, huebinnum * 10, CV_8UC3);

for(int i = 0; i < huebinnum ; ++ i)

for (int j = 0; j < saturationbinnum; ++j) {

float binv = dst.at<float>(i, j);

int intensity = cvRound(binv * 255 / mv); //强度

rectangle(hs_img, Point(i * scale, j * scale),

Point((i + 1) * scale - 1, (j + 1) * scale),

Scalar::all(intensity), FILLED);

}

imshow("H-S 直方图", hs_img);

}

/*

Mat g_mask, g_src;Point prevPt(-1, -1);

static void on_Mouse(int event, int x, int y, int flag, void *) {

if (x < 0 || x >= g_src.cols || y < 0 || y >= g_src.rows)

return;

if (event == EVENT_LBUTTONUP || !(flag & EVENT_FLAG_LBUTTON))

prevPt = Point(-1, -1);

else if (event == EVENT_LBUTTONDOWN)

prevPt = Point(x, y);

else if (event == EVENT_MOUSEMOVE && (flag & EVENT_FLAG_LBUTTON)) {

Point pt(x, y);

if (prevPt.x < 0)

prevPt = pt;

line(g_mask, prevPt, pt, Scalar::all(255), 5, 8, 0);

line(g_src, prevPt, pt, Scalar::all(255), 5, 8, 0);

prevPt = pt;

imshow("----程序窗口----", g_src);

}

};

void QuickDemo::water_shed(Mat &src) {

//cvtColor(src, src, COLOR_BGR2GRAY);

imshow("----程序窗口----", src);

src.copyTo(g_src);

Mat gray;

cvtColor(g_src, g_mask, COLOR_BGR2GRAY);

cvtColor(g_mask, gray, COLOR_GRAY2BGR);

g_mask = Scalar::all(0);

setMouseCallback("----程序窗口----", on_Mouse, 0);

while (true) {

int c = waitKeyEx(0);

if ((char)c == 27) break;

if ((char)c == '2') {

g_mask = Scalar::all(0);

src.copyTo(g_src);

imshow("image", g_src);

}

//若检测到按键值为 1 或者空格,则进行处理

if ((char)c == '1' || (char)c == ' ') {

//定义一些参数

int i, j, compCount = 0;

vector<vector<Point> > contours;

vector<Vec4i> hierarchy;

//寻找轮廓

findContours(g_mask, contours, hierarchy,

RETR_CCOMP, CHAIN_APPROX_SIMPLE);

//handle the case where no contours were found

if (contours.empty())

continue;

//复制掩膜

Mat maskImage(g_mask.size(), CV_32S);

maskImage = Scalar::all(0);

//循环绘制出轮廓

for (int index = 0; index >= 0; index = hierarchy[index][0], compCount++)

drawContours(maskImage, contours, index, Scalar::all(compCount + 1),

-1, 8, hierarchy, INT_MAX);

//handle the case where compCount is zero

if( compCount == 0 )

continue;

//生成随机颜色

vector<Vec3b> colorTab;

for (i = 0; i < compCount; i++) {

int b = theRNG().uniform(0, 255);

int g = theRNG().uniform(0, 255);

int r = theRNG().uniform(0, 255);

colorTab.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));

}

//计算处理时间并输出到窗口中

double dTime = (double)getTickCount();

watershed(src, maskImage);

dTime = (double)getTickCount() - dTime;

printf("\t处理时间 = %gms\n", dTime*1000. / getTickFrequency());

//双层循环,将分水岭图像遍历存入 watershedImage 中

Mat watershedImage(maskImage.size(), CV_8UC3);

for (i = 0; i < maskImage.rows; i++)

for (j = 0; j < maskImage.cols; j++) {

int index = maskImage.at<int>(i, j);

if (index == -1)

watershedImage.at<Vec3b>(i, j) = Vec3b(255, 255, 255);

else if (index <= 0 || index > compCount)watershedImage.at<Vec3b>(i, j) = Vec3b(0, 0, 0);

else

watershedImage.at<Vec3b>(i, j) = colorTab[index - 1];

}

//混合灰度图和分水岭效果图并显示最终的窗口

watershedImage = watershedImage * 0.5 + gray * 0.5;

imshow("watershed transform", watershedImage);

}

}

}

*/

void QuickDemo::find_contours(Mat &src) {

cvtColor(src, src, COLOR_BGR2GRAY);

imshow("src", src);

Mat dst = Mat::zeros(src.rows, src.cols, CV_8UC3);

src = src > 118;

imshow("阈值化118", src);

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(src, contours, hierarchy, RETR_CCOMP,

CHAIN_APPROX_SIMPLE);

int id = contours.empty() ? -1 : 0; // skip the loop when no contour was found

while (id >= 0) {

printf("%d\n", (int)contours[id].size());

Scalar color(rand() & 255, rand() & 255, rand() & 255);

drawContours(dst, contours, id, color, FILLED, 8,

hierarchy);

id = hierarchy[id][0];

}

imshow("find_contours", dst);

}

void QuickDemo::equa_hist(Mat &src) {

cvtColor(src, src, COLOR_BGR2GRAY);

imshow("src", src);

Mat dst;

equalizeHist(src, dst);

imshow("equalizeHist", dst);

}

void QuickDemo::SHT(Mat &src) {

imshow("src", src);

Mat mid, dst , tmp;

Canny(src, mid, 50, 200, 3);

cvtColor(mid, dst, COLOR_GRAY2BGR);

//进行变换

tmp.create(Size(mid.cols, mid.rows), 1);

imshow("canny", mid);

vector<Vec2f> lines;

HoughLines(mid, lines, 1, CV_PI / 180, 50, 0, 0);

//画线段

cout << lines.size() << '\n';

for (size_t i = 0; i < lines.size(); ++i) {

float rho = lines[i][0], theta = lines[i][1];

Point p1, p2;

double a = cos(theta), b = sin(theta);

double x0 = a * rho, y0 = b * rho;

p1.x = cvRound(x0 + 1000 * (-b));

p1.y = cvRound(y0 + 1000 * (a));

p2.x = cvRound(x0 - 1000 * (-b));

p2.y = cvRound(y0 - 1000 * (a));

line(dst, p1, p2, Scalar(55, 100, 195), 1, LINE_AA);

}

imshow("Hough", dst);

}

void QuickDemo::scharr_edge(Mat &src) {

imshow("src", src);

Mat grad_x, grad_y, abs_x, abs_y;

GaussianBlur(src, src, Size(3, 3), 0, 0, BORDER_DEFAULT);

// x方向

Scharr(src, grad_x, CV_16S, 1, 0, 1, 1, BORDER_DEFAULT);

convertScaleAbs(grad_x, abs_x);

imshow("x方向", abs_x);

//y方向

Scharr(src, grad_y, CV_16S, 0, 1, 1, 1, BORDER_DEFAULT);

convertScaleAbs(grad_y, abs_y);

imshow("y方向", abs_y);

//合并梯度

Mat dst;

addWeighted(abs_x, 0.5, abs_y, 0.5, 0, dst);

imshow("合并", dst);

}

void QuickDemo::laplacian_edge(Mat &src) {

imshow("src", src);

Mat gray, dst, abs_dst;

GaussianBlur(src, src, Size(3, 3), 0, 0, BORDER_DEFAULT);

cvtColor(src, gray, COLOR_BGR2GRAY); // images loaded by imread are in BGR order

Laplacian(gray, dst, CV_16S, 3, 1, 0, BORDER_DEFAULT);

convertScaleAbs(dst, abs_dst);

imshow("laplacian_edge", abs_dst);

}

void QuickDemo::sobel_edge(Mat &src) {

imshow("src", src);

Mat grad_x, grad_y, abs_x, abs_y;

// x方向

Sobel(src, grad_x, CV_16S, 1, 0, 3, 1, 1, BORDER_DEFAULT);

convertScaleAbs(grad_x, abs_x);

imshow("x方向", abs_x);

//y方向

Sobel(src, grad_y, CV_16S, 0, 1, 3, 1, 1, BORDER_DEFAULT);

convertScaleAbs(grad_y, abs_y);

imshow("y方向", abs_y);

//合并梯度

Mat dst;

addWeighted(abs_x, 0.5, abs_y, 0.5, 0, dst);

imshow("合并", dst);

}

void QuickDemo::canny_edge_plus(Mat &src) {

imshow("src", src);

Mat dst, edge, gray;

dst.create(src.size(), src.type());

cvtColor(src, gray, COLOR_BGR2GRAY);

blur(gray, edge, Size(3, 3));

Canny(edge, edge, 3, 9, 3);

dst = Scalar::all(0);

src.copyTo(dst, edge);

imshow("canny_edge_plus", dst);

}

void QuickDemo::canny_edge(Mat &src) {

imshow("src", src);

Mat up;

Canny(src, up, 150, 100, 3);

imshow("canny_edge", up);

}

void QuickDemo::pyr_down(Mat &img) {

imshow("src", img);

Mat up;

pyrDown(img, up, Size(img.cols / 2, img.rows / 2));

imshow("pyr_down", up);

}

void QuickDemo::pyr_up(Mat &img) {

imshow("src", img);

Mat up;

pyrUp(img, up, Size(img.cols * 2, img.rows * 2));

imshow("pyr_up", up);

}

void QuickDemo::flood_fill(Mat &img) {

Rect ccomp;

imshow("src", img);

floodFill(img, Point(50, 50), Scalar(155, 10, 55),

&ccomp, Scalar(5, 5, 5), Scalar(5, 5, 5));

imshow("flood_fill", img);

}

/*

- op

- MORPH_OPEN

- MORPH_CLOSE

- MORPH_GRADIENT

- MORPH_TOPHAT

- MORPH_BLACKHAT

- MORPH_ERODE

- MORPH_DILATE

*/

void QuickDemo::morpho(Mat &img, int op) {

Mat out;

imshow("src", img);

morphologyEx(img, out, op,

getStructuringElement(MORPH_RECT, Size(5, 5)));

imshow("out", out);

}

void QuickDemo::morpho_gradient(Mat &img) {

imshow("src", img);

Mat di, er;

dilate(img, di, getStructuringElement(MORPH_RECT, Size(5, 5)));

erode(img, er, getStructuringElement(MORPH_RECT, Size(5, 5)));

di -= er;

imshow("morpho_gradient", di);

}

void QuickDemo::top_hat(Mat &img) {

imshow("src", img);

Mat out, top_hat;

img.copyTo(top_hat);

erode(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

dilate(out, img, getStructuringElement(MORPH_RECT, Size(5, 5)));

top_hat -= img;

imshow("top_hat", top_hat);

}

void QuickDemo::black_hat(Mat &img) {

imshow("src", img);

Mat out, tmp;

img.copyTo(tmp);

dilate(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

erode(out, img, getStructuringElement(MORPH_RECT, Size(5, 5)));

img -= tmp;

imshow("black_hat", img);

}

void QuickDemo::op_opening() {

Mat img(imread("E:\\project_file\\b.jpg"));

//ep.file_storage();

imshow("before", img);

Mat out;

erode(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

dilate(out, img, getStructuringElement(MORPH_RECT, Size(5, 5)));

imshow("after", img);

}

void QuickDemo::op_closing() {

Mat img(imread("E:\\project_file\\b.jpg"));

//ep.file_storage();

imshow("before", img);

Mat out;

dilate(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

erode(out, img, getStructuringElement(MORPH_RECT, Size(5, 5)));

imshow("after", img);

}

void QuickDemo::img_dilate() {

Mat img(imread("E:\\project_file\\b.jpg"));

//ep.file_storage();

imshow("before", img);

Mat out;

dilate(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

imshow("after", out);

}

void QuickDemo::img_erode() {

Mat img(imread("E:\\project_file\\b.jpg"));

//ep.file_storage();

imshow("before", img);

Mat out;

erode(img, out, getStructuringElement(MORPH_RECT, Size(5, 5)));

imshow("after", out);

}

void QuickDemo::file_storage() {

FileStorage fs("E:\\project_file\\s.txt", FileStorage::WRITE);

Mat R = Mat_<uchar >::eye(3, 3);

Mat T = Mat_<double>::zeros(3, 1);

fs << "R" << R;

fs << "T" << T;

fs.release();

}

//ROI_AddImage

void QuickDemo::ROI_AddImage() {

Mat logeImg = imread("E:\\project_file\\b.jpg");

Mat img = imread("E:\\project_file\\1.jpg");

Mat ImgRoi = img(Rect(0, 0, logeImg.cols, logeImg.rows));

Mat mask(imread("E:\\project_file\\b.jpg", 0));

imshow("<1>ImgRoi", ImgRoi);

logeImg.copyTo(ImgRoi, mask);

imshow("<2>ROI实现图像叠加实例窗口", img);

}

QuickDemo::~QuickDemo() {

waitKey();

}

//空间色彩转换Demo

void QuickDemo::colorSpace_Demo(Mat &image)

{

Mat hsv, gray;

cvtColor(image, hsv, COLOR_BGR2HSV); // images loaded by imread are in BGR order

cvtColor(image, gray, COLOR_BGR2GRAY);

imshow("HSV", hsv); // H = hue, S = saturation, V = value (brightness)

imshow("灰度", gray);

//imwrite("F:/OpenCV/Image/hsv.png", hsv);

//imwrite("F:\\OpenCV\\Image\\gray.png", gray);

}

//Mat创建图像

void QuickDemo::matCreation_Demo(Mat &image)

{

//Mat m1, m2;

//m1 = image.clone();

//image.copyTo(m2);

//imshow("图像1", m1);

//imshow("图像2", m2);

Mat m3 = Mat::zeros(Size(400, 400), CV_8UC3);

m3 = Scalar(255, 255, 0);

cout << "width:" << m3.cols << " height:" << m3.rows << " channels:" << m3.channels() << endl;

//cout << m3 << endl;

imshow("图像3", m3);

Mat m4 = m3;

//imshow("图像4", m4);

m4 = Scalar(0, 255, 255);

imshow("图像33", m3);

//imshow("图像44", m4);

}

//图像像素读写

void QuickDemo::pixelVisit_Demo(Mat &image)

{

int w = image.cols;

int h = image.rows;

int dims = image.channels();

//for (int row = 0; row < h; row++) {

// for (int col = 0; col < w; col++) {

// if (dims == 1) { //灰度图像

// int pv = image.at<uchar>(Point(row, col));

// image.at<uchar>(Point(row, col)) = 255 - saturate_cast<uchar>(pv);

// }

// else if (dims == 3) { //彩色图像

// Vec3b bgr = image.at<Vec3b>(row, col);

// image.at<Vec3b>(row, col)[0] = 255 - bgr[0];

// image.at<Vec3b>(row, col)[1] = 255 - bgr[1];

// image.at<Vec3b>(row, col)[2] = 255 - bgr[2];

// }

// }

//}

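for (int row = 0; row < h; row++) {

// Fetch the pointer per row so the loop also works when the Mat is not continuous (e.g. an ROI)

uchar *img_prt = image.ptr<uchar>(row);

for (int col = 0; col < w; col++) {

for (int dim = 0; dim < dims; dim++) {

// Write first, then advance: `*p++ = 255 - *p` is unsequenced before C++17

*img_prt = 255 - *img_prt;

++img_prt;

}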

//if (dims == 1) { //灰度图像

// *img_prt++ = 255 - *img_prt;

//}

//else if (dims == 3) { //彩色图像

// *img_prt++ = 255 - *img_prt;

// *img_prt++ = 255 - *img_prt;

// *img_prt++ = 255 - *img_prt;

}

}

imshow("图像像素读写演示", image);

}

//图像像素算术操作

void QuickDemo::operators_Demo(Mat &image)

{

Mat dst;

Mat m = Mat::zeros(image.size(), image.type());

m = Scalar(50, 50, 50);

add(image, m, dst);

imshow("加法操作", dst);

m = Scalar(50, 50, 50);

subtract(image, m, dst);

imshow("减法操作", dst);

m = Scalar(2, 2, 2);

multiply(image, m, dst);

imshow("乘法操作", dst);

m = Scalar(2, 2, 2);

divide(image, m, dst);

imshow("除法操作", dst);

}

//滚动条回调函数

void onTrack(int b, void* userdata)

{

Mat image = *((Mat *)userdata);

Mat dst = Mat::zeros(image.size(), image.type());

Mat m = Mat::zeros(image.size(), image.type());

if (b > 100) {

m = Scalar(b - 100, b - 100, b - 100);

add(image, m, dst);

}

else {

m = Scalar(100 - b, 100 - b, 100 - b);

subtract(image, m, dst);

}

//addWeighted(image, 1.0, m, 0, b, dst);

imshow("亮度与对比度调整", dst);

}

//滚动条回调函数

void onContrast(int b, void* userdata)

{

Mat image = *((Mat *)userdata);

Mat dst = Mat::zeros(image.size(), image.type());

Mat m = Mat::zeros(image.size(), image.type());

double contrast = b / 100.0;

addWeighted(image, contrast, m, 0.0, 0, dst);

imshow("亮度与对比度调整", dst);

}

//滚动条调整图像亮度

void QuickDemo::trackingBar_Demo(Mat &image)

{

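int max_value = 200;

// static: createTrackbar keeps these addresses and writes to them after this function has returned

static int lightness = 100;

static int contrast_value = 100;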

namedWindow("亮度与对比度调整", WINDOW_AUTOSIZE);

createTrackbar("Value Bar", "亮度与对比度调整", &lightness, max_value, onTrack, (void *)&image);

createTrackbar("Contrast Bar", "亮度与对比度调整", &contrast_value, max_value, onContrast, (void *)&image);

onTrack(lightness, &image);

}

//键盘响应操作图像

void QuickDemo::key_Demo(Mat &image)

{

Mat dst = Mat::zeros(image.size(), image.type());

while (true) {

int c = waitKey(100);

if (c == 27) {//ESC 退出

break;

}

else if (c == 49) {

cout << "key #1" << endl;

cvtColor(image, dst, COLOR_RGB2GRAY);

}

else if (c == 50) {

cout << "key #2" << endl;

cvtColor(image, dst, COLOR_RGB2HSV);

}

else if (c == 51) {

cout << "key #3" << endl;

dst = Scalar(50, 50, 50);

add(image, dst, dst);

}

imshow("键盘响应", dst);

}

}

//自带颜色表操作

void QuickDemo::colorStyle_Demo(Mat &image)

{

Mat dst = Mat::zeros(image.size(), image.type());

int index = 0;

int pixNum = 0;

while (true) {

int c = waitKey(2000);

if (c == 27) {

break;

}

else if (c == 49) {

String pixPath = "./Image/color";

pixPath = pixPath.append(to_string(pixNum++));

pixPath = pixPath.append(".png");

imwrite(pixPath, dst);

}

applyColorMap(image, dst, (index++) % 19);

imshow("颜色风格", dst);

}

}

//图像像素的逻辑操作

void QuickDemo::bitwise_Demo(Mat &image)

{

Mat m1 = Mat::zeros(Size(256, 256), CV_8UC3);

Mat m2 = Mat::zeros(Size(256, 256), CV_8UC3);

rectangle(m1, Rect(100, 100, 80, 80), Scalar(255, 255, 0), -1, LINE_8, 0);

rectangle(m2, Rect(150, 150, 80, 80), Scalar(0, 255, 255), -1, LINE_8, 0);

imshow("m1", m1);

imshow("m2", m2);

Mat dst;

bitwise_and(m1, m2, dst);

imshow("像素位与操作", dst);

bitwise_or(m1, m2, dst);

imshow("像素位或操作", dst);

bitwise_xor(m1, m2, dst);

imshow("像素位异或操作", dst);

}

//通道分离与合并

void QuickDemo::channels_Demo(Mat &image)

{

vector<Mat> mv;

split(image, mv);

//imshow("蓝色", mv[0]);

//imshow("绿色", mv[1]);

//imshow("红色", mv[2]);

Mat dst;

vector<Mat> mv2;

//mv[1] = 0;

//mv[2] = 0;

//merge(mv, dst);

//imshow("蓝色", dst);

mv[0] = 0;

mv[2] = 0;

merge(mv, dst);

imshow("绿色", dst);

//mv[0] = 0;

//mv[1] = 0;

//merge(mv, dst);

//imshow("红色", dst);

int from_to[] = { 0,2,1,1,2,0 };

mixChannels(&image, 1, &dst, 1, from_to, 3);

imshow("通道混合", dst);

}

//图像色彩空间转换

void QuickDemo::inrange_Demo(Mat &image)

{

Mat hsv;

cvtColor(image, hsv, COLOR_BGR2HSV); // images loaded by imread are in BGR order

imshow("hsv", hsv);

Mat mask;

inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask);

//imshow("mask", mask);

bitwise_not(mask, mask);

imshow("mask", mask);

Mat readback = Mat::zeros(image.size(), image.type());

readback = Scalar(40, 40, 200);

image.copyTo(readback, mask);

imshow("roi区域提取", readback);

}

//图像像素值统计

void QuickDemo::pixelStatistic_Demo(Mat &image)

{

double minv, maxv;

Point minLoc, maxLoc;

vector<Mat> mv;

split(image, mv);

for (int i = 0; i < mv.size(); i++) {

minMaxLoc(mv[i], &minv, &maxv, &minLoc, &maxLoc);

cout << "No." << i << " min:" << minv << " max:" << maxv << endl;

}

Mat mean, stddev;

meanStdDev(image, mean, stddev);

cout << "means:" << mean << endl;

cout << "stddev:" << stddev << endl;

}

//图像几何形状绘制

void QuickDemo::drawing_Demo(Mat &image)

{

Mat bg = Mat::zeros(image.size(), image.type());

rectangle(bg, Rect(250, 100, 100, 150), Scalar(0, 0, 255), -1, 8, 0);

circle(bg, Point(300, 175), 50, Scalar(255, 0, 0), 1, 8, 0);

line(bg, Point(250, 100), Point(350, 250), Scalar(0, 255, 0), 4, 8, 0);

line(bg, Point(350, 100), Point(250, 250), Scalar(0, 255, 0), 4, 8, 0);

ellipse(bg, RotatedRect(Point2f(200.0, 200.0), Size2f(100.0, 200.0), 00.0), Scalar(0, 255, 255), 2, 8);

imshow("bg", bg);

Mat dst;

addWeighted(image, 1.0, bg, 0.3, 0, dst);

imshow("几何绘制", dst);

}

//随机绘制几何形状

void QuickDemo::randomDrawing_Demo()

{

Mat canvas = Mat::zeros(Size(512, 512), CV_8UC3);

RNG rng(12345);

int w = canvas.cols;

int h = canvas.rows;

while (true) {

int c = waitKey(10);

if (c == 27) {

break;

}

int x1 = rng.uniform(0, w);

int y1 = rng.uniform(0, h);

int x2 = rng.uniform(0, w);

int y2 = rng.uniform(0, h);

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

//canvas = Scalar(0, 0, 0);

line(canvas, Point(x1, y1), Point(x2, y2), Scalar(b, g, r), 1, 8, 0);

imshow("随机绘制演示", canvas);

}

}

//多边形填充与绘制

void QuickDemo::polylineDrawing_Demo()

{

Mat canvas = Mat::zeros(Size(512, 512), CV_8UC3);

Point p1(100, 100);

Point p2(350, 100);

Point p3(450, 280);

Point p4(320, 450);

Point p5(80, 400);

vector<Point> pts;

pts.push_back(p1);

pts.push_back(p2);

pts.push_back(p3);

pts.push_back(p4);

pts.push_back(p5);

Point p11(50, 50);

Point p12(200, 50);

Point p13(250, 150);

Point p14(160, 300);

Point p15(30, 350);

vector<Point> pts1;

pts1.push_back(p11);

pts1.push_back(p12);

pts1.push_back(p13);

pts1.push_back(p14);

pts1.push_back(p15);

//fillPoly(canvas, pts, Scalar(255, 255, 0), 8, 0);

//polylines(canvas, pts, true, Scalar(0, 255, 255), 2, LINE_AA, 0);

vector<vector<Point>> contours;

contours.push_back(pts);

contours.push_back(pts1);

drawContours(canvas, contours, -1, Scalar(255, 0, 0), 4);

imshow("多边形", canvas);

}

static void onDraw(int event, int x, int y, int flags, void *userdata)

{

static Point sp(-1, -1), ep(-1, -1);

Mat srcImg = *((Mat *)userdata);

Mat image = srcImg.clone();

if (event == EVENT_LBUTTONDOWN) {

sp.x = x;

sp.y = y;

//cout << "down_x = " <<sp.x << " dwon_y = " << sp.y << endl;

}

else if (event == EVENT_LBUTTONUP) {

ep.x = x;

ep.y = y;

if (ep.x > image.cols) {

ep.x = image.cols;

}

if (ep.y > image.rows) {

ep.y = image.rows;

}

//cout << "up_x = " << ep.x << " up_y = " << ep.y << endl;

int dx = ep.x - sp.x;

int dy = ep.y - sp.y;

if (dx > 0 && dy > 0) {

Rect box(sp.x, sp.y, dx, dy);

//rectangle(image, box, Scalar(0, 0, 255), 2, 8, 0);

//imshow("鼠标绘制", image);

imshow("ROI区域", image(box));

sp.x = -1;

sp.y = -1;

}

}

else if (event == EVENT_MOUSEMOVE) {

if (sp.x > 0 && sp.y > 0) {

ep.x = x;

ep.y = y;

//cout << "up_x = " << ep.x << " up_y = " << ep.y << endl;

if (ep.x > image.cols) {

ep.x = image.cols;

}

if (ep.y > image.rows) {

ep.y = image.rows;

}

int dx = ep.x - sp.x;

int dy = ep.y - sp.y;

if (dx > 0 && dy > 0) {

//srcImg.copyTo(image);

image = srcImg.clone();

Rect box(sp.x, sp.y, dx, dy);

rectangle(image, box, Scalar(0, 0, 255), 2, 8, 0);

imshow("鼠标绘制", image);

}

}

}

}

//鼠标操作与响应

void QuickDemo::mouseDrawing_Demo(Mat &image)

{

namedWindow("鼠标绘制", WINDOW_AUTOSIZE);

setMouseCallback("鼠标绘制", onDraw, (void *)(&image));

imshow("鼠标绘制", image);

}

//图像像素类型转换与归一化

void QuickDemo::norm_Demo(Mat &image)

{

Mat dst;

cout << image.type() << endl;

//CV_8UC3 转换为 CV_32FC3

image.convertTo(image, CV_32F);

cout << image.type() << endl;

normalize(image, dst, 1.0, 0.0, NORM_MINMAX);

cout << dst.type() << endl;

imshow("图像像素归一化", dst);

}

//图像放缩与插值

void QuickDemo::resize_Demo(Mat &image)

{

Mat zoomSmall, zoomLarge;

int w = image.cols;

int h = image.rows;

resize(image, zoomSmall, Size(w / 2, h / 2), 0, 0, INTER_LINEAR);

imshow("zoomSmall", zoomSmall);

resize(image, zoomLarge, Size(cvRound(w * 1.5), cvRound(h * 1.5)), 0, 0, INTER_LINEAR);

imshow("zoomLarge", zoomLarge);

}

//图像翻转

void QuickDemo::flip_Demo(Mat &image)

{

Mat dst;

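flip(image, dst, 0);// flip vertically (around the x axis), like a reflection in water

//flip(image, dst, 1);// flip horizontally (around the y axis), like a mirror image

//flip(image, dst, -1);// flip around both axes, equivalent to a 180° rotation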

imshow("图像翻转", dst);

}

//图像旋转

void QuickDemo::rotate_Demo(Mat &image)

{

Mat dst, M;

int w = image.cols;

int h = image.rows;

M = getRotationMatrix2D(Point(w / 2, h / 2), 60, 2);

double cos = abs(M.at<double>(0, 0));

double sin = abs(M.at<double>(0, 1));

int nw = cos * w + sin * h;

int nh = sin * w + cos * h;

M.at<double>(0, 2) += nw / 2 - w / 2;

M.at<double>(1, 2) += nh / 2 - h / 2;

warpAffine(image, dst, M, Size(nw, nh), INTER_LINEAR, 0, Scalar(255, 255, 0));

imshow("图像旋转", dst);

}
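The canvas size used in `rotate_Demo` comes from projecting the image's width and height onto the rotated axes. The sketch below computes the same quantity directly from an angle and a scale instead of reading it out of the rotation matrix; `RotatedSize` and `rotatedBounds` are hypothetical names used only here, and the result is rounded to the nearest integer.

```cpp
#include <cmath>

// Hypothetical helper type for this illustration.
struct RotatedSize {
    int width;
    int height;
};

// Size of the axis-aligned box that contains a w x h image after rotating it
// by angleDeg degrees and scaling it by scale.
RotatedSize rotatedBounds(int w, int h, double angleDeg, double scale) {
    const double kPi = 3.14159265358979323846;
    const double rad = angleDeg * kPi / 180.0;
    const double c = std::abs(scale * std::cos(rad));
    const double s = std::abs(scale * std::sin(rad));
    RotatedSize out;
    out.width = static_cast<int>(std::lround(c * w + s * h));
    out.height = static_cast<int>(std::lround(s * w + c * h));
    return out;
}
```

For a 400 x 300 image rotated by 60 degrees with scale 2, this gives a canvas of roughly 920 x 993 pixels, since the scale is folded into both the cosine and sine terms.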

// Reading frames from a camera or a video file

void QuickDemo::video_Demo(Mat &image)

{

VideoCapture capture(0);

//VideoCapture capture("./Image/sample.mp4");

Mat frame;

while (true) {

capture.read(frame);

if (frame.empty()) {

cout << "frame empty" << endl;

break;

}

flip(frame, frame, 1);// mirror the frame horizontally

imshow("摄像头实时监控", frame);

//TODO:do something ...

//mouseDrawing_Demo(frame);// capture a region of the video frame

//colorSpace_Demo(frame);//HSV GRAY

int c = waitKey(10);

if (c == 27) {

break;

}

}

// Release the camera

capture.release();

}

// Reading a video file and saving it with VideoWriter

void QuickDemo::video2_Demo(Mat &image)

{

//VideoCapture capture(0);

VideoCapture capture("./Image/lane.avi");

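int frame_width = static_cast<int>(capture.get(CAP_PROP_FRAME_WIDTH));// frame width

int frame_height = static_cast<int>(capture.get(CAP_PROP_FRAME_HEIGHT));// frame height

int count = static_cast<int>(capture.get(CAP_PROP_FRAME_COUNT));// total number of frames

double fps = capture.get(CAP_PROP_FPS);// frames per second

int fourcc = static_cast<int>(capture.get(CAP_PROP_FOURCC));// codec FOURCC code (VideoWriter expects an int)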

cout << "frame width:" << frame_width << endl;

cout << "frame height:" << frame_height << endl;

cout << "frames sum:" << count << endl;

cout << "FPS:" << fps << endl;

cout << "frame fourcc:" << fourcc << endl;

VideoWriter writer("./video/lane_save.avi", fourcc, fps, Size(frame_width, frame_height), true);

Mat frame;

while (true) {

capture.read(frame);

if (frame.empty()) {

cout << "frame empty" << endl;

break;

}

//flip(frame, frame, 1);// mirror the frame horizontally

imshow("摄像头实时监控", frame);

writer.write(frame);

//TODO:do something ...

//mouseDrawing_Demo(frame);// capture a region of the video frame

//colorSpace_Demo(frame);//HSV GRAY

int c = waitKey(10);

if (c == 27) {

break;

}

}

// Release the capture and the writer

capture.release();

writer.release();

}
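The value read from `CAP_PROP_FOURCC` prints as a large number, which is hard to interpret. A FOURCC code packs four ASCII characters into one integer, least significant byte first, so it can be unpacked for logging. The helpers below are a small sketch with hypothetical names (`fourccToString`, `makeFourcc`); they assume plain ASCII codec tags.

```cpp
#include <string>

// Unpack a FOURCC code into its four characters, least significant byte first.
std::string fourccToString(int fourcc) {
    std::string s(4, ' ');
    for (int i = 0; i < 4; ++i) {
        s[i] = static_cast<char>((fourcc >> (8 * i)) & 0xFF);
    }
    return s;
}

// Pack four ASCII characters into a FOURCC code (inverse of fourccToString).
int makeFourcc(char c1, char c2, char c3, char c4) {
    return (c1 & 0xFF) | ((c2 & 0xFF) << 8) | ((c3 & 0xFF) << 16) | ((c4 & 0xFF) << 24);
}
```

For example, the tag `MJPG` corresponds to the integer 1196444237, and unpacking that integer gives back the string `"MJPG"`.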

// Video capture: pulling an RTMP stream

void QuickDemo::video3_Demo(Mat &image)

{

//VideoCapture capture(0);

VideoCapture vcap;

Mat frame;

string videoStreamAddress = "rtmp://192.168.254.104:1935/live/live";

if (!vcap.open(videoStreamAddress)) {

cout << "Error opening video stream or file" << endl;

return;

}

while (true) {

vcap.read(frame);

if (frame.empty()) {

cout << "frame empty" << endl;

break;

}

flip(frame, frame, 1);// mirror the frame horizontally

imshow("RTMP", frame);

int c = waitKey(10);

if (c == 27) {

break;

}

}

// Release the stream

vcap.release();

}

// Image histogram

void QuickDemo::histogram_Demo(Mat &image)

{

// Split the image into its three channels (B, G, R)

vector<Mat> bgr_plane;

split(image, bgr_plane);

// Histogram parameters

const int channels[1] = { 0 };

const int bins[1] = { 256 };

float hranges[2] = { 0,256 };// the upper bound is exclusive, so 256 is needed to count the value 255

const float * ranges[1] = { hranges };

Mat b_hist;

Mat g_hist;

Mat r_hist;

// Compute the histograms of the blue, green, and red channels

calcHist(&bgr_plane[0], 1, channels, Mat(), b_hist, 1, bins, ranges);

calcHist(&bgr_plane[1], 1, channels, Mat(), g_hist, 1, bins, ranges);

calcHist(&bgr_plane[2], 1, channels, Mat(), r_hist, 1, bins, ranges);

// Canvas for drawing the histogram

int hist_w = 512;

int hist_h = 400;

int bin_w = cvRound((double)hist_w / bins[0]);

Mat histImage = Mat::zeros(Size(hist_w, hist_h), CV_8UC3);

// Normalize the histogram counts to the canvas height

normalize(b_hist, b_hist, 0, histImage.rows, NORM_MINMAX, -1, Mat());

normalize(g_hist, g_hist, 0, histImage.rows, NORM_MINMAX, -1, Mat());

normalize(r_hist, r_hist, 0, histImage.rows, NORM_MINMAX, -1, Mat());

// Draw the histogram curves

for (int i = 1; i < bins[0]; i++) {

line(histImage, Point(bin_w*(i - 1), hist_h - cvRound(b_hist.at<float>(i - 1))),

Point(bin_w*(i), hist_h - cvRound(b_hist.at<float>(i))), Scalar(255, 0, 0), 2, 8, 0);

line(histImage, Point(bin_w*(i - 1), hist_h - cvRound(g_hist.at<float>(i - 1))),

Point(bin_w*(i), hist_h - cvRound(g_hist.at<float>(i))), Scalar(0, 255, 0), 2, 8, 0);

line(histImage, Point(bin_w*(i - 1), hist_h - cvRound(r_hist.at<float>(i - 1))),

Point(bin_w*(i), hist_h - cvRound(r_hist.at<float>(i))), Scalar(0, 0, 255), 2, 8, 0);

}

// Show the histogram

namedWindow("Histogram Demo", WINDOW_AUTOSIZE);

imshow("Histogram Demo", histImage);

}
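For uniform histograms, `calcHist` treats the upper boundary of a range as exclusive, so a range that ends at 255 leaves pixels with the value 255 uncounted. The sketch below shows the same binning rule on a plain byte vector. The `histogram` function is a hypothetical helper written for this note and only approximates how a uniform histogram assigns bins.

```cpp
#include <cstdint>
#include <vector>

// Count how many samples fall into each of `bins` equal-width bins over the
// half-open range [lo, hi). Samples outside that range are ignored.
std::vector<int> histogram(const std::vector<std::uint8_t> &pixels,
                           int bins, float lo, float hi) {
    std::vector<int> hist(bins, 0);
    const float binWidth = (hi - lo) / bins;
    for (std::uint8_t p : pixels) {
        const float v = static_cast<float>(p);
        if (v < lo || v >= hi) {
            continue;  // the upper bound is exclusive
        }
        int idx = static_cast<int>((v - lo) / binWidth);
        if (idx >= bins) {
            idx = bins - 1;  // guard against rounding at the top edge
        }
        ++hist[idx];
    }
    return hist;
}
```

With the pixels `{0, 0, 128, 255}`, a range of `[0, 256)` counts all four samples, while a range of `[0, 255)` counts only three because the value 255 falls outside it.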

// 2D histogram

void QuickDemo::histogram2d_Demo(Mat &image)

{

// 2D histogram over hue and saturation

Mat hsv, hs_hist;

cvtColor(image, hsv, COLOR_BGR2HSV);// convert to HSV; assumes a BGR image as returned by imread

int hbins = 30, sbins = 32;// bin widths: 180/30 = 6 for H, 256/32 = 8 for S

int hist_bins[] = { hbins,sbins };

float h_range[] = { 0,180 };// H range

float s_range[] = { 0,256 };// S range

const float* hs_ranges[] = { h_range,s_range };// array of pointers to the per-dimension ranges

int hs_channels[] = { 0,1 };// channel indices (H and S)

calcHist(&hsv, 1, hs_channels, Mat(), hs_hist, 2, hist_bins, hs_ranges);// compute the 2D histogram

double maxVal = 0;

minMaxLoc(hs_hist, 0, &maxVal, 0, 0);

int scale = 10;

// Canvas for the 2D histogram

Mat hist2d_image = Mat::zeros(sbins*scale, hbins*scale, CV_8UC3);

for (int h = 0; h < hbins; h++) {

for (int s = 0; s < sbins; s++) {

float binVal = hs_hist.at<float>(h, s);

// Scale the bin count to 0-255 relative to the largest bin

int intensity = cvRound(binVal * 255 / maxVal);

// Draw one filled square per bin

rectangle(hist2d_image, Point(h*scale, s*scale),

Point((h + 1)*scale - 1, (s + 1)*scale - 1),

Scalar::all(intensity),

-1);

}

}

// Apply a color map

applyColorMap(hist2d_image, hist2d_image, COLORMAP_JET);

imshow("H-S Histogram", hist2d_image);

imwrite("./Image/hist_2d.png", hist2d_image);

}

// Histogram equalization

void QuickDemo::histogramEq_Demo(Mat &image)

{

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);// assumes a BGR image as returned by imread

imshow("灰度图像", gray);

Mat dst;

equalizeHist(gray, dst);

imshow("直方图均衡化演示", dst);

// Histogram equalization for a color image: equalize only the V channel in HSV

//Mat hsv;

//cvtColor(image, hsv, COLOR_BGR2HSV);

//vector<Mat> hsvVec;

//split(hsv, hsvVec);

//equalizeHist(hsvVec[2], hsvVec[2]);

//Mat hsvTmp;

//merge(hsvVec, hsvTmp);

//Mat dstColor;

//cvtColor(hsvTmp, dstColor, COLOR_HSV2BGR);

//imshow("直方图均衡化演示", dstColor);

}
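To make the idea behind histogram equalization concrete, here is a textbook sketch for 8-bit samples: build the histogram, accumulate it into a cumulative distribution, and use the rescaled cumulative counts as a lookup table. The `equalize` function is a hypothetical helper for this note. It follows the common formula `255 * (cdf - cdfMin) / (total - cdfMin)` and is not guaranteed to match `equalizeHist` bit for bit.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Textbook histogram equalization on a flat list of 8-bit samples.
std::vector<std::uint8_t> equalize(const std::vector<std::uint8_t> &src) {
    std::vector<std::size_t> hist(256, 0);
    for (std::uint8_t p : src) {
        ++hist[p];
    }

    // cdfMin is the cumulative count at the darkest value that occurs.
    std::size_t cdfMin = 0;
    for (std::size_t count : hist) {
        if (count != 0) {
            cdfMin = count;
            break;
        }
    }
    const std::size_t total = src.size();
    if (total == cdfMin) {
        return src;  // empty or constant input: nothing to spread out
    }

    // Lookup table: rescale the cumulative counts to the range 0..255.
    std::vector<std::uint8_t> lut(256, 0);
    std::size_t cdf = 0;
    for (int v = 0; v < 256; ++v) {
        cdf += hist[v];
        if (cdf < cdfMin) {
            continue;  // values darker than the darkest sample never occur
        }
        const double scaled = 255.0 * (cdf - cdfMin) / (total - cdfMin);
        lut[v] = static_cast<std::uint8_t>(scaled + 0.5);
    }

    std::vector<std::uint8_t> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        dst[i] = lut[src[i]];
    }
    return dst;
}
```

Applied to the samples `{50, 50, 100, 150}`, the narrow input range is stretched to `{0, 0, 128, 255}`, which is the contrast-spreading effect the demo shows on a grayscale image.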

// Image convolution (box blur)

void QuickDemo::blur_Demo(Mat &image)

{

Mat dst;

blur(image, dst, Size(13, 13), Point(-1, -1));

imshow("图像模糊", dst);

}

// Gaussian blur

void QuickDemo::gaussianBlur_Demo(Mat &image)

{

Mat dst;

GaussianBlur(image, dst, Size(7, 7), 15);

imshow("高斯模糊", dst);

}

// Bilateral filter (edge-preserving blur)

void QuickDemo::bifilter_Demo(Mat &image)

{

Mat dst;

bilateralFilter(image, dst, 0, 100, 10);

imshow("高斯双边模糊", dst);

imwrite("C:\\Users\\fujiahuang\\Desktop\\b.jpg", dst);

}

/*

Real-time face detection

void QuickDemo::faceDetection_Demo(Mat &image)

{

string root_dir = "D://x86//opencv4.6//opencv//sources//samples//dnn//face_detector//";

Net net = readNetFromTensorflow(root_dir + "opencv_face_detector_uint8.pb", root_dir + "opencv_face_detector.pbtxt");

VideoCapture capture(0);

//VideoCapture capture;

//string videoStreamAddress = "rtmp://192.168.254.104:1935/live/live";

//if (!capture.open(videoStreamAddress)) {

// cout << "Error opening video stream or file" << endl;

// return;

//}

Mat frame;

while (true) {

capture.read(frame);

if (frame.empty()) {

cout << "frame empty" << endl;

break;

}

flip(frame, frame, 1);// mirror the frame horizontally

Mat blob = blobFromImage(frame, 1.0, Size(300, 300), Scalar(104, 177, 123), false, false);

net.setInput(blob);//NCHW

Mat probs = net.forward();

Mat detectionMat(probs.size[2], probs.size[3], CV_32F, probs.ptr<float>());

// Parse the detections

int num = 0;

float confidence = 0.0;

float fTemp = 0.0;

for (int i = 0; i < detectionMat.rows; i++) {

confidence = detectionMat.at<float>(i, 2);

if (confidence > 0.5) {

fTemp = confidence;

int x1 = static_cast<int>(detectionMat.at<float>(i, 3)*frame.cols);

int y1 = static_cast<int>(detectionMat.at<float>(i, 4)*frame.rows);

int x2 = static_cast<int>(detectionMat.at<float>(i, 5)*frame.cols);

int y2 = static_cast<int>(detectionMat.at<float>(i, 6)*frame.rows);

Rect box(x1, y1, x2 - x1, y2 - y1);

rectangle(frame, box, Scalar(0, 0, 255), 2, 8, 0);

num++;

}

}

//Mat dst;

//bilateralFilter(frame, dst, 0, 100, 10);// bilateral filter

putText(frame, "NO." + to_string(num) + " conf:" + to_string(fTemp), Point(30, 50), FONT_HERSHEY_TRIPLEX, 1.3, Scalar(26, 28, 124), 4);

imshow("人脸实时检测", frame);

int c = waitKey(1);

if (c == 27) {

break;

}

}

}

Face detection on a still image

void QuickDemo::faceDetection_Demo(Mat &image)

{

string root_dir = "D:/opencv4.5.0/opencv/sources/samples/dnn/face_detector/";

Net net = readNetFromTensorflow(root_dir + "opencv_face_detector_uint8.pb", root_dir + "opencv_face_detector.pbtxt");

Mat frame;

frame = image.clone();

while (true) {

frame = image.clone();

//flip(frame, frame, 1);// mirror the frame horizontally

Mat blob = blobFromImage(frame, 1.0, Size(300, 300), Scalar(104, 177, 123), false, false);

net.setInput(blob);//NCHW

Mat probs = net.forward();

Mat detectionMat(probs.size[2], probs.size[3], CV_32F, probs.ptr<float>());

// Parse the detections

int num = 0;

for (int i = 0; i < detectionMat.rows; i++) {

float confidence = detectionMat.at<float>(i, 2);

if (confidence > 0.5) {

int x1 = static_cast<int>(detectionMat.at<float>(i, 3)*frame.cols);

int y1 = static_cast<int>(detectionMat.at<float>(i, 4)*frame.rows);

int x2 = static_cast<int>(detectionMat.at<float>(i, 5)*frame.cols);

int y2 = static_cast<int>(detectionMat.at<float>(i, 6)*frame.rows);

Rect box(x1, y1, x2 - x1, y2 - y1);

rectangle(frame, box, Scalar(0, 0, 255), 2, 8, 0);

num++;

}

}

putText(frame, "NO." + to_string(num) + " pcs", Point(30, 50), FONT_HERSHEY_TRIPLEX, 1.3, Scalar(124, 28, 26), 2);

imshow("人脸实时检测", frame);

int c = waitKey(1000);

if (c == 27) {

break;

}

}

}*/

// Despite its name, this computes the element-wise product re[i] = a[i] * b[i]

// using AVX intrinsics (requires <immintrin.h>); clock_ is assumed to be a timer helper defined elsewhere

void QuickDemo::_array_sum_avx(double *a, double *b, double *re, int ssz)

{

clock_ t;

int i = 0;

// Process four doubles per iteration with unaligned loads and stores

for (; i + 4 <= ssz; i += 4)

{

__m256d m1 = _mm256_loadu_pd(a + i);

__m256d m2 = _mm256_loadu_pd(b + i);

_mm256_storeu_pd(re + i, _mm256_mul_pd(m1, m2));

}

// Handle the remaining elements when ssz is not a multiple of 4

for (; i < ssz; ++i)

{

re[i] = a[i] * b[i];

}

t.show("avx");

}

void QuickDemo::_array_sum(double *a, double *b, double *re, int ssz)

{

clock_ t;

//for (int k = 0; k < 4; ++ k)

//{

for (int i = 0; i < ssz; ++i)

{

re[i] = a[i] * b[i];

}

t.show("normal cpu cost");

}

This article is from cnblogs (博客园), by InsiApple. When reposting, please cite the original link: https://www.cnblogs.com/InsiApple/p/17049616.html
