Good morning, ladies and gentlemen. My name is Houman Zarrinkoub. I am the product manager of all the wireless products at MathWorks, including 5G, LTE, WLAN, and satellite communications, and it is my pleasure to be with you today in this MathWorks webinar entitled Deep Learning for Wireless Communication.

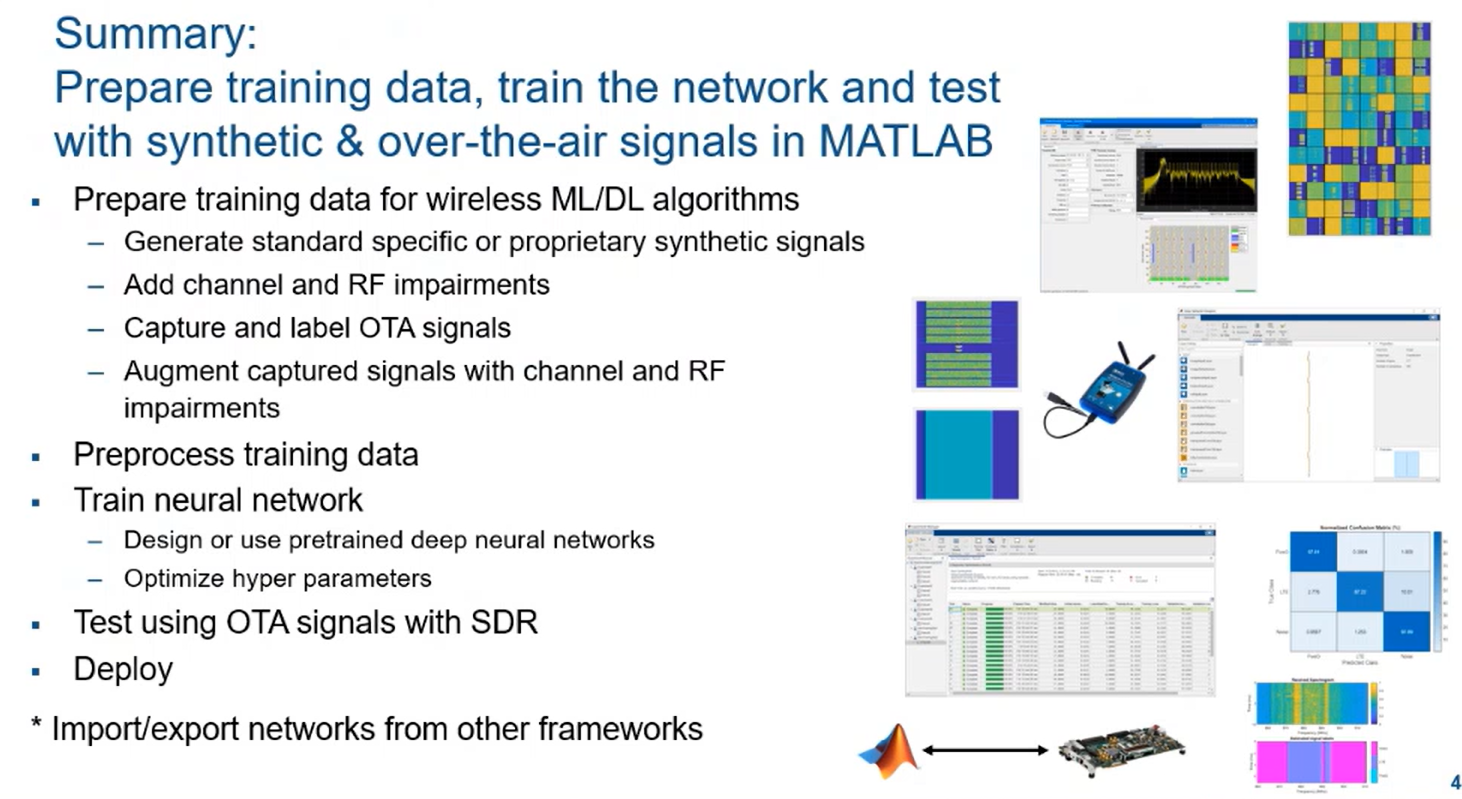

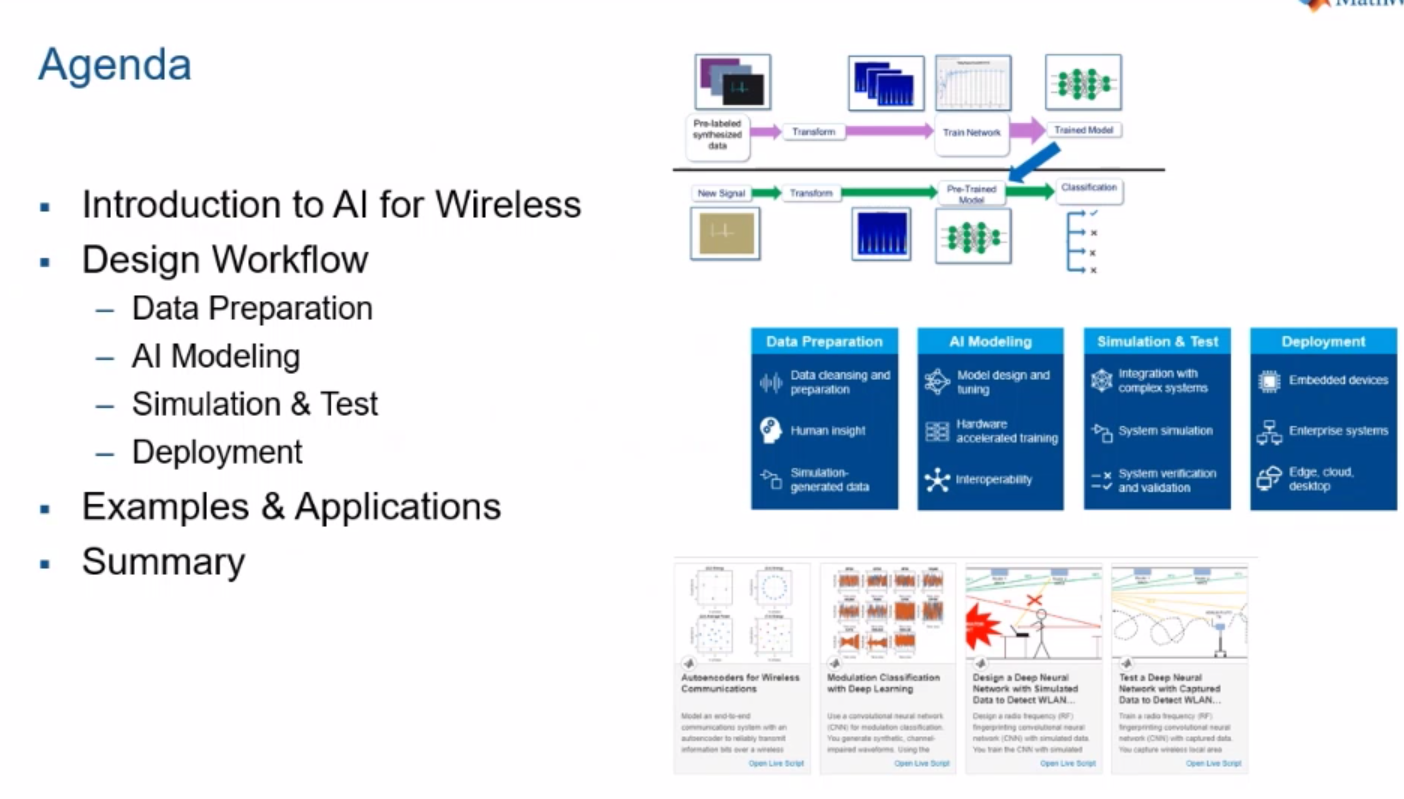

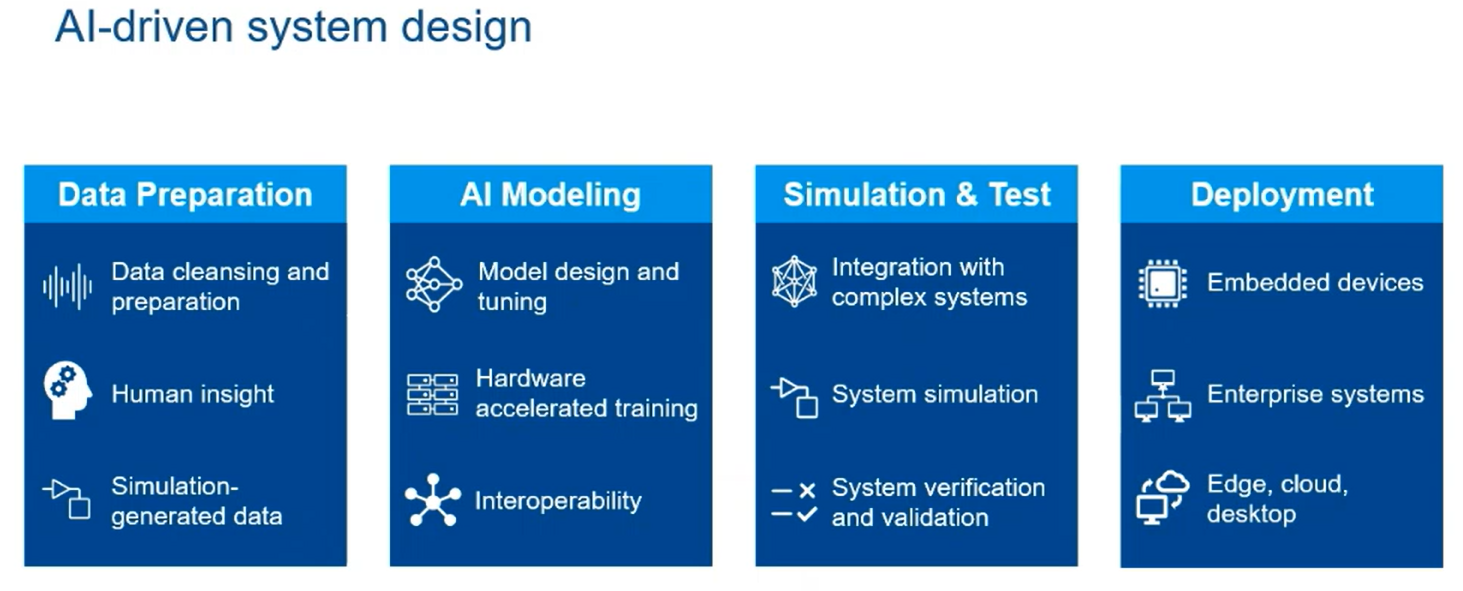

Let's go over the agenda of today's talk. Following some introductory remarks on AI for wireless and its role these days, I'm going to take you through a design workflow involved in applying AI techniques to wireless. The workflow has essentially four components-- the preparation of data, the AI modeling, simulation and testing, and deployment. I'm going to use examples and a particular application to put that all into context, and I'm going to summarize by pointing you to more resources to learn from.

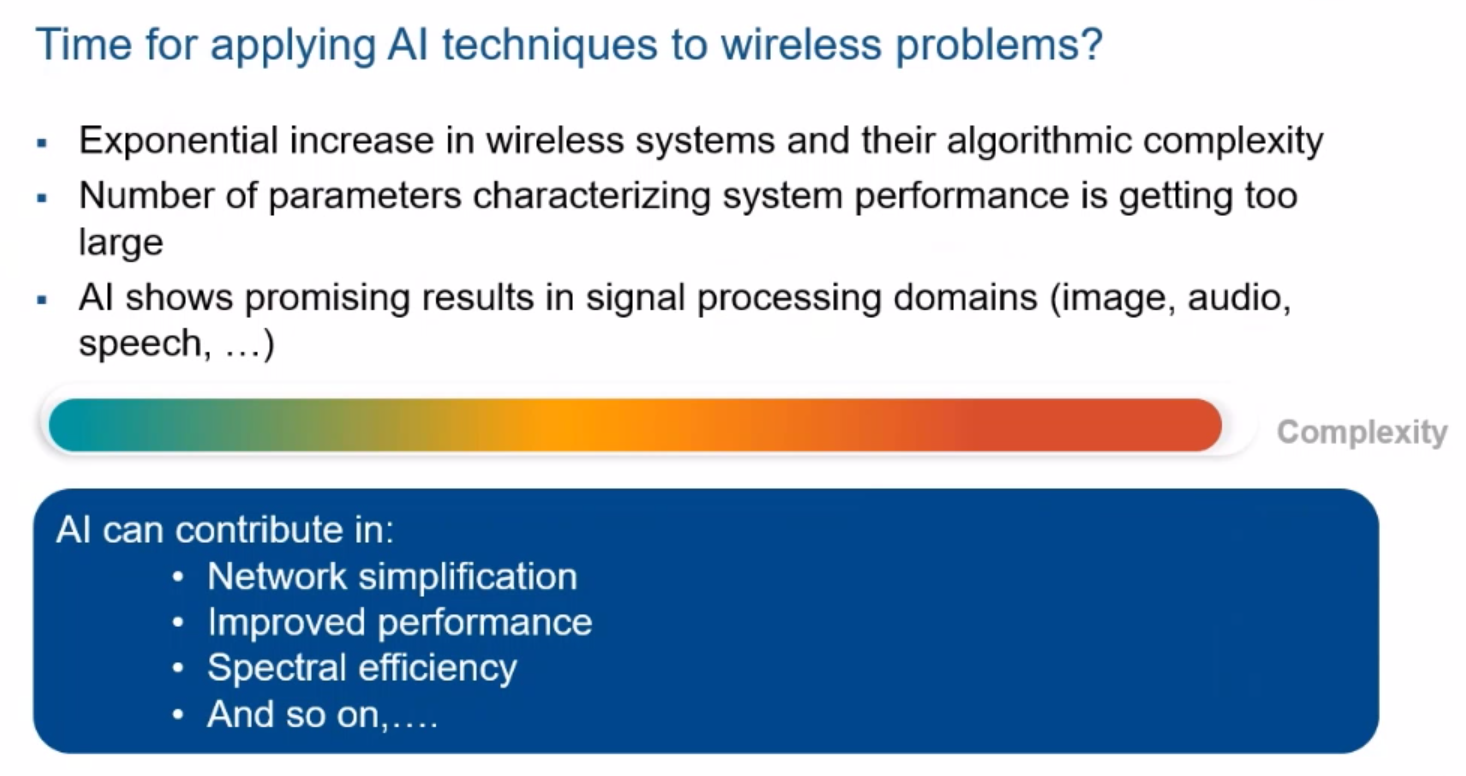

Now, is it time to apply AI techniques to wireless problems? The wireless systems and technologies today are becoming increasingly complex, as you can imagine. And when the number of parameters characterizing a system increases substantially, writing traditional algorithms that take all the parameters into account becomes an arduous task. Therefore, AI techniques that essentially learn from operation, and apply algorithms based on that learning, are emerging these days.

As you know, AI, or artificial intelligence, has been successfully applied to other areas of signal processing-- image recognition, audio and speech processing, automatic language processing, and so on. So some would say it is time for AI to contribute to solving wireless problems. That includes things like network simplification, network modeling and simulation, improved performance based on monitoring real data, spectral efficiency, and so on.

So what are the typical applications where our customers tell us they are using AI techniques in their solutions? We have been in contact with customers who are working on signal classification-- a typical AI application where you classify different signals, in this case modulation techniques, based on the signals you receive. Identifying friendly and unfriendly devices-- where the signal comes from. Even digital predistortion, a technique which is used to improve performance.

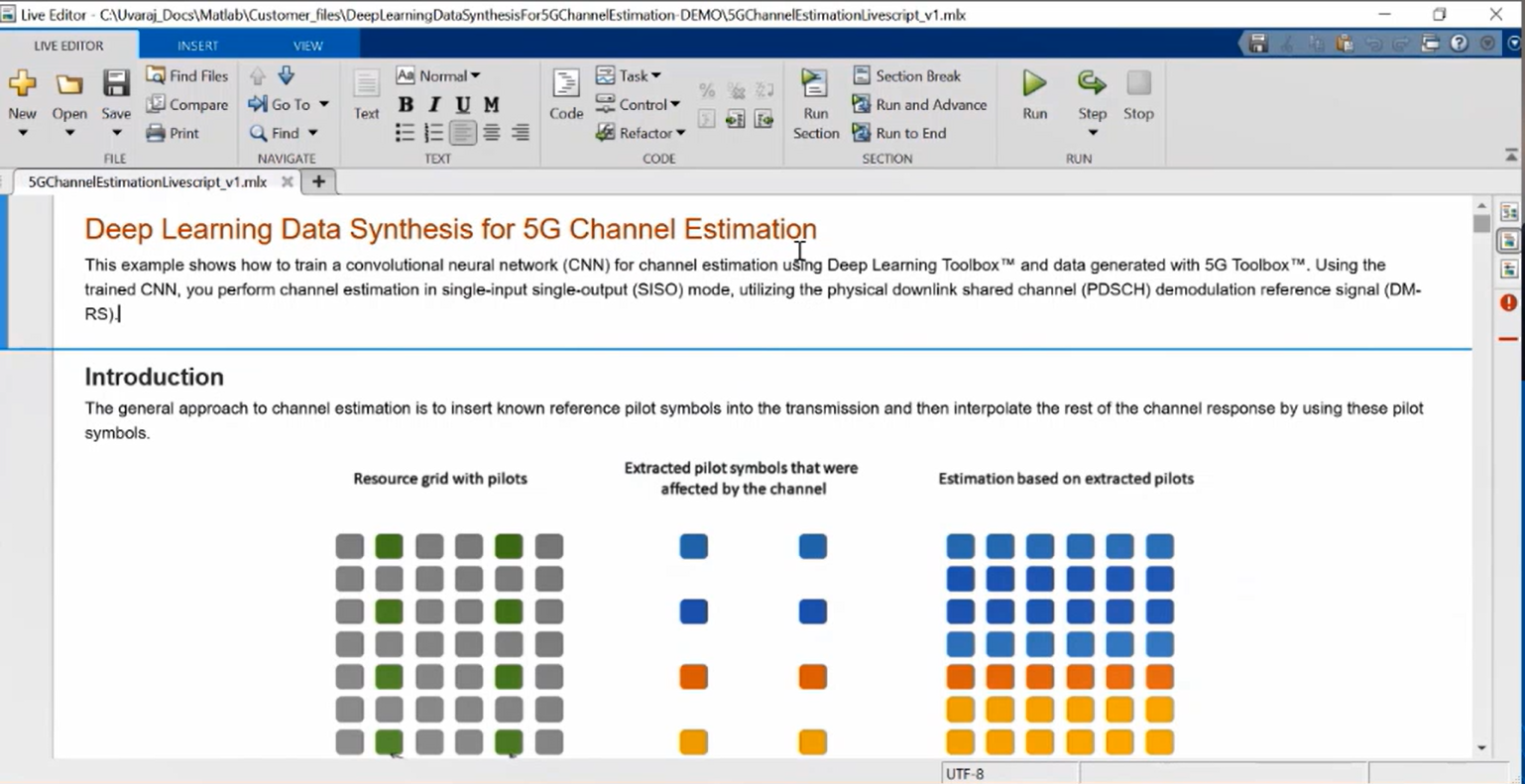

There are some AI techniques to capture the nonlinearities properly, devising better receiver algorithms to adapt to the changing nature of the channel, and so on. Even channel modeling and channel estimation and prediction, as well as automatic encoding and decoding. Beamforming, which is very highly leveraged in 5G and beyond. And wireless network optimization-- allocating the right resources to multiple users. These are typical applications we hear that AI techniques have been applied to.

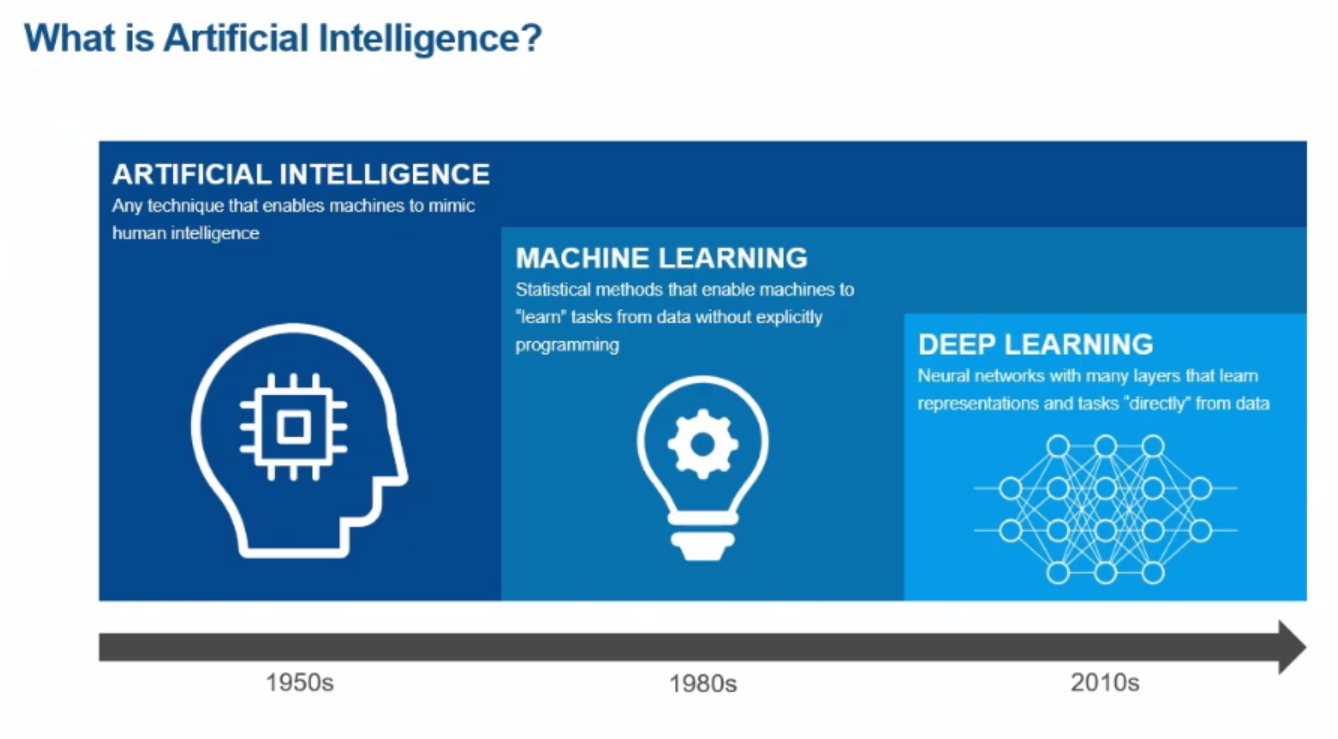

Let's look at some definitions. What is artificial intelligence for the purpose of this talk? Any technique that enables machines to learn and mimic human intelligence, that is AI. Historically, we started with machine learning techniques, which are statistical methods that essentially extract some features from physical measurements and apply pattern recognition techniques to classify or regress and extract information. Deep learning is newer, and it's essentially neural networks with many layers that combine the tasks of feature extraction and pattern learning, so they learn representations directly from data. And these have essentially progressed through time.

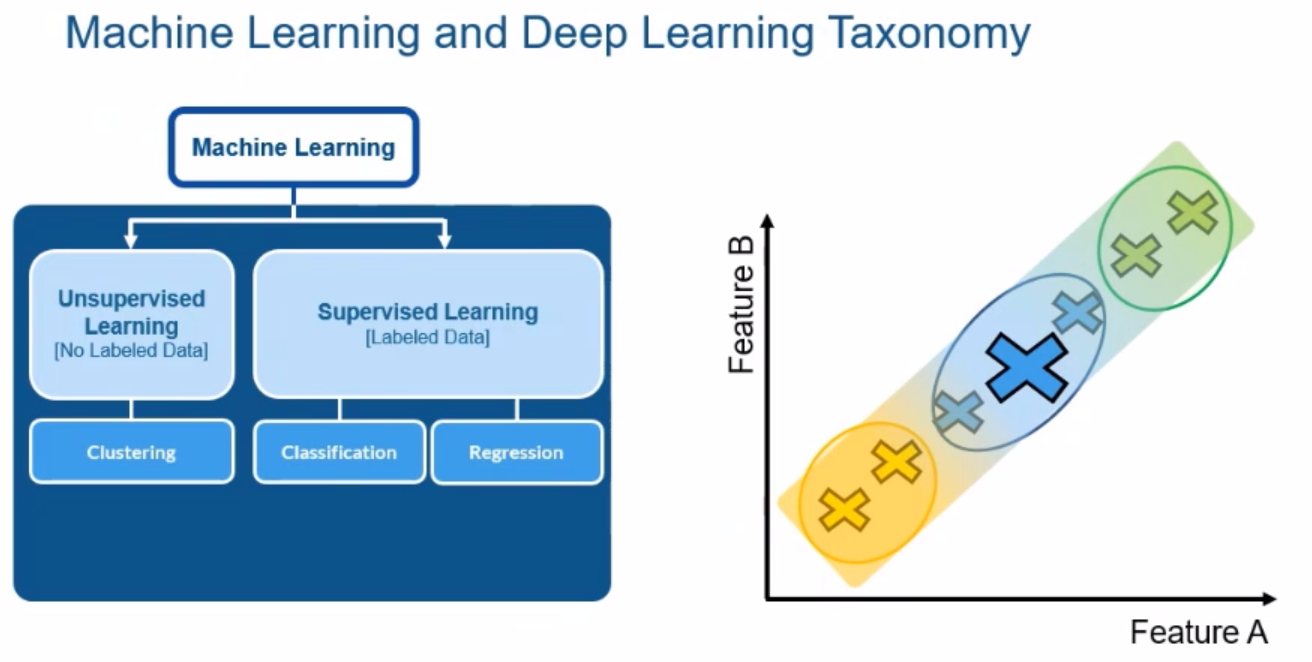

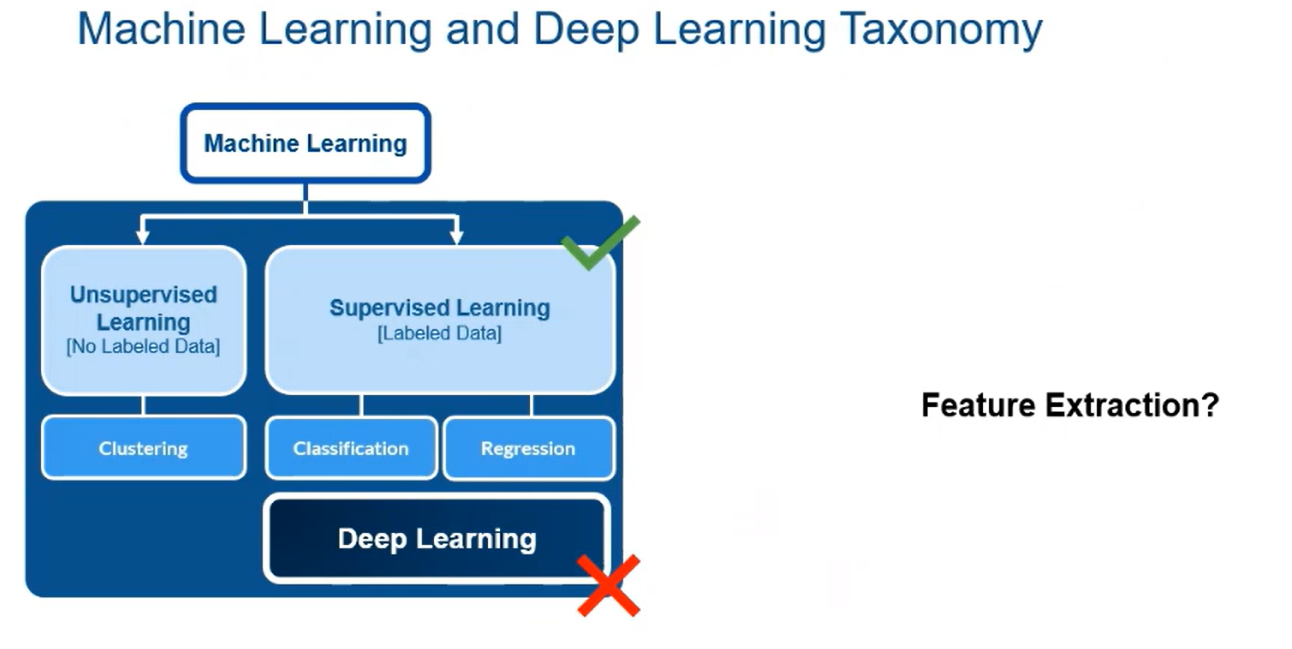

So let's look at the machine learning and deep learning taxonomy so we can get clear on that. In machine learning, you can have unsupervised learning or supervised learning. In unsupervised learning, you essentially don't label your data, meaning that you don't use human intelligence to supervise, saying that this class of signals is this and that class of signals is that, and then letting the AI models learn the different characteristics.

In unsupervised learning, you just specify a criterion, a cost function, a metric to optimize, and you let clustering and the machine go through all kinds of iterations to extract the patterns without human supervision. In supervised learning, by contrast, you label data. The intelligence of humans says that these are the signals of class A and these are the signals of class B, based on the features. And based on that, you either classify or find the regression, that is, find the rule that matches the noisy data.

Now, deep learning is a class or subclass of supervised learning and machine learning, and the distinction lies in whether you are doing feature extraction explicitly or not. If you are doing feature extraction explicitly-- meaning you have raw data, and you perform some preprocessing to extract features-- and you're applying labeling on features, that's called machine learning, supervised learning. But if you are not doing the feature extraction yourself, and you are letting the multilayer deep learning network do both feature extraction and training, that is essentially deep learning.

And MathWorks tools-- our toolboxes, MATLAB, and our add-on products-- essentially enable you to do wireless with AI better. We have lots of features and products to make the task of AI, machine learning, deep learning, and reinforcement learning easier and better. So what does AI-driven system design look like? From a high-level point of view, you have some data that you want to classify or regress, or from which you want to develop some AI-based rules. So first you need lots of training data. You need the data to be cleaned and prepared, so you do some preprocessing.

Again, if you do supervised learning, you need human insight or human intelligence to help you label, and you need as much data as possible for each class, so that all the possible ways noise and distortion can show up are modeled in your classification process. You need simulation-generated data. Then, after the data is prepared and labeled, you go through AI modeling. You model the layers and the learning mechanism, and you tune that learning and model in order to get better performance. And then, because these things take time, you want to accelerate the training by using techniques that reach the same performance much faster. And you worry about interoperability between your environment and the environment in which you want to implement.

Now, after you have trained your AI model, and the AI model contains the rules generated for classification, regression, and all the other applications, you want to actually see if it works properly. You want to simulate and test that the system you just trained works properly. So you want to integrate it within a complex system-- plug your AI-based classifier into a complex system, and so on.

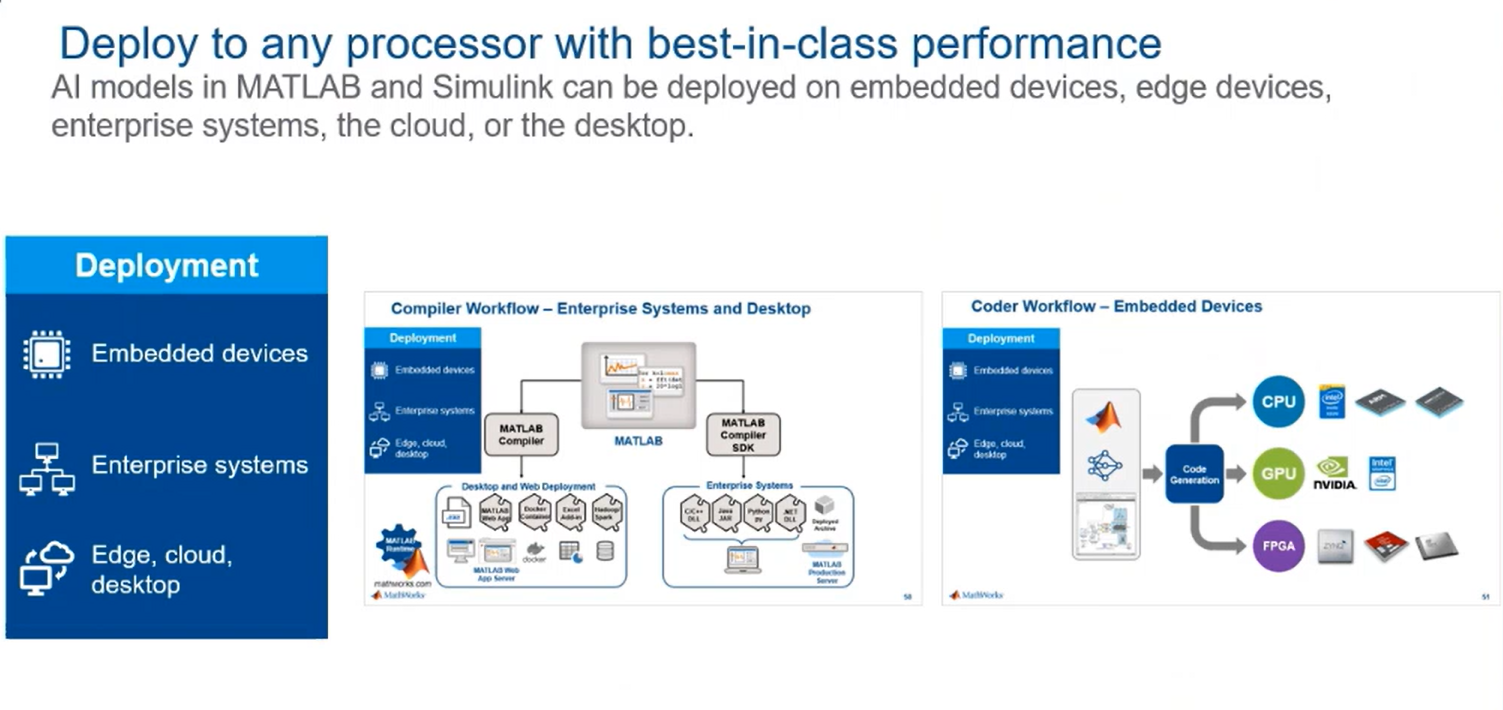

You want to simulate the entire system, look at the metrics across the entire system, and verify and validate that the system operates properly. And finally, when you're done with all three of these stages, you want to deploy it on hardware-- on embedded devices, or on enterprise systems like Azure or AWS or other systems in the cloud, or on edge and desktop. So that's the whole process in one snapshot.

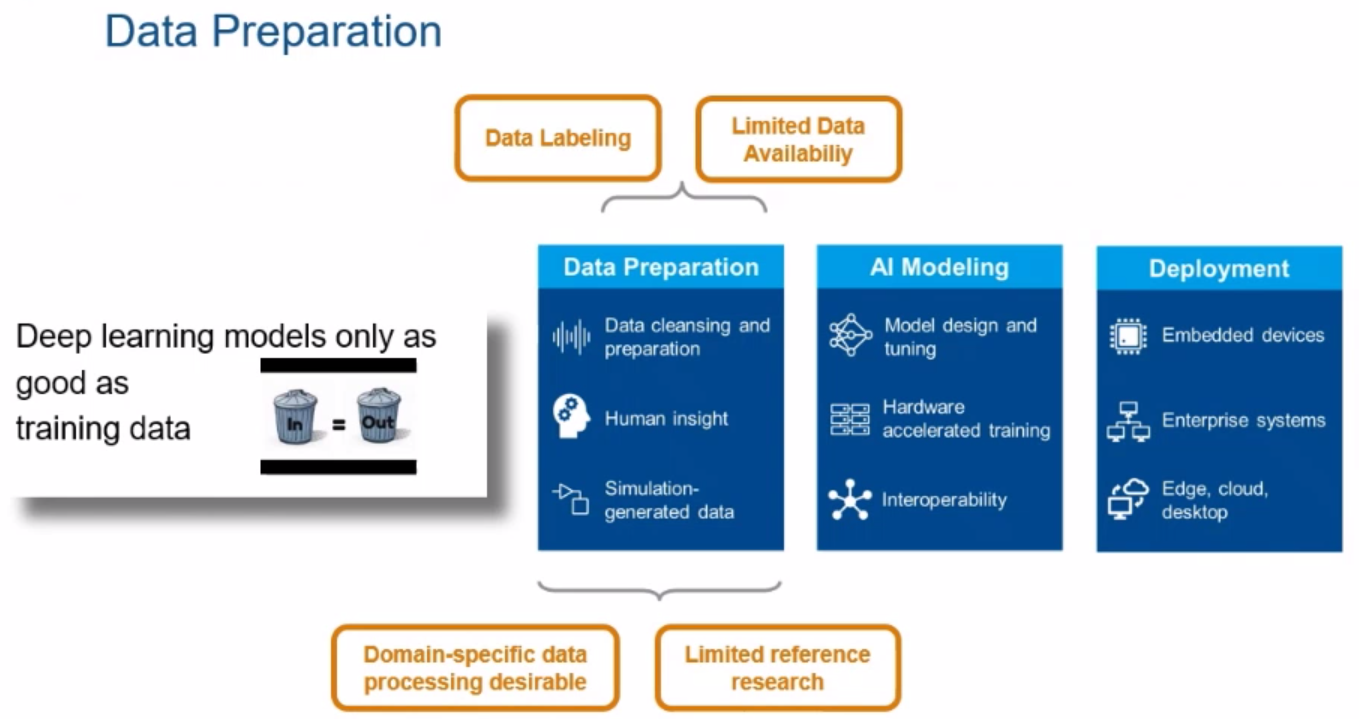

Let's look at each of them one by one. So deep learning models are only as good as their training data. That's a classical idiom in engineering lingo. If you have nonsense in, you have nonsense out. And essentially you have to prepare a lot of data-- representative data, but a huge amount of it, covering the space of each class that you are assigning to, so that your system becomes robust.

So you need to apply human intelligence to label and say all this class of data that I have here belongs to 5G, all this class belongs to Wi-Fi, all this class belongs to this device or that device. So you have to have lots of data with lots of possible distortion, noise, interference, and so on, and you have to say, this bucket of data is labeled as such. And if you have limited availability of real-world signals, you have to generate synthesized signals to augment your data.

Now, you also need to have domain-specific data processing. You can't just apply the same classifier across various domains. You have to do some preprocessing and postprocessing to achieve that. And there is not enough reference research done on all possible classes. So you are at the mercy of the training set that you develop.

As I mentioned, in order to create large amounts of data, which makes your AI model more robust, you have to augment your existing data and generate additional synthesized data. One example that I'm showing here is creating a lot of different 5G signals. This is a resource grid of a 5G system with CSI-RS reference signals, or pilots. So you need to create that, and you need to create other reference signals and other channels. So you create a lot of these possible signals that can be seen over time and frequency in 5G in order to create a classifier that identifies 5G.
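
One common way to augment an existing waveform is to add noise at several signal-to-noise ratios. The following is a minimal sketch in plain Python, not the MathWorks workflow shown in the talk; the helper names `add_awgn` and `augment`, the SNR values, and the complex tone standing in for a generated 5G waveform are all assumptions made for illustration.

```python
import cmath
import math
import random


def add_awgn(iq, snr_db, rng):
    """Return a noisy copy of complex samples at roughly the requested SNR."""
    signal_power = sum(abs(s) ** 2 for s in iq) / len(iq)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power / 2)  # noise is split between I and Q
    return [s + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)) for s in iq]


def augment(iq, snr_values_db, copies_per_snr, seed=0):
    """Create several independently noised copies of one waveform per SNR."""
    rng = random.Random(seed)
    return [
        (snr, add_awgn(iq, snr, rng))
        for snr in snr_values_db
        for _ in range(copies_per_snr)
    ]


# A unit-amplitude complex tone stands in for a generated waveform.
clean = [cmath.exp(2j * math.pi * 0.05 * n) for n in range(4096)]
augmented = augment(clean, snr_values_db=[0, 10, 20], copies_per_snr=2)
print(len(augmented), "augmented waveforms")
```

Each entry keeps its SNR next to the samples, so the impairment level can be stored with the label when the training set is assembled.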

And we have tools, as you can see here. Our example, the Generative Adversarial Network, GAN, for sound synthesis is one of the examples where we show how to generate a lot of synthesized signals, in this case for audio. But you can use the phased array and communications toolboxes to generate a lot of IQ samples for your wireless training.

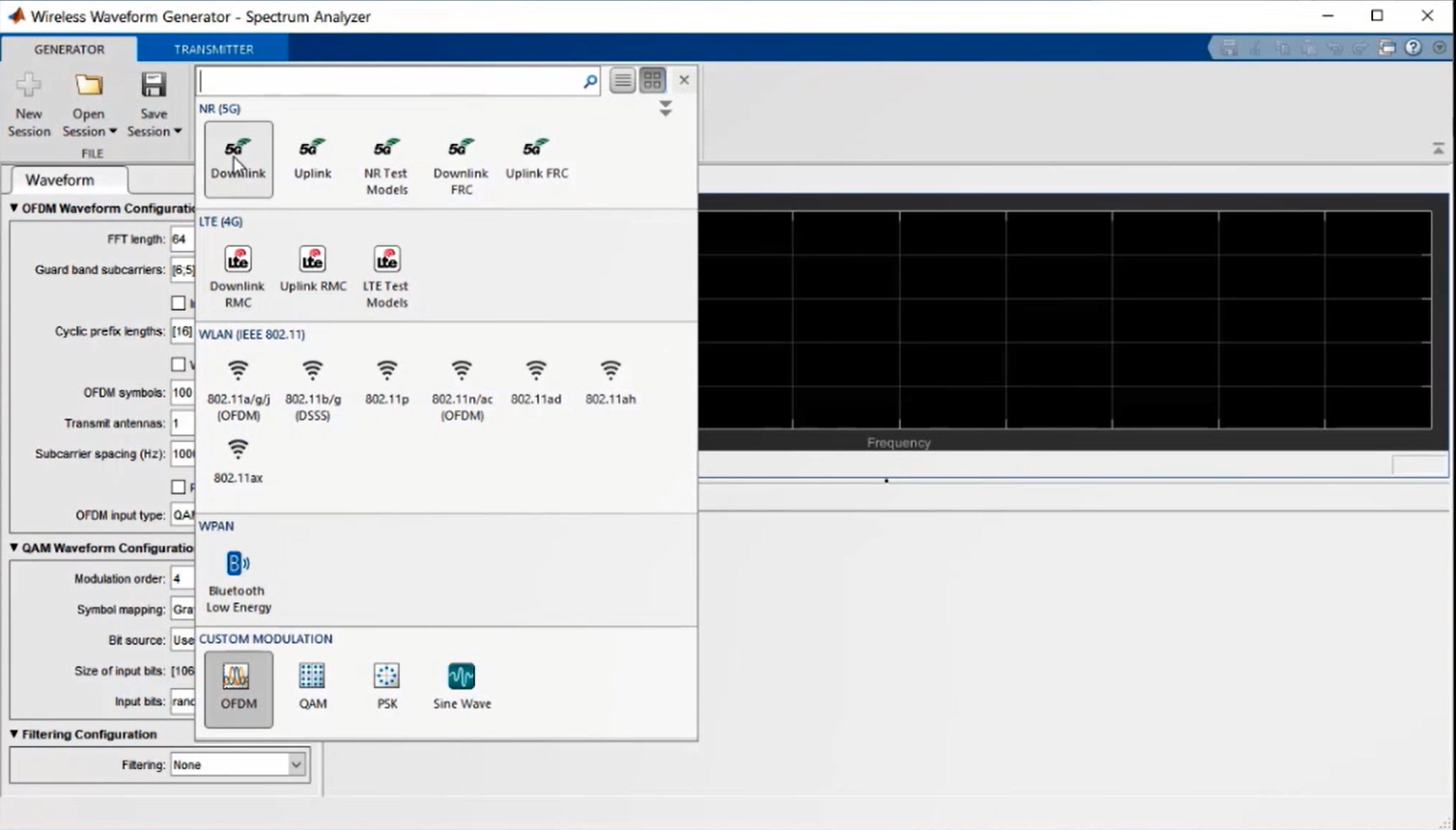

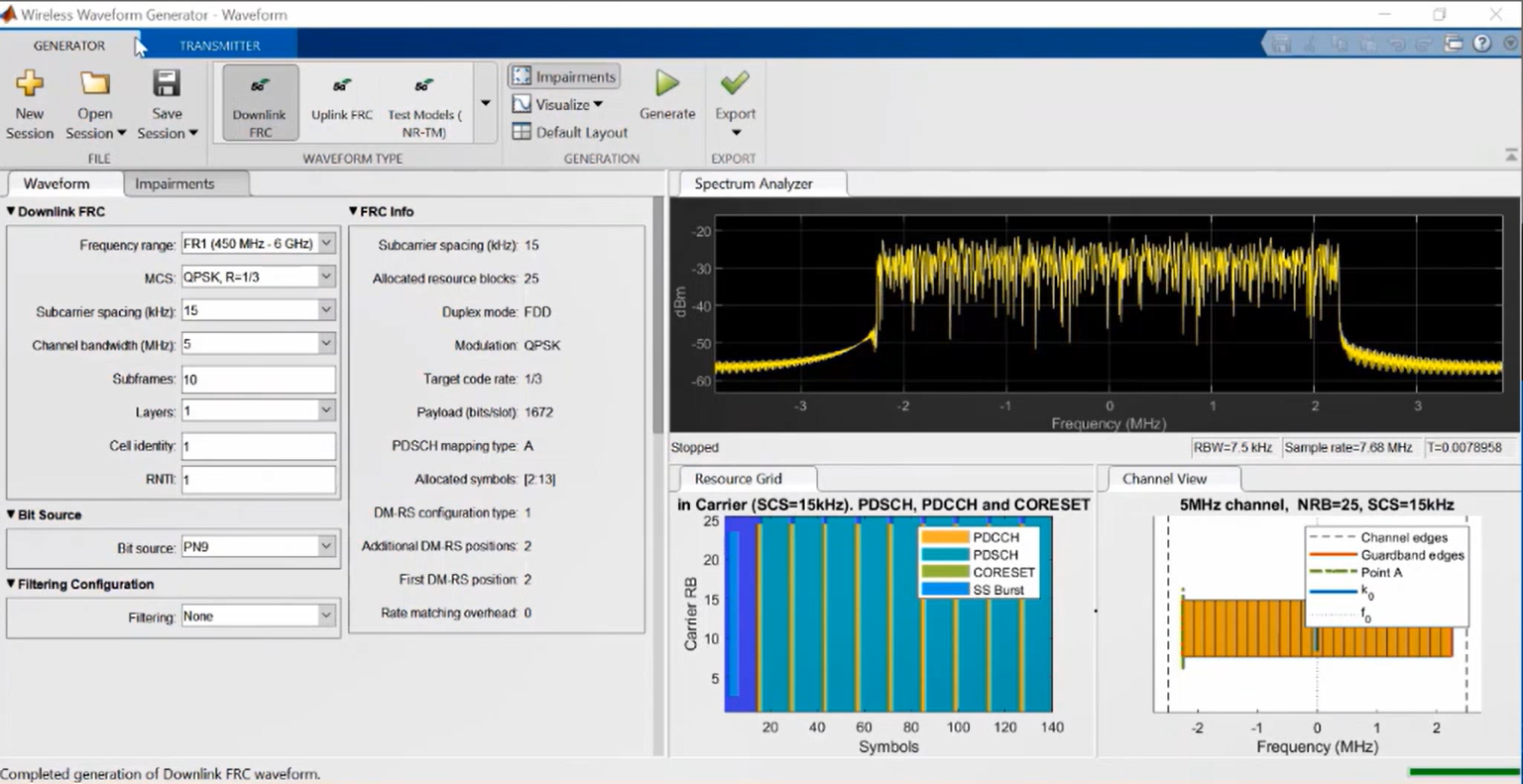

This is one way to do it. From R2018b up to now, we have been evolving this tool or app called the Wireless Waveform Generator app. With a click of a button we can create 5G, LTE, wireless LAN, and all kinds of custom signals, OFDM, QAM, and so on. All you have to do is go to MATLAB, go to Apps, find the Wireless Waveform Generator app, and pick one of those. In this case, I chose 5G downlink FRC, for example.

Assign some parameters, and click on Generate. You will see the time domain, the frequency domain, and the time-frequency resource grid, and the channel is created. And you can export all of that to the workspace as a variable. So you generate these signals, you generate a bunch of them, and you create a lot of data. And you can apply that, for example, to deep learning for channel estimation, an example that I'm going to show you at the end.

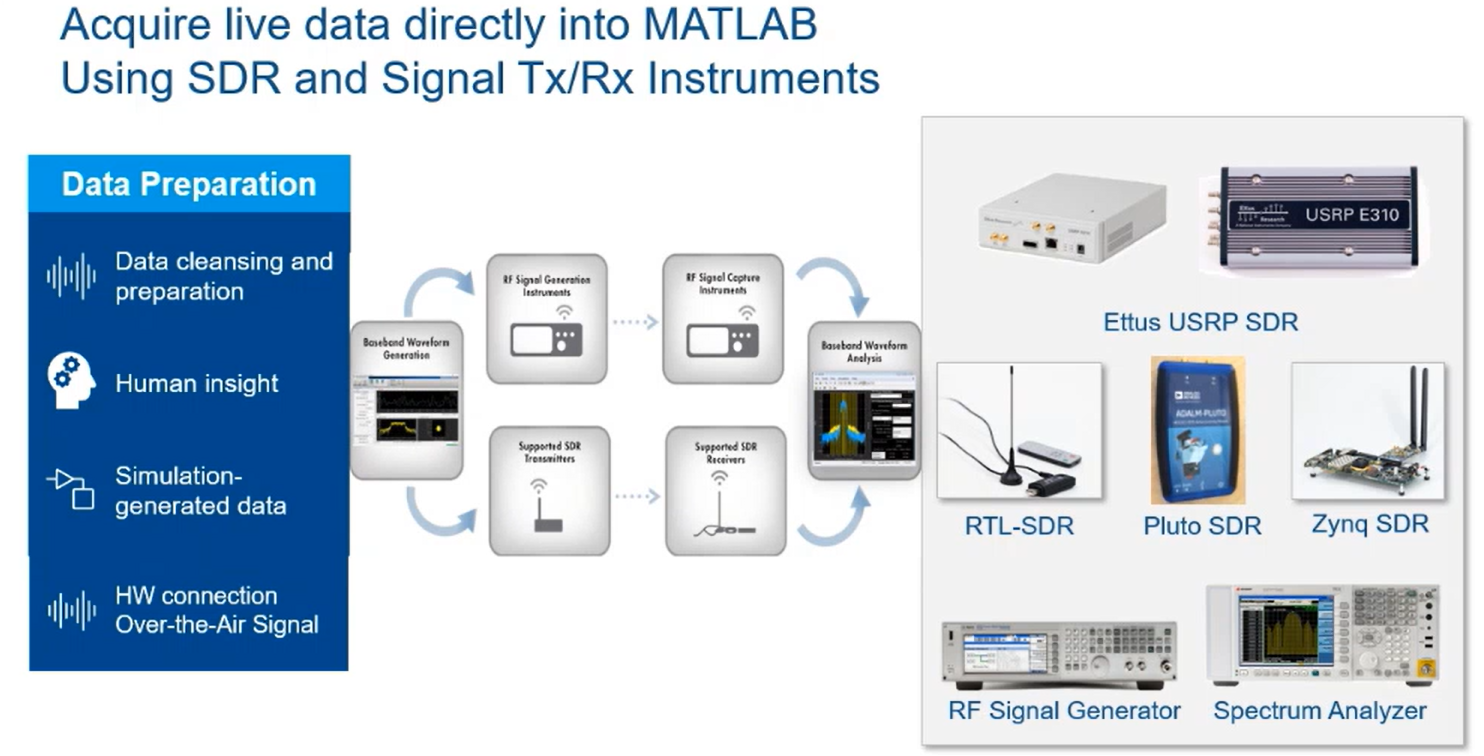

What else does MATLAB provide, besides the Wireless Waveform Generator app, to make your synthetic data creation for AI modeling easier? We have connectivity to lots of software-defined radios and RF instruments, meaning that directly from MATLAB you can acquire live signals into the MATLAB environment and add them to your training data for applying AI techniques to wireless. So as you can see, you can not only generate the waveform with the Wireless Waveform Generator app.

With one function call, you can send that generated waveform to an RF signal generator, from Agilent or Rohde & Schwarz or other vendors, and radiate that over the air. Or you can send it to a low-cost software-defined radio, such as USRP, Pluto SDR, or Zynq SDR (RTL-SDR is receive-only). We have a lot of support for software-defined radios, which enables you to transmit waveforms over the air.

So that's on the transmission side. On the receive side, you can not only rely on the signals you just transmitted, you can also tune to the frequency of anything-- FM radio stations, ADS-B, all kinds of towers and Wi-Fi signals. If you tune to the right frequency, you can get the signal over the air using our RF signal analyzers and software-defined radios like RTL-SDR, Pluto SDR, and so on. And you bring that into MATLAB. So our connectivity to hardware makes the task of acquiring live signals for your AI possible. All you have to do is go to MATLAB, where there's an Add-Ons button in the MATLAB environment, and ask for Get Hardware Support.

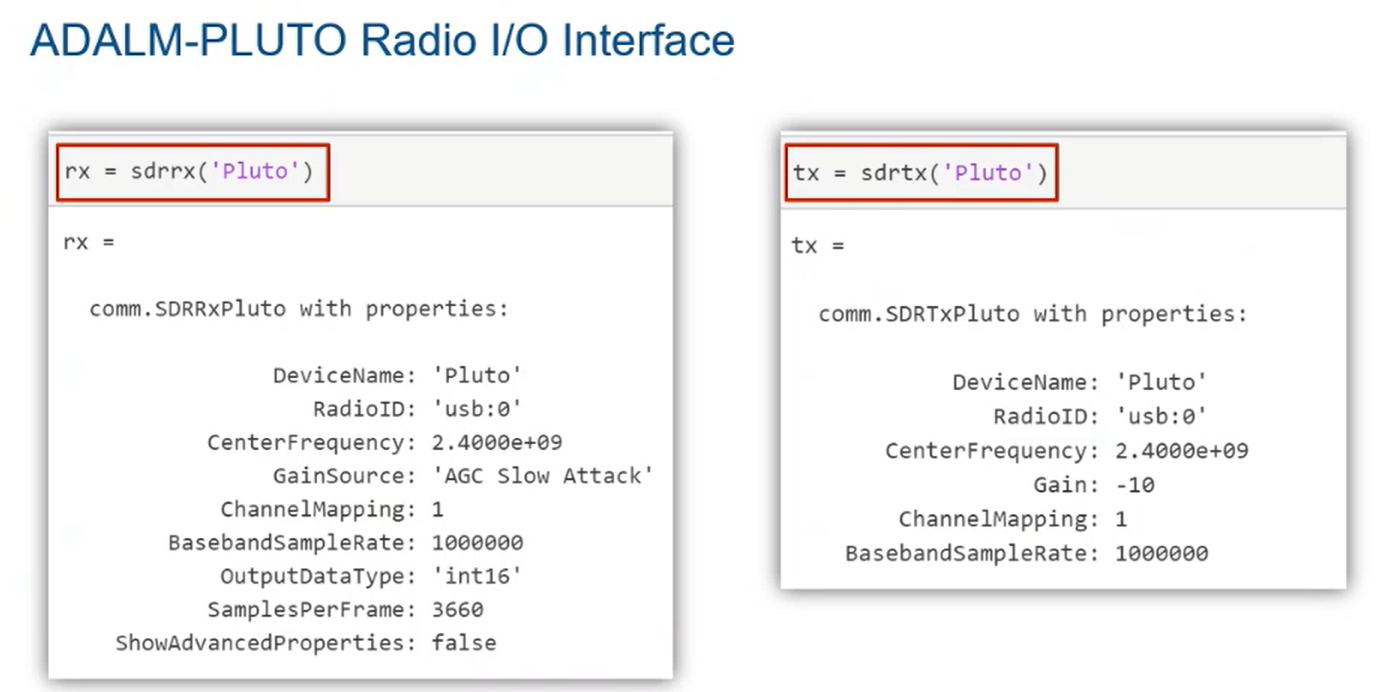

And you get the same thing by calling the Support Package Installer in MATLAB. A catalog of available hardware shows up, and you can go through the hardware setup and install a free add-on for connecting to, for example, Pluto SDR or USRP and so on. After you install that connectivity add-on, for example for Pluto SDR, there are functions in MATLAB, sdrtx to transmit and sdrrx to receive. You specify the kind of radio that you just installed, Pluto or USRP and so on. You set the parameters, you tune to the frequency of interest, and you just keep acquiring data, as simple as that.

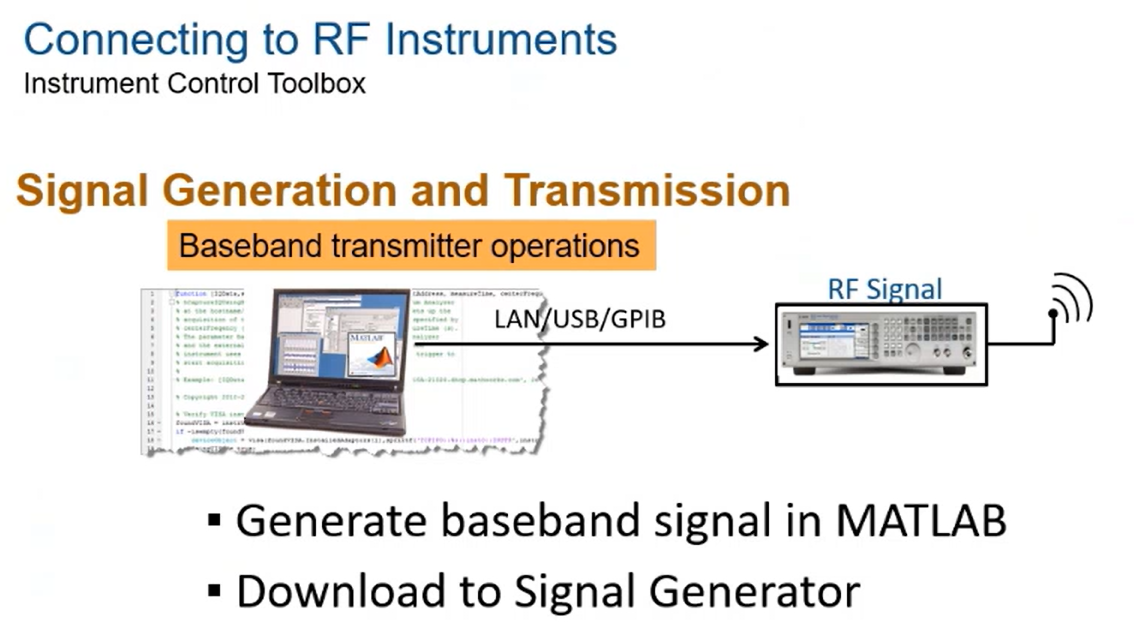

Same thing with RF instruments. You can connect to RF instruments using our Instrument Control Toolbox. You install the toolbox, which is one of the toolboxes we have in MATLAB, and as long as the computer that MATLAB is installed on is connected to a network, and the RF signal generator has an IP address on that network, you generate the baseband signal as you saw, you download that to the signal generator, and you transmit that over the air. With the same Instrument Control Toolbox, you can connect MATLAB and the computer it's installed on to a spectrum analyzer, for example. Instrument Control Toolbox has functions and apps that let you retrieve IQ baseband samples into MATLAB, which is the kind of data you want to use in AI modeling to augment your training data. And you can perform visualization and analysis, and model and train your AI systems, as simple as that.

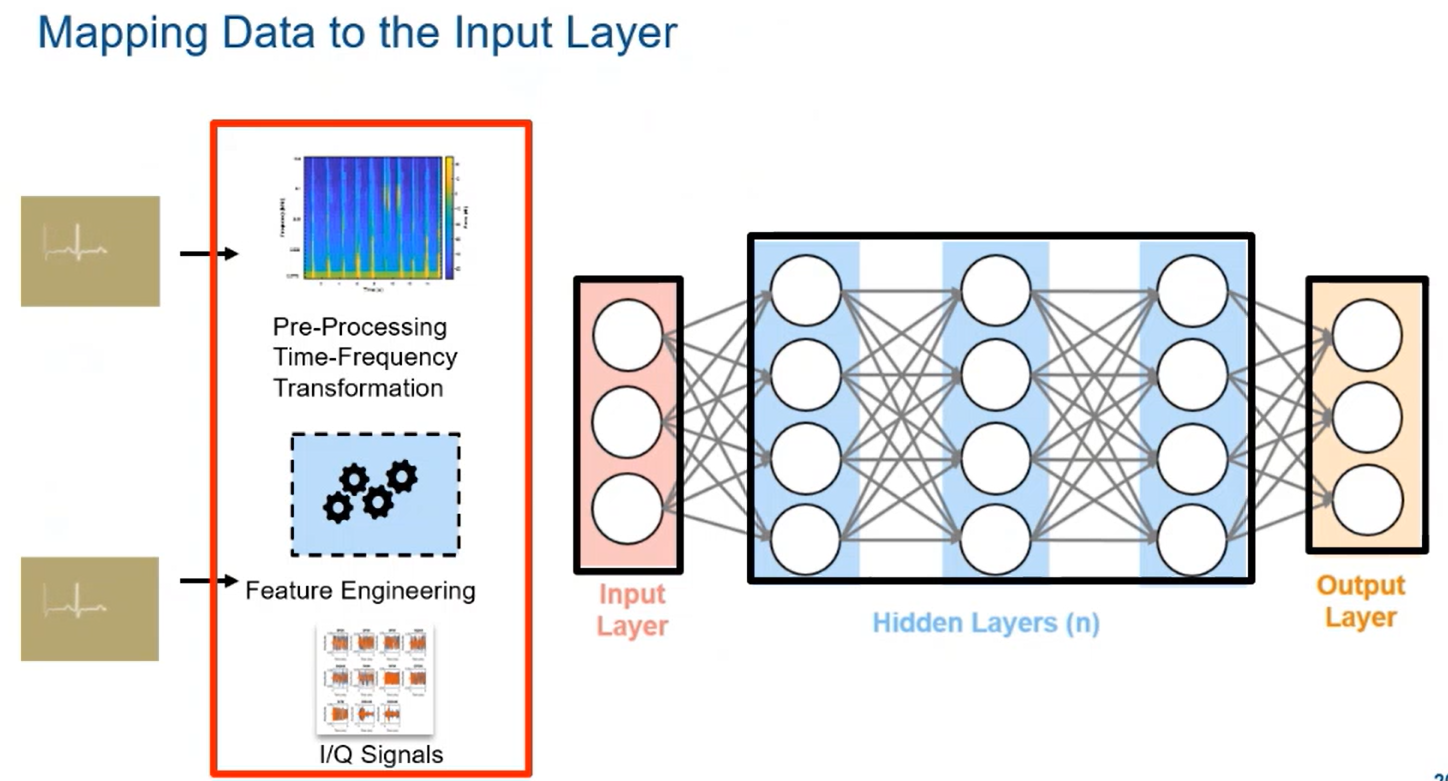

So after you have gotten this synthetic data, which is easy to generate in MATLAB using the Wireless Waveform Generator app, or this live data, brought into MATLAB using SDRs or RF instruments, you have to perform preprocessing to make the signal ready to be fed to the input layer of your AI model. One technique that is important here, which we're going to go through, is this: if you represent the signal in time and frequency using a spectrogram or other techniques, where time is on the x-axis and frequency is on the y-axis, and the signal strength at each time and frequency coordinate is captured by colors in a heat map, that lends itself to images. So that image of the time-frequency representation can easily be fed to existing deep learning networks that are trained to classify images.

So that's one very important way you can bring in your IQ samples. By applying a time-frequency transformation to them, they become readily usable as input to existing deep learning networks. You can also do feature engineering, find features, and feed those features to the network, or you can just feed the IQ samples directly, with I and Q as separate inputs, and so on. So this mapping of data to the input layer of the AI model is what you have to do as a last step of preprocessing.
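
As a rough illustration of the last option, feeding I and Q separately, the sketch below cuts a complex capture into fixed-length frames and stores each frame as two real-valued rows. It is plain Python written for this transcript; the function name `iq_to_channels` and the frame length are assumptions, not part of any toolbox.

```python
def iq_to_channels(iq, frame_len):
    """Split complex samples into frames of shape [2][frame_len]: row 0 is I, row 1 is Q."""
    frames = []
    # Samples left over after the last full frame are dropped.
    for start in range(0, len(iq) - frame_len + 1, frame_len):
        chunk = iq[start:start + frame_len]
        frames.append([[s.real for s in chunk], [s.imag for s in chunk]])
    return frames


capture = [1 + 2j, 3 + 4j, 5 + 6j, 7 + 8j, 9 + 10j]
frames = iq_to_channels(capture, frame_len=2)
print(frames[0])  # first frame: I row, then Q row
```

A network with a two-channel input layer can then consume each frame directly, without a spectrogram step.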

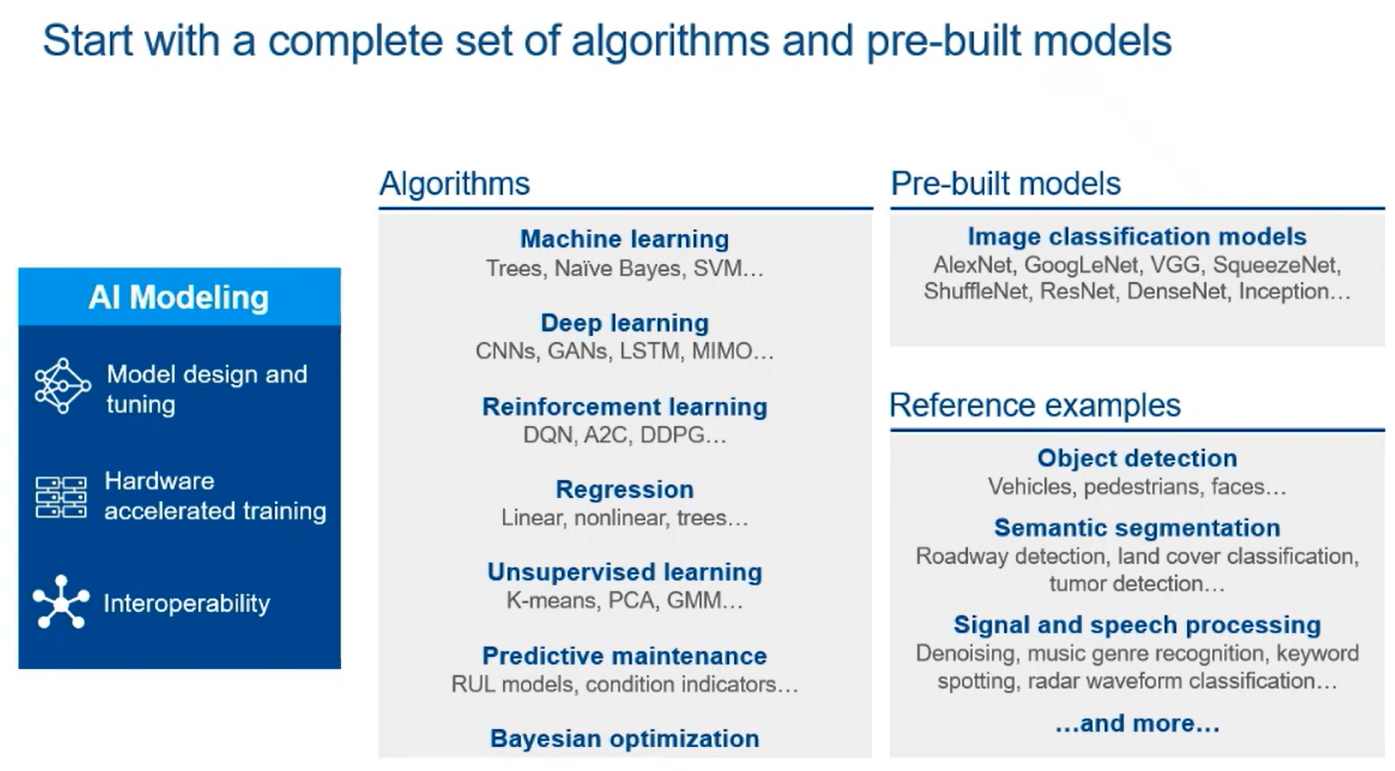

So now you are in the AI modeling part. You have to model your AI network. So we have MathWorks tools that are designed for AI, and there are essentially four of them-- Deep Learning Toolbox, Statistics and Machine Learning Toolbox, Predictive Maintenance Toolbox, and Reinforcement Learning Toolbox. We have lots of algorithms for machine learning, Bayesian techniques and so on. And for deep learning, convolutional neural networks, CNNs, the GANs that I told you about, and so on, and for reinforcement learning, different kinds of algorithms.

So you can build your entire deep learning, machine learning, even reinforcement learning network that takes as input the data that I told you about, using our toolboxes. If you want to use prebuilt models that are available outside MathWorks tools-- AlexNet, GoogLeNet, ResNet, all kinds of networks that have been developed outside the MATLAB environment-- we easily connect to them, and we can actually bring them into our environment. And we have done lots of reference examples where we show the whole process of object detection for vehicles, pedestrians, and so on, semantic segmentation, and signal and speech processing. We have those examples, so you don't have to start from scratch; you can start from a lot of good work that has already been done for you. So MATLAB becomes an environment where all these techniques are available, and you can pick and choose which mode and modality you want to use.

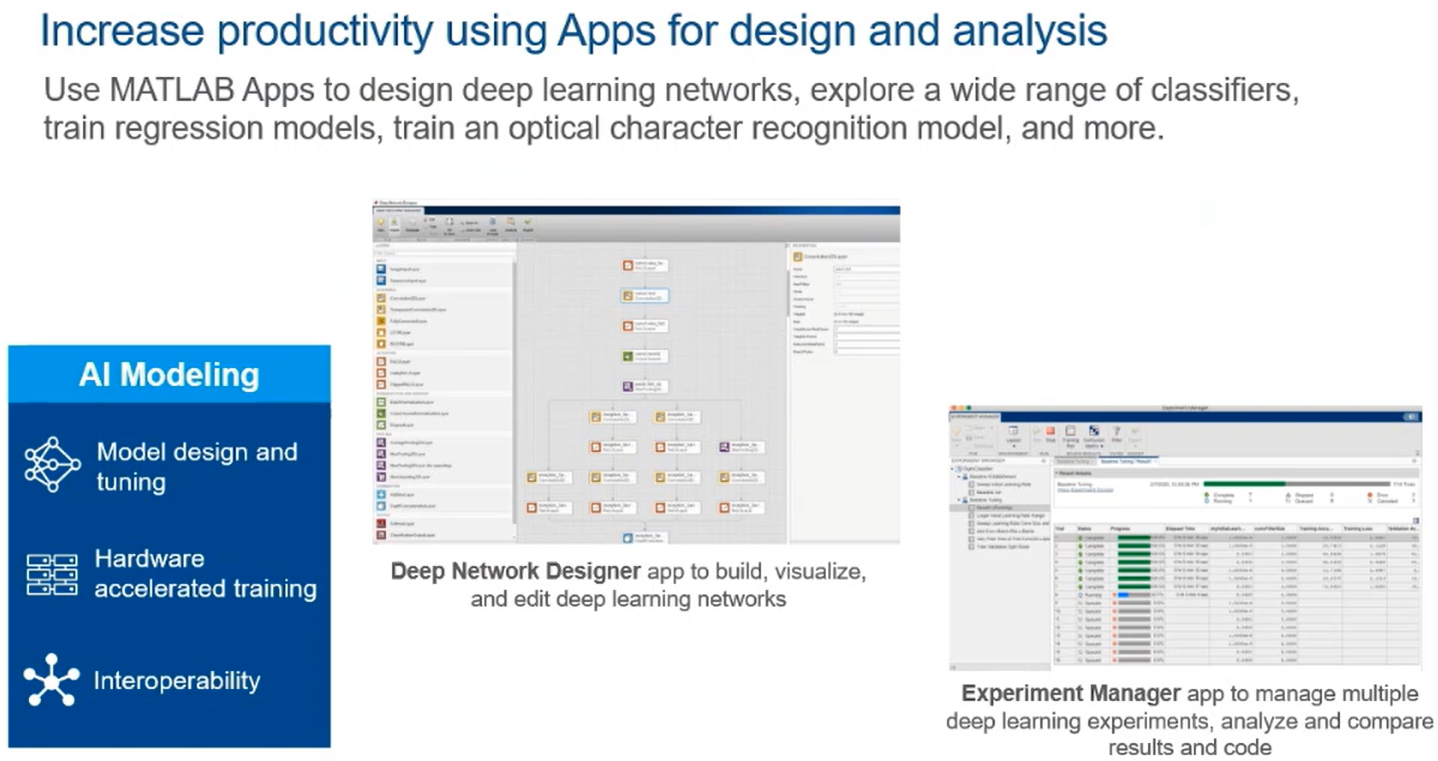

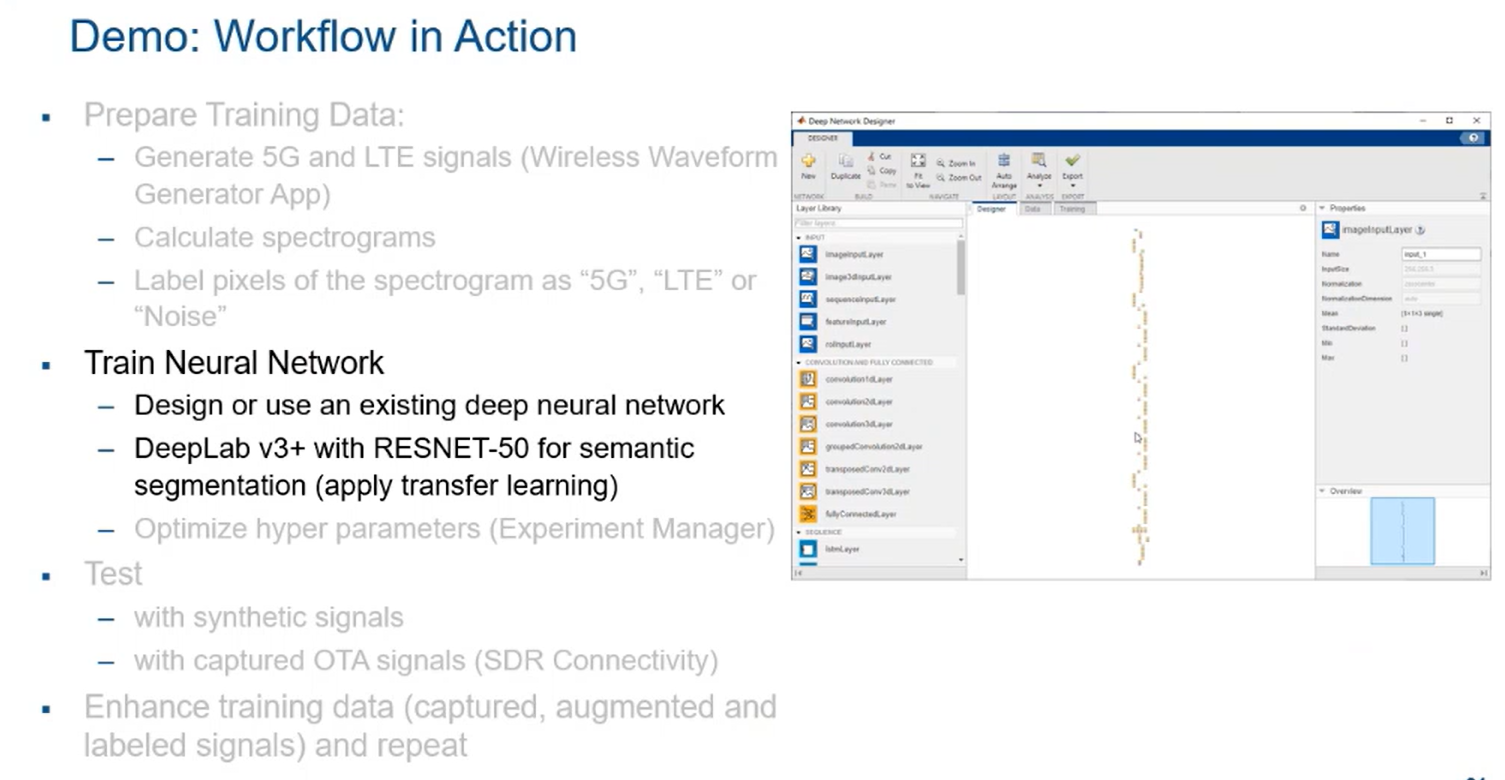

For example, besides generating synthetic and live data, one of the advantages of doing deep learning and machine learning in MATLAB is that MATLAB increases productivity through apps we have designed for deep learning networks, which allow you to explore a wide range of classifiers, train regression models, and more. For example, you see here I have a monitor-- I'm going to go through it a little bit later on. We have the Deep Network Designer app that comes with Deep Learning Toolbox. That app not only allows you to build all the layers of your deep learning network one by one, connect them, and create a deep learning network model of any width and depth with all its parameters, but also allows you to import predefined, prebuilt networks and connect to them.

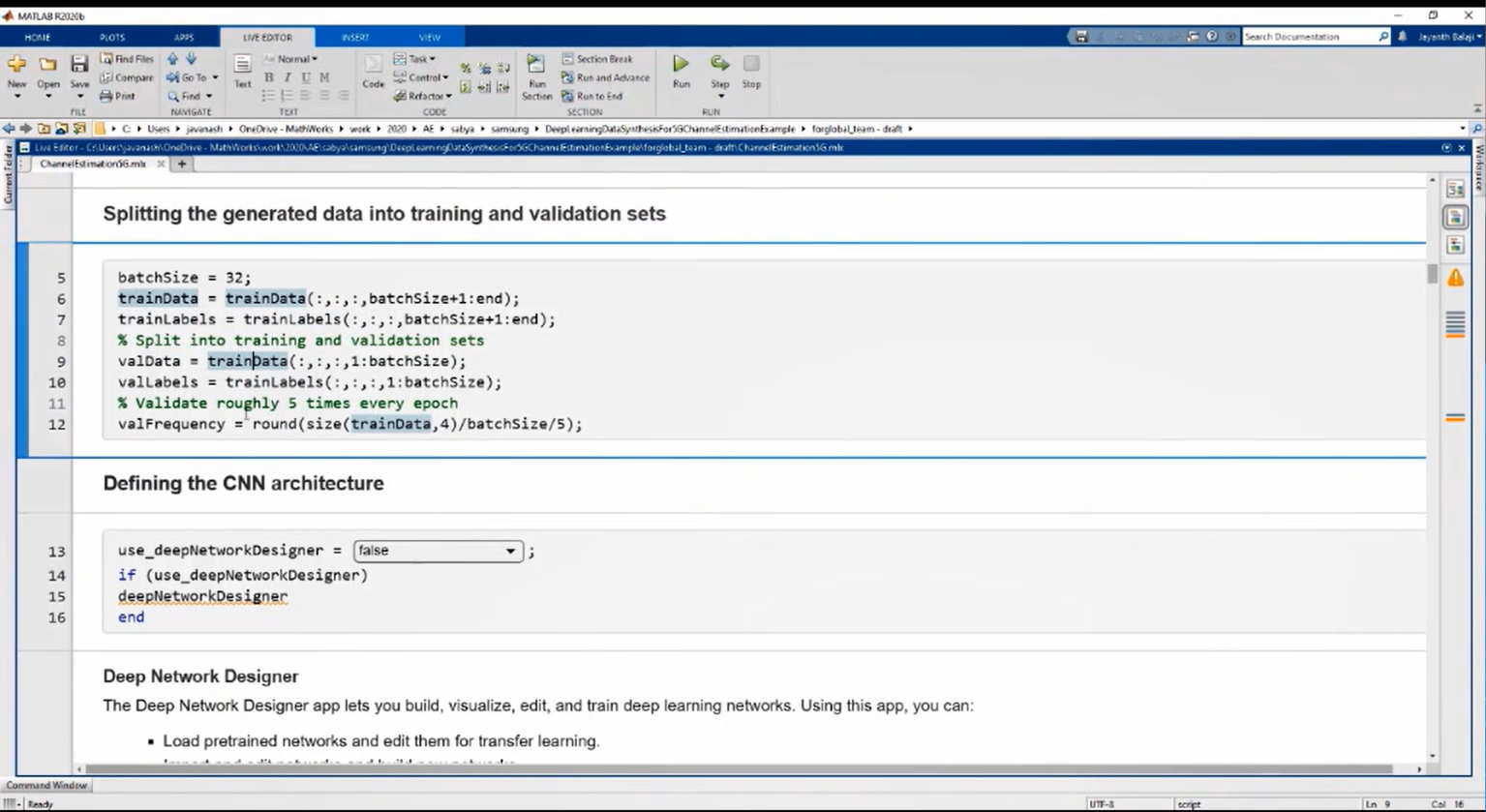

And also Experiment Manager-- after you set up your deep learning network, you can use Experiment Manager to set up an experiment that goes through all kinds of different trials, looks at the hyperparameters of the deep learning network and the parameters for training it, finds the best profile of hyperparameters, compares the results, and gives you the code to deploy. So for example here, we're talking about everything you do when training a deep network: you generate the data, and you define the CNN structure. So you see, in MATLAB we are getting training data and training labels. This is supervised learning.

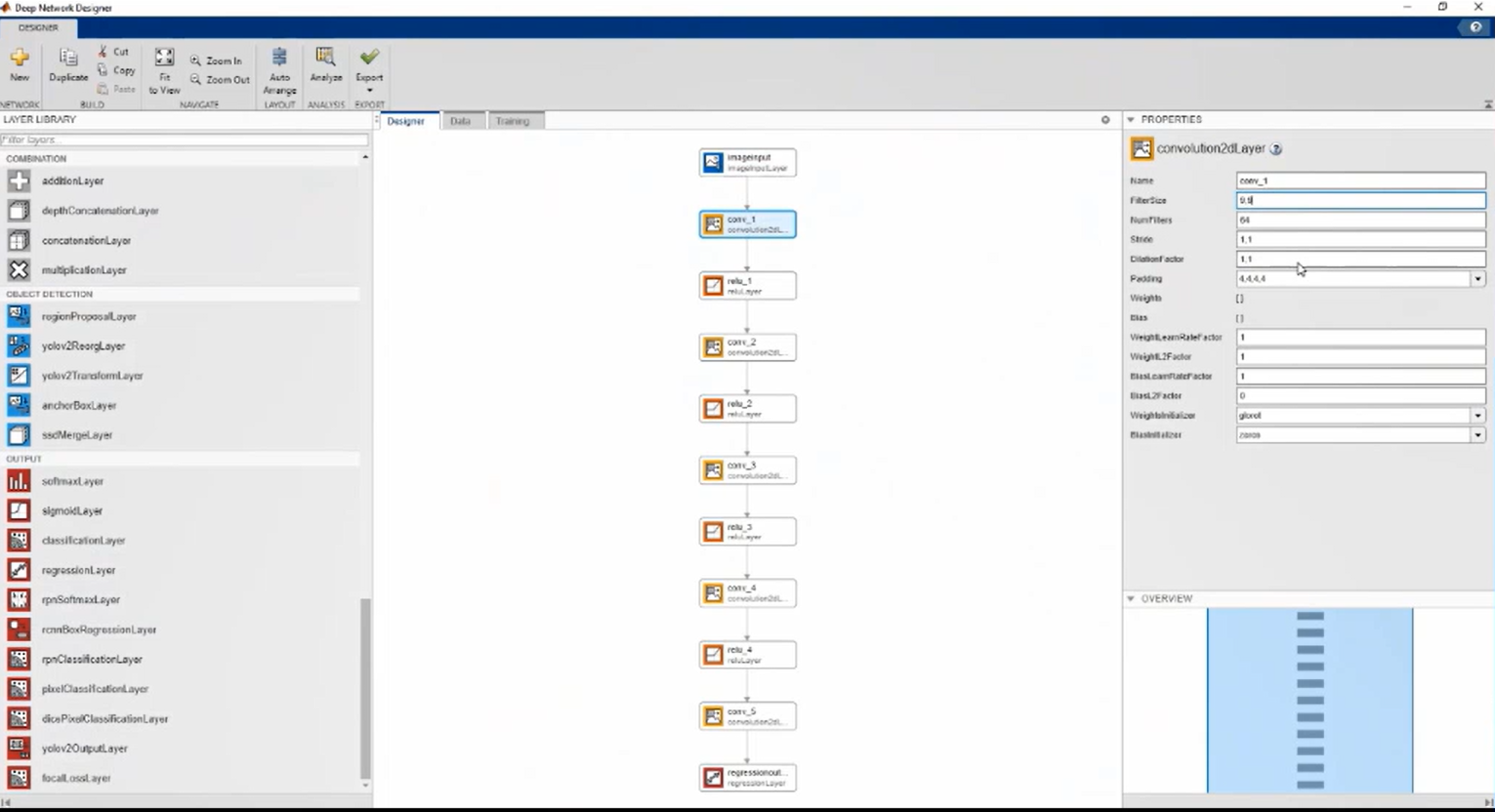

And then we go to our apps and, under Machine Learning and so on, we open the Deep Network Designer. If you do that, it shows up, as you can see. In the Deep Network Designer app, you can either create a blank network or pick specific layers from the library-- you can use all kinds of different convolution, reduction, image point, all kinds of layers, and easily add the number of layers you need to develop your network. You connect them easily, and every one of them has lots of parameters. You set the parameters, or you make a parameter subject to further optimization by the Experiment Manager, and so on and so forth.

And you go and you generate your deep learning network. And you can analyze it, and the analysis shows you all kinds of reports about various aspects of activation, the type, and the states and the sizes of different variables as they go through this network, and also it can export that as generated code or to a twin environment. And then you can go and further optimize your system.

So hardware acceleration and scaling is also needed. When you provide large amounts of data and go through all that training, you notice that it takes a long time to go through all the trials and so on and so forth. So MATLAB has another set of tools, which other environments may not have, for accelerating the AI training, which is notoriously long on stuff like CPUs, by using GPUs and cloud and data center resources.

So for GPUs we have the GPU Coder product and other techniques that allow you to take GPU-enabled MATLAB functions, and even your network, and generate CUDA code that runs on Nvidia GPUs, for example, and makes it much faster. We also have techniques to run the simulation faster on Azure or AWS.

And you don't need to do specialized programming for that. We take care of generating all the code that accelerates your work. All you have to do is get the corresponding toolbox, learn how to use it, and get the benefit of the acceleration. And the good news is that numerically, the results are almost identical, to within the numerical precision, but it runs much faster.

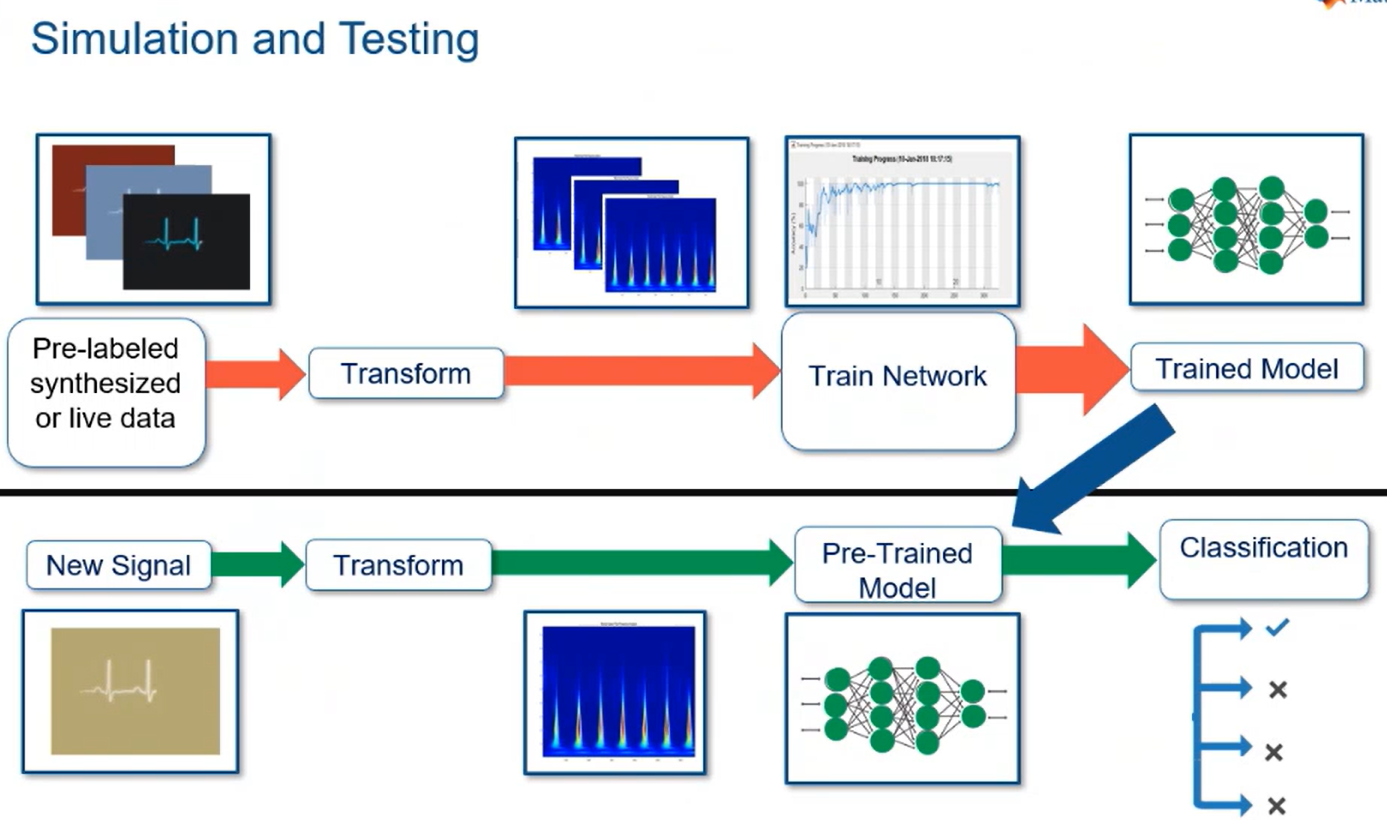

How about simulation and testing? So you see, you got prelabeled synthesized or live data in, you transformed it to a time-frequency representation that makes it look like an image, you trained your network with our deep learning training and network analysis tools, and you have a trained model. It is trained, it extracts features, and it maps them to the right classes.

Now what do you do? You need to have new signals which are not biased toward the training data-- representative signals, but not from the same data set. That is the trick. If you use the same data for testing that you used in training, you are deluding yourself. You have to use new signals that haven't been seen by the training process, realistic signals, and you have to transform them in the same way to the same domain, give them to the pretrained network, and see how the pretrained network classifies them.
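
The simplest form of this rule is to set aside part of the labeled data before training and never show it to the training process. The sketch below is one plain-Python way to do that; the function name `split_dataset`, the 20% test fraction, and the placeholder capture names are assumptions for illustration only.

```python
import random


def split_dataset(examples, test_fraction=0.2, seed=0):
    """Shuffle once, then return (train, test) lists with no shared examples."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(round(len(shuffled) * test_fraction)))
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder identifiers stand in for labeled spectrogram files.
captures = [f"capture_{i:03d}" for i in range(10)]
train_set, test_set = split_dataset(captures, test_fraction=0.2, seed=1)
print(len(train_set), "for training,", len(test_set), "held out for testing")
```

A random split only guards against reusing identical examples. Signals captured over the air from a different environment, as described later in the talk, are a stronger test.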

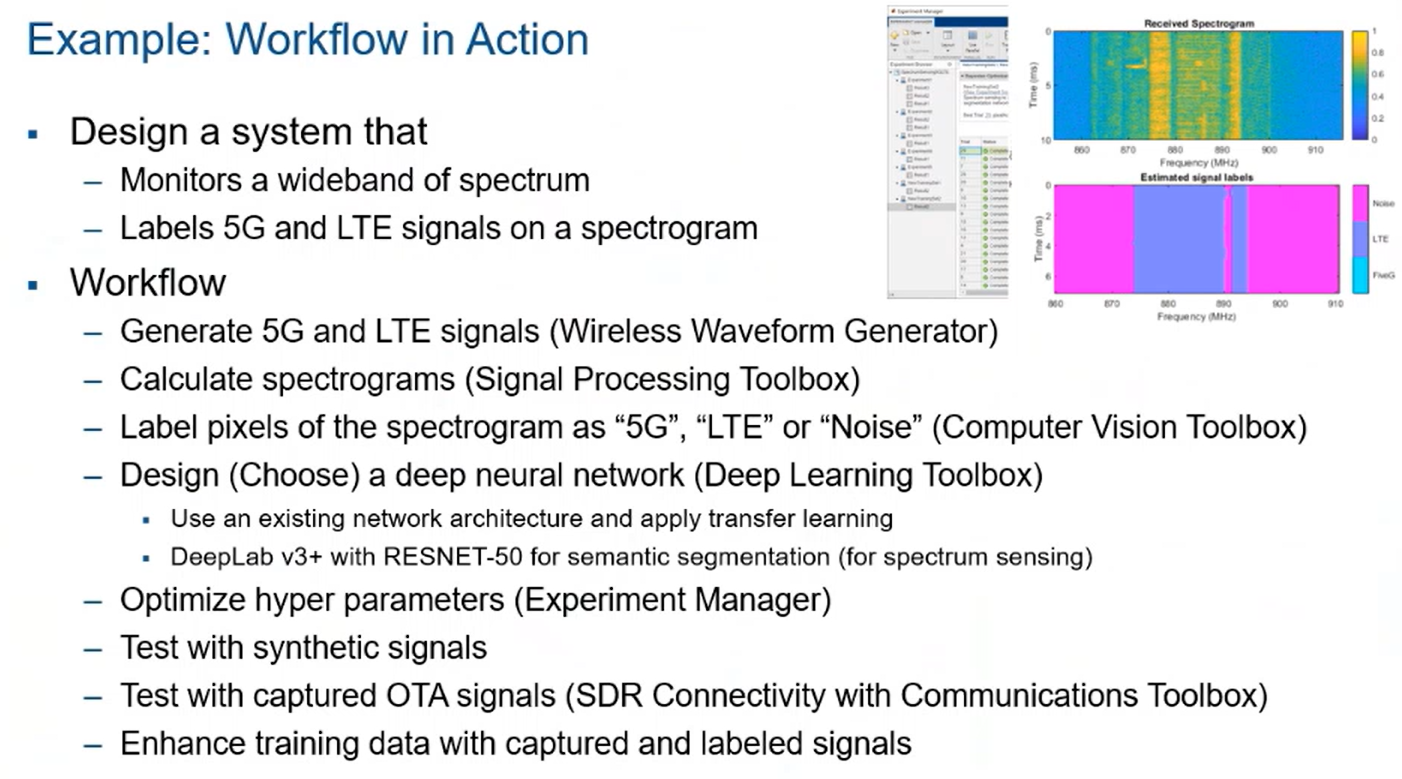

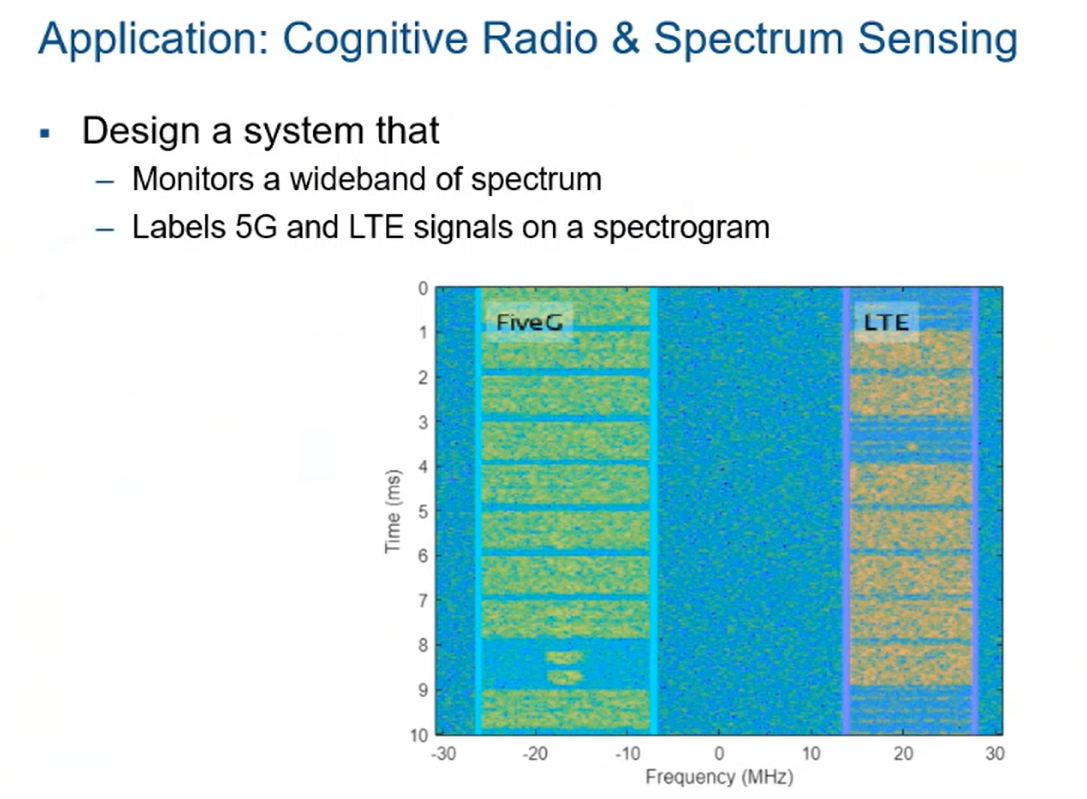

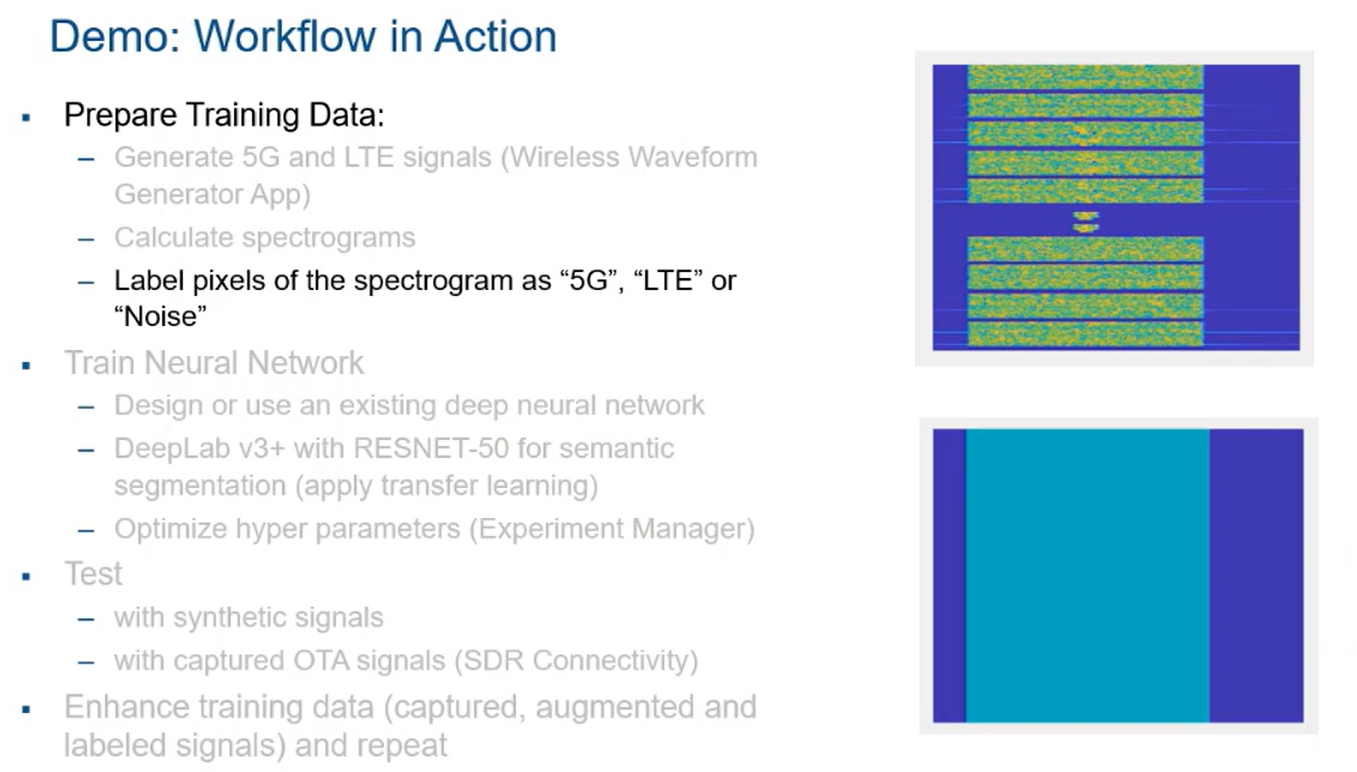

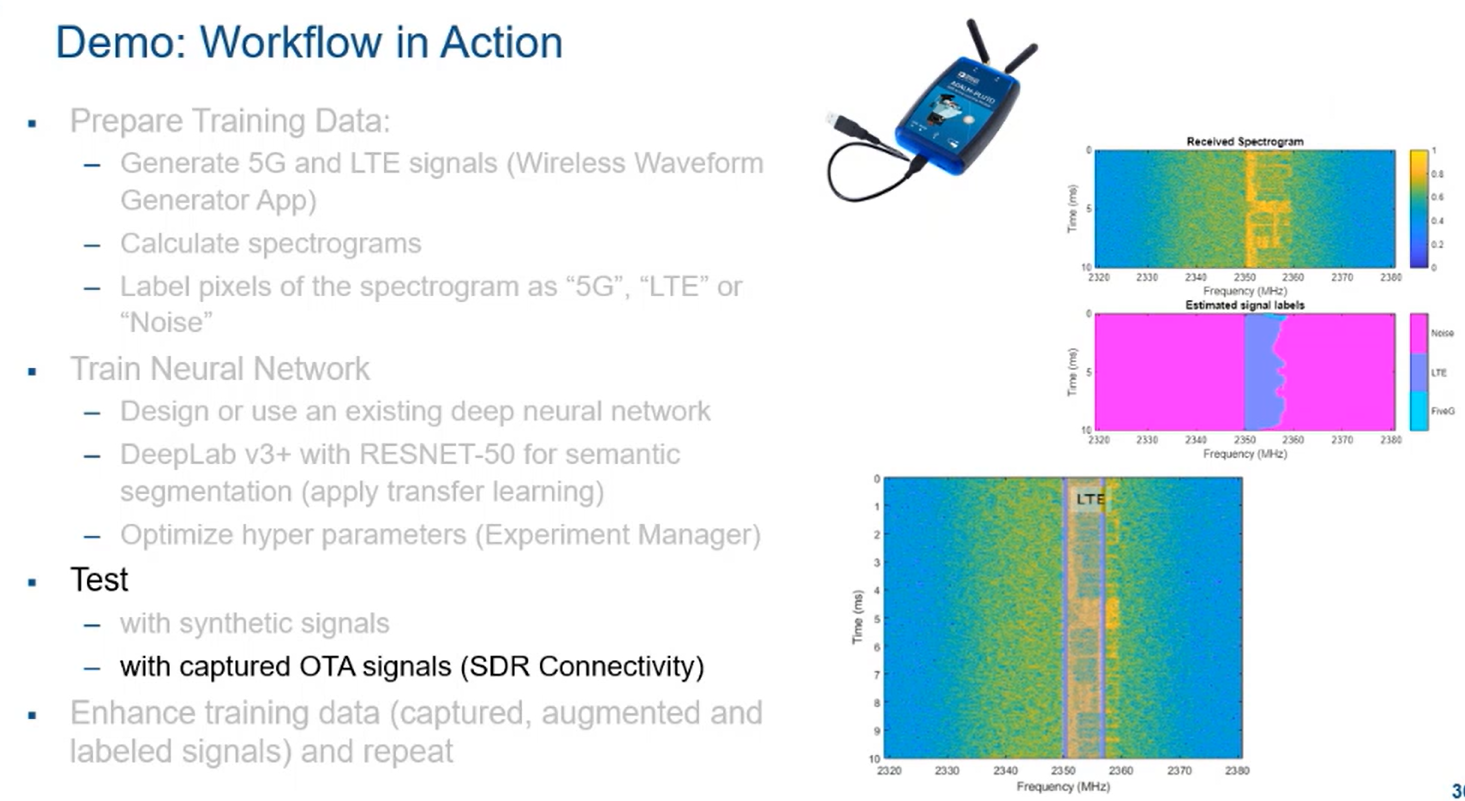

And you have actually labeled this data as well, so you know whether each result is true or not. You create a confusion matrix, how much success, how much failure, and you keep iterating on these results. To show all of that in action, I'm going to design a system that monitors a wide band of spectrum. I'm going to essentially apply spectrum sensing and cognitive radio, and we're going to label 5G and LTE signals on a spectrogram.

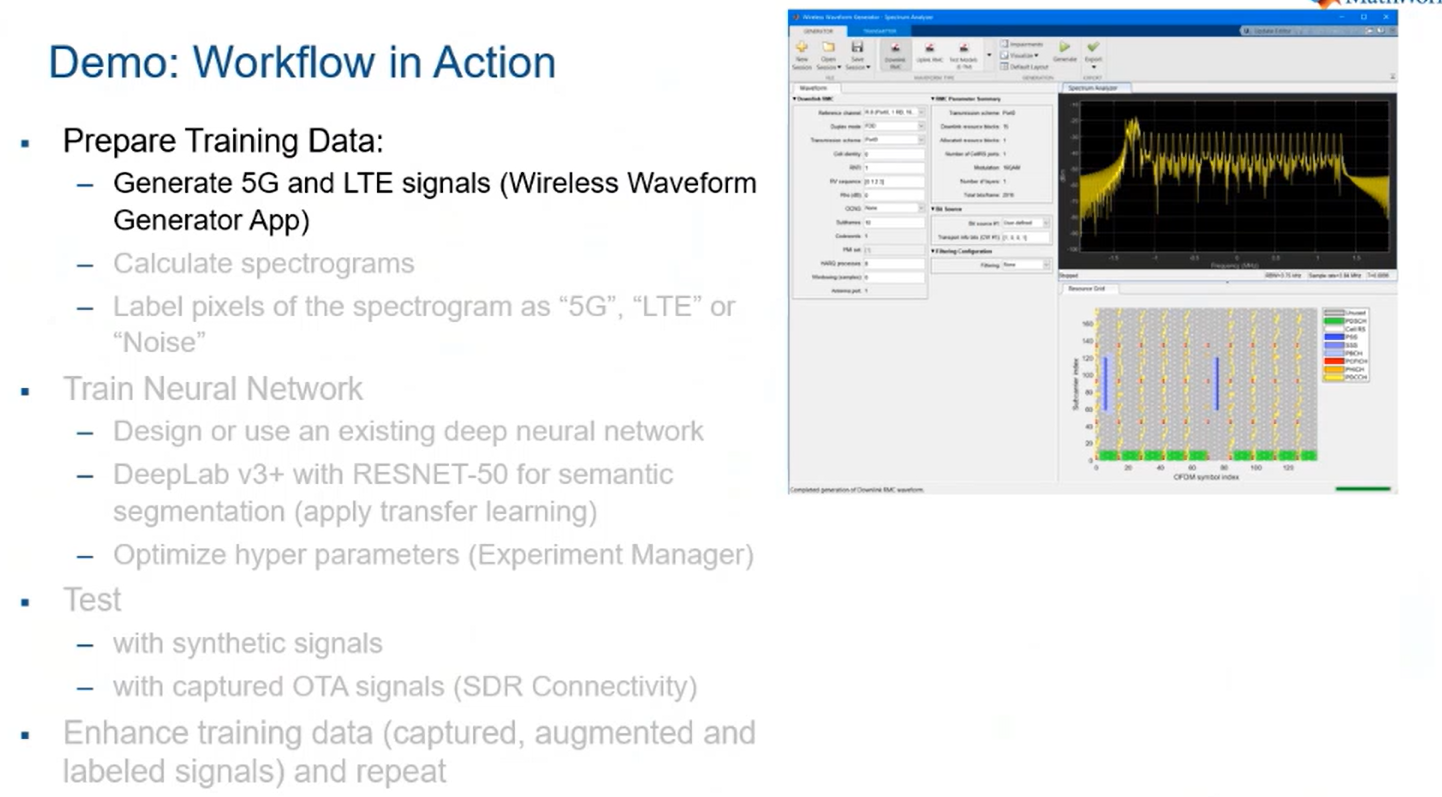

And we're going to go through the workflow: generate 5G and LTE signals using the Wireless Waveform Generator, calculate the spectrogram to get a time-frequency representation, and label the pixels of the spectrogram as 5G, LTE, or noise. We're going to choose a deep neural network to leverage an existing network architecture, in this case DeepLab ResNet, and essentially train the network to classify properly, then optimize the hyperparameters with the Experiment Manager I told you about, and we're going to test. And we're going to use over-the-air captured signals as the test environment and enhance the training by iterating.

So the application, as I told you, is cognitive radio and spectrum sensing. Design a system that monitors a wide band of spectrum. In this case, I'm going for about 60 megahertz of bandwidth. So in this case, frequency is on my x-axis and time is on my y-axis. And we're going to label different segments of time and frequency as LTE or 5G or noise.

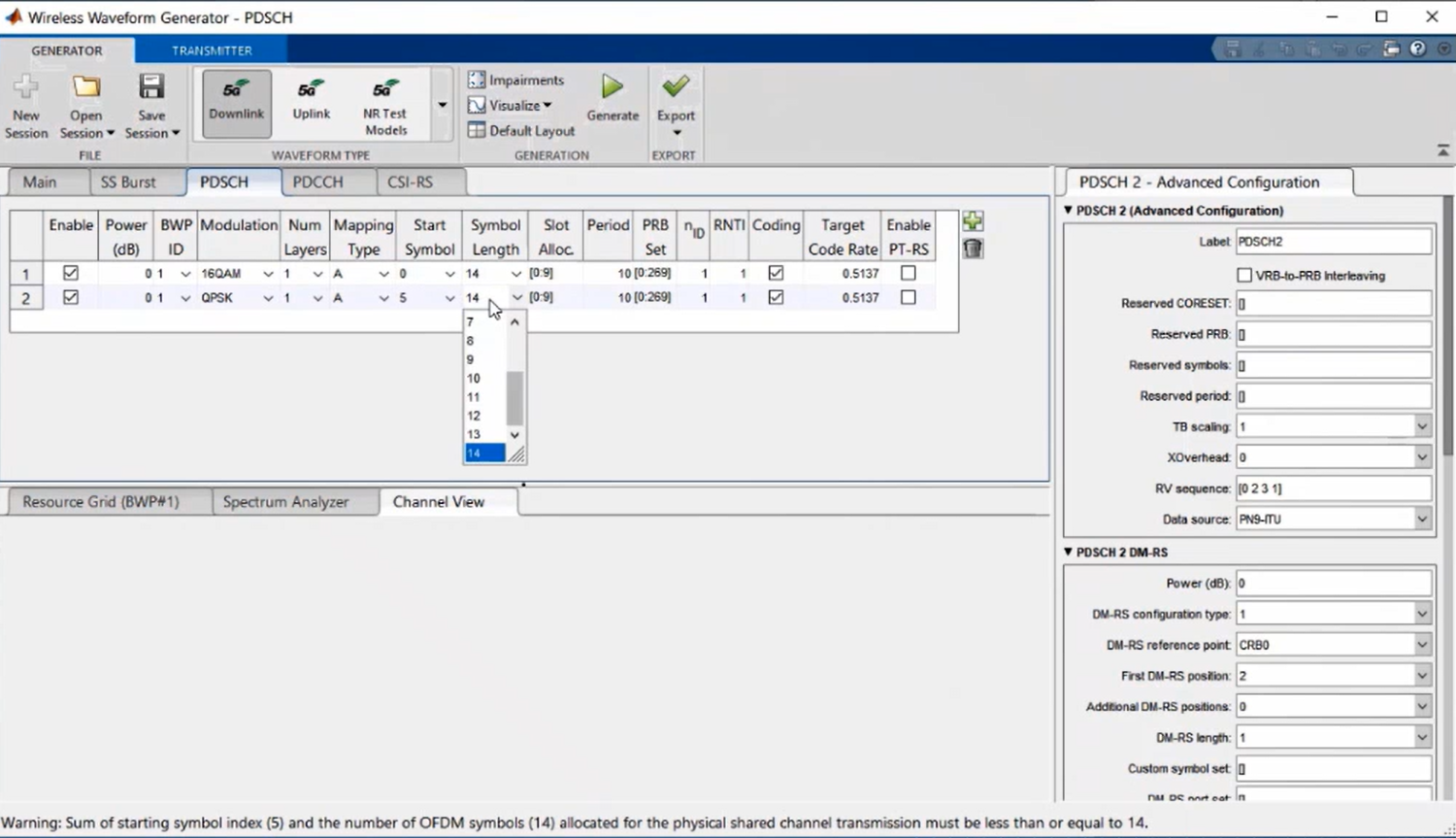

So first thing is to prepare training data. We're going to generate 5G and LTE signals using our Wireless Waveform Generator app. So here I open the Wireless Waveform Generator app. I have 5G, I have LTE.

So I'm going to go and click on one of them, 5G for example. It brings up all kinds of parameters for 5G signal generation. In this case, the main parameters, the frequency range, FR1 or FR2, the bandwidth, and so on and so forth. And then I'm going to set other parameters, like the SS burst, the synchronization burst, and its parameters, and the PDSCH for user data. And as soon as you click on that, all kinds of parameters show up-- what kind of modulation scheme you want to use, what kind of coding scheme.

You can add different modulation to different bandwidth parts and so on. And you specify different-- all the parameters that 5G expects you to set. And then you can go through all kinds of other additions, look at the resource grid. This is the time frequency resource grid. In this case, symbols are on the time domain and frequency domain.

And you can look at the Spectrum Analyzer. That is essentially the 50 megahertz signal you saw. And you can export to a file or to a MATLAB script, and you can run that MATLAB script to generate lots of data in MATLAB by adding a lot of different noise or impairments. So you generate the waveform and the actual MATLAB code. And by doing that you can put together a larger training set for your future testing.

Now we're going to go and calculate the spectrogram. When you calculate the spectrogram, essentially you take the signal and you use the spectrogram function from Signal Processing Toolbox, which, from the time-domain IQ samples, creates a spectrogram where you choose which axes carry time and frequency. In this case, we use time on the y-axis. And you see we have different kinds of spectrograms for the different kinds of signals that we label here.
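
To make the transformation concrete, here is a small plain-Python sketch of a spectrogram computed with a windowed short-time DFT, with time in rows (the y-axis) and frequency in columns, then scaled to 8-bit pixel values. It is a stand-in for illustration, not the Signal Processing Toolbox function; the names `spectrogram_db` and `to_image`, the 32-point transform, and the hop size are assumptions.

```python
import cmath
import math


def spectrogram_db(iq, nfft=32, hop=16):
    """Rows are time frames, columns are frequency bins (negative to positive), in dB."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (nfft - 1)) for n in range(nfft)]
    rows = []
    for start in range(0, len(iq) - nfft + 1, hop):
        frame = [iq[start + n] * window[n] for n in range(nfft)]
        # Direct DFT of the windowed frame (slow, but dependency-free).
        spectrum = [
            sum(frame[n] * cmath.exp(-2j * math.pi * k * n / nfft) for n in range(nfft))
            for k in range(nfft)
        ]
        # Move negative frequencies to the left half of the row.
        spectrum = spectrum[nfft // 2:] + spectrum[:nfft // 2]
        rows.append([10 * math.log10(abs(x) ** 2 + 1e-12) for x in spectrum])
    return rows


def to_image(rows):
    """Scale dB values linearly to integer pixel values 0..255."""
    lo = min(min(r) for r in rows)
    hi = max(max(r) for r in rows)
    scale = 255 / (hi - lo) if hi > lo else 0.0
    return [[int(round((v - lo) * scale)) for v in r] for r in rows]


# A tone at +0.25 cycles per sample stands in for a captured signal.
iq = [cmath.exp(2j * math.pi * 0.25 * n) for n in range(256)]
image = to_image(spectrogram_db(iq))
print(len(image), "time rows x", len(image[0]), "frequency columns")
```

The resulting grid of pixel values is what an image-based network would see. A real capture would use a much larger transform and an FFT rather than a direct DFT.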

And then you go through labeling. Now labeling can be done by visual inspection, supervised and human-based, which takes a lot of time, or you can extract some features and write a MATLAB program that says: if you are within this range, that's 5G, and if you are within that range, that's LTE. So we can even make the labeling of pixels automatic. So the process is, now I have an image, and this image represents a combination of 5G, LTE, or noise, and I'm going to assign to each pixel, using a MATLAB function, a label-- 5G, LTE, and so on. And that I'm going to use in the training of my neural network later.
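
When the signals are synthesized, the time and frequency extent of each one is known, so the pixel labels can be filled in by a short program. The sketch below shows the idea in plain Python; the function name `label_pixels`, the integer label codes, and the example center frequencies and bandwidths are assumptions chosen for illustration, not values from the talk's example.

```python
import math

NOISE, LTE, NR = 0, 1, 2  # NR stands for 5G New Radio


def label_pixels(n_time, n_freq, span_hz, signals):
    """Build a label mask with time in rows and frequency in columns.

    signals: list of (label, center_hz, bandwidth_hz, row_start, row_stop),
    with center_hz measured from the middle of the monitored span.
    """
    mask = [[NOISE] * n_freq for _ in range(n_time)]
    hz_per_col = span_hz / n_freq
    for label, center, bandwidth, row_start, row_stop in signals:
        low = center - bandwidth / 2 + span_hz / 2   # offset from the left edge
        high = center + bandwidth / 2 + span_hz / 2
        col_start = max(0, int(math.floor(low / hz_per_col)))
        col_stop = min(n_freq, int(math.ceil(high / hz_per_col)))
        for r in range(max(0, row_start), min(n_time, row_stop)):
            for c in range(col_start, col_stop):
                mask[r][c] = label
    return mask


# 60 MHz span at 1 MHz per column: a 10 MHz LTE carrier and a 20 MHz 5G carrier.
mask = label_pixels(
    n_time=8, n_freq=60, span_hz=60e6,
    signals=[(LTE, -15e6, 10e6, 0, 8), (NR, 10e6, 20e6, 2, 6)],
)
print("LTE columns in row 0:", mask[0].count(LTE))
```

Every pixel that no signal covers keeps the noise label, which gives the three classes used for semantic segmentation.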

So now we're going to go and design a deep neural network or use an existing one. In this case, we can use DeepLab v3+ with ResNet-50 for semantic segmentation. We're going to apply transfer learning, which I'm going to talk to you about. What does that mean? We go to the Deep Network Designer, and we look for and import an available DeepLab v3+ ResNet network. And, as you can see, the layers have different branches and so on and so forth. And we're going to have parameters on that.

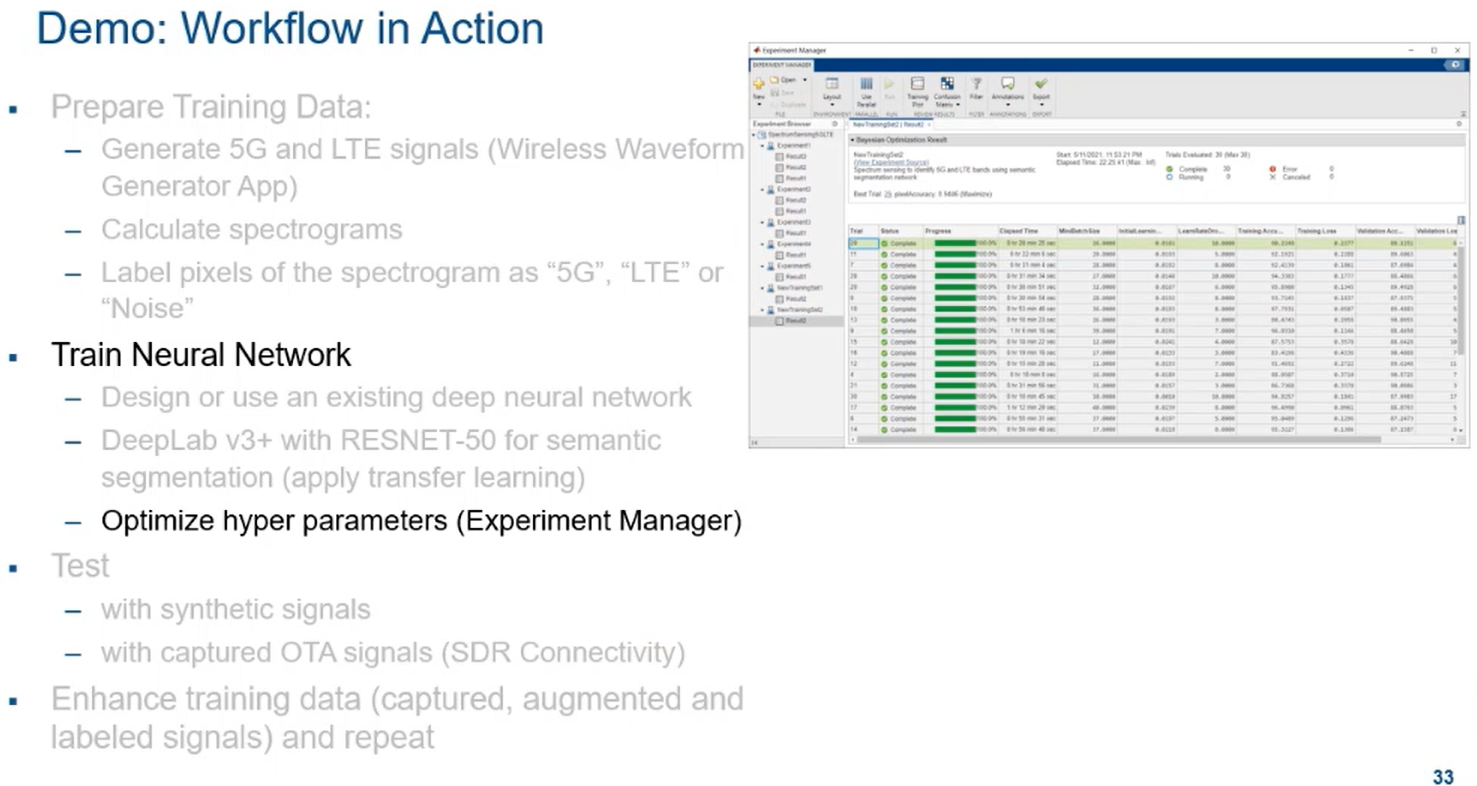

All right, so now we can optimize hyperparameters using Experiment Manager, which is another app that ships with our Deep Learning Toolbox. So in Experiment Manager, you're going to create a new test sequence and test environment, and you set some parameters, in this case pixel accuracy or maximum number of frames. These are hyperparameters you set. And you essentially define a criterion, and you say, run this thing until that criterion is met.

So it runs a bunch of training iterations until it has optimized the hyperparameters. So this is all done for you. You just set the parameters of interest and specify the criterion, and it will optimize for you.
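
The underlying idea of a hyperparameter sweep with a stopping criterion can be sketched in a few lines of plain Python. This is not how Experiment Manager is implemented; `run_sweep` is a hypothetical helper, and `toy_score` is a made-up stand-in for "train the network and return its pixel accuracy".

```python
import itertools


def run_sweep(train_and_score, grid, target=None):
    """Try every combination in the grid; stop early once the target score is reached."""
    names = sorted(grid)
    results = []
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_score(**params)
        results.append((score, params))
        if target is not None and score >= target:
            break
    best_score, best_params = max(results, key=lambda item: item[0])
    return best_params, best_score, results


def toy_score(learning_rate, max_epochs):
    # Made-up objective: best near learning_rate = 0.02, better with more epochs.
    return 1.0 - abs(learning_rate - 0.02) * 10 - 1.0 / max_epochs


grid = {"learning_rate": [0.005, 0.02, 0.05], "max_epochs": [5, 20]}
best_params, best_score, results = run_sweep(toy_score, grid)
print(best_params, round(best_score, 3))
```

Passing a `target` value makes the sweep stop at the first trial that meets the criterion instead of trying every combination.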

Now it's time for you to test. So we test with the synthesized signals that we generated using the Wireless Waveform Generator app, and you look at the normalized confusion matrix. If I send a 5G signal, it is classified as 5G 97% of the time after training. LTE, 87% right. Noise, 97% right. So you see, LTE doesn't have as much accuracy as 5G or noise. So we have to look at that.
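
For readers who want to see how a normalized confusion matrix is computed, here is a minimal plain-Python sketch. The function names are assumptions, and the ten toy labels are invented purely to exercise the code; they are not the results quoted in the talk.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """counts[i][j] = number of examples of true class i predicted as class j."""
    index = {name: i for i, name in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for truth, predicted in zip(true_labels, predicted_labels):
        counts[index[truth]][index[predicted]] += 1
    return counts


def normalize_rows(counts):
    """Divide each row by its total so the diagonal reads as per-class accuracy."""
    normalized = []
    for row in counts:
        total = sum(row)
        normalized.append([value / total if total else 0.0 for value in row])
    return normalized


classes = ["5G", "LTE", "Noise"]
# Toy labels, invented for this sketch only.
truth = ["5G"] * 4 + ["LTE"] * 4 + ["Noise"] * 2
predicted = ["5G", "5G", "5G", "LTE", "LTE", "LTE", "5G", "Noise", "Noise", "Noise"]

normalized = normalize_rows(confusion_matrix(truth, predicted, classes))
for name, row in zip(classes, normalized):
    print(name, [round(value, 2) for value in row])
```

A class whose diagonal entry is noticeably lower than the others, as LTE is in the talk, is the one whose training data deserves a closer look.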

And then we're going to actually test with over-the-air signals. We're going to tune to a particular swath of frequency over a 5G or LTE network, close to the base station near our house, and use the SDR connectivity from Communications Toolbox that I told you about to capture the signal and test with that. And as you test with that, you realize that LTE has a problem because, although the LTE signal seems to have a 10 megahertz bandwidth here, as you can see, somehow only 7 megahertz of it is classified correctly. So I guess we have used a lot of 5 megahertz LTE signals for training and fewer 10 megahertz signals. So now, based on the first iteration, I know I have to augment my LTE training set so that it is not only at 5 megahertz bandwidth but also has 10 megahertz and so on.

So you see, testing informs you of what's wrong with your original data. So now you create more synthetic data-- you augment your training data set to have more representative data. And by doing that, if I run this one more time, you see that the LTE is now properly covered, because I used more training data representative of LTE signals at 5 and 10 megahertz and even 20 megahertz. And that's the story about that.

So after all of this is done, you have to deploy to any processor with best-in-class performance. One thing we can do for your AI models is that, because of all the code generation tools and products we have, you can deploy easily from the MATLAB environment and the Simulink environment to embedded devices or edge devices, enterprise systems, cloud, and desktop. And you can see in our coder workflow that we go from MATLAB and AI through code generation to CPUs with C code generation, GPUs with CUDA code, and FPGAs.

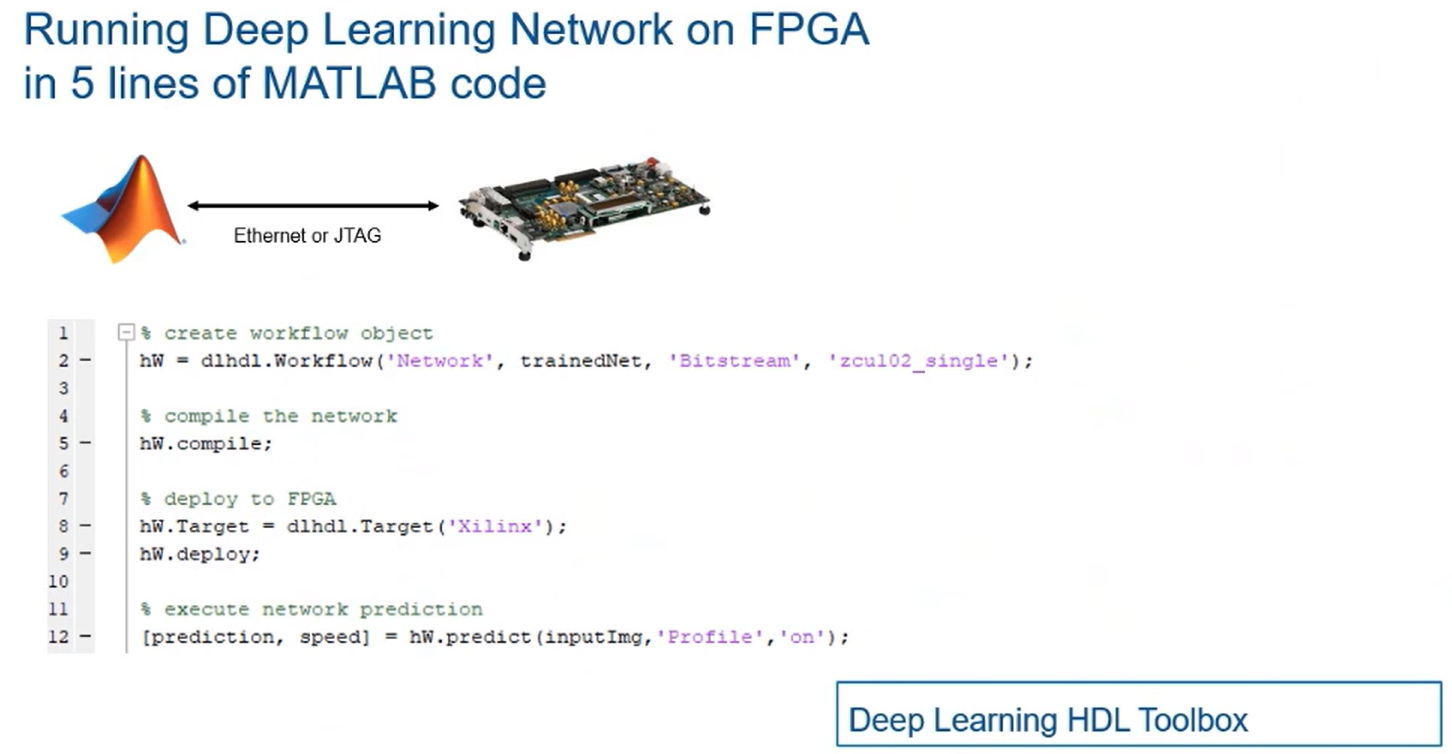

We have a new product, actually, called Deep Learning HDL Toolbox, that automatically generates HDL code for you. And you see here that, using MATLAB Compiler, we can actually deploy to all kinds of enterprise systems. So the path to embedded systems and enterprise systems is available, which is not available in other environments.

For example, we used the Deep Learning HDL Toolbox to run the deep learning network of an application on an FPGA. And you essentially use five lines of MATLAB code. You set up the workflow, you say compile, you download it to a Xilinx FPGA, and you run that. And when you run that, it runs much faster.

Well, I showed you a cognitive radio and spectrum sensing example, but there are so many examples where AI has been applied in the wireless area. We have autoencoders that adjust coding and decoding parameters automatically based on learning, modulation classification with deep learning, designing a deep learning network with simulated data for detecting wireless routers and what kind of router is used, RF fingerprinting, DPD, RF receivers, training a neural network for LLR estimation, which is log-likelihood ratio estimation, and so on. So we have a lot of examples for you to see how the basic workflow that I presented can be applied in different domains, and you can modify it for your domain.

You have a user story here. Our customer NanoSemi improved the efficiency of their 5G design and accelerated the design of RF power amplifiers, linearization, and DPD by using machine learning algorithms. And they report that the development time was reduced by half. That's a testament to how much time we save you by allowing you not to reinvent the wheel but to use those prebuilt techniques-- AlexNet, ResNet, GoogLeNet-- as well as what you build yourself in MATLAB, and using lots of data to prove it.

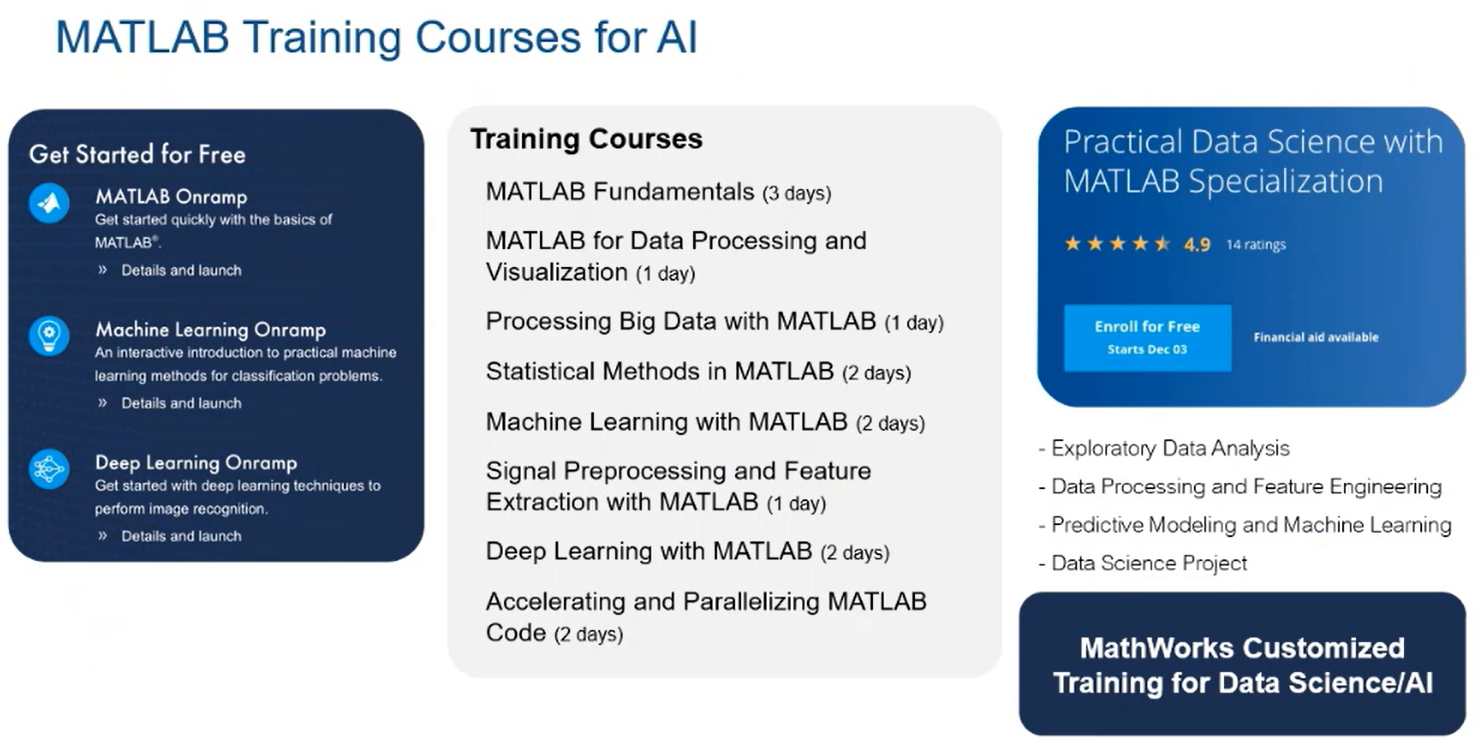

We also have MATLAB training courses for AI, which are either very extensive three-day courses or one-day courses on machine learning and deep learning, and we have the Machine Learning and Deep Learning Onramps, which are free, self-paced training. So you can go to mathworks.com, look for Machine Learning Onramp and Deep Learning Onramp, and learn how deep learning and machine learning are done at your own pace. And if you're interested, we can do customized training for data science and AI.

To summarize, you can prepare training data, train the network, and test with synthetic and over-the-air signals in MATLAB. That is the value that we have in MATLAB for your AI for wireless application. You can prepare training data for wireless machine learning and deep learning algorithms, add channel and RF impairments to make the data set larger and more representative, and augment captured signals with synthetic signals. You can preprocess training data, and you can train neural networks using our tools, going through design and also optimizing parameters. You can test using over-the-air signals with SDRs and RF instruments, and you can deploy to any device and import and export networks from other frameworks. So you're not forced to choose. You can work in the MATLAB environment and deploy elsewhere.

With that I want to thank you very much for attending this webinar. After a short pause, my friends will open the floor for the questions that you may have. Thank you so much.