20201121-Big Data-07

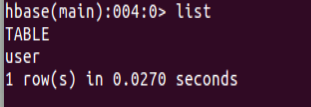

1. List information about all HBase tables, such as their names.

2. Print all records of a specified table to the terminal.

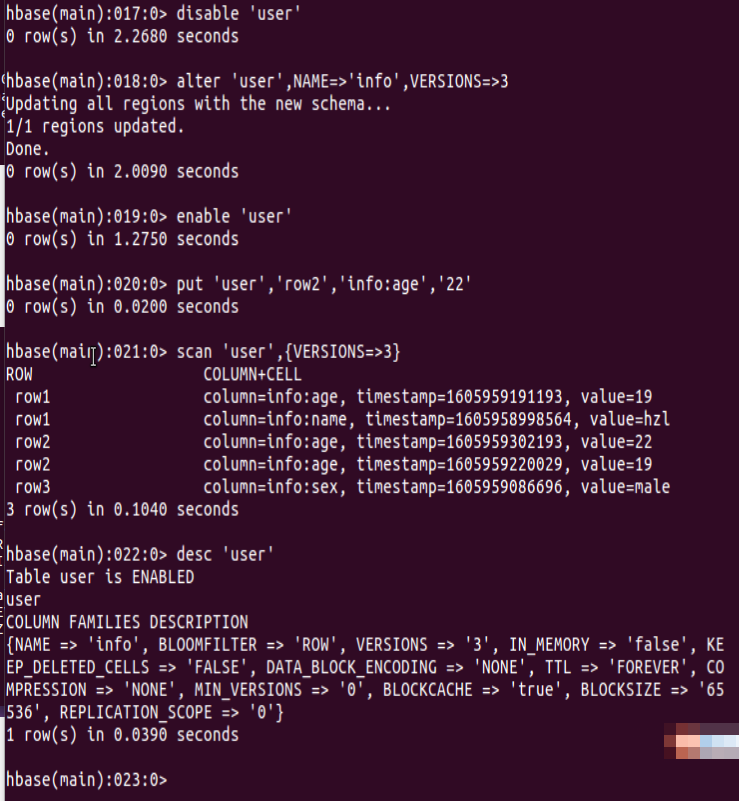

3. Add or delete a specified column family or column in an existing table.

4. Delete all records from a specified table.

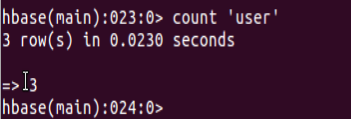

5. Count the rows of a table.

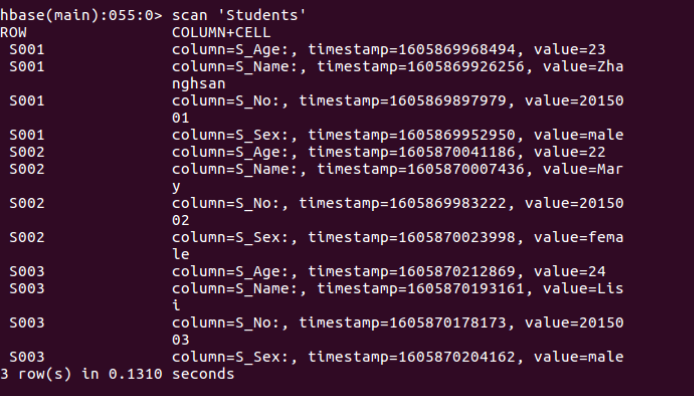

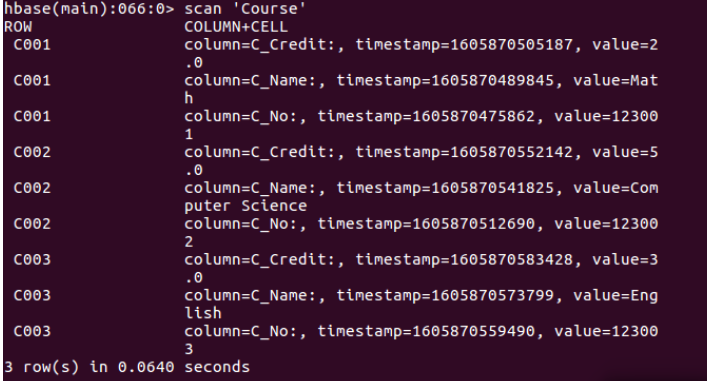

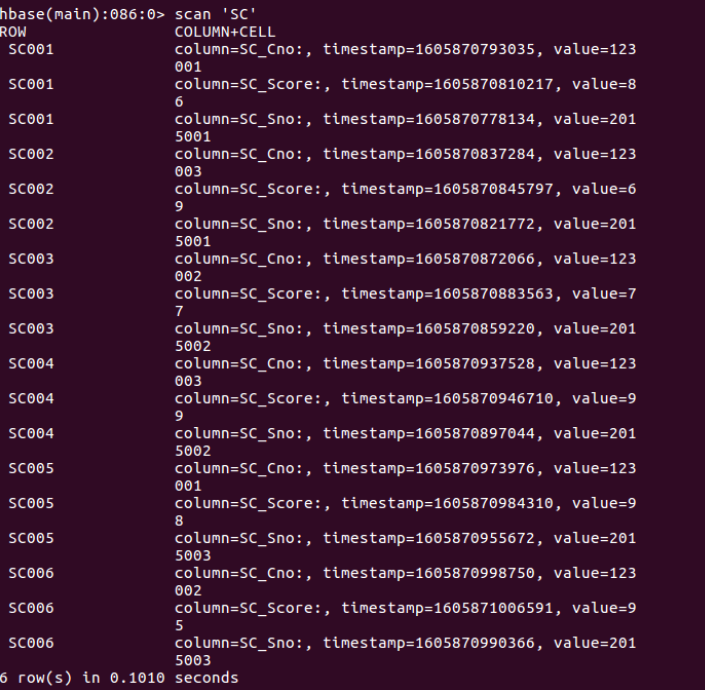
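To make the semantics behind items 2 to 5 concrete without a running cluster, here is a hypothetical, in-memory sketch; it is not the HBase client API. It models one table as a sorted map from row key to `family:qualifier` → value, under the assumptions that rows are returned in row-key order, that dropping a column family also drops its data, and that truncating keeps the schema. The class `MiniTable` and all its method names are invented for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical in-memory model of one HBase table (NOT the HBase client API).
class MiniTable {
    // Declared column families, i.e. the table schema
    final Set<String> families = new LinkedHashSet<>();
    // row key -> ("family:qualifier" -> value); TreeMap keeps keys sorted as strings
    final TreeMap<String, TreeMap<String, String>> rows = new TreeMap<>();

    MiniTable(String... fams) {
        for (String f : fams) {
            families.add(f);
        }
    }

    // Writes to an undeclared family are rejected, as HBase also does
    void put(String row, String family, String qualifier, String value) {
        if (!families.contains(family)) {
            throw new IllegalArgumentException("no such column family: " + family);
        }
        rows.computeIfAbsent(row, k -> new TreeMap<>()).put(family + ":" + qualifier, value);
    }

    // Item 2: every cell as "row family:qualifier value", in row-key order
    List<String> scanAll() {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, TreeMap<String, String>> r : rows.entrySet()) {
            for (Map.Entry<String, String> c : r.getValue().entrySet()) {
                out.add(r.getKey() + " " + c.getKey() + " " + c.getValue());
            }
        }
        return out;
    }

    // Item 3: adding a family changes only the schema
    void addFamily(String family) {
        families.add(family);
    }

    // Item 3: dropping a family also removes every cell stored in it
    void deleteFamily(String family) {
        families.remove(family);
        String prefix = family + ":";
        for (TreeMap<String, String> cols : rows.values()) {
            cols.keySet().removeIf(k -> k.startsWith(prefix));
        }
        rows.values().removeIf(Map::isEmpty); // a row with no cells no longer exists
    }

    // Item 4: remove all rows but keep the column families
    void truncate() {
        rows.clear();
    }

    // Item 5: number of distinct row keys
    int count() {
        return rows.size();
    }
}
```

In this model a row key "10" sorts before "2", mirroring HBase's byte-wise (not numeric) row-key ordering, which is why numeric row keys are often zero-padded.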

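Given the tables and data of a relational database (textbook p. 92, top), convert them into tables suitable for HBase storage and insert the data.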
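One common conversion, sketched here under assumptions rather than as the textbook's prescribed schema: each relational table becomes one HBase table, its primary key becomes the row key, and the remaining attributes become qualifiers inside a single column family. The family name `info` matches the `info:S_name` / `info:S_age` columns used by the programs in this report; the helper class `RowToCells`, the `_` separator for composite keys, and the sample values are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper: turn one relational row into HBase-style cells.
class RowToCells {
    // pk: primary-key value, used as the HBase row key
    // family: the single column family holding all other attributes
    // attrs: attribute name -> value; null (SQL NULL) values are skipped,
    //        because HBase only stores cells that actually exist
    static List<String> toCells(String pk, String family, Map<String, String> attrs) {
        List<String> cells = new ArrayList<>();
        for (Map.Entry<String, String> e : attrs.entrySet()) {
            if (e.getValue() != null) {
                cells.add(pk + " " + family + ":" + e.getKey() + " " + e.getValue());
            }
        }
        return cells;
    }

    // A composite primary key (e.g. student number + course number) can be
    // concatenated into a single row key; "_" is an arbitrary separator here
    static String compositeKey(String... parts) {
        return String.join("_", parts);
    }
}
```

Skipping NULLs is the main design difference from the relational layout: an HBase row simply has no cell for a missing attribute.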

Write programs that implement the following functions (textbook p. 92, bottom):

(1) createTable(String tableName, String[] fields): create a table whose column families are given by fields.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;

public class F_createTable {

    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    public static void main(String[] args) throws IOException {
        // Create table "Score" with column families "sname" and "course"
        createTable("Score", new String[]{"sname", "course"});
    }

    // Open the connection
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Create a table with the given column families.
    // If a table with the same name exists, it is dropped and then re-created.
    public static void createTable(String myTableName, String[] colFamily) throws IOException {
        init();
        TableName tableName = TableName.valueOf(myTableName);
        if (admin.tableExists(tableName)) {
            System.out.println("table exists, it will be deleted");
            // HBase only deletes disabled tables
            admin.disableTable(tableName);
            admin.deleteTable(tableName);
        }
        HTableDescriptor hTableDescriptor = new HTableDescriptor(tableName);
        for (String str : colFamily) {
            hTableDescriptor.addFamily(new HColumnDescriptor(str));
        }
        admin.createTable(hTableDescriptor);
        System.out.println("create table success");
        close();
    }

    // Stand-alone helper: drop a table if it exists
    public static void deleteTable(String tableName) throws IOException {
        init();
        TableName tn = TableName.valueOf(tableName);
        if (admin.tableExists(tn)) {
            admin.disableTable(tn);
            admin.deleteTable(tn);
        }
        close();
    }
}
```
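deleteTable disables the table before deleting it because HBase refuses to delete an enabled table (the real client reports this with a TableNotDisabledException). The tiny state model below is a hypothetical illustration of that lifecycle rule and of a drop-then-create sequence; `MiniAdmin` and `recreate` are invented names, not part of HBase, and `IllegalStateException` stands in for HBase's own exception types.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of table lifecycle rules (NOT the HBase Admin API).
class MiniAdmin {
    // table name -> enabled flag
    private final Map<String, Boolean> tables = new HashMap<>();

    boolean tableExists(String name) {
        return tables.containsKey(name);
    }

    void createTable(String name) {
        if (tables.containsKey(name)) {
            throw new IllegalStateException("table exists: " + name);
        }
        tables.put(name, true); // newly created tables start enabled
    }

    void disableTable(String name) {
        tables.replace(name, false);
    }

    void deleteTable(String name) {
        // Deleting an enabled table is refused
        if (Boolean.TRUE.equals(tables.get(name))) {
            throw new IllegalStateException("table is enabled: " + name);
        }
        tables.remove(name);
    }

    // Drop an existing table (disable, then delete), then create it afresh
    void recreate(String name) {
        if (tableExists(name)) {
            disableTable(name);
            deleteTable(name);
        }
        createTable(name);
    }
}
```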

(2) addRecord(String tableName, String row, String[] fields, String[] values): write values into the given columns of a row.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class G_addRecord {

    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    public static void main(String[] args) throws IOException {
        // Set info:S_age of row 2015003 in table "student" to 99, then print the table
        addRecord("student", "2015003", new String[]{"info:S_age"}, new String[]{"99"});
        B_getAllData show = new B_getAllData();
        show.getTableData("student");
    }

    // Open the connection
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Write values[i] into column fields[i] ("family:qualifier" or just "family") of row rowKey
    public static void addRecord(String tableName, String rowKey, String[] fields, String[] values) throws IOException {
        if (fields.length != values.length) {
            throw new IllegalArgumentException("fields and values must have the same length");
        }
        init();
        Table table = connection.getTable(TableName.valueOf(tableName));
        // Collect all columns of the row into one Put and write it in a single call
        Put put = new Put(Bytes.toBytes(rowKey));
        for (int i = 0; i < fields.length; i++) {
            String[] parts = fields[i].split(":", 2);
            String qualifier = parts.length > 1 ? parts[1] : ""; // bare family -> empty qualifier
            put.addColumn(Bytes.toBytes(parts[0]), Bytes.toBytes(qualifier), Bytes.toBytes(values[i]));
        }
        table.put(put);
        table.close();
        close();
    }
}
```
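The signature in (2) passes fields and values as parallel arrays, so each fields[i] must be paired with values[i] and split into a column family and a qualifier. The stand-alone sketch below isolates just that parsing step so it can be checked without a cluster; the class name `FieldParser` and the choice of an empty qualifier for a bare family name are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: pair "family:qualifier" fields with their values.
class FieldParser {
    // Returns one {family, qualifier, value} triple per field
    static List<String[]> pair(String[] fields, String[] values) {
        if (fields.length != values.length) {
            throw new IllegalArgumentException("fields and values must have the same length");
        }
        List<String[]> cells = new ArrayList<>();
        for (int i = 0; i < fields.length; i++) {
            // Limit 2: only the first colon separates family from qualifier
            String[] parts = fields[i].split(":", 2);
            String qualifier = parts.length > 1 ? parts[1] : ""; // bare family -> empty qualifier
            cells.add(new String[]{parts[0], qualifier, values[i]});
        }
        return cells;
    }
}
```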

(3) scanColumn(String tableName, String column): print the data of one column family or one column of a table.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class H_scanColumn {

    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    public static void main(String[] args) throws IOException {
        // Print every cell of column family SC_score in table SC
        scanColumn("SC", "SC_score");
    }

    // Open the connection
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // col is either "family" (all qualifiers) or "family:qualifier" (one column)
    public static void scanColumn(String tableName, String col) throws IOException {
        init();
        String[] parts = col.split(":", 2);
        System.out.println(tableName);
        Table table = connection.getTable(TableName.valueOf(tableName));
        // Restrict the scan so only the requested family/column is returned
        Scan scan = new Scan();
        if (parts.length == 1) {
            scan.addFamily(Bytes.toBytes(parts[0]));
        } else {
            scan.addColumn(Bytes.toBytes(parts[0]), Bytes.toBytes(parts[1]));
        }
        ResultScanner scanner = table.getScanner(scan);
        for (Result res : scanner) {
            for (Cell cell : res.rawCells()) {
                // Print: row key, family, qualifier, value
                System.out.println(Bytes.toString(CellUtil.cloneRow(cell)) + " "
                        + Bytes.toString(CellUtil.cloneFamily(cell)) + " "
                        + Bytes.toString(CellUtil.cloneQualifier(cell)) + " "
                        + Bytes.toString(CellUtil.cloneValue(cell)));
            }
        }
        // Release resources
        scanner.close();
        table.close();
        close();
    }
}
```
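The column argument of scanColumn names either a whole column family or a single family:qualifier column. The predicate below is a hypothetical, stand-alone restatement of that matching rule so it can be checked in isolation; `ColumnMatcher` is an invented name. HBase can also apply the same restriction on the server side through `Scan.addFamily` and `Scan.addColumn`.

```java
// Hypothetical restatement of scanColumn's matching rule.
class ColumnMatcher {
    // spec is either "family" or "family:qualifier"
    static boolean matches(String spec, String family, String qualifier) {
        String[] parts = spec.split(":", 2);
        if (!parts[0].equals(family)) {
            return false; // different column family
        }
        // A bare family matches every qualifier; otherwise the qualifier must match
        return parts.length == 1 || parts[1].equals(qualifier);
    }
}
```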

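(4) modifyData(String tableName, String row, String column): modify the value of one cell. The implementation below also takes the new value as a fourth argument.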

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class I_modifyData {

    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    public static void main(String[] args) throws IOException {
        // Change info:S_name of row 2015003 in table "student", then print the table
        modifyData("student", "2015003", "info:S_name", "Sunxiaochuan");
        B_getAllData show = new B_getAllData();
        show.getTableData("student");
    }

    // Open the connection
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Overwrite one cell; col is "family:qualifier" or just "family" (empty qualifier).
    // A Put on an existing cell stores a newer version, which subsequent reads return.
    public static void modifyData(String tableName, String rowKey, String col, String val) throws IOException {
        init();
        Table table = connection.getTable(TableName.valueOf(tableName));
        String[] parts = col.split(":", 2);
        String qualifier = parts.length > 1 ? parts[1] : "";
        Put put = new Put(Bytes.toBytes(rowKey));
        put.addColumn(Bytes.toBytes(parts[0]), Bytes.toBytes(qualifier), Bytes.toBytes(val));
        table.put(put);
        table.close();
        close();
    }
}
```
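modifyData needs no dedicated "update" call: in HBase, a Put to an existing cell writes a new version with a newer timestamp, and a normal read returns the newest version. The hypothetical model below shows one cell as a timestamp-ordered map; `VersionedCell`, the explicit timestamps, and the `maxVersions` limit are assumptions for illustration (in HBase the number of retained versions is configured per column family, and excess versions are pruned lazily rather than immediately as here).

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of one HBase cell holding several timestamped versions.
class VersionedCell {
    private final TreeMap<Long, String> versions = new TreeMap<>(); // timestamp -> value
    private final int maxVersions;

    VersionedCell(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    // A "modification" is just another write with a newer timestamp
    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > maxVersions) {
            versions.pollFirstEntry(); // this model drops the oldest version immediately
        }
    }

    // A plain read returns the value with the highest timestamp, or null if empty
    String get() {
        Map.Entry<Long, String> latest = versions.lastEntry();
        return latest == null ? null : latest.getValue();
    }

    int versionCount() {
        return versions.size();
    }
}
```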

(5) deleteRow(String tableName, String row): delete a row from a table.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class J_deleteRow {

    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    public static void main(String[] args) throws IOException {
        // Print the table, delete row 2015003, then print it again
        B_getAllData show = new B_getAllData();
        show.getTableData("student");
        deleteRow("student", "2015003");
        show.getTableData("student");
    }

    // Open the connection
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Delete every cell (in all column families) of the given row
    public static void deleteRow(String tableName, String rowKey) throws IOException {
        init();
        Table table = connection.getTable(TableName.valueOf(tableName));
        table.delete(new Delete(Bytes.toBytes(rowKey)));
        table.close();
        close();
    }
}
```