Analyzing the system_call Interrupt Handling Process

This article picks up from getpid() in the previous post.

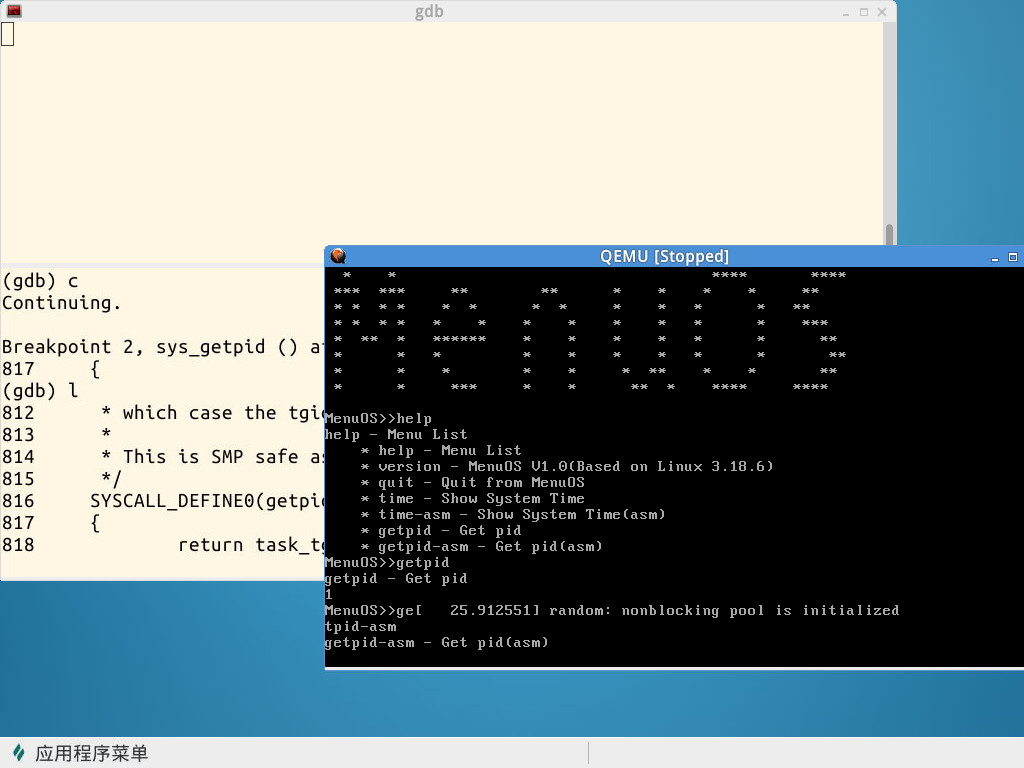

We add a getpid() command to the MenuOS built earlier, set a breakpoint at getpid, and then look at how this system call works in detail.

I. Experiment Steps

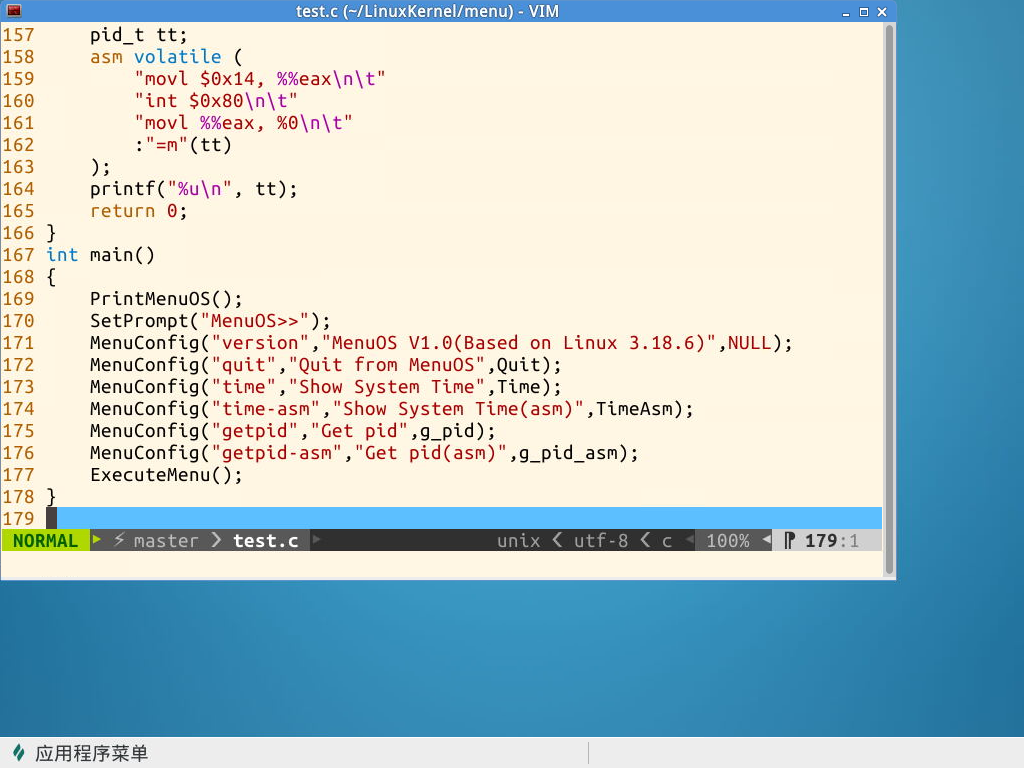

First, modify test.c in MenuOS by adding two functions, g_pid() and g_pid_asm(). test.c is the main source file of the MenuOS program.

Then add two lines to the main function:

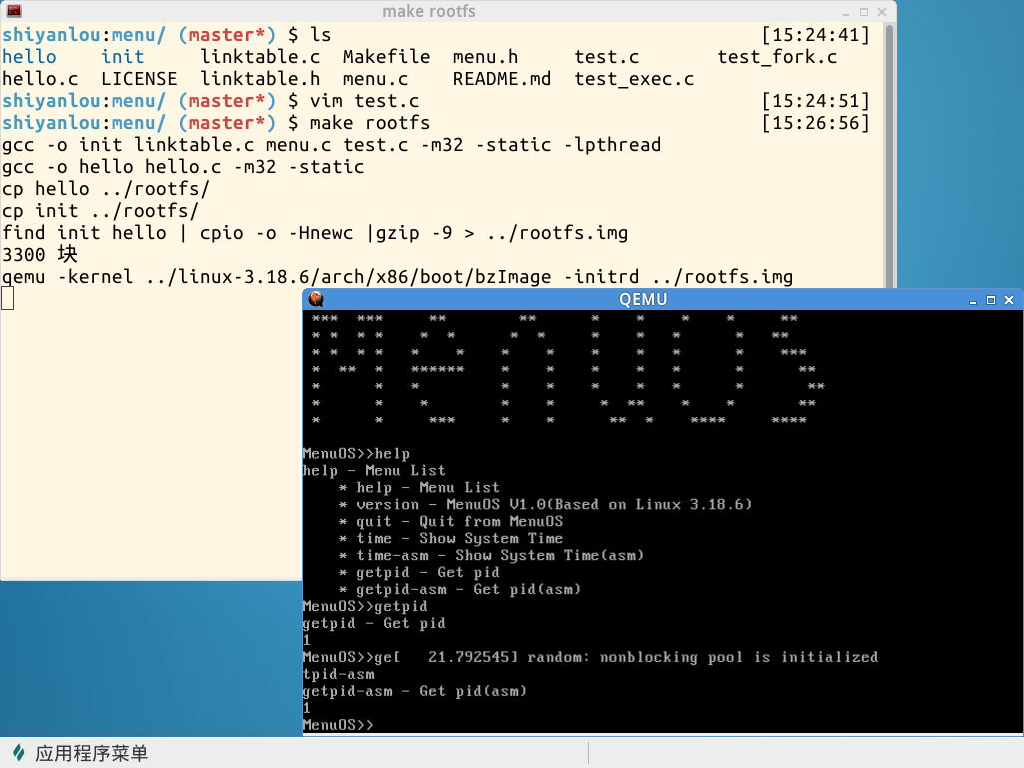

After the changes, run make rootfs to build and boot the system. The result is shown below:

Entering getpid at the prompt prints the current pid.

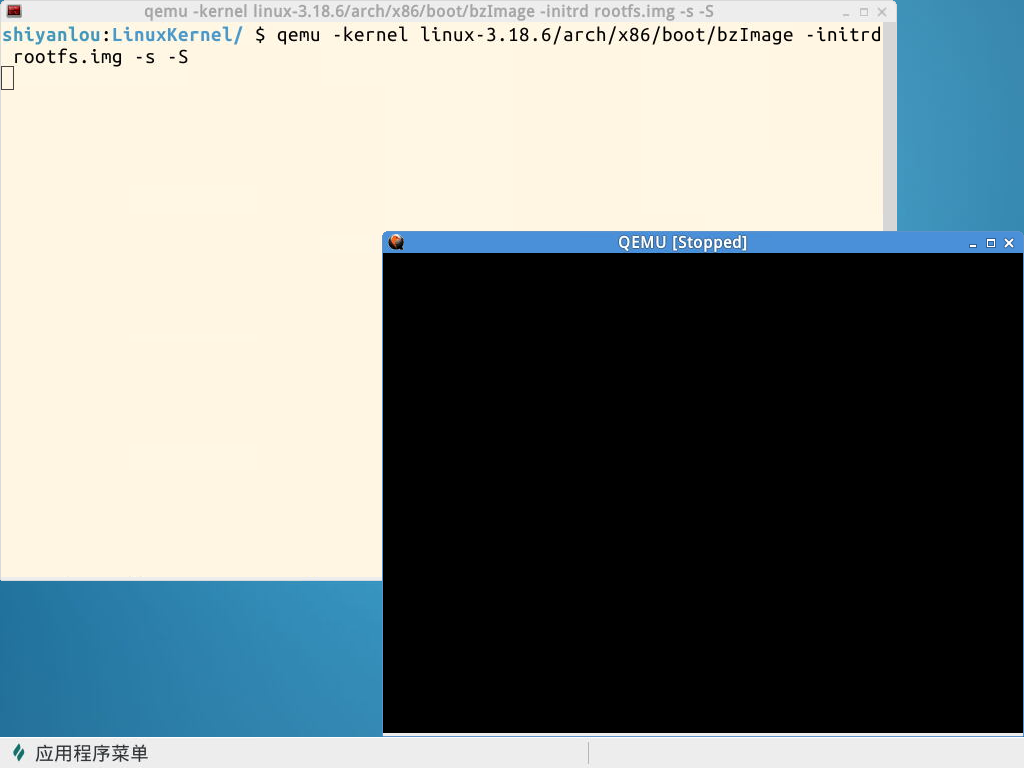

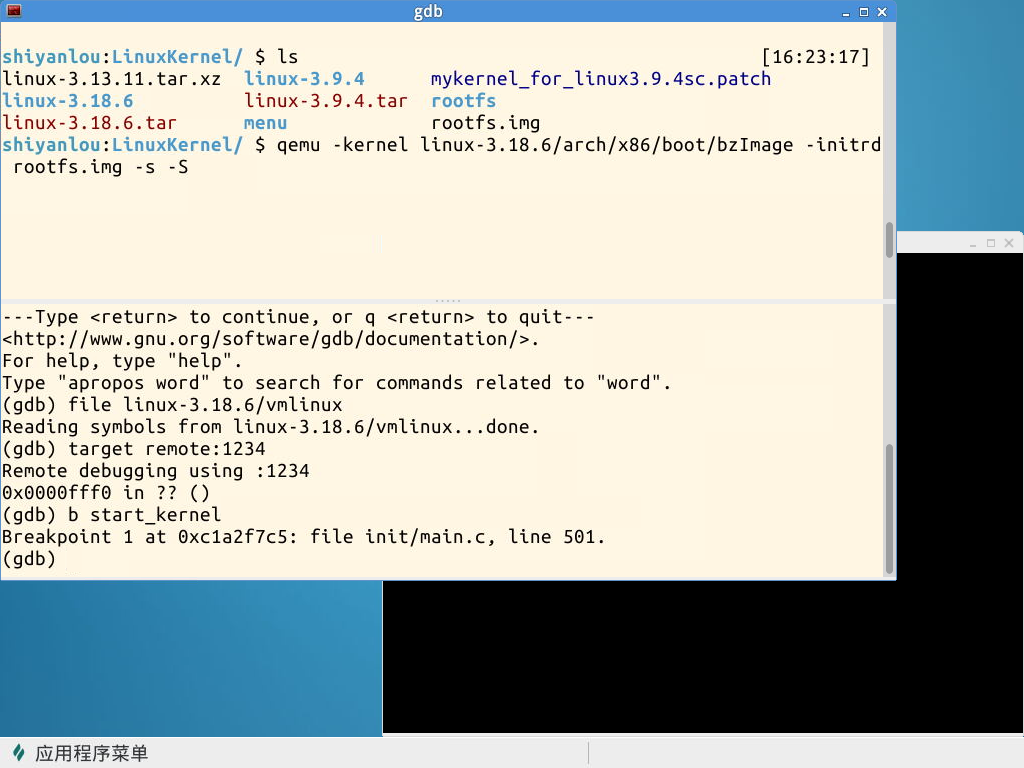

Next, we set a breakpoint on sys_getpid.

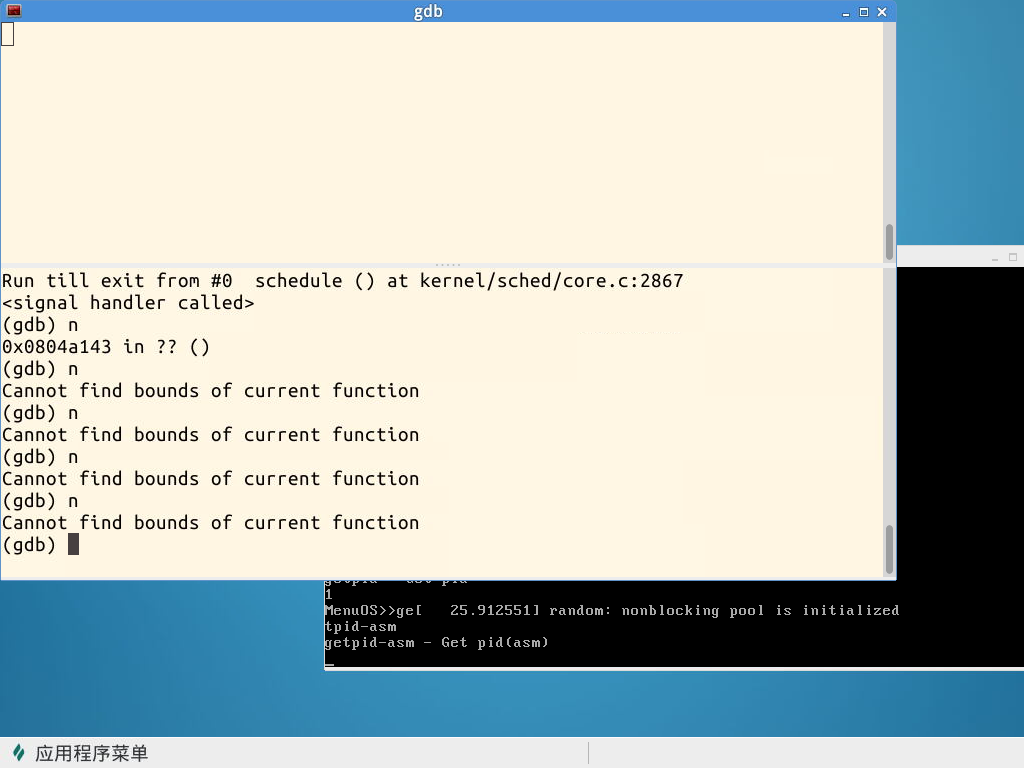

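In the end we found that we could not keep stepping through the assembly part of the code. system_call() is not an ordinary function, and gdb did not stop there in our session, so the rest has to be analyzed by reading the source.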

II. How the Assembly Code Behind system_call() Works

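In this process, the library function triggers the interrupt and supplies the system call number. The kernel then uses the interrupt descriptor for that vector to find the corresponding interrupt handler.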

Next we come across ENTRY(system_call). The ENTRY macro it relies on is defined in /linux-3.18.6/include/linux/linkage.h.

The contents of that header are:

```c
#ifndef _LINUX_LINKAGE_H
#define _LINUX_LINKAGE_H

#include <linux/compiler.h>
#include <linux/stringify.h>
#include <linux/export.h>
#include <asm/linkage.h>

/* Some toolchains use other characters (e.g. '`') to mark new line in macro */
#ifndef ASM_NL
#define ASM_NL ;
#endif

#ifdef __cplusplus
#define CPP_ASMLINKAGE extern "C"
#else
#define CPP_ASMLINKAGE
#endif

#ifndef asmlinkage
#define asmlinkage CPP_ASMLINKAGE
#endif

#ifndef cond_syscall
#define cond_syscall(x) asm( \
	".weak " VMLINUX_SYMBOL_STR(x) "\n\t" \
	".set " VMLINUX_SYMBOL_STR(x) "," \
	VMLINUX_SYMBOL_STR(sys_ni_syscall))
#endif

#ifndef SYSCALL_ALIAS
#define SYSCALL_ALIAS(alias, name) asm( \
	".globl " VMLINUX_SYMBOL_STR(alias) "\n\t" \
	".set " VMLINUX_SYMBOL_STR(alias) "," \
	VMLINUX_SYMBOL_STR(name))
#endif

#define __page_aligned_data __section(.data..page_aligned) __aligned(PAGE_SIZE)
#define __page_aligned_bss __section(.bss..page_aligned) __aligned(PAGE_SIZE)

/*
 * For assembly routines.
 *
 * Note when using these that you must specify the appropriate
 * alignment directives yourself
 */
#define __PAGE_ALIGNED_DATA .section ".data..page_aligned", "aw"
#define __PAGE_ALIGNED_BSS .section ".bss..page_aligned", "aw"

/*
 * This is used by architectures to keep arguments on the stack
 * untouched by the compiler by keeping them live until the end.
 * The argument stack may be owned by the assembly-language
 * caller, not the callee, and gcc doesn't always understand
 * that.
 *
 * We have the return value, and a maximum of six arguments.
 *
 * This should always be followed by a "return ret" for the
 * protection to work (ie no more work that the compiler might
 * end up needing stack temporaries for).
 */
/* Assembly files may be compiled with -traditional .. */
#ifndef __ASSEMBLY__
#ifndef asmlinkage_protect
# define asmlinkage_protect(n, ret, args...) do { } while (0)
#endif
#endif

#ifndef __ALIGN
#define __ALIGN .align 4,0x90
#define __ALIGN_STR ".align 4,0x90"
#endif

#ifdef __ASSEMBLY__

#ifndef LINKER_SCRIPT
#define ALIGN __ALIGN
#define ALIGN_STR __ALIGN_STR

#ifndef ENTRY
#define ENTRY(name) \
	.globl name ASM_NL \
	ALIGN ASM_NL \
	name:
#endif
#endif /* LINKER_SCRIPT */

#ifndef WEAK
#define WEAK(name) \
	.weak name ASM_NL \
	name:
#endif

#ifndef END
#define END(name) \
	.size name, .-name
#endif

/* If symbol 'name' is treated as a subroutine (gets called, and returns)
 * then please use ENDPROC to mark 'name' as STT_FUNC for the benefit of
 * static analysis tools such as stack depth analyzer.
 */
#ifndef ENDPROC
#define ENDPROC(name) \
	.type name, @function ASM_NL \
	END(name)
#endif

#endif

#endif
```

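Below is the kernel entry code from /linux-3.18.6/arch/frv/kernel/entry.S. It contains the system_call routine, which looks up the handler in the system call table sys_call_table. Note that this file belongs to the FR-V architecture port; the x86 counterpart, which is where ENTRY(system_call) appears, lives in arch/x86/kernel/entry_32.S.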

```asm
/* entry.S: FR-V entry
 *
 * Copyright (C) 2003 Red Hat, Inc. All Rights Reserved.
 * Written by David Howells (dhowells@redhat.com)
 *
 * This program is free software; you can redistribute it and/or
 * modify it under the terms of the GNU General Public License
 * as published by the Free Software Foundation; either version
 * 2 of the License, or (at your option) any later version.
 *
 *
 * Entry to the kernel is "interesting":
 *  (1) There are no stack pointers, not even for the kernel
 *  (2) General Registers should not be clobbered
 *  (3) There are no kernel-only data registers
 *  (4) Since all addressing modes are wrt to a General Register, no global
 *      variables can be reached
 *
 * We deal with this by declaring that we shall kill GR28 on entering the
 * kernel from userspace
 *
 * However, since break interrupts can interrupt the CPU even when PSR.ET==0,
 * they can't rely on GR28 to be anything useful, and so need to clobber a
 * separate register (GR31). Break interrupts are managed in break.S
 *
 * GR29 _is_ saved, and holds the current task pointer globally
 *
 */

#include <linux/linkage.h>
#include <asm/thread_info.h>
#include <asm/setup.h>
#include <asm/segment.h>
#include <asm/ptrace.h>
#include <asm/errno.h>
#include <asm/cache.h>
#include <asm/spr-regs.h>

#define nr_syscalls ((syscall_table_size)/4)

	.section .text..entry
	.balign 4

.macro LEDS val
#	sethi.p %hi(0xe1200004),gr30
#	setlo %lo(0xe1200004),gr30
#	setlos #~\val,gr31
#	st gr31,@(gr30,gr0)
#	sethi.p %hi(0xffc00100),gr30
#	setlo %lo(0xffc00100),gr30
#	sth gr0,@(gr30,gr0)
#	membar
.endm

.macro LEDS32
#	not gr31,gr31
#	sethi.p %hi(0xe1200004),gr30
#	setlo %lo(0xe1200004),gr30
#	st.p gr31,@(gr30,gr0)
#	srli gr31,#16,gr31
#	sethi.p %hi(0xffc00100),gr30
#	setlo %lo(0xffc00100),gr30
#	sth gr31,@(gr30,gr0)
#	membar
.endm
```

```asm
###############################################################################
#
# entry point for External interrupts received whilst executing userspace code
#
###############################################################################
	.globl __entry_uspace_external_interrupt
	.type __entry_uspace_external_interrupt,@function
__entry_uspace_external_interrupt:
	LEDS 0x6200
	sethi.p %hi(__kernel_frame0_ptr),gr28
	setlo %lo(__kernel_frame0_ptr),gr28
	ldi @(gr28,#0),gr28

	# handle h/w single-step through exceptions
	sti gr0,@(gr28,#REG__STATUS)

	.globl __entry_uspace_external_interrupt_reentry
__entry_uspace_external_interrupt_reentry:
	LEDS 0x6201

	setlos #REG__END,gr30
	dcpl gr28,gr30,#0

	# finish building the exception frame
	sti sp, @(gr28,#REG_SP)
	stdi gr2, @(gr28,#REG_GR(2))
	stdi gr4, @(gr28,#REG_GR(4))
	stdi gr6, @(gr28,#REG_GR(6))
	stdi gr8, @(gr28,#REG_GR(8))
	stdi gr10,@(gr28,#REG_GR(10))
	stdi gr12,@(gr28,#REG_GR(12))
	stdi gr14,@(gr28,#REG_GR(14))
	stdi gr16,@(gr28,#REG_GR(16))
	stdi gr18,@(gr28,#REG_GR(18))
	stdi gr20,@(gr28,#REG_GR(20))
	stdi gr22,@(gr28,#REG_GR(22))
	stdi gr24,@(gr28,#REG_GR(24))
	stdi gr26,@(gr28,#REG_GR(26))
	sti gr0, @(gr28,#REG_GR(28))
	sti gr29,@(gr28,#REG_GR(29))
	stdi.p gr30,@(gr28,#REG_GR(30))

	# set up the kernel stack pointer
	ori gr28,0,sp

	movsg tbr ,gr20
	movsg psr ,gr22
	movsg pcsr,gr21
	movsg isr ,gr23
	movsg ccr ,gr24
	movsg cccr,gr25
	movsg lr ,gr26
	movsg lcr ,gr27

	setlos.p #-1,gr4
	andi gr22,#PSR_PS,gr5 /* try to rebuild original PSR value */
	andi.p gr22,#~(PSR_PS|PSR_S),gr6
	slli gr5,#1,gr5
	or gr6,gr5,gr5
	andi gr5,#~PSR_ET,gr5

	sti gr20,@(gr28,#REG_TBR)
	sti gr21,@(gr28,#REG_PC)
	sti gr5 ,@(gr28,#REG_PSR)
	sti gr23,@(gr28,#REG_ISR)
	stdi gr24,@(gr28,#REG_CCR)
	stdi gr26,@(gr28,#REG_LR)
	sti gr4 ,@(gr28,#REG_SYSCALLNO)

	movsg iacc0h,gr4
	movsg iacc0l,gr5
	stdi gr4,@(gr28,#REG_IACC0)

	movsg gner0,gr4
	movsg gner1,gr5
	stdi.p gr4,@(gr28,#REG_GNER0)

	# interrupts start off fully disabled in the interrupt handler
	subcc gr0,gr0,gr0,icc2 /* set Z and clear C */

	# set up kernel global registers
	sethi.p %hi(__kernel_current_task),gr5
	setlo %lo(__kernel_current_task),gr5
	sethi.p %hi(_gp),gr16
	setlo %lo(_gp),gr16
	ldi @(gr5,#0),gr29
	ldi.p @(gr29,#4),gr15 ; __current_thread_info = current->thread_info

	# make sure we (the kernel) get div-zero and misalignment exceptions
	setlos #ISR_EDE|ISR_DTT_DIVBYZERO|ISR_EMAM_EXCEPTION,gr5
	movgs gr5,isr

	# switch to the kernel trap table
	sethi.p %hi(__entry_kerneltrap_table),gr6
	setlo %lo(__entry_kerneltrap_table),gr6
	movgs gr6,tbr

	# set the return address
	sethi.p %hi(__entry_return_from_user_interrupt),gr4
	setlo %lo(__entry_return_from_user_interrupt),gr4
	movgs gr4,lr

	# raise the minimum interrupt priority to 15 (NMI only) and enable exceptions
	movsg psr,gr4

	ori gr4,#PSR_PIL_14,gr4
	movgs gr4,psr
	ori gr4,#PSR_PIL_14|PSR_ET,gr4
	movgs gr4,psr

	LEDS 0x6202
	bra do_IRQ

	.size __entry_uspace_external_interrupt,.-__entry_uspace_external_interrupt
```

```asm
###############################################################################
#
# entry point for External interrupts received whilst executing kernel code
# - on arriving here, the following registers should already be set up:
#	GR15 - current thread_info struct pointer
#	GR16 - kernel GP-REL pointer
#	GR29 - current task struct pointer
#	TBR - kernel trap vector table
#	ISR - kernel's preferred integer controls
#
###############################################################################
	.globl __entry_kernel_external_interrupt
	.type __entry_kernel_external_interrupt,@function
__entry_kernel_external_interrupt:
	LEDS 0x6210
//	sub sp,gr15,gr31
//	LEDS32

	# set up the stack pointer
	or.p sp,gr0,gr30
	subi sp,#REG__END,sp
	sti gr30,@(sp,#REG_SP)

	# handle h/w single-step through exceptions
	sti gr0,@(sp,#REG__STATUS)

	.globl __entry_kernel_external_interrupt_reentry
__entry_kernel_external_interrupt_reentry:
	LEDS 0x6211

	# set up the exception frame
	setlos #REG__END,gr30
	dcpl sp,gr30,#0

	sti.p gr28,@(sp,#REG_GR(28))
	ori sp,0,gr28

	# finish building the exception frame
	stdi gr2,@(gr28,#REG_GR(2))
	stdi gr4,@(gr28,#REG_GR(4))
	stdi gr6,@(gr28,#REG_GR(6))
	stdi gr8,@(gr28,#REG_GR(8))
	stdi gr10,@(gr28,#REG_GR(10))
	stdi gr12,@(gr28,#REG_GR(12))
	stdi gr14,@(gr28,#REG_GR(14))
	stdi gr16,@(gr28,#REG_GR(16))
	stdi gr18,@(gr28,#REG_GR(18))
	stdi gr20,@(gr28,#REG_GR(20))
	stdi gr22,@(gr28,#REG_GR(22))
	stdi gr24,@(gr28,#REG_GR(24))
	stdi gr26,@(gr28,#REG_GR(26))
	sti gr29,@(gr28,#REG_GR(29))
	stdi.p gr30,@(gr28,#REG_GR(30))

	# note virtual interrupts will be fully enabled upon return
	subicc gr0,#1,gr0,icc2 /* clear Z, set C */

	movsg tbr ,gr20
	movsg psr ,gr22
	movsg pcsr,gr21
	movsg isr ,gr23
	movsg ccr ,gr24
	movsg cccr,gr25
	movsg lr ,gr26
	movsg lcr ,gr27

	setlos.p #-1,gr4
	andi gr22,#PSR_PS,gr5 /* try to rebuild original PSR value */
	andi.p gr22,#~(PSR_PS|PSR_S),gr6
	slli gr5,#1,gr5
	or gr6,gr5,gr5
	andi.p gr5,#~PSR_ET,gr5

	# set CCCR.CC3 to Undefined to abort atomic-modify completion inside the kernel
	# - for an explanation of how it works, see: Documentation/frv/atomic-ops.txt
	andi gr25,#~0xc0,gr25

	sti gr20,@(gr28,#REG_TBR)
	sti gr21,@(gr28,#REG_PC)
	sti gr5 ,@(gr28,#REG_PSR)
	sti gr23,@(gr28,#REG_ISR)
	stdi gr24,@(gr28,#REG_CCR)
	stdi gr26,@(gr28,#REG_LR)
	sti gr4 ,@(gr28,#REG_SYSCALLNO)

	movsg iacc0h,gr4
	movsg iacc0l,gr5
	stdi gr4,@(gr28,#REG_IACC0)

	movsg gner0,gr4
	movsg gner1,gr5
	stdi.p gr4,@(gr28,#REG_GNER0)

	# interrupts start off fully disabled in the interrupt handler
	subcc gr0,gr0,gr0,icc2 /* set Z and clear C */

	# set the return address
	sethi.p %hi(__entry_return_from_kernel_interrupt),gr4
	setlo %lo(__entry_return_from_kernel_interrupt),gr4
	movgs gr4,lr

	# clear power-saving mode flags
	movsg hsr0,gr4
	andi gr4,#~HSR0_PDM,gr4
	movgs gr4,hsr0

	# raise the minimum interrupt priority to 15 (NMI only) and enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_PIL_14,gr4
	movgs gr4,psr
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr

	LEDS 0x6212
	bra do_IRQ

	.size __entry_kernel_external_interrupt,.-__entry_kernel_external_interrupt
```

```asm
###############################################################################
#
# deal with interrupts that were actually virtually disabled
# - we need to really disable them, flag the fact and return immediately
# - if you change this, you must alter break.S also
#
###############################################################################
	.balign L1_CACHE_BYTES
	.globl __entry_kernel_external_interrupt_virtually_disabled
	.type __entry_kernel_external_interrupt_virtually_disabled,@function
__entry_kernel_external_interrupt_virtually_disabled:
	movsg psr,gr30
	andi gr30,#~PSR_PIL,gr30
	ori gr30,#PSR_PIL_14,gr30 ; debugging interrupts only
	movgs gr30,psr
	subcc gr0,gr0,gr0,icc2 ; leave Z set, clear C
	rett #0

	.size __entry_kernel_external_interrupt_virtually_disabled,.-__entry_kernel_external_interrupt_virtually_disabled

###############################################################################
#
# deal with re-enablement of interrupts that were pending when virtually re-enabled
# - set ICC2.C, re-enable the real interrupts and return
# - we can clear ICC2.Z because we shouldn't be here if it's not 0 [due to TIHI]
# - if you change this, you must alter break.S also
#
###############################################################################
	.balign L1_CACHE_BYTES
	.globl __entry_kernel_external_interrupt_virtual_reenable
	.type __entry_kernel_external_interrupt_virtual_reenable,@function
__entry_kernel_external_interrupt_virtual_reenable:
	movsg psr,gr30
	andi gr30,#~PSR_PIL,gr30 ; re-enable interrupts
	movgs gr30,psr
	subicc gr0,#1,gr0,icc2 ; clear Z, set C
	rett #0

	.size __entry_kernel_external_interrupt_virtual_reenable,.-__entry_kernel_external_interrupt_virtual_reenable
```

```asm
###############################################################################
#
# entry point for Software and Progam interrupts generated whilst executing userspace code
#
###############################################################################
	.globl __entry_uspace_softprog_interrupt
	.type __entry_uspace_softprog_interrupt,@function
	.globl __entry_uspace_handle_mmu_fault
__entry_uspace_softprog_interrupt:
	LEDS 0x6000
#ifdef CONFIG_MMU
	movsg ear0,gr28
__entry_uspace_handle_mmu_fault:
	movgs gr28,scr2
#endif
	sethi.p %hi(__kernel_frame0_ptr),gr28
	setlo %lo(__kernel_frame0_ptr),gr28
	ldi @(gr28,#0),gr28

	# handle h/w single-step through exceptions
	sti gr0,@(gr28,#REG__STATUS)

	.globl __entry_uspace_softprog_interrupt_reentry
__entry_uspace_softprog_interrupt_reentry:
	LEDS 0x6001

	setlos #REG__END,gr30
	dcpl gr28,gr30,#0

	# set up the kernel stack pointer
	sti.p sp,@(gr28,#REG_SP)
	ori gr28,0,sp
	sti gr0,@(gr28,#REG_GR(28))

	stdi gr20,@(gr28,#REG_GR(20))
	stdi gr22,@(gr28,#REG_GR(22))

	movsg tbr,gr20
	movsg pcsr,gr21
	movsg psr,gr22

	sethi.p %hi(__entry_return_from_user_exception),gr23
	setlo %lo(__entry_return_from_user_exception),gr23

	bra __entry_common

	.size __entry_uspace_softprog_interrupt,.-__entry_uspace_softprog_interrupt

	# single-stepping was disabled on entry to a TLB handler that then faulted
#ifdef CONFIG_MMU
	.globl __entry_uspace_handle_mmu_fault_sstep
__entry_uspace_handle_mmu_fault_sstep:
	movgs gr28,scr2
	sethi.p %hi(__kernel_frame0_ptr),gr28
	setlo %lo(__kernel_frame0_ptr),gr28
	ldi @(gr28,#0),gr28

	# flag single-step re-enablement
	sti gr0,@(gr28,#REG__STATUS)
	bra __entry_uspace_softprog_interrupt_reentry
#endif
```

```asm
###############################################################################
#
# entry point for Software and Progam interrupts generated whilst executing kernel code
#
###############################################################################
	.globl __entry_kernel_softprog_interrupt
	.type __entry_kernel_softprog_interrupt,@function
__entry_kernel_softprog_interrupt:
	LEDS 0x6004

#ifdef CONFIG_MMU
	movsg ear0,gr30
	movgs gr30,scr2
#endif

	.globl __entry_kernel_handle_mmu_fault
__entry_kernel_handle_mmu_fault:
	# set up the stack pointer
	subi sp,#REG__END,sp
	sti sp,@(sp,#REG_SP)
	sti sp,@(sp,#REG_SP-4)
	andi sp,#~7,sp

	# handle h/w single-step through exceptions
	sti gr0,@(sp,#REG__STATUS)

	.globl __entry_kernel_softprog_interrupt_reentry
__entry_kernel_softprog_interrupt_reentry:
	LEDS 0x6005

	setlos #REG__END,gr30
	dcpl sp,gr30,#0

	# set up the exception frame
	sti.p gr28,@(sp,#REG_GR(28))
	ori sp,0,gr28

	stdi gr20,@(gr28,#REG_GR(20))
	stdi gr22,@(gr28,#REG_GR(22))

	ldi @(sp,#REG_SP),gr22 /* reconstruct the old SP */
	addi gr22,#REG__END,gr22
	sti gr22,@(sp,#REG_SP)

	# set CCCR.CC3 to Undefined to abort atomic-modify completion inside the kernel
	# - for an explanation of how it works, see: Documentation/frv/atomic-ops.txt
	movsg cccr,gr20
	andi gr20,#~0xc0,gr20
	movgs gr20,cccr

	movsg tbr,gr20
	movsg pcsr,gr21
	movsg psr,gr22

	sethi.p %hi(__entry_return_from_kernel_exception),gr23
	setlo %lo(__entry_return_from_kernel_exception),gr23
	bra __entry_common

	.size __entry_kernel_softprog_interrupt,.-__entry_kernel_softprog_interrupt

	# single-stepping was disabled on entry to a TLB handler that then faulted
#ifdef CONFIG_MMU
	.globl __entry_kernel_handle_mmu_fault_sstep
__entry_kernel_handle_mmu_fault_sstep:
	# set up the stack pointer
	subi sp,#REG__END,sp
	sti sp,@(sp,#REG_SP)
	sti sp,@(sp,#REG_SP-4)
	andi sp,#~7,sp

	# flag single-step re-enablement
	sethi #REG__STATUS_STEP,gr30
	sti gr30,@(sp,#REG__STATUS)
	bra __entry_kernel_softprog_interrupt_reentry
#endif
```

```asm
###############################################################################
#
# the rest of the kernel entry point code
# - on arriving here, the following registers should be set up:
#	GR1 - kernel stack pointer
#	GR7 - syscall number (trap 0 only)
#	GR8-13 - syscall args (trap 0 only)
#	GR20 - saved TBR
#	GR21 - saved PC
#	GR22 - saved PSR
#	GR23 - return handler address
#	GR28 - exception frame on stack
#	SCR2 - saved EAR0 where applicable (clobbered by ICI & ICEF insns on FR451)
#	PSR - PSR.S 1, PSR.ET 0
#
###############################################################################
	.globl __entry_common
	.type __entry_common,@function
__entry_common:
	LEDS 0x6008

	# finish building the exception frame
	stdi gr2,@(gr28,#REG_GR(2))
	stdi gr4,@(gr28,#REG_GR(4))
	stdi gr6,@(gr28,#REG_GR(6))
	stdi gr8,@(gr28,#REG_GR(8))
	stdi gr10,@(gr28,#REG_GR(10))
	stdi gr12,@(gr28,#REG_GR(12))
	stdi gr14,@(gr28,#REG_GR(14))
	stdi gr16,@(gr28,#REG_GR(16))
	stdi gr18,@(gr28,#REG_GR(18))
	stdi gr24,@(gr28,#REG_GR(24))
	stdi gr26,@(gr28,#REG_GR(26))
	sti gr29,@(gr28,#REG_GR(29))
	stdi gr30,@(gr28,#REG_GR(30))

	movsg lcr ,gr27
	movsg lr ,gr26
	movgs gr23,lr
	movsg cccr,gr25
	movsg ccr ,gr24
	movsg isr ,gr23

	setlos.p #-1,gr4
	andi gr22,#PSR_PS,gr5 /* try to rebuild original PSR value */
	andi.p gr22,#~(PSR_PS|PSR_S),gr6
	slli gr5,#1,gr5
	or gr6,gr5,gr5
	andi gr5,#~PSR_ET,gr5

	sti gr20,@(gr28,#REG_TBR)
	sti gr21,@(gr28,#REG_PC)
	sti gr5 ,@(gr28,#REG_PSR)
	sti gr23,@(gr28,#REG_ISR)
	stdi gr24,@(gr28,#REG_CCR)
	stdi gr26,@(gr28,#REG_LR)
	sti gr4 ,@(gr28,#REG_SYSCALLNO)

	movsg iacc0h,gr4
	movsg iacc0l,gr5
	stdi gr4,@(gr28,#REG_IACC0)

	movsg gner0,gr4
	movsg gner1,gr5
	stdi.p gr4,@(gr28,#REG_GNER0)

	# set up virtual interrupt disablement
	subicc gr0,#1,gr0,icc2 /* clear Z flag, set C flag */

	# set up kernel global registers
	sethi.p %hi(__kernel_current_task),gr5
	setlo %lo(__kernel_current_task),gr5
	sethi.p %hi(_gp),gr16
	setlo %lo(_gp),gr16
	ldi @(gr5,#0),gr29
	ldi @(gr29,#4),gr15 ; __current_thread_info = current->thread_info

	# switch to the kernel trap table
	sethi.p %hi(__entry_kerneltrap_table),gr6
	setlo %lo(__entry_kerneltrap_table),gr6
	movgs gr6,tbr

	# make sure we (the kernel) get div-zero and misalignment exceptions
	setlos #ISR_EDE|ISR_DTT_DIVBYZERO|ISR_EMAM_EXCEPTION,gr5
	movgs gr5,isr

	# clear power-saving mode flags
	movsg hsr0,gr4
	andi gr4,#~HSR0_PDM,gr4
	movgs gr4,hsr0

	# multiplex again using old TBR as a guide
	setlos.p #TBR_TT,gr3
	sethi %hi(__entry_vector_table),gr6
	and.p gr20,gr3,gr5
	setlo %lo(__entry_vector_table),gr6
	srli gr5,#2,gr5
	ld @(gr5,gr6),gr5

	LEDS 0x6009
	jmpl @(gr5,gr0)


	.size __entry_common,.-__entry_common
```

```asm
###############################################################################
#
# handle instruction MMU fault
#
###############################################################################
#ifdef CONFIG_MMU
	.globl __entry_insn_mmu_fault
__entry_insn_mmu_fault:
	LEDS 0x6010
	setlos #0,gr8
	movsg esr0,gr9
	movsg scr2,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr

	sethi.p %hi(do_page_fault),gr5
	setlo %lo(do_page_fault),gr5
	jmpl @(gr5,gr0) ; call do_page_fault(0,esr0,ear0)
#endif


###############################################################################
#
# handle instruction access error
#
###############################################################################
	.globl __entry_insn_access_error
__entry_insn_access_error:
	LEDS 0x6011
	sethi.p %hi(insn_access_error),gr5
	setlo %lo(insn_access_error),gr5
	movsg esfr1,gr8
	movsg epcr0,gr9
	movsg esr0,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call insn_access_error(esfr1,epcr0,esr0)

###############################################################################
#
# handle various instructions of dubious legality
#
###############################################################################
	.globl __entry_unsupported_trap
	.globl __entry_illegal_instruction
	.globl __entry_privileged_instruction
	.globl __entry_debug_exception
__entry_unsupported_trap:
	subi gr21,#4,gr21
	sti gr21,@(gr28,#REG_PC)
__entry_illegal_instruction:
__entry_privileged_instruction:
__entry_debug_exception:
	LEDS 0x6012
	sethi.p %hi(illegal_instruction),gr5
	setlo %lo(illegal_instruction),gr5
	movsg esfr1,gr8
	movsg epcr0,gr9
	movsg esr0,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call ill_insn(esfr1,epcr0,esr0)

###############################################################################
#
# handle atomic operation emulation for userspace
#
###############################################################################
	.globl __entry_atomic_op
__entry_atomic_op:
	LEDS 0x6012
	sethi.p %hi(atomic_operation),gr5
	setlo %lo(atomic_operation),gr5
	movsg esfr1,gr8
	movsg epcr0,gr9
	movsg esr0,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call atomic_operation(esfr1,epcr0,esr0)

###############################################################################
#
# handle media exception
#
###############################################################################
	.globl __entry_media_exception
__entry_media_exception:
	LEDS 0x6013
	sethi.p %hi(media_exception),gr5
	setlo %lo(media_exception),gr5
	movsg msr0,gr8
	movsg msr1,gr9

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call media_excep(msr0,msr1)
```

```asm
###############################################################################
#
# handle data MMU fault
# handle data DAT fault (write-protect exception)
#
###############################################################################
#ifdef CONFIG_MMU
	.globl __entry_data_mmu_fault
__entry_data_mmu_fault:
	.globl __entry_data_dat_fault
__entry_data_dat_fault:
	LEDS 0x6014
	setlos #1,gr8
	movsg esr0,gr9
	movsg scr2,gr10 ; saved EAR0

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr

	sethi.p %hi(do_page_fault),gr5
	setlo %lo(do_page_fault),gr5
	jmpl @(gr5,gr0) ; call do_page_fault(1,esr0,ear0)
#endif

###############################################################################
#
# handle data and instruction access exceptions
#
###############################################################################
	.globl __entry_insn_access_exception
	.globl __entry_data_access_exception
__entry_insn_access_exception:
__entry_data_access_exception:
	LEDS 0x6016
	sethi.p %hi(memory_access_exception),gr5
	setlo %lo(memory_access_exception),gr5
	movsg esr0,gr8
	movsg scr2,gr9 ; saved EAR0
	movsg epcr0,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call memory_access_error(esr0,ear0,epcr0)

###############################################################################
#
# handle data access error
#
###############################################################################
	.globl __entry_data_access_error
__entry_data_access_error:
	LEDS 0x6016
	sethi.p %hi(data_access_error),gr5
	setlo %lo(data_access_error),gr5
	movsg esfr1,gr8
	movsg esr15,gr9
	movsg ear15,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call data_access_error(esfr1,esr15,ear15)

###############################################################################
#
# handle data store error
#
###############################################################################
	.globl __entry_data_store_error
__entry_data_store_error:
	LEDS 0x6017
	sethi.p %hi(data_store_error),gr5
	setlo %lo(data_store_error),gr5
	movsg esfr1,gr8
	movsg esr14,gr9

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call data_store_error(esfr1,esr14)

###############################################################################
#
# handle division exception
#
###############################################################################
	.globl __entry_division_exception
__entry_division_exception:
	LEDS 0x6018
	sethi.p %hi(division_exception),gr5
	setlo %lo(division_exception),gr5
	movsg esfr1,gr8
	movsg esr0,gr9
	movsg isr,gr10

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call div_excep(esfr1,esr0,isr)

###############################################################################
#
# handle compound exception
#
###############################################################################
	.globl __entry_compound_exception
__entry_compound_exception:
	LEDS 0x6019
	sethi.p %hi(compound_exception),gr5
	setlo %lo(compound_exception),gr5
	movsg esfr1,gr8
	movsg esr0,gr9
	movsg esr14,gr10
	movsg esr15,gr11
	movsg msr0,gr12
	movsg msr1,gr13

	# now that we've accessed the exception regs, we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	jmpl @(gr5,gr0) ; call comp_excep(esfr1,esr0,esr14,esr15,msr0,msr1)
```

```asm
###############################################################################
#
# handle interrupts and NMIs
#
###############################################################################
	.globl __entry_do_IRQ
__entry_do_IRQ:
	LEDS 0x6020

	# we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	bra do_IRQ

	.globl __entry_do_NMI
__entry_do_NMI:
	LEDS 0x6021

	# we can enable exceptions
	movsg psr,gr4
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr
	bra do_NMI

###############################################################################
#
# the return path for a newly forked child process
# - __switch_to() saved the old current pointer in GR8 for us
#
###############################################################################
	.globl ret_from_fork
ret_from_fork:
	LEDS 0x6100
	call schedule_tail

	# fork & co. return 0 to child
	setlos.p #0,gr8
	bra __syscall_exit

	.globl ret_from_kernel_thread
ret_from_kernel_thread:
	lddi.p @(gr28,#REG_GR(8)),gr20
	call schedule_tail
	calll.p @(gr21,gr0)
	or gr20,gr20,gr8
	bra __syscall_exit
```

```asm
###################################################################################################
#
# Return to user mode is not as complex as all this looks,
# but we want the default path for a system call return to
# go as quickly as possible which is why some of this is
# less clear than it otherwise should be.
#
###################################################################################################
	.balign L1_CACHE_BYTES
	.globl system_call
system_call:
	LEDS 0x6101
	movsg psr,gr4 ; enable exceptions
	ori gr4,#PSR_ET,gr4
	movgs gr4,psr

	sti gr7,@(gr28,#REG_SYSCALLNO)
	sti.p gr8,@(gr28,#REG_ORIG_GR8)

	subicc gr7,#nr_syscalls,gr0,icc0
	bnc icc0,#0,__syscall_badsys

	ldi @(gr15,#TI_FLAGS),gr4
	andicc gr4,#_TIF_SYSCALL_TRACE,gr0,icc0
	bne icc0,#0,__syscall_trace_entry

__syscall_call:
	slli.p gr7,#2,gr7
	sethi %hi(sys_call_table),gr5
	setlo %lo(sys_call_table),gr5
	ld @(gr5,gr7),gr4
	calll @(gr4,gr0)
```

908 ###############################################################################

909 #

910 # return to interrupted process

911 #

912 ###############################################################################

913 __syscall_exit:

914 LEDS 0x6300

915

916 # keep current PSR in GR23

917 movsg psr,gr23

918

919 ldi @(gr28,#REG_PSR),gr22

920

921 sti.p gr8,@(gr28,#REG_GR(8)) ; save return value

922

923 # rebuild saved psr - execve will change it for init/main.c

924 srli gr22,#1,gr5

925 andi.p gr22,#~PSR_PS,gr22

926 andi gr5,#PSR_PS,gr5

927 or gr5,gr22,gr22

928 ori.p gr22,#PSR_S,gr22

929

930 # make sure we don't miss an interrupt setting need_resched or sigpending between

931 # sampling and the RETT

932 ori gr23,#PSR_PIL_14,gr23

933 movgs gr23,psr

934

935 ldi @(gr15,#TI_FLAGS),gr4

936 andicc gr4,#_TIF_ALLWORK_MASK,gr0,icc0

937 bne icc0,#0,__syscall_exit_work

938

939 # restore all registers and return

940 __entry_return_direct:

941 LEDS 0x6301

942

943 andi gr22,#~PSR_ET,gr22

944 movgs gr22,psr

945

946 ldi @(gr28,#REG_ISR),gr23

947 lddi @(gr28,#REG_CCR),gr24

948 lddi @(gr28,#REG_LR) ,gr26

949 ldi @(gr28,#REG_PC) ,gr21

950 ldi @(gr28,#REG_TBR),gr20

951

952 movgs gr20,tbr

953 movgs gr21,pcsr

954 movgs gr23,isr

955 movgs gr24,ccr

956 movgs gr25,cccr

957 movgs gr26,lr

958 movgs gr27,lcr

959

960 lddi @(gr28,#REG_GNER0),gr4

961 movgs gr4,gner0

962 movgs gr5,gner1

963

964 lddi @(gr28,#REG_IACC0),gr4

965 movgs gr4,iacc0h

966 movgs gr5,iacc0l

967

968 lddi @(gr28,#REG_GR(4)) ,gr4

969 lddi @(gr28,#REG_GR(6)) ,gr6

970 lddi @(gr28,#REG_GR(8)) ,gr8

971 lddi @(gr28,#REG_GR(10)),gr10

972 lddi @(gr28,#REG_GR(12)),gr12

973 lddi @(gr28,#REG_GR(14)),gr14

974 lddi @(gr28,#REG_GR(16)),gr16

975 lddi @(gr28,#REG_GR(18)),gr18

976 lddi @(gr28,#REG_GR(20)),gr20

977 lddi @(gr28,#REG_GR(22)),gr22

978 lddi @(gr28,#REG_GR(24)),gr24

979 lddi @(gr28,#REG_GR(26)),gr26

980 ldi @(gr28,#REG_GR(29)),gr29

981 lddi @(gr28,#REG_GR(30)),gr30

982

983 # check to see if a debugging return is required

984 LEDS 0x67f0

985 movsg ccr,gr2

986 ldi @(gr28,#REG__STATUS),gr3

987 andicc gr3,#REG__STATUS_STEP,gr0,icc0

988 bne icc0,#0,__entry_return_singlestep

989 movgs gr2,ccr

990

991 ldi @(gr28,#REG_SP) ,sp

992 lddi @(gr28,#REG_GR(2)) ,gr2

993 ldi @(gr28,#REG_GR(28)),gr28

994

995 LEDS 0x67fe

996 // movsg pcsr,gr31

997 // LEDS32

998

999 #if 0

1000 # store the current frame in the workram on the FR451

1001 movgs gr28,scr2

1002 sethi.p %hi(0xfe800000),gr28

1003 setlo %lo(0xfe800000),gr28

1004

1005 stdi gr2,@(gr28,#REG_GR(2))

1006 stdi gr4,@(gr28,#REG_GR(4))

1007 stdi gr6,@(gr28,#REG_GR(6))

1008 stdi gr8,@(gr28,#REG_GR(8))

1009 stdi gr10,@(gr28,#REG_GR(10))

1010 stdi gr12,@(gr28,#REG_GR(12))

1011 stdi gr14,@(gr28,#REG_GR(14))

1012 stdi gr16,@(gr28,#REG_GR(16))

1013 stdi gr18,@(gr28,#REG_GR(18))

1014 stdi gr24,@(gr28,#REG_GR(24))

1015 stdi gr26,@(gr28,#REG_GR(26))

1016 sti gr29,@(gr28,#REG_GR(29))

1017 stdi gr30,@(gr28,#REG_GR(30))

1018

1019 movsg tbr ,gr30

1020 sti gr30,@(gr28,#REG_TBR)

1021 movsg pcsr,gr30

1022 sti gr30,@(gr28,#REG_PC)

1023 movsg psr ,gr30

1024 sti gr30,@(gr28,#REG_PSR)

1025 movsg isr ,gr30

1026 sti gr30,@(gr28,#REG_ISR)

1027 movsg ccr ,gr30

1028 movsg cccr,gr31

1029 stdi gr30,@(gr28,#REG_CCR)

1030 movsg lr ,gr30

1031 movsg lcr ,gr31

1032 stdi gr30,@(gr28,#REG_LR)

1033 sti gr0 ,@(gr28,#REG_SYSCALLNO)

1034 movsg scr2,gr28

1035 #endif

1036

1037 rett #0

1038

1039 # return via break.S

1040 __entry_return_singlestep:

1041 movgs gr2,ccr

1042 lddi @(gr28,#REG_GR(2)) ,gr2

1043 ldi @(gr28,#REG_SP) ,sp

1044 ldi @(gr28,#REG_GR(28)),gr28

1045 LEDS 0x67ff

1046 break

1047 .globl __entry_return_singlestep_breaks_here

1048 __entry_return_singlestep_breaks_here:

1049 nop

1050

1051

1052 ###############################################################################

1053 #

1054 # return to a process interrupted in kernel space

1055 # - we need to consider preemption if that is enabled

1056 #

1057 ###############################################################################

1058 .balign L1_CACHE_BYTES

1059 __entry_return_from_kernel_exception:

1060 LEDS 0x6302

1061 movsg psr,gr23

1062 ori gr23,#PSR_PIL_14,gr23

1063 movgs gr23,psr

1064 bra __entry_return_direct

1065

1066 .balign L1_CACHE_BYTES

1067 __entry_return_from_kernel_interrupt:

1068 LEDS 0x6303

1069 movsg psr,gr23

1070 ori gr23,#PSR_PIL_14,gr23

1071 movgs gr23,psr

1072

1073 #ifdef CONFIG_PREEMPT

1074 ldi @(gr15,#TI_PRE_COUNT),gr5

1075 subicc gr5,#0,gr0,icc0

1076 beq icc0,#0,__entry_return_direct

1077

1078 subcc gr0,gr0,gr0,icc2 /* set Z and clear C */

1079 call preempt_schedule_irq

1080 #endif

1081 bra __entry_return_direct

1082

1083

1084 ###############################################################################

1085 #

1086 # perform work that needs to be done immediately before resumption

1087 #

1088 ###############################################################################

1089 .globl __entry_return_from_user_exception

1090 .balign L1_CACHE_BYTES

1091 __entry_return_from_user_exception:

1092 LEDS 0x6501

1093

1094 __entry_resume_userspace:

1095 # make sure we don't miss an interrupt setting need_resched or sigpending between

1096 # sampling and the RETT

1097 movsg psr,gr23

1098 ori gr23,#PSR_PIL_14,gr23

1099 movgs gr23,psr

1100

1101 __entry_return_from_user_interrupt:

1102 LEDS 0x6402

1103 ldi @(gr15,#TI_FLAGS),gr4

1104 andicc gr4,#_TIF_WORK_MASK,gr0,icc0

1105 beq icc0,#1,__entry_return_direct

1106

1107 __entry_work_pending:

1108 LEDS 0x6404

1109 andicc gr4,#_TIF_NEED_RESCHED,gr0,icc0

1110 beq icc0,#1,__entry_work_notifysig

1111

1112 __entry_work_resched:

1113 LEDS 0x6408

1114 movsg psr,gr23

1115 andi gr23,#~PSR_PIL,gr23

1116 movgs gr23,psr

1117 call schedule

1118 movsg psr,gr23

1119 ori gr23,#PSR_PIL_14,gr23

1120 movgs gr23,psr

1121

1122 LEDS 0x6401

1123 ldi @(gr15,#TI_FLAGS),gr4

1124 andicc gr4,#_TIF_WORK_MASK,gr0,icc0

1125 beq icc0,#1,__entry_return_direct

1126 andicc gr4,#_TIF_NEED_RESCHED,gr0,icc0

1127 bne icc0,#1,__entry_work_resched

1128

1129 __entry_work_notifysig:

1130 LEDS 0x6410

1131 ori.p gr4,#0,gr8

1132 call do_notify_resume

1133 bra __entry_resume_userspace

1134

1135 # perform syscall entry tracing

1136 __syscall_trace_entry:

1137 LEDS 0x6320

1138 call syscall_trace_entry

1139

1140 lddi.p @(gr28,#REG_GR(8)) ,gr8

1141 ori gr8,#0,gr7 ; syscall_trace_entry() returned new syscallno

1142 lddi @(gr28,#REG_GR(10)),gr10

1143 lddi.p @(gr28,#REG_GR(12)),gr12

1144

1145 subicc gr7,#nr_syscalls,gr0,icc0

1146 bnc icc0,#0,__syscall_badsys

1147 bra __syscall_call

1148

1149 # perform syscall exit tracing

1150 __syscall_exit_work:

1151 LEDS 0x6340

1152 andicc gr22,#PSR_PS,gr0,icc1 ; don't handle on return to kernel mode

1153 andicc.p gr4,#_TIF_SYSCALL_TRACE,gr0,icc0

1154 bne icc1,#0,__entry_return_direct

1155 beq icc0,#1,__entry_work_pending

1156

1157 movsg psr,gr23

1158 andi gr23,#~PSR_PIL,gr23 ; could let syscall_trace_exit() call schedule()

1159 movgs gr23,psr

1160

1161 call syscall_trace_exit

1162 bra __entry_resume_userspace

1163

1164 __syscall_badsys:

1165 LEDS 0x6380

1166 setlos #-ENOSYS,gr8

1167 sti gr8,@(gr28,#REG_GR(8)) ; save return value

1168 bra __entry_resume_userspace

1169

1170

1171 ###############################################################################

1172 #

1173 # syscall vector table

1174 #

1175 ###############################################################################

1176 .section .rodata

1177 ALIGN

1178 .globl sys_call_table

1179 sys_call_table:

1180 .long sys_restart_syscall /* 0 - old "setup()" system call, used for restarting */

1181 .long sys_exit

1182 .long sys_fork

1183 .long sys_read

1184 .long sys_write

1185 .long sys_open /* 5 */

1186 .long sys_close

1187 .long sys_waitpid

1188 .long sys_creat

1189 .long sys_link

1190 .long sys_unlink /* 10 */

1191 .long sys_execve

1192 .long sys_chdir

1193 .long sys_time

1194 .long sys_mknod

1195 .long sys_chmod /* 15 */

1196 .long sys_lchown16

1197 .long sys_ni_syscall /* old break syscall holder */

1198 .long sys_stat

1199 .long sys_lseek

1200 .long sys_getpid /* 20 */

1201 .long sys_mount

1202 .long sys_oldumount

1203 .long sys_setuid16

1204 .long sys_getuid16

1205 .long sys_ni_syscall // sys_stime /* 25 */

1206 .long sys_ptrace

1207 .long sys_alarm

1208 .long sys_fstat

1209 .long sys_pause

1210 .long sys_utime /* 30 */

1211 .long sys_ni_syscall /* old stty syscall holder */

1212 .long sys_ni_syscall /* old gtty syscall holder */

1213 .long sys_access

1214 .long sys_nice

1215 .long sys_ni_syscall /* 35 */ /* old ftime syscall holder */

1216 .long sys_sync

1217 .long sys_kill

1218 .long sys_rename

1219 .long sys_mkdir

1220 .long sys_rmdir /* 40 */

1221 .long sys_dup

1222 .long sys_pipe

1223 .long sys_times

1224 .long sys_ni_syscall /* old prof syscall holder */

1225 .long sys_brk /* 45 */

1226 .long sys_setgid16

1227 .long sys_getgid16

1228 .long sys_ni_syscall // sys_signal

1229 .long sys_geteuid16

1230 .long sys_getegid16 /* 50 */

1231 .long sys_acct

1232 .long sys_umount /* recycled never used phys( */

1233 .long sys_ni_syscall /* old lock syscall holder */

1234 .long sys_ioctl

1235 .long sys_fcntl /* 55 */

1236 .long sys_ni_syscall /* old mpx syscall holder */

1237 .long sys_setpgid

1238 .long sys_ni_syscall /* old ulimit syscall holder */

1239 .long sys_ni_syscall /* old old uname syscall */

1240 .long sys_umask /* 60 */

1241 .long sys_chroot

1242 .long sys_ustat

1243 .long sys_dup2

1244 .long sys_getppid

1245 .long sys_getpgrp /* 65 */

1246 .long sys_setsid

1247 .long sys_sigaction

1248 .long sys_ni_syscall // sys_sgetmask

1249 .long sys_ni_syscall // sys_ssetmask

1250 .long sys_setreuid16 /* 70 */

1251 .long sys_setregid16

1252 .long sys_sigsuspend

1253 .long sys_ni_syscall // sys_sigpending

1254 .long sys_sethostname

1255 .long sys_setrlimit /* 75 */

1256 .long sys_ni_syscall // sys_old_getrlimit

1257 .long sys_getrusage

1258 .long sys_gettimeofday

1259 .long sys_settimeofday

1260 .long sys_getgroups16 /* 80 */

1261 .long sys_setgroups16

1262 .long sys_ni_syscall /* old_select slot */

1263 .long sys_symlink

1264 .long sys_lstat

1265 .long sys_readlink /* 85 */

1266 .long sys_uselib

1267 .long sys_swapon

1268 .long sys_reboot

1269 .long sys_ni_syscall // old_readdir

1270 .long sys_ni_syscall /* 90 */ /* old_mmap slot */

1271 .long sys_munmap

1272 .long sys_truncate

1273 .long sys_ftruncate

1274 .long sys_fchmod

1275 .long sys_fchown16 /* 95 */

1276 .long sys_getpriority

1277 .long sys_setpriority

1278 .long sys_ni_syscall /* old profil syscall holder */

1279 .long sys_statfs

1280 .long sys_fstatfs /* 100 */

1281 .long sys_ni_syscall /* ioperm for i386 */

1282 .long sys_socketcall

1283 .long sys_syslog

1284 .long sys_setitimer

1285 .long sys_getitimer /* 105 */

1286 .long sys_newstat

1287 .long sys_newlstat

1288 .long sys_newfstat

1289 .long sys_ni_syscall /* obsolete olduname( syscall */

1290 .long sys_ni_syscall /* iopl for i386 */ /* 110 */

1291 .long sys_vhangup

1292 .long sys_ni_syscall /* obsolete idle( syscall */

1293 .long sys_ni_syscall /* vm86old for i386 */

1294 .long sys_wait4

1295 .long sys_swapoff /* 115 */

1296 .long sys_sysinfo

1297 .long sys_ipc

1298 .long sys_fsync

1299 .long sys_sigreturn

1300 .long sys_clone /* 120 */

1301 .long sys_setdomainname

1302 .long sys_newuname

1303 .long sys_ni_syscall /* old "cacheflush" */

1304 .long sys_adjtimex

1305 .long sys_mprotect /* 125 */

1306 .long sys_sigprocmask

1307 .long sys_ni_syscall /* old "create_module" */

1308 .long sys_init_module

1309 .long sys_delete_module

1310 .long sys_ni_syscall /* old "get_kernel_syms" */

1311 .long sys_quotactl

1312 .long sys_getpgid

1313 .long sys_fchdir

1314 .long sys_bdflush

1315 .long sys_sysfs /* 135 */

1316 .long sys_personality

1317 .long sys_ni_syscall /* for afs_syscall */

1318 .long sys_setfsuid16

1319 .long sys_setfsgid16

1320 .long sys_llseek /* 140 */

1321 .long sys_getdents

1322 .long sys_select

1323 .long sys_flock

1324 .long sys_msync

1325 .long sys_readv /* 145 */

1326 .long sys_writev

1327 .long sys_getsid

1328 .long sys_fdatasync

1329 .long sys_sysctl

1330 .long sys_mlock /* 150 */

1331 .long sys_munlock

1332 .long sys_mlockall

1333 .long sys_munlockall

1334 .long sys_sched_setparam

1335 .long sys_sched_getparam /* 155 */

1336 .long sys_sched_setscheduler

1337 .long sys_sched_getscheduler

1338 .long sys_sched_yield

1339 .long sys_sched_get_priority_max

1340 .long sys_sched_get_priority_min /* 160 */

1341 .long sys_sched_rr_get_interval

1342 .long sys_nanosleep

1343 .long sys_mremap

1344 .long sys_setresuid16

1345 .long sys_getresuid16 /* 165 */

1346 .long sys_ni_syscall /* for vm86 */

1347 .long sys_ni_syscall /* Old sys_query_module */

1348 .long sys_poll

1349 .long sys_ni_syscall /* Old nfsservctl */

1350 .long sys_setresgid16 /* 170 */

1351 .long sys_getresgid16

1352 .long sys_prctl

1353 .long sys_rt_sigreturn

1354 .long sys_rt_sigaction

1355 .long sys_rt_sigprocmask /* 175 */

1356 .long sys_rt_sigpending

1357 .long sys_rt_sigtimedwait

1358 .long sys_rt_sigqueueinfo

1359 .long sys_rt_sigsuspend

1360 .long sys_pread64 /* 180 */

1361 .long sys_pwrite64

1362 .long sys_chown16

1363 .long sys_getcwd

1364 .long sys_capget

1365 .long sys_capset /* 185 */

1366 .long sys_sigaltstack

1367 .long sys_sendfile

1368 .long sys_ni_syscall /* streams1 */

1369 .long sys_ni_syscall /* streams2 */

1370 .long sys_vfork /* 190 */

1371 .long sys_getrlimit

1372 .long sys_mmap2

1373 .long sys_truncate64

1374 .long sys_ftruncate64

1375 .long sys_stat64 /* 195 */

1376 .long sys_lstat64

1377 .long sys_fstat64

1378 .long sys_lchown

1379 .long sys_getuid

1380 .long sys_getgid /* 200 */

1381 .long sys_geteuid

1382 .long sys_getegid

1383 .long sys_setreuid

1384 .long sys_setregid

1385 .long sys_getgroups /* 205 */

1386 .long sys_setgroups

1387 .long sys_fchown

1388 .long sys_setresuid

1389 .long sys_getresuid

1390 .long sys_setresgid /* 210 */

1391 .long sys_getresgid

1392 .long sys_chown

1393 .long sys_setuid

1394 .long sys_setgid

1395 .long sys_setfsuid /* 215 */

1396 .long sys_setfsgid

1397 .long sys_pivot_root

1398 .long sys_mincore

1399 .long sys_madvise

1400 .long sys_getdents64 /* 220 */

1401 .long sys_fcntl64

1402 .long sys_ni_syscall /* reserved for TUX */

1403 .long sys_ni_syscall /* Reserved for Security */

1404 .long sys_gettid

1405 .long sys_readahead /* 225 */

1406 .long sys_setxattr

1407 .long sys_lsetxattr

1408 .long sys_fsetxattr

1409 .long sys_getxattr

1410 .long sys_lgetxattr /* 230 */

1411 .long sys_fgetxattr

1412 .long sys_listxattr

1413 .long sys_llistxattr

1414 .long sys_flistxattr

1415 .long sys_removexattr /* 235 */

1416 .long sys_lremovexattr

1417 .long sys_fremovexattr

1418 .long sys_tkill

1419 .long sys_sendfile64

1420 .long sys_futex /* 240 */

1421 .long sys_sched_setaffinity

1422 .long sys_sched_getaffinity

1423 .long sys_ni_syscall //sys_set_thread_area

1424 .long sys_ni_syscall //sys_get_thread_area

1425 .long sys_io_setup /* 245 */

1426 .long sys_io_destroy

1427 .long sys_io_getevents

1428 .long sys_io_submit

1429 .long sys_io_cancel

1430 .long sys_fadvise64 /* 250 */

1431 .long sys_ni_syscall

1432 .long sys_exit_group

1433 .long sys_lookup_dcookie

1434 .long sys_epoll_create

1435 .long sys_epoll_ctl /* 255 */

1436 .long sys_epoll_wait

1437 .long sys_remap_file_pages

1438 .long sys_set_tid_address

1439 .long sys_timer_create

1440 .long sys_timer_settime /* 260 */

1441 .long sys_timer_gettime

1442 .long sys_timer_getoverrun

1443 .long sys_timer_delete

1444 .long sys_clock_settime

1445 .long sys_clock_gettime /* 265 */

1446 .long sys_clock_getres

1447 .long sys_clock_nanosleep

1448 .long sys_statfs64

1449 .long sys_fstatfs64

1450 .long sys_tgkill /* 270 */

1451 .long sys_utimes

1452 .long sys_fadvise64_64

1453 .long sys_ni_syscall /* sys_vserver */

1454 .long sys_mbind

1455 .long sys_get_mempolicy

1456 .long sys_set_mempolicy

1457 .long sys_mq_open

1458 .long sys_mq_unlink

1459 .long sys_mq_timedsend

1460 .long sys_mq_timedreceive /* 280 */

1461 .long sys_mq_notify

1462 .long sys_mq_getsetattr

1463 .long sys_ni_syscall /* reserved for kexec */

1464 .long sys_waitid

1465 .long sys_ni_syscall /* 285 */ /* available */

1466 .long sys_add_key

1467 .long sys_request_key

1468 .long sys_keyctl

1469 .long sys_ioprio_set

1470 .long sys_ioprio_get /* 290 */

1471 .long sys_inotify_init

1472 .long sys_inotify_add_watch

1473 .long sys_inotify_rm_watch

1474 .long sys_migrate_pages

1475 .long sys_openat /* 295 */

1476 .long sys_mkdirat

1477 .long sys_mknodat

1478 .long sys_fchownat

1479 .long sys_futimesat

1480 .long sys_fstatat64 /* 300 */

1481 .long sys_unlinkat

1482 .long sys_renameat

1483 .long sys_linkat

1484 .long sys_symlinkat

1485 .long sys_readlinkat /* 305 */

1486 .long sys_fchmodat

1487 .long sys_faccessat

1488 .long sys_pselect6

1489 .long sys_ppoll

1490 .long sys_unshare /* 310 */

1491 .long sys_set_robust_list

1492 .long sys_get_robust_list

1493 .long sys_splice

1494 .long sys_sync_file_range

1495 .long sys_tee /* 315 */

1496 .long sys_vmsplice

1497 .long sys_move_pages

1498 .long sys_getcpu

1499 .long sys_epoll_pwait

1500 .long sys_utimensat /* 320 */

1501 .long sys_signalfd

1502 .long sys_timerfd_create

1503 .long sys_eventfd

1504 .long sys_fallocate

1505 .long sys_timerfd_settime /* 325 */

1506 .long sys_timerfd_gettime

1507 .long sys_signalfd4

1508 .long sys_eventfd2

1509 .long sys_epoll_create1

1510 .long sys_dup3 /* 330 */

1511 .long sys_pipe2

1512 .long sys_inotify_init1

1513 .long sys_preadv

1514 .long sys_pwritev

1515 .long sys_rt_tgsigqueueinfo /* 335 */

1516 .long sys_perf_event_open

1517 .long sys_setns

1518

1519 syscall_table_size = (. - sys_call_table)

III. Summary

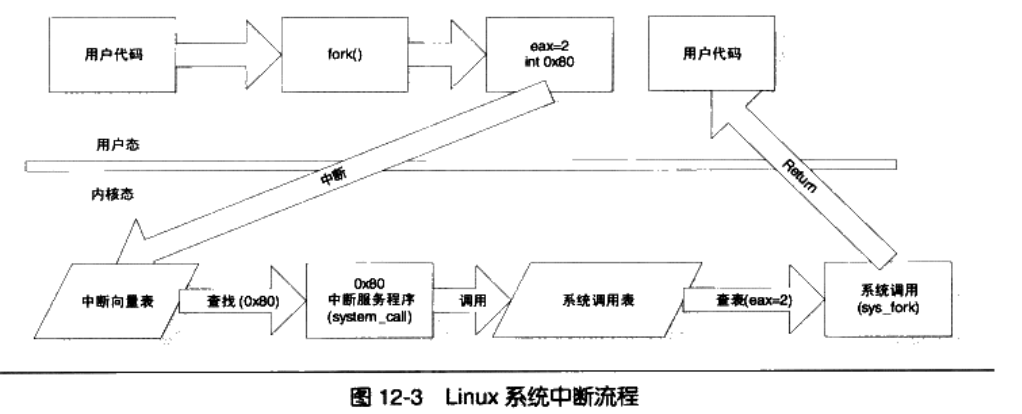

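Taken as a whole, a system call moves from user mode into kernel mode through int 0x80. The kernel first saves the interrupted context and then runs the system call handler: system_call() uses the system call number as an index into the system call table sys_call_table to find the specific service routine. After the routine returns and before iret executes, the kernel checks whether any work became pending in the meantime, such as a reschedule request or a pending signal raised by a new interrupt. If nothing is pending, it restores the saved context and returns to user mode, which completes the system call.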

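Note that a system call enters the kernel through INT 0x80, jumps to system_call(), and then runs the corresponding service routine. Because the kernel is acting on behalf of the user process the whole time, this path runs in process context, not interrupt context.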

李若森

Original work; please credit the source when reposting.

《Linux内核分析》 (Linux Kernel Analysis) MOOC course: http://mooc.study.163.com/course/USTC-1000029000
