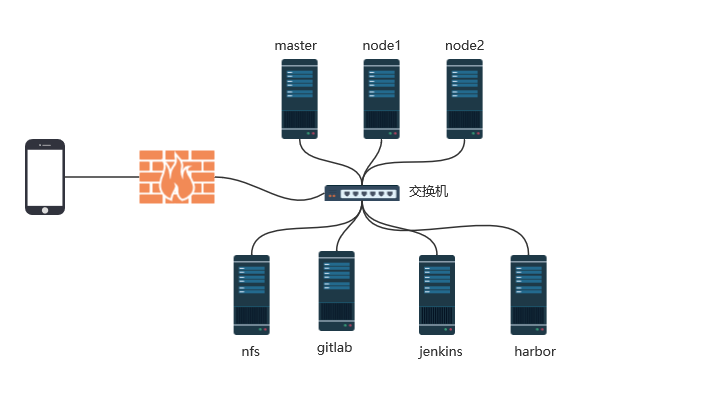

Web cluster project based on Kubernetes (k8s)

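Prepare 5 Linux machines.

Each machine runs CentOS 7.9 with 2 CPU cores and 4 GB of RAM.

1.1. Prepare the environment:

Give every server a hostname and a static IP address first, so that a later address change cannot break the whole cluster (do this on every machine).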

master 192.168.138.200

node1 192.168.138.201

node2 192.168.138.202

gitlab 192.168.138.24

nfs 192.168.138.130

jenkins (installed with docker) 192.168.138.200:8088

harbor (installed with docker-compose) 192.168.138.200:8823

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# su - root

Switch to a static IP address:

# cd /etc/sysconfig/network-scripts

# vi ifcfg-ens33

    BOOTPROTO=none
    DEFROUTE=yes
    NAME=ens33
    DEVICE=ens33
    ONBOOT=yes
    IPADDR=192.168.18.13*
    PREFIX=24
    GATEWAY=192.168.18.2
    DNS1=114.114.114.114

Restart the network service:

# service network restart
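The interface file can also be generated instead of edited by hand. The following is a minimal sketch, assuming the interface is `ens33` as above; the helper name `render_ifcfg` and the example address 192.168.18.139 (the master address used later in this guide) are illustrative only. It prints the file to standard output and changes nothing on the host.

```shell
#!/bin/bash
# render_ifcfg IP GATEWAY [DNS]
# Hypothetical helper: prints the body of an ifcfg-ens33 file with a static address.
render_ifcfg() {
    local ip="$1" gateway="$2" dns="${3:-114.114.114.114}"
    cat <<EOF
BOOTPROTO=none
DEFROUTE=yes
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=${ip}
PREFIX=24
GATEWAY=${gateway}
DNS1=${dns}
EOF
}

# Example: print the file for the master. Redirect the output to
# /etc/sysconfig/network-scripts/ifcfg-ens33 once the values are right.
render_ifcfg 192.168.18.139 192.168.18.2
```

Generating the file per machine keeps the settings identical everywhere except for the address.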

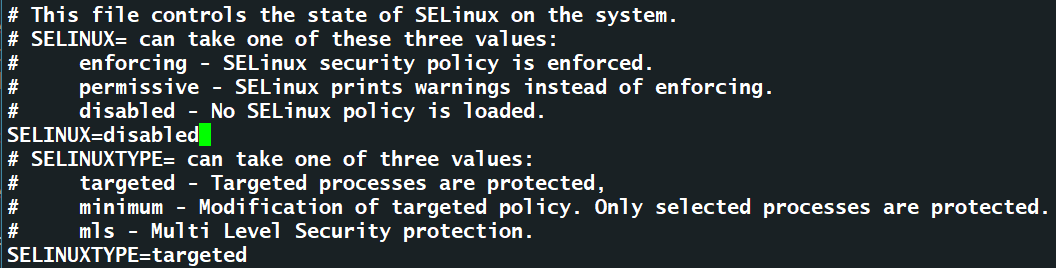

Disable SELinux and firewalld

# service firewalld stop

    Redirecting to /bin/systemctl stop firewalld.service

# systemctl disable firewalld

    Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
    Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service

Set SELINUX=disabled in the SELinux configuration file (the change takes effect after a reboot):

# vi /etc/selinux/config
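The same change can be made without an editor, which helps when it has to be repeated on every machine. This is a sketch only: `disable_selinux_in` is a hypothetical helper name, and the block just defines the function. The commented lines show how it might be used on a real host.

```shell
#!/bin/bash
# Hypothetical helper: set SELINUX=disabled in the given config file.
# The pattern "^SELINUX=" does not touch the SELINUXTYPE= line.
disable_selinux_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On a real host (as root):
#   setenforce 0                              # permissive until the next reboot
#   disable_selinux_in /etc/selinux/config    # disabled after the next reboot
```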

1.2. Install and start Docker on every machine and enable it at boot

Install Docker by following the official guide: https://docs.docker.com/engine/install/centos/

# systemctl restart docker

# systemctl enable docker

# ps aux|grep docker

    root   2190  1.4  1.5 1159376 59744 ?      Ssl  16:22  0:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
    root   2387  0.0  0.0  112824   984 pts/0  S+   16:22  0:00 grep --color=auto docker

1.3. Configure Docker to use systemd as the cgroup driver (every machine)

    # cat <<EOF > /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

Restart docker:

# systemctl restart docker

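1.4. Turn off swap (every machine). By default the kubelet refuses to start while swap is enabled, and swapping pod memory to disk would hurt performance.

# swapoff -a    # turn swap off until the next reboot

# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab    # comment out the swap entry so it stays off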
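The `sed` command above adds another `#` each time it is run. A slightly more defensive variant is sketched below; the helper names `comment_out_swap` and `active_swap_count` are made up for this example, and the optional file arguments exist only so the functions can be tried on a copy instead of the real `/etc/fstab` and `/proc/swaps`.

```shell
#!/bin/bash
# Comment out swap entries in an fstab-style file, skipping lines that are
# already commented so the command can be repeated safely.
comment_out_swap() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Count active swap devices. /proc/swaps has one header line followed by
# one line per device, so anything beyond the header is active swap.
active_swap_count() {
    awk 'NR > 1' "${1:-/proc/swaps}" | wc -l
}

# On a real host (as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
#   active_swap_count        # prints 0 once swap is off
```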

1.5. Run the following on every host to add the cluster names to /etc/hosts

    # cat >> /etc/hosts << EOF
    192.168.18.139 master
    192.168.18.138 node1
    192.168.18.137 node2
    192.168.18.136 node3
    EOF
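Because `cat >>` appends unconditionally, running the step twice leaves duplicate entries. One way around that, shown here as a sketch with a hypothetical helper named `ensure_line`, is to append a line only when the file does not already contain it.

```shell
#!/bin/bash
# ensure_line FILE LINE
# Append LINE to FILE unless an identical line is already present.
ensure_line() {
    local file="$1" line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# On a real host (as root), with the addresses used in this guide:
#   ensure_line /etc/hosts "192.168.18.139 master"
#   ensure_line /etc/hosts "192.168.18.138 node1"
#   ensure_line /etc/hosts "192.168.18.137 node2"
#   ensure_line /etc/hosts "192.168.18.136 node3"
```

The same helper would work for the kernel parameters appended to `/etc/sysctl.conf`.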

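Make the kernel settings permanent by appending them to the parameter file the kernel reads at boot:

    # cat <<EOF >> /etc/sysctl.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_nonlocal_bind = 1
    net.ipv4.ip_forward = 1
    vm.swappiness=0
    EOF

# sysctl -p

This makes the kernel re-read the file and apply the settings immediately.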
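The two `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded. If `sysctl -p` reports that they cannot be found, loading the module first usually resolves it. The check below is a sketch; `bridge_sysctls_available` is a hypothetical helper name, and the file name under `/etc/modules-load.d/` in the comments is an arbitrary choice.

```shell
#!/bin/bash
# Succeeds when the bridge netfilter sysctls exist. The optional directory
# argument is only there so the check can be tried against another path.
bridge_sysctls_available() {
    [ -e "${1:-/proc/sys/net/bridge}/bridge-nf-call-iptables" ]
}

# On a real host (as root), load the module now and on every boot, then apply:
#   modprobe br_netfilter
#   echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
#   sysctl -p
if bridge_sysctls_available; then
    echo "br_netfilter is loaded"
else
    echo "br_netfilter is not loaded; run: modprobe br_netfilter"
fi
```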

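1.6. Install kubeadm, kubelet and kubectl (every machine)

kubeadm --> the cluster bootstrap tool. It is run on the master to build the k8s cluster (and on each node to join it); behind the scenes it runs a large number of steps that bring k8s up for us.

kubelet --> an agent that runs on every node in the cluster. It makes sure that containers are running in a Pod: it receives instructions from the master and tells the container runtime (Docker here) which containers to start. It is also the component through which the master and the nodes communicate.

kubectl --> the command-line tool used on the master to control the cluster. It sends requests to the API server, which in turn tells the nodes what to do.

Add the Kubernetes YUM repository: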

    # cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

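Install kubeadm, kubelet and kubectl. Without a version, yum installs the newest packages in the repository:

# yum install -y kubelet kubeadm kubectl

It is better to pin the version instead, because from 1.24 on Docker is no longer the default container runtime. This guide uses 1.23.6:

# yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

This article explains how to keep using Docker with 1.24: https://www.docker.com/blog/dockershim-not-needed-docker-desktop-with-kubernetes-1-24/

Enable kubelet at boot. It is the k8s agent on each node and has to be running after every start: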

# systemctl enable kubelet

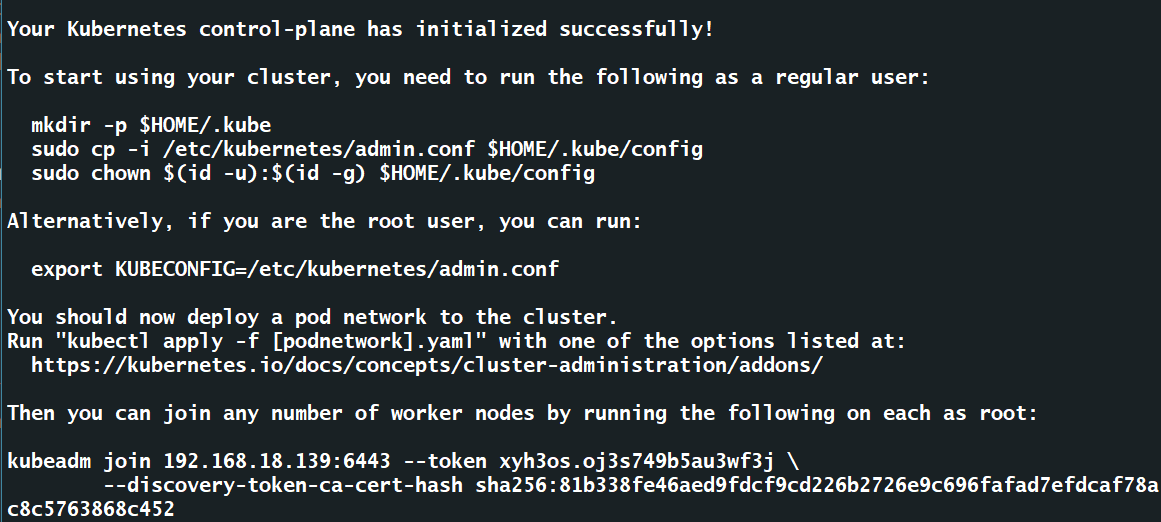

1.7. Deploy the Kubernetes master (run on the master host)

Pull the coredns:1.8.4 image in advance on every machine; it is needed later.

# docker pull coredns/coredns:1.8.4

# docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

Run the initialisation on the master server; 192.168.18.139 is the master's IP address.

    # kubeadm init \
        --apiserver-advertise-address=192.168.18.139 \
        --image-repository registry.aliyuncs.com/google_containers \
        --service-cidr=10.1.0.0/16 \
        --pod-network-cidr=10.244.0.0/16

On success the output ends with the following instructions; run them as prompted:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

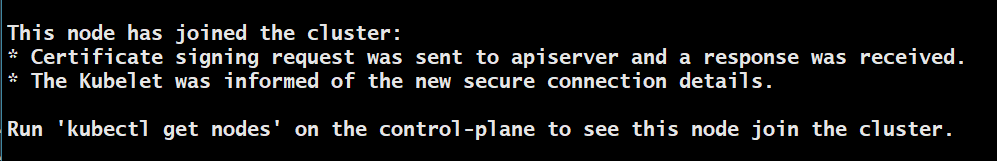

1.8. Join the worker nodes to the k8s cluster (run on every node)

    kubeadm join 192.168.18.139:6443 --token xyh3os.oj3s749b5au3wf3j \
        --discovery-token-ca-cert-hash sha256:81b338fe46aed9fdcf9cd226b2726e9c696fafad7efdcaf78ac8c5763868c452

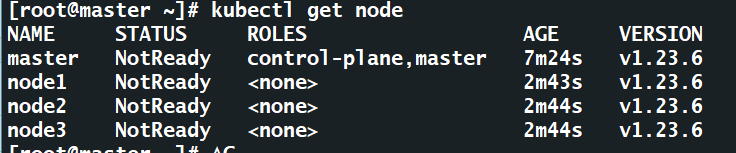

On the master, list all the nodes in the cluster:

kubectl get nodes

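A NotReady status means that communication between the master and the nodes is not working yet: the pod network has not been set up, so containers cannot talk to each other. This is expected until a network plugin is installed (section 1.9).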
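When there are several nodes it can be handy to list only the ones that are not ready yet. The filter below is a sketch that reads the plain-text output of `kubectl get nodes` on standard input; the function name `not_ready_nodes` is hypothetical.

```shell
#!/bin/bash
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column (second field) does not start with "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# On the master:
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready.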

To remove a node from the cluster (node1 in this example), drain it first and then delete it:

kubectl drain node1 --delete-emptydir-data --force --ignore-daemonsets

kubectl delete node node1

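1.9. Install the flannel network plugin (run on the master) so that pods on the master and pods on the worker nodes can communicate

Network plugins for k8s include 1. flannel and 2. calico. Their job is to let pods on different hosts talk to each other.

The kube-flannel.yaml file has to be created by hand with the following content:

vi kube-flannel.yaml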

    ---
    kind: Namespace
    apiVersion: v1
    metadata:
      name: kube-flannel
      labels:
        pod-security.kubernetes.io/enforce: privileged
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-flannel
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-flannel
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-flannel
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-flannel
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni-plugin
            #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            command:
            - cp
            args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            volumeMounts:
            - name: cni-plugin
              mountPath: /opt/cni/bin
          - name: install-cni
            #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: EVENT_QUEUE_DEPTH
              value: "5000"
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
            - name: xtables-lock
              mountPath: /run/xtables.lock
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni-plugin
            hostPath:
              path: /opt/cni/bin
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg
          - name: xtables-lock
            hostPath:
              path: /run/xtables.lock
              type: FileOrCreate

Deploy flannel:

# kubectl apply -f kube-flannel.yaml

Check that flannel is running:

    [root@master ~]# ps aux|grep flannel
    root   3818  0.2  1.6 1261176 30984 ?      Ssl  14:45  0:00 /opt/bin/flanneld --ip-masq --kube-subnet-mgr
    root   4392  0.0  0.0  112828   976 pts/0  S+   14:46  0:00 grep --color=auto flannel

1.10. Check the detailed cluster status

[root@master ~]# kubectl get nodes -n kube-system -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane,master 17h v1.23.6 192.168.18.139 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.3

node1 Ready <none> 17h v1.23.6 192.168.18.138 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.3

node2 Ready <none> 17h v1.23.6 192.168.18.137 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.3

node3 Ready <none> 17h v1.23.6 192.168.18.136 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.3

List the namespaces in Kubernetes:

    [root@master ~]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   17h
    kube-flannel      Active   7m35s
    kube-node-lease   Active   17h
    kube-public       Active   17h
    kube-system       Active   17h

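2. Serve the web application from pods on the three worker nodes, scaled automatically by the Horizontal Pod Autoscaler (HPA)

2.1. Build and install nginx from source with a script and make a custom nginx image

Create a new directory:

    [root@master /]# mkdir /mynginx
    [root@master /]# cd /mynginx
    [root@master mynginx]#

Download the nginx source archive:

[root@master mynginx]# curl -O http://nginx.org/download/nginx-1.21.1.tar.gz

Create the nginx build-and-install script:

[root@master mynginx]# vim install_nginx.sh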

#!/bin/bash

# install the packages nginx needs in order to build

yum -y install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make

# work in /nginx, where the Dockerfile copies the source archive

mkdir -p /nginx

cd /nginx

# unpack the nginx source archive

tar xf nginx-1.21.1.tar.gz

cd nginx-1.21.1

# configure the build; this generates the Makefile

./configure --prefix=/usr/local/nginx1 --with-threads --with-http_ssl_module --with-http_realip_module --with-http_v2_module --with-file-aio --with-http_stub_status_module --with-stream

# compile

make

# install the compiled binaries into the target directory /usr/local/nginx1

make install

Write the Dockerfile:

[root@master mynginx]# vim Dockerfile

    FROM centos:7
    ENV NGINX_VERSION 1.21.1
    RUN mkdir /nginx
    WORKDIR /nginx
    COPY . /nginx
    RUN bash install_nginx.sh
    EXPOSE 80
    ENV PATH=/usr/local/nginx1/sbin:$PATH
    CMD ["nginx","-g","daemon off;"]

Build our nginx image:

[root@master mynginx]# docker build -t my-nginx .

[root@master mynginx]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

my-nginx latest 610fe9652e9a About a minute ago 598MB

2.2.1k8s使用本地镜像部署pod,每个节点都需要有这个镜像(下面是我使用过的两种解决方案)

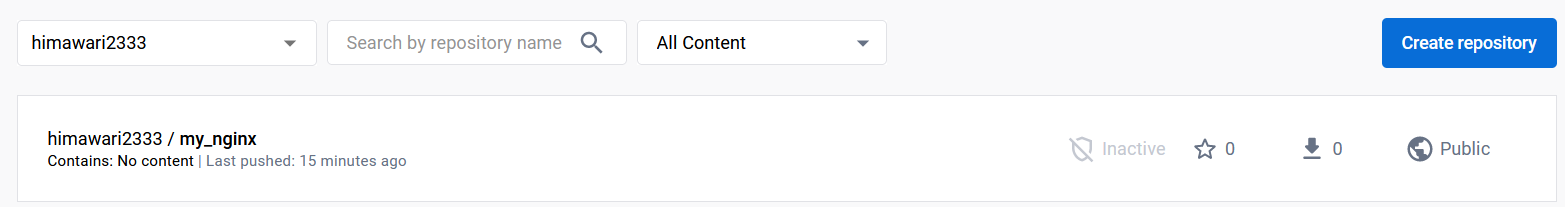

1)使用docker hub存放制作的镜像(解决方案之一)

在docker hub上新建账号并登录,创建好一个仓库

使用这个仓库的名字作为tagname并push到仓库

[root@master ~]# docker tag my-nginx himawari2333/my_nginx

[root@master ~]# docker push himawari2333/my_nginx

0fd1fa587277: Pushed

24afe9385758: Pushed

5f70bf18a086: Pushed

1a442c8e9868: Pushed

174f56854903: Pushed

latest: digest: sha256:a7809a22e6759cd5c35db6a3430f24e7a89e8faa53fa8dcd84b8285764c2df57 size: 1366

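In nginx.yaml, change image to the image in the registry:

    image: docker.io/himawari2333/my_nginx:latest
    imagePullPolicy: IfNotPresent   # pull from the registry only when the node has no local copy; Never would only ever use a local image

2) Use docker save and docker load to copy the image to every node (solution two)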

On the master, run docker save and scp:

    [root@master mynginx]# docker images
    REPOSITORY              TAG      IMAGE ID       CREATED          SIZE
    himawari2333/my-nginx   2.0      6257ede81cc6   26 minutes ago   599MB
    my-nginx                latest   6257ede81cc6   26 minutes ago   599MB
    [root@master mynginx]# docker save 6257ede81cc6 > /my-nginx.tar
    [root@master mynginx]# scp /my-nginx.tar root@node3:/
    root@node3's password:
    my-nginx.tar                                  100%  584MB 113.0MB/s   00:05

On every node, run docker load:

    [root@node1 ~]# docker load -i /my-nginx.tar
    174f56854903: Loading layer [==================================================>]  211.7MB/211.7MB
    1a442c8e9868: Loading layer [==================================================>]   2.56kB/2.56kB
    5f70bf18a086: Loading layer [==================================================>]  1.024kB/1.024kB
    c9f0f1461a51: Loading layer [==================================================>]  1.071MB/1.071MB
    238e1c458a79: Loading layer [==================================================>]  399.5MB/399.5MB
    Loaded image ID: sha256:6257ede81cc6a296032aac3146378e9129429ddadc5f965593f2034812f70bac
    [root@node1 ~]# docker images
    REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
    <none>       <none>   6257ede81cc6   32 minutes ago   599MB
    [root@node1 ~]# docker tag 6257ede81cc6 my-nginx    # the loaded image is named <none>, so tag it here
    [root@node1 ~]# docker images
    REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
    my-nginx     latest   6257ede81cc6   32 minutes ago   599MB
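The loaded image shows up as `<none>` because it was saved by image ID. Saving by name and tag keeps the name, so the extra `docker tag` step should not be needed. The sketch below only prints the commands for each node (a dry run); `distribute_cmds` is a hypothetical helper, and it assumes root SSH access to the nodes as in the `scp` call above.

```shell
#!/bin/bash
# distribute_cmds IMAGE NODE...
# Print the commands that would save IMAGE by name and load it on each node.
distribute_cmds() {
    local image="$1" node
    shift
    local tarball="/${image%%:*}.tar"      # my-nginx:latest -> /my-nginx.tar
    echo "docker save -o ${tarball} ${image}"
    for node in "$@"; do
        echo "scp ${tarball} root@${node}:/"
        echo "ssh root@${node} docker load -i ${tarball}"
    done
}

# Print the plan; pipe it to "bash" to actually run it.
distribute_cmds my-nginx:latest node1 node2 node3
```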

2.2.2. Deploy NFS (nfs-server, k8s-master, k8s-node1, k8s-node2, k8s-node3)

Install the NFS service (on nfs-server):

yum install -y nfs-utils

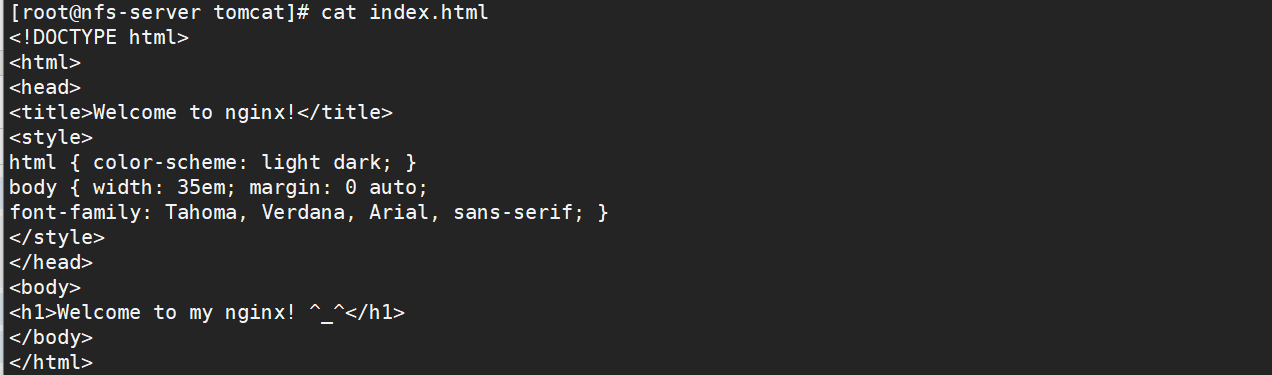

Create the shared directory:

mkdir -p /data/tomcat

Edit the /etc/exports configuration file so that it contains the following:

    cat /etc/exports
    /data 192.168.0.0/24(rw,no_root_squash,no_all_squash,sync)
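The network in `/etc/exports` decides which clients may mount the share, so the node addresses need to fall inside it. The following sketch checks that with plain bash arithmetic; `ip_to_int` and `in_cidr` are hypothetical helper names, and the addresses in the example are the ones used elsewhere in this guide.

```shell
#!/bin/bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NETWORK/BITS
# Succeeds when IP lies inside the given network.
in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}"
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Check a node address against two candidate export networks.
for net in 192.168.0.0/24 192.168.18.0/24; do
    if in_cidr 192.168.18.138 "$net"; then
        echo "192.168.18.138 is inside $net"
    else
        echo "192.168.18.138 is outside $net"
    fi
done
```

If a node turns out to be outside the exported network, mounts from that node are refused until the entry is widened and re-exported with `exportfs -r`.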

Start the nfs-server service:

systemctl start rpcbind

systemctl start nfs

Install the NFS client on the nodes:

yum install -y nfs-utils

2.2.3. Create the PV (persistent volume)

    [root@master mynginx]# cat pv_nfs.yaml
    apiVersion: v1
    kind: PersistentVolume    # resource type
    metadata:
      name: nginx-tomcat-pv
      labels:
        type: nginx-tomcat-pv
    spec:
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteMany    # access mode: read-write by many clients
      persistentVolumeReclaimPolicy: Recycle    # reclaim policy: the volume can be recycled
      storageClassName: nfs
      nfs:
        path: "/data/tomcat"
        server: 192.168.18.140    # nfs-server
        readOnly: false    # not read-only

[root@master mynginx]# kubectl apply -f pv_nfs.yaml

2.2.4. Create the PVC (persistent volume claim, the consumer of the PV)

    [root@master mynginx]# cat pvc_nfs.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-tomcat-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
      storageClassName: nfs

[root@master mynginx]# kubectl apply -f pvc_nfs.yaml

2.2.5. The PV and PVC bind automatically; check their status

    [root@master mynginx]# kubectl get pv
    NAME              CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS   REASON   AGE
    nginx-tomcat-pv   5Gi        RWX            Recycle          Bound    default/nginx-tomcat-pvc   nfs                     9s
    [root@master mynginx]# kubectl get pvc
    NAME               STATUS   VOLUME            CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nginx-tomcat-pvc   Bound    nginx-tomcat-pv   5Gi        RWX            nfs            46h

2.2.6. Create pods that use the persistent volume

    [root@master mynginx]# cat pv-pod.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pv-pod-nfs
      labels:
        app: pv-pod-nfs
    spec:
      replicas: 40
      selector:
        matchLabels:
          app: pv-pod-nfs
      template:
        metadata:
          labels:
            app: pv-pod-nfs
        spec:
          volumes:
          - name: pv-nfs-pvc
            persistentVolumeClaim:
              claimName: nginx-tomcat-pvc
          containers:
          - name: pv-container-nfs
            image: my-nginx2
            imagePullPolicy: Never
            ports:
            - containerPort: 80
              name: "http-server"
            volumeMounts:
            - mountPath: "/usr/local/nginx1/html"    # where the container keeps its html pages
              name: pv-nfs-pvc

2.2.7. Publish the service

    [root@master mynginx]# cat service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: pv-pod-nfs
      labels:
        app: pv-pod-nfs
    spec:
      type: NodePort
      ports:
      - port: 8090
        targetPort: 80
        protocol: TCP
        name: http
      selector:
        app: pv-pod-nfs

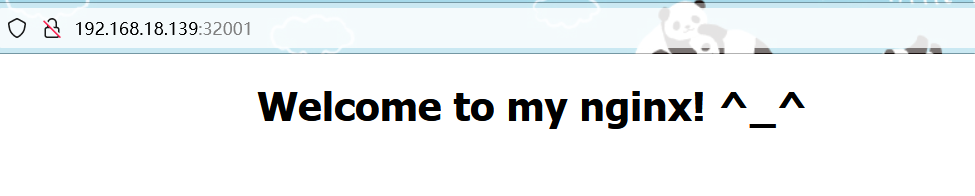

2.2.8. Check that the persistent data is shared

    [root@master mynginx]# kubectl get pod
    NAME                          READY   STATUS    RESTARTS   AGE
    pv-pod-nfs-6b4f97ff64-2tc2v   1/1     Running   0          10h
    pv-pod-nfs-6b4f97ff64-679wn   1/1     Running   0          10h
    pv-pod-nfs-6b4f97ff64-6m2zk   1/1     Running   0          10h

    [root@master mynginx]# kubectl get svc
    NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
    kubernetes   ClusterIP   10.1.0.1      <none>        443/TCP          2d4h
    pv-pod-nfs   NodePort    10.1.79.218   <none>        8090:32001/TCP   32h

3.1. Deploy metrics-server

    [root@master hpa]# cat metrics2.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            - --kubelet-insecure-tls
            image: registry.cn-shenzhen.aliyuncs.com/zengfengjin/metrics-server:v0.5.0
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              initialDelaySeconds: 20
              periodSeconds: 10
            resources:
              requests:
                cpu: 100m
                memory: 200Mi
            securityContext:
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100

    [root@master hpa]# kubectl get pod -A |grep metrics
    kube-system   metrics-server-566f4dd5dd-2kjkg   1/1   Running   0   3h52m

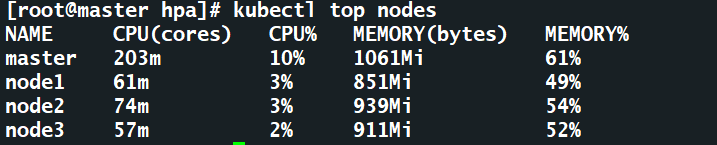

3.2. Check node resource usage

    [root@master mynginx]# kubectl top nodes
    NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    master   189m         9%     1093Mi          63%
    node1    100m         5%     838Mi           48%
    node2    67m          3%     705Mi           41%
    node3    45m          2%     641Mi           37%

3.3. Create an HPA based on CPU

[root@master mynginx]# kubectl autoscale deployment pv-pod-nfs --cpu-percent=20 --min=1 --max=10
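To get a feel for what `--cpu-percent=20 --min=1 --max=10` does, the replica count the autoscaler aims for follows the rule documented for the HPA: desired = ceil(current replicas × current utilization / target utilization), limited to the min/max range. The sketch below reproduces only that arithmetic. It ignores the controller's tolerance band and its handling of pods that are not ready, and `desired_replicas` is a hypothetical name. Utilization is measured against the container's CPU request, so the Deployment needs `resources.requests` set (as added in section 3.4) for a percentage target to be computable.

```shell
#!/bin/bash
# desired_replicas CURRENT METRIC_PERCENT TARGET_PERCENT MIN MAX
# Integer version of ceil(current * metric / target), clamped to [min, max].
desired_replicas() {
    local current="$1" metric="$2" target="$3" min="$4" max="$5"
    local desired=$(( (current * metric + target - 1) / target ))
    if (( desired < min )); then desired=$min; fi
    if (( desired > max )); then desired=$max; fi
    echo "$desired"
}

# 2 pods averaging 45% CPU against a 20% target: ceil(2 * 45 / 20) = 5
desired_replicas 2 45 20 1 10
```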

3.4. Create an HPA based on memory

Rewrite the yaml file:

    [root@master mynginx]# cat pv-pod.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pv-pod-nfs
      labels:
        app: pv-pod-nfs
    spec:
      replicas: 2    # create 2 replicas
      selector:
        matchLabels:
          app: pv-pod-nfs
      template:
        metadata:
          labels:
            app: pv-pod-nfs
        spec:
          volumes:
          - name: pv-nfs-pvc
            persistentVolumeClaim:
              claimName: nginx-tomcat-pvc
          containers:
          - name: pv-container-nfs
            image: my-nginx2
            imagePullPolicy: Never
            ports:
            - containerPort: 80
              name: "http-server"
            volumeMounts:
            - mountPath: "/usr/local/nginx1/html"
              name: pv-nfs-pvc
            resources:    # add the following
              limits:
                cpu: 500m
                memory: 60Mi
              requests:
                cpu: 200m
                memory: 25Mi

3.5. Create the HPA

    [root@master hpa]# cat hpa-nginx.yaml
    apiVersion: autoscaling/v2beta1    # a memory-based HPA needs a v2 API version rather than autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: nginx-hpa
    spec:
      maxReplicas: 10    # keep between 1 and 10 pods
      minReplicas: 1
      scaleTargetRef:    # the object to scale; version, kind and name must match the Deployment created above
        apiVersion: apps/v1
        kind: Deployment
        name: pv-pod-nfs
      metrics:
      - type: Resource
        resource:
          name: memory
          targetAverageUtilization: 50    # target 50% average memory utilization
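`autoscaling/v2beta1` is deprecated, and `autoscaling/v2` is available from Kubernetes 1.23, where the target is written as a nested `target` block instead of `targetAverageUtilization`. The sketch below prints an equivalent manifest in the v2 form; `render_hpa_v2` is a hypothetical helper and the values are the ones from the manifest above. It only writes to standard output.

```shell
#!/bin/bash
# render_hpa_v2 NAME DEPLOYMENT MIN MAX MEMORY_PERCENT
# Print an autoscaling/v2 HorizontalPodAutoscaler targeting average memory utilization.
render_hpa_v2() {
    local name="$1" deployment="$2" min="$3" max="$4" percent="$5"
    cat <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ${name}
spec:
  minReplicas: ${min}
  maxReplicas: ${max}
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deployment}
  metrics:
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: ${percent}
EOF
}

# Print the manifest; on the master it could be piped to "kubectl apply -f -".
render_hpa_v2 nginx-hpa pv-pod-nfs 1 10 50
```

As with CPU, the percentage is relative to the memory request (25Mi above), so a 50% target corresponds to an average of about 12.5Mi per pod.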

3.1. Preparation before deploying the monitoring stack

    # pull the images in advance on every node
    [root@master ~]# docker pull prom/node-exporter
    [root@master ~]# docker pull prom/prometheus:v2.0.0
    [root@master ~]# docker pull grafana/grafana:6.1.4

3.2. Deploy node-exporter as a DaemonSet

    [root@master prometheus-k8s-2]# cat node-exporter.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          containers:
          - image: prom/node-exporter
            name: node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: kube-system
    spec:
      ports:
      - name: http
        port: 9100
        nodePort: 31672
        protocol: TCP
      type: NodePort
      selector:
        k8s-app: node-exporter

[root@master prometheus-k8s-2]# kubectl apply -f node-exporter.yaml

    [root@master prometheus-k8s-2]# kubectl get pod -A
    NAMESPACE     NAME                  READY   STATUS    RESTARTS   AGE
    kube-system   node-exporter-8l7dr   1/1     Running   0          6h54m
    kube-system   node-exporter-q4767   1/1     Running   0          6h54m
    kube-system   node-exporter-tww7x   1/1     Running   0          6h54m

    [root@master prometheus-k8s-2]# kubectl get svc -A
    NAMESPACE     NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    default       kubernetes      ClusterIP   10.1.0.1       <none>        443/TCP          2d5h
    default       pv-pod-nfs      NodePort    10.1.79.218    <none>        8090:32001/TCP   32h
    kube-system   node-exporter   NodePort    10.1.185.148   <none>        9100:31672/TCP   6h56m

3.3. Deploy Prometheus

    [root@master prometheus-k8s-2]# cat configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
        scrape_configs:

        - job_name: 'kubernetes-apiservers'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https

        - job_name: 'kubernetes-nodes'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics

        - job_name: 'kubernetes-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name

        - job_name: 'kubernetes-services'
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module: [http_2xx]
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name

        - job_name: 'kubernetes-ingresses'
          kubernetes_sd_configs:
          - role: ingress
          relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name

        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name

    [root@master prometheus-k8s-2]# cat rbac-setup.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs: ["get", "list", "watch"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

    [root@master prometheus-k8s-2]# cat promtheus.deploy.yaml
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: prometheus-deployment
      name: prometheus
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          containers:
          - image: prom/prometheus:v2.0.0
            name: prometheus
            command:
            - "/bin/prometheus"
            args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 2500Mi
          serviceAccountName: prometheus
          volumes:
          - name: data
            emptyDir: {}
          - name: config-volume
            configMap:
              name: prometheus-config

    [root@master prometheus-k8s-2]# cat prometheus.svc.yaml
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30003
      selector:
        app: prometheus

3.4部署grafana

[root@master prometheus-k8s-2]# cat grafana-ing.yaml apiVersion: networking.k8s.io/v1 kind: Ingress metadata: name: grafana namespace: kube-system spec: rules: - host: k8s.grafana http: paths: - path: / pathType: Prefix backend: service: name: grafana port: number: 3000

[root@master prometheus-k8s-2]# cat grafana-deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana-core
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          labels:
            app: grafana
            component: core
        spec:
          containers:
          - image: grafana/grafana:6.1.4
            name: grafana-core
            imagePullPolicy: IfNotPresent
            # env:
            resources:
              # keep request = limit to keep this container in guaranteed class
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 100m
                memory: 100Mi
            env:
              # The following env variables set up basic auth with the default admin user and admin password.
              - name: GF_AUTH_BASIC_ENABLED
                value: "true"
              - name: GF_AUTH_ANONYMOUS_ENABLED
                value: "false"
              # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              #   value: Admin
              # does not really work, because of template variables in exported dashboards:
              # - name: GF_DASHBOARDS_JSON_ENABLED
              #   value: "true"
            readinessProbe:
              httpGet:
                path: /login
                port: 3000
              # initialDelaySeconds: 30
              # timeoutSeconds: 1
            #volumeMounts:   # not mounted for now
            #- name: grafana-persistent-storage
            #  mountPath: /var
          #volumes:
          #- name: grafana-persistent-storage
          #  emptyDir: {}

[root@master prometheus-k8s-2]# cat grafana-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      type: NodePort
      ports:
      - port: 3000
      selector:
        app: grafana
        component: core

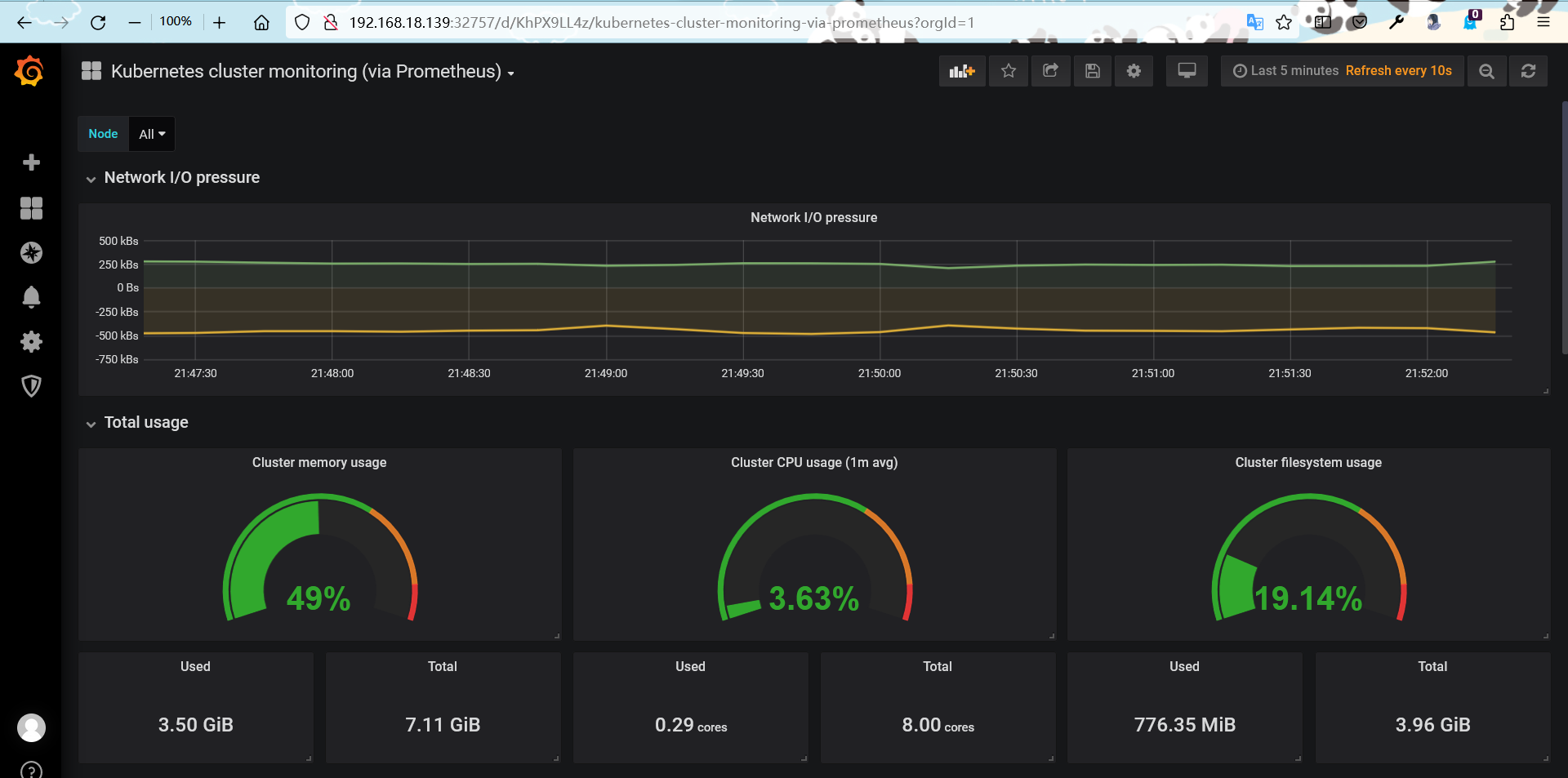

4. Testing

4.1 Persistent data test: in a browser, open the IP address of any host in the k8s cluster plus the service's port number

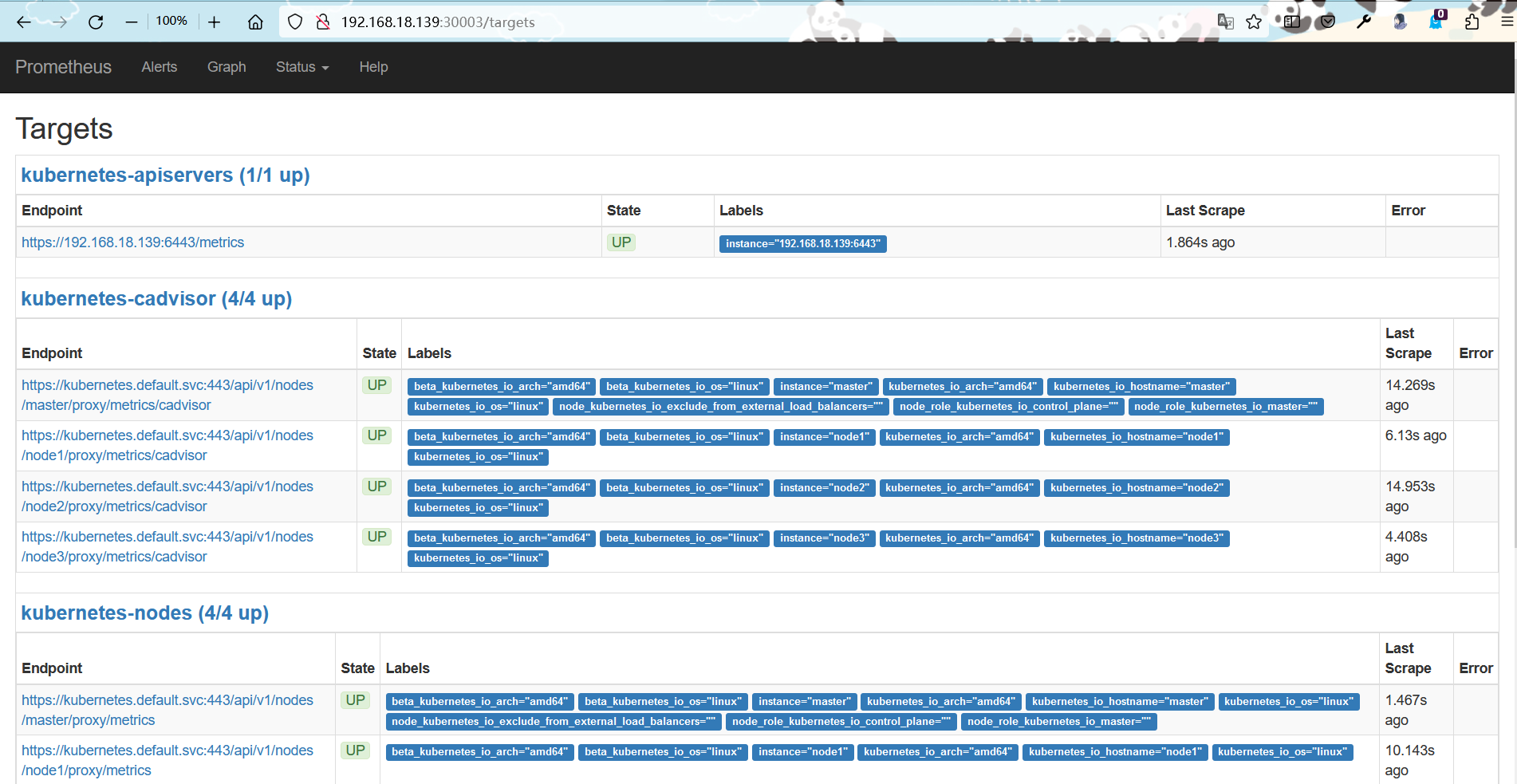

4.2 k8s cluster monitoring test

[root@master prometheus-k8s-2]# kubectl get svc -A

    NAMESPACE     NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
    default       kubernetes      ClusterIP   10.1.0.1       <none>        443/TCP                  2d5h
    default       pv-pod-nfs      NodePort    10.1.79.218    <none>        8090:32001/TCP           32h
    kube-system   grafana         NodePort    10.1.165.248   <none>        3000:32757/TCP           7h9m
    kube-system   kube-dns        ClusterIP   10.1.0.10      <none>        53/UDP,53/TCP,9153/TCP   2d5h
    kube-system   node-exporter   NodePort    10.1.185.148   <none>        9100:31672/TCP           7h15m
    kube-system   prometheus      NodePort    10.1.116.102   <none>        9090:30003/TCP           7h11m
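For NodePort services, each PORT(S) entry in this listing has the form `port:nodePort/protocol`, and the part after the colon is what a browser outside the cluster must use. As a small illustration, the helper below (`node_port_of` is a hypothetical name, not part of kubectl) pulls the NodePort out of such an entry with plain parameter expansion. It is only meant for NodePort entries and returns the first port when several are listed.

```shell
#!/bin/sh
# Extract the NodePort from a PORT(S) entry such as "3000:32757/TCP".
node_port_of() {
    entry=$1
    rest=${entry#*:}      # drop "port:"     -> "32757/TCP"
    echo "${rest%%/*}"    # drop "/protocol" -> "32757"
}

node_port_of "9090:30003/TCP"   # prometheus
node_port_of "3000:32757/TCP"   # grafana

# Against a live cluster the same value can be read directly:
#   kubectl -n kube-system get svc grafana -o jsonpath='{.spec.ports[0].nodePort}'
```

Because grafana-svc.yaml does not set `nodePort`, Kubernetes picked 32757 itself, so the value will differ on another cluster; 30003 is fixed by prometheus.svc.yaml.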

Open 192.168.18.139:30003 on the host; 30003 is the NodePort of the prometheus service.

Grafana is reachable on NodePort 32757.

The username and password are both admin.

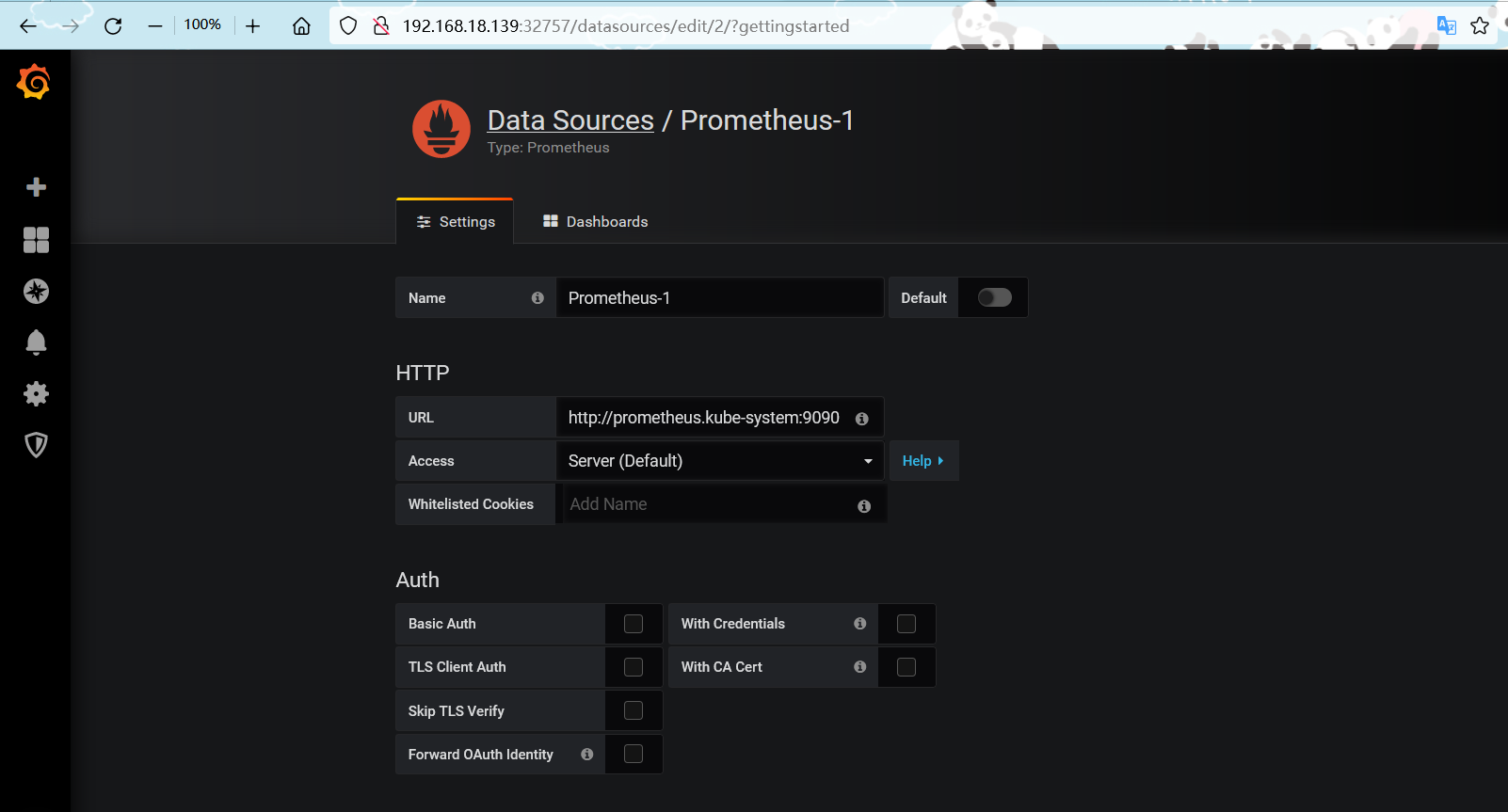

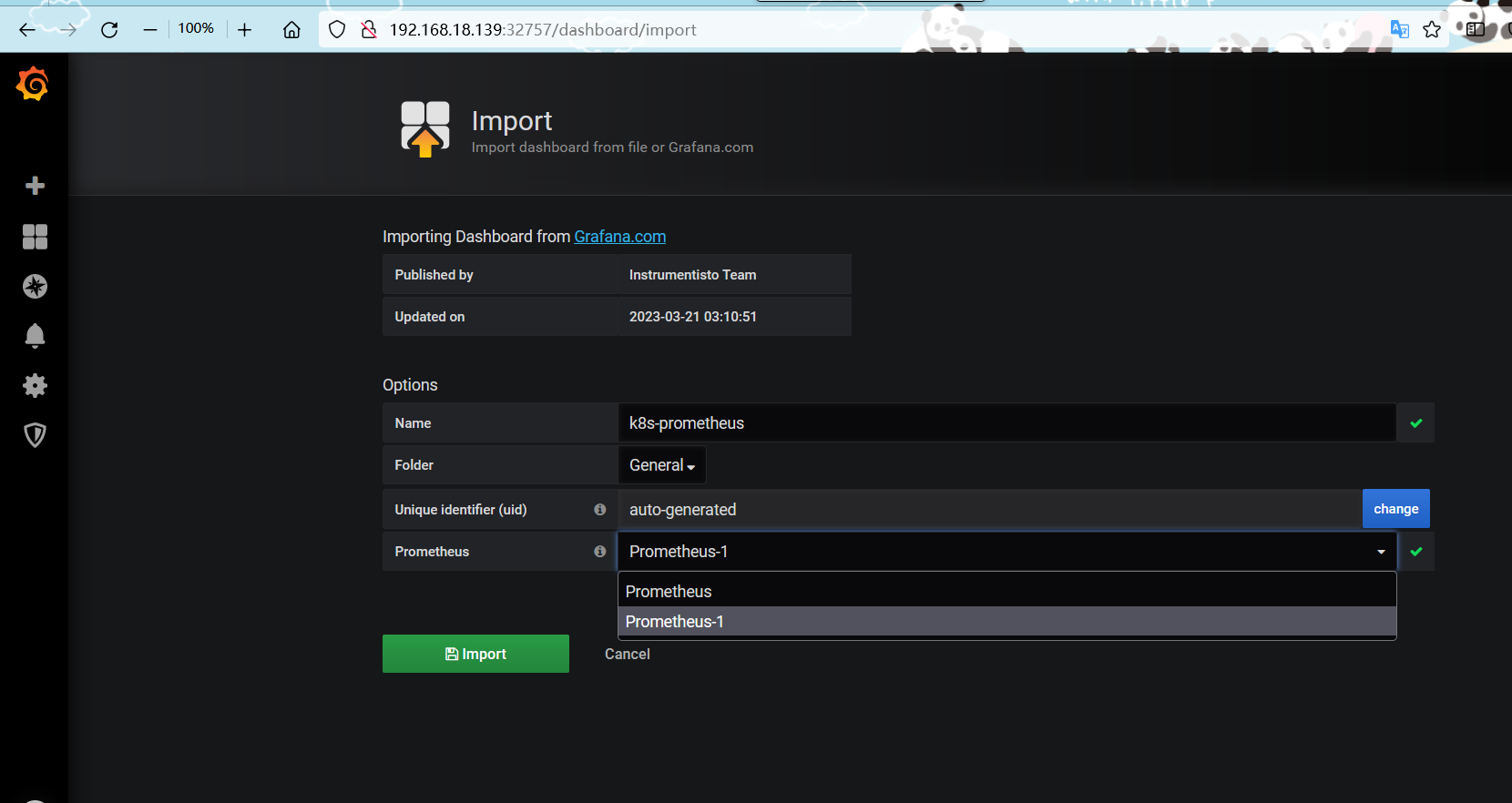

Add a data source in Grafana and import a dashboard template

The URL must be written in the form service.namespace:port, for example: http://prometheus.kube-system:9090
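The `service.namespace:port` form can be assembled mechanically from the Service definition. A minimal sketch, where `svc_url` is a hypothetical helper name introduced only for illustration:

```shell
#!/bin/sh
# Build the in-cluster URL for a Service: http://<service>.<namespace>:<port>
svc_url() {
    printf 'http://%s.%s:%s\n' "$1" "$2" "$3"
}

svc_url prometheus kube-system 9090   # prints http://prometheus.kube-system:9090
```

This name is resolved by the cluster DNS (the kube-dns service in the listing above), so it works from the Grafana pod but not from a browser outside the cluster, which has to use a node IP and the NodePort instead.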

Import the k8s dashboard template

Use Import to load dashboard template 315

Result

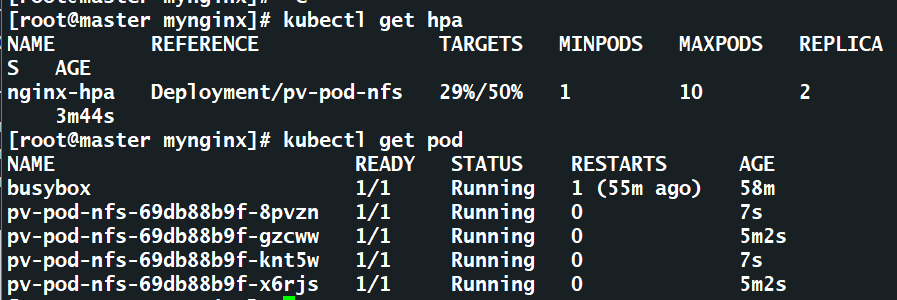

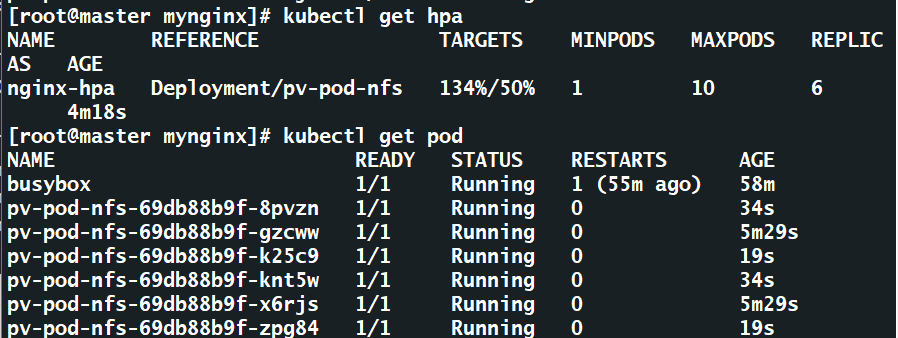

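4.3 HPA horizontal pod autoscaling test

Increase the CPU load. The address requested must be the Service's address (here the ClusterIP and port of pv-pod-nfs, 10.1.79.218:8090)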

[root@master hpa]# kubectl run busybox -it --image=busybox -- /bin/sh -c 'while true; do wget -q -O- http://10.1.79.218:8090; done'

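After a few minutes, check the HPA utilization and the number of pods

Run a command inside the pod to increase the memory load

[root@master hpa]# kubectl exec -it pv-pod-nfs-69db88b9f-x6rjs -- /bin/sh -c 'dd if=/dev/zero of=/tmp/file1'

After a few minutes, check the memory utilization and the number of pods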
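To judge whether the pod count seen after a few minutes is plausible, it helps to recall the rule the HPA controller documents: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below evaluates that formula with integer arithmetic. The numbers are hypothetical (the HPA targets are not shown in this section), `hpa_desired` is a name invented for this example, and the sketch leaves out the controller's tolerance band and the min/max replica clamping.

```shell
#!/bin/sh
# desired = ceil(current_replicas * current_metric / target_metric)
# Integer ceiling via (a + b - 1) / b; metrics are utilization percentages.
hpa_desired() {
    current_replicas=$1
    current_metric=$2
    target_metric=$3
    echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

hpa_desired 2 90 50   # hypothetical: 2 pods at 90% CPU against a 50% target -> 4
hpa_desired 4 20 50   # hypothetical: load gone, 4 pods at 20% -> 2
```

On the cluster itself, `kubectl get hpa` shows the current and target utilization and `kubectl get pods` shows the resulting replicas; the busybox load generator can be removed afterwards with `kubectl delete pod busybox`.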

5.1 Configure the ingress controller

Contents of deploy.yaml

# Official link

https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.1/deploy/static/provider/cloud/deploy.yaml

[root@master mynginx]# cat deploy.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      name: ingress-nginx
    ---
    apiVersion: v1
    automountServiceAccountToken: true
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx
      namespace: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx
      namespace: ingress-nginx
    rules:
    - apiGroups:
      - ""
      resources:
      - namespaces
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - configmaps
      - pods
      - secrets
      - endpoints
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingresses/status
      verbs:
      - update
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingressclasses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - coordination.k8s.io
      resourceNames:
      - ingress-nginx-leader
      resources:
      - leases
      verbs:
      - get
      - update
    - apiGroups:
      - coordination.k8s.io
      resources:
      - leases
      verbs:
      - create
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - discovery.k8s.io
      resources:
      - endpointslices
      verbs:
      - list
      - watch
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
      namespace: ingress-nginx
    rules:
    - apiGroups:
      - ""
      resources:
      - secrets
      verbs:
      - get
      - create
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - coordination.k8s.io
      resources:
      - leases
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingresses/status
      verbs:
      - update
    - apiGroups:
      - networking.k8s.io
      resources:
      - ingressclasses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - discovery.k8s.io
      resources:
      - endpointslices
      verbs:
      - list
      - watch
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
    rules:
    - apiGroups:
      - admissionregistration.k8s.io
      resources:
      - validatingwebhookconfigurations
      verbs:
      - get
      - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx
      namespace: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: ingress-nginx
    subjects:
    - kind: ServiceAccount
      name: ingress-nginx
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
      namespace: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: ingress-nginx-admission
    subjects:
    - kind: ServiceAccount
      name: ingress-nginx-admission
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: ingress-nginx
    subjects:
    - kind: ServiceAccount
      name: ingress-nginx
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: ingress-nginx-admission
    subjects:
    - kind: ServiceAccount
      name: ingress-nginx-admission
      namespace: ingress-nginx
    ---
    apiVersion: v1
    data:
      allow-snippet-annotations: "true"
    kind: ConfigMap
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-controller
      namespace: ingress-nginx
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
      externalTrafficPolicy: Local
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      ports:
      - appProtocol: http
        name: http
        port: 80
        protocol: TCP
        targetPort: http
      - appProtocol: https
        name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      type: NodePort
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
    spec:
      ports:
      - appProtocol: https
        name: https-webhook
        port: 443
        targetPort: webhook
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      type: ClusterIP
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
      minReadySeconds: 0
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app.kubernetes.io/component: controller
          app.kubernetes.io/instance: ingress-nginx
          app.kubernetes.io/name: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/component: controller
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
            app.kubernetes.io/version: 1.8.1
        spec:
          hostNetwork: true # changed here to use the host's IP
          containers:
          - args:
            - /nginx-ingress-controller
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
            - --election-id=ingress-nginx-leader
            - --controller-class=k8s.io/ingress-nginx
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
            image: k8s.dockerproxy.com/ingress-nginx/controller:v1.8.1@sha256:e5c4824e7375fcf2a393e1c03c293b69759af37a9ca6abdb91b13d78a93da8bd
            imagePullPolicy: IfNotPresent
            lifecycle:
              preStop:
                exec:
                  command:
                  - /wait-shutdown
            livenessProbe:
              failureThreshold: 5
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
            name: controller
            ports:
            - containerPort: 80
              name: http
              protocol: TCP
            - containerPort: 443
              name: https
              protocol: TCP
            - containerPort: 8443
              name: webhook
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
            resources:
              requests:
                cpu: 100m
                memory: 90Mi
            securityContext:
              allowPrivilegeEscalation: true
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - ALL
              runAsUser: 101
            volumeMounts:
            - mountPath: /usr/local/certificates/
              name: webhook-cert
              readOnly: true
          dnsPolicy: ClusterFirst
          nodeSelector:
            kubernetes.io/os: linux
          serviceAccountName: ingress-nginx
          terminationGracePeriodSeconds: 300
          volumes:
          - name: webhook-cert
            secret:
              secretName: ingress-nginx-admission
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission-create
      namespace: ingress-nginx
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/component: admission-webhook
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
            app.kubernetes.io/version: 1.8.1
          name: ingress-nginx-admission-create
        spec:
          containers:
          - args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
            env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            image: k8s.dockerproxy.com/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b
            imagePullPolicy: IfNotPresent
            name: create
            securityContext:
              allowPrivilegeEscalation: false
          nodeSelector:
            kubernetes.io/os: linux
          restartPolicy: OnFailure
          securityContext:
            fsGroup: 2000
            runAsNonRoot: true
            runAsUser: 2000
          serviceAccountName: ingress-nginx-admission
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission-patch
      namespace: ingress-nginx
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/component: admission-webhook
            app.kubernetes.io/instance: ingress-nginx
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
            app.kubernetes.io/version: 1.8.1
          name: ingress-nginx-admission-patch
        spec:
          containers:
          - args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
            env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            image: k8s.dockerproxy.com/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b
            imagePullPolicy: IfNotPresent
            name: patch
            securityContext:
              allowPrivilegeEscalation: false
          nodeSelector:
            kubernetes.io/os: linux
          restartPolicy: OnFailure
          securityContext:
            fsGroup: 2000
            runAsNonRoot: true
            runAsUser: 2000
          serviceAccountName: ingress-nginx-admission
    ---
    apiVersion: networking.k8s.io/v1
    kind: IngressClass
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: nginx
    spec:
      controller: k8s.io/ingress-nginx # associated with the Ingress resources
    ---
    apiVersion: admissionregistration.k8s.io/v1
    kind: ValidatingWebhookConfiguration
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.1
      name: ingress-nginx-admission
    webhooks:
    - admissionReviewVersions:
      - v1
      clientConfig:
        service:
          name: ingress-nginx-controller-admission
          namespace: ingress-nginx
          path: /networking/v1/ingresses
      failurePolicy: Fail
      matchPolicy: Equivalent
      name: validate.nginx.ingress.kubernetes.io
      rules:
      - apiGroups:
        - networking.k8s.io
        apiVersions:
        - v1
        operations:
        - CREATE
        - UPDATE
        resources:
        - ingresses
      sideEffects: None
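The original does not show how deploy.yaml was applied. A plausible sequence, sketched under that assumption, is to apply the file and then wait for the controller pod to become ready; the `kubectl wait` selector matches the `app.kubernetes.io/component: controller` label from the Deployment above. The `run` helper, `DRY_RUN` variable and `install_ingress_nginx` function are hypothetical names; commands are printed by default and executed only with `DRY_RUN=0`.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN=${DRY_RUN:-1}
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_ingress_nginx() {
    # Create the namespace, RBAC, Services, Deployment, Jobs and webhook.
    run kubectl apply -f deploy.yaml || return 1
    # Wait until the controller pod reports Ready (the two Jobs finish on their own).
    run kubectl wait --namespace ingress-nginx \
        --for=condition=ready pod \
        --selector=app.kubernetes.io/component=controller \
        --timeout=120s
}

install_ingress_nginx
```

Because the Deployment sets `hostNetwork: true`, the controller listens on ports 80 and 443 of whichever node it is scheduled on, in addition to the NodePorts exposed by the `ingress-nginx-controller` Service.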

5.2 Configure the Ingress resource

[root@master mynginx]# cat ingress-nginx.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: sc-ingress
      annotations:
        kubernets.io/ingress.class: ingress-nginx # meant to associate this Ingress with the ingress controller; the key is misspelled (kubernets.io), so it has no effect
    spec:
      ingressClassName: nginx # associates this Ingress with the ingress controller
      rules:
      - host: nginx.foo.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: pv-pod-nfs
                port:
                  number: 8090
      - host: nginx2.foo.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: sc-nginx-svc2
                port:
                  number: 8090

[root@master mynginx]# kubectl get svc,pod -n ingress-nginx

    NAME                                         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
    service/ingress-nginx-controller             NodePort    10.1.126.51   <none>        80:30165/TCP,443:32549/TCP   22h
    service/ingress-nginx-controller-admission   ClusterIP   10.1.30.58    <none>        443/TCP                      22h

    NAME                                            READY   STATUS      RESTARTS      AGE
    pod/ingress-nginx-admission-create-l5mb6        0/1     Completed   0             22h
    pod/ingress-nginx-admission-patch-2gwsb         0/1     Completed   1             22h
    pod/ingress-nginx-controller-78f78d95c7-bxjjk   1/1     Running     1 (18h ago)   21h

5.3 Add the IP address for the domain name to /etc/hosts

192.168.138.202 nginx.foo.com
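If this step is scripted, it is worth making it idempotent so that re-running it does not pile up duplicate lines. A minimal sketch, demonstrated on a scratch file rather than the real /etc/hosts; `add_host_entry` and `hosts_file` are hypothetical names introduced for the example.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file only if NAME is not already listed there.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Look for NAME as an exact hostname field (fields 2..NF) on any line.
    if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demonstrated on a scratch file; pass /etc/hosts (as root) for the real thing.
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 192.168.138.202 nginx.foo.com
add_host_entry "$hosts_file" 192.168.138.202 nginx.foo.com   # second call is a no-op
cat "$hosts_file"
```

The Ingress above also routes nginx2.foo.com, so testing that host would need a second entry pointing at the same node.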

5.4 Test access

[root@master mynginx]# curl nginx.foo.com

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    html { color-scheme: light dark; }
    body { width: 35em; margin: 0 auto;
    font-family: Tahoma, Verdana, Arial, sans-serif; }
    </style>
    </head>
    <body>
    <h1>Hello harumakigohan! ^_^</h1>
    </body>
    </html>
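The same check can be made without touching /etc/hosts by pinning the name to a node IP for a single request with curl's `--resolve` option. This is a sketch, not what the original ran: it assumes the controller pod is on the node 192.168.138.202 used in 5.3 and is reachable on port 80 there because of `hostNetwork: true`. The `run` helper, `DRY_RUN` variable and `curl_via_ingress` function are hypothetical names; the command is printed by default and executed with `DRY_RUN=0`.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN=${DRY_RUN:-1}
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Request HOST through the ingress controller listening on NODE_IP:80,
# pinning name resolution for this one request only.
curl_via_ingress() {
    host=$1 node_ip=$2
    run curl -s --resolve "$host:80:$node_ip" "http://$host/"
}

curl_via_ingress nginx.foo.com 192.168.138.202
```

Calling it with nginx2.foo.com exercises the second rule of the Ingress, which routes to `sc-nginx-svc2`.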
