[scrapy] Collecting basic information on the national construction market with scrapy-redis

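Introduction

Environment: Python 3.6

Scrapy 1.5

A distributed crawling demo built with scrapy-redis. It is a simple example for beginners to refer to (if you know a better approach, please let me know!).

Source code: https://github.com/H3dg3h09/scrapy-redis-jzsc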

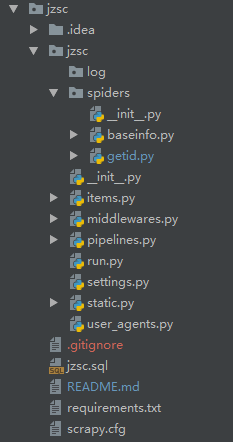

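Directory layout

A standard project layout. Storage uses MySQL, and the SQL file is uploaded along with the code.

static.py holds the MySQL connection class. It includes a method (borrowed from the web) that writes an item into the database, which is very convenient.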

    from jzsc.settings import DB
    import pymysql


    class Db:

        def __init__(self, host, port, user, password, db):
            self._cnx = pymysql.connect(host=host, port=port, user=user, password=password,
                                        db=db, charset="utf8")
            self._cursor = self._cnx.cursor()

        def insert_data(self, table_name, data):
            '''
            Insert a row built from an item, updating it if the key already exists.

            :param table_name: str
            :param data: dict
            :return: int, the insert id reported by the connection
            '''
            cols = list(data.keys())
            col_str = ", ".join(cols)
            val_str = ", ".join(["%s"] * len(cols))
            update_str = ", ".join("{} = %s".format(col) for col in cols)
            sql = "INSERT INTO {} ({}) VALUES ({}) ON DUPLICATE KEY UPDATE {}".format(
                table_name, col_str, val_str, update_str)

            # Values go in as query parameters (once for INSERT, once for UPDATE),
            # so the driver handles quoting, including non-string values.
            values = [data[col] for col in cols]
            self._cursor.execute(sql, values + values)  # execute the SQL
            i = self._cnx.insert_id()
            self._cnx.commit()  # write the changes
            return i

        @classmethod
        def init_db(cls):
            host = DB.get('DATABASE_HOST', '')
            user = DB.get('DATABASE_USER', '')
            password = DB.get('DATABASE_PASSWORD', '')
            db = DB.get('DATABASE_DB', '')
            # pymysql expects an integer port; 3306 is the MySQL default
            port = int(DB.get('DATABASE_PORT', 3306))
            return cls(host=host, port=port, user=user,
                       password=password, db=db)
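To see what an item-driven upsert looks like on the wire, here is a minimal sketch that only builds the SQL text and its parameter list, without touching a database. The helper name `build_upsert` and the sample values are hypothetical and are not part of the repository; the statement shape assumes MySQL's `INSERT ... ON DUPLICATE KEY UPDATE`.

```python
def build_upsert(table_name, data):
    """Return (sql, params) for an INSERT ... ON DUPLICATE KEY UPDATE statement."""
    cols = list(data.keys())
    col_str = ", ".join(cols)
    val_str = ", ".join(["%s"] * len(cols))
    update_str = ", ".join("{} = %s".format(col) for col in cols)
    sql = "INSERT INTO {} ({}) VALUES ({}) ON DUPLICATE KEY UPDATE {}".format(
        table_name, col_str, val_str, update_str)
    values = [data[col] for col in cols]
    # The values appear twice: once for the INSERT part, once for the UPDATE part.
    return sql, values + values


# Hypothetical item, for illustration only
sql, params = build_upsert("jz_info", {"name": "O'Neil Ltd", "province": "Beijing"})
print(sql)
print(params)
```

Only values can be passed as parameters. Table and column names are still formatted into the string, so they should come from trusted code (for example the item definition), never from scraped text.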

run.py is a script for debugging. I like to write a run script so that I can set breakpoints and debug in PyCharm.
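The repository's run.py is not shown here, so the following is only a guess at what such a script might contain: a small wrapper around Scrapy's `scrapy.cmdline.execute`, which runs the same code path as the `scrapy crawl` command. The names `build_argv` and `main` are hypothetical.

```python
def build_argv(spider_name):
    """Build the argument list equivalent to `scrapy crawl <spider_name>`."""
    return ["scrapy", "crawl", spider_name]


def main(spider_name="getid"):
    # Imported here so the helper above can be used without Scrapy installed.
    from scrapy.cmdline import execute
    execute(build_argv(spider_name))
```

Calling `main()` at the bottom of the file and launching it under the PyCharm debugger, with the project root (where scrapy.cfg lives) as the working directory, should then stop at breakpoints set inside the spiders.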

Spider

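getid crawls the URLs on the list pages and pushes them into redis for baseinfo to consume.

*My handling of pagination may be clumsy. I would appreciate it if someone could show me a more elegant way. The code is as follows: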

    # -*- coding: utf-8 -*-
    import ast
    import logging
    import re
    import time

    import redis
    from scrapy import FormRequest
    from scrapy_redis.spiders import RedisSpider

    from jzsc.settings import REDIS_URL  # redis configuration


    class GetidSpider(RedisSpider):
        name = 'getid'
        redis_key = 'getidspider:start_urls'
        _rds = redis.from_url(REDIS_URL, db=0, decode_responses=True)
        custom_settings = {
            # raw string, so the backslashes in the Windows path are kept literally
            'LOG_FILE': r'jzsc\log\{name}_{t}.txt'.format(name=name, t=time.strftime('%Y-%m-%d', time.localtime()))
        }
        form_data = {
            '$reload': '0',
            '$pgsz': '15',
        }
        page = 1
        cookies = {}
        max_page = 0

        def parse(self, response):
            hrefs = response.xpath('//tbody[@class="cursorDefault"]/tr/td/a/@href').extract()

            for href in hrefs:
                new_url = response.urljoin(href)
                self._rds.rpush('baseinfospider:start_urls', new_url)
                logging.log(logging.INFO, "{url}".format(url=new_url))

            if not self.max_page:  # read the page count from the first response only
                pagebar = response.xpath('//a[@sf="pagebar"]').extract_first()
                # capture the whole number after "pc:" (page count) and "tt:" (total)
                self.max_page = int(re.search(r'pc:(\d+)', pagebar).group(1))
                self.form_data['$total'] = re.search(r'tt:(\d+)', pagebar).group(1)
                # the 'cookies' header is written by the middleware as str(dict)
                raw_cookies = response.headers.get('cookies') or b'{}'
                self.cookies = ast.literal_eval(raw_cookies.decode('utf8'))

            self.page += 1
            if self.page <= self.max_page:
                self.form_data['$pg'] = str(self.page)

                yield FormRequest(response.url, callback=self.parse,
                                  cookies=self.cookies,
                                  formdata=self.form_data, dont_filter=True)
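One possible alternative for the pagination, offered as a sketch rather than a tested improvement: once the first response reveals the page count, build the form data for every remaining page up front instead of incrementing a counter in each callback. The helper names and the sample pagebar string below are hypothetical; the form keys (`$reload`, `$pgsz`, `$total`, `$pg`) are the ones used by the spider above.

```python
import re


def parse_pagebar(pagebar_html):
    """Pull the total row count and the page count out of the pagebar markup."""
    total = re.search(r'tt:(\d+)', pagebar_html).group(1)
    page_count = int(re.search(r'pc:(\d+)', pagebar_html).group(1))
    return total, page_count


def iter_page_formdata(total, page_count, page_size=15):
    """Yield one form-data dict per remaining page (page 1 is already fetched)."""
    for page in range(2, page_count + 1):
        yield {
            '$reload': '0',
            '$pgsz': str(page_size),
            '$total': total,
            '$pg': str(page),
        }


# Hypothetical pagebar snippet, for illustration only
sample = '<a sf="pagebar" sf:data="({pg:1,ps:15,tt:45,pc:3,})">'
total, page_count = parse_pagebar(sample)
forms = list(iter_page_formdata(total, page_count))
print(total, page_count, [f['$pg'] for f in forms])
```

Each dict could then be passed as `formdata` to its own `FormRequest` from the first callback, which would remove the shared `page` counter. Whether the site tolerates pages being requested out of order is an open question I have not verified.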

The baseinfo script extracts each company's basic information:

    # requires `import ast` and `from scrapy import Request` at module level
    def parse(self, response):
        base_div = response.xpath('//div[@class="user_info spmtop"]')[0]
        name = base_div.xpath('b/text()').extract_first()
        info = base_div.xpath('following-sibling::table[1]/tbody/tr/td/text()').extract()

        base_item = BasicInfoItem()
        base_item['table_name'] = 'jz_info'
        base_item['name'] = name
        base_item['social_code'] = info[0]
        base_item['legal_re'] = info[1]
        base_item['com_type'] = info[2]
        base_item['province'] = info[3]
        base_item['addr'] = info[4]
        yield base_item

        urls_ = response.xpath('//ul[@class="tinyTab datas_tabs"]/li/a/@data-url').extract()
        urls = {
            'credential_url_': urls_[0],
            'person_url_': urls_[1],
            'progress_url_': urls_[2],
            'g_behavior_url_': urls_[3],
            'b_behavior_url_': urls_[4],
            'blacklist_url_': urls_[5],
        }

        credential_url_ = urls_[0]
        credential_url = response.urljoin(credential_url_)

        # the 'cookies' header is written by the middleware as str(dict),
        # so a literal parser is enough and avoids eval on response data
        cookies = ast.literal_eval(response.headers['cookies'].decode('utf8'))
        yield Request(credential_url, callback=self.get_credential,
                      meta={'social_code': info[0], 'urls': urls, 'cookies': cookies},
                      cookies=cookies, dont_filter=True)
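Indexing `info[0]` through `info[4]` raises `IndexError` whenever a page has fewer table cells than expected. A minimal sketch of a more defensive mapping, assuming the cell order shown above; `FIELDS` and `map_info` are hypothetical names and the sample values are made up:

```python
FIELDS = ['social_code', 'legal_re', 'com_type', 'province', 'addr']


def map_info(info):
    """Pair extracted cell texts with field names; missing cells become None."""
    padded = list(info) + [None] * (len(FIELDS) - len(info))
    # zip stops at the shorter sequence, so extra cells are ignored
    return dict(zip(FIELDS, padded))


# Hypothetical short row, for illustration only
row = map_info(['CODE-1', 'Some Person'])
print(row)
```

The resulting dict could be copied into the item with a loop over `FIELDS`, which also keeps the field list in one place.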

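I wrote a middleware that stores the cookies on the response, because the cookie option is not enabled in settings.py.

Besides that, there is also a middleware that sets a random User-Agent.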

    from random import choice

    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware

    # USER_AGENTS is expected to be a list of user-agent strings;
    # its import is not shown in this excerpt


    class JszcDownloaderUserAgentMiddleware(UserAgentMiddleware):

        def __init__(self, user_agent=''):
            super(JszcDownloaderUserAgentMiddleware, self).__init__(user_agent)

        def process_request(self, request, spider):
            if not request.headers.get('User-Agent'):
                user_agent = choice(USER_AGENTS)
                request.headers.setdefault(b'User-Agent', user_agent)


    class JszcDownloaderAutoCookieMiddleware():

        def process_response(self, request, response, spider):
            cookies = response.headers.get('Set-Cookie')
            if cookies:
                pairs = filter(lambda x: '=' in x, cookies.decode('utf8').split(';'))
                coo = {}
                for pair in pairs:
                    # split on the first '=' only, so values containing '=' stay intact
                    key, value = pair.split('=', 1)
                    coo[key.strip()] = value

                # stash the parsed cookies as str(dict) for the spiders to read back
                response.headers['cookies'] = str(coo)

            return response
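Splitting a `Set-Cookie` value on `;` and `=` also picks up attributes such as `Path`. As a hedged alternative, the standard library's `http.cookies.SimpleCookie` can do the parsing and keeps attributes separate from the cookie itself. The function name `parse_set_cookie` and the sample header are hypothetical.

```python
from http.cookies import SimpleCookie


def parse_set_cookie(header_value):
    """Return {name: value} for the cookies in one Set-Cookie header value."""
    jar = SimpleCookie()
    jar.load(header_value)
    # Attributes such as Path or HttpOnly are stored on the morsel, not as cookies.
    return {name: morsel.value for name, morsel in jar.items()}


# Hypothetical header value, for illustration only
parsed = parse_set_cookie("JSESSIONID=abc123; Path=/; HttpOnly")
print(parsed)
```

Inside the middleware, the decoded header could be passed to this helper in place of the manual split, so that only real cookies are sent back with later requests.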

Running

    # in cmd
    scrapy crawl getid
    scrapy crawl baseinfo

    # in redis-cli
    lpush getidspider:start_urls http://jzsc.mohurd.gov.cn/dataservice/query/comp/list

*Remember to run the SQL file first to create the database.

References:

Database insert method: 静觅 | Scrapy小技巧-MySQL存储 (Jingmi blog, "Scrapy tips: MySQL storage")