CNN practice 2021-10-16

Coding exercises

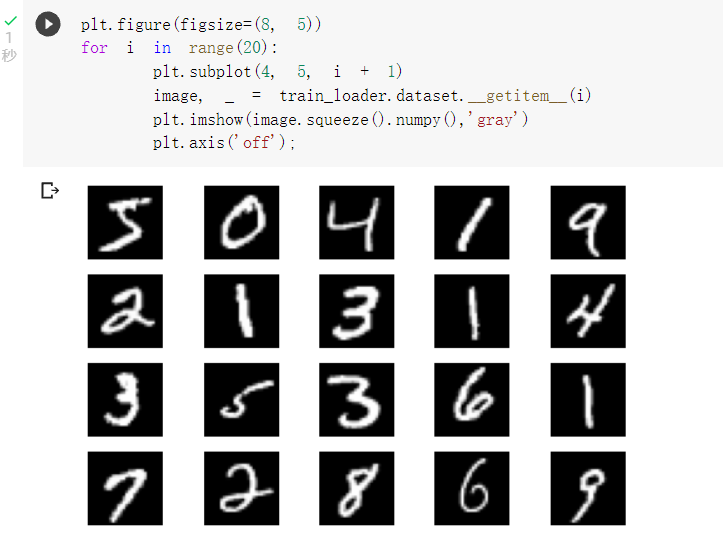

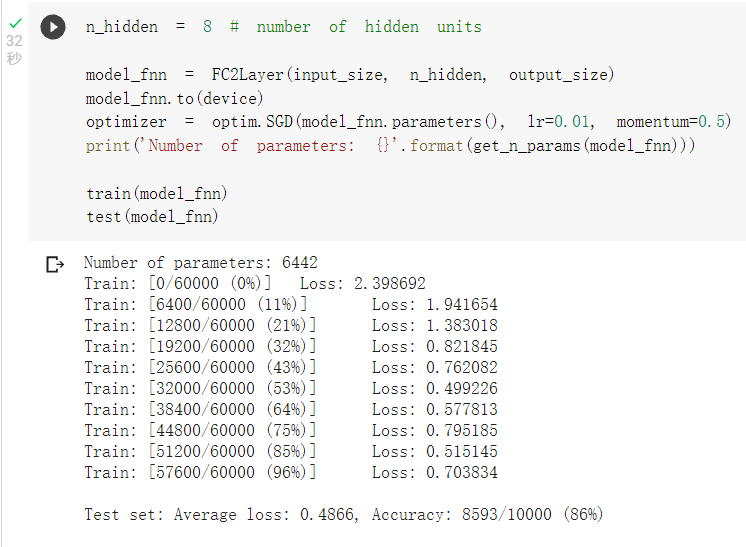

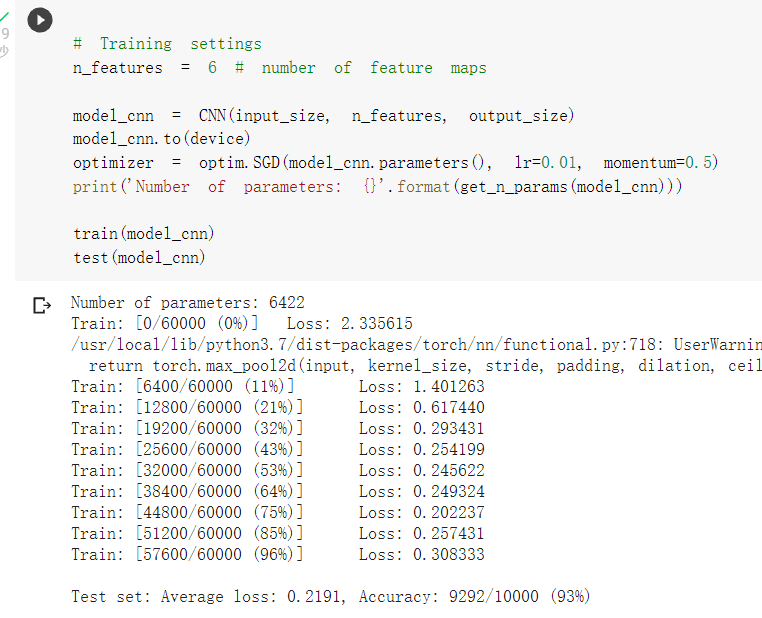

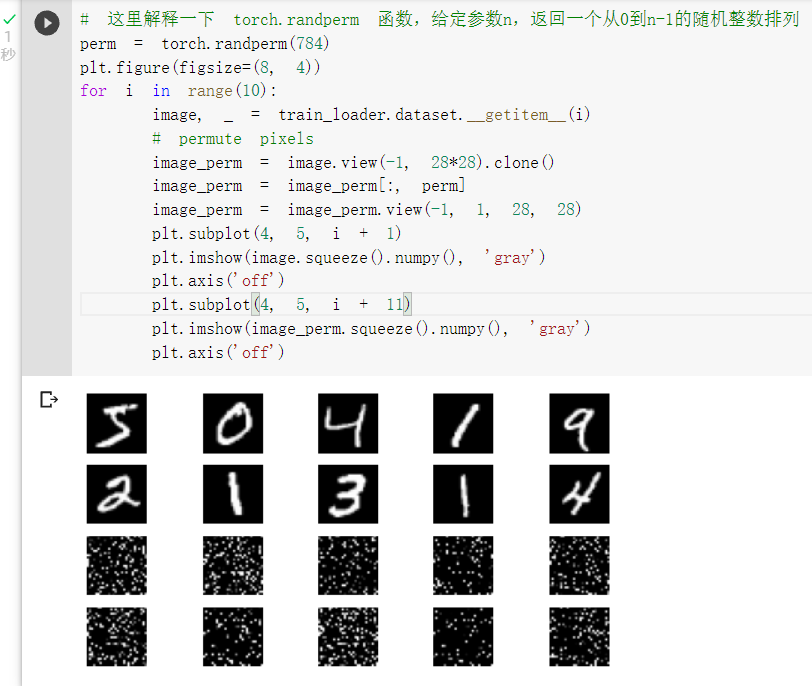

Lab 1

Model code

    import torch.nn as nn
    import torch.optim as optim

    class FC2Layer(nn.Module):
        def __init__(self, input_size, n_hidden, output_size):
            super(FC2Layer, self).__init__()
            self.input_size = input_size
            # Two hidden layers with ReLU, then log-probabilities over the classes
            self.network = nn.Sequential(
                nn.Linear(input_size, n_hidden),
                nn.ReLU(),
                nn.Linear(n_hidden, n_hidden),
                nn.ReLU(),
                nn.Linear(n_hidden, output_size),
                nn.LogSoftmax(dim=1)
            )

        def forward(self, x):
            # Flatten each image into a vector of length input_size
            x = x.view(-1, self.input_size)
            return self.network(x)

    # Create an instance (input_size, n_hidden and output_size are set elsewhere in the lab)
    model_fnn = FC2Layer(input_size, n_hidden, output_size)
    optimizer = optim.SGD(model_fnn.parameters(), lr=0.01, momentum=0.5)
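The final `nn.LogSoftmax(dim=1)` layer makes the network output log-probabilities rather than raw scores. Below is a minimal pure-Python sketch of what that layer computes for a single row of scores. It assumes the output is then scored with a negative log-likelihood loss, since the training loop is not shown in these notes. `log_softmax` and `nll` are illustrative helpers written for this sketch, not the PyTorch functions, and the scores are made up.

```python
import math

def log_softmax(scores):
    """Log-probabilities for one row of raw scores (numerically stable)."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]

def nll(log_probs, target):
    """Negative log-likelihood of the target class."""
    return -log_probs[target]

scores = [2.0, 1.0, 0.1]                    # hypothetical raw outputs for 3 classes
log_probs = log_softmax(scores)
probs = [math.exp(lp) for lp in log_probs]  # exponentiating recovers probabilities
loss = nll(log_probs, target=0)
print(log_probs, sum(probs), loss)
```

Log-softmax followed by this loss gives the same quantity that `nn.CrossEntropyLoss` computes directly from raw scores, which is why the Lab 2 and Lab 3 models can return the output of their last linear layer without a log-softmax.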

    import torch.nn.functional as F

    class CNN(nn.Module):
        def __init__(self, input_size, n_feature, output_size):
            super(CNN, self).__init__()
            self.n_feature = n_feature
            self.conv1 = nn.Conv2d(in_channels=1, out_channels=n_feature, kernel_size=5)
            self.conv2 = nn.Conv2d(n_feature, n_feature, kernel_size=5)
            self.fc1 = nn.Linear(n_feature*4*4, 50)
            self.fc2 = nn.Linear(50, 10)

        def forward(self, x, verbose=False):
            # Two conv -> ReLU -> 2x2 max-pool stages
            x = self.conv1(x)
            x = F.relu(x)
            x = F.max_pool2d(x, kernel_size=2)
            x = self.conv2(x)
            x = F.relu(x)
            x = F.max_pool2d(x, kernel_size=2)
            # Flatten the n_feature 4x4 feature maps for the fully connected layers
            x = x.view(-1, self.n_feature*4*4)
            x = self.fc1(x)
            x = F.relu(x)
            x = self.fc2(x)
            x = F.log_softmax(x, dim=1)
            return x

    # Create an instance (input_size, n_features and output_size are set elsewhere in the lab)
    model_cnn = CNN(input_size, n_features, output_size)
    optimizer = optim.SGD(model_cnn.parameters(), lr=0.01, momentum=0.5)
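The `n_feature*4*4` input size of `fc1` follows from how the two convolution and pooling stages shrink the image. The sketch below traces the side length in plain Python. It assumes a 28x28 single-channel input (MNIST-sized, which is consistent with `in_channels=1` but not stated in these notes). `conv_out` is a helper written for this sketch, and the value of `n_feature` is hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                                  # assumed 28x28 input
size = conv_out(size, kernel=5)            # conv1: 28 -> 24
size = conv_out(size, kernel=2, stride=2)  # max_pool2d: 24 -> 12
size = conv_out(size, kernel=5)            # conv2: 12 -> 8
size = conv_out(size, kernel=2, stride=2)  # max_pool2d: 8 -> 4

n_feature = 6                              # hypothetical value for illustration
flat_features = n_feature * size * size    # length of the vector fed to fc1
print(size, flat_features)
```

If the input resolution or a kernel size changes, the `4*4` factor in `fc1` and in `x.view` has to change with it.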

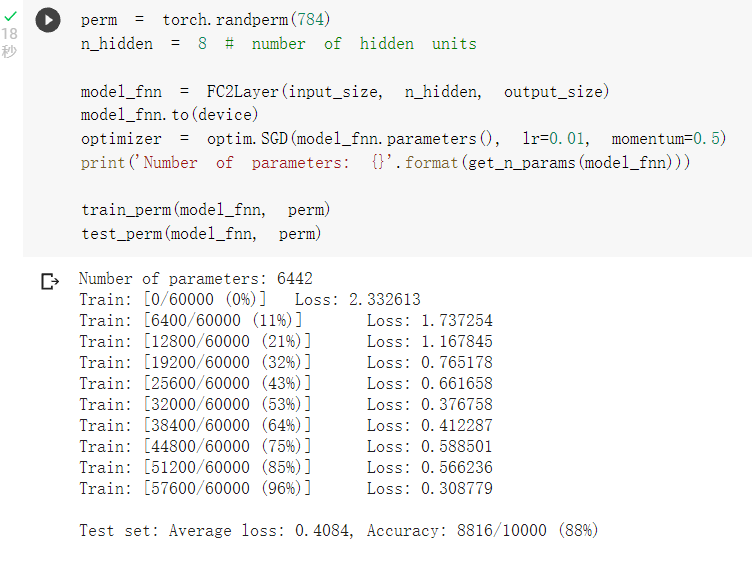

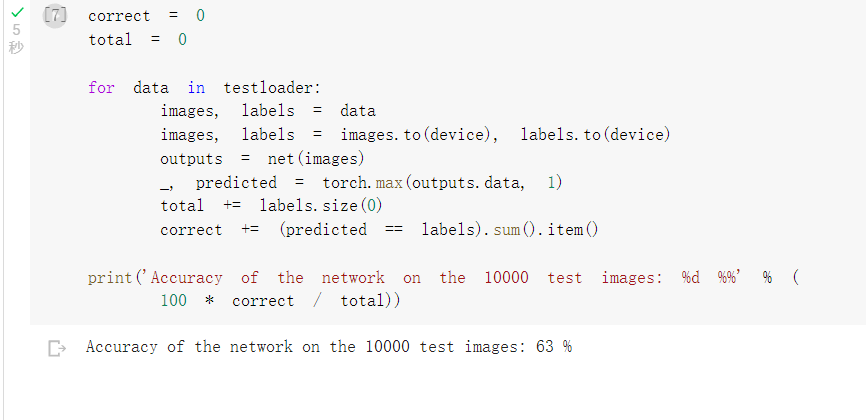

Screenshots

Lab 2

Model code

    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(3, 6, 5)
            self.pool = nn.MaxPool2d(2, 2)
            self.conv2 = nn.Conv2d(6, 16, 5)
            self.fc1 = nn.Linear(16 * 5 * 5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, x):
            x = self.pool(F.relu(self.conv1(x)))
            x = self.pool(F.relu(self.conv2(x)))
            # Flatten the 16 feature maps of size 5x5
            x = x.view(-1, 16 * 5 * 5)
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            # Raw scores; CrossEntropyLoss applies log-softmax internally
            x = self.fc3(x)
            return x

    # net: a Net instance created elsewhere (not shown in these notes)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.001)
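To see where the capacity of `Net` sits, the parameter count can be tallied by hand from the layer definitions above. This is a rough sketch in plain Python. `conv_params` and `linear_params` are helpers written for this sketch, and both count one bias per output channel or feature, which matches the PyTorch defaults.

```python
def conv_params(in_ch, out_ch, kernel):
    """Weights plus biases of a square Conv2d layer."""
    return in_ch * out_ch * kernel * kernel + out_ch

def linear_params(in_features, out_features):
    """Weights plus biases of a Linear layer."""
    return in_features * out_features + out_features

counts = {
    "conv1": conv_params(3, 6, 5),
    "conv2": conv_params(6, 16, 5),
    "fc1": linear_params(16 * 5 * 5, 120),
    "fc2": linear_params(120, 84),
    "fc3": linear_params(84, 10),
}
total = sum(counts.values())
for name, n in counts.items():
    print(f"{name}: {n}")
print("total:", total)
```

Most of the parameters are in `fc1`. The two convolutional layers together make up under 5% of the total, because each filter is reused at every image position.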

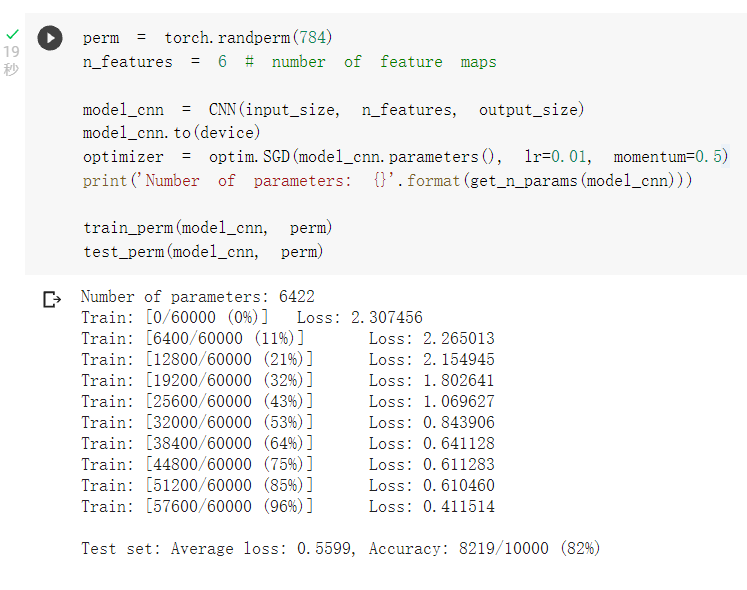

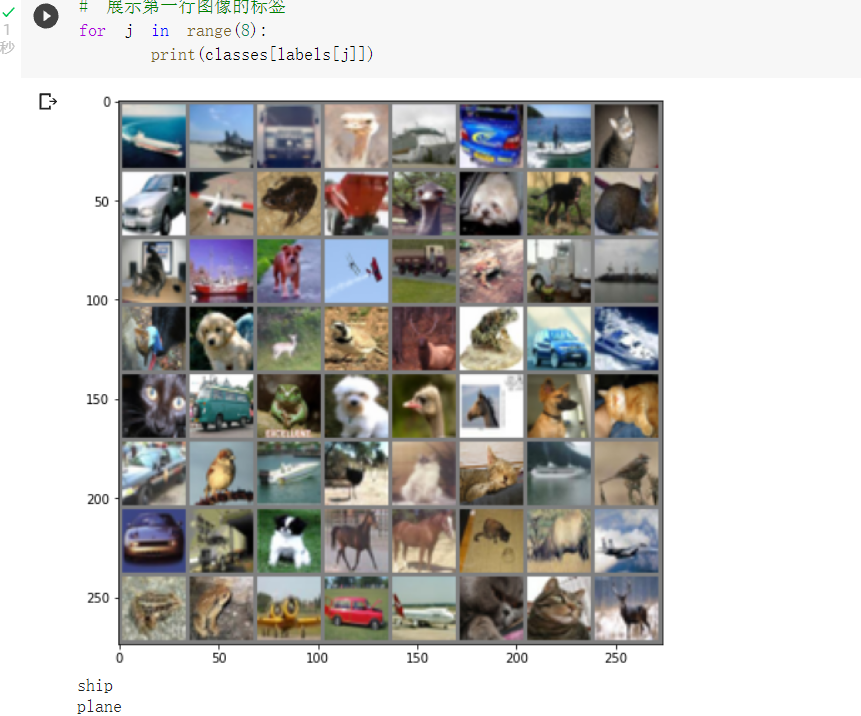

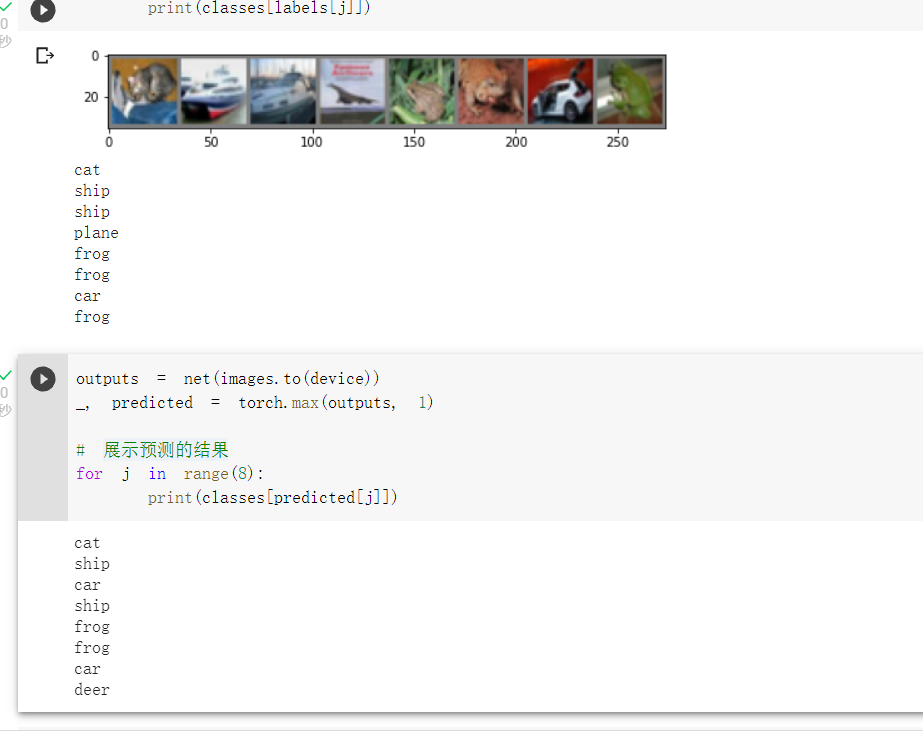

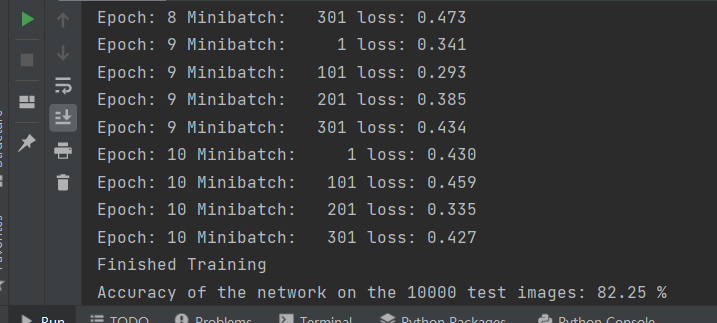

Screenshots

Lab 3

Training on Colab was too slow, so I ran this lab locally.

Model code

    import torch.nn as nn
    import torch.optim as optim

    class VGG(nn.Module):
        def __init__(self):
            super(VGG, self).__init__()
            # Numbers are conv output channels; 'M' is a 2x2 max-pool
            self.cfg = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']
            self.features = self._make_layers(self.cfg)
            self.classifier = nn.Linear(512, 10)

        def forward(self, x):
            out = self.features(x)
            # Flatten everything except the batch dimension
            out = out.view(out.size(0), -1)
            out = self.classifier(out)
            return out

        def _make_layers(self, cfg):
            layers = []
            in_channels = 3
            for x in cfg:
                if x == 'M':
                    layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
                else:
                    layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),
                               nn.BatchNorm2d(x),
                               nn.ReLU(inplace=True)]
                    in_channels = x
            layers += [nn.AvgPool2d(kernel_size=1, stride=1)]
            return nn.Sequential(*layers)

    # net: a VGG instance created elsewhere (not shown in these notes)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.001)
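The classifier is `nn.Linear(512, 10)` because the feature extractor ends with 512 channels at 1x1 resolution. The sketch below walks through `cfg` in plain Python to check this. It assumes a 32x32 RGB input (CIFAR-10-sized, which these notes do not state). `trace` is a helper written for this sketch.

```python
cfg = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

def trace(cfg, in_channels=3, size=32):
    """Follow (channels, side length) through the layers built from cfg."""
    channels = in_channels
    for x in cfg:
        if x == 'M':
            size //= 2      # MaxPool2d(kernel_size=2, stride=2) halves each side
        else:
            channels = x    # 3x3 conv with padding=1 keeps the spatial size
    return channels, size   # the final AvgPool2d(1, 1) leaves the shape unchanged

channels, size = trace(cfg)
flat_features = channels * size * size   # must equal the classifier's in_features
print(channels, size, flat_features)
```

With five pooling layers, a 32x32 input shrinks to 1x1. A larger input, or fewer `'M'` entries in `cfg`, would leave more than 512 flattened features and the classifier would need to be resized.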

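Screenshots

Reflections

Compared with a plain fully connected network, a CNN has an overwhelming advantage in image processing, because convolution and pooling exploit the local spatial structure of an image instead of treating it as a flat vector. A good model usually also depends on a sufficiently large dataset. Classic architectures such as VGG perform well across a fairly broad range of tasks.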