[Close Code Reading] Graph Convolution over Pruned Dependency Trees for Relation Extraction (1)

First, download and unzip GloVe vectors from the Stanford NLP group website, with:

chmod +x download.sh; ./download.sh

The `chmod +x` command gives download.sh execute permission.

Run download.sh with Git Bash. The script reads:

```bash
#!/bin/bash

cd dataset; mkdir glove
cd glove

echo "==> Downloading glove vectors..."
wget http://nlp.stanford.edu/data/glove.840B.300d.zip

echo "==> Unzipping glove vectors..."
unzip glove.840B.300d.zip
rm glove.840B.300d.zip

echo "==> Done."
```

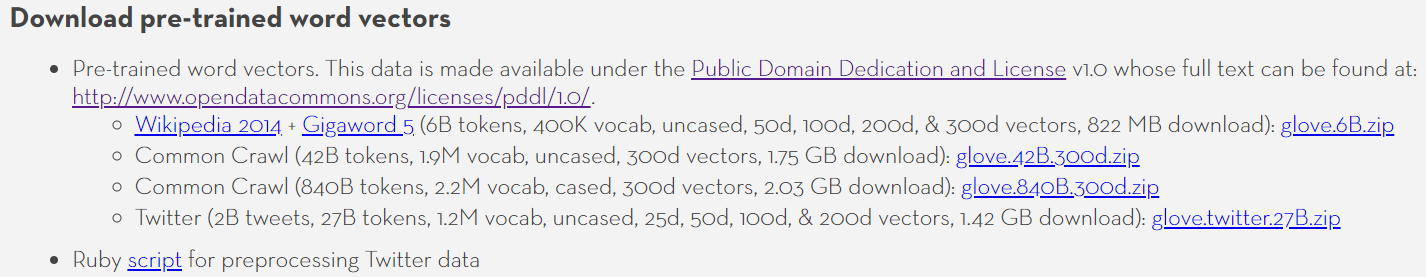

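Choose a suitable set of pre-trained GloVe vectors:

We are using the TACRED dataset, so we pick the third set of pre-trained vectors in the list, which should correspond to glove.840B.300d, the file that download.sh fetches.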

The TACRED annotation format is as follows.

A no-relation instance from train.json:

```json
{
  "id": "61b3a65fb906688c92a1",
  "relation": "no_relation",
  "token": ["Ali", "lied", "about", "having", "to", "leave", "for", "her", "job", "to", "see", "if", "Jake", "would", "end", "the", "show", "to", "be", "with", "her", "."],
  "subj_start": 20,
  "subj_end": 20,
  "obj_start": 12,
  "obj_end": 12,
  "subj_type": "PERSON",
  "obj_type": "PERSON",
  "stanford_pos": ["NNP", "VBD", "IN", "VBG", "TO", "VB", "IN", "PRP$", "NN", "TO", "VB", "IN", "NNP", "MD", "VB", "DT", "NN", "TO", "VB", "IN", "PRP", "."],
  "stanford_ner": ["PERSON", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "PERSON", "O", "O", "O", "O", "O", "O", "O", "O", "O"],
  "stanford_head": ["2", "0", "4", "2", "6", "4", "11", "9", "11", "11", "6", "15", "15", "15", "11", "17", "15", "21", "21", "21", "17", "2"],
  "stanford_deprel": ["nsubj", "ROOT", "mark", "advcl", "mark", "xcomp", "mark", "nmod:poss", "nsubj", "mark", "advcl", "mark", "nsubj", "aux", "advcl", "det", "dobj", "mark", "cop", "case", "acl", "punct"]
}
```
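The span fields can be read as follows. This is a minimal sketch, assuming (as the instance above suggests) that `subj_start`/`subj_end` and `obj_start`/`obj_end` are 0-based and inclusive. The helper name `span_text` is hypothetical and not part of the repository.

```python
# Hypothetical helper (not from the repository): join an inclusive token span.
def span_text(tokens, start, end):
    """Return tokens[start..end] (0-based, both ends inclusive) as one string."""
    return " ".join(tokens[start:end + 1])


# Token list and indices copied from the train.json instance above.
tokens = ["Ali", "lied", "about", "having", "to", "leave", "for", "her", "job",
          "to", "see", "if", "Jake", "would", "end", "the", "show", "to", "be",
          "with", "her", "."]

subject = span_text(tokens, 20, 20)  # subj_start, subj_end
obj = span_text(tokens, 12, 12)      # obj_start, obj_end
print(subject, "|", obj)             # her | Jake
```

Under this reading the subject is the second "her" and the object is "Jake", which matches the `PERSON`/`PERSON` entity types of the instance.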

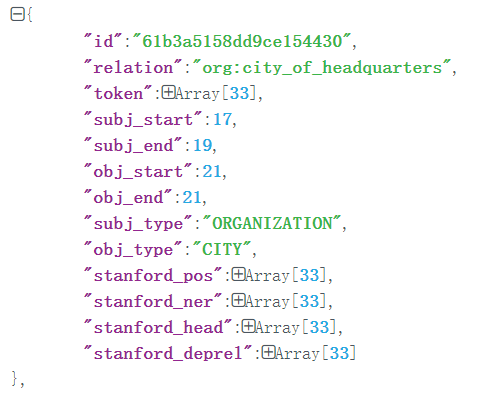

An instance with a relation from train.json (the 33-element token array was collapsed to `Array[33]` in the JSON viewer):

```json
{
  "id": "61b3a5158dd9ce154430",
  "relation": "org:city_of_headquarters",
  "token": Array[33],
  "subj_start": 17,
  "subj_end": 19,
  "obj_start": 21,
  "obj_end": 21,
  "subj_type": "ORGANIZATION",
  "obj_type": "CITY",
  "stanford_pos": ["NNP", "NNP", ",", "CD", ",", "IN", "NNP", ",", "NNP", ",", "VBD", "VBN", "NNP", "IN", "PRP$", "NN", "IN", "NNP", "NNP", "NNP", "IN", "NNP", ",", "WRB", "NN", "VBD", "PRP", "VBD", "NN", "IN", "PRP$", "NN", "."],
  "stanford_ner": ["PERSON", "PERSON", "O", "NUMBER", "O", "O", "LOCATION", "O", "LOCATION", "O", "O", "O", "DATE", "O", "O", "O", "O", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "O", "LOCATION", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"],
  "stanford_head": ["2", "12", "2", "2", "2", "7", "2", "7", "7", "7", "12", "0", "12", "16", "16", "12", "20", "20", "20", "16", "22", "20", "22", "26", "26", "22", "28", "26", "28", "32", "32", "29", "12"],
  "stanford_deprel": ["compound", "nsubjpass", "punct", "amod", "punct", "case", "nmod", "punct", "appos", "punct", "auxpass", "ROOT", "nmod:tmod", "case", "nmod:poss", "nmod", "case", "compound", "compound", "nmod", "case", "nmod", "punct", "advmod", "nsubj", "acl:relcl", "nsubj", "ccomp", "dobj", "case", "nmod:poss", "nmod", "punct"]
}
```

An instance with a relation from dev.json (1):

```json
{
  "id": "e7798fb92683ba431dd7",
  "relation": "per:title",
  "token": ["From", "his", "perch", "as", "a", "researcher", "for", "IBM", "in", "New", "York", ",", "where", "he", "worked", "for", "decades", "before", "accepting", "a", "position", "at", "Yale", "University", ",", "he", "noticed", "patterns", "that", "other", "researchers", "may", "have", "overlooked", "in", "their", "own", "data", ",", "then", "often", "swooped", "in", "to", "collaborate", "."],
  "subj_start": 1,
  "subj_end": 1,
  "obj_start": 5,
  "obj_end": 5,
  "subj_type": "PERSON",
  "obj_type": "TITLE",
  "stanford_pos": ["IN", "PRP$", "NN", "IN", "DT", "NN", "IN", "NNP", "IN", "NNP", "NNP", ",", "WRB", "PRP", "VBD", "IN", "NNS", "IN", "VBG", "DT", "NN", "IN", "NNP", "NNP", ",", "PRP", "VBD", "NNS", "IN", "JJ", "NNS", "MD", "VB", "VBN", "IN", "PRP$", "JJ", "NNS", ",", "RB", "RB", "VBD", "IN", "TO", "VB", "."],
  "stanford_ner": ["O", "O", "O", "O", "O", "O", "O", "ORGANIZATION", "O", "LOCATION", "LOCATION", "O", "O", "O", "O", "O", "DURATION", "O", "O", "O", "O", "O", "ORGANIZATION", "ORGANIZATION", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"],
  "stanford_head": ["3", "3", "27", "6", "6", "3", "8", "6", "11", "11", "6", "11", "15", "15", "11", "17", "15", "19", "15", "21", "19", "24", "24", "21", "27", "27", "0", "27", "34", "31", "34", "34", "34", "28", "38", "38", "38", "34", "27", "42", "42", "27", "42", "45", "42", "27"],
  "stanford_deprel": ["case", "nmod:poss", "nmod", "case", "det", "nmod", "case", "nmod", "case", "compound", "nmod", "punct", "advmod", "nsubj", "acl:relcl", "case", "nmod", "mark", "advcl", "det", "dobj", "case", "compound", "nmod", "punct", "nsubj", "ROOT", "dobj", "mark", "amod", "nsubj", "aux", "aux", "ccomp", "case", "nmod:poss", "amod", "nmod", "punct", "advmod", "advmod", "dep", "compound:prt", "mark", "xcomp", "punct"]
}
```

An instance with a relation from dev.json (2):

```json
{
  "id": "e7798eaebf3179c706bf",
  "relation": "org:website",
  "token": ["A123", ":", "http://wwwa123systemscom/", "Production", "of", "stimulus-aided", "car", "batteries", "revs", "up", "Battery", "maker", "A123", "Systems", "Inc", "planned", "to", "open", "a", "new", "lithium", "ion", "battery", "plant", "Monday", "in", "Livonia", ",", "Michigan", "."],
  "subj_start": 0,
  "subj_end": 0,
  "obj_start": 2,
  "obj_end": 2,
  "subj_type": "ORGANIZATION",
  "obj_type": "URL",
  "stanford_pos": ["NN", ":", "NN", "NN", "IN", "JJ", "NN", "NNS", "VBZ", "RP", "NN", "NN", "NNP", "NNPS", "NNP", "VBD", "TO", "VB", "DT", "JJ", "NN", "NN", "NN", "NN", "NNP", "IN", "NNP", ",", "NNP", "."],
  "stanford_ner": ["O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "O", "O", "O", "O", "O", "O", "O", "O", "O", "DATE", "O", "LOCATION", "O", "LOCATION", "O"],
  "stanford_head": ["0", "1", "4", "9", "8", "8", "8", "4", "1", "9", "15", "15", "15", "15", "16", "9", "18", "16", "24", "24", "24", "24", "24", "18", "18", "27", "18", "27", "27", "1"],
  "stanford_deprel": ["ROOT", "punct", "compound", "nsubj", "case", "amod", "compound", "nmod", "appos", "compound:prt", "compound", "compound", "compound", "compound", "nsubj", "ccomp", "mark", "xcomp", "det", "amod", "compound", "compound", "compound", "dobj", "nmod:tmod", "case", "nmod", "punct", "appos", "punct"]
}
```

A no-relation instance from dev.json:

```json
{
  "id": "e779865fb91dc9cef1f3",
  "relation": "no_relation",
  "token": ["The", "Academy", "of", "Motion", "Picture", "Arts", "and", "Sciences", "awarded", "Edwards", "--", "who", "was", "married", "to", "actress", "Julie", "Andrews", "--", "an", "honorary", "lifetime", "achievement", "Oscar", "in", "2004", "."],
  "subj_start": 9,
  "subj_end": 9,
  "obj_start": 15,
  "obj_end": 15,
  "subj_type": "PERSON",
  "obj_type": "TITLE",
  "stanford_pos": ["DT", "NN", "IN", "NNP", "NNP", "NNS", "CC", "NNPS", "VBD", "NNP", ":", "WP", "VBD", "VBN", "TO", "NN", "NNP", "NNP", ":", "DT", "JJ", "NN", "NN", "NNP", "IN", "CD", "."],
  "stanford_ner": ["O", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "ORGANIZATION", "O", "PERSON", "O", "O", "O", "O", "O", "O", "PERSON", "PERSON", "O", "O", "O", "O", "O", "O", "O", "DATE", "O"],
  "stanford_head": ["2", "9", "6", "6", "6", "2", "6", "6", "0", "9", "10", "14", "14", "10", "18", "18", "18", "14", "10", "24", "24", "24", "24", "10", "26", "24", "9"],
  "stanford_deprel": ["det", "nsubj", "case", "compound", "compound", "nmod", "cc", "conj", "ROOT", "dobj", "punct", "nsubjpass", "auxpass", "acl:relcl", "case", "compound", "compound", "nmod", "punct", "det", "amod", "compound", "compound", "dep", "case", "nmod", "punct"]
}
```

A no-relation instance from test.json:

```json
{
  "id": "098f665fb956837273f5",
  "relation": "no_relation",
  "token": ["He", "named", "one", "as", "Shah", "Abdul", "Aziz", ",", "a", "member", "of", "a", "pro-Taliban", "religious", "party", "elected", "to", "parliament", "'s", "lower", "house", "in", "2002", "."],
  "subj_start": 4,
  "subj_end": 6,
  "obj_start": 2,
  "obj_end": 2,
  "subj_type": "PERSON",
  "obj_type": "NUMBER",
  "stanford_pos": ["PRP", "VBD", "CD", "IN", "NNP", "NNP", "NNP", ",", "DT", "NN", "IN", "DT", "JJ", "JJ", "NN", "VBN", "TO", "NN", "POS", "JJR", "NN", "IN", "CD", "."],
  "stanford_ner": ["O", "O", "NUMBER", "O", "PERSON", "PERSON", "PERSON", "O", "O", "O", "O", "O", "MISC", "O", "O", "O", "O", "O", "O", "O", "O", "O", "DATE", "O"],
  "stanford_head": ["2", "0", "2", "7", "7", "7", "2", "7", "10", "7", "15", "15", "15", "15", "10", "15", "21", "21", "18", "21", "16", "23", "16", "2"],
  "stanford_deprel": ["nsubj", "ROOT", "dobj", "case", "compound", "compound", "nmod", "punct", "det", "appos", "case", "det", "amod", "amod", "nmod", "acl", "case", "nmod:poss", "case", "amod", "nmod", "case", "nmod", "punct"]
}
```

An instance with a relation from test.json:

```json
{
  "id": "098f65f2e89007458d2d",
  "relation": "org:top_members/employees",
  "token": ["NDA", "commercial", "director", "John", "Clarke", "said", ":", "``", "The", "NDA", "has", "enjoyed", "a", "successful", "five-year", "relationship", "with", "Westinghouse", "."],
  "subj_start": 9,
  "subj_end": 9,
  "obj_start": 3,
  "obj_end": 4,
  "subj_type": "ORGANIZATION",
  "obj_type": "PERSON",
  "stanford_pos": ["NNP", "JJ", "NN", "NNP", "NNP", "VBD", ":", "``", "DT", "NNP", "VBZ", "VBN", "DT", "JJ", "JJ", "NN", "IN", "NNP", "."],
  "stanford_ner": ["ORGANIZATION", "O", "O", "PERSON", "PERSON", "O", "O", "O", "O", "ORGANIZATION", "O", "O", "O", "O", "DURATION", "O", "O", "ORGANIZATION", "O"],
  "stanford_head": ["5", "5", "5", "5", "6", "0", "6", "6", "10", "12", "12", "6", "16", "16", "16", "12", "18", "16", "6"],
  "stanford_deprel": ["compound", "amod", "compound", "compound", "nsubj", "ROOT", "punct", "punct", "det", "nsubj", "aux", "ccomp", "det", "amod", "amod", "dobj", "case", "nmod", "punct"]
}
```
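The `stanford_head` field encodes the dependency tree. The sketch below assumes the usual CoNLL-style convention, which the instances above are consistent with: heads are 1-based token positions stored as strings, and `"0"` marks the token whose `stanford_deprel` is `ROOT`. The function `path_to_root` is a hypothetical helper for illustration, not code from the repository.

```python
# Hypothetical helper: follow head pointers from one token up to the root.
def path_to_root(tokens, heads, index):
    """Return the tokens on the path from tokens[index] (0-based) to the root."""
    path = [tokens[index]]
    head = int(heads[index])       # heads are 1-based strings; "0" means ROOT
    while head != 0:
        index = head - 1           # convert the 1-based head to a 0-based index
        path.append(tokens[index])
        head = int(heads[index])
    return path


# Tokens and heads copied from the test.json instance with a relation above.
tokens = ["NDA", "commercial", "director", "John", "Clarke", "said", ":", "``",
          "The", "NDA", "has", "enjoyed", "a", "successful", "five-year",
          "relationship", "with", "Westinghouse", "."]
heads = ["5", "5", "5", "5", "6", "0", "6", "6", "10", "12", "12", "6", "16",
         "16", "16", "12", "18", "16", "6"]

subj_path = path_to_root(tokens, heads, 9)  # subject "NDA" at subj_start = 9
obj_path = path_to_root(tokens, heads, 3)   # object starts at obj_start = 3
print(subj_path)  # ['NDA', 'enjoyed', 'said']
print(obj_path)   # ['John', 'Clarke', 'said']
```

Both paths meet at "said", the root of the sentence. Tree structure of this kind is what the model named in the title operates on.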

Then prepare vocabulary and initial word vectors with:

python prepare_vocab.py dataset/tacred dataset/vocab --glove_dir dataset/glove

This will write vocabulary and word vectors as a numpy matrix into the dir dataset/vocab.

We start with the main() function and look at each helper function in detail as it comes up. The line numbers cited below count the lines of the listing they refer to, blank lines included:

```python
def main():
    args = parse_args()

    # input files
    train_file = args.data_dir + '/train.json'
    dev_file = args.data_dir + '/dev.json'
    test_file = args.data_dir + '/test.json'
    wv_file = args.glove_dir + '/' + args.wv_file
    wv_dim = args.wv_dim

    # output files
    helper.ensure_dir(args.vocab_dir)  # create the output vocab directory if it does not exist
    vocab_file = args.vocab_dir + '/vocab.pkl'
    emb_file = args.vocab_dir + '/embedding.npy'

    # load files
    print("loading files...")
    train_tokens = load_tokens(train_file)
    dev_tokens = load_tokens(dev_file)
    test_tokens = load_tokens(test_file)
    if args.lower:
        train_tokens, dev_tokens, test_tokens = [[t.lower() for t in tokens] for tokens in\
                (train_tokens, dev_tokens, test_tokens)]

    # load glove
    print("loading glove...")
    glove_vocab = vocab.load_glove_vocab(wv_file, wv_dim)
    print("{} words loaded from glove.".format(len(glove_vocab)))

    print("building vocab...")
    v = build_vocab(train_tokens, glove_vocab, args.min_freq)

    print("calculating oov...")  # oov: out-of-vocabulary
    datasets = {'train': train_tokens, 'dev': dev_tokens, 'test': test_tokens}
    for dname, d in datasets.items():
        total, oov = count_oov(d, v)
        print("{} oov: {}/{} ({:.2f}%)".format(dname, oov, total, oov*100.0/total))

    print("building embeddings...")
    embedding = vocab.build_embedding(wv_file, v, wv_dim)
    print("embedding size: {} x {}".format(*embedding.shape))

    print("dumping to files...")
    with open(vocab_file, 'wb') as outfile:
        pickle.dump(v, outfile)
    np.save(emb_file, embedding)
    print("all done.")
```

Line 2 of main() (`args = parse_args()`) calls the argument parser:

```python
import argparse


def parse_args():
    parser = argparse.ArgumentParser(description='Prepare vocab for relation extraction.')
    parser.add_argument('data_dir', help='TACRED directory.')
    parser.add_argument('vocab_dir', help='Output vocab directory.')
    parser.add_argument('--glove_dir', default='dataset/glove', help='GloVe directory.')
    parser.add_argument('--wv_file', default='glove.840B.300d.txt', help='GloVe vector file.')
    parser.add_argument('--wv_dim', type=int, default=300, help='GloVe vector dimension.')
    parser.add_argument('--min_freq', type=int, default=0, help='If > 0, use min_freq as the cutoff.')
    parser.add_argument('--lower', action='store_true', help='If specified, lowercase all words.')
    # action='store_true': the option becomes True whenever the flag is present on the command line.
    args = parser.parse_args()
    return args
```

The positional arguments data_dir and vocab_dir were already given on the command line, as dataset/tacred and dataset/vocab.
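To see how the command line maps onto `args`, here is a small sketch that parses an explicit argument list instead of `sys.argv`. The `argv` parameter and the trimmed option set are simplifications made for this illustration and are not in the original script.

```python
import argparse


def parse_args(argv=None):
    # Trimmed copy of the parser above; `argv` is added only for this demo.
    parser = argparse.ArgumentParser(description='Prepare vocab for relation extraction.')
    parser.add_argument('data_dir')
    parser.add_argument('vocab_dir')
    parser.add_argument('--glove_dir', default='dataset/glove')
    parser.add_argument('--min_freq', type=int, default=0)
    parser.add_argument('--lower', action='store_true')
    return parser.parse_args(argv)


# Same arguments as the prepare_vocab.py command shown earlier.
args = parse_args(['dataset/tacred', 'dataset/vocab', '--glove_dir', 'dataset/glove'])
print(args.data_dir, args.vocab_dir, args.min_freq, args.lower)

# Adding the bare flag --lower switches the option to True.
lowered = parse_args(['dataset/tacred', 'dataset/vocab', '--lower'])
print(lowered.lower)
```

Without `--lower` the option stays `False`, so the lowercasing branch in main() is skipped for the command used here.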

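Lines 18-20 of main() call load_tokens() to turn each JSON file into a flat list of tokens that is easier to process:

```python
import json


def load_tokens(filename):
    with open(filename) as infile:
        data = json.load(infile)
        tokens = []
        for d in data:
            ts = d['token']
            # note: the local name `os` is unrelated to Python's os module
            ss, se, os, oe = d['subj_start'], d['subj_end'], d['obj_start'], d['obj_end']
            # do not create vocab for entity words
            ts[ss:se+1] = ['<PAD>']*(se-ss+1)
            ts[os:oe+1] = ['<PAD>']*(oe-os+1)
            tokens += list(filter(lambda t: t!='<PAD>', ts))
    print("{} tokens from {} examples loaded from {}.".format(len(tokens), len(data), filename))
    return tokens
```

The data is read one sentence at a time, with ts holding the tokens of the sentence. ss, se, os and oe are the start and end indices of the subject and the start and end indices of the object. The subject and object spans are replaced by '<PAD>' and then filtered out, so entity words never enter the vocabulary. All remaining words are appended to tokens. For train.json this prints: 640 tokens from 20 examples loaded from dataset/tacred/train.json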
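The masking step can be tried on a single in-memory example. The sketch below is a standalone variant of the loop body of `load_tokens()`. The function name `non_entity_tokens` and the shortened example sentence are made up for illustration.

```python
# Hypothetical helper mirroring the loop body of load_tokens() for one example.
def non_entity_tokens(example):
    ts = list(example['token'])  # copy, so the caller's list is left untouched
    ss, se = example['subj_start'], example['subj_end']
    obj_s, obj_e = example['obj_start'], example['obj_end']
    # Overwrite both entity spans (inclusive indices) with <PAD> ...
    ts[ss:se + 1] = ['<PAD>'] * (se - ss + 1)
    ts[obj_s:obj_e + 1] = ['<PAD>'] * (obj_e - obj_s + 1)
    # ... then drop every <PAD>, leaving only the non-entity words.
    return [t for t in ts if t != '<PAD>']


# Toy example (not a real TACRED record): subject = "NDA", object = "John Clarke".
example = {
    'token': ['NDA', 'commercial', 'director', 'John', 'Clarke', 'said'],
    'subj_start': 0, 'subj_end': 0,
    'obj_start': 3, 'obj_end': 4,
}
kept = non_entity_tokens(example)
print(kept)  # ['commercial', 'director', 'said']
```

Unlike the original, this variant copies the token list first. `load_tokens()` modifies `d['token']` in place, which is harmless there because the parsed JSON is discarded afterwards.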

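Line 27 of main() calls vocab.load_glove_vocab() from the utils package to load the words of the pre-trained GloVe file (only the vocabulary, not the vectors):

```python
# Called from main() with file = wv_file and wv_dim = 300.
def load_glove_vocab(file, wv_dim):
    """
    Load all words from glove.
    """
    vocab = set()
    with open(file, encoding='utf8') as f:
        for line in f:
            elems = line.split()
            # everything before the last wv_dim numbers is the word itself
            token = ''.join(elems[0:-wv_dim])
            vocab.add(token)
    return vocab
```

line.split() splits one line of glove.840B.300d.txt on whitespace and returns the pieces as a list. The last 300 elements are the vector, so elems[0:-300] is the GloVe word, which is added to vocab. Joining elems[0:-wv_dim] instead of taking elems[0] presumably guards against entries whose token itself contains spaces.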
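A quick way to check the slicing logic is to run it on short made-up lines. The sketch below uses 3-dimensional vectors instead of 300 and invented values. `split_glove_line` is a hypothetical helper, not a function from the repository.

```python
# Hypothetical helper: split one GloVe text line into (token, vector).
def split_glove_line(line, wv_dim):
    elems = line.split()
    token = ''.join(elems[0:-wv_dim])              # everything before the vector
    vector = [float(x) for x in elems[-wv_dim:]]   # the last wv_dim numbers
    return token, vector


# Made-up lines with wv_dim = 3; the second token contains spaces.
plain = split_glove_line("the 0.1 0.2 0.3", 3)
spaced = split_glove_line(". . . 0.4 0.5 0.6", 3)
print(plain)   # ('the', [0.1, 0.2, 0.3])
print(spaced)  # ('...', [0.4, 0.5, 0.6])
```

Note that `''.join` drops the inner spaces, so a token such as ". . ." is stored as "...". Whether that matters depends on how the same token is written in the dataset.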

Line 31 of main() calls build_vocab(). Only the tokens from the training set are passed in:

```python
def build_vocab(tokens, glove_vocab, min_freq):
    """ build vocab from tokens and glove words. """
    counter = Counter(t for t in tokens)
    # if min_freq > 0, use min_freq, otherwise keep all glove words
    if min_freq > 0:
        v = sorted([t for t in counter if counter.get(t) >= min_freq], key=counter.get, reverse=True)
    else:
        v = sorted([t for t in counter if t in glove_vocab], key=counter.get, reverse=True)
    # add special tokens and entity mask tokens
    v = constant.VOCAB_PREFIX + entity_masks() + v
    print("vocab built with {}/{} words.".format(len(v), len(counter)))
    return v
```

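Counter() counts how often each token occurs in the training set. The resulting counter behaves like a dictionary, and counter.get returns the frequency of a word. If min_freq > 0, words that occur fewer than min_freq times are dropped. Otherwise (the default, min_freq = 0) only words that also appear in the GloVe vocabulary are kept. Either way, v is a list of words sorted from most to least frequent. Line 10 of build_vocab() (`v = constant.VOCAB_PREFIX + entity_masks() + v`) then prepends the special tokens and the output of entity_masks():

```python
def entity_masks():
    """ Get all entity mask tokens as a list. """
    masks = []
    subj_entities = list(constant.SUBJ_NER_TO_ID.keys())[2:]
    obj_entities = list(constant.OBJ_NER_TO_ID.keys())[2:]
    masks += ["SUBJ-" + e for e in subj_entities]
    masks += ["OBJ-" + e for e in obj_entities]
    return masks
```

masks is the list of all entity mask tokens. It is built from the NER types defined in constant, and the [2:] slices skip the <PAD> and <UNK> entries:

```python
PAD_TOKEN = '<PAD>'
UNK_TOKEN = '<UNK>'
VOCAB_PREFIX = [PAD_TOKEN, UNK_TOKEN]
SUBJ_NER_TO_ID = {PAD_TOKEN: 0, UNK_TOKEN: 1, 'ORGANIZATION': 2, 'PERSON': 3}
OBJ_NER_TO_ID = {
    PAD_TOKEN: 0, UNK_TOKEN: 1, 'PERSON': 2, 'ORGANIZATION': 3, 'DATE': 4,
    'NUMBER': 5, 'TITLE': 6, 'COUNTRY': 7, 'LOCATION': 8, 'CITY': 9,
    'MISC': 10, 'STATE_OR_PROVINCE': 11, 'DURATION': 12, 'NATIONALITY': 13,
    'CAUSE_OF_DEATH': 14, 'CRIMINAL_CHARGE': 15, 'RELIGION': 16, 'URL': 17,
    'IDEOLOGY': 18,
}
```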
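The number of tokens prepended to the vocabulary can be counted with a few lines. This sketch copies the constants shown above into a standalone script. `OBJ_TYPES` and `vocab_prefix` are names introduced here for brevity, and the order of the mask list relies on dictionaries preserving insertion order (Python 3.7+).

```python
PAD_TOKEN, UNK_TOKEN = '<PAD>', '<UNK>'
SUBJ_NER_TO_ID = {PAD_TOKEN: 0, UNK_TOKEN: 1, 'ORGANIZATION': 2, 'PERSON': 3}

# Object NER types in the same order as in the constants above (ids 2..18).
OBJ_TYPES = ['PERSON', 'ORGANIZATION', 'DATE', 'NUMBER', 'TITLE', 'COUNTRY',
             'LOCATION', 'CITY', 'MISC', 'STATE_OR_PROVINCE', 'DURATION',
             'NATIONALITY', 'CAUSE_OF_DEATH', 'CRIMINAL_CHARGE', 'RELIGION',
             'URL', 'IDEOLOGY']
OBJ_NER_TO_ID = {PAD_TOKEN: 0, UNK_TOKEN: 1}
OBJ_NER_TO_ID.update({t: i + 2 for i, t in enumerate(OBJ_TYPES)})

# Same logic as entity_masks(): skip <PAD> and <UNK>, then add the prefixes.
subj_entities = list(SUBJ_NER_TO_ID.keys())[2:]
obj_entities = list(OBJ_NER_TO_ID.keys())[2:]
masks = ["SUBJ-" + e for e in subj_entities] + ["OBJ-" + e for e in obj_entities]

vocab_prefix = [PAD_TOKEN, UNK_TOKEN] + masks
print(len(masks), len(vocab_prefix))  # 19 21
```

So 2 subject masks and 17 object masks, plus `<PAD>` and `<UNK>`, give 21 entries that sit in front of the actual words in `v`.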

So the print statement outputs: vocab built with 375/360 words. The first number is larger than the second because len(v) includes the 21 prepended tokens (2 special tokens, 2 subject masks and 17 object masks), so 354 of the 360 distinct training words were kept.

The returned v is: <class 'list'>: ['<PAD>', '<UNK>', 'SUBJ-ORGANIZATION', 'SUBJ-PERSON', 'OBJ-PERSON', 'OBJ-ORGANIZATION', 'OBJ-DATE', 'OBJ-NUMBER', 'OBJ-TITLE', 'OBJ-COUNTRY', 'OBJ-LOCATION', 'OBJ-CITY', 'OBJ-MISC', 'OBJ-STATE_OR_PROVINCE', 'OBJ-DURATION', 'OBJ-NATIONALITY', 'OBJ-CAUSE_OF_DEATH', 'OBJ-CRIMINAL_CHARGE', 'OBJ-RELIGION', 'OBJ-URL', 'OBJ-IDEOLOGY', ',', 'the', '.', 'to', 'in', 'a', 'of', 'and', '``', "''", 'for', 'was', 'that', 'said', 'at', 'billion', '-LRB-', 'million', '-RRB-', 'on', 'with', ';', '$', "'s", 'its', 'would', 'who', 'Chinese', 'Taiwan', 'US$', 'year', 'have', 'is', 'from', 'some', 'I', 'her', 'job', 'see', 'be', 'case', 'two', 'by', 'yuan', 'John', 'as', 'spokesman', 'global', 'responsibility', 'including', 'do', 'starts', 'each', 'first', 'passengers', 'five', 'next', 'Chen', 'The', 'been', 'bpd', 'percent', 'so', "n't", 'change', 'Rado', 'his', 'office', 'Ali', 'lied', 'about', 'having', 'leave', 'if', 'end', 'show', 'In', 'considered', 'Zury'...

As the list shows, the comma ',' is the most frequent token.
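The two filtering modes of build_vocab() are easy to compare on a toy token list. The sketch below is a simplified stand-in, not the repository function: `build_vocab_sketch` takes the prefix as a parameter, and the tokens and the tiny "GloVe" set are made up.

```python
from collections import Counter


def build_vocab_sketch(tokens, glove_vocab, min_freq, prefix):
    """Simplified stand-in for build_vocab(); prefix replaces the constants."""
    counter = Counter(tokens)
    if min_freq > 0:
        kept = [t for t in counter if counter[t] >= min_freq]  # frequency cutoff
    else:
        kept = [t for t in counter if t in glove_vocab]        # GloVe words only
    kept.sort(key=counter.get, reverse=True)                   # most frequent first
    return prefix + kept


tokens = [',', 'the', ',', 'xyzzy', 'the', ',']  # counts: ',' 3, 'the' 2, 'xyzzy' 1
glove_vocab = {',', 'the'}                       # toy GloVe vocabulary
prefix = ['<PAD>', '<UNK>']

by_glove = build_vocab_sketch(tokens, glove_vocab, 0, prefix)
by_freq = build_vocab_sketch(tokens, glove_vocab, 1, prefix)
print(by_glove)  # ['<PAD>', '<UNK>', ',', 'the']
print(by_freq)   # ['<PAD>', '<UNK>', ',', 'the', 'xyzzy']
```

With `min_freq = 0` the unknown word is dropped because it is not in the toy GloVe set. With `min_freq = 1` it is kept even though no pre-trained vector exists for it.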

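Back in main(), line 36 calls count_oov():

```python
from collections import Counter


# Called from main() with tokens = train_tokens / dev_tokens / test_tokens and vocab = v.
def count_oov(tokens, vocab):
    c = Counter(t for t in tokens)
    total = sum(c.values())
    matched = sum(c[t] for t in vocab)
    return total, total-matched
```

total is the number of words in the dataset after the entity words have been removed, and matched is the number of those words that can be found in v. The function returns total together with the number of out-of-vocabulary words, total-matched.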
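A toy run makes the bookkeeping concrete. The function below repeats count_oov() as shown, and the vocabulary and tokens are made up for this illustration.

```python
from collections import Counter


def count_oov(tokens, vocab):
    c = Counter(tokens)
    total = sum(c.values())             # all token occurrences
    matched = sum(c[t] for t in vocab)  # occurrences of in-vocabulary words
    return total, total - matched


vocab = ['<PAD>', '<UNK>', ',', 'the']      # toy vocabulary
tokens = ['the', 'cat', ',', 'the', 'dog']  # 'cat' and 'dog' are not in vocab

total, oov = count_oov(tokens, vocab)
print("toy oov: {}/{} ({:.2f}%)".format(oov, total, oov * 100.0 / total))
# toy oov: 2/5 (40.00%)
```

Looking up a missing key in a `Counter` returns 0 rather than raising an error, which is why vocabulary entries such as `<PAD>` that never occur in the data are harmless here.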

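Line 40 of main() builds the embedding matrix:

```python
import numpy as np


def build_embedding(wv_file, vocab, wv_dim):
    vocab_size = len(vocab)
    # start from uniform random values; rows with a GloVe vector are overwritten below
    emb = np.random.uniform(-1, 1, (vocab_size, wv_dim))
    emb[constant.PAD_ID] = 0 # <pad> should be all 0

    w2id = {w: i for i, w in enumerate(vocab)}
    with open(wv_file, encoding="utf8") as f:
        for line in f:
            elems = line.split()
            token = ''.join(elems[0:-wv_dim])
            if token in w2id:
                emb[w2id[token]] = [float(v) for v in elems[-wv_dim:]]
    return emb
```

The <PAD> row emb[0] is set to all zeros. Every other row starts out with uniform random values between -1 and 1. If a GloVe word is in the vocabulary, its 300-dimensional vector from glove.840B.300d.txt overwrites the row emb[w2id[token]]. The function returns emb as a NumPy array.

Lines 44-46 of main() save the vocabulary to vocab.pkl and the embedding matrix to embedding.npy.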
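The behaviour can be verified on a tiny temporary file. In this sketch `PAD_ID = 0` stands in for `constant.PAD_ID` (an assumption based on `<PAD>` being the first vocabulary entry), and the 3-dimensional "GloVe" file is made up.

```python
import os
import tempfile

import numpy as np

PAD_ID = 0  # assumption: matches constant.PAD_ID, since <PAD> is first in the vocab


def build_embedding(wv_file, vocab, wv_dim):
    emb = np.random.uniform(-1, 1, (len(vocab), wv_dim))
    emb[PAD_ID] = 0  # <PAD> row is all zeros
    w2id = {w: i for i, w in enumerate(vocab)}
    with open(wv_file, encoding='utf8') as f:
        for line in f:
            elems = line.split()
            token = ''.join(elems[0:-wv_dim])
            if token in w2id:  # only words in our vocabulary get a GloVe row
                emb[w2id[token]] = [float(v) for v in elems[-wv_dim:]]
    return emb


vocab = ['<PAD>', '<UNK>', 'the', 'cat']
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'toy_glove.txt')
    with open(path, 'w', encoding='utf8') as f:
        f.write('the 0.1 0.2 0.3\n')  # in the vocabulary: copied into emb[2]
        f.write('dog 0.4 0.5 0.6\n')  # not in the vocabulary: ignored
    emb = build_embedding(path, vocab, wv_dim=3)

print(emb.shape)  # (4, 3)
print(emb[0])     # all zeros
print(emb[2])     # the vector for 'the'
```

Rows for `<UNK>` and for vocabulary words that have no GloVe entry ('cat' in this toy case) keep their random initial values.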
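The two output files can be read back with the matching load calls. The following is a minimal round-trip sketch using a temporary directory and toy data. The `word2id` mapping at the end is an illustration of how the files might be used, not code from the repository.

```python
import os
import pickle
import tempfile

import numpy as np

vocab = ['<PAD>', '<UNK>', 'the']  # toy vocabulary
embedding = np.zeros((3, 4))       # toy 3 x 4 embedding matrix

with tempfile.TemporaryDirectory() as vocab_dir:
    vocab_file = os.path.join(vocab_dir, 'vocab.pkl')
    emb_file = os.path.join(vocab_dir, 'embedding.npy')

    # Save, as in the last lines of main().
    with open(vocab_file, 'wb') as outfile:
        pickle.dump(vocab, outfile)
    np.save(emb_file, embedding)

    # Load both files back.
    with open(vocab_file, 'rb') as infile:
        loaded_vocab = pickle.load(infile)
    loaded_emb = np.load(emb_file)

# Row i of the matrix belongs to word i of the list.
word2id = {w: i for i, w in enumerate(loaded_vocab)}
print(loaded_emb.shape, word2id['the'])  # (3, 4) 2
```

The word list and the matrix are stored separately, so the position of a word in the list is the only link to its embedding row.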

References:

Usage of Python collections.Counter(): https://blog.csdn.net/qwe1257/article/details/83272340

Using ' '.join() in Python: https://blog.csdn.net/weixin_42986099/article/details/83447926
