多尺度计算3

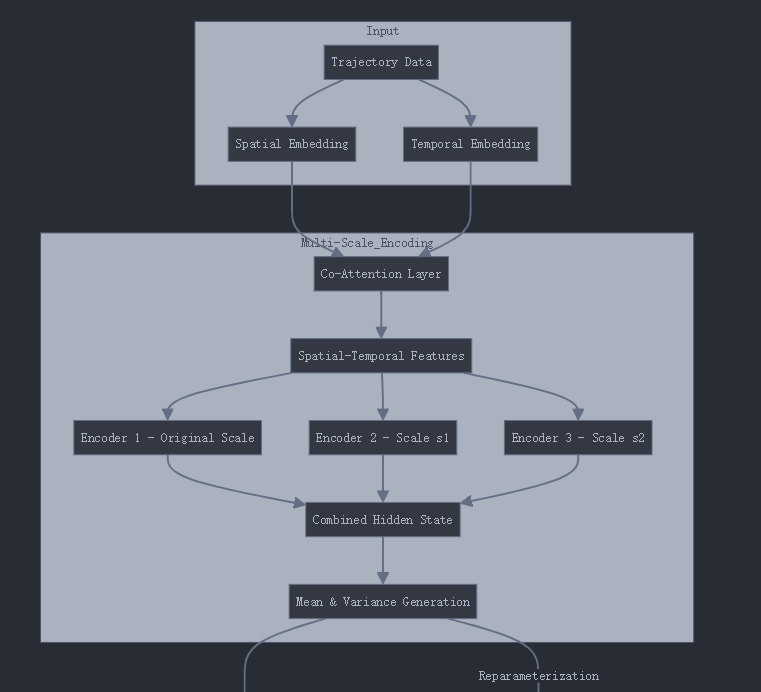

分成四个阶段

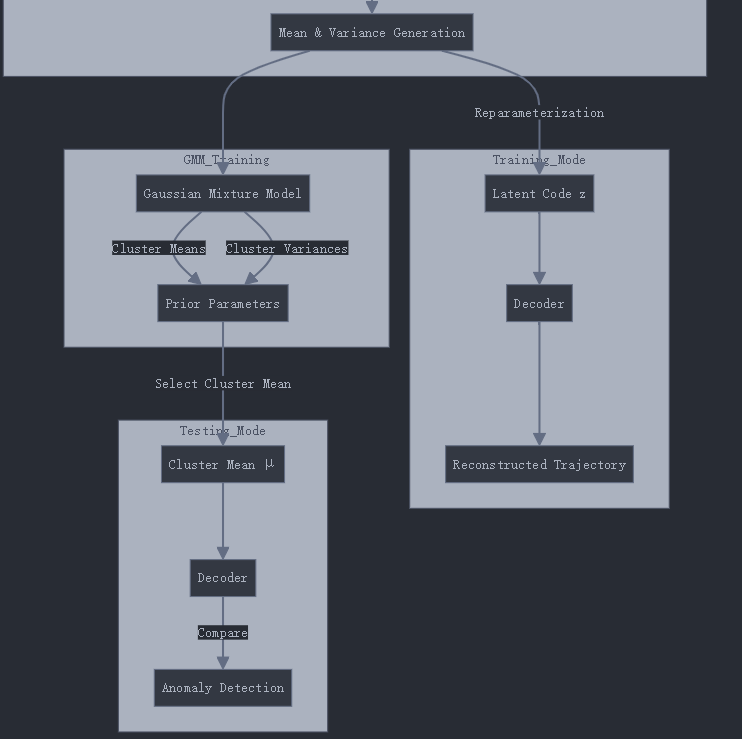

- 第一个阶段,是pretrain。先学习mean和variance的生成,一条轨迹会生成两个平均值和方差(分别表示时间和空间的)。然后输入到解码器中解码,两个lstm.一个用于重构时间,一个是用于重构路段

- 第二个阶段,是生成Gaussian model。计算sample轨迹的mean和variance,分成三个Gaussian model。只计算一次,这个值保持不变。生成prior parameters

- 第三个阶段,继续训练解码模型。结合高斯分布的loss (希望生成的mean和variance可以趋于这三个分布),种类的loss(但是又能去区分到底是哪一种)。以及重构的loss

- 第四个阶段,在预测阶段,遍历3个gaussian model (时间或者空间),作为lstm initial的值,分别计算时间预测的分数的最大值(三选一)和空间预测的分数最大值(三选一)再用1-时间预测的分数的最大值*空间预测的分数最大值作为异常分数

i given a summary for this code, plz check and revise

the reconstruction loss, you may lack ?

so plz revise

i think you lack of telling what data use? use all data, all parts of them to get GMM

but when to apply the ranking method for GMM? I see this code

I would like to ask if the reconstructed loss during training calculates the mean of cross entropy? The score for reconstruction in prediction should also be calculated in this way, right?

I'll analyze the code and provide a revised summary of the MST-OATD (Multi-Scale Temporal-Online Anomaly Trajectory Detection) model's architecture and stages.

The model operates in four main stages:

- Pretraining Stage:

- The model learns to generate spatial and temporal embeddings separately using two parallel networks (MST_OATD_S and MST_OATD_T)

- Each network uses a multi-scale encoder (with three different scales) and a decoder

- Features:

- Uses co-attention mechanism between spatial and temporal features

- Implements three GRU encoders for different scales (s1, s2, s3)

- Employs weighted combination of multi-scale features

- Generates mean (μ) and variance (σ²) for both spatial and temporal aspects

- GMM Training Stage:

- Trains two Gaussian Mixture Models (GMM) with n_cluster components:

- One for spatial representations

- One for temporal representations

- The GMMs capture the distribution patterns in the latent space

- Parameters (weights, means, covariances) are saved and fixed for later use

- These serve as prior distributions for the next stage

- Joint Training Stage:

- Combines three types of losses:

- Reconstruction loss: How well the model reconstructs trajectories

- Gaussian loss: How well the latent representations match the GMM priors

- Category loss: How well the model can distinguish between different clusters

- Uses learned GMM parameters as priors

- The model learns to balance between reconstruction accuracy and distribution matching

- Detection Stage:

- For each test trajectory:

- Runs the decoder with each GMM component as initial state

- Computes likelihood scores for both spatial and temporal aspects

- Takes maximum likelihood from spatial and temporal predictions separately

- Final anomaly score = 1 - (max spatial likelihood × max temporal likelihood)

- Uses PR-AUC (Precision-Recall Area Under Curve) for evaluation

Key Improvements/Corrections to Your Summary:

- The model uses GRU networks, not LSTM

- There are n_cluster Gaussian components (default 20), not just three

- The model maintains separate spatial and temporal aspects throughout all stages

- The co-attention mechanism helps align spatial and temporal features

- The multi-scale architecture uses weighted combination of different temporal scales

Implementation Details:

- Supports both Porto and Chengdu (CD) datasets

- Includes three update modes: pretrain, temporal, and rank

- Uses PyTorch for deep learning components

- Implements batch processing and proper masking for variable-length trajectories

- Includes comprehensive training, validation, and testing pipelines

This architecture effectively combines multi-scale temporal analysis with dual-aspect (spatial-temporal) modeling for trajectory anomaly detection.

Yes, you're absolutely right. Let me clarify the Pretraining Stage, specifically about the reconstruction loss:

- Pretraining Stage:

- The model learns to generate spatial and temporal embeddings separately using two parallel networks (MST_OATD_S and MST_OATD_T)

- Each network uses a multi-scale encoder (with three different scales) and a decoder

- Features:

- Uses co-attention mechanism between spatial and temporal features

- Implements three GRU encoders for different scales (s1, s2, s3)

- Employs weighted combination of multi-scale features

- Generates mean (μ) and variance (σ²) for both spatial and temporal aspects

- Reconstruction Loss:

- Spatial reconstruction loss: CrossEntropyLoss between predicted road segments and actual road segments

- Temporal reconstruction loss: CrossEntropyLoss between predicted time tokens and actual time tokens

- Total pretraining loss = spatial_loss + temporal_loss

This can be seen in the code in mst_oatd_trainer.py:

def pretrain(self, epoch):

# ...

output_s, _, _, _ = self.MST_OATD_S(trajs, times, seq_lengths, batch_size, "pretrain", -1)

output_t, _, _, _ = self.MST_OATD_T(trajs, times, seq_lengths, batch_size, "pretrain", -1)

times = time_convert(times, self.time_interval)

# Reconstruction loss for both spatial and temporal aspects

loss = self.crit(output_s[mask == 1], trajs.to(self.device)[mask == 1]) # Spatial reconstruction

loss += self.crit(output_t[mask == 1], times.to(self.device)[mask == 1]) # Temporal reconstruction

During pretraining, the model focuses solely on reconstruction ability without considering the Gaussian mixture components or clustering objectives that come in later stages.

Here's the revised comprehensive summary of the MST-OATD model:

- Pretraining Stage:

- The model learns to generate spatial and temporal embeddings separately using two parallel networks (MST_OATD_S and MST_OATD_T)

- Each network uses a multi-scale encoder (with three different scales) and a decoder

- Features:

- Uses co-attention mechanism between spatial and temporal features

- Implements three GRU encoders for different scales (s1, s2, s3)

- Employs weighted combination of multi-scale features

- Generates mean (μ) and variance (σ²) for both spatial and temporal aspects

- Reconstruction Loss:

- Spatial reconstruction loss: CrossEntropyLoss between predicted and actual road segments

- Temporal reconstruction loss: CrossEntropyLoss between predicted and actual time tokens

- Total pretraining loss = spatial_loss + temporal_loss

- GMM Training Stage:

- Trains two Gaussian Mixture Models (GMM) with n_cluster components (default 20):

- One for spatial representations

- One for temporal representations

- The GMMs capture the distribution patterns in the latent space

- Parameters (weights, means, covariances) are saved and fixed for later use

- These serve as prior distributions for the next stage

- Joint Training Stage:

- Combines three types of losses:

- Reconstruction loss: CrossEntropyLoss for both spatial and temporal reconstruction

- Gaussian loss: How well the latent representations match the GMM priors

- Category loss: How well the model can distinguish between different clusters

- Uses learned GMM parameters as priors

- Loss formula: L = L_reconstruction + L_gaussian/hidden_size + 0.1 * L_category

- Detection Stage:

- For each test trajectory:

- Runs the decoder with each GMM component as initial state

- Computes likelihood scores for both spatial and temporal aspects

- Takes maximum likelihood from spatial and temporal predictions separately

- Final anomaly score = 1 - (max spatial likelihood × max temporal likelihood)

- Uses PR-AUC for evaluation

Implementation Details:

- Supports both Porto and Chengdu (CD) datasets:

- Porto: 51×119 spatial tokens, 5760 temporal tokens

- Chengdu: 167×154 spatial tokens, 8640 temporal tokens

- Three update modes:

- Pretrain: Only use pretrained model

- Temporal: Update with new temporal data

- Rank: Update based on ranking scores

- Technical features:

- PyTorch implementation

- Batch processing with masking for variable-length trajectories

- Comprehensive training/validation/testing pipelines

- Multi-scale temporal analysis

- Dual-aspect (spatial-temporal) modeling

This architecture leverages multi-scale temporal analysis and dual-aspect modeling through all stages to effectively detect trajectory anomalies in an online setting.

Ah yes, you're right. Let me clarify the GMM Training Stage, specifically about the data used:

- GMM Training Stage:

- Uses the pretrained encoder to get latent representations:

- Processes all training trajectories through the pretrained encoders

- For each trajectory, gets both spatial and temporal latent vectors

- Collects all latent vectors (z_s and z_t) from the training set

This can be seen in the code from mst_oatd_trainer.py:

def train_gmm(self):

self.MST_OATD_S.eval()

self.MST_OATD_T.eval()

# Load pretrained models

checkpoint = torch.load(self.pretrained_path)

self.MST_OATD_S.load_state_dict(checkpoint['model_state_dict_s'])

self.MST_OATD_T.load_state_dict(checkpoint['model_state_dict_t'])

with torch.no_grad():

z_s = [] # Spatial latent vectors

z_t = [] # Temporal latent vectors

# Get latent representations for all training data

for batch in self.train_loader:

trajs, times, seq_lengths = batch

batch_size = len(trajs)

# Get latent vectors from encoders

_, _, _, hidden_s = self.MST_OATD_S(trajs, times, seq_lengths, batch_size, "pretrain", -1)

_, _, _, hidden_t = self.MST_OATD_T(trajs, times, seq_lengths, batch_size, "pretrain", -1)

z_s.append(hidden_s.squeeze(0))

z_t.append(hidden_t.squeeze(0))

# Concatenate all latent vectors

z_s = torch.cat(z_s, dim=0)

z_t = torch.cat(z_t, dim=0)

- Trains two separate GMMs:

- Spatial GMM: Trained on all spatial latent vectors (z_s)

- Temporal GMM: Trained on all temporal latent vectors (z_t)

- Both use n_cluster components (default 20)

- Parameters saved for each GMM:

- Weights (π)

- Means (μ)

- Covariances (Σ)

- These GMM parameters become priors for the joint training stage

- The fitting process uses the full training dataset to learn the most representative Gaussian mixtures for both spatial and temporal aspects

This stage is crucial because it learns the underlying distributions in both spatial and temporal latent spaces using all available training data, which then guides the model during joint training and anomaly detection.

Ah yes, the ranking method is applied after the initial GMM training, specifically in the update phase. Let me explain the ranking-based GMM update process from train_update.py:

- First, get trajectory categories using the initial GMM:

def get_category(trajs):

# Get latent representations using pretrained encoder

z = get_z(trajs)

# Predict cluster labels using GMM

c_labels = gmm.predict(z)

# Organize trajectories by cluster

origin_trajs = []

for label in range(args.n_cluster):

index = c_labels == label

origin_trajs.append(trajs[index].tolist())

return origin_trajs

- Then apply ranking within each cluster using a linear model:

def get_score(z):

# Get probability distribution over clusters

probs = gmm.predict_proba(z)

idx = []

linear = Linear_Model()

# For each cluster

for label in range(args.n_cluster):

data = -probs[:, label] # Negative probabilities

# Get ranking using linear model

rank = linear.test(label, torch.Tensor(data).to(args.device))

idx.append(rank)

idx = np.array(idx).T

idxs = np.argsort(idx, axis=1)

return idxs

- Update the data using the ranking scores:

def update_data(origin_trajs, train_trajs, cats_sample):

# Get latent vectors and trajectories for selected categories

_, z, train_trajs = get_index(train_trajs, cats_sample)

idxs = get_score(z)

# Update trajectories based on ranking

max_idxs = idxs[:, 0] # Best cluster for each trajectory

for i, traj in enumerate(train_trajs):

# Add trajectory to its best-matching cluster

max_idx = max_idxs[i]

origin_trajs[max_idx].append(traj)

# Remove oldest trajectory from least-matching cluster

min_c = args.n_cluster - 1

min_idx = idxs[:, min_c][i]

while not origin_trajs[min_idx]:

min_c -= 1

min_idx = idxs[:, min_c][i]

origin_trajs[min_idx].pop(0)

return np.array(sum(origin_trajs, []), dtype=object)

The ranking process works as follows:

-

For new trajectories:

- Get their latent representations using the encoder

- Use GMM to get probability distribution over clusters

- Use a linear model to rank trajectories within each cluster

- Assign trajectories to their best-matching clusters

- Remove oldest trajectories from least-matching clusters

-

This ranking-based update helps:

- Maintain cluster quality over time

- Adapt to evolving trajectory patterns

- Balance cluster sizes

- Remove outdated or less representative trajectories

The update mode can be set using args.update_mode:

- 'pretrain': Use original GMM without updates

- 'temporal': Update based on temporal sequence

- 'rank': Use this ranking-based update method

This ranking mechanism is particularly important for online learning scenarios where the trajectory patterns may evolve over time.

Yes, you're exactly right! Let me explain the difference between training and detection phase reconstruction calculations:

During Training (mean cross entropy):

def train(self, epoch):

# ...

# Using CrossEntropyLoss with reduction='mean' (default)

self.crit = nn.CrossEntropyLoss()

# Loss calculation in training

loss = self.crit(output_s[mask == 1], trajs.to(self.device)[mask == 1])

loss += self.crit(output_t[mask == 1], times.to(self.device)[mask == 1])

During Detection (cross entropy for each trajectory):

def detection(self):

# ...

# Using CrossEntropyLoss with reduction='none' to get per-element losses

self.detec = nn.CrossEntropyLoss(reduction='none')

# Score calculation in detection

likelihood_s = - self.detec(output_s.reshape(-1, output_s.shape[-1]),

trajs.to(self.device).reshape(-1))

# Average over sequence length for each trajectory

likelihood_s = torch.exp(

torch.sum(mask * (likelihood_s.reshape(batch_size, -1)), dim=-1) / torch.sum(mask, 1))

Key differences:

-

Training phase:

- Uses

reduction='mean'to get average loss across batch - Directly used for optimization

- Uses

-

Detection phase:

- Uses

reduction='none'to get individual losses - Averages losses over sequence length for each trajectory

- Exponentiates to convert to likelihood score

- Takes maximum likelihood across clusters

- Uses

This ensures consistent scoring between training and detection while accounting for variable sequence lengths in the final anomaly scores.

浙公网安备 33010602011771号

浙公网安备 33010602011771号