爬虫综合大作业

爬取哔哩哔哩弹幕

import re import sqlite3 import requests from lxml import etree import threading from queue import Queue import pandas as pd import pymysql from sqlalchemy import create_engine import jieba import random import time from wordcloud import WordCloud import matplotlib.pyplot as plt class BiliSpider: '''哔哩哔哩弹幕爬虫''' tindex=0 global g_wordlist global episodes global allBarrage global ct_episodes allBarrage = [] episodes={} ct_episodes=1 g_wordlist=[] def __init__(self): url_st = self.get_url() self.start_url = url_st self.headers = { 'Referer': 'https://www.bilibili.com/bangumi/play/ep7821', 'Upgrade-Insecure-Requests': '1', 'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.76 Mobile Safari/537.36', 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8', 'Accept-Encoding': 'gzip, deflate, sdch', 'Accept-Language': 'zh-CN,zh;q=0.8', 'Cache-Control': 'max-age=0', 'Connection': 'keep-alive', # 'Cookie': 'finger=846f9182; LIVE_BUVID=AUTO7515275889865517; fts=1527589020; BANGUMI_SS_413_REC=7823; sid=bywgf18g; buvid3=89102350-5F5E-4056-A926-16EEC8780EE8140233infoc; rpdid=oqllxwklspdosimsqlwiw; bg_view_413=7820%7C7819%7C7823%7C7822', 'Host': 'm.bilibili.com', } self.barrage_url = 'https://comment.bilibili.com/{}.xml' # self.proxies = {'https': 'https://115.223.209.238:9000'} # 要请求的url队列 self.url_queue = Queue() # 解析出的html字符串队列 self.html_str_q = Queue() #获取集数队列 self.ep_list_q = Queue() # 获取到的弹幕队列 self.barrage_list_q = Queue() #保存至数据库 #print("在self前") print(self.barrage_list_q) def get_url(self): url_input = input("请输入移动版的bilibili番剧ep号:\n(如ep63725)") # url ='https://m.bilibili.com/bangumi/play/ep63725' url='https://m.bilibili.com/bangumi/play/{}'.format(url_input) return url def parse_url(self, url=None, headers={}): if url is None: while True: url = self.url_queue.get() print('\n弹幕xml为:') print(url) res = requests.get(url, headers=headers) res.encoding = 'utf-8' self.html_str_q.put(res.text) # self.url_queue.task_done() return else: print('\n弹幕xml为:') print(url) res = requests.get(url, headers=headers) res.encoding = 'utf-8' return res.text def get_cid(self, html_str): html = etree.HTML(html_str) print(html_str) script = html.xpath('//script[contains(text(),"epList")]/text()')[0] cid_list = re.findall(r'"cid":(\d+)', script) return cid_list #集标题及副标题 def get_episodes(self, html_str): global episodes html = etree.HTML(html_str) ep_content = html.xpath('//script[contains(text(),"epList")]/text()')[0] print(ep_content) ep_list = re.findall(r'"share_copy":"(\S+)', ep_content) self.ep_list_q.put(ep_list) #获取弹幕文件url def get_barrage_url(self, cid_list): for i in (cid_list[1:]): self.url_queue.put(self.barrage_url.format(i)) # return url_list def get_barrage_list(self): while True: barrage_str = self.html_str_q.get() barrage_str = barrage_str.encode('utf-8') barrage_xml = etree.HTML(barrage_str) barrage_list = barrage_xml.xpath('//d/text()') self.barrage_list_q.put(barrage_list) return barrage_list def takeSecond(elem): return elem[1] def save_barrage(self): global g_wordlist global ct_episodes global allBarrage ct_episodes+=1 #停用词表 stop = [line.strip() for line in open("stop.txt", 'r', encoding='utf-8').readlines()] barrage_list = self.barrage_list_q.get() #输出弹幕 with open('barrage2.txt', 'w', encoding='utf-8') as f: for barrage in barrage_list: f.write(barrage) f.write('\n') fo = open('barrage2.txt','r',encoding='utf-8') tk = fo.read() for s in stop: tk = tk.replace(s, "") fo.close() wordlist = jieba.lcut(tk) b_ls=[] #生成一个字典 temp ={} for word in wordlist: duplicates=False if len(word)==1: continue else: temp[word]=temp.get(word,0)+1 count=temp[word] new=aBrrage(word,episodes[ct_episodes],count) for n1 in b_ls[0:]: if n1['word']==new['word']: duplicates=True if int(new['count'])>int(n1['count']): n_temp = new b_ls.remove(n1) b_ls.append(n_temp) break #字典列表 if(duplicates==False): b_ls.append(new) print("\n******") print(episodes[ct_episodes]) print("字幕数量:",len(barrage_list)) print("处理后弹幕数量:",len(b_ls)) allBarrage.extend(b_ls) g_wordlist.extend(wordlist) print('获取成功') return allBarrage def create_dict(self): dict = {} wordlist = {} return dict def run(self): '''主要逻辑''' global episodes # 请求初始视频url html_str = self.parse_url(url=self.start_url, headers=self.headers) # 提取数据cid cid_list = self.get_cid(html_str) print(cid_list) ep_list=self.get_episodes(html_str) # 组织弹幕的url self.get_barrage_url(cid_list) # 请求网址 episodes={} episodes = self.ep_list_q.get() ex_len=len(episodes) print('==========') for i in range(ex_len-2): self.parse_url() self.get_barrage_list() res = self.save_barrage() time.sleep(random.random() * 3)#设置爬取的时间间隔 if(i==ex_len-3): save_assql(res) #生成字典 wcdict = {} for word in g_wordlist: if len(word)==1: continue else: wcdict[word]= wcdict.get(word,0)+1 #排序 wcls = list(wcdict.items()) wcls.sort(key = lambda x:x[1],reverse=True) #输出前二十五词 print('输出系列前二十五词:') for i in range(25): print(wcls[i]) ciyun(g_wordlist) print("保存到数据库") #源代码使用的线程 # for i in range(100): # # barrage_str = self.parse_url(url) # t_parse = threading.Thread(target=self.parse_url) # t_parse.setDaemon(True) # t_parse.start() # # # 提取出信息 # for i in range(2): # # barrage_list = self.get_barrage_list(barrage_str) # t_barrage_list = threading.Thread(target=self.get_barrage_list) # t_barrage_list.setDaemon(True) # t_barrage_list.start() # # # 写入文件 # for i in range(2): # # self.save_barrage(barrage_list) # t_save = threading.Thread(target=self.save_barrage) # t_save.setDaemon(True) # t_save.start() # # # for q in [self.html_str_q, self.barrage_list_q, self.url_queue]: # q.join() print('==========') print('主线程结束') #保存至数据库 def save_assql(list): conInfo = "mysql+pymysql://root:123456@localhost:3306/bilibili?charset=utf8" engine = create_engine(conInfo,encoding='utf-8') if(list!=[]): df = pd.DataFrame(list) df.to_sql(name = 'bilibilitest', con = engine, if_exists = 'append', index = False) pymysql.connect(host='localhost',port=3306,user='root',passwd='123456',db='bilibili',charset='utf8') else: return def aBrrage(str,episodes,count): ab_dict={} ab_dict['word']= str ab_dict['e_index'] = episodes ab_dict['count']=count return ab_dict def ciyun(wordlist): wl_split=''.join(wordlist) #生成词云 mywc = WordCloud().generate(wl_split) plt.imshow(mywc) plt.axis("off") plt.show() if __name__ == '__main__': bili = BiliSpider() bili.run()

通过伪造headers访问

self.headers = {

'Referer': 'https://www.bilibili.com/bangumi/play/ep7821',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.76 Mobile Safari/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding': 'gzip, deflate, sdch',

'Accept-Language': 'zh-CN,zh;q=0.8',

'Cache-Control': 'max-age=0',

'Connection': 'keep-alive',

# 'Cookie': 'finger=846f9182; LIVE_BUVID=AUTO7515275889865517; fts=1527589020; BANGUMI_SS_413_REC=7823; sid=bywgf18g; buvid3=89102350-5F5E-4056-A926-16EEC8780EE8140233infoc; rpdid=oqllxwklspdosimsqlwiw; bg_view_413=7820%7C7819%7C7823%7C7822',

'Host': 'm.bilibili.com',

}

关键代码:

1.获取视频id:cid

def get_cid(self, html_str): html = etree.HTML(html_str) print(html_str) script = html.xpath('//script[contains(text(),"epList")]/text()')[0] # print(script) cid_list = re.findall(r'"cid":(\d+)', script) return cid_list

2.生成字典与词云

def save_barrage(self): global episodes global g_wordlist episodes=episodes+1 #停用词表 stop = [line.strip() for line in open("stop.txt", 'r', encoding='utf-8').readlines()] while True: barrage_list = self.barrage_list_q.get() #输出弹幕 g_wordlist=[] # print(barrage_list) with open('barrage2.txt', 'w', encoding='utf-8') as f: for barrage in barrage_list: f.write(barrage) f.write('\n') fo = open('barrage2.txt','r',encoding='utf-8') tk = fo.read() for s in stop: tk = tk.replace(s, "") fo.close() wordlist = jieba.lcut(tk) b_ls=[] ab_dict = {'word':"test",'e_index':999,'count':1} b_ls.append(ab_dict) #生成一个字典 temp ={} for word in wordlist: duplicates=False if len(word)==1: continue else: temp[word]=temp.get(word,0)+1 count=temp[word] new=aBrrage(word,episodes,count) # ab_dict['word']= word # ab_dict['e_index'] = episodes # ab_dict['count']=ab_dict.get(word,0)+1 for n1 in b_ls[0:]: if n1['word']==new['word']: duplicates=True if int(new['count'])>int(n1['count']): n_temp = new b_ls.remove(n1) b_ls.append(n_temp) break #字典列表 if(duplicates==False): b_ls.append(new) print("\n******") print("第",episodes,"集") print("字幕数量:",len(barrage_list)) print("处理后弹幕数量:",len(b_ls)) allBarrage.extend(b_ls) save_assql(allBarrage) ciyun(wordlist) print('保存成功')

3.全局变量保存弹幕等信息

global g_wordlist#保存结巴弹幕 global episodes#在存放xml的集标题 global allBarrage#将保存到数据库有其他信息如集信息的列表 global ct_episodes#集数序数 allBarrage = [] episodes={} ct_episodes=1#第一集集标题前两项均为无效信息 g_wordlist=[]

4.词云及数据库保存

def ciyun(wordlist): wl_split=''.join(wordlist) #生成词云 mywc = WordCloud().generate(wl_split) plt.imshow(mywc) plt.axis("off") plt.show() def save_assql(list): conInfo = "mysql+pymysql://root:123456@localhost:3306/bilibili?charset=utf8" engine = create_engine(conInfo,encoding='utf-8') if(list!=[]): df = pd.DataFrame(list) df.to_sql(name = 'bilibilitest', con = engine, if_exists = 'append', index = False) pymysql.connect(host='localhost',port=3306,user='root',passwd='123456',db='bilibili',charset='utf8') else: print('保存失败') return

5.主要逻辑

def run(self): '''主要逻辑''' global episodes # 请求初始视频url html_str = self.parse_url(url=self.start_url, headers=self.headers) # 提取数据cid cid_list = self.get_cid(html_str) print(cid_list) ep_list=self.get_episodes(html_str) # 组织弹幕的url self.get_barrage_url(cid_list) # 请求网址 episodes={} episodes = self.ep_list_q.get() ex_len=len(episodes) print('==========') for i in range(ex_len-2): self.parse_url() self.get_barrage_list() res = self.save_barrage() time.sleep(random.random() * 3)#设置爬取的时间间隔 if(i==ex_len-3): save_assql(res) #生成字典 wcdict = {} for word in g_wordlist: if len(word)==1: continue else: wcdict[word]= wcdict.get(word,0)+1 #排序 wcls = list(wcdict.items()) wcls.sort(key = lambda x:x[1],reverse=True) #输出前二十五词 print('输出系列前二十五词:') for i in range(25): print(wcls[i]) ciyun(g_wordlist) print("保存到数据库") print('==========') print('主线程结束')

4.输出词云能够分析该视频或者该系列视频的关键词

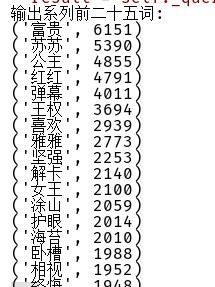

输出系列剧集的前十五词:

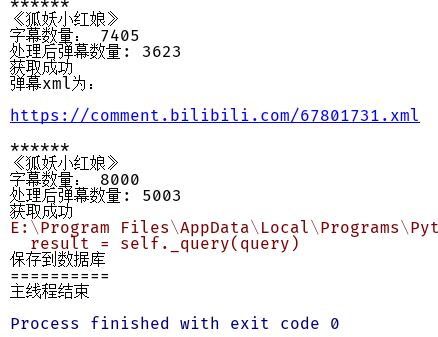

拉取弹幕提示:

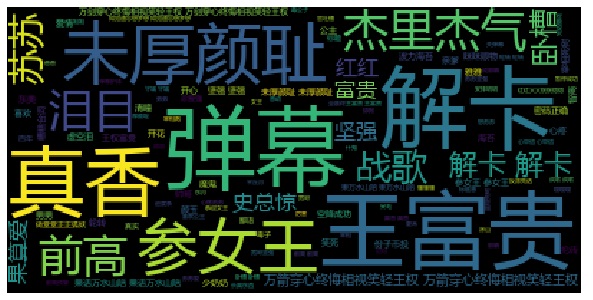

输出该系列剧集的弹幕词云如下图:

总结:

读取的弹幕可以了解到该视频的主要内容,能在看之前就较直观地了解视频的好评程度。若有铺天遍地的谩骂那么或许就不是一部适合大众观看的视频。

若看到关键词是自己喜欢的,那么就是能很快选择到自己喜欢的视频。