Test Linux/Windows 11 performance: time cost of incrementing a counter uint32 max() times

Ubuntu

```cpp
#include <chrono>
#include <cstdint>   // uint32_t
#include <cstdlib>   // atoi
#include <iostream>
#include <limits>    // numeric_limits

using namespace std;

void testTime(int x);

int main(int argc, char **argv) {
    if (argc < 2) {
        cerr << "usage: " << argv[0] << " <rounds>" << endl;
        return 1;
    }
    // Number of timing rounds, taken from the first command-line argument.
    int x = atoi(argv[1]);
    testTime(x);
}

void testTime(int x) {
    cout << numeric_limits<uint32_t>::max() << endl;
    chrono::time_point<chrono::steady_clock> startTime;
    chrono::time_point<chrono::steady_clock> endTime;
    for (int i = 0; i < x; i++) {
        startTime = chrono::steady_clock::now();
        // Empty loop: j runs from 0 up to max() - 1, i.e. 4294967295 increments.
        for (uint32_t j = 0; j < numeric_limits<uint32_t>::max(); j++) {
        }
        endTime = chrono::steady_clock::now();
        cout << i << ","
             << chrono::duration_cast<chrono::milliseconds>(endTime - startTime).count() << " milliseconds,"
             << chrono::duration_cast<chrono::nanoseconds>(endTime - startTime).count() << " nanos!" << endl;
    }
}
```

Compile

g++ -std=c++2a *.cpp -o h1

Run

./h1 10

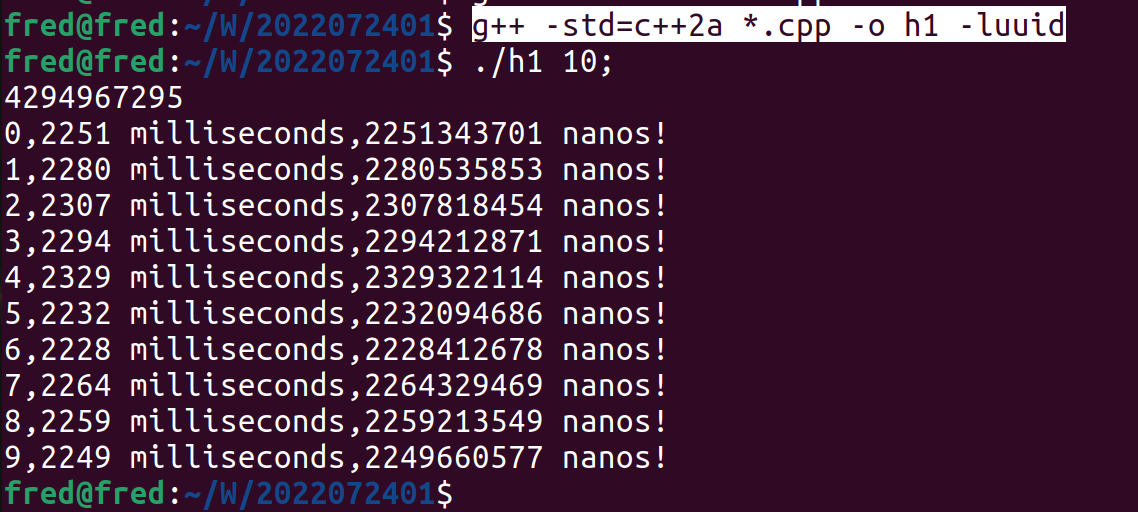

Snapshot

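As the snapshot above shows, on Ubuntu 20.04 each round of 4294967295 increments (j from 0 up to, but not including, 4294967295) takes approximately 2.2 to 2.3 seconds. Note that the g++ command above passes no optimization flag, so the program is built at the default -O0 and the empty loop really is executed.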
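To put the Ubuntu figure in per-iteration terms, you can divide the measured time by the iteration count. The sketch below does just that; `kIterations`, `nsPerIteration` and `printUbuntuEstimate` are hypothetical names introduced for illustration, and the only inputs are the 2.2 s and 2.3 s endpoints quoted above.

```cpp
#include <cstdint>
#include <cstdio>
#include <limits>

// Hypothetical helper names, for illustration only.
// Iterations per round in the Ubuntu program: j goes from 0 up to, but not
// including, numeric_limits<uint32_t>::max(), i.e. 4294967295 iterations.
constexpr std::uint64_t kIterations = std::numeric_limits<std::uint32_t>::max();

// Average cost of one loop iteration in nanoseconds, given the elapsed
// time of one round in milliseconds.
double nsPerIteration(double elapsedMs, std::uint64_t iterations) {
    return elapsedMs * 1e6 / static_cast<double>(iterations);
}

// Prints the per-iteration cost for both ends of the 2.2-2.3 s range.
void printUbuntuEstimate() {
    const double samplesMs[] = {2200.0, 2300.0};
    for (double ms : samplesMs) {
        std::printf("%.0f ms -> %.3f ns per iteration\n", ms, nsPerIteration(ms, kIterations));
    }
}
```

For 2.2 to 2.3 seconds this works out to roughly half a nanosecond per unoptimized iteration.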

Win11/Visual Studio 2022/VC++

```cpp
#include <chrono>
#include <limits.h>   // UINT_MAX
#include <iostream>
#include <Windows.h>  // UINT

using namespace std;

void testTime();

int main() {
    testTime();
    cin.get();  // keep the console window open until Enter is pressed
}

void testTime() {
    chrono::time_point<chrono::steady_clock> startTime;
    chrono::time_point<chrono::steady_clock> endTime;
    for (int i = 0; i < 10; i++) {
        startTime = chrono::steady_clock::now();
        UINT j;
        // Empty loop: j counts from 0 up to UINT_MAX (4294967295).
        for (j = 0; j < UINT_MAX; j++) {
        }
        endTime = chrono::steady_clock::now();
        cout << i << "," << j << ","
             << chrono::duration_cast<chrono::milliseconds>(endTime - startTime).count() << " milliseconds,"
             << chrono::duration_cast<chrono::nanoseconds>(endTime - startTime).count() << " nanos!" << endl;
    }
}
```

Run release/x64

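The snapshot shows that in the Release/x64 build, incrementing j from 0 to 4294967295 takes only about 100 nanoseconds. This is not a like-for-like comparison with Ubuntu: the Visual Studio Release configuration compiles with optimization (/O2), and because the loop body is empty the compiler can almost certainly drop the loop and set j to its final value directly. What remains is essentially the cost of the two steady_clock::now() calls. The Ubuntu build, compiled at -O0, executes every increment.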
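To compare the two platforms on equal terms, you could build both at the same optimization level. Alternatively, you could make the loop counter `volatile` so that even an optimized build has to perform every increment. The sketch below shows the second option; it is an assumption, not what the original test did, and `LoopTiming`, `timeVolatileLoop` and `kFullRange` are hypothetical names.

```cpp
#include <chrono>
#include <cstdint>
#include <limits>

// Hypothetical names, for illustration only.
// Same upper bound as the original loops (4294967295).
constexpr std::uint32_t kFullRange = std::numeric_limits<std::uint32_t>::max();

// Result of one timed run: the counter's final value and the elapsed time.
struct LoopTiming {
    std::uint32_t finalValue;
    std::chrono::nanoseconds elapsed;
};

// Times `count` increments of a volatile counter. Because every read and
// write of a volatile object must be performed, an optimizing build
// (g++ -O2, MSVC Release /O2) cannot delete this loop. This also changes
// what is measured: each iteration now does a memory load and store.
LoopTiming timeVolatileLoop(std::uint32_t count) {
    volatile std::uint32_t j = 0;
    const auto start = std::chrono::steady_clock::now();
    while (j < count) {
        j = j + 1;  // plain assignment; ++ on volatile is deprecated in C++20
    }
    const auto end = std::chrono::steady_clock::now();
    return {j, std::chrono::duration_cast<std::chrono::nanoseconds>(end - start)};
}
```

If you call `timeVolatileLoop(kFullRange)` inside the original round loop, it times all 4294967295 increments even in a Release or `-O2` build. The numbers will not match the `-O0` timings exactly, because the volatile memory accesses are part of what gets measured.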
