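MongoDB: Setting Up a Replica Set

This post walks through setting up a MongoDB replica set on three machines: one primary, one secondary, and one arbiter.

The primary handles all reads and writes from clients. The secondary exists for disaster recovery: it provides high availability and a redundant copy of the data. When the primary goes down, the arbiter takes part in the vote that lets the secondary take over as primary.

Definition of an arbiter:

An arbiter is a MongoDB instance in a replica set that stores no data. It needs very few resources and no dedicated hardware. Do not run an arbiter on a machine that already hosts a data-bearing member of the same replica set; put it on an application server, a monitoring server, or a separate virtual machine instead. Adding an arbiter keeps the number of voting members (the primary included) odd. With only two voting members, the survivor cannot form a majority once the primary becomes unavailable, so no new primary is elected automatically.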
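The voting arithmetic behind this can be sketched in a few lines of JavaScript, runnable in Node.js. This is only an illustration of the majority rule; the function names `majorityNeeded` and `canElectPrimary` are made up for this example and are not part of the MongoDB API.

```javascript
// Hypothetical helpers illustrating the majority rule; not MongoDB API.

// Votes required to elect a primary: a strict majority of all voting members.
function majorityNeeded(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// True if the members that are still reachable can elect a primary.
function canElectPrimary(votingMembers, reachableMembers) {
  return reachableMembers >= majorityNeeded(votingMembers);
}

// Primary + secondary only: losing one node leaves 1 of 2 votes, no majority.
console.log(canElectPrimary(2, 1)); // false
// Primary + secondary + arbiter: losing one node leaves 2 of 3 votes.
console.log(canElectPrimary(3, 2)); // true
```

With three voting members the set survives the loss of any single node, which is why the arbiter is added here.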

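1. The IP addresses of the three nodes are:

172.31.22.29 ---------------> primary

172.31.26.133 ---------------> secondary

172.31.17.203 ---------------> arbiter

Install MongoDB on each of the three machines. Ideally all three run the same version; this walkthrough uses 3.4.18.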

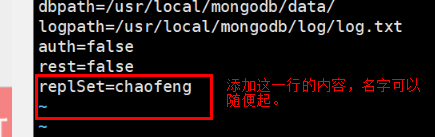

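2. Edit the configuration file and add the following:

In the screenshot above, "replSet" is the keyword; note that the S is uppercase.

Save the file after editing. All three machines need this line in their configuration files.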
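The screenshot is not reproduced as text here. Assuming the older key=value style of mongodb.conf and the replica set name chaofeng used later in this post, the added line would presumably look like this:

```
replSet=chaofeng
```

In a YAML-style configuration file, the equivalent setting is replication.replSetName.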

3. Initialize the replica set.

Start MongoDB on all three machines and leave it running in the background.

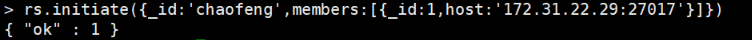

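Then run the initialization on the primary machine:

rs.initiate({_id:'chaofeng',members:[{_id:1,host:'172.31.22.29:27017'}]})

Initialization parameters:

_id: the replica set name (the outer _id)

members: the list of servers in the replica set

_id: a unique ID for each server (the _id inside the array)

host: the server's host and port

We are running this on 172.31.22.29. chaofeng is the replica set name and must match the one in mongodb.conf. The server on which the replica set is initialized becomes the primary.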
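To make the shape of that document explicit, here is a small sketch, runnable in Node.js, that builds it from a name and a list of hosts. The helper name `buildReplSetConfig` is hypothetical and not part of the mongo shell; the shell only needs the resulting object.

```javascript
// Hypothetical helper: builds the document that rs.initiate() expects.
// `name` must match the replSet value in the configuration file.
function buildReplSetConfig(name, hosts) {
  return {
    _id: name,
    members: hosts.map(function (host, index) {
      // Member _id values only need to be unique; here they start at 1.
      return { _id: index + 1, host: host };
    })
  };
}

var config = buildReplSetConfig("chaofeng", ["172.31.22.29:27017"]);
console.log(JSON.stringify(config));
// {"_id":"chaofeng","members":[{"_id":1,"host":"172.31.22.29:27017"}]}
```

Pasting the printed object into rs.initiate() in the mongo shell on 172.31.22.29 gives the same call as above.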

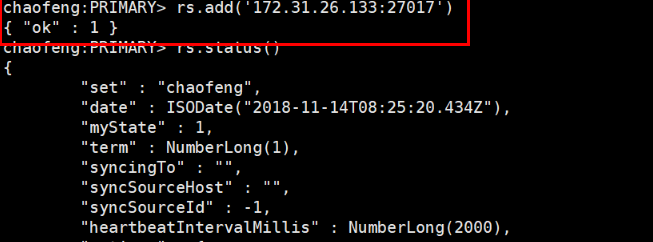

Check the status:

    chaofeng:SECONDARY> rs.status()
    {
        "set" : "chaofeng",
        "date" : ISODate("2018-11-14T08:20:17.034Z"),
        "myState" : 1,
        "term" : NumberLong(1),
        "syncingTo" : "",
        "syncSourceHost" : "",
        "syncSourceId" : -1,
        "heartbeatIntervalMillis" : NumberLong(2000),
        "optimes" : {
            "lastCommittedOpTime" : { "ts" : Timestamp(1542183612, 1), "t" : NumberLong(1) },
            "appliedOpTime" : { "ts" : Timestamp(1542183612, 1), "t" : NumberLong(1) },
            "durableOpTime" : { "ts" : Timestamp(1542183612, 1), "t" : NumberLong(1) }
        },
        "members" : [
            {
                "_id" : 1,
                "name" : "172.31.22.29:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 932,
                "optime" : { "ts" : Timestamp(1542183612, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2018-11-14T08:20:12Z"),
                "syncingTo" : "",
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "could not find member to sync from",
                "electionTime" : Timestamp(1542183500, 2),
                "electionDate" : ISODate("2018-11-14T08:18:20Z"),
                "configVersion" : 1,
                "self" : true,
                "lastHeartbeatMessage" : ""
            }
        ],
        "ok" : 1
    }
    chaofeng:PRIMARY>

The rs.status() output shows that this machine is now the primary: its "stateStr" field reads PRIMARY.
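Reading "stateStr" by eye works for one member but gets tedious as the set grows. As a sketch, the same check can be done programmatically on an object shaped like the rs.status() result. The helper `findPrimary` is hypothetical, and the sample object is a trimmed-down copy of the output above so the snippet runs in Node.js without a server.

```javascript
// Hypothetical helper: returns the name of the member whose stateStr is
// "PRIMARY" in an rs.status()-shaped object, or null if there is none.
function findPrimary(status) {
  var primaries = status.members.filter(function (member) {
    return member.stateStr === "PRIMARY";
  });
  return primaries.length > 0 ? primaries[0].name : null;
}

// Trimmed-down copy of the status output shown above.
var sampleStatus = {
  set: "chaofeng",
  members: [
    { _id: 1, name: "172.31.22.29:27017", health: 1, state: 1, stateStr: "PRIMARY" }
  ]
};

console.log(findPrimary(sampleStatus)); // 172.31.22.29:27017
```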

4. Add the secondary from the primary
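The command for this step is not reproduced in the text. Presumably it was an rs.add() call, the helper listed in the summary at the end of this post, run in the mongo shell on the primary. A sketch using the secondary's address from step 1 follows; the wrapper function `addSecondary` is hypothetical and exists only so the snippet can also be loaded outside the shell.

```javascript
// Assumption: this step uses rs.add(). `addSecondary` is a hypothetical wrapper.
function addSecondary(rsHelper, host) {
  // rs.add() accepts a "host:port" string for a normal data-bearing member.
  return rsHelper.add(host);
}

// `rs` only exists inside the mongo shell; run this on the current primary.
if (typeof rs !== "undefined") {
  addSecondary(rs, "172.31.26.133:27017");
}
```

In the shell itself the call reduces to rs.add('172.31.26.133:27017').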

The secondary has now been added to the replica set.

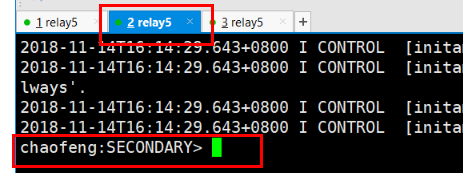

Opening the mongo shell on the secondary shows that the node has indeed become a SECONDARY.

If the node cannot be added, check the firewall and similar causes.

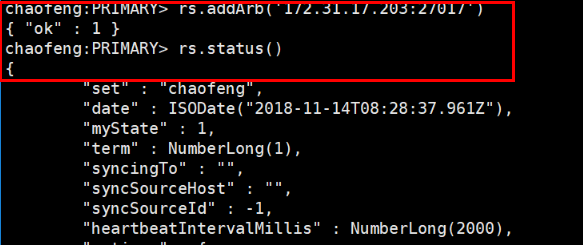

5. Add the arbiter from the primary
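As with the previous step, the command is not reproduced in the text. Presumably it was rs.addArb(), which the summary at the end of this post lists for adding arbiters. A sketch with the arbiter's address from step 1; the wrapper `addArbiter` is hypothetical.

```javascript
// Assumption: this step uses rs.addArb(). `addArbiter` is a hypothetical wrapper.
function addArbiter(rsHelper, host) {
  // rs.addArb() adds the host as an arbiter-only member: it votes, stores no data.
  return rsHelper.addArb(host);
}

// `rs` only exists inside the mongo shell; run this on the current primary.
if (typeof rs !== "undefined") {
  addArbiter(rs, "172.31.17.203:27017");
}
```

In the shell itself the call reduces to rs.addArb('172.31.17.203:27017').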

Checking the status shows that it was added successfully.

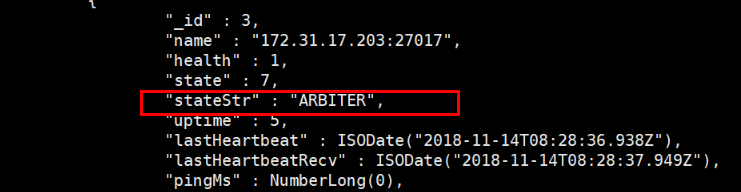

Open the mongo shell on the arbiter:

The node has indeed become an arbiter.

5、我们测试一下主从是否同步

在主节点进行测试:

chaofeng:PRIMARY> show dbs admin 0.000GB local 0.000GB chaofeng:PRIMARY> db test chaofeng:PRIMARY> db.haha.insert({name:"chaofengchen",id:1}) WriteResult({ "nInserted" : 1 }) chaofeng:PRIMARY> db.haha.insert({name:"xiaoming",id:2}) WriteResult({ "nInserted" : 1 }) chaofeng:PRIMARY>

然后去从节点查看一下:

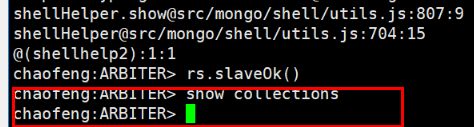

chaofeng:SECONDARY> db test chaofeng:SECONDARY> show collections; 2018-11-14T16:38:28.473+0800 E QUERY [thread1] Error: listCollections failed: { "ok" : 0, "errmsg" : "not master and slaveOk=false", "code" : 13435, "codeName" : "NotMasterNoSlaveOk" } : _getErrorWithCode@src/mongo/shell/utils.js:25:13 DB.prototype._getCollectionInfosCommand@src/mongo/shell/db.js:807:1 DB.prototype.getCollectionInfos@src/mongo/shell/db.js:819:19 DB.prototype.getCollectionNames@src/mongo/shell/db.js:830:16 shellHelper.show@src/mongo/shell/utils.js:807:9 shellHelper@src/mongo/shell/utils.js:704:15 @(shellhelp2):1:1 #虽然报错,但是没有关系

chaofeng:SECONDARY> rs.slaveOk() #执行以下这一步骤 chaofeng:SECONDARY> show collections; haha chaofeng:SECONDARY> db.haha.find() { "_id" : ObjectId("5bebdee9d002b6374b81ea22"), "name" : "chaofengchen", "id" : 1 } { "_id" : ObjectId("5bebdef7d002b6374b81ea23"), "name" : "xiaoming", "id" : 2 }

很不错,发现已经同步成功了,从节点可以很好的接受主节点的数据记录,并进行同步复制

报错:"errmsg" : "not master and slaveOk=false"错误说明:因为secondary是不允许读写的,如果非要解决,则执行:rs.slaveOk()

如果你再去仲裁节点查看消息是否同步数据,你会发现仲裁节点是没有数据的,仲裁节点只参与投票,不进行数据同步

6、测试主从节点故障转移功能

Now shut down the MongoDB service on the primary to simulate a sudden crash:

    chaofeng:PRIMARY> use admin
    switched to db admin
    chaofeng:PRIMARY> db.shutdownServer()
    server should be down...
    2018-11-14T08:47:29.614+0000 I NETWORK [thread1] trying reconnect to 127.0.0.1:27017 (127.0.0.1) failed
    2018-11-14T08:47:29.758+0000 I NETWORK [thread1] Socket recv() Connection reset by peer 127.0.0.1:27017
    2018-11-14T08:47:29.758+0000 I NETWORK [thread1] SocketException: remote: (NONE):0 error: 9001 socket exception [RECV_ERROR] server [127.0.0.1:27017]
    2018-11-14T08:47:29.758+0000 I NETWORK [thread1] reconnect 127.0.0.1:27017 (127.0.0.1) failed failed
    2018-11-14T08:47:29.761+0000 I NETWORK [thread1] trying reconnect to 127.0.0.1:27017 (127.0.0.1) failed
    2018-11-14T08:47:29.761+0000 W NETWORK [thread1] Failed to connect to 127.0.0.1:27017, in(checking socket for error after poll), reason: Connection refused
    2018-11-14T08:47:29.761+0000 I NETWORK [thread1] reconnect 127.0.0.1:27017 (127.0.0.1) failed failed

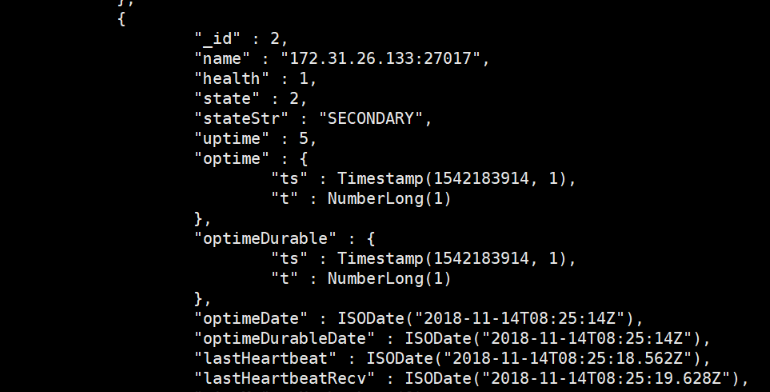

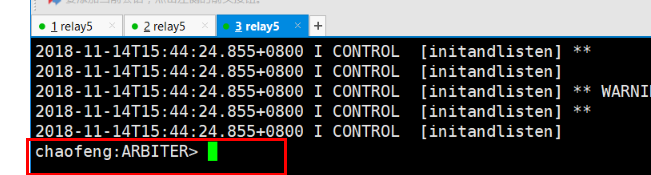

Then check the status from the mongo shell on the secondary:

    chaofeng:SECONDARY> rs.status()
    {
        "set" : "chaofeng",
        "date" : ISODate("2018-11-14T08:48:19.227Z"),
        "myState" : 1,
        "term" : NumberLong(2),
        "syncingTo" : "",
        "syncSourceHost" : "",
        "syncSourceId" : -1,
        "heartbeatIntervalMillis" : NumberLong(2000),
        "optimes" : {
            "lastCommittedOpTime" : { "ts" : Timestamp(1542185242, 1), "t" : NumberLong(1) },
            "appliedOpTime" : { "ts" : Timestamp(1542185290, 1), "t" : NumberLong(2) },
            "durableOpTime" : { "ts" : Timestamp(1542185290, 1), "t" : NumberLong(2) }
        },
        "members" : [
            {
                "_id" : 1,
                "name" : "172.31.22.29:27017",
                "health" : 0,
                "state" : 8,
                "stateStr" : "(not reachable/healthy)",
                "uptime" : 0,
                "optime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
                "optimeDurable" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
                "optimeDate" : ISODate("1970-01-01T00:00:00Z"),
                "optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),
                "lastHeartbeat" : ISODate("2018-11-14T08:48:18.833Z"),
                "lastHeartbeatRecv" : ISODate("2018-11-14T08:47:29.320Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "Connection refused",
                "syncingTo" : "",
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "",
                "configVersion" : -1
            },
            {
                "_id" : 2,
                "name" : "172.31.26.133:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 2030,
                "optime" : { "ts" : Timestamp(1542185290, 1), "t" : NumberLong(2) },
                "optimeDate" : ISODate("2018-11-14T08:48:10Z"),
                "syncingTo" : "",
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "could not find member to sync from",
                "electionTime" : Timestamp(1542185258, 1),
                "electionDate" : ISODate("2018-11-14T08:47:38Z"),
                "configVersion" : 3,
                "self" : true,
                "lastHeartbeatMessage" : ""
            },
            {
                "_id" : 3,
                "name" : "172.31.17.203:27017",
                "health" : 1,
                "state" : 7,
                "stateStr" : "ARBITER",
                "uptime" : 1186,
                "lastHeartbeat" : ISODate("2018-11-14T08:48:18.807Z"),
                "lastHeartbeatRecv" : ISODate("2018-11-14T08:48:18.101Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "",
                "syncingTo" : "",
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "",
                "configVersion" : 3
            }
        ],
        "ok" : 1
    }

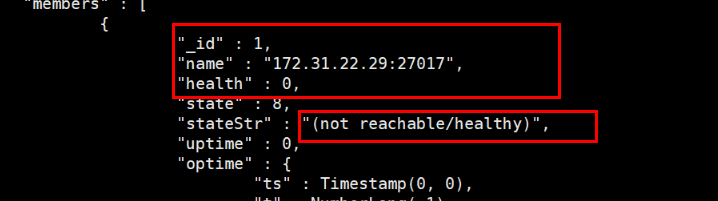

The original primary is now reported as unhealthy, as the screenshot below shows (it is a capture of the output above):

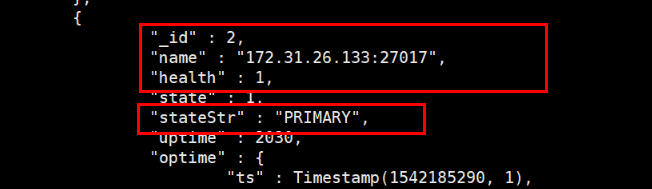

Now look at the state of the former secondary:

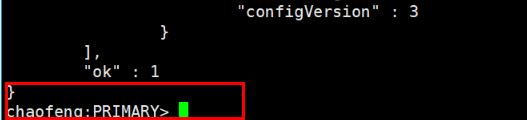

The former secondary has indeed become the primary.
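For a quick overview after a failover, the member list can be reduced to a name-to-state map. The sketch below runs in Node.js on a trimmed-down copy of the status output above; the helper name `memberStates` is hypothetical.

```javascript
// Hypothetical helper: maps each member name to its stateStr.
function memberStates(status) {
  var states = {};
  status.members.forEach(function (member) {
    states[member.name] = member.stateStr;
  });
  return states;
}

// Trimmed-down copy of the rs.status() output after the old primary was shut down.
var afterFailover = {
  members: [
    { name: "172.31.22.29:27017", health: 0, stateStr: "(not reachable/healthy)" },
    { name: "172.31.26.133:27017", health: 1, stateStr: "PRIMARY" },
    { name: "172.31.17.203:27017", health: 1, stateStr: "ARBITER" }
  ]
};

console.log(memberStates(afterFailover));
```

In the mongo shell the same function could be given the live result of rs.status() instead of the sample object.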

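Summary of common replica set methods:

rs.initiate(): initializes the replica set, for example: rs.initiate({_id:'repl1',members:[{_id:1,host:'192.168.168.129:27017'}]})

rs.reconfig(): applies a new replica set configuration, for example:

rs.reconfig({_id:'repl1',members:[{_id:1,host:'192.168.168.129:27017'}]},{force:true})

When only one secondary is left and the replica set has become unavailable, the force option lets you push a new configuration from that node so that it becomes primary. Secondaries can then be added back.

rs.status(): shows the replica set status

db.printSlaveReplicationInfo(): shows how far each secondary lags behind the primary

rs.conf() / rs.config(): shows the replica set configuration

rs.slaveOk(): allows reads from a secondary on the current connection

rs.add(): adds a member to the replica set, for example:

rs.add('192.168.168.130:27017')

rs.add({"_id":3,"host":"192.168.168.130:27017","priority":0,"hidden":true}) uses the hidden option to add a backup member

rs.add({"_id":3,"host":"192.168.168.130:27017","priority":0,"slaveDelay":60}) uses the slaveDelay option to add a delayed member

priority is the election priority and defaults to 1. To make a particular node the primary, set its priority to the highest value among all members.

rs.remove(): removes a member from the replica set, for example: rs.remove('192.168.168.130:27017')

rs.addArb(): adds an arbiter, for example:

rs.addArb('192.168.168.131:27017') or rs.add({"_id":3,"host":"192.168.168.130:27017","arbiterOnly":true}). An arbiter only votes and does not receive data.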
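The priority rule mentioned under rs.add() also applies to members that already exist: the usual pattern is to fetch the configuration, change the member's priority, and hand the result to rs.reconfig(). The sketch below shows the editing step on a sample object so that it runs in Node.js; the helper name `setPriority` and the sample configuration are assumptions made for this example.

```javascript
// Hypothetical helper: sets the priority of the member with the given host.
// It edits the config object in place and returns it for convenience.
function setPriority(config, host, priority) {
  config.members.forEach(function (member) {
    if (member.host === host) {
      member.priority = priority;
    }
  });
  return config;
}

// Sample configuration, shaped like the result of rs.conf().
var sampleConfig = {
  _id: "repl1",
  members: [
    { _id: 1, host: "192.168.168.129:27017", priority: 1 },
    { _id: 2, host: "192.168.168.130:27017", priority: 1 }
  ]
};

setPriority(sampleConfig, "192.168.168.130:27017", 2);
console.log(JSON.stringify(sampleConfig.members));
```

In the mongo shell, run on the primary, the same helper could be applied to the live configuration: rs.reconfig(setPriority(rs.conf(), '192.168.168.130:27017', 2)).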
