Creating LVM Volumes, with Worked Examples

LVM: Logical Volume Manager, version 2

dm (device mapper): the kernel module that organizes one or more underlying block devices into a single logical device.

/dev/dm-#: the device node of a dm device, where # is a number identifying that device.

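An LV can also be reached under two friendlier names, both of which point to its /dev/dm-# node:

/dev/mapper/VG_NAME-LV_NAME, for example /dev/mapper/vol0-root

/dev/VG_NAME/LV_NAME, for example /dev/vol0/root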
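Both paths name the same LV. In the /dev/mapper form, device-mapper joins the VG and LV names with a single hyphen and doubles any hyphen that occurs inside either name. That is why the LV lv-data in VG data shows up later in this article as /dev/mapper/data-lv--data. Below is a minimal bash sketch of that naming rule. The function name mapper_path is hypothetical, and the sketch models only the hyphen rule.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the /dev/mapper path for a VG/LV pair.
# The dm name is "<VG>-<LV>", with every '-' inside either name doubled
# so that the single separating hyphen stays unambiguous.
mapper_path() {
    local vg=$1 lv=$2
    printf '/dev/mapper/%s-%s\n' "${vg//-/--}" "${lv//-/--}"
}

mapper_path data lv-data   # prints /dev/mapper/data-lv--data
mapper_path vol0 root      # prints /dev/mapper/vol0-root
```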

PV management tools:

pvs: show a brief summary of PVs

pvdisplay: show detailed PV information

pvcreate /dev/DEVICE: initialize a device as a PV

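VG management tools:

vgs: show a brief summary of VGs

vgdisplay: show detailed VG information

vgcreate [-s #[MG]] VolumeGroupName PhysicalDevicePath [PhysicalDevicePath...]: create a VG; -s sets the physical extent (PE) size, 4 MiB by default

vgextend VolumeGroupName PhysicalDevicePath [PhysicalDevicePath...]: add PVs to a VG

vgreduce VolumeGroupName PhysicalDevicePath [PhysicalDevicePath...]: remove PVs from a VG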

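LV management tools:

lvs: show a brief summary of LVs

lvdisplay: show detailed LV information

lvcreate -L #[MG] -n LV_Name VolumeGroup: create an LV of the given size in a VG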

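Extending a logical volume (LV first, then the filesystem; with a leading +, the size is added to the current size, without it the size is the new total):

lvextend -L [+]#[MGT] /dev/VG_NAME/LV_NAME

resize2fs /dev/VG_NAME/LV_NAME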
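The following bash function sketches the two extend steps above. It prints the commands in order instead of running them; set DRY_RUN=0 to execute them as root. The names grow_lv and run and the +100G size are illustrative assumptions, and resize2fs applies only to ext2/3/4.

```bash
#!/usr/bin/env bash
# Sketch: grow an LV, then grow the ext2/3/4 filesystem on it.
# With DRY_RUN=1 (the default here) the commands are only printed.
set -euo pipefail

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Usage: grow_lv VG LV SIZE   e.g. grow_lv data lv-data +100G
grow_lv() {
    local dev="/dev/$1/$2" size=$3
    run lvextend -L "$size" "$dev"   # enlarge the logical volume first
    run resize2fs "$dev"             # then grow the filesystem to fill the LV
}

grow_lv data lv-data +100G
```

XFS filesystems are grown with xfs_growfs on the mount point instead of resize2fs. lvextend's -r (--resizefs) option can also perform the filesystem step for you.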

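Shrinking a logical volume (filesystem first, then the LV):

umount /dev/VG_NAME/LV_NAME

e2fsck -f /dev/VG_NAME/LV_NAME #force a full consistency check of the filesystem, even if it is marked clean

resize2fs /dev/VG_NAME/LV_NAME #[MGT]

lvreduce -L [-]#[MGT] /dev/VG_NAME/LV_NAME

mount /dev/VG_NAME/LV_NAME MOUNT_POINT

The size given to lvreduce must not be smaller than the size resize2fs shrank the filesystem to.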
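The order of the shrink steps matters. The filesystem must be made smaller before the LV underneath it; otherwise the end of the filesystem is cut off. Below is a dry-run sketch of the sequence. The names shrink_lv and run and the example arguments are hypothetical. With DRY_RUN=0 the commands would execute as root, and lvreduce would still ask for confirmation.

```bash
#!/usr/bin/env bash
# Sketch: shrink an ext4 LV to an absolute SIZE, filesystem first.
# DRY_RUN=1 (the default here) only prints the commands.
set -euo pipefail

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Usage: shrink_lv VG LV SIZE MOUNTPOINT   e.g. shrink_lv data lv-data 15G /data
shrink_lv() {
    local dev="/dev/$1/$2" size=$3 mnt=$4
    run umount "$mnt"                # ext4 cannot be shrunk while mounted
    run e2fsck -f "$dev"             # resize2fs asks for a forced check before an offline resize
    run resize2fs "$dev" "$size"     # 1) shrink the filesystem
    run lvreduce -L "$size" "$dev"   # 2) only then shrink the LV to the same size
    run mount "$dev" "$mnt"
}

shrink_lv data lv-data 15G /data
```

Passing one absolute size (15G here) to both resize2fs and lvreduce keeps the filesystem and the LV the same size.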

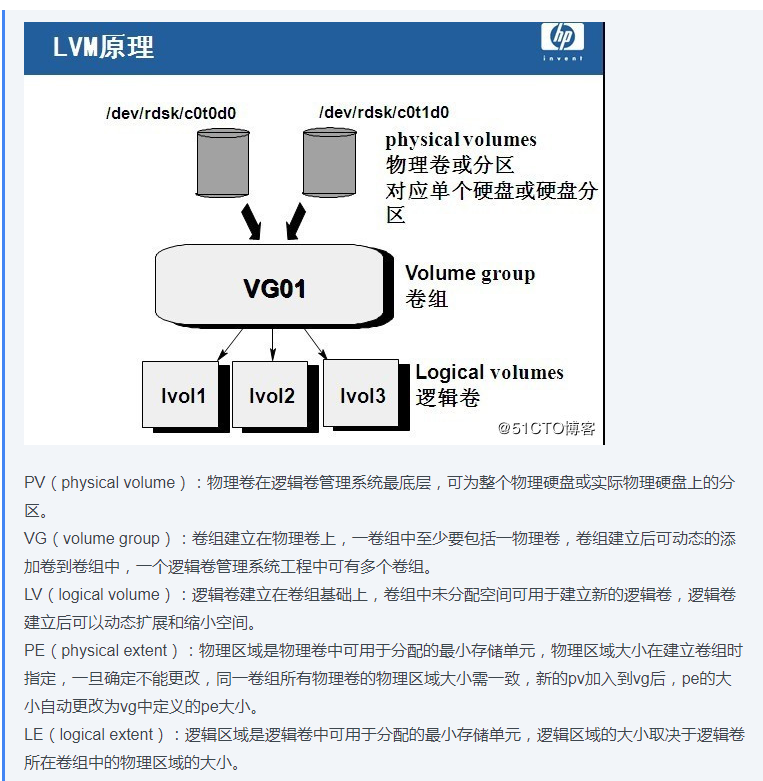

看一下原理:

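Example: creating an LVM volume

I attached a 1200 GiB disk, which Linux shows as the block device /dev/xvdb. First partition it with fdisk. Use p to print the partition table and n to create one primary partition spanning the whole disk (accept the defaults). Then use t to set the partition type to 8e (Linux LVM) and w to write the table: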

    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#fdisk /dev/xvdb
    Welcome to fdisk (util-linux 2.31.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.
    Command (m for help): p
    Disk /dev/xvdb: 1.2 TiB, 1288490188800 bytes, 2516582400 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xc9629da1
    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-2516582399, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-2516582399, default 2516582399):
    Created a new partition 1 of type 'Linux' and of size 1.2 TiB.
    Partition #1 contains a LVM2_member signature.
    Do you want to remove the signature? [Y]es/[N]o: Y
    The signature will be removed by a write command.
    Command (m for help): t
    Selected partition 1
    Hex code (type L to list all codes): 8e
    Changed type of partition 'Linux' to 'Linux LVM'.
    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.
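The sizes in this session are in binary units. fdisk reports 1288490188800 bytes, which is exactly 1200 GiB. The same figure expressed in TiB is what fdisk rounds to 1.2 TiB and what pvdisplay prints as 1.17 TiB further down. The small bash/awk check below reproduces those numbers. The function name units is illustrative only.

```bash
#!/usr/bin/env bash
# Express a byte count in the binary units used by fdisk/pvdisplay.
units() {
    awk -v b="$1" 'BEGIN {
        printf "GiB: %.0f\n", b / 1024^3      # 1024^3 bytes per GiB
        printf "TiB: %.2f\n", b / 1024^4      # 1024^4 bytes per TiB
        printf "sectors: %.0f\n", b / 512     # 512-byte sectors, as fdisk counts them
    }'
}

units 1288490188800
# GiB: 1200
# TiB: 1.17
# sectors: 2516582400
```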
After the partition table is written, /dev/xvdb1 appears under /dev. It is then initialized as a PV, the VG data is created on it, and the LV lv-data is carved out of the VG:

    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#ls
    autofs fd log mapper ppp tty50 tty6 ttyS2 vcs5 vga_arbiter block full loop-control mcelog psaux tty51 tty60 ttyS3 vcs6 vhost-net btrfs-control fuse loop0 mem ptmx tty52 tty61 ttyprintk vcsa vhost-vsock char hpet loop1 memory_bandwidth pts tty53 tty62 uinput vcsa1 xen console hugepages loop2 mqueue random tty54 tty63 urandom vcsa2 xvda core hwrng loop3 net rfkill tty55 tty7 vcs vcsa3 xvda1 cpu_dma_latency initctl loop4 network_latency rtc tty56 tty8 vcs1 vcsa4 xvdb cuse input loop5 network_throughput rtc0 tty9 vcs2 vcsa5 xvdb1 disk kmsg loop6 null shm tty58 ttyS0 vcs3 vcsa6 zero ecryptfs lightnvm loop7 port snapshot tty59 ttyS1 vcs4 vfio
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#pvcreate /dev/xvdb1
    Physical volume "/dev/xvdb1" successfully created.
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#pvdisplay
    "/dev/xvdb1" is a new physical volume of "1.17 TiB"
    --- NEW Physical volume ---
    PV Name /dev/xvdb1
    VG Name
    PV Size 1.17 TiB
    Allocatable NO
    PE Size 0
    Total PE 0
    Free PE 0
    Allocated PE 0
    PV UUID u9Re6S-Bl6b-WLic-7aau-mOK5-2bBX-CB7TKg
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#vgcreate data /dev/xvdb1
    Volume group "data" successfully created
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#vgdisplay
    --- Volume group ---
    VG Name data
    System ID
    Format lvm2
    Metadata Areas 1
    Metadata Sequence No 1
    VG Access read/write
    VG Status resizable
    MAX LV 0
    Cur LV 0
    Open LV 0
    Max PV 0
    Cur PV 1
    Act PV 1
    VG Size 1.17 TiB
    PE Size 4.00 MiB
    Total PE 307199
    Alloc PE / Size 0 / 0
    Free PE / Size 307199 / 1.17 TiB
    VG UUID 1zQAIi-3O6I-k0re-ZnpQ-9o16-ZG4i-zbiW5L
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#lvcreate -L 1170G -n lv-data data    #lv-data is the LV name, chosen freely; data is the VG name
    Logical volume "lv-data" created.
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#lvs
    LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
    lv-data data -wi-a----- 1.14t
    [root@:vg_adn_clickhouseTest_3ubuntu:172.31.18.18 /dev]#lvdisplay
    --- Logical volume ---
    LV Path /dev/data/lv-data
    LV Name lv-data
    VG Name data
    LV UUID OtxncR-HT6N-filt-TM84-t19s-WaJg-3fbFws
    LV Write Access read/write
    LV Creation host, time ip-172-31-18-18, 2018-12-21 11:48:03 +0800
    LV Status available
    # open 0
    LV Size 1.14 TiB
    Current LE 299520
    Segments 1
    Allocation inherit
    Read ahead sectors auto
    - currently set to 256
    Block device 253:0
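LVM allocates space in extents: physical extents (PE) in the VG and logical extents (LE) in an LV, each PE Size large (4 MiB here). Multiplying the counts above by the extent size reproduces the reported sizes. The 299520 LE are exactly the 1170 GiB requested with lvcreate; lvs shows 1.14t because 1170 GiB is about 1.14 TiB. The VG's 307199 PE come to just under 1200 GiB. Here is a sketch of the conversion. extents_to_gib is a hypothetical helper, and its 4 MiB default simply mirrors the vgdisplay output.

```bash
#!/usr/bin/env bash
# Convert an extent count to GiB, given the PE size in MiB (default 4).
extents_to_gib() {
    local extents=$1 pe_mib=${2:-4}
    awk -v e="$extents" -v p="$pe_mib" 'BEGIN { printf "%.3f\n", e * p / 1024 }'
}

extents_to_gib 299520   # Current LE of lv-data -> 1170.000
extents_to_gib 307199   # Total PE of VG data   -> 1199.996
```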

The volume has been created. Before it can be used it must be formatted. I usually format it as ext4, after which it only needs to be mounted:

    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /dev]#mkfs -t ext4 /dev/mapper/data-lv--data
    mke2fs 1.44.1 (24-Mar-2018)
    Creating filesystem with 306708480 4k blocks and 76677120 inodes
    Filesystem UUID: 48bfb718-44fc-4368-960c-d3b74dc7878a
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208, 4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968, 102400000, 214990848
    Allocating group tables: done
    Writing inode tables: done
    Creating journal (262144 blocks): done
    Writing superblocks and filesystem accounting information: done
    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /dev]#mkdir /data
    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /dev]#cd /data
    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /data]#ls
    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /data]#mount /dev/mapper/data-lv--data /data
    [root@:vg_adn_clickhouseTest_3ubuntu:54.88.193.72:172.31.18.18 /data]#df -Th
    Filesystem Type Size Used Avail Use% Mounted on
    udev devtmpfs 16G 0 16G 0% /dev
    tmpfs tmpfs 3.2G 772K 3.2G 1% /run
    /dev/xvda1 ext4 97G 1.6G 96G 2% /
    tmpfs tmpfs 16G 0 16G 0% /dev/shm
    tmpfs tmpfs 5.0M 0 5.0M 0% /run/lock
    tmpfs tmpfs 16G 0 16G 0% /sys/fs/cgroup
    /dev/loop0 squashfs 88M 88M 0 100% /snap/core/5328
    /dev/loop1 squashfs 13M 13M 0 100% /snap/amazon-ssm-agent/495
    tmpfs tmpfs 3.2G 0 3.2G 0% /run/user/1002
    /dev/loop2 squashfs 90M 90M 0 100% /snap/core/6130
    /dev/loop3 squashfs 18M 18M 0 100% /snap/amazon-ssm-agent/930
    /dev/mapper/data-lv--data ext4 1.2T 77M 1.1T 1% /data
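The mke2fs summary can be checked the same way. The check uses the 4 KiB block size visible in the output. It also assumes the default inode_ratio of 16384 bytes per inode from /etc/mke2fs.conf, which is an assumption about this host's configuration. ext4_summary is an illustrative name.

```bash
#!/usr/bin/env bash
# Recompute size, inode count and journal size from mke2fs's block counts,
# assuming 4 KiB blocks and one inode per 16 KiB (inode_ratio = 16384).
ext4_summary() {
    local blocks=$1 journal_blocks=$2
    local block_size=4096 inode_ratio=16384
    local fs_bytes=$(( blocks * block_size ))
    echo "size: $(( fs_bytes / 1024**3 )) GiB"
    echo "inodes: $(( fs_bytes / inode_ratio ))"
    echo "journal: $(( journal_blocks * block_size / 1024**2 )) MiB"
}

ext4_summary 306708480 262144
# size: 1170 GiB
# inodes: 76677120
# journal: 1024 MiB
```

The filesystem is exactly as large as the LV (1170 GiB). df -h rounds that up to 1.2T. The smaller Avail figure reflects space taken by metadata and by the blocks ext4 reserves for root (5% by default).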

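Creating a snapshot

Now let's look at snapshots:

LVM snapshots make it easy to back up data. Because a snapshot depends on its origin LV, it is meant for temporary use only and cannot exist without the origin.

When a snapshot is created, only the metadata of the origin volume is copied; no data is physically copied, so creating a snapshot is very fast. After that, whenever data is written to the origin, the snapshot tracks which blocks have changed. Just before new data overwrites a block for the first time, the old contents of that block are copied into the snapshot's reserved space. This keeps the snapshot consistent with the data as it was at the moment the snapshot was created.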
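The copy-on-write mechanism described above can be modelled in a few lines of bash. The toy sketch below needs bash 4+ for associative arrays. The array and function names are made up for illustration, and real LVM works on chunks of a block device, not on array elements. The sketch shows two things: only the first overwrite of a block copies anything, and reads through the snapshot still see the old data.

```bash
#!/usr/bin/env bash
# Toy model of a copy-on-write snapshot (bash 4+).
# origin[] is the live volume; cow[] is the snapshot's reserved area and
# receives a block's *old* contents only the first time it is overwritten.
declare -a origin=(A B C D)
declare -A cow=()

write_origin() {                  # write_origin INDEX NEWDATA
    local i=$1
    if [[ -z ${cow[$i]+set} ]]; then
        cow[$i]=${origin[$i]}     # first overwrite: preserve the old block
    fi
    origin[$i]=$2
}

read_snapshot() {                 # read_snapshot INDEX
    local i=$1
    if [[ -n ${cow[$i]+set} ]]; then
        echo "${cow[$i]}"         # block changed since the snapshot: use the saved copy
    else
        echo "${origin[$i]}"      # unchanged: the snapshot shares the origin block
    fi
}

write_origin 1 X
write_origin 1 Y                  # second write to block 1: nothing more is copied
echo "origin:   ${origin[*]}"                  # origin:   A Y C D
echo "snapshot: $(read_snapshot 0) $(read_snapshot 1) $(read_snapshot 2) $(read_snapshot 3)"   # snapshot: A B C D
echo "cow blocks used: ${#cow[@]}"             # cow blocks used: 1
```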

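Snapshot characteristics:

Because snapshots are implemented with copy-on-write (COW), a snapshot does not need to be as large as the origin volume. Size it:

1. according to how much of the origin's data is expected to change;

2. according to how often the origin is updated. Once the snapshot's space is full of copies of changed blocks, the snapshot becomes invalid and can no longer be used.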
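Here is a back-of-the-envelope sizing sketch for the two points above. The snapshot must hold one copy of every chunk that changes while it exists. The change rate, lifetime and 20% headroom are hypothetical inputs you would measure or choose yourself. LVM's own COW metadata overhead is ignored, so treat the result as a rough estimate rather than a guarantee.

```bash
#!/usr/bin/env bash
# Rough snapshot size estimate in MiB (hypothetical inputs; ignores COW metadata).
#   change_mib_per_hour: distinct data the origin rewrites per hour
#   hours:               how long the snapshot must stay valid
#   headroom_pct:        safety margin on top, 20% by default
snapshot_size_mib() {
    local change_mib_per_hour=$1 hours=$2 headroom_pct=${3:-20}
    local needed=$(( change_mib_per_hour * hours ))
    echo $(( needed + needed * headroom_pct / 100 ))
}

snapshot_size_mib 10 3   # 30 MiB of changes + 20% -> 36

# Upper bound on distinct changed chunks a 50 MiB snapshot can hold with
# 4 KiB chunks (the chunk size lvdisplay reports below), before metadata:
echo $(( 50 * 1024 / 4 ))   # 12800
```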

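lvcreate -L 50M -n lv0backup -s /dev/vg0/lv0

This creates a 50 MiB snapshot named lv0backup whose origin is /dev/vg0/lv0.

The snapshot does not need to be formatted: it already contains the origin's filesystem and can be mounted directly.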
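Combined with the snapshot commands above, a snapshot-based backup usually follows the pattern create, mount read-only, copy, unmount, remove. Below is a dry-run sketch that uses the names from the example above. The mount point /mnt/lv0backup and the archive path /backup/lv0.tar.gz are hypothetical. The commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch of a snapshot-based backup of /dev/vg0/lv0.
# /mnt/lv0backup and /backup/lv0.tar.gz are hypothetical paths.
# DRY_RUN=1 (the default here) only prints the commands.
set -euo pipefail

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

backup_via_snapshot() {
    local origin=/dev/vg0/lv0 snap=lv0backup mnt=/mnt/lv0backup
    run lvcreate -L 50M -n "$snap" -s "$origin"   # freeze a point-in-time view
    run mkdir -p "$mnt"
    run mount -o ro "/dev/vg0/$snap" "$mnt"       # no mkfs: it already has the filesystem
    run tar -czf /backup/lv0.tar.gz -C "$mnt" .   # copy out while lv0 stays in use
    run umount "$mnt"
    run lvremove -y "/dev/vg0/$snap"              # remove it before its COW space fills up
}

backup_via_snapshot
```

If the origin holds an XFS filesystem, the snapshot carries the same filesystem UUID, so it has to be mounted with the nouuid option as well.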

Deleting a snapshot

Step 1: first, confirm the path of the snapshot created earlier:

    [root@chaofeng mapper]# lvdisplay
    --- Logical volume ---
    LV Path /dev/data/lv-data
    LV Name lv-data
    VG Name data
    LV UUID DKIkKT-b7yU-9jSZ-I4fk-4Knb-NQHe-9gWgbL
    LV Write Access read/write
    LV Creation host, time chaofeng, 2019-01-05 21:24:28 +0800
    LV snapshot status source of data_snap [INACTIVE]
    LV Status available
    # open 0
    LV Size 19.50 GiB
    Current LE 4992
    Segments 1
    Allocation inherit
    Read ahead sectors auto
    - currently set to 8192
    Block device 253:3
    --- Logical volume ---
    LV Path /dev/data/data_snap
    LV Name data_snap
    VG Name data
    LV UUID IBJMrz-leJD-EbVs-YyGZ-IUJT-da0k-0FN5Hy
    LV Write Access read/write
    LV Creation host, time chaofeng, 2019-01-05 21:31:22 +0800
    LV snapshot status INACTIVE destination for lv-data
    LV Status available
    # open 0
    LV Size 19.50 GiB
    Current LE 4992
    COW-table size 4.00 MiB
    COW-table LE 1
    Snapshot chunk size 4.00 KiB
    Segments 1
    Allocation inherit
    Read ahead sectors auto
    - currently set to 8192
    Block device 253:5
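Instead of reading through the full lvdisplay output, snapshots can be picked out of a compact lvs listing: a snapshot is an LV whose origin field is set. The sketch below filters the output of lvs --noheadings -o vg_name,lv_name,origin. list_snapshots is a hypothetical helper. Here it is fed sample text modelled on the output above, so it runs without LVM.

```bash
#!/usr/bin/env bash
# Print the path of every LV whose origin column (3rd field) is non-empty.
# Expected input: lvs --noheadings -o vg_name,lv_name,origin
list_snapshots() {
    awk 'NF == 3 { printf "/dev/%s/%s (snapshot of %s)\n", $1, $2, $3 }'
}

# On a real system: lvs --noheadings -o vg_name,lv_name,origin | list_snapshots
printf '  data lv-data\n  data data_snap lv-data\n' | list_snapshots
# /dev/data/data_snap (snapshot of lv-data)
```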

Step 2: once the path is confirmed, remove the snapshot:

    [root@chaofeng data]# lvremove /dev/data/data_snap
    Do you really want to remove active logical volume data/data_snap? [y/n]: y
    Logical volume "data_snap" successfully removed

The snapshot LV has now been removed.

Shrinking an LVM volume

First, check the current size of the LV:

    [root@chaofeng data]# lvdisplay
    --- Logical volume ---
    LV Path /dev/data/lv-data
    LV Name lv-data
    VG Name data
    LV UUID DKIkKT-b7yU-9jSZ-I4fk-4Knb-NQHe-9gWgbL
    LV Write Access read/write
    LV Creation host, time chaofeng, 2019-01-05 21:24:28 +0800
    LV Status available
    # open 0
    LV Size 19.50 GiB
    Current LE 4992
    Segments 1
    Allocation inherit
    Read ahead sectors auto
    - currently set to 8192
    Block device 253:3

The LV is currently 19.5 GiB; I want to shrink it to 15 GiB.

    [root@chaofeng data]# lvreduce -L 15G /dev/data/lv-data
    WARNING: Reducing active logical volume to 15.00 GiB.
    THIS MAY DESTROY YOUR DATA (filesystem etc.)
    Do you really want to reduce data/lv-data? [y/n]: y
    Size of logical volume data/lv-data changed from 19.50 GiB (4992 extents) to 15.00 GiB (3840 extents).
    Logical volume data/lv-data successfully resized.
    [root@chaofeng data]# lvdisplay
    --- Logical volume ---
    LV Path /dev/data/lv-data
    LV Name lv-data
    VG Name data
    LV UUID DKIkKT-b7yU-9jSZ-I4fk-4Knb-NQHe-9gWgbL
    LV Write Access read/write
    LV Creation host, time chaofeng, 2019-01-05 21:24:28 +0800
    LV Status available
    # open 0
    LV Size 15.00 GiB
    Current LE 3840
    Segments 1
    Allocation inherit
    Read ahead sectors auto
    - currently set to 8192
    Block device 253:3

The LV has now been shrunk. This demo ran lvreduce directly and skipped the filesystem steps. If the LV holds a filesystem with data you need, shrink the filesystem first (umount, e2fsck -f, resize2fs), as listed at the start of this article. Otherwise lvreduce cuts off the end of the filesystem and destroys data, exactly as its warning says.

Creating a swap area in the VG's free space

Shrinking the LV from 19.5 GiB to 15 GiB freed about 4.5 GiB in the VG (vgdisplay below shows just under 5 GiB unallocated in total). Let's use that space as a swap area:

    [root@chaofeng data]# lvcreate --help | head    # first check the lvcreate syntax
    lvcreate - Create a logical volume
    Create a linear LV.
    lvcreate -L|--size Size[m|UNIT] VG
    [ -l|--extents Number[PERCENT] ]
    [ --type linear ]
    [ COMMON_OPTIONS ]
    [ PV ... ]
    Create a striped LV (infers --type striped).
    [root@chaofeng data]# vgdisplay    # then confirm the VG name
    --- Volume group ---
    VG Name data
    System ID
    Format lvm2
    Metadata Areas 1
    Metadata Sequence No 7
    VG Access read/write
    VG Status resizable
    MAX LV 0
    Cur LV 1
    Open LV 0
    Max PV 0
    Cur PV 1
    Act PV 1
    VG Size <20.00 GiB
    PE Size 4.00 MiB
    Total PE 5119
    Alloc PE / Size 3840 / 15.00 GiB
    Free PE / Size 1279 / <5.00 GiB
    VG UUID 46OJtj-IL0F-StLt-aj5H-I5Au-wE7W-rk3l5l
    [root@chaofeng data]# lvcreate -L 4G -n swap_lvm data    # create the swap LV
    Logical volume "swap_lvm" created.
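The vgdisplay figures above are consistent with this plan. Shrinking lv-data from 4992 to 3840 extents freed 1152 extents (4.5 GiB). Together with the space the VG already had unallocated, that gives the 1279 free PE shown. The arithmetic, as a short bash sketch with the values copied from vgdisplay:

```bash
#!/usr/bin/env bash
# Extent bookkeeping for VG "data" (values from the vgdisplay output above).
pe_mib=4
total_pe=5119
alloc_pe=3840

free_pe=$(( total_pe - alloc_pe ))       # 1279, matches "Free PE"
swap_pe=$(( 4 * 1024 / pe_mib ))         # a 4G LV needs 1024 extents
echo "free: $free_pe PE ($(( free_pe * pe_mib )) MiB)"    # free: 1279 PE (5116 MiB), i.e. "<5.00 GiB"
echo "left after swap_lvm: $(( free_pe - swap_pe )) PE"   # left after swap_lvm: 255 PE
```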

The swap LV has been created. Next, format it as swap space and enable it:

    [root@chaofeng mapper]# mkswap /dev/mapper/data-swap_lvm
    Setting up swapspace version 1, size = 4194300 KiB
    no label, UUID=5f25be8c-ef13-485b-a243-4f13041ffcc9

To have the swap LV enabled at every boot, add it to /etc/fstab:

    [root@chaofeng mapper]# cat /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Fri Jan 4 20:31:39 2019
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    /dev/mapper/centos-root / xfs defaults 0 0
    UUID=84629116-e18f-4776-9204-aaa79fee0844 /boot xfs defaults 0 0
    /dev/mapper/centos-swap swap swap defaults 0 0
    /dev/mapper/data-swap_lvm swap swap defaults 0 0

The last line is the entry that has to be added to /etc/fstab for the new swap LV.
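Appending the line by hand works, but a setup script that runs twice would add it twice. Below is a minimal sketch of an idempotent append. add_fstab_entry is a hypothetical helper. It matches the exact line text, so an existing entry written with different spacing is not detected. The sketch is exercised on a temporary file rather than the real /etc/fstab.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an fstab entry only if that exact line is not
# already present. The second argument defaults to /etc/fstab.
add_fstab_entry() {
    local entry=$1 fstab=${2:-/etc/fstab}
    if grep -qxF -- "$entry" "$fstab"; then
        echo "already present"
    else
        printf '%s\n' "$entry" >> "$fstab"
        echo "added"
    fi
}

tmp=$(mktemp)
add_fstab_entry '/dev/mapper/data-swap_lvm swap swap defaults 0 0' "$tmp"   # added
add_fstab_entry '/dev/mapper/data-swap_lvm swap swap defaults 0 0' "$tmp"   # already present
```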

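    [root@chaofeng mapper]# swapon -a
    [root@chaofeng mapper]# free -h
    total used free shared buff/cache available
    Mem: 972M 169M 553M 7.6M 249M 603M
    Swap: 7.7G 0B 7.7G

swapon -a activates every swap entry listed in /etc/fstab, and free -h now includes the new 4 GiB in the Swap total. The swap space has been added successfully.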

浙公网安备 33010602011771号
