文本信息检索——布尔模型和TF-IDF模型

文本信息检索——布尔模型和TF-IDF模型

1. 布尔模型

如要检索“布尔检索”或“概率检索”但不包括“向量检索”方面的文档,其相应的查询表达式为:Q=检索 and (布尔or 概率 not向量),那么Q可以在其相应的(检索,布尔,概率,向量)标引词向量上取(1,1,0,0)(1,0,1,0)(1,1,1,0),那么文档Dj的向量如果与这中间一个相等,那么即可认为他们之间存在相似关系,而这种相互关系也是布尔值,即sim(Q,Dj)只能为0或1。

2.TF-IDF模型

在某个一共有一千词的网页中“原子能”、“的”和“应用”分别出现了 2 次、35 次 和 5 次,那么它们的词频TF就分别是 0.002、0.035 和 0.005。 我们将这三个数相加,其和 0.042 就是相应网页和查询“原子能的应用”。

一个词预测主题能力越强,权重就越大,反之,权重就越小。我们在网页中看到“原子能”这个词,或多或少地能了解网页的主题。我们看到“应用”一次,对主题基本上还是一无所知。因此,“原子能“的权重就应该比应用大。

应删除词的权重应该是零。

2.1权重计算

- 我们假定中文网页数是D=10亿,应删除词“的”在所有的网页中都出现,即|{𝑗:𝑡_𝑖 "∈" 𝑑_𝑗}|=10亿,那么它的idf=log(10亿/10亿)= log (1) =0

- 假如专用词“原子能”在两百万个网页中出现,即|{𝑗:𝑡_𝑖 "∈" 𝑑_𝑗}| =200万,则它的权重

idf=log(500) =6.2 - 假定通用词“应用”,出现在五亿个网页中,它的权重

idf = log(2)= 0.7。 - 最终获得某一网页的TF-IDF计算如下

0.002(tf)6.2(idf)+ 0.035(tf)0(idf)+0.005(tf)*0.7(idf)

3.实现代码

- 布尔模型

def regularization(s):

ss = s.split(' ')

expression = []

target = {}

for i in ss:

if i != "and" and i != "or" and i != "not" and i != "(" and i != ")":

if i[0] == "(":

expression.append("(")

expression.append(i[1:])

target[i[1:]] = 0

elif i[-1] == ")":

expression.append(i[:-1])

expression.append(")")

target[i[:-1]] = 0

else:

expression.append(i)

target[i] = 0

else:

expression.append(i)

return target, expression

def analysis(line):

output = []

# 去除每行的换行符

t_line = line.strip('\n')

# 按空格分开每个词

words = t_line.split(' ')

for word in words[1:]:

if word == "":

continue

# 按/分开标记和词

t_word = word.split('/')

# 左方括号去除

tf_word = t_word[0].split('[')

if len(tf_word) == 2:

f_word = tf_word[1]

else:

f_word = t_word[0]

# 若不在列表中

if f_word not in output:

output.append(f_word)

big_word1 = t_line.split('[')

for i in range(1, len(big_word1)):

big_word2 = big_word1[i].split(']')[0]

words = big_word2.split(' ')

big_word = ""

for word in words:

# 按/分开标记和词

t_word = word.split('/')

big_word = big_word + t_word[0]

# 若不在列表中

if big_word not in output:

output.append(big_word)

return output

def getValue(target, reg):

# 逆波兰

RPN = []

stack = []

stack.append("#")

for i in reg:

if i in target.keys():

RPN.append(target[i])

elif i == "(":

stack.append(i)

elif i == ")":

while stack[-1] != "(":

RPN.append(stack.pop())

stack.pop()

elif i == "not":

while stack[-1] == "not":

RPN.append(stack.pop())

stack.append(i)

elif i == "and":

while stack[-1] == "not" or stack[-1] == "and":

RPN.append(stack.pop())

stack.append(i)

else:

while stack[-1] == "not" or stack[-1] == "and" or stack[-1] == "or":

RPN.append(stack.pop())

stack.append(i)

while len(stack) != 1:

RPN.append(stack.pop())

# 计算逆波兰式

ans = []

for i in RPN:

if i == 0 or i == 1:

ans.append(i)

elif i == "not":

ans.append(1 ^ ans.pop())

elif i == "and":

op1 = ans.pop()

op2 = ans.pop()

ans.append(op1 and op2)

elif i == "or":

op1 = ans.pop()

op2 = ans.pop()

ans.append(op1 or op2)

return ans[0]

if __name__ == '__main__':

booltext = input("输入布尔表达式:")

target, reg = regularization(booltext)

key_target = target.keys()

num = 0

with open('语料库.txt', mode='r', encoding='UTF-8') as f:

for line in f.readlines():

if num >=10:

break

for i in key_target:

target[i] = 0

if line is not None and line != "\n":

output = analysis(line)

for i in key_target:

if i in output:

target[i] = 1

if getValue(target, reg):

print(line)

num = num + 1

f.close()

-

TF-IDF模型

-

getWeight.py(提前计算权重)

import sys output = {} with open('语料库.txt', mode='r', encoding='UTF-8') as f: for line in f.readlines(): if line is not None and line != "\n": t_line = line.strip('\n') words = t_line.split(' ') word_w = [] for word in words[1:]: if word == "": continue t_word = word.split('/') # 左方括号 tf_word = t_word[0].split('[') if len(tf_word) == 2: f_word = tf_word[1] else: f_word = t_word[0] if f_word not in word_w: word_w.append(f_word) for f_word in word_w: if f_word in output.keys(): output[f_word] = output[f_word]+1 else: output[f_word] = 1 f.close() with open('outputWeight.txt', mode='w', encoding='UTF-8') as f: while output: minNum = sys.maxsize minName = "" for key, values in output.items(): if values < minNum: minNum = values minName = key f.write(minName+": "+str(minNum)+"\n") del output[minName] f.close() -

TF-IDF.py

import math def analysis(line): output = [] # 去除每行的换行符 t_line = line.strip('\n') # 按空格分开每个词 words = t_line.split(' ') for word in words[1:]: if word == "": continue # 按/分开标记和词 t_word = word.split('/') # 左方括号去除 tf_word = t_word[0].split('[') if len(tf_word) == 2: f_word = tf_word[1] else: f_word = t_word[0] # 若不在列表中 if f_word not in output: output.append(f_word) big_word1 = t_line.split('[') for i in range(1, len(big_word1)): big_word2 = big_word1[i].split(']')[0] words = big_word2.split(' ') big_word = "" for word in words: # 按/分开标记和词 t_word = word.split('/') big_word = big_word + t_word[0] # 若不在列表中 if big_word not in output: output.append(big_word) return output def getValue(target, reg): # 逆波兰 RPN = [] stack = [] stack.append("#") for i in reg: if i in target.keys(): RPN.append(target[i]) elif i == "(": stack.append(i) elif i == ")": while stack[-1] != "(": RPN.append(stack.pop()) stack.pop() elif i == "not": while stack[-1] == "not": RPN.append(stack.pop()) stack.append(i) elif i == "and": while stack[-1] == "not" or stack[-1] == "and": RPN.append(stack.pop()) stack.append(i) else: while stack[-1] == "not" or stack[-1] == "and" or stack[-1] == "or": RPN.append(stack.pop()) stack.append(i) while len(stack) != 1: RPN.append(stack.pop()) # 计算逆波兰式 ans = [] for i in RPN: if i == 0 or i == 1: ans.append(i) elif i == "not": ans.append(1 ^ ans.pop()) elif i == "and": op1 = ans.pop() op2 = ans.pop() ans.append(op1 and op2) elif i == "or": op1 = ans.pop() op2 = ans.pop() ans.append(op1 or op2) return ans[0] def getW(): word_list = {} with open('outputWeight.txt', mode='r', encoding='UTF-8')as f: for line in f.readlines(): if line is not None: word = line.split(':') word_list[word[0]]=word[1] f.close() return word_list def BMM(origin_sentence): MAX_WORD = 19 word_list = [] with open('output.txt', mode='r', encoding='UTF-8')as f: for line in f.readlines(): if line is not None: word = line.split(':') word_list.append(word[0]) f.close() ans_word = [] while len(origin_sentence) != 0: len_word = MAX_WORD while len_word > 0: # 从后读取最大词长度的数据,若该数据在字典中,则存入数组,并将其去除 if origin_sentence[-len_word:] in word_list: ans_word.append(origin_sentence[-len_word:]) len_sentence = len(origin_sentence) origin_sentence = origin_sentence[0:len_sentence - len_word] break # 不在词典中,则从后取词长度-1 else: len_word = len_word - 1 # 单词直接存入数组 if len_word == 0: if origin_sentence[-1:] != ' ': ans_word.append(origin_sentence[-1:]) len_sentence = len(origin_sentence) origin_sentence = origin_sentence[0:len_sentence - 1] return ans_word if __name__ == '__main__': w = getW() sentence = input("输入短语:") words = BMM(sentence) ans = [] # 计算总文档数(一行一文档) count = 0 for index, line in enumerate(open('语料库.txt', 'r', encoding='UTF-8')): count += 1 with open('语料库.txt', mode='r', encoding='UTF-8') as f: for line in f.readlines(): score = 0 if line is not None and line != "\n": out = analysis(line) for word in words: # TF-IDF计算 score = score + out.count(word) / len(out) * math.log(count*1.0/int(w[word])) ans.append((line, score)) f.close() new_ans = sorted(ans, key=lambda a: a[1], reverse=True) for i in range(10): print(new_ans[i])

-

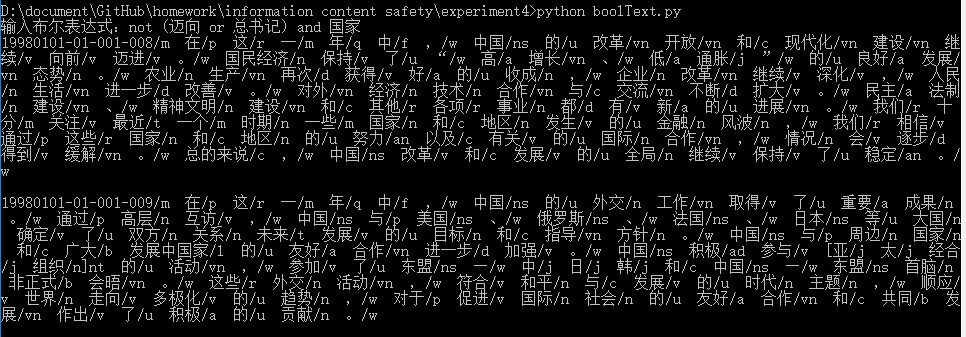

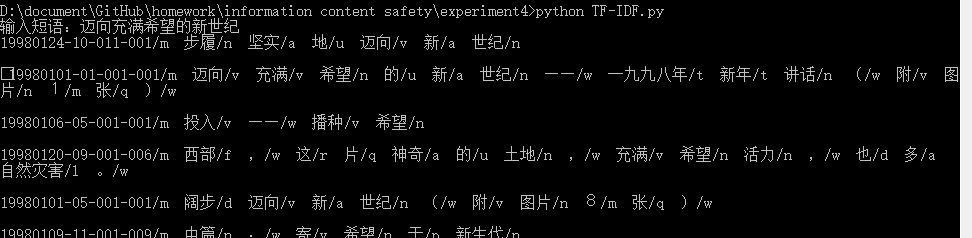

4.实现效果