Introduction

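MySQL Group Replication (MGR) literally means "group replication", but it is really a high-availability cluster architecture. For now it is supported only on MySQL 5.7 and MySQL 8.0.

Released by MySQL in December 2016, it is a new high-availability and scale-out solution that provides highly available, scalable and reliable MySQL cluster services.

It is also MySQL's own high-availability cluster architecture, built on the group replication concept and drawing heavily on MariaDB Galera Cluster and Percona XtraDB Cluster.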

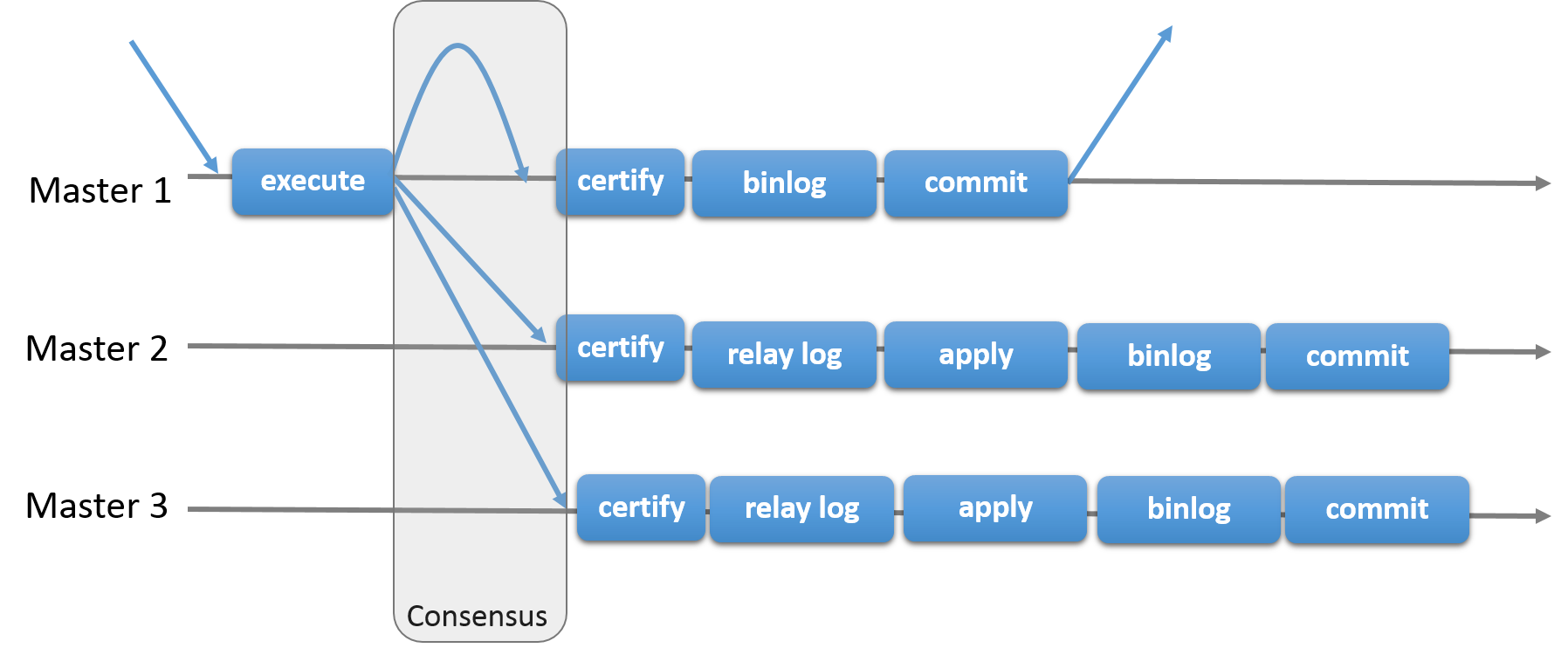

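MySQL Group Replication is built on XCom, a Paxos-based group communication layer. Because XCom keeps the database state machine consistent across nodes, MGR can guarantee transactional consistency between nodes both in theory and in practice.

It extends the ordinary master/slave replication model: several nodes together form one database cluster, a transaction may commit only after more than half of the nodes agree, and every node maintains its own copy of the database state machine, which keeps transactions consistent across nodes.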
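The majority rule reduces to simple arithmetic. The Python sketch below is only an illustration, and its function names are made up rather than part of MySQL. For a group of n members it computes how many members must agree before a transaction commits and how many can fail while the group stays available. It also enforces the 9-member limit mentioned in the installation section.

```python
MAX_GROUP_SIZE = 9  # a single MGR group can have at most 9 members


def majority(n: int) -> int:
    """Smallest number of members that is more than half of n."""
    if not 1 <= n <= MAX_GROUP_SIZE:
        raise ValueError(f"group size must be 1..{MAX_GROUP_SIZE}, got {n}")
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Members that may fail while the survivors still form a majority."""
    return n - majority(n)


def can_commit(agreeing: int, n: int) -> bool:
    """A transaction commits only when a majority of the n members agree."""
    return agreeing >= majority(n)


if __name__ == "__main__":
    for n in range(1, MAX_GROUP_SIZE + 1):
        print(f"{n} members: majority {majority(n)}, survives {tolerated_failures(n)} failure(s)")
```

The output shows that 3 members survive 1 failure and 5 members survive 2, but 4 members still survive only 1. This is why odd group sizes are the usual choice.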

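Features of group replication:

● High consistency

Group replication is built on native replication plus the Paxos protocol. It is delivered as a plugin and provides consistent data-safety guarantees;

● High fault tolerance

The group keeps working as long as a majority of its members has not failed. Failures are detected automatically. When different members contend for the same resources, no error occurs: the conflict is resolved first come, first served. Split-brain protection is built in;

● High scalability

Members are added and removed automatically. A new member synchronizes state from the others until it has caught up with them. When a member is removed, the others automatically update and maintain the group membership information;

● High flexibility

Both single-primary and multi-primary modes are available. In single-primary mode a primary is elected automatically and all updates go through it;

in multi-primary mode, all servers can process updates at the same time.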

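Advantages:

High consistency: group replication is based on native replication and the Paxos protocol.

High fault tolerance: failures are detected automatically. A crashed member is removed from the group and the others keep serving, much like a ZooKeeper ensemble. Resource contention between members is resolved first come, first served, and split-brain protection is built in.

High scalability: members can be added or removed online at any time. A new member is synchronized automatically until it matches the others, and group membership is maintained automatically.

High flexibility: it installs as a plugin (the .so file ships with MySQL from 5.7.17 onward) and offers single-primary and multi-primary modes. In single-primary mode only the primary accepts writes. The other members run with super_read_only and can only be read. If the primary fails, a new one is elected automatically.

Disadvantages:

It is still quite new and not fully stable, and its performance currently trails PXC slightly. It is also very sensitive to network stability, so at the very least keep all members in the same data center.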

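Use cases:

1. Elastic replicated database environments

Group replication can add database instances to the cluster and remove them flexibly.

2. Highly available database environments

Group replication tolerates instance failures. As long as a majority of the servers in the cluster is available, the database service as a whole stays available.

3. Replacing a traditional master/slave replication setup

Prerequisites for group replication:

1. Only the InnoDB storage engine is supported

2. Every table must have a primary key

3. Only IPv4 networks are supported (MySQL 5.7; IPv6 support was added in MySQL 8.0.14)

4. High network bandwidth (typically a gigabit internal network) and low network latency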

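Limitations of group replication:

1. Replication Event Checksums

Because of a design limitation in the code, group replication cannot yet use binlog event checksums. To use group replication, set binlog_checksum=NONE (MySQL 8.0.21 lifted this restriction).

2. Gap Locks

The certification process does not take gap locks into account. In MySQL, gap locks exist to prevent phantom reads.

3. Table Locks and Named Locks

The certification process does not take table locks or named locks (GET_LOCK()) into account.

4. SERIALIZABLE Isolation Level

Multi-primary groups do not support the SERIALIZABLE isolation level.

5. Concurrent DDL versus DML Operations

In multi-primary mode, concurrent DDL and DML statements against the same object on different members are not supported.

6. Foreign Keys with Cascading Constraints

In multi-primary mode, foreign keys with cascading constraints are not supported.

7. Very Large Transactions

Very large transactions are not supported. A transaction whose data cannot be copied between group members within roughly 5 seconds can cause group communication to fail.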

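Group replication protocol:

MGR uses the Paxos algorithm to solve the consensus problem. Here is how I understand it:

Picture a court hearing:

Everyone in the audience is a proposer who can put forward opinions and suggestions, but the decision is made by the judges. There can be one judge or several.

The proposers race to reach the judges. Each proposal carries a unique ID. If a proposer reaches a majority of the judges (more than half), its proposal can be accepted.

Each judge records the proposal's ID and compares it with the highest ID it has seen before. If the new ID is larger, the proposal is taken up, and the judges then decide on it by majority vote.

If the ID is less than or equal to the old one, the judge rejects the proposal. The proposer then increases its ID and proposes again, repeating until it is accepted.

For any given decision, only one proposal can be chosen.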
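The court analogy maps onto the two phases of single-decree Paxos: the "judges" are acceptors and the unique ID is the proposal number. The Python sketch below assumes in-memory judges, no message loss and a single decision. It illustrates only the ID comparison and the majority rule, and says nothing about how XCom is actually implemented. Judge and propose are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Judge:
    """A Paxos acceptor: one 'judge' in the court analogy."""
    promised: int = 0                            # highest proposal ID promised so far
    accepted: Optional[Tuple[int, str]] = None   # (ID, value) this judge has accepted, if any

    def prepare(self, pid: int) -> Tuple[bool, Optional[Tuple[int, str]]]:
        # Phase 1: promise only if this ID is larger than every ID seen before,
        # and report any value already accepted. Equal or smaller IDs are rejected.
        if pid > self.promised:
            self.promised = pid
            return True, self.accepted
        return False, None

    def accept(self, pid: int, value: str) -> bool:
        # Phase 2: accept unless a larger ID has been promised in the meantime
        # (the same ID that was just promised is accepted).
        if pid >= self.promised:
            self.promised = pid
            self.accepted = (pid, value)
            return True
        return False


def propose(judges: List[Judge], value: str, pid: int, max_rounds: int = 20) -> Tuple[int, str]:
    """Keep raising the proposal ID until a majority of judges accepts a value."""
    need = len(judges) // 2 + 1
    for _ in range(max_rounds):
        replies = [j.prepare(pid) for j in judges]
        granted = [prev for ok, prev in replies if ok]
        if len(granted) >= need:
            # A value that may already have been chosen must be kept, so adopt the
            # accepted value with the highest ID instead of our own.
            prior = [p for p in granted if p is not None]
            chosen = max(prior)[1] if prior else value
            if sum(j.accept(pid, chosen) for j in judges) >= need:
                return pid, chosen
        pid += 1  # rejected: increase the ID and try again
    raise RuntimeError("no value chosen within max_rounds")


if __name__ == "__main__":
    judges = [Judge() for _ in range(3)]
    judges[0].promised = judges[1].promised = 5  # two judges have already seen ID 5
    print(propose(judges, "t1", pid=1))  # (6, 't1'): IDs 1..5 are rejected first
    print(propose(judges, "t2", pid=7))  # (7, 't1'): the chosen value stays 't1'
```

The second call shows the "only one proposal is chosen" rule. A later proposer with a higher ID still ends up re-proposing the value that a majority has already accepted.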

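MGR conflict detection

In multi-primary mode, a transaction is not checked in advance while it executes. At commit time, however, the members communicate to reach a decision on whether the transaction may commit. When several members modify the same row, conflict detection runs at commit and the transaction that commits first wins. For example, if transactions t1 and t2 both modify the same row and t1 comes first, t1 passes conflict detection and commits, while t2 is rolled back. With multi-point writes, concurrent updates to the same row therefore cause many rollbacks and poor performance. For this reason MySQL recommends running modifications of the same row on the same member. That member's local locks then make the transactions wait for each other instead of rolling back, which improves performance.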
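Below is a simplified Python model of this first-committer-wins certification. It assumes transactions reach every member in the same total order, which Paxos provides, and that each transaction carries its write set plus the version of the data it saw when it executed. Real MGR hashes the write-set keys and tracks versions with GTID sets. Txn, Certifier and deliver are illustrative names only.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Txn:
    name: str
    write_set: FrozenSet[str]  # keys of the rows the transaction changed
    snapshot: int              # certified version the transaction had seen when it executed


@dataclass
class Certifier:
    version: int = 0                                          # transactions certified so far
    last_write: Dict[str, int] = field(default_factory=dict)  # row key -> version that last wrote it

    def certify(self, txn: Txn) -> bool:
        # Conflict: a row in the write set was changed by a transaction this one had not seen.
        if any(self.last_write.get(row, 0) > txn.snapshot for row in txn.write_set):
            return False  # certification fails -> roll back
        self.version += 1
        for row in txn.write_set:
            self.last_write[row] = self.version
        return True       # certification passes -> commit


def deliver(ordered: List[Txn]) -> Dict[str, str]:
    """Run certification over transactions in the agreed global order."""
    cert = Certifier()
    return {t.name: ("commit" if cert.certify(t) else "rollback") for t in ordered}


if __name__ == "__main__":
    t1 = Txn("t1", frozenset({"ttt:1"}), snapshot=0)  # executed on node1
    t2 = Txn("t2", frozenset({"ttt:1"}), snapshot=0)  # same row, executed concurrently on node2
    t3 = Txn("t3", frozenset({"ttt:2"}), snapshot=0)  # a different row
    print(deliver([t1, t2, t3]))  # {'t1': 'commit', 't2': 'rollback', 't3': 'commit'}
```

Every member certifies the same ordered stream with the same deterministic rule. As a result, all members reach the same commit/rollback decision for t2 without exchanging further messages.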

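How a new member joins MGR

When a new member asks to join the group, a View_change event is generated in the group. Every ONLINE member writes this event to its binlog, and the joining member records the same View_change event. The joining member then goes through the following two phases.

- Phase 1: the new member picks one of the ONLINE members as the donor. It pulls the donor's binlog over a standard asynchronous replication channel and applies it. At the same time it receives the transactions currently being exchanged in the group and buffers them in a queue. Once the binlog has been applied up to the View_change event, the asynchronous channel is closed and phase 2 begins.

- Phase 2: the new member processes the group transactions buffered in the queue. When the queue has been drained (its length is 0), the member's state in the group becomes ONLINE.

If any error occurs in phase 1, the new member automatically picks another ONLINE member as donor. If that fails too, it keeps trying the remaining ONLINE members until all of them have been tried. If it still fails, it sleeps for a while and then retries. The sleep interval and the number of retries are controlled by group_replication_recovery_reconnect_interval and group_replication_recovery_retry_count.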
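The donor fail-over loop can be sketched as follows. This is a simplified Python model, not the plugin's code. try_donor is a hypothetical callback that stands in for one recovery attempt. retry_count and reconnect_interval play the roles of group_replication_recovery_retry_count and group_replication_recovery_reconnect_interval (defaults 10 and 60 seconds). The random donor order is an assumption.

```python
import random
import time
from typing import Callable, List, Optional


def recover(
    donors: List[str],
    try_donor: Callable[[str], bool],
    retry_count: int = 10,
    reconnect_interval: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Return the donor recovery succeeded from, or None if every attempt failed."""
    if not donors:
        return None
    attempts = 0
    while attempts < retry_count:
        # One round: try the ONLINE members one after another (random order is an assumption).
        for donor in random.sample(donors, len(donors)):
            if attempts >= retry_count:
                break
            attempts += 1
            if try_donor(donor):
                return donor
        # Every donor failed in this round: sleep before starting the next round.
        if attempts < retry_count:
            sleep(reconnect_interval)
    return None  # recovery failed; the joining member leaves the group
```

Passing sleep as a parameter keeps the sketch testable without actually waiting a minute per round.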

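In phase 1, the point where binlog application starts is determined by the new member's gtid_executed, and the end point by the View_change event. Phase 1 uses traditional asynchronous binlog replication, so a new member built from an old backup may find that the binlogs no longer connect. It then stays in the RECOVERING state, and after a number of retries at set intervals recovery fails and the member leaves the group. In the other case, the binlogs do connect but there are so many of them that applying them takes a very long time. The phase 2 queue may also grow very large, and the whole recovery takes far too long. It is therefore recommended to build a new member from the most recent full backup.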
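Both failure modes come down to set arithmetic on GTIDs. Below is a toy Python model that assumes a single group UUID, so a GTID set can be a plain set of transaction numbers (real GTID sets span several UUIDs and are stored as intervals). The function names are made up. The model shows why an old backup gets stuck when the donor has already purged binlogs it needs, and how a recent backup shrinks the phase-1 backlog.

```python
from typing import Set


def unrecoverable(joiner_executed: Set[int], donor_executed: Set[int], donor_purged: Set[int]) -> Set[int]:
    """Transactions the joiner still needs but the donor has already purged from its binlog."""
    needed = donor_executed - joiner_executed  # phase 1 starts after the joiner's gtid_executed
    return needed & donor_purged


def backlog(joiner_executed: Set[int], donor_executed: Set[int]) -> int:
    """Number of transactions the joiner must replay from the donor's binlog."""
    return len(donor_executed - joiner_executed)


if __name__ == "__main__":
    donor_executed = set(range(1, 1001))  # donor has executed transactions 1..1000
    donor_purged = set(range(1, 801))     # ...but its binlog now only holds 801..1000
    old_backup = set(range(1, 501))       # joiner restored from an old backup
    new_backup = set(range(1, 951))       # joiner restored from a recent backup
    print(len(unrecoverable(old_backup, donor_executed, donor_purged)))  # 300 -> stuck in RECOVERING
    print(backlog(new_backup, donor_executed))                           # 50 transactions to replay
```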

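Installation

1. Environment plan

| IP address | MySQL version | MySQL port | server-id | MGR port | OS |
| --- | --- | --- | --- | --- | --- |
| 192.168.202.174 | MySQL 5.7.25 | 3306 | 174 | 24901 | CentOS 7.5 |
| 192.168.202.175 | MySQL 5.7.25 | 3306 | 175 | 24901 | CentOS 7.5 |
| 192.168.202.176 | MySQL 5.7.25 | 3306 | 176 | 24901 | CentOS 7.5 |

In multi-primary mode it is best to have three or more members. For single-primary mode it depends on your needs, but a group can have at most 9 members. Keep the servers' hardware as similar as possible: as with PXC, the weakest member drags the whole cluster down (the "shortest stave of the barrel" effect).

Note in particular that the MySQL service port and the MGR port are two different things and must be kept apart.

Each server also needs a distinct server-id, which plain master/slave replication already requires.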

2. Server configuration

Disable SELinux (the change in /etc/selinux/config takes effect after a reboot):

vim /etc/selinux/config

SELINUX=disabled

Add the hostnames on every node:

vim /etc/hosts

192.168.202.174 node1

192.168.202.175 node2

192.168.202.176 node3

Open the firewall ports (3306 for MySQL, 24901 for MGR):

firewall-cmd --zone=public --add-port=3306/tcp --permanent

firewall-cmd --zone=public --add-port=24901/tcp --permanent

firewall-cmd --reload

firewall-cmd --zone=public --list-ports
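After opening the ports, it is worth confirming from each node that the others can be reached. The following is a minimal Python sketch that uses only the standard socket module; the check_ports name is made up for illustration. Keep in mind that the MGR port only accepts connections once group replication is running on that node. Before START GROUP_REPLICATION, a closed 24901 therefore does not necessarily point to a firewall problem.

```python
import socket
from typing import Dict, Iterable, List, Tuple


def check_ports(hosts: Iterable[str], ports: Iterable[int], timeout: float = 2.0) -> Dict[Tuple[str, int], bool]:
    """Attempt a TCP connection to every host:port pair; True means something accepted it."""
    port_list: List[int] = list(ports)
    results: Dict[Tuple[str, int], bool] = {}
    for host in hosts:
        for port in port_list:
            try:
                with socket.create_connection((host, port), timeout=timeout):
                    results[(host, port)] = True
            except OSError:  # refused, timed out, unreachable, ...
                results[(host, port)] = False
    return results
```

For this cluster you would run check_ports(["192.168.202.174", "192.168.202.175", "192.168.202.176"], [3306, 24901]) on each node.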

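3. Installation and deployment

For how to install MySQL 5.7.25 itself, see https://www.cnblogs.com/EikiXu/p/10595093.html

Here I go straight to installing MGR. As mentioned above, from MySQL 5.7.17 onward the MGR plugin ships with the server but is not installed. It works the same way as the semi-synchronous replication plugins, so it is not available by default.

Every server in the cluster must have the MGR plugin installed for the feature to work.

As you can see, it is not installed at first: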

    (root@localhost) 11:42:26 [(none)]> show plugins;
    +----------------------------+----------+--------------------+---------+---------+
    | Name                       | Status   | Type               | Library | License |
    +----------------------------+----------+--------------------+---------+---------+
    | binlog                     | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | mysql_native_password      | ACTIVE   | AUTHENTICATION     | NULL    | GPL     |
    | sha256_password            | ACTIVE   | AUTHENTICATION     | NULL    | GPL     |
    | InnoDB                     | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | INNODB_TRX                 | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_LOCKS               | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_LOCK_WAITS          | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMP                 | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMP_RESET           | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMPMEM              | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMPMEM_RESET        | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMP_PER_INDEX       | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_CMP_PER_INDEX_RESET | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_BUFFER_PAGE         | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_BUFFER_PAGE_LRU     | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_BUFFER_POOL_STATS   | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_TEMP_TABLE_INFO     | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_METRICS             | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_DEFAULT_STOPWORD | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_DELETED          | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_BEING_DELETED    | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_CONFIG           | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_INDEX_CACHE      | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_FT_INDEX_TABLE      | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_TABLES          | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_TABLESTATS      | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_INDEXES         | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_COLUMNS         | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_FIELDS          | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_FOREIGN         | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_FOREIGN_COLS    | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_TABLESPACES     | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_DATAFILES       | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | INNODB_SYS_VIRTUAL         | ACTIVE   | INFORMATION SCHEMA | NULL    | GPL     |
    | MyISAM                     | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | MRG_MYISAM                 | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | MEMORY                     | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | CSV                        | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | PERFORMANCE_SCHEMA         | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | BLACKHOLE                  | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | partition                  | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | ARCHIVE                    | ACTIVE   | STORAGE ENGINE     | NULL    | GPL     |
    | FEDERATED                  | DISABLED | STORAGE ENGINE     | NULL    | GPL     |
    | ngram                      | ACTIVE   | FTPARSER           | NULL    | GPL     |
    +----------------------------+----------+--------------------+---------+---------+

The MGR variables are not loaded either. Only one unrelated variable matches:

    (root@localhost) 11:43:27 [(none)]> show variables like 'group%';
    +----------------------+-------+
    | Variable_name        | Value |
    +----------------------+-------+
    | group_concat_max_len | 1024  |
    +----------------------+-------+
    1 row in set (0.01 sec)

Next, check the current plugin directory:

    (root@localhost) 11:43:49 [(none)]> show variables like 'plugin_dir';
    +---------------+------------------------------+
    | Variable_name | Value                        |
    +---------------+------------------------------+
    | plugin_dir    | /usr/local/mysql/lib/plugin/ |
    +---------------+------------------------------+
    1 row in set (0.00 sec)

Then check whether the MGR plugin we need is there:

    [root@node1 mysqldata]# ll /usr/local/mysql/lib/plugin/ |grep group_replication
    -rwxr-xr-x. 1 mysql mysql 16393641 Dec 21 19:11 group_replication.so

Finally, go back into MySQL and install the plugin:

    (root@localhost) 11:45:25 [(none)]> install PLUGIN group_replication SONAME 'group_replication.so';
    Query OK, 0 rows affected (0.10 sec)

Now it shows up:

    (root@localhost) 11:45:26 [(none)]> show plugins;
    +----------------------------+----------+--------------------+----------------------+---------+
    | Name                       | Status   | Type               | Library              | License |
    +----------------------------+----------+--------------------+----------------------+---------+
    | binlog                     | ACTIVE   | STORAGE ENGINE     | NULL                 | GPL     |
    | mysql_native_password      | ACTIVE   | AUTHENTICATION     | NULL                 | GPL     |
    | sha256_password            | ACTIVE   | AUTHENTICATION     | NULL                 | GPL     |
    ........
    | ARCHIVE                    | ACTIVE   | STORAGE ENGINE     | NULL                 | GPL     |
    | FEDERATED                  | DISABLED | STORAGE ENGINE     | NULL                 | GPL     |
    | ngram                      | ACTIVE   | FTPARSER           | NULL                 | GPL     |
    | group_replication          | ACTIVE   | GROUP REPLICATION  | group_replication.so | GPL     |
    +----------------------------+----------+--------------------+----------------------+---------+
    45 rows in set (0.00 sec)

And now there are plenty of MGR variables:

    (root@localhost) 11:45:47 [(none)]> show variables like 'group%';
    +----------------------------------------------------+----------------------+
    | Variable_name                                      | Value                |
    +----------------------------------------------------+----------------------+
    | group_concat_max_len                               | 1024                 |
    | group_replication_allow_local_disjoint_gtids_join  | OFF                  |
    | group_replication_allow_local_lower_version_join   | OFF                  |
    | group_replication_auto_increment_increment         | 7                    |
    | group_replication_bootstrap_group                  | OFF                  |
    | group_replication_components_stop_timeout          | 31536000             |
    | group_replication_compression_threshold            | 1000000              |
    | group_replication_enforce_update_everywhere_checks | OFF                  |
    | group_replication_exit_state_action                | READ_ONLY            |
    | group_replication_flow_control_applier_threshold   | 25000                |
    | group_replication_flow_control_certifier_threshold | 25000                |
    | group_replication_flow_control_mode                | QUOTA                |
    | group_replication_force_members                    |                      |
    | group_replication_group_name                       |                      |
    | group_replication_group_seeds                      |                      |
    | group_replication_gtid_assignment_block_size       | 1000000              |
    | group_replication_ip_whitelist                     | AUTOMATIC            |
    | group_replication_local_address                    |                      |
    | group_replication_member_weight                    | 50                   |
    | group_replication_poll_spin_loops                  | 0                    |
    | group_replication_recovery_complete_at             | TRANSACTIONS_APPLIED |
    | group_replication_recovery_reconnect_interval      | 60                   |
    | group_replication_recovery_retry_count             | 10                   |
    | group_replication_recovery_ssl_ca                  |                      |
    | group_replication_recovery_ssl_capath              |                      |
    | group_replication_recovery_ssl_cert                |                      |
    | group_replication_recovery_ssl_cipher              |                      |
    | group_replication_recovery_ssl_crl                 |                      |
    | group_replication_recovery_ssl_crlpath             |                      |
    | group_replication_recovery_ssl_key                 |                      |
    | group_replication_recovery_ssl_verify_server_cert  | OFF                  |
    | group_replication_recovery_use_ssl                 | OFF                  |
    | group_replication_single_primary_mode              | ON                   |
    | group_replication_ssl_mode                         | DISABLED             |
    | group_replication_start_on_boot                    | ON                   |
    | group_replication_transaction_size_limit           | 0                    |
    | group_replication_unreachable_majority_timeout     | 0                    |
    +----------------------------------------------------+----------------------+
    37 rows in set (0.01 sec)

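Some of the values above were configured beforehand. They are explained in detail below.

4. Configuring the MGR environment

Anyone familiar with MySQL knows that many settings can be changed online with SET GLOBAL, so you are not limited to the configuration file. Below I explain the parameters and give the commands.

Suppose we first write everything into my.cnf:

First of all, MGR requires GTIDs, so GTIDs must be enabled. When set in my.cnf this only takes effect after a restart, so plan for it. (MySQL 5.7.6 and later can also enable GTIDs online, but only through a step-by-step procedure.)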

    # Enable GTIDs (required)
    gtid_mode=on
    # Enforce GTID consistency
    enforce-gtid-consistency=on

Next, some general settings to change:

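    # binlog format: MGR requires ROW (and ROW is the better choice even without MGR)
    binlog_format=row
    # server-id must be unique
    server-id = 174
    # MGR uses optimistic locking, so the documentation recommends READ COMMITTED to reduce lock granularity
    transaction_isolation = READ-COMMITTED
    # During failure recovery the members check each other's binlog data, so each server must also
    # log the transactions it receives from other members; GTIDs tell whether one was already executed
    log-slave-updates=1
    # binlog checksum: 5.6 and later default to CRC32, older versions to NONE, but MGR requires NONE
    binlog_checksum=NONE
    # For safety, MGR requires the replication metadata to be stored in tables; otherwise it reports an error
    master_info_repository=TABLE
    # Goes together with the setting above
    relay_log_info_repository=TABLE
    # Number of parallel applier (SQL) threads; choose N for your workload
    slave-parallel-workers=N
    # Parallel replication based on group commit
    slave-parallel-type=LOGICAL_CLOCK
    # Commit the transactions applied from the relay log in their original order
    slave-preserve-commit-order=1
    # Adjust to your installation
    plugin_dir=/usr/local/mysql/lib/plugin
    plugin_load = "rpl_semi_sync_master=semisync_master.so;rpl_semi_sync_slave=semisync_slave.so;group_replication.so"
    report_host=192.168.202.174
    report_port=3306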
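A typo in my.cnf is easy to miss, so before starting group replication it helps to confirm that the server really runs with these values. The following is a small Python sketch. It assumes you have already fetched SHOW GLOBAL VARIABLES into a dict with whatever client you use. REQUIRED lists only the settings this article treats as mandatory, under their MySQL 5.7 names; check_prerequisites is a made-up helper.

```python
from typing import Dict, List

# Values that MGR on MySQL 5.7 needs, as reported by SHOW GLOBAL VARIABLES
REQUIRED: Dict[str, str] = {
    "gtid_mode": "ON",
    "enforce_gtid_consistency": "ON",
    "binlog_format": "ROW",
    "log_slave_updates": "ON",
    "binlog_checksum": "NONE",
    "master_info_repository": "TABLE",
    "relay_log_info_repository": "TABLE",
}


def check_prerequisites(variables: Dict[str, str]) -> List[str]:
    """Return one message for every required setting that is missing or wrong."""
    problems = []
    for name, expected in REQUIRED.items():
        actual = variables.get(name)
        if actual is None:
            problems.append(f"{name} is not set (expected {expected})")
        elif actual.upper() != expected:  # compare case-insensitively ('row' and 'ROW' are equal)
            problems.append(f"{name}={actual} (expected {expected})")
    return problems
```

An empty list means the common settings are in place. Any message points at the option to fix in my.cnf, for example binlog_checksum=CRC32, which triggers the error 3092 described in the troubleshooting section.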

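Finally, the settings specific to MGR. They carry the loose- prefix, so the server still starts even if the group_replication plugin has not been loaded yet: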

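    # Hash algorithm used to extract transaction write sets; the documentation recommends XXHASH64
    transaction_write_set_extraction = XXHASH64
    # Name of this group, in UUID format. It must not be the UUID of any server's GTIDs in the cluster; generate a new one with uuidgen.
    # It distinguishes the different groups on the internal network and is also the UUID part of this group's GTIDs
    loose-group_replication_group_name = 'cc5e2627-2285-451f-86e6-0be21581539f'
    # IP allowlist. The default (AUTOMATIC) admits only localhost and the private subnets of this host's interfaces; set it as your security policy requires
    loose-group_replication_ip_whitelist = '127.0.0.1/8,192.168.202.0/24'
    # Whether group replication starts together with the server. Not recommended, in case special situations during failure recovery compromise data correctness
    loose-group_replication_start_on_boot = OFF
    # Local MGR address and port (host:port). This is the MGR port, not the database port
    loose-group_replication_local_address = '192.168.202.174:24901'
    # Addresses and ports of the group members (seeds) contacted when joining. Again MGR ports, not database ports
    loose-group_replication_group_seeds = '192.168.202.174:24901,192.168.202.175:24901,192.168.202.176:24901'
    # Bootstrap mode: only for building or rebuilding the group for the first time, and only on one member
    loose-group_replication_bootstrap_group = OFF
    # Single-primary mode. If ON, the group elects one primary that serves reads and writes and the others only serve reads; OFF means multi-primary mode
    loose-group_replication_single_primary_mode = off
    # In multi-primary mode, enforce strict checks on every member; turn it off when not running multi-primary
    loose-group_replication_enforce_update_everywhere_checks = on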
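Between members, only server-id, report_host and local_address change, while group_seeds lists every member. Generating the options from the plan table avoids copy-paste mistakes, such as a seed list that repeats the same IP three times. The following is a Python sketch that assumes the plan table above. mgr_options and render are hypothetical helpers, and their output still has to go into the [mysqld] section of each node's my.cnf.

```python
from typing import Dict, List

GROUP_NAME = "cc5e2627-2285-451f-86e6-0be21581539f"  # from uuidgen, shared by all members
MGR_PORT = 24901
NODES = ["192.168.202.174", "192.168.202.175", "192.168.202.176"]


def mgr_options(ip: str, nodes: List[str] = NODES, port: int = MGR_PORT) -> Dict[str, str]:
    """my.cnf options for one member; only server-id, report_host and local_address differ."""
    seeds = ",".join(f"{n}:{port}" for n in nodes)  # every member lists all members
    return {
        "server-id": ip.rsplit(".", 1)[1],  # last octet, as in the plan table
        "report_host": ip,
        "transaction_write_set_extraction": "XXHASH64",
        "loose-group_replication_group_name": f"'{GROUP_NAME}'",
        "loose-group_replication_start_on_boot": "OFF",
        "loose-group_replication_local_address": f"'{ip}:{port}'",
        "loose-group_replication_group_seeds": f"'{seeds}'",
        "loose-group_replication_bootstrap_group": "OFF",
        "loose-group_replication_single_primary_mode": "OFF",
        "loose-group_replication_enforce_update_everywhere_checks": "ON",
    }


def render(options: Dict[str, str]) -> str:
    """Format options as 'key = value' lines for my.cnf."""
    return "\n".join(f"{key} = {value}" for key, value in options.items())


if __name__ == "__main__":
    for ip in NODES:
        print(f"# {ip}\n{render(mgr_options(ip))}\n")
```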

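A closer look at the key parameters:

group_replication_group_name: must be its own UUID, different from the GTID UUIDs of every other database in the cluster. On Linux, uuidgen generates a new UUID.

group_replication_ip_whitelist: the IP allowlist is a security setting, so allowing an entire internal network is not really appropriate. I set it this way only for convenience. The parameter can be changed dynamically with SET GLOBAL, which is handy.

group_replication_start_on_boot: there are two reasons not to start group replication together with the server. First, in extreme failure-recovery situations it could compromise data correctness. Second, it could interfere with operations that add or remove members.

group_replication_local_address: note that this port is not the database service port but the MGR port. It must not already be in use, because the members use it to communicate with each other.

group_replication_group_seeds: the addresses and ports of the group members that this member contacts when it joins. These are also MGR ports. The value can be changed dynamically with SET GLOBAL when adding or removing members.

group_replication_bootstrap_group: only one server bootstraps the group, so the other servers in the cluster do not need this parameter. Leave it at the default OFF, turn it on with SET GLOBAL only when needed, and turn it off again right after bootstrapping.

group_replication_single_primary_mode: depends on whether you want multi-primary or single-primary mode. Single-primary mode resembles semi-synchronous replication but is stricter: the primary reports a write as successful only after more than half of the group has received the transaction and agreed on it, so data consistency is higher. Financial services usually prefer this mode. Multi-primary mode looks faster, but transaction conflicts are also more likely. MGR resolves conflicts first come, first served, but they still cannot be ignored and can be fatal under high concurrency. So in multi-primary mode it is still advisable to separate the workload, for example one address per database, keeping writes logically apart to avoid conflicts.

group_replication_enforce_update_everywhere_checks: in single-primary mode there is no possibility of writes on several primaries at once, so this check must be OFF. In multi-primary mode it should be ON; otherwise data may become inconsistent.

To configure the same settings dynamically with SET GLOBAL instead: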

    set global transaction_write_set_extraction='XXHASH64';
    set global group_replication_start_on_boot=OFF;
    set global group_replication_bootstrap_group=OFF;
    set global group_replication_group_name='cc5e2627-2285-451f-86e6-0be21581539f';
    set global group_replication_local_address='192.168.202.174:24901';
    set global group_replication_group_seeds='192.168.202.174:24901,192.168.202.175:24901,192.168.202.176:24901';
    set global group_replication_ip_whitelist='127.0.0.1/8,192.168.202.0/24';
    set global group_replication_single_primary_mode=off;
    set global group_replication_enforce_update_everywhere_checks=on;

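Note that the configuration of all database servers in the same group must match. Otherwise you will get errors or strange behavior. The exceptions, of course, are server-id and each server's own IP address and port.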
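With three or more members, a quick diff of their option files catches most of these mismatches. The following Python sketch assumes each node's options have already been parsed into a dict, for example with the standard configparser module. PER_NODE lists the options that are allowed to differ, and inconsistent_options is a made-up helper. Values are compared as plain strings, so normalize case first if your files mix off and OFF.

```python
from typing import Dict, List

# Options that are supposed to differ between members
PER_NODE = {"server-id", "report_host", "loose-group_replication_local_address"}


def inconsistent_options(configs: Dict[str, Dict[str, str]]) -> List[str]:
    """Names of options whose value differs between members, or that some member lacks."""
    names = set().union(*(cfg.keys() for cfg in configs.values())) - PER_NODE
    bad = []
    for name in sorted(names):
        values = {cfg.get(name) for cfg in configs.values()}  # None if a member lacks it
        if len(values) > 1:
            bad.append(name)
    return bad
```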

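Once everything is configured, you can start the group, but the startup order matters.

5. Starting the MGR cluster

As mentioned above, startup order matters: one server has to bootstrap the group before the others can join it.

In single-primary mode, the intended primary must be started first and bootstrap the group; otherwise it will not be the primary.

If something goes wrong, check the MySQL error log (mysql.err); it usually contains a matching error message.

Back to the main topic. Suppose 192.168.202.174 bootstraps the group. First log in to the local MySQL server: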

    # Keep the following user-management statements out of the binary log
    mysql> SET SQL_LOG_BIN=0;
    # Start bootstrapping. Only this server does this; skip this step on the other two
    mysql> SET GLOBAL group_replication_bootstrap_group=ON;
    # Create the replication user and grant it privileges; every server in the cluster needs this
    mysql> create user 'sroot'@'%' identified by '123123';
    mysql> grant REPLICATION SLAVE on *.* to 'sroot'@'%' with grant option;
    # Clear all old GTID information to avoid conflicts, then re-enable binary logging
    mysql> reset master;
    mysql> SET SQL_LOG_BIN=1;
    # Set the credentials for the recovery channel (the user just created); the syntax differs slightly from ordinary master/slave replication
    mysql> CHANGE MASTER TO MASTER_USER='sroot', MASTER_PASSWORD='123123' FOR CHANNEL 'group_replication_recovery';
    # Start MGR
    mysql> start group_replication;
    # Check that it started: ONLINE means success
    mysql> SELECT * FROM performance_schema.replication_group_members;
    +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+
    | CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+
    | group_replication_applier | a29a1b91-4908-11e8-848b-08002778eea7 | ubuntu      | 3308        | ONLINE       | PRIMARY     | 8.0.11         |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+
    1 row in set (0.02 sec)
    # Bootstrapping can now be turned off
    mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Then move on to the other two servers, 192.168.202.175 and 192.168.202.176, and again log in to the local MySQL server:

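    # No bootstrapping here; the rest is largely the same
    # The user still has to be created and granted
    mysql> SET SQL_LOG_BIN=0;
    mysql> create user 'sroot'@'%' identified by '123123';
    mysql> grant REPLICATION SLAVE on *.* to 'sroot'@'%' with grant option;
    # Clear all old GTID information to avoid conflicts, then re-enable binary logging
    mysql> reset master;
    mysql> SET SQL_LOG_BIN=1;
    # Set the credentials for the recovery channel (the user just created); the syntax differs slightly from ordinary master/slave replication
    mysql> CHANGE MASTER TO MASTER_USER='sroot', MASTER_PASSWORD='123123' FOR CHANNEL 'group_replication_recovery';
    # Start MGR
    mysql> start group_replication;
    # Check that it started: ONLINE means success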
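The two scripts differ only in the bootstrap lines. That difference, and the order it implies, can be captured in a tiny generator. The following Python sketch only builds the statement list and executes nothing; start_statements is a hypothetical name. It uses the article's sroot/123123 account and drops WITH GRANT OPTION, which the recovery channel does not need.

```python
from typing import List


def start_statements(bootstrap: bool, user: str = "sroot", password: str = "123123") -> List[str]:
    """SQL to run on one member, in order; only the first member bootstraps the group."""
    stmts = ["SET SQL_LOG_BIN=0"]  # keep user management out of the binlog
    if bootstrap:
        stmts.append("SET GLOBAL group_replication_bootstrap_group=ON")
    stmts += [
        f"CREATE USER '{user}'@'%' IDENTIFIED BY '{password}'",
        f"GRANT REPLICATION SLAVE ON *.* TO '{user}'@'%'",
        "RESET MASTER",
        "SET SQL_LOG_BIN=1",
        f"CHANGE MASTER TO MASTER_USER='{user}', MASTER_PASSWORD='{password}' "
        "FOR CHANNEL 'group_replication_recovery'",
        "START GROUP_REPLICATION",
    ]
    if bootstrap:
        stmts.append("SET GLOBAL group_replication_bootstrap_group=OFF")
    return stmts
```

For example, print(";\n".join(start_statements(bootstrap=True)) + ";") produces the script for 192.168.202.174, and bootstrap=False produces the script for the other two members.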

    (root@localhost) 13:40:12 [(none)]> SELECT * FROM performance_schema.replication_group_members;
    +---------------------------+--------------------------------------+-------------+-------------+--------------+
    | CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+
    | group_replication_applier | dbab147e-5a88-11e9-b9c3-000c297c0a9d | node1       | 3306        | ONLINE       |
    | group_replication_applier | f8d7e601-5a88-11e9-bc6b-000c29f334ce | node2       | 3306        | ONLINE       |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+

Likewise, on 192.168.202.176 the result should look like this:

    (root@localhost) 13:42:04 [(none)]> SELECT * FROM performance_schema.replication_group_members;
    +---------------------------+--------------------------------------+-------------+-------------+--------------+
    | CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+
    | group_replication_applier | 0c22257b-5a89-11e9-bebd-000c29741024 | node3       | 3306        | ONLINE       |
    | group_replication_applier | dbab147e-5a88-11e9-b9c3-000c297c0a9d | node1       | 3306        | ONLINE       |
    | group_replication_applier | f8d7e601-5a88-11e9-bc6b-000c29f334ce | node2       | 3306        | ONLINE       |
    +---------------------------+--------------------------------------+-------------+-------------+--------------+
    3 rows in set (0.00 sec)

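When MEMBER_STATE is ONLINE for every member, they are all connected. If a member fails, it is expelled from the group and shows ERROR locally. In that case, check that server's MySQL error log (mysql.err).

Note that in multi-primary mode every member's MEMBER_ROLE is PRIMARY. In single-primary mode only one member is PRIMARY and the others are SECONDARY. The MEMBER_ROLE column only exists in MySQL 8.0: it appears in the 8.0.11 output above but not in the 5.7.25 output. On 5.7, use the group_replication_primary_member status variable to find the primary.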
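These rules can be turned into a quick health check. The following Python sketch assumes you have fetched MEMBER_HOST, MEMBER_STATE and MEMBER_ROLE from performance_schema.replication_group_members (MySQL 8.0 columns) as tuples. group_mode is a made-up helper, and a one-member group cannot be told apart by roles alone.

```python
from typing import List, Tuple

# (MEMBER_HOST, MEMBER_STATE, MEMBER_ROLE) from performance_schema.replication_group_members
Member = Tuple[str, str, str]


def group_mode(members: List[Member]) -> str:
    """Summarize the group from its member rows (needs the MySQL 8.0 MEMBER_ROLE column)."""
    not_online = [host for host, state, _ in members if state != "ONLINE"]
    if not_online:
        return "degraded: " + ", ".join(not_online) + " not ONLINE"
    primaries = [host for host, _, role in members if role == "PRIMARY"]
    if len(members) > 1 and len(primaries) == len(members):
        return "multi-primary"
    if len(primaries) == 1:  # a one-member group always lands here
        return f"single-primary (primary: {primaries[0]})"
    return "unexpected role layout"
```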

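6. Usage

In multi-primary mode, any of the following connections can read and write directly. The account needs privileges on your application tables: the sroot account created above only has REPLICATION SLAVE, so grant it more or use another account.

    mysql -usroot -p123123 -h192.168.202.174 -P3306
    mysql -usroot -p123123 -h192.168.202.175 -P3306
    mysql -usroot -p123123 -h192.168.202.176 -P3306

I won't go through the operations themselves. CREATE, INSERT and DELETE work just as in ordinary MySQL, and you will see the data appear on the other servers.

In single-primary mode only the PRIMARY member accepts writes. SECONDARY members are read-only, for example: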

    mysql> select * from ttt;
    +----+--------+
    | id | name   |
    +----+--------+
    |  1 | ggg    |
    |  2 | ffff   |
    |  3 | hhhhh  |
    |  4 | tyyyyy |
    |  5 | aaaaaa |
    +----+--------+
    5 rows in set (0.00 sec)
    mysql> delete from ttt where id = 5;
    ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

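I won't go further into these operations. Once the cluster is up, you can experiment at your own pace.

7. Management and maintenance

To verify what I said above, first look at the current GTIDs and the replica status: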

    # Check the GTIDs: the UUID is the group name configured earlier
    mysql> show master status;
    +------------------+----------+--------------+------------------+---------------------------------------------------+
    | File             | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set                                 |
    +------------------+----------+--------------+------------------+---------------------------------------------------+
    | mysql-bin.000003 |     4801 |              |                  | cc5e2627-2285-451f-86e6-0be21581539f:1-23:1000003 |
    +------------------+----------+--------------+------------------+---------------------------------------------------+
    1 row in set (0.00 sec)
    # Then the replica status: empty, because this is not a master/slave setup at all
    mysql> show slave status;
    Empty set (0.00 sec)

The query above shows information about the member nodes. Below are some other commonly used commands.

# View all members of the group

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| group_replication_applier | a29a1b91-4908-11e8-848b-08002778eea7 | ubuntu | 3308 | ONLINE | PRIMARY | 8.0.11 |

| group_replication_applier | af892b6e-49ca-11e8-9c9e-080027b04376 | ubuntu | 3308 | ONLINE | SECONDARY | 8.0.11 |

| group_replication_applier | d058176a-51cf-11e8-8c95-080027e7b723 | ubuntu | 3308 | ONLINE | SECONDARY | 8.0.11 |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

# View replication statistics for every member of the group

mysql> select * from performance_schema.replication_group_member_stats\G

*************************** 1. row ***************************

CHANNEL_NAME: group_replication_applier

VIEW_ID: 15258529121778212:5

MEMBER_ID: a29a1b91-4908-11e8-848b-08002778eea7

COUNT_TRANSACTIONS_IN_QUEUE: 0

COUNT_TRANSACTIONS_CHECKED: 9

COUNT_CONFLICTS_DETECTED: 0

COUNT_TRANSACTIONS_ROWS_VALIDATING: 0

TRANSACTIONS_COMMITTED_ALL_MEMBERS: cc5e2627-2285-451f-86e6-0be21581539f:1-23:1000003

LAST_CONFLICT_FREE_TRANSACTION: cc5e2627-2285-451f-86e6-0be21581539f:23

COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE: 0

COUNT_TRANSACTIONS_REMOTE_APPLIED: 3

COUNT_TRANSACTIONS_LOCAL_PROPOSED: 9

COUNT_TRANSACTIONS_LOCAL_ROLLBACK: 0

*************************** 2. row ***************************

CHANNEL_NAME: group_replication_applier

VIEW_ID: 15258529121778212:5

MEMBER_ID: af892b6e-49ca-11e8-9c9e-080027b04376

COUNT_TRANSACTIONS_IN_QUEUE: 0

COUNT_TRANSACTIONS_CHECKED: 9

COUNT_CONFLICTS_DETECTED: 0

COUNT_TRANSACTIONS_ROWS_VALIDATING: 0

TRANSACTIONS_COMMITTED_ALL_MEMBERS: cc5e2627-2285-451f-86e6-0be21581539f:1-23:1000003

LAST_CONFLICT_FREE_TRANSACTION: cc5e2627-2285-451f-86e6-0be21581539f:23

COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE: 0

COUNT_TRANSACTIONS_REMOTE_APPLIED: 10

COUNT_TRANSACTIONS_LOCAL_PROPOSED: 0

COUNT_TRANSACTIONS_LOCAL_ROLLBACK: 0

*************************** 3. row ***************************

CHANNEL_NAME: group_replication_applier

VIEW_ID: 15258529121778212:5

MEMBER_ID: d058176a-51cf-11e8-8c95-080027e7b723

COUNT_TRANSACTIONS_IN_QUEUE: 0

COUNT_TRANSACTIONS_CHECKED: 9

COUNT_CONFLICTS_DETECTED: 0

COUNT_TRANSACTIONS_ROWS_VALIDATING: 0

TRANSACTIONS_COMMITTED_ALL_MEMBERS: cc5e2627-2285-451f-86e6-0be21581539f:1-23:1000003

LAST_CONFLICT_FREE_TRANSACTION: cc5e2627-2285-451f-86e6-0be21581539f:23

COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE: 0

COUNT_TRANSACTIONS_REMOTE_APPLIED: 9

COUNT_TRANSACTIONS_LOCAL_PROPOSED: 0

COUNT_TRANSACTIONS_LOCAL_ROLLBACK: 0

3 rows in set (0.00 sec)
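The counters above are most useful when compared against the flow-control thresholds listed earlier (25000 by default for both the certifier and the applier queue). Roughly speaking, in QUOTA mode the group starts throttling writers when a member's queues exceed these values. The following Python sketch assumes the rows have been fetched as dicts keyed by column name. COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE exists only in MySQL 8.0, and the helper names are made up.

```python
from typing import Any, Dict, List

CERTIFIER_THRESHOLD = 25000  # group_replication_flow_control_certifier_threshold (default)
APPLIER_THRESHOLD = 25000    # group_replication_flow_control_applier_threshold (default)


def throttling_members(rows: List[Dict[str, Any]]) -> List[str]:
    """MEMBER_IDs whose queues exceed a flow-control threshold."""
    return [
        r["MEMBER_ID"]
        for r in rows
        if r["COUNT_TRANSACTIONS_IN_QUEUE"] > CERTIFIER_THRESHOLD
        or r["COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE"] > APPLIER_THRESHOLD
    ]


def conflict_rate(row: Dict[str, Any]) -> float:
    """Fraction of checked transactions that failed conflict detection on this member."""
    checked = row["COUNT_TRANSACTIONS_CHECKED"]
    return row["COUNT_CONFLICTS_DETECTED"] / checked if checked else 0.0
```

A rising conflict rate in multi-primary mode is the signal to route writes for the same rows to a single member, as recommended in the conflict detection section.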

# Status of each replication channel on this server

mysql> select * from performance_schema.replication_connection_status\G

*************************** 1. row ***************************

CHANNEL_NAME: group_replication_applier

GROUP_NAME: cc5e2627-2285-451f-86e6-0be21581539f

SOURCE_UUID: cc5e2627-2285-451f-86e6-0be21581539f

THREAD_ID: NULL

SERVICE_STATE: ON

COUNT_RECEIVED_HEARTBEATS: 0

LAST_HEARTBEAT_TIMESTAMP: 0000-00-00 00:00:00.000000

RECEIVED_TRANSACTION_SET: cc5e2627-2285-451f-86e6-0be21581539f:1-23:1000003

LAST_ERROR_NUMBER: 0

LAST_ERROR_MESSAGE:

LAST_ERROR_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION: cc5e2627-2285-451f-86e6-0be21581539f:23

LAST_QUEUED_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 2018-05-09 16:38:08.035692

LAST_QUEUED_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION_START_QUEUE_TIMESTAMP: 2018-05-09 16:38:08.031639

LAST_QUEUED_TRANSACTION_END_QUEUE_TIMESTAMP: 2018-05-09 16:38:08.031753

QUEUEING_TRANSACTION:

QUEUEING_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_START_QUEUE_TIMESTAMP: 0000-00-00 00:00:00.000000

*************************** 2. row ***************************

CHANNEL_NAME: group_replication_recovery

GROUP_NAME:

SOURCE_UUID:

THREAD_ID: NULL

SERVICE_STATE: OFF

COUNT_RECEIVED_HEARTBEATS: 0

LAST_HEARTBEAT_TIMESTAMP: 0000-00-00 00:00:00.000000

RECEIVED_TRANSACTION_SET:

LAST_ERROR_NUMBER: 0

LAST_ERROR_MESSAGE:

LAST_ERROR_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION:

LAST_QUEUED_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION_START_QUEUE_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION_END_QUEUE_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION:

QUEUEING_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_START_QUEUE_TIMESTAMP: 0000-00-00 00:00:00.000000

2 rows in set (0.00 sec)

# Whether each replication channel on this server is running (ON means running)

mysql> select * from performance_schema.replication_applier_status;

+----------------------------+---------------+-----------------+----------------------------+

| CHANNEL_NAME | SERVICE_STATE | REMAINING_DELAY | COUNT_TRANSACTIONS_RETRIES |

+----------------------------+---------------+-----------------+----------------------------+

| group_replication_applier | ON | NULL | 0 |

| group_replication_recovery | OFF | NULL | 0 |

+----------------------------+---------------+-----------------+----------------------------+

2 rows in set (0.00 sec)

# In single-primary mode, find out which member is the primary (only its UUID is shown)

mysql> select * from performance_schema.global_status where VARIABLE_NAME='group_replication_primary_member';

+----------------------------------+--------------------------------------+

| VARIABLE_NAME | VARIABLE_VALUE |

+----------------------------------+--------------------------------------+

| group_replication_primary_member | a29a1b91-4908-11e8-848b-08002778eea7 |

+----------------------------------+--------------------------------------+

1 row in set (0.00 sec)

For example:

    mysql> show global variables like 'server_uuid';
    +---------------+--------------------------------------+
    | Variable_name | Value                                |
    +---------------+--------------------------------------+
    | server_uuid   | af892b6e-49ca-11e8-9c9e-080027b04376 |
    +---------------+--------------------------------------+
    1 row in set (0.00 sec)
    mysql> show global variables like 'super%';
    +-----------------+-------+
    | Variable_name   | Value |
    +-----------------+-------+
    | super_read_only | ON    |
    +-----------------+-------+
    1 row in set (0.00 sec)

Clearly this server is not the primary: super_read_only is ON, and its server_uuid differs from the primary's UUID shown above.

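8. Switching to multi-primary mode

Switching the MGR mode requires restarting group replication. Stop group replication on all members, set group_replication_single_primary_mode=OFF and the related parameters, then start group replication again.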

    # Stop group replication and change the mode settings (run on all nodes):
    mysql> stop group_replication;
    mysql> set global group_replication_single_primary_mode=OFF;
    mysql> set global group_replication_enforce_update_everywhere_checks=ON;
    # On any one node:
    mysql> SET GLOBAL group_replication_bootstrap_group=ON;
    mysql> START GROUP_REPLICATION;
    mysql> SET GLOBAL group_replication_bootstrap_group=OFF;
    # On the other nodes:
    mysql> START GROUP_REPLICATION;
    # Check the group: every member's MEMBER_ROLE is PRIMARY
    mysql> SELECT * FROM performance_schema.replication_group_members;
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    | CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST    | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    | group_replication_applier | 5254cf46-8415-11e8-af09-fa163eab3dcf | 192.168.56.102 | 3306        | ONLINE       | PRIMARY     | 8.0.11         |
    | group_replication_applier | 601a4025-8415-11e8-b2b6-fa163e767b9a | 192.168.56.103 | 3306        | ONLINE       | PRIMARY     | 8.0.11         |
    | group_replication_applier | 8cb3f19b-8414-11e8-9d34-fa163eda7360 | 192.168.56.101 | 3306        | ONLINE       | PRIMARY     | 8.0.11         |
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    3 rows in set (0.00 sec)

All members are ONLINE and every role is PRIMARY, so the multi-primary group is up.

Switching back to single-primary mode

    # Run on all nodes:
    mysql> stop group_replication;
    mysql> set global group_replication_enforce_update_everywhere_checks=OFF;
    mysql> set global group_replication_single_primary_mode=ON;
    # On the primary (192.168.56.101):
    SET GLOBAL group_replication_bootstrap_group=ON;
    START GROUP_REPLICATION;
    SET GLOBAL group_replication_bootstrap_group=OFF;
    # On the secondaries (192.168.56.102, 192.168.56.103):
    START GROUP_REPLICATION;
    # Check the group
    mysql> SELECT * FROM performance_schema.replication_group_members;
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    | CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST    | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    | group_replication_applier | 5254cf46-8415-11e8-af09-fa163eab3dcf | 192.168.56.102 | 3306        | ONLINE       | SECONDARY   | 8.0.11         |
    | group_replication_applier | 601a4025-8415-11e8-b2b6-fa163e767b9a | 192.168.56.103 | 3306        | ONLINE       | SECONDARY   | 8.0.11         |
    | group_replication_applier | 8cb3f19b-8414-11e8-9d34-fa163eda7360 | 192.168.56.101 | 3306        | ONLINE       | PRIMARY     | 8.0.11         |
    +---------------------------+--------------------------------------+----------------+-------------+--------------+-------------+----------------+
    3 rows in set (0.00 sec)
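The switch procedure is order-sensitive across nodes, so it can help to generate it as one globally ordered list. The following Python sketch only produces (node, statement) pairs and runs nothing; switch_mode_steps is a hypothetical name. It follows the exact order used above, including turning the everywhere-checks off before re-enabling single-primary mode. In single-primary mode the member that bootstraps the group becomes the primary, which is why the procedure above bootstraps on 192.168.56.101.

```python
from typing import List, Tuple

Step = Tuple[str, str]  # (node, SQL statement)


def switch_mode_steps(nodes: List[str], multi_primary: bool, bootstrap_node: str) -> List[Step]:
    """Globally ordered statements for an offline switch between the two modes."""
    if bootstrap_node not in nodes:
        raise ValueError("bootstrap_node must be one of nodes")
    mode = [f"SET GLOBAL group_replication_single_primary_mode={'OFF' if multi_primary else 'ON'}",
            f"SET GLOBAL group_replication_enforce_update_everywhere_checks={'ON' if multi_primary else 'OFF'}"]
    if not multi_primary:
        mode.reverse()  # same order as above: checks OFF before single-primary ON
    steps: List[Step] = []
    for node in nodes:  # 1. on every node: stop GR and change the mode settings
        steps.append((node, "STOP GROUP_REPLICATION"))
        steps += [(node, sql) for sql in mode]
    steps += [(bootstrap_node, sql) for sql in (  # 2. one node bootstraps the group
        "SET GLOBAL group_replication_bootstrap_group=ON",
        "START GROUP_REPLICATION",
        "SET GLOBAL group_replication_bootstrap_group=OFF",
    )]
    steps += [(n, "START GROUP_REPLICATION") for n in nodes if n != bootstrap_node]  # 3. others join
    return steps
```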

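9. Troubleshooting

1. Note: the user creation and password changes done earlier must run with binary logging disabled (SET SQL_LOG_BIN=0), which is re-enabled afterwards. Otherwise START GROUP_REPLICATION fails.

The error message:

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

MySQL error log: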

2017-04-17T12:06:55.113724+08:00 0 [ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: 423ccc44-2318-11e7-96e9-fa163e6e90d0:1-4 > Group transactions: 7e29f043-2317-11e7-9594-fa163e98778e:1-5,
test-testtest-test-testtesttest:1-6'

2017-04-17T12:06:55.113825+08:00 0 [ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member will now exit the group.'

2017-04-17T12:06:55.113835+08:00 0 [Note] Plugin group_replication reported: 'To force this member into the group you can use the group_replication_allow_local_disjoint_gtids_join option'

2017-04-17T12:06:55.113947+08:00 3 [Note] Plugin group_replication reported: 'Going to wait for view modification'

2017-04-17T12:06:55.114493+08:00 0 [Note] Plugin group_replication reported: 'getstart group_id 4317e324'

2017-04-17T12:07:00.054225+08:00 0 [Note] Plugin group_replication reported: 'state 4330 action xa_terminate'

2017-04-17T12:07:00.056324+08:00 0 [Note] Plugin group_replication reported: 'new state x_start'

2017-04-17T12:07:00.056349+08:00 0 [Note] Plugin group_replication reported: 'state 4257 action xa_exit'

2017-04-17T12:07:00.057272+08:00 0 [Note] Plugin group_replication reported: 'Exiting xcom thread'

2017-04-17T12:07:00.057288+08:00 0 [Note] Plugin group_replication reported: 'new state x_start'

2017-04-17T12:07:05.069548+08:00 3 [Note] Plugin group_replication reported: 'auto_increment_increment is reset to 1'

2017-04-17T12:07:05.069644+08:00 3 [Note] Plugin group_replication reported: 'auto_increment_offset is reset to 1'

2017-04-17T12:07:05.070107+08:00 9 [Note] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

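Solution:

As the log suggests, enable group_replication_allow_local_disjoint_gtids_join from the mysql command line. Do this only after confirming that the extra local transactions are harmless (here they are just the user-management statements). Forcing a member with diverging transactions into the group can leave the data inconsistent. This option is also deprecated as of MySQL 5.7.21 and no longer exists in MySQL 8.0.

mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

Then start group replication again: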

mysql> START GROUP_REPLICATION;
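The log entry above means that the joiner's gtid_executed is not a subset of the group's. The Python sketch below redoes that comparison with the GTID sets from the log and lists the extra local transactions. The helper names are hypothetical, and because the sketch expands intervals into Python sets, it only suits small GTID sets. On a running server, the built-in GTID_SUBTRACT(local_set, group_set) function returns the same difference.

```python
from typing import Dict, Set


def parse_gtid_set(text: str) -> Dict[str, Set[int]]:
    """Parse 'uuid:1-5:7,uuid2:1-3' into {uuid: {transaction numbers}}."""
    result: Dict[str, Set[int]] = {}
    for part in text.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        uuid, *intervals = part.split(":")
        numbers = result.setdefault(uuid.lower(), set())
        for interval in intervals:
            start, _, end = interval.partition("-")  # '7' has no end -> single transaction
            numbers.update(range(int(start), int(end or start) + 1))
    return result


def extra_transactions(local: str, group: str) -> Dict[str, Set[int]]:
    """Transactions executed locally that the group has never seen."""
    loc, grp = parse_gtid_set(local), parse_gtid_set(group)
    extra = {uuid: nums - grp.get(uuid, set()) for uuid, nums in loc.items()}
    return {uuid: nums for uuid, nums in extra.items() if nums}


if __name__ == "__main__":
    local = "423ccc44-2318-11e7-96e9-fa163e6e90d0:1-4"
    group = "7e29f043-2317-11e7-9594-fa163e98778e:1-5,\ntest-testtest-test-testtesttest:1-6"
    print(extra_transactions(local, group))  # {'423ccc44-2318-11e7-96e9-fa163e6e90d0': {1, 2, 3, 4}}
```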

2. Cannot connect to the master (the recovery donor). The error log shows:

2017-04-17T16:18:14.756191+08:00 25 [Warning] Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the 'START SLAVE Syntax' in the MySQL Manual for more information.

2017-04-17T16:18:14.814193+08:00 25 [ERROR] Slave I/O for channel 'group_replication_recovery': error connecting to master'repl_user@host-192-168-99-156:3306' - retry-time: 60 retries: 1, Error_code: 2005

2017-04-17T16:18:14.814219+08:00 25 [Note] Slave I/O thread for channel 'group_replication_recovery' killed while connecting to master

2017-04-17T16:18:14.814227+08:00 25 [Note] Slave I/O thread exiting for channel 'group_replication_recovery', read up to log 'FIRST', position 4

2017-04-17T16:18:14.814342+08:00 19 [ERROR] Plugin group_replication reported: 'There was an error when connecting to the donor server. Check group replication recovery's connection credentials.'

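Solution:

Add hostname mappings on every node. The recovery channel reaches the donor by the hostname that member reports (host-192-168-99-156 here), so that name must resolve: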

vim /etc/hosts

192.168.99.156 db1 host-192-168-99-156

192.168.99.157 db2 host-192-168-99-157

192.168.99.158 db3 host-192-168-99-158

Restart group replication:

mysql> stop group_replication;

Query OK, 0 rows affected (8.76 sec)

mysql> start group_replication;

Query OK, 0 rows affected (2.51 sec)

3. START GROUP_REPLICATION fails with:

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

Check the log:

2018-06-12T09:50:17.911574Z 2 [ERROR] Plugin group_replication reported: 'binlog_checksum should be NONE for Group Replication'

Solution (run on all three nodes, and remember to update the configuration file as well):

mysql> show variables like '%binlog_checksum%';

+-----------------+-------+

| Variable_name | Value |

+-----------------+-------+

| binlog_checksum | CRC32 |

+-----------------+-------+

1 row in set (0.00 sec)

mysql> set @@global.binlog_checksum='none';

Query OK, 0 rows affected (0.09 sec)

mysql> show variables like '%binlog_checksum%';

+-----------------+-------+

| Variable_name | Value |

+-----------------+-------+

| binlog_checksum | NONE |

+-----------------+-------+

1 row in set (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (12.40 sec)

References:

https://dev.mysql.com/doc/refman/5.7/en/group-replication.html

https://blog.csdn.net/mchdba/article/details/54381854

http://www.voidcn.com/article/p-aiixfpfr-brr.html

https://www.cnblogs.com/luoahong/articles/8043035.html

https://blog.csdn.net/i_am_wangjian/article/details/80508663