MIT 6.S081 Intro, Lab 9: File System


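I. Reading notes and summary of reference material

1. xv6 book (Chapter 8, File system)

0. File system basics:

- Operating-system concept behind file systems: persistence

- Core goal of a file system: as pure software, provide mechanisms that give users and the operating system the same view across different storage devices (virtualization)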

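0.1 Common UNIX file system APIs

- UNIX directory structure: files and directories form a tree-structured file system, which handles absolute and relative paths uniformly;

- File names: a name plus a file type (suffix);

- File descriptor: a non-negative integer that a process uses to refer to and identify an open file; fd 0: standard input; fd 1: standard output; fd 2: standard error;

- File operations:

- Open: opens or creates a file and returns the lowest unused descriptor; Creat: creates a file; Close: closes a file (the kernel does this automatically when the process terminates);

Open, Creat, Close:

    #include <fcntl.h>
    #include <unistd.h>

    // oflag is a bitwise OR of constants; mode gives the permissions used on creation
    int open(const char *path, int oflag, ... /* mode_t mode */);

    // creat is equivalent to:
    //   open(path, O_WRONLY|O_CREAT|O_TRUNC, mode);
    int creat(const char *path, mode_t mode);

    int close(int fd);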

- Lseek: sets the file offset of an open file;

Lseek:

    #include <unistd.h>

    off_t lseek(int fd, off_t offset, int whence);

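- Read: reads data from an open file and returns the number of bytes actually read; Write: writes data to an open file and returns the number of bytes written. When appending to the end of a file, opening with O_APPEND makes "seek to end, then write" a single atomic step.

Read, Write:

    #include <unistd.h>

    ssize_t read(int fd, void *buf, size_t nbytes);
    ssize_t write(int fd, const void *buf, size_t nbytes);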

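- Dup: duplicates an existing file descriptor. dup returns the lowest available descriptor; dup2 lets the caller choose the new descriptor value. Note: the new descriptor shares the same file table entry (and therefore the same offset) as the original fd.

Dup:

    #include <unistd.h>

    int dup(int fd);
    int dup2(int fd, int fd2);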
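The shared file table entry is easiest to see through the file offset. The following is a minimal sketch, assuming a POSIX system with a writable `/tmp`; the helper name `dup_shares_offset` and the temp-file template are made up for this illustration.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/* Returns 1 if a descriptor created by dup() sees the offset that was
 * advanced through the original descriptor, 0 if not, -1 on error. */
int dup_shares_offset(void)
{
    char path[] = "/tmp/dup_demo_XXXXXX";   /* hypothetical temp-file template */
    int fd = mkstemp(path);                 /* creates and opens a fresh file */
    if (fd < 0)
        return -1;
    unlink(path);                           /* the file lives until the last close */

    int fd2 = dup(fd);                      /* lowest unused descriptor */
    if (fd2 < 0) {
        close(fd);
        return -1;
    }
    assert(fd2 != fd);

    if (write(fd, "hello", 5) != 5) {       /* advances the shared offset */
        close(fd);
        close(fd2);
        return -1;
    }
    off_t pos = lseek(fd2, 0, SEEK_CUR);    /* query the offset through the copy */

    close(fd);
    close(fd2);
    return pos == 5;
}
```

Two independent `open` calls on the same path would instead produce two file table entries, each with its own offset.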

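- Sync: keeps the file system on disk consistent with the contents of the buffer cache:

sync: queues the modified blocks for writing and returns;

fsync: blocks until the writes for the given file have completed;

fdatasync: like fsync, but affects only the data portion of the file

Sync:

    #include <unistd.h>

    int fsync(int fd);
    int fdatasync(int fd);
    void sync(void);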

- Stat: returns a file's metadata

Stat and the structure it fills in:

    #include <sys/stat.h>

    int stat(const char *restrict pathname, struct stat *restrict buf);
    int fstat(int fd, struct stat *buf);

    struct stat {
      mode_t          st_mode;    // file type and permissions
      ino_t           st_ino;     // inode number
      dev_t           st_dev;     // device number
      dev_t           st_rdev;    // device number for special files
      nlink_t         st_nlink;   // number of hard links
      uid_t           st_uid;     // user ID of owner
      gid_t           st_gid;     // group ID of owner
      off_t           st_size;    // total size, in bytes
      struct timespec st_atim;    // time of last access
      struct timespec st_mtim;    // time of last modification
      struct timespec st_ctim;    // time of last status change
      blksize_t       st_blksize; // best block size for file system I/O
      blkcnt_t        st_blocks;  // number of disk blocks allocated
    };

- Link: creates a hard link; Unlink: removes a directory entry and decrements the hard-link count of the file it referred to

Files are normally deleted with unlink, and empty directories with rmdir.

Link, Unlink:

    #include <unistd.h>
    #include <stdio.h>

    int link(const char *existingpath, const char *newpath);
    int linkat(int efd, const char *existingpath, int nfd, const char *newpath, int flag);
    int unlink(const char *pathname);
    int unlinkat(int fd, const char *pathname, int flag);
    int remove(const char *pathname);

- Symbolic link: a soft link (similar to a shortcut)

Symlink:

    #include <unistd.h>

    int symlink(const char *actualpath, const char *sympath);
    int symlinkat(const char *actualpath, int fd, const char *sympath);

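- Mkdir: creates an empty directory; Rmdir: removes an empty directory; Chdir: changes the current working directory;

Mkdir, Rmdir, Chdir:

    #include <sys/stat.h>   // mkdir
    #include <unistd.h>     // rmdir, chdir

    int mkdir(const char *pathname, mode_t mode);
    int rmdir(const char *pathname);
    int chdir(const char *pathname);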

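- File types:

Regular file: data;

Directory file: names of other files plus pointers to them; only the kernel can write to it directly;

Block special file: buffered access to a device, in fixed-size units;

Character special file: unbuffered access to a device, in variable-size units;

FIFO: inter-process communication (named pipe);

Socket: network communication, or non-network communication between processes;

Symbolic link: a soft link;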

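- Layout of a simple file system: data blocks (user data); metadata (per-file information, the inodes); bitmaps (allocation state of blocks); superblock (information about the file system itself)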

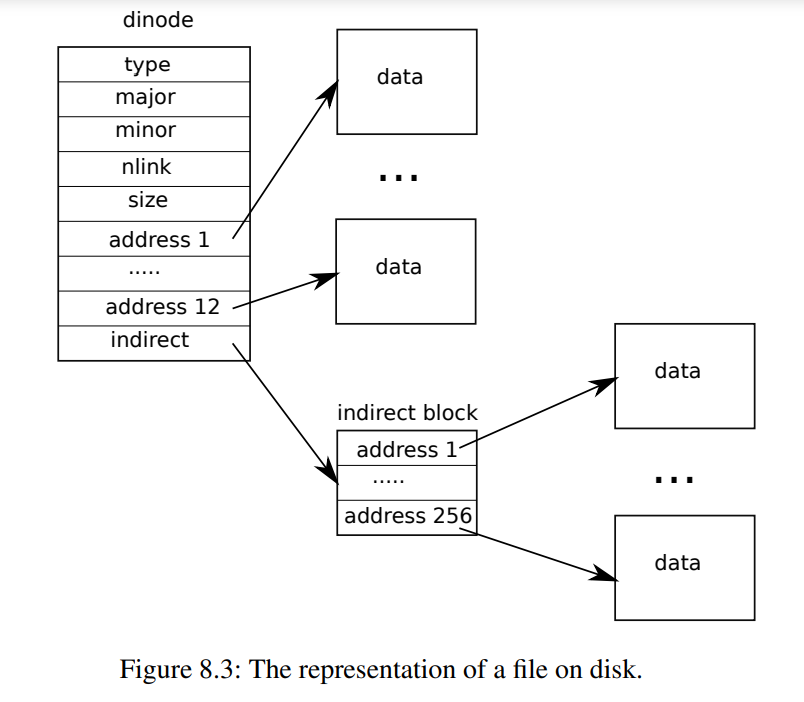

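- inode: the structure that holds a file's metadata. The on-disk address of an inode is the start of the inode table plus the inode's offset (inode number times inode size)

- Multi-level index: multi-level pointers extend how much data an inode can address, so an inode consists of direct pointers plus multi-level indirect pointers. Rationale: most files are small;

- Directory organization: the core data structure is a list of entries of the form (inum, record length, name length, entry name); a B-tree can be used instead;

- Free space management: a free list or a B-tree; pre-allocating data blocks at allocation time can improve performance;

- Reading a file from disk: walk the path (starting from the root directory, inode 2 in many UNIX file systems) to find the file's inode -> allocate a file descriptor and return it to the user -> read reads the file and updates the inode

- Writing to disk (with a newly allocated block): read the inode -> read the bitmap -> write the data block -> write the bitmap -> write the inode; if a file is being created, the directory's data and inode must be updated as well;

- **Caching and buffering:** physical memory caches important blocks to avoid slow I/O; lazy (delayed) writes also allow I/O operations to be batched and merged;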


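0.2 The Fast File System

- The original UNIX file system treated the disk as if it were random-access memory, so data ended up scattered across the disk and became fragmented

- First FFS implementation: cylinder groups avoid unnecessary seeks. A new directory is placed in a cylinder group with few allocated directories and many free inodes (to balance the load); a file's data blocks are allocated in the same cylinder group as its inode, and files in the same directory are placed in that directory's cylinder group

- Large-file placement policy: uses the idea of amortization. A large file is split into big chunks that are spread across cylinder groups, so that it does not fill one group and destroy locality for other files, while each chunk is large enough that the seek between chunks costs little relative to the transfer.

- Parameterization: a staggered on-disk layout avoids extra disk rotations

- Crash consistency problem: how to update on-disk structures (bitmap, inode, data, superblock) consistently when power fails or the system crashes

- Early solution: run fsck at reboot to repair the file system, that is, scan the entire disk and make the file system metadata consistent; it checks the superblock, bitmaps, inodes, and directories

Problems: hard to build, and very slow

Modern solution: journaling (write-ahead logging). Before the update, write a note describing the pending operation to a log; after a crash, replay the log instead of scanning the whole disk;

Data journaling: journal write (TxB plus the blocks) + journal commit (TxE) + checkpoint (write data and metadata to their final locations) + free the journal space

Metadata journaling: user data is not written to the journal, so the order is data write -> journal metadata write -> journal commit -> checkpoint metadata -> free

Metadata journaling substantially reduces disk I/O, because user data is written only once;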


1.概述

-

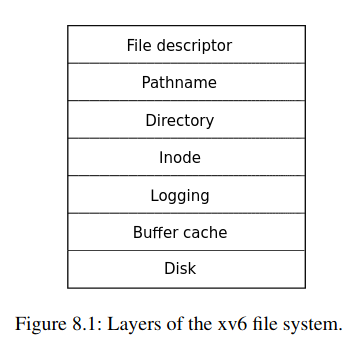

xv6文件系统: 包括七层

Disk Layer: 实现硬件操作,即对磁盘块的读写;

Buffer Cache Layer: 实现磁盘-内存的缓存,并管理进程对缓存块的并发访问;

Logging Layer: 提供更新磁盘接口,并确保更新的原子性,同时实现Crash Recovery;

Inode Layer: 为文件层提供接口,保证文件的独立并确定inode标识和相关信息;

Directory Layer: 实现目录文件,其数据是一系列的目录条目,包含该目录下的文件名和文件的inode号;

Pathname Layer: 提供符合文件系统层次结构的路径名,同时实现路径名称递归查找;

File Descriptor Layer: 实现对底层资源(管道/设备/文件)的抽象;

-

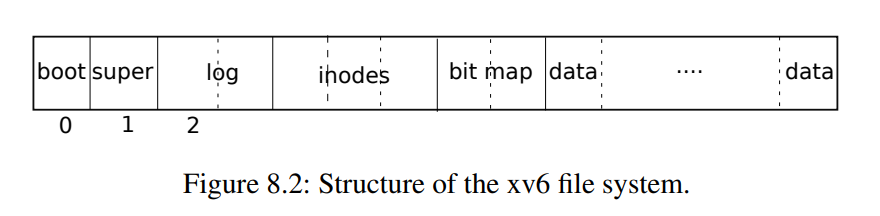

xv6的文件布局: 引导块+超级块+日志块+inodes块+位图块+数据块;块大小:1KB;

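2. Buffer cache layer

- Function: synchronizes concurrent access to disk blocks, and replaces cached blocks using an LRU policy

- Interface: bread: obtain the in-memory cached copy of a block; bwrite: write a modified buffer to disk; brelse: release a buffer

- Atomicity: each buffer has a sleep-lock, so only one thread at a time uses it

- Note: the number of buffer slots is fixed. If a requested block is not in the cache, a slot is recycled using LRU;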
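The fixed slot count and LRU recycling described above can be tried out in user space. This is a minimal sketch, not the kernel code: locks are omitted, the cache has only three slots, and the names `cache_init`, `cache_get`, and `cache_release` are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define NBUF 3                       /* tiny cache so eviction is easy to observe */

struct buf {
    int valid;                       /* slot currently holds a block */
    unsigned blockno;
    unsigned refcnt;
    struct buf *prev, *next;
};

static struct buf bufs[NBUF];
static struct buf head;              /* head.next = most recent, head.prev = least */

void cache_init(void)
{
    head.prev = head.next = &head;
    for (struct buf *b = bufs; b < bufs + NBUF; b++) {
        b->valid = 0;
        b->refcnt = 0;
        b->blockno = 0;
        b->next = head.next;         /* insert at the front of the ring */
        b->prev = &head;
        head.next->prev = b;
        head.next = b;
    }
}

/* Returns the slot for blockno and sets *hit; NULL if every slot is in use. */
struct buf *cache_get(unsigned blockno, int *hit)
{
    struct buf *b;
    for (b = head.next; b != &head; b = b->next) {
        if (b->valid && b->blockno == blockno) {
            b->refcnt++;
            *hit = 1;
            return b;
        }
    }
    for (b = head.prev; b != &head; b = b->prev) {   /* scan from the LRU end */
        if (b->refcnt == 0) {
            b->blockno = blockno;
            b->valid = 1;            /* stands in for reading the block from disk */
            b->refcnt = 1;
            *hit = 0;
            return b;
        }
    }
    return NULL;
}

/* Drops one reference; an unreferenced slot moves to the most-recent end. */
void cache_release(struct buf *b)
{
    assert(b->refcnt > 0);
    if (--b->refcnt == 0) {
        b->next->prev = b->prev;
        b->prev->next = b->next;
        b->next = head.next;
        b->prev = &head;
        head.next->prev = b;
        head.next = b;
    }
}
```

With three slots, touching blocks 1, 2, 3, then 1 again, and then requesting block 4 recycles the slot that held block 2, because it was released longest ago.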

3. Code: Buffer cache

- struct bcache: a spin-lock protects the buffer cache, that is, the information about which blocks are cached in which slots

bcache (kernel/bio.c):

    struct {
      struct spinlock lock;
      struct buf buf[NBUF];

      // Linked list of all buffers, through prev/next.
      // Sorted by how recently the buffer was used.
      // head.next is most recent, head.prev is least.
      struct buf head;
    } bcache;

- struct buf (one slot): a sleep-lock protects the buffer's contents; the structure holds both metadata and data

buf (kernel/buf.h):

    struct buf {
      int valid;              // has data been read from disk?
      int disk;               // does disk "own" buf?
      uint dev;               // device number
      uint blockno;           // block number on disk
      struct sleeplock lock;  // sleep-lock protecting the data
      uint refcnt;            // reference count
      struct buf *prev;       // LRU cache list
      struct buf *next;
      uchar data[BSIZE];
    };

- binit: initializes the locks and links the slots into a circular doubly linked list; after initialization, buffers are accessed only through the list

binit (kernel/bio.c):

    void
    binit(void)
    {
      struct buf *b;

      initlock(&bcache.lock, "bcache");   // initialize the cache lock

      // Create linked list of buffers
      bcache.head.prev = &bcache.head;
      bcache.head.next = &bcache.head;
      for(b = bcache.buf; b < bcache.buf+NBUF; b++){
        // insert at the head to build the circular list
        b->next = bcache.head.next;
        b->prev = &bcache.head;
        initsleeplock(&b->lock, "buffer");
        bcache.head.next->prev = b;
        bcache.head.next = b;
      }
    }

- bread: calls bget to obtain a buffer; if the buffer was recycled (valid is 0), it asks the driver to read the block from disk and sets the flag;

bread (kernel/bio.c):

    // Return a locked buf with the contents of the indicated block.
    struct buf*
    bread(uint dev, uint blockno)
    {
      struct buf *b;

      b = bget(dev, blockno);
      if(!b->valid) {
        virtio_disk_rw(b, 0);   // read from disk
        b->valid = 1;
      }
      return b;                 // the buffer is returned locked
    }

- bget: scans the cache for the given device number and block number. On a hit it increments the reference count and returns the buffer; on a miss it recycles a buffer using LRU and marks it invalid

Note: bcache.lock makes the scan and the update atomic, which guarantees that each disk block is cached at most once. The panic when all slots are busy could be replaced by sleeping until a buffer becomes free.

bget (kernel/bio.c):

    // Look through buffer cache for block on device dev.
    // If not found, allocate a buffer.
    // In either case, return locked buffer.
    static struct buf*
    bget(uint dev, uint blockno)
    {
      struct buf *b;

      acquire(&bcache.lock);   // spin-lock makes the lookup atomic

      // Is the block already cached?
      for(b = bcache.head.next; b != &bcache.head; b = b->next){
        if(b->dev == dev && b->blockno == blockno){
          // cache hit: take a reference, then release the cache lock
          b->refcnt++;
          release(&bcache.lock);
          acquiresleep(&b->lock);
          return b;
        }
      }

      // Not cached.
      // Recycle the least recently used (LRU) unused buffer.
      for(b = bcache.head.prev; b != &bcache.head; b = b->prev){
        // scan backwards, starting from the least recently used end
        if(b->refcnt == 0) {
          b->dev = dev;
          b->blockno = blockno;
          b->valid = 0;
          b->refcnt = 1;
          release(&bcache.lock);
          acquiresleep(&b->lock);
          return b;
        }
      }
      panic("bget: no buffers");   // every buffer is in use
    }

- bwrite: checks that the caller holds the buffer's sleep-lock, then writes the buffer to disk

bwrite (kernel/bio.c):

    // Write b's contents to disk.  Must be locked.
    void
    bwrite(struct buf *b)
    {
      if(!holdingsleep(&b->lock))
        panic("bwrite");
      virtio_disk_rw(b, 1);
    }

- brelse: releases a buffer: release the sleep-lock -> decrement the reference count -> update the list (move the buffer to the head)

brelse (kernel/bio.c):

    // Release a locked buffer.
    // Move to the head of the most-recently-used list.
    void
    brelse(struct buf *b)
    {
      if(!holdingsleep(&b->lock))   // caller must hold the sleep-lock
        panic("brelse");

      releasesleep(&b->lock);       // release the sleep-lock

      acquire(&bcache.lock);        // take the cache lock
      b->refcnt--;                  // drop the reference
      if (b->refcnt == 0) {
        // no one is waiting for it: move it to the head of the list
        b->next->prev = b->prev;
        b->prev->next = b->next;
        b->next = bcache.head.next;
        b->prev = &bcache.head;
        bcache.head.next->prev = b;
        bcache.head.next = b;
      }

      release(&bcache.lock);
    }

- bpin, bunpin: increment and decrement a buffer's reference count under bcache.lock, so the update is atomic

bpin, bunpin (kernel/bio.c):

    void
    bpin(struct buf *b) {
      acquire(&bcache.lock);
      b->refcnt++;
      release(&bcache.lock);
    }

    void
    bunpin(struct buf *b) {
      acquire(&bcache.lock);
      b->refcnt--;
      release(&bcache.lock);
    }

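4. Logging layer

- Purpose: crash recovery, so that after a crash or power loss the file system can recover from an inconsistent state of its data structures;

- How it works (see 0.2): log write -> log commit -> checkpoint (write the data to its home location) -> free the log;

- On recovery, if the log holds a complete operation, the operation is replayed; if it is incomplete, the log is ignored

- The log makes operations atomic with respect to crashes: after recovery, either all of an operation's writes appear on disk or none of them do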

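5. Log design

- Log location: a fixed location on disk, which is recorded in the superblock

- Structure of the log area: a header block followed by the logged blocks;

Header block: an array of sector numbers, one per logged block, plus the count of logged blocks (0 means there is no transaction in the log; non-zero means the log holds a complete committed transaction with n logged blocks to install)

After all blocks have been written to the log, the header block is written; once that write succeeds, the transaction is committed

- Crash handling: a crash before the commit leaves a count of 0; a crash after the commit leaves a non-zero count;

- Atomicity and concurrency of log operations:

Atomicity: each system call marks the start and end of the sequence of disk writes that must be atomic

Concurrency: the writes of several file system calls are merged into one large transaction and committed together (group commit), which allows concurrency and also reduces the number of disk operations;

- xv6 implementation:

- The xv6 log processes only one transaction at a time and admits no new system calls while committing; a real file system could let system calls run concurrently with a commit and start writing a new transaction

- The file system reserves a fixed amount of disk space for the log, so a single commit cannot exceed that size

- Constraints this places on file system calls: no system call may write more distinct blocks than the log can hold (write handles this by splitting large writes; deleting a large file could touch many bitmap blocks, but xv6 has only one bitmap block, so this causes no problem). If there is not enough log space, the system call blocks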

6. Code: logging

Typical structure of a system call that uses the log:

    begin_op();
    // ...
    bp = bread(...);       // read out bp
    bp->data[...] = ...;   // modify bp->data[]
    log_write(bp);         // bp has been modified, so put it in log
    brelse(bp);            // release bp
    // ...
    end_op();

- Interface involved: begin_op, log_write, end_op

- Data structures:

- logheader: the count n plus the destination disk block number of each logged block (this is also the actual on-disk structure)

- log: spin-lock + logheader + bookkeeping fields (disk location where the log starts, total number of log blocks, number of executing file system calls, commit-in-progress flag); this is the in-memory structure

logheader, log (kernel/log.c):

    // Contents of the header block, used for both the on-disk header block
    // and to keep track in memory of logged block# before commit.
    struct logheader {
      int n;                // number of logged blocks
      int block[LOGSIZE];   // home block number of each logged block
    };

    struct log {
      struct spinlock lock;
      int start;            // first block of the log on disk
      int size;             // total number of log blocks
      int outstanding;      // how many FS sys calls are executing.
      int committing;       // in commit(), please wait.
      int dev;              // device number
      struct logheader lh;
    };

- Upper-level interface: begin_op, log_write, end_op:

- begin_op: called at the start of every file system call. It waits until no commit is in progress and the log has enough free space for this call, then admits the call by incrementing the count of outstanding calls

begin_op (kernel/log.c):

    // called at the start of each FS system call.
    void
    begin_op(void)
    {
      acquire(&log.lock);
      while(1){
        if(log.committing){
          // wait for the commit in progress to finish
          sleep(&log, &log.lock);
        } else if(log.lh.n + (log.outstanding+1)*MAXOPBLOCKS > LOGSIZE){
          // this op might exhaust log space; wait for commit.
          sleep(&log, &log.lock);
        } else {
          log.outstanding += 1;   // one more system call in this transaction
          release(&log.lock);
          break;
        }
      }
    }
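The space check in begin_op is conservative: it reserves MAXOPBLOCKS blocks for every outstanding call, including the one asking to start. A minimal sketch of just that arithmetic, assuming the usual xv6 values MAXOPBLOCKS = 10 and LOGSIZE = 30 from kernel/param.h; the function name `can_admit` is made up for illustration.

```c
#include <assert.h>

#define MAXOPBLOCKS 10                 /* max blocks any one FS call may write (assumed value) */
#define LOGSIZE (MAXOPBLOCKS * 3)      /* max data blocks in the on-disk log (assumed value) */

/* Returns 1 if one more system call may join the current transaction. */
int can_admit(int logged, int outstanding, int committing)
{
    assert(logged >= 0 && outstanding >= 0);
    if (committing)
        return 0;                      /* begin_op sleeps while a commit runs */
    return logged + (outstanding + 1) * MAXOPBLOCKS <= LOGSIZE;
}
```

Under these values at most three calls can be in flight at once, and fewer if earlier calls in the same transaction have already logged blocks.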

- log_write: checks that the call is legal -> checks whether the block is already in the log (log absorption, so repeated writes to one block do not consume extra log blocks) -> records the block number and, for a new block, pins the buffer and increments the count

log_write (kernel/log.c):

    // Caller has modified b->data and is done with the buffer.
    // Record the block number and pin in the cache by increasing refcnt.
    // commit()/write_log() will do the disk write.
    //
    // log_write() replaces bwrite(); a typical use is:
    //   bp = bread(...)
    //   modify bp->data[]
    //   log_write(bp)
    //   brelse(bp)
    void
    log_write(struct buf *b)
    {
      int i;

      if (log.lh.n >= LOGSIZE || log.lh.n >= log.size - 1)
        panic("too big a transaction");
      if (log.outstanding < 1)
        panic("log_write outside of trans");

      acquire(&log.lock);
      for (i = 0; i < log.lh.n; i++) {
        if (log.lh.block[i] == b->blockno)   // log absorption
          break;
      }
      log.lh.block[i] = b->blockno;
      if (i == log.lh.n) {  // Add new block to log?
        bpin(b);
        log.lh.n++;
      }
      release(&log.lock);
    }
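Log absorption can be isolated from the locking and buffer pinning. The sketch below keeps only the header bookkeeping; `log_record` is a made-up name, and it returns -1 when the log is full where the kernel would panic.

```c
#include <assert.h>

#define LOGSIZE 30                     /* assumed value, as in kernel/param.h */

struct logheader {
    int n;
    int block[LOGSIZE];
};

/* Records blockno in the header and returns the log slot it occupies,
 * or -1 if the log is full. A block that is already logged keeps its slot. */
int log_record(struct logheader *lh, int blockno)
{
    assert(lh != 0);
    for (int i = 0; i < lh->n; i++) {
        if (lh->block[i] == blockno)
            return i;                  /* absorbed: no new slot consumed */
    }
    if (lh->n >= LOGSIZE)
        return -1;
    lh->block[lh->n] = blockno;
    return lh->n++;                    /* slot index, then grow the log */
}
```

Writing the same bitmap or inode block many times inside one transaction therefore costs a single log slot.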

- end_op: called when a system call finishes. It decrements the outstanding count; if the count reaches 0 it calls commit, otherwise it wakes up callers that may be waiting in begin_op for log space

end_op (kernel/log.c):

    // called at the end of each FS system call.
    // commits if this was the last outstanding operation.
    void
    end_op(void)
    {
      int do_commit = 0;

      acquire(&log.lock);
      log.outstanding -= 1;
      if(log.committing)
        panic("log.committing");
      if(log.outstanding == 0){
        do_commit = 1;
        log.committing = 1;
      } else {
        // begin_op() may be waiting for log space,
        // and decrementing log.outstanding has decreased
        // the amount of reserved space.
        wakeup(&log);
      }
      release(&log.lock);

      if(do_commit){
        // call commit w/o holding locks, since not allowed
        // to sleep with locks.
        commit();
        acquire(&log.lock);
        log.committing = 0;
        wakeup(&log);
        release(&log.lock);
      }
    }

- commit: performs the commit: write the modified buffers to the on-disk log -> write the logheader (the commit point) -> install the blocks to their home locations -> clear the log

commit (kernel/log.c):

    static void
    commit()
    {
      if (log.lh.n > 0) {
        write_log();      // Write modified blocks from cache to log
        write_head();     // Write header to disk -- the real commit
        install_trans(0); // Now install writes to home locations
        log.lh.n = 0;
        write_head();     // Erase the transaction from the log
      }
    }

- Steps of a commit: write_log -> write_head -> install_trans -> write_head

- write_log: copies the modified buffers from memory to the log area on disk

write_log (kernel/log.c):

    // Copy modified blocks from cache to log.
    static void
    write_log(void)
    {
      int tail;

      for (tail = 0; tail < log.lh.n; tail++) {
        struct buf *to = bread(log.dev, log.start+tail+1);     // log block; +1 skips the header block
        struct buf *from = bread(log.dev, log.lh.block[tail]); // cache block
        memmove(to->data, from->data, BSIZE);                  // copy into the log buffer
        bwrite(to);  // write the log
        brelse(from);
        brelse(to);
      }
    }

- write_head: writes the logheader to disk (once this completes, the transaction can be recovered after a crash)

write_head (kernel/log.c):

    // Write in-memory log header to disk.
    // This is the true point at which the
    // current transaction commits.
    static void
    write_head(void)
    {
      struct buf *buf = bread(log.dev, log.start);             // read the header block
      struct logheader *hb = (struct logheader *) (buf->data); // view it as a logheader
      int i;
      hb->n = log.lh.n;                   // update the on-disk count
      for (i = 0; i < log.lh.n; i++) {
        hb->block[i] = log.lh.block[i];   // update the on-disk block numbers
      }
      bwrite(buf);                        // write the header to disk
      brelse(buf);                        // release the buffer
    }

- install_trans: reads each logged block and writes it to its home location on disk

install_trans (kernel/log.c):

    // Copy committed blocks from log to their home location
    static void
    install_trans(int recovering)
    {
      int tail;

      for (tail = 0; tail < log.lh.n; tail++) {
        struct buf *lbuf = bread(log.dev, log.start+tail+1);   // read log block
        struct buf *dbuf = bread(log.dev, log.lh.block[tail]); // read dst
        memmove(dbuf->data, lbuf->data, BSIZE);  // copy block to dst
        bwrite(dbuf);  // write dst to disk
        if(recovering == 0)
          bunpin(dbuf);  // dbuf may be recycled again
        brelse(lbuf);
        brelse(dbuf);
      }
    }

- Last step: set the logheader count to 0 and write it back to disk (freeing the log). This must happen before end_op lets the next transaction start, that is, the old logged blocks are released before a new transaction is processed

- Crash recovery flow: init -> fsinit initializes the file system -> initlog initializes the log

- The first process initializes the file system by calling fsinit

forkret (kernel/proc.c):

    // A fork child's very first scheduling by scheduler()
    // will swtch to forkret.
    void
    forkret(void)
    {
      static int first = 1;

      // Still holding p->lock from scheduler.
      release(&myproc()->lock);

      if (first) {
        // File system initialization must be run in the context of a
        // regular process (e.g., because it calls sleep), and thus cannot
        // be run from main().
        first = 0;
        fsinit(ROOTDEV);   // initialize the file system
      }

      usertrapret();
    }

fsinit (kernel/fs.c):

    // Init fs
    void
    fsinit(int dev) {
      readsb(dev, &sb);
      if(sb.magic != FSMAGIC)
        panic("invalid file system");
      initlog(dev, &sb);   // initialize the log
    }

- initlog: reads the log parameters from the superblock -> calls recover_from_log

initlog (kernel/log.c):

    void
    initlog(int dev, struct superblock *sb)
    {
      if (sizeof(struct logheader) >= BSIZE)
        panic("initlog: too big logheader");

      initlock(&log.lock, "log");   // initialize the log lock
      log.start = sb->logstart;     // take the log location from the superblock
      log.size = sb->nlog;
      log.dev = dev;
      recover_from_log();
    }

- recover_from_log: reads the on-disk logheader with read_head, then calls install_trans and write_head, which is the second half of commit

recover_from_log (kernel/log.c):

    static void
    recover_from_log(void)
    {
      read_head();
      install_trans(1); // if committed, copy from log to disk
      log.lh.n = 0;
      write_head(); // clear the log
    }

read_head (kernel/log.c):

    // Read the log header from disk into the in-memory log header
    static void
    read_head(void)
    {
      struct buf *buf = bread(log.dev, log.start);
      struct logheader *lh = (struct logheader *) (buf->data);   // view the block as a logheader
      int i;
      log.lh.n = lh->n;
      for (i = 0; i < log.lh.n; i++) {
        log.lh.block[i] = lh->block[i];   // copy the block numbers
      }
      brelse(buf);
    }
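To see why the header write is the commit point, the commit and recovery steps can be replayed against an in-memory array standing in for the disk. This is a toy sketch under several assumptions: a 32-byte block size, a three-block log starting at block 2, no buffer cache, and made-up helper names (`log_block`, `commit_point`, `crash`, `recover`).

```c
#include <assert.h>
#include <string.h>

#define BSIZE    32   /* toy block size, chosen for the sketch */
#define NBLOCKS  16
#define LOGSTART 2    /* header block; logged copies follow it */
#define LOGSIZE  3

struct logheader {
    int n;
    int block[LOGSIZE];
};

unsigned char disk[NBLOCKS][BSIZE];   /* the simulated disk */
static struct logheader mem;          /* in-memory header, lost on a crash */

static void write_head(void) { memcpy(disk[LOGSTART], &mem, sizeof mem); }
static void read_head(void)  { memcpy(&mem, disk[LOGSTART], sizeof mem); }

static void install_trans(void)
{
    for (int t = 0; t < mem.n; t++)
        memcpy(disk[mem.block[t]], disk[LOGSTART + 1 + t], BSIZE);
}

/* Like write_log for one block: put the new contents in the next log slot. */
void log_block(int blockno, const char *text)
{
    assert(mem.n < LOGSIZE && strlen(text) < BSIZE);
    unsigned char *slot = disk[LOGSTART + 1 + mem.n];
    memset(slot, 0, BSIZE);
    memcpy(slot, text, strlen(text));
    mem.block[mem.n++] = blockno;
}

void commit_point(void) { write_head(); }               /* header on disk = committed */
void crash(void)        { memset(&mem, 0, sizeof mem); } /* memory is lost, disk survives */

void recover(void)
{
    read_head();
    install_trans();   /* no-op when the on-disk count is 0 */
    mem.n = 0;
    write_head();      /* clear the log */
}
```

A crash before `commit_point` leaves the on-disk count at 0, so `recover` installs nothing; a crash after it replays the logged block, and running `recover` a second time changes nothing.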

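- Example use of the log: filewrite breaks a large write into separate transactions of only a few sectors each, so that the log cannot overflow;

filewrite (kernel/file.c):

    begin_op();
    ...
    ilock(f->ip);
    r = writei(f->ip, ...);
    iunlock(f->ip);
    ...
    end_op();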

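7. Code: block allocator

- Role: maintains the free-block bitmap

- Functions (external interface): balloc allocates a disk block; bfree frees a block. This resembles a memory allocator

- balloc: allocates a new disk block: iterate over the bitmap blocks -> scan the bits for a free block -> mark it in use and return the block number (note: synchronization comes from bread, because the buffer cache lets only one process use a bitmap block at a time)

balloc (kernel/fs.c):

    #define BSIZE 1024  // block size

    // Bitmap bits per block
    #define BPB           (BSIZE*8)

    // Block of free map containing bit for block b
    #define BBLOCK(b, sb) ((b)/BPB + sb.bmapstart)

    // Blocks.
    ...
    // Allocate a zeroed disk block.
    static uint
    balloc(uint dev)
    {
      int b, bi, m;
      struct buf *bp;

      bp = 0;
      for(b = 0; b < sb.size; b += BPB){          // one iteration per bitmap block
        bp = bread(dev, BBLOCK(b, sb));           // read the bitmap block
        for(bi = 0; bi < BPB && b + bi < sb.size; bi++){   // scan its bits
          m = 1 << (bi % 8);                      // mask for this bit within its byte
          if((bp->data[bi/8] & m) == 0){  // Is block free?
            bp->data[bi/8] |= m;  // Mark block in use.
            log_write(bp);
            brelse(bp);
            bzero(dev, b + bi);                   // zero the new block
            return b + bi;                        // return its block number
          }
        }
        brelse(bp);
      }
      panic("balloc: out of blocks");
    }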

- bfree: finds the block's bit in the bitmap -> clears it to 0

bfree (kernel/fs.c):

    // Free a disk block.
    static void
    bfree(int dev, uint b)
    {
      struct buf *bp;
      int bi, m;

      bp = bread(dev, BBLOCK(b, sb));
      bi = b % BPB;              // bit position within the bitmap block
      m = 1 << (bi % 8);
      if((bp->data[bi/8] & m) == 0)
        panic("freeing free block");
      bp->data[bi/8] &= ~m;      // clear the bit
      log_write(bp);
      brelse(bp);
    }
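The bit arithmetic shared by balloc and bfree can be exercised on a plain byte array. This is a minimal user-space sketch with a made-up 64-block bitmap; `bit_alloc` and `bit_free` are illustrative names, and there is no logging or buffer cache.

```c
#include <assert.h>

#define NBLOCKS 64                            /* toy disk size for the sketch */

static unsigned char bitmap[NBLOCKS / 8];     /* one bit per block, 0 = free */

/* Returns the lowest free block number and marks it in use, or -1 if none. */
int bit_alloc(void)
{
    for (int b = 0; b < NBLOCKS; b++) {
        int m = 1 << (b % 8);                 /* mask for this bit within its byte */
        if ((bitmap[b / 8] & m) == 0) {
            bitmap[b / 8] |= m;
            return b;
        }
    }
    return -1;
}

/* Clears the bit for block b; freeing a free block is treated as a bug. */
void bit_free(int b)
{
    assert(b >= 0 && b < NBLOCKS);
    int m = 1 << (b % 8);
    assert(bitmap[b / 8] & m);
    bitmap[b / 8] &= ~m;
}
```

Because the scan always starts at bit 0, a freed low-numbered block is the first one handed out again.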

- Note: balloc and bfree must be called inside a transaction

8. Inode layer

- inode: the term covers an on-disk data structure and an in-memory data structure. On disk: file size, locations of the data blocks, and so on; in memory: a copy of the on-disk inode plus information the kernel needs;

- On-disk inode (dinode): fixed size; contains the file type, the link count (number of hard links), the file size, and the locations of the data blocks

struct dinode (kernel/fs.h):

    #define NDIRECT 12
    ...
    // On-disk inode structure
    struct dinode {
      short type;           // File type
      short major;          // Major device number (T_DEVICE only)
      short minor;          // Minor device number (T_DEVICE only)
      short nlink;          // Number of links to inode in file system
      uint size;            // Size of file (bytes)
      uint addrs[NDIRECT+1];   // Data block addresses
    };

- In-memory inode: a copy of the dinode in memory, plus device + inode number + reference count + lock + valid flag

struct inode (kernel/file.h):

    // in-memory copy of an inode
    struct inode {
      uint dev;           // Device number
      uint inum;          // Inode number
      int ref;            // Reference count
      struct sleeplock lock; // protects everything below here
      int valid;          // inode has been read from disk?

      short type;         // copy of disk inode
      short major;
      short minor;
      short nlink;
      uint size;
      uint addrs[NDIRECT+1];
    };

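- Locking in the inode layer: the spin-lock icache.lock protects the invariants that an inode appears in the cache at most once and that ref counts the in-memory pointers to it; the sleep-lock inode.lock gives exclusive access to the fields of the cached inode

icache (kernel/fs.c):

    struct {
      struct spinlock lock;
      struct inode inode[NINODE];
    } icache;

- Note (ref versus nlink): ref counts references to the in-memory copy; nlink counts references within the file system (directory entries);

- iget: returns an inode pointer that stays valid until iput releases it; as long as a pointer to the inode exists, the inode is not deleted and its cache slot is not reused;

Note: iget does not lock the inode it returns, so many pointers to the same inode can exist and be used concurrently;

- ilock and iunlock: code must hold the inode's lock, taken with ilock and released with iunlock, while reading or writing the inode, which serializes access;

- Purpose of the icache: mainly to synchronize access to inodes by multiple processes. The icache is write-through, so a modified inode must be written to disk immediately;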

9. Code: Inodes

- ialloc: creates an inode: scan the on-disk inodes for a free one -> set its type field -> write it through the log -> return an inode pointer via iget, which enters it in the icache (synchronization relies on the buffer cache layer)

ialloc (kernel/fs.c):

    // Inodes per block.
    // IPB = 16
    #define IPB           (BSIZE / sizeof(struct dinode))

    // Block containing inode i
    #define IBLOCK(i, sb)     ((i) / IPB + sb.inodestart)
    ...
    // Allocate an inode on device dev.
    // Mark it as allocated by giving it type type.
    // Returns an unlocked but allocated and referenced inode.
    struct inode*
    ialloc(uint dev, short type)
    {
      int inum;
      struct buf *bp;
      struct dinode *dip;

      for(inum = 1; inum < sb.ninodes; inum++){     // look for a free inode
        bp = bread(dev, IBLOCK(inum, sb));          // read the block that holds it
        dip = (struct dinode*)bp->data + inum%IPB;
        if(dip->type == 0){  // a free inode
          memset(dip, 0, sizeof(*dip));             // clear it
          dip->type = type;                         // claim it by setting the type
          log_write(bp);   // mark it allocated on the disk
          brelse(bp);                               // release the buffer
          return iget(dev, inum);
        }
        brelse(bp);
      }
      panic("ialloc: no inodes");
    }
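The IPB and IBLOCK macros are plain integer arithmetic and can be checked in user space. The sketch below assumes 4-byte `unsigned int` and 2-byte `short`, mirrors the dinode layout with fixed-width types, and takes `inodestart` as a parameter instead of reading a superblock; `inode_block` and `inode_slot` are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

#define BSIZE   1024
#define NDIRECT 12

/* Same field layout as the on-disk inode, with fixed-width types. */
struct dinode {
    short    type, major, minor, nlink;
    uint32_t size;
    uint32_t addrs[NDIRECT + 1];
};

#define IPB (BSIZE / sizeof(struct dinode))   /* inodes per block */

/* Disk block that holds inode inum, given where the inode area starts. */
unsigned inode_block(unsigned inum, unsigned inodestart)
{
    return inum / IPB + inodestart;
}

/* Index of inode inum within that block. */
unsigned inode_slot(unsigned inum)
{
    return inum % IPB;
}
```

With a 64-byte dinode, 16 inodes fit in each 1 KB block, so inode 17 is the second entry of the second inode block.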

- iget: looks up the in-memory copy of an inode in the icache by device number and inode number; if there is none, it takes an empty slot (recycling an unreferenced one)

Note: the slot returned in that case has no content yet; ilock must be called to load the inode from disk

iget (kernel/fs.c):

    // Find the inode with number inum on device dev
    // and return the in-memory copy. Does not lock
    // the inode and does not read it from disk.
    static struct inode*
    iget(uint dev, uint inum)
    {
      struct inode *ip, *empty;

      acquire(&icache.lock);

      // Is the inode already cached?
      empty = 0;
      for(ip = &icache.inode[0]; ip < &icache.inode[NINODE]; ip++){
        if(ip->ref > 0 && ip->dev == dev && ip->inum == inum){   // already cached
          ip->ref++;
          release(&icache.lock);
          return ip;
        }
        if(empty == 0 && ip->ref == 0)    // Remember empty slot.
          empty = ip;
      }

      // Recycle an inode cache entry.
      if(empty == 0)
        panic("iget: no inodes");

      ip = empty;
      ip->dev = dev;
      ip->inum = inum;
      ip->ref = 1;
      ip->valid = 0;   // contents are not loaded yet
      release(&icache.lock);

      return ip;
    }

- ilock: locks the inode; if the slot has no content yet, it reads the inode from disk into the slot;

ilock (kernel/fs.c):

    // Lock the given inode.
    // Reads the inode from disk if necessary.
    void
    ilock(struct inode *ip)
    {
      struct buf *bp;
      struct dinode *dip;

      if(ip == 0 || ip->ref < 1)
        panic("ilock");

      acquiresleep(&ip->lock);   // take the sleep-lock

      if(ip->valid == 0){
        bp = bread(ip->dev, IBLOCK(ip->inum, sb));
        dip = (struct dinode*)bp->data + ip->inum%IPB;
        ip->type = dip->type;
        ip->major = dip->major;
        ip->minor = dip->minor;
        ip->nlink = dip->nlink;
        ip->size = dip->size;
        memmove(ip->addrs, dip->addrs, sizeof(ip->addrs));   // fill in the slot
        brelse(bp);              // release the buffer
        ip->valid = 1;           // the slot now holds data
        if(ip->type == 0)
          panic("ilock: no type");
      }
    }

- iunlock: releases the inode's lock

iunlock (kernel/fs.c):

    // Unlock the given inode.
    void
    iunlock(struct inode *ip)
    {
      if(ip == 0 || !holdingsleep(&ip->lock) || ip->ref < 1)   // sanity checks
        panic("iunlock");

      releasesleep(&ip->lock);   // release the sleep-lock
    }

- iput: drops a reference to an inode. If this was the last reference and the hard-link count is 0 -> call itrunc to free the data blocks on disk -> use iupdate to write the inode to disk

**Note:** ialloc can pick this inode only after iupdate has written type 0 to disk, and ip->valid is cleared only after that write, so the race between iput and ialloc is harmless; the same reasoning applies to ip->ref. In short, the flags are changed only after the operation is complete

iput (kernel/fs.c):

    // Drop a reference to an in-memory inode.
    // If that was the last reference, the inode cache entry can
    // be recycled.
    // If that was the last reference and the inode has no links
    // to it, free the inode (and its content) on disk.
    // All calls to iput() must be inside a transaction in
    // case it has to free the inode.
    void
    iput(struct inode *ip)
    {
      acquire(&icache.lock);

      if(ip->ref == 1 && ip->valid && ip->nlink == 0){
        // inode has no links and no other references: truncate and free.

        // ip->ref == 1 means no other process can have ip locked,
        // so this acquiresleep() won't block (or deadlock).
        acquiresleep(&ip->lock);

        release(&icache.lock);   // swap the spin-lock for the sleep-lock

        itrunc(ip);              // free the data blocks
        ip->type = 0;            // mark the inode free
        iupdate(ip);             // write the inode to disk
        ip->valid = 0;           // the cached copy is no longer valid

        releasesleep(&ip->lock);

        acquire(&icache.lock);
      }

      ip->ref--;                 // drop the reference
      release(&icache.lock);
    }

- itrunc: see the next section

- iupdate: writes the inode back to disk, using the log so that the write-back is atomic

iupdate (kernel/fs.c):

    // Copy a modified in-memory inode to disk.
    // Must be called after every change to an ip->xxx field
    // that lives on disk, since i-node cache is write-through.
    // Caller must hold ip->lock.
    void
    iupdate(struct inode *ip)
    {
      struct buf *bp;
      struct dinode *dip;

      bp = bread(ip->dev, IBLOCK(ip->inum, sb));
      dip = (struct dinode*)bp->data + ip->inum%IPB;   // locate the on-disk inode in the buffer
      dip->type = ip->type;
      dip->major = ip->major;
      dip->minor = ip->minor;
      dip->nlink = ip->nlink;
      dip->size = ip->size;
      memmove(dip->addrs, ip->addrs, sizeof(ip->addrs));   // copy the fields into the buffer
      log_write(bp);   // the log writes the buffer to disk
      brelse(bp);      // release the buffer
    }

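- Note: iput may write to disk, so any system call that uses the file system, even a read-only one, can cause disk I/O and must run inside a transaction. When nlink drops to 0 while the file is still open, iput does not free the file right away. This can leak disk space: if a crash happens before the file is freed, the blocks stay marked allocated on disk but no directory entry points to them any more.

- **Solutions:** on recovery, scan the whole file system for allocated files that have no directory entry; or record in the superblock the inodes whose hard-link count is 0 but whose reference count is not, and on recovery walk that list and free them (similar to how zombie processes are reaped). xv6 implements neither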

10. Code: Inode content

- The addrs array in an inode: 12 direct blocks + 1 singly indirect block holding 256 block numbers = 12 + 256 = 268 blocks

- bmap: given an inode pointer and a logical block number, returns the disk block number: look in the direct blocks -> look in the indirect block -> allocate the block if it does not exist yet (the indirect block first if needed, then the data block)

Note: changes to addrs reach the disk through iupdate;

bmap (kernel/fs.c):

    // Inode content
    //
    // The content (data) associated with each inode is stored
    // in blocks on the disk. The first NDIRECT block numbers
    // are listed in ip->addrs[].  The next NINDIRECT blocks are
    // listed in block ip->addrs[NDIRECT].

    // Return the disk block address of the nth block in inode ip.
    // If there is no such block, bmap allocates one.
    static uint
    bmap(struct inode *ip, uint bn)
    {
      uint addr, *a;
      struct buf *bp;

      // direct blocks: look up, allocating on demand
      if(bn < NDIRECT){
        if((addr = ip->addrs[bn]) == 0)
          ip->addrs[bn] = addr = balloc(ip->dev);
        return addr;
      }
      bn -= NDIRECT;

      // indirect block: look up, allocating on demand
      if(bn < NINDIRECT){
        // Load indirect block, allocating if necessary.
        if((addr = ip->addrs[NDIRECT]) == 0)
          ip->addrs[NDIRECT] = addr = balloc(ip->dev);   // allocate the indirect block
        bp = bread(ip->dev, addr);
        a = (uint*)bp->data;                             // view it as an array of block numbers
        if((addr = a[bn]) == 0){                         // allocate the data block
          a[bn] = addr = balloc(ip->dev);
          log_write(bp);                                 // log the updated indirect block
        }
        brelse(bp);
        return addr;
      }

      panic("bmap: out of range");
    }
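The 12 + 256 = 268 limit and the direct/indirect split in bmap reduce to a small amount of arithmetic. A sketch assuming 4-byte block numbers; the `locate_block` function and the `level` enum are made up for illustration and perform no allocation.

```c
#include <assert.h>

#define BSIZE     1024
#define NDIRECT   12
#define NINDIRECT (BSIZE / sizeof(unsigned int))   /* block numbers per indirect block */
#define MAXFILE   (NDIRECT + NINDIRECT)            /* max file size in blocks */

enum level { DIRECT = 0, INDIRECT = 1, OUT_OF_RANGE = -1 };

/* Classifies logical block bn and stores its index within that level in *idx. */
enum level locate_block(unsigned bn, unsigned *idx)
{
    assert(idx != 0);
    if (bn < NDIRECT) {
        *idx = bn;                 /* index into ip->addrs[] */
        return DIRECT;
    }
    bn -= NDIRECT;
    if (bn < NINDIRECT) {
        *idx = bn;                 /* index into the indirect block */
        return INDIRECT;
    }
    return OUT_OF_RANGE;           /* bmap panics here */
}
```

With 1 KB blocks this caps a file at 268 KB, which is the limit the lab's large-file exercise raises by adding a doubly indirect block.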

- itrunc: frees the direct and indirect blocks of the given inode and truncates its size and addresses to 0

itrunc (kernel/fs.c):

    // Truncate inode (discard contents).
    // Caller must hold ip->lock.
    void
    itrunc(struct inode *ip)
    {
      int i, j;
      struct buf *bp;
      uint *a;

      // free the direct blocks
      for(i = 0; i < NDIRECT; i++){
        if(ip->addrs[i]){
          bfree(ip->dev, ip->addrs[i]);
          ip->addrs[i] = 0;
        }
      }

      // free the blocks listed in the indirect block, then the indirect block itself
      if(ip->addrs[NDIRECT]){
        bp = bread(ip->dev, ip->addrs[NDIRECT]);
        a = (uint*)bp->data;
        for(j = 0; j < NINDIRECT; j++){
          if(a[j])
            bfree(ip->dev, a[j]);           // free a data block
        }
        brelse(bp);
        bfree(ip->dev, ip->addrs[NDIRECT]); // free the indirect block
        ip->addrs[NDIRECT] = 0;
      }

      ip->size = 0;   // the file is now empty
      iupdate(ip);
    }

- Interfaces built on bmap: readi, writei:

- readi: reads data: validate the request, then loop over the data blocks and copy each piece to the destination address

readi (kernel/fs.c):

    // Read data from inode.
    // Caller must hold ip->lock.
    // If user_dst==1, then dst is a user virtual address;
    // otherwise, dst is a kernel address.
    int
    readi(struct inode *ip, int user_dst, uint64 dst, uint off, uint n)
    {
      uint tot, m;
      struct buf *bp;

      if(off > ip->size || off + n < off)   // validate the request
        return 0;
      if(off + n > ip->size)
        n = ip->size - off;

      // m is assigned in the loop body before the increment expression uses it
      for(tot=0; tot<n; tot+=m, off+=m, dst+=m){
        bp = bread(ip->dev, bmap(ip, off/BSIZE));
        m = min(n - tot, BSIZE - off%BSIZE);
        // copy the data to the destination (user or kernel) address
        if(either_copyout(user_dst, dst, bp->data + (off % BSIZE), m) == -1) {
          brelse(bp);
          tot = -1;
          break;
        }
        brelse(bp);
      }
      return tot;   // number of bytes read
    }
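The expression `min(n - tot, BSIZE - off%BSIZE)` is what keeps each copy inside a single block. The sketch below runs only that loop arithmetic and records the chunk sizes; `split_chunks` and `min_u` are made-up helper names, and no disk access is involved.

```c
#include <assert.h>

#define BSIZE 1024

static unsigned min_u(unsigned a, unsigned b) { return a < b ? a : b; }

/* Stores in lens[] the per-block chunk sizes for transferring n bytes
 * starting at byte offset off; returns the number of chunks (at most max). */
int split_chunks(unsigned off, unsigned n, unsigned *lens, int max)
{
    assert(lens != 0 && max >= 0);
    int k = 0;
    unsigned tot, m;
    for (tot = 0; tot < n && k < max; tot += m, off += m) {
        m = min_u(n - tot, BSIZE - off % BSIZE);   /* stop at the block boundary */
        lens[k++] = m;
    }
    return k;
}
```

For example, 2000 bytes starting at offset 1000 are transferred as 24, 1024, and 952 bytes: the rest of the first block, one full block, and the remainder.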

- writei: writes data. A write may extend past the current end of the file and grow it. The data is not written to disk directly but copied into the buffer cache; after the file data is written, iupdate is called to update the inode on disk;

writei (kernel/fs.c):

    // Write data to inode.
    // Caller must hold ip->lock.
    // If user_src==1, then src is a user virtual address;
    // otherwise, src is a kernel address.
    // Returns the number of bytes successfully written.
    // If the return value is less than the requested n,
    // there was an error of some kind.
    int
    writei(struct inode *ip, int user_src, uint64 src, uint off, uint n)
    {
      uint tot, m;
      struct buf *bp;

      if(off > ip->size || off + n < off)   // validate the request
        return -1;
      if(off + n > MAXFILE*BSIZE)
        return -1;

      for(tot=0; tot<n; tot+=m, off+=m, src+=m){   // write block by block
        bp = bread(ip->dev, bmap(ip, off/BSIZE));
        m = min(n - tot, BSIZE - off%BSIZE);
        if(either_copyin(bp->data + (off % BSIZE), user_src, src, m) == -1) {   // copy into the buffer
          brelse(bp);
          break;
        }
        log_write(bp);   // the log writes the buffer to disk
        brelse(bp);
      }

      if(off > ip->size)
        ip->size = off;  // the file grew

      // write the i-node back to disk even if the size didn't change
      // because the loop above might have called bmap() and added a new
      // block to ip->addrs[].
      iupdate(ip);

      return tot;        // number of bytes written
    }

- stati: copies inode metadata into an in-memory stat structure, which gives upper layers (and ultimately user programs) access to the inode's metadata;

stati (kernel/fs.c):

    // Copy stat information from inode.
    // Caller must hold ip->lock.
    void
    stati(struct inode *ip, struct stat *st)
    {
      st->dev = ip->dev;
      st->ino = ip->inum;
      st->type = ip->type;
      st->nlink = ip->nlink;
      st->size = ip->size;
    }

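11. Code: directory layer

- Core idea: a directory is a file of type directory whose data is a sequence of directory entries. Each entry holds a human-readable name plus an inode number; an inode number of 0 marks a free entry

struct dirent (kernel/fs.h):

    // Directory is a file containing a sequence of dirent structures.
    #define DIRSIZ 14   // maximum name length

    struct dirent {
      ushort inum;
      char name[DIRSIZ];
    };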

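- dirlookup: given a directory inode pointer and a name, searches for the matching entry -> if found, returns the inode pointer and sets the entry's byte offset

Note: the inode obtained from iget is returned unlocked to prevent deadlock during path name lookup. The caller has already locked the current directory dp, so locking the result inside dirlookup could try to lock dp again when the name is ".". Instead, the caller unlocks dp after dirlookup returns and then locks the new inode, so it holds only one lock at a time;

dirlookup (kernel/fs.c):

    // Look for a directory entry in a directory.
    // If found, set *poff to byte offset of entry.
    struct inode*
    dirlookup(struct inode *dp, char *name, uint *poff)
    {
      uint off, inum;
      struct dirent de;

      if(dp->type != T_DIR)   // must be a directory
        panic("dirlookup not DIR");

      for(off = 0; off < dp->size; off += sizeof(de)){
        if(readi(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))
          panic("dirlookup read");
        if(de.inum == 0)      // free entry
          continue;
        if(namecmp(name, de.name) == 0){
          // entry matches path element
          if(poff)
            *poff = off;
          inum = de.inum;
          return iget(dp->dev, inum);   // return the inode, unlocked
        }
      }

      return 0;
    }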

- dirlink: given a name and an inode number, creates a new directory entry (a hard link) in the given directory dp

dirlink (kernel/fs.c):

    // Write a new directory entry (name, inum) into the directory dp.
    int
    dirlink(struct inode *dp, char *name, uint inum)
    {
      int off;
      struct dirent de;
      struct inode *ip;

      // Check that name is not present.
      if((ip = dirlookup(dp, name, 0)) != 0){
        iput(ip);
        return -1;
      }

      // Look for an empty dirent.
      for(off = 0; off < dp->size; off += sizeof(de)){
        if(readi(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))
          panic("dirlink read");
        if(de.inum == 0)
          break;
      }

      // Write the name and inode number. If no free entry was found,
      // off == dp->size and the write grows the directory.
      strncpy(de.name, name, DIRSIZ);
      de.inum = inum;
      if(writei(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))
        panic("dirlink");

      return 0;
    }

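Refined skeleton, with the inode layer added to the logging pattern:

    begin_op();
    // ...
    ip = iget(dev, inum);   // get the inode
    ilock(ip);              // lock it

    // the next four lines are what r = writei(ip, ...) does internally
    bp = bread(...);        // read out bp
    bp->data[...] = ...;    // modify bp->data[], i.e. modify ip's content
    log_write(bp);          // put it in the log
    brelse(bp);             // release the buffer

    iupdate(ip);            // write the inode to disk
    // ...
    iunlock(ip);            // unlock
    iput(ip);               // drop the reference (may free the inode on disk)
    // ...
    end_op();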

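12. Code: path names

- Path name lookup: at its core a sequence of dirlookup calls, one for each path component

- Lookup functions: namei and nameiparent; both take a complete path name as input and return the relevant inode;

namei: returns the inode of the last element of the path;

nameiparent: returns the inode of the parent directory of the last element, and copies the last element into name;

namei, nameiparent (kernel/fs.c):

    struct inode*
    namei(char *path)
    {
      char name[DIRSIZ];
      return namex(path, 0, name);
    }

    struct inode*
    nameiparent(char *path, char *name)
    {
      return namex(path, 1, name);
    }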

- skipelem: helper that namex uses to parse a path: it extracts the next element of the path, copies it into name, and returns the rest of the path

skipelem (kernel/fs.c):

    // Copy the next path element from path into name.
    // Return a pointer to the element following the copied one.
    // The returned path has no leading slashes,
    // so the caller can check *path=='\0' to see if the name is the last one.
    // If no name to remove, return 0.
    //
    // Examples:
    //   skipelem("a/bb/c", name) = "bb/c", setting name = "a"
    //   skipelem("///a//bb", name) = "bb", setting name = "a"
    //   skipelem("a", name) = "", setting name = "a"
    //   skipelem("", name) = skipelem("////", name) = 0
    //
    static char*
    skipelem(char *path, char *name)
    {
      char *s;
      int len;

      while(*path == '/')     // skip leading slashes
        path++;
      if(*path == 0)          // nothing left to parse
        return 0;
      s = path;               // start of the current element
      while(*path != '/' && *path != 0)   // advance to the end of the element
        path++;
      len = path - s;
      if(len >= DIRSIZ)       // copy the element, truncated to DIRSIZ bytes
        memmove(name, s, DIRSIZ);
      else {
        memmove(name, s, len);
        name[len] = 0;
      }
      while(*path == '/')     // skip the slashes before the next element
        path++;
      return path;            // the rest of the path
    }
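The examples in the comment above can be checked directly with a user-space copy of the function. This sketch differs from the kernel version only in using `const`-qualified pointers and `<string.h>`.

```c
#include <assert.h>
#include <string.h>

#define DIRSIZ 14

/* User-space copy of skipelem: copies the next path element into name and
 * returns the remainder of the path, or NULL if there is no element left. */
const char *skipelem(const char *path, char *name)
{
    assert(path != NULL && name != NULL);
    const char *s;
    size_t len;

    while (*path == '/')                 /* skip leading slashes */
        path++;
    if (*path == 0)
        return NULL;
    s = path;
    while (*path != '/' && *path != 0)   /* find the end of the element */
        path++;
    len = (size_t)(path - s);
    if (len >= DIRSIZ) {
        memmove(name, s, DIRSIZ);        /* truncated, not NUL-terminated */
    } else {
        memmove(name, s, len);
        name[len] = 0;
    }
    while (*path == '/')                 /* skip slashes before the next element */
        path++;
    return path;
}
```

Calling it repeatedly on its own return value walks a path one component at a time, which is how namex uses it.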

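- namex: performs the lookup:

Choose the starting inode (root or current directory) -> parse the path (the next element goes into name and path advances) -> lock ip and check that it is a directory -> if called for nameiparent and this is the last element, stop one level early -> call dirlookup to find the entry -> unlock ip to avoid deadlock -> set ip to the inode just found;

Note: only the directory inode currently being searched is locked, so lookups in different directories can proceed concurrently. The reference count taken by iget keeps an inode from being freed even if its directory is deleted during the lookup. And because namex always releases the lock on ip before taking the lock on next, looking up "." cannot deadlock;

namex (kernel/fs.c):

    // Look up and return the inode for a path name.
    // If parent != 0, return the inode for the parent and copy the final
    // path element into name, which must have room for DIRSIZ bytes.
    // Must be called inside a transaction since it calls iput().
    static struct inode*
    namex(char *path, int nameiparent, char *name)
    {
      struct inode *ip, *next;

      if(*path == '/')
        ip = iget(ROOTDEV, ROOTINO);   // start at the root directory
      else
        ip = idup(myproc()->cwd);      // start at the current directory

      // each iteration consumes one path element into name
      while((path = skipelem(path, name)) != 0){
        ilock(ip);                     // lock the current directory
        if(ip->type != T_DIR){         // it must be a directory
          iunlockput(ip);
          return 0;
        }
        if(nameiparent && *path == '\0'){
          // Stop one level early.
          iunlock(ip);
          return ip;                   // parent directory; name holds the last element
        }
        if((next = dirlookup(ip, name, 0)) == 0){   // look up the next element
          iunlockput(ip);
          return 0;                    // not found
        }
        iunlockput(ip);
        ip = next;                     // descend
      }
      if(nameiparent){
        iput(ip);
        return 0;                      // nameiparent failed
      }
      return ip;                       // the inode that was looked up
    }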

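13. File descriptor layer

- Core of the UNIX abstraction: everything is a file, including regular files, directories, the console, pipes, and so on;

- struct file: an open file, that is, the inode (or pipe) behind it plus descriptive state such as the offset; a process's open file table points to these structures

struct file:

    struct file {
      enum { FD_NONE, FD_PIPE, FD_INODE, FD_DEVICE } type;   // kind of file
      int ref;            // reference count
      char readable;      // open for reading?
      char writable;      // open for writing?
      struct pipe *pipe;  // FD_PIPE
      struct inode *ip;   // FD_INODE and FD_DEVICE
      uint off;           // FD_INODE
      short major;        // FD_DEVICE
    };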

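- open: each call opens a file and creates a new struct file. When several processes open the same file independently, they get different file instances (with separate offsets); the same file instance can appear several times in one process's table or in the tables of different processes (dup / fork); ref tracks the number of references to a single file instance;

- Related structures: ftable, ofile

- ftable: the global open file table; every open file lives in this table

ftable (kernel/file.c):

    struct {
      struct spinlock lock;
      struct file file[NFILE];
    } ftable;

- ofile: the per-process open file table, a field of struct proc

ofile (kernel/proc.h):

    struct proc {
      // ...
      struct file *ofile[NOFILE];  // Open files
      // ...
    };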

-

-

文件表和文件描述层相关函数: filealloc(分配文件)、filedup(创建重复引用)、fileclose(释放引用)、filestat(读取元数据)、fileread(读取数据)、filewrite(写入数据)、fdalloc

-

filealloc: 分配ft:扫描ftable,找到空闲槽位并返回新的引用;

filealloc kernel/file.c

// Allocate a file structure.
struct file*
filealloc(void)
{
  struct file *f;

  acquire(&ftable.lock);
  for(f = ftable.file; f < ftable.file + NFILE; f++){   // scan ftable for a free slot
    if(f->ref == 0){
      f->ref = 1;
      release(&ftable.lock);
      return f;
    }
  }
  release(&ftable.lock);
  return 0;
}

-

filedup: create another reference (alias) to an open file by incrementing its reference count;

filedup kernel/file.c

// Increment ref count for file f.
struct file*
filedup(struct file *f)
{
  acquire(&ftable.lock);
  if(f->ref < 1)        // sanity check: f must already be open
    panic("filedup");
  f->ref++;             // one more reference
  release(&ftable.lock);
  return f;
}

sys_dup kernel/sysfile.c

uint64
sys_dup(void)
{
  struct file *f;
  int fd;

  if(argfd(0, 0, &f) < 0)
    return -1;
  if((fd=fdalloc(f)) < 0)
    return -1;
  filedup(f);
  return fd;
}

-

fileclose: decrement the reference count, and release the underlying resource when it reaches 0

fileclose kernel/file.c

// Close file f.  (Decrement ref count, close when reaches 0.)
void
fileclose(struct file *f)
{
  struct file ff;

  acquire(&ftable.lock);
  if(f->ref < 1)          // sanity check
    panic("fileclose");
  if(--f->ref > 0){       // still referenced elsewhere: nothing more to do
    release(&ftable.lock);
    return;
  }
  ff = *f;                // keep a copy so the slot can be freed before the slow cleanup
  f->ref = 0;
  f->type = FD_NONE;      // mark the slot as free
  release(&ftable.lock);

  if(ff.type == FD_PIPE){                                 // release the pipe end
    pipeclose(ff.pipe, ff.writable);
  } else if(ff.type == FD_INODE || ff.type == FD_DEVICE){ // release the inode or device
    begin_op();
    iput(ff.ip);
    end_op();
  }
}

-

filestat: uses the stati interface of the inode layer to read the file's metadata

filestat kernel/file.c

// Get metadata about file f.
// addr is a user virtual address, pointing to a struct stat.
int
filestat(struct file *f, uint64 addr)
{
  struct proc *p = myproc();
  struct stat st;

  if(f->type == FD_INODE || f->type == FD_DEVICE){   // only inodes and devices have metadata
    ilock(f->ip);
    stati(f->ip, &st);
    iunlock(f->ip);
    if(copyout(p->pagetable, addr, (char *)&st, sizeof(st)) < 0)   // copy the result out to user space
      return -1;
    return 0;
  }
  return -1;
}

-

fileread: performs the read that matches the file type; it is the backend of the read system call

fileread kernel/file.c

// Read from file f.
// addr is a user virtual address.
int
fileread(struct file *f, uint64 addr, int n)
{
  int r = 0;

  if(f->readable == 0)               // not opened for reading
    return -1;

  if(f->type == FD_PIPE){            // pipe
    r = piperead(f->pipe, addr, n);
  } else if(f->type == FD_DEVICE){   // device
    if(f->major < 0 || f->major >= NDEV || !devsw[f->major].read)   // validate the device number
      return -1;
    r = devsw[f->major].read(1, addr, n);   // call the device driver
  } else if(f->type == FD_INODE){    // inode
    ilock(f->ip);                    // makes the read and the offset update atomic
    if((r = readi(f->ip, 1, addr, f->off, n)) > 0)
      f->off += r;
    iunlock(f->ip);
  } else {
    panic("fileread");
  }

  return r;                          // number of bytes read, or -1 on error
}

sys_read kernel/sysfile.c

uint64
sys_read(void)
{
  struct file *f;
  int n;
  uint64 p;

  if(argfd(0, 0, &f) < 0 || argint(2, &n) < 0 || argaddr(1, &p) < 0)
    return -1;
  return fileread(f, p, n);
}

-

filewrite: like fileread, it dispatches on the file type; it is the backend of the write system call

filewrite kernel/file.c

// Write to file f.
// addr is a user virtual address.
int
filewrite(struct file *f, uint64 addr, int n)
{
  int r, ret = 0;

  if(f->writable == 0)               // not opened for writing
    return -1;

  if(f->type == FD_PIPE){            // pipe
    ret = pipewrite(f->pipe, addr, n);
  } else if(f->type == FD_DEVICE){   // device
    if(f->major < 0 || f->major >= NDEV || !devsw[f->major].write)
      return -1;
    ret = devsw[f->major].write(1, addr, n);   // call the device driver
  } else if(f->type == FD_INODE){    // inode
    // write a few blocks at a time to avoid exceeding
    // the maximum log transaction size, including
    // i-node, indirect block, allocation blocks,
    // and 2 blocks of slop for non-aligned writes.
    // this really belongs lower down, since writei()
    // might be writing a device like the console.
    int max = ((MAXOPBLOCKS-1-1-2) / 2) * BSIZE;
    int i = 0;
    while(i < n){                    // split the write into chunks of at most max bytes
      int n1 = n - i;
      if(n1 > max)
        n1 = max;

      begin_op();
      ilock(f->ip);                  // makes the write and the offset update atomic
      if ((r = writei(f->ip, 1, addr + i, f->off, n1)) > 0)
        f->off += r;
      iunlock(f->ip);
      end_op();

      if(r != n1){
        // error from writei
        break;
      }
      i += r;
    }
    ret = (i == n ? n : -1);         // success only if everything was written
  } else {
    panic("filewrite");
  }

  return ret;
}

sys_write kernel/sysfile.c

uint64
sys_write(void)
{
  struct file *f;
  int n;
  uint64 p;

  if(argfd(0, 0, &f) < 0 || argint(2, &n) < 0 || argaddr(1, &p) < 0)
    return -1;

  return filewrite(f, p, n);
}

-

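Note: when reading or writing an inode, fileread and filewrite hold the inode lock (ilock), so each offset update is atomic. Concurrent writers through the same file therefore cannot overwrite each other's data, but their output may end up interleaved.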
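The chunk size that filewrite uses for inode writes is easy to check by hand. The sketch below assumes the values from the 2020 xv6 sources (MAXOPBLOCKS = 10 in kernel/param.h, BSIZE = 1024 in kernel/fs.h); `max_write_chunk` and `chunks_needed` are hypothetical helper names used only for this illustration.

```c
#include <assert.h>

#define MAXOPBLOCKS 10   /* assumed: value in xv6 kernel/param.h */
#define BSIZE 1024       /* assumed: value in xv6 kernel/fs.h */

/* Largest number of bytes filewrite hands to writei in one transaction:
 * reserve 1 block for the inode, 1 for an indirect block and 2 for
 * unaligned slop, then halve the rest to leave room for bitmap blocks. */
int max_write_chunk(void)
{
    return ((MAXOPBLOCKS - 1 - 1 - 2) / 2) * BSIZE;
}

/* Number of begin_op/end_op transactions needed to write n bytes. */
int chunks_needed(int n)
{
    assert(n >= 0);
    int max = max_write_chunk();
    return (n + max - 1) / max;
}
```

Under these assumptions one transaction carries at most 3072 bytes, so a 10000-byte write is split into four transactions. Each chunk is atomic with respect to crashes, but the write as a whole is not.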

-

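fdalloc: allocate a file descriptor: find the lowest free slot in the process's ofile table and store the file there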

fdalloc kernel/sysfile.c

static int
fdalloc(struct file *f)
{
  int fd;
  struct proc *p = myproc();

  for(fd = 0; fd < NOFILE; fd++){
    if(p->ofile[fd] == 0){
      p->ofile[fd] = f;
      return fd;
    }
  }
  return -1;
}
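The difference described in this section between opening a file twice (two file instances, two offsets) and dup (one file instance, one shared offset) can be observed from user space with the POSIX calls open, dup and lseek. This is a sketch for a POSIX system, not xv6 code; `compare_offsets` is a hypothetical helper and the file it creates is removed again at the end.

```c
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Write 5 bytes through one descriptor, then report the offset seen
 * through a dup'ed descriptor and through an independent second open.
 * Returns 0 on success, -1 on any error. */
int compare_offsets(const char *path, long *dup_off, long *open_off)
{
    assert(path != NULL && dup_off != NULL && open_off != NULL);
    int fd = open(path, O_RDWR | O_CREAT | O_TRUNC, 0600);
    if (fd < 0)
        return -1;

    int rc = -1;
    int alias = -1, second = -1;
    if (write(fd, "hello", 5) != 5)
        goto out;
    alias = dup(fd);                 /* same open file, shared offset */
    second = open(path, O_RDONLY);   /* new open file, offset starts at 0 */
    if (alias < 0 || second < 0)
        goto out;
    *dup_off = (long)lseek(alias, 0, SEEK_CUR);
    *open_off = (long)lseek(second, 0, SEEK_CUR);
    rc = 0;
out:
    if (alias >= 0)
        close(alias);
    if (second >= 0)
        close(second);
    close(fd);
    unlink(path);
    return rc;
}
```

The dup'ed descriptor reports the offset left behind by the write, while the second open starts at 0. In xv6 terms the first two descriptors point at the same struct file (ref == 2) and the third points at a different one.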

14. Code: System calls

-

System call implementation: thin wrappers built on the functions provided by the lower layers

-

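sys_link: creates a new directory entry (hard link) for an existing inode; arguments: a0: old path; a1: new path

Check that the old path exists and is not a directory (nlink + 1) -> call nameiparent to find the parent directory of the new path -> create the new directory entry (after checking that both are on the same device)

Note: the logging system simplifies this operation; wrapping it in a transaction keeps the file system from being left inconsistent, so the steps need no special ordering

sys_link kernel/sysfile.c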

// Create the path new as a link to the same inode as old.
uint64
sys_link(void)
{
  char name[DIRSIZ], new[MAXPATH], old[MAXPATH];
  struct inode *dp, *ip;

  if(argstr(0, old, MAXPATH) < 0 || argstr(1, new, MAXPATH) < 0)   // fetch the arguments
    return -1;

  begin_op();
  if((ip = namei(old)) == 0){   // look up the old path
    end_op();
    return -1;
  }

  ilock(ip);
  if(ip->type == T_DIR){        // hard links to directories are not allowed
    iunlockput(ip);
    end_op();
    return -1;
  }

  ip->nlink++;                  // one more hard link
  iupdate(ip);
  iunlock(ip);

  if((dp = nameiparent(new, name)) == 0)   // find the parent directory of the new path
    goto bad;
  ilock(dp);
  if(dp->dev != ip->dev || dirlink(dp, name, ip->inum) < 0){   // same device check + create the entry
    iunlockput(dp);
    goto bad;
  }
  iunlockput(dp);
  iput(ip);

  end_op();

  return 0;

bad:                            // undo the link count increment on failure
  ilock(ip);
  ip->nlink--;
  iupdate(ip);
  iunlockput(ip);
  end_op();
  return -1;
}

-

sys_unlink: the counterpart of sys_link; it removes a directory entry and decrements the link count

sys_unlink kernel/sysfile.c

uint64
sys_unlink(void)
{
  struct inode *ip, *dp;
  struct dirent de;
  char name[DIRSIZ], path[MAXPATH];
  uint off;

  if(argstr(0, path, MAXPATH) < 0)   // fetch the argument
    return -1;

  begin_op();
  if((dp = nameiparent(path, name)) == 0){   // find the parent directory
    end_op();
    return -1;
  }

  ilock(dp);

  // Cannot unlink "." or "..".
  if(namecmp(name, ".") == 0 || namecmp(name, "..") == 0)
    goto bad;

  if((ip = dirlookup(dp, name, &off)) == 0)   // find the entry in the parent directory
    goto bad;
  ilock(ip);

  if(ip->nlink < 1)
    panic("unlink: nlink < 1");
  if(ip->type == T_DIR && !isdirempty(ip)){   // a directory must be empty to be removed
    iunlockput(ip);
    goto bad;
  }

  memset(&de, 0, sizeof(de));
  if(writei(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))   // overwrite the entry with zeros
    panic("unlink: writei");
  if(ip->type == T_DIR){   // removing a directory drops its ".." link to the parent
    dp->nlink--;
    iupdate(dp);
  }
  iunlockput(dp);

  ip->nlink--;             // one fewer hard link
  iupdate(ip);
  iunlockput(ip);

  end_op();

  return 0;

bad:
  iunlockput(dp);
  end_op();
  return -1;
}

-

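create (helper function): creates a new inode and a hard link to it for the given path name;

System calls that use create: open with O_CREATE creates a new regular file; mkdir creates a new directory; mknod creates a new device file

nameiparent returns the parent directory's inode -> lock the parent directory -> dirlookup checks whether the name already exists (if it does, the result depends on which system call is asking) -> allocate a new inode ip and lock it -> if it is a directory, create its "." and ".." entries -> dirlink links it into the parent directory -> return the locked inode

create kernel/sysfile.c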

static struct inode*
create(char *path, short type, short major, short minor)
{
  struct inode *ip, *dp;
  char name[DIRSIZ];

  if((dp = nameiparent(path, name)) == 0)   // get the parent directory and the name to create
    return 0;

  ilock(dp);

  if((ip = dirlookup(dp, name, 0)) != 0){   // the name already exists
    iunlockput(dp);
    ilock(ip);
    if(type == T_FILE && (ip->type == T_FILE || ip->type == T_DEVICE))
      return ip;                            // open(O_CREATE) on an existing file succeeds
    iunlockput(ip);
    return 0;
  }

  if((ip = ialloc(dp->dev, type)) == 0)     // allocate a new inode
    panic("create: ialloc");

  ilock(ip);
  ip->major = major;
  ip->minor = minor;
  ip->nlink = 1;
  iupdate(ip);

  if(type == T_DIR){  // Create . and .. entries.
    dp->nlink++;  // for ".."
    iupdate(dp);
    // No ip->nlink++ for ".": avoid cyclic ref count.
    if(dirlink(ip, ".", ip->inum) < 0 || dirlink(ip, "..", dp->inum) < 0)
      panic("create dots");
  }

  if(dirlink(dp, name, ip->inum) < 0)       // link the new inode into the parent directory
    panic("create: dirlink");

  iunlockput(dp);

  return ip;                                // returned locked
}

-

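sys_open: create the file (create) or look up an existing one (namei) and lock it -> validate it -> allocate an open file and a file descriptor -> fill in the file structure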

sys_open kernel/sysfile.c

uint64
sys_open(void)
{
  char path[MAXPATH];
  int fd, omode;
  struct file *f;
  struct inode *ip;
  int n;

  if((n = argstr(0, path, MAXPATH)) < 0 || argint(1, &omode) < 0)   // fetch the arguments
    return -1;

  begin_op();

  if(omode & O_CREATE){             // O_CREATE: create the file at path
    ip = create(path, T_FILE, 0, 0);
    if(ip == 0){
      end_op();
      return -1;
    }
  } else {
    if((ip = namei(path)) == 0){    // otherwise look up the existing inode
      end_op();
      return -1;
    }
    ilock(ip);
    if(ip->type == T_DIR && omode != O_RDONLY){   // directories can only be opened read-only
      iunlockput(ip);
      end_op();
      return -1;
    }
  }

  if(ip->type == T_DEVICE && (ip->major < 0 || ip->major >= NDEV)){   // validate the device number
    iunlockput(ip);
    end_op();
    return -1;
  }

  if((f = filealloc()) == 0 || (fd = fdalloc(f)) < 0){   // allocate an open file and a descriptor
    if(f)
      fileclose(f);
    iunlockput(ip);
    end_op();
    return -1;
  }

  if(ip->type == T_DEVICE){         // fill in the file structure
    f->type = FD_DEVICE;
    f->major = ip->major;
  } else {
    f->type = FD_INODE;
    f->off = 0;
  }
  f->ip = ip;
  f->readable = !(omode & O_WRONLY);
  f->writable = (omode & O_WRONLY) || (omode & O_RDWR);

  if((omode & O_TRUNC) && ip->type == T_FILE){   // O_TRUNC: truncate the file to length 0
    itrunc(ip);
  }

  iunlock(ip);
  end_op();

  return fd;
}

-

sys_mkdir: create a directory inode

sys_mkdir kernel/sysfile.c

uint64
sys_mkdir(void)
{
  char path[MAXPATH];
  struct inode *ip;

  begin_op();
  if(argstr(0, path, MAXPATH) < 0 || (ip = create(path, T_DIR, 0, 0)) == 0){   // fetch the argument and create the directory
    end_op();
    return -1;
  }
  iunlockput(ip);
  end_op();
  return 0;
}

-

sys_mknod: create a device inode

sys_mknod kernel/sysfile.c

uint64
sys_mknod(void)
{
  struct inode *ip;
  char path[MAXPATH];
  int major, minor;

  begin_op();
  if((argstr(0, path, MAXPATH)) < 0 ||
     argint(1, &major) < 0 ||
     argint(2, &minor) < 0 ||
     (ip = create(path, T_DEVICE, major, minor)) == 0){   // fetch the arguments and create the device inode
    end_op();
    return -1;
  }
  iunlockput(ip);
  end_op();
  return 0;
}

-

sys_close: clear the slot in ofile, then call fileclose to close the file

sys_close kernel/sysfile.c

uint64
sys_close(void)
{
  int fd;
  struct file *f;

  if(argfd(0, &fd, &f) < 0)
    return -1;
  myproc()->ofile[fd] = 0;
  fileclose(f);
  return 0;
}

-

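sys_pipe: create an anonymous pipe; fetch the argument -> create the pipe -> fdalloc allocates the two file descriptors -> copyout stores the new descriptors in the user's array

pipealloc: allocates the two open files and initializes the pipe

sys_pipe kernel/sysfile.c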

uint64
sys_pipe(void)
{
  uint64 fdarray; // user pointer to array of two integers
  struct file *rf, *wf;
  int fd0, fd1;
  struct proc *p = myproc();

  if(argaddr(0, &fdarray) < 0)
    return -1;
  if(pipealloc(&rf, &wf) < 0)
    return -1;
  fd0 = -1;
  if((fd0 = fdalloc(rf)) < 0 || (fd1 = fdalloc(wf)) < 0){
    if(fd0 >= 0)
      p->ofile[fd0] = 0;
    fileclose(rf);
    fileclose(wf);
    return -1;
  }
  if(copyout(p->pagetable, fdarray, (char*)&fd0, sizeof(fd0)) < 0 ||
     copyout(p->pagetable, fdarray+sizeof(fd0), (char *)&fd1, sizeof(fd1)) < 0){
    p->ofile[fd0] = 0;
    p->ofile[fd1] = 0;
    fileclose(rf);
    fileclose(wf);
    return -1;
  }
  return 0;
}

pipealloc kernel/pipe.c

int
pipealloc(struct file **f0, struct file **f1)
{
  struct pipe *pi;

  pi = 0;
  *f0 = *f1 = 0;
  if((*f0 = filealloc()) == 0 || (*f1 = filealloc()) == 0)   // allocate the two open files
    goto bad;
  if((pi = (struct pipe*)kalloc()) == 0)                      // allocate memory for the pipe
    goto bad;
  pi->readopen = 1;
  pi->writeopen = 1;
  pi->nwrite = 0;
  pi->nread = 0;
  initlock(&pi->lock, "pipe");
  (*f0)->type = FD_PIPE;     // f0 is the read end
  (*f0)->readable = 1;
  (*f0)->writable = 0;
  (*f0)->pipe = pi;
  (*f1)->type = FD_PIPE;     // f1 is the write end
  (*f1)->readable = 0;
  (*f1)->writable = 1;
  (*f1)->pipe = pi;
  return 0;

 bad:
  if(pi)
    kfree((char*)pi);
  if(*f0)
    fileclose(*f0);
  if(*f1)
    fileclose(*f1);
  return -1;
}
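From user space, the two file structures set up by pipealloc appear as a read descriptor and a write descriptor. A minimal sketch using the POSIX pipe call is shown below; `pipe_roundtrip` is a hypothetical helper, and it assumes the message is small enough to fit in the pipe's buffer so that the write does not block.

```c
#include <assert.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Write msg into a fresh pipe and read it back into out.
 * Returns the number of bytes read, or -1 on error. */
long pipe_roundtrip(const char *msg, char *out, size_t outsz)
{
    assert(msg != NULL && out != NULL && outsz > 0);
    int fds[2];                  /* fds[0]: read end, fds[1]: write end */
    if (pipe(fds) < 0)
        return -1;

    size_t len = strlen(msg);
    long n = -1;
    if (write(fds[1], msg, len) == (ssize_t)len) {
        close(fds[1]);           /* closing the write end lets read() see EOF */
        fds[1] = -1;
        n = (long)read(fds[0], out, outsz - 1);
        if (n >= 0)
            out[n] = '\0';
    }
    if (fds[1] >= 0)
        close(fds[1]);
    close(fds[0]);
    return n;
}
```

The read end corresponds to the file with readable = 1 and writable = 0, the write end to the file with the opposite flags; both point at the same struct pipe.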

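15. Real world

- Buffer cache, core ideas: caching and synchronization; real-world implementations replace the linked list with a hash table and a heap, and merge virtual memory pages and file system pages into a unified page cache so that memory-mapped files can be supported

- Logging system: known problems:

Data and metadata are both written to disk twice -> log only metadata;

A commit cannot happen while file system calls are executing

Even if only a few bytes change, the whole block is written to the log -> batching (group) optimizations

Log writes and checkpoints write one block at a time to disk -> cylinder groups

- UNIX-like file systems: weakness: looking up a name in a directory requires a linear scan; improvement: build the directory hierarchy with B-trees

- Handling disk errors: xv6 simply panics; a real system should handle disk errors gracefully

- Combining multiple disks: RAID is one solution; a further improvement is a file system that can grow and shrink dynamically

- Strength of modern operating systems: everything is a file, including named pipes, network sockets, network file systems, and monitoring and control interfaces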

II. Functions involved

-

Most of the functions have already been covered above; the remaining helper functions are:

readsb iinit iunlockput namecmp kernel/fs.c

// Read the super block.
static void
readsb(int dev, struct superblock *sb)   // read the superblock (block 1) into sb
{
  struct buf *bp;

  bp = bread(dev, 1);
  memmove(sb, bp->data, sizeof(*sb));
  brelse(bp);
}
...
// Inodes.
...
void
iinit()   // initialize the inode cache locks
{
  int i = 0;

  initlock(&icache.lock, "icache");
  for(i = 0; i < NINODE; i++) {
    initsleeplock(&icache.inode[i].lock, "inode");
  }
}
...
// Common idiom: unlock, then put.
void
iunlockput(struct inode *ip)   // unlock and iput in one call
{
  iunlock(ip);
  iput(ip);
}
...
// Directories
...
int
namecmp(const char *s, const char *t)   // compare file names; a thin wrapper around strncmp
{
  return strncmp(s, t, DIRSIZ);
}
...
// Paths

fileinit kernel/file.c

void
fileinit(void)   // initialize the file table lock
{
  initlock(&ftable.lock, "ftable");
}

III. Lecture video notes (lec14, lec15)

1.lec14

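- Core properties of a file system: user-friendly names/pathnames; sharing between users/processes; persistent storage;

- Core problems of a file system: crash safety; on-disk layout; performance (caching and concurrency)

- API examples / file system calls:

What the API provides: readable pathnames and file names; an implicit offset hidden from the caller; hard links; consistency of a file while it is in use;

- Other ways to organize storage: databases, etc.

- File system structures: file name -> inode; hard link count; in-memory reference count; offset; per-process file descriptors

- File system layers: disk -> (in memory) buffer cache -> logging -> icache, the inode cache (synchronization) -> inode (read and write) -> names/pathnames

- Storage devices:

SSD and HDD; sector (defined by the hardware); block (defined by the operating system); the device talks to the CPU over a bus such as PCIe, reading and writing by block number + data

- Disk abstraction: the disk is treated as a large array of blocks containing the boot block, superblock, log, inodes, bitmap, and data blocks;

Data is located through inodes

- On-disk inode structure: type + hard link count + size + block numbers (direct + indirect)

- The system boots from the boot block; an inode is located from its known starting address, and the inode gives the addresses of the data blocks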

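- Directories: a directory is essentially a special kind of file

- Directory data structure: inode number + file name;

- Directory lookup: walk the file tree one level at a time along the path until the file is found

- Write sequence for echo "hi" > x:

Create the file (write the inode to allocate it -> update the inode's fields -> write the entry into the parent directory -> update the parent directory inode's size -> update the inode) ->

Write hi (search the bitmap -> write the block data -> update the inode) ->

Write \n (write the block data -> update the inode)

- Buffer cache (bcache): a spinlock protects the cache structure, and a sleep lock protects each cached buffer slot (a sleep lock can be held across disk I/O, which avoids deadlock);

Invariant: each disk block maps to exactly one buffer in memory; a doubly linked list implements LRU replacement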
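The doubly linked LRU list mentioned above can be sketched in a few lines. This is a simplified user-space model, not the code in kernel/bio.c: there are no locks and no disk, and `cache_init`, `cache_release` and `cache_victim` are hypothetical names. As in xv6, the list head is a sentinel whose next pointer is the most recently used buffer and whose prev pointer is the least recently used one.

```c
#include <assert.h>
#include <stddef.h>

#define NBUF 4

struct buf {
    int id;
    int refcnt;                 /* > 0 while some caller is using the buffer */
    struct buf *prev, *next;
};

struct cache {
    struct buf head;            /* sentinel: head.next is MRU, head.prev is LRU */
    struct buf bufs[NBUF];
};

/* Link b right after the sentinel, i.e. at the most recently used end. */
static void push_front(struct cache *c, struct buf *b)
{
    b->next = c->head.next;
    b->prev = &c->head;
    c->head.next->prev = b;
    c->head.next = b;
}

void cache_init(struct cache *c)
{
    c->head.prev = c->head.next = &c->head;
    for (int i = 0; i < NBUF; i++) {
        c->bufs[i].id = i;
        c->bufs[i].refcnt = 0;
        push_front(c, &c->bufs[i]);
    }
}

/* Called when the last user is done with b: move it to the MRU end. */
void cache_release(struct cache *c, struct buf *b)
{
    assert(b != &c->head);
    b->next->prev = b->prev;
    b->prev->next = b->next;
    push_front(c, b);
}

/* Pick the least recently used buffer that nobody is using, or NULL. */
struct buf *cache_victim(struct cache *c)
{
    for (struct buf *b = c->head.prev; b != &c->head; b = b->prev)
        if (b->refcnt == 0)
            return b;
    return NULL;
}
```

Scanning backwards from the sentinel visits buffers from least to most recently released, so the first unused buffer found is the LRU victim.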

2.lec15

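- Debugging concurrent code: combine assertions with print statements

- The crash problem: how to preserve state when a crash happens

- Core of the crash problem: a crash in the middle of a multi-step operation breaks the file system's invariants

Consequences of a crash: lost disk space / lost data / broken isolation -> operations need to be atomic

- Advantages of logging: atomic file operations; fast recovery; high performance;

- Core idea of logging: write to the log -> commit (the atomic step) -> install into the file system -> clean the log; on crash recovery, read the log

Write-ahead rule: the commit may only be written after all log writes have completed;

- Log structure: on disk: header (count + block numbers) + data; in memory: the corresponding blocks are held in bcache;

- File system calls: wrapped in begin_op and end_op to make their writes atomic

- Core function behind end_op: commit: write the log -> write the commit record -> install into the file system -> clean the log

- Core recovery function: recover_from_log: the second half of commit;

- Challenges for the logging system:

Eviction: bcache must not evict blocks that belong to the log (they are pinned by raising their reference count)

File system operations must fit in the log: the log must be larger than the maximum number of blocks one operation can write; a large write has to be split into pieces so that each piece can be written atomically;

Concurrency: limit the number of concurrent file system calls so that concurrent operations cannot overflow the log (group commit); begin_op and end_op together implement this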
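The four logging steps listed above (write the log, commit, install, clean) and the reason recovery can simply replay the log are illustrated by the toy model below. It is only a sketch under strong simplifications: the "disk" is a struct of integers, each block holds one int, and the pending values are kept in the in-memory log instead of the buffer cache as xv6 does. All names (`toydisk`, `memlog`, `toy_log_write`, `toy_commit`, `toy_recover`) are hypothetical.

```c
#include <assert.h>

#define NBLOCK 8
#define LOGSIZE 4

struct toydisk {
    int home[NBLOCK];        /* home locations of the data blocks */
    int log_data[LOGSIZE];   /* on-disk log body */
    int hdr_n;               /* on-disk header: number of logged blocks */
    int hdr_block[LOGSIZE];  /* on-disk header: destination block numbers */
};

struct memlog {
    int n;
    int block[LOGSIZE];
    int data[LOGSIZE];
};

/* Record a pending write; a second write to the same block is absorbed. */
int toy_log_write(struct memlog *l, int blockno, int value)
{
    assert(blockno >= 0 && blockno < NBLOCK);
    for (int i = 0; i < l->n; i++) {
        if (l->block[i] == blockno) {
            l->data[i] = value;
            return 0;
        }
    }
    if (l->n == LOGSIZE)
        return -1;           /* the transaction does not fit in the log */
    l->block[l->n] = blockno;
    l->data[l->n] = value;
    l->n++;
    return 0;
}

/* Copy every logged block to its home location (safe to repeat). */
static void install(struct toydisk *d)
{
    for (int i = 0; i < d->hdr_n; i++)
        d->home[d->hdr_block[i]] = d->log_data[i];
}

void toy_commit(struct toydisk *d, struct memlog *l, int crash_before_install)
{
    for (int i = 0; i < l->n; i++) {   /* 1. write the log body */
        d->log_data[i] = l->data[i];
        d->hdr_block[i] = l->block[i];
    }
    d->hdr_n = l->n;                   /* 2. commit point: header written */
    l->n = 0;
    if (crash_before_install)
        return;                        /* simulated crash after the commit */
    install(d);                        /* 3. install into the file system */
    d->hdr_n = 0;                      /* 4. clean the log */
}

/* Recovery is the second half of commit: install, then clean. */
void toy_recover(struct toydisk *d)
{
    install(d);
    d->hdr_n = 0;
}
```

If the simulated crash happens after the header is written, the home block still holds the old value, and running recovery once or several times produces the committed value. A crash before the header write would leave hdr_n at 0, so recovery would install nothing.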

IV. Lab solutions and code

-

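Large files: the key idea is to add a doubly-indirect block to raise the maximum file size. The definitions in fs.h and file.h have to be changed accordingly, and in fs.c bmap and itrunc handle allocating/mapping and freeing the second-level blocks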
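As a quick check of the resulting limits, the sketch below recomputes MAXFILE and shows how a logical block number splits into indices for the direct, singly-indirect and doubly-indirect cases. It assumes BSIZE = 1024 and a 4-byte unsigned int, as in xv6; `locate`, `struct slot` and the `level` enum are hypothetical names used only for this illustration.

```c
#include <assert.h>
#include <stddef.h>

#define BSIZE 1024
#define NDIRECT 11
#define NINDIRECT (BSIZE / sizeof(unsigned int))
#define MAXFILE (NDIRECT + NINDIRECT + NINDIRECT * NINDIRECT)

enum level { DIRECT, SINGLE, DOUBLE, OUT_OF_RANGE };

struct slot {
    enum level level;
    unsigned int outer;  /* DIRECT: index into addrs[]; DOUBLE: index into the first-level block */
    unsigned int inner;  /* SINGLE/DOUBLE: index into the block holding the data block address */
};

/* Mirror the index arithmetic of bmap without touching any disk blocks. */
struct slot locate(unsigned int bn)
{
    assert(sizeof(unsigned int) == 4);   /* assumption behind NINDIRECT == 256 */
    struct slot s = { OUT_OF_RANGE, 0, 0 };

    if (bn < NDIRECT) {
        s.level = DIRECT;
        s.outer = bn;
        return s;
    }
    bn -= NDIRECT;
    if (bn < NINDIRECT) {
        s.level = SINGLE;
        s.inner = bn;
        return s;
    }
    bn -= (unsigned int)NINDIRECT;
    if (bn < NINDIRECT * NINDIRECT) {
        s.level = DOUBLE;
        s.outer = (unsigned int)(bn / NINDIRECT);
        s.inner = (unsigned int)(bn % NINDIRECT);
    }
    return s;
}
```

With these values MAXFILE is 11 + 256 + 256 * 256 = 65803 blocks. Block 267 is the first one reached through the doubly-indirect block, and block 65803 is out of range.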

kernel/fs.h

...
#define NDIRECT 11                         // changed from 12 to 11
#define NINDIRECT (BSIZE / sizeof(uint))   // number of block addresses held by one indirect block
#define MAXFILE (NDIRECT + NINDIRECT + NINDIRECT*NINDIRECT)   // maximum number of data blocks per inode

// On-disk inode structure
struct dinode {
  ...
  uint addrs[NDIRECT+2];   // Data block addresses: [0..10] direct, [11] singly indirect, [12] doubly indirect
};

kernel/file.h

// in-memory copy of an inode
struct inode {
  ...
  uint addrs[NDIRECT+2];   // Data block addresses: [0..10] direct, [11] singly indirect, [12] doubly indirect
};

kernel/fs.c

...
// Inode content
//
// The content (data) associated with each inode is stored
// in blocks on the disk. The first NDIRECT block numbers
// are listed in ip->addrs[].  The next NINDIRECT blocks are
// listed in block ip->addrs[NDIRECT].

// Return the disk block address of the nth block in inode ip.
// If there is no such block, bmap allocates one.
static uint
bmap(struct inode *ip, uint bn)
{
  uint addr, *a;
  struct buf *bp;

  if(bn < NDIRECT){
    if((addr = ip->addrs[bn]) == 0)
      ip->addrs[bn] = addr = balloc(ip->dev);
    return addr;
  }
  bn -= NDIRECT;

  if(bn < NINDIRECT){
    // Load indirect block, allocating if necessary.
    if((addr = ip->addrs[NDIRECT]) == 0)
      ip->addrs[NDIRECT] = addr = balloc(ip->dev);
    bp = bread(ip->dev, addr);
    a = (uint*)bp->data;
    if((addr = a[bn]) == 0){
      a[bn] = addr = balloc(ip->dev);
      log_write(bp);
    }
    brelse(bp);
    return addr;
  }
  bn -= NINDIRECT;

  if (bn < NINDIRECT * NINDIRECT) {
    // Note: bp is reused here, first for the first-level block and then for the second-level block.
    if((addr = ip->addrs[NDIRECT + 1]) == 0)
      ip->addrs[NDIRECT + 1] = addr = balloc(ip->dev);
    bp = bread(ip->dev, addr);
    a = (uint*)bp->data;
    if ((addr = a[bn/NINDIRECT]) == 0) {   // entry in the first-level block: address of the second-level block
      a[bn/NINDIRECT] = addr = balloc(ip->dev);
      log_write(bp);
    }
    brelse(bp);
    bn %= NINDIRECT;
    bp = bread(ip->dev, addr);
    a = (uint*)bp->data;
    if ((addr = a[bn]) == 0) {             // entry in the second-level block: address of the data block
      a[bn] = addr = balloc(ip->dev);
      log_write(bp);
    }
    brelse(bp);
    return addr;
  }

  panic("bmap: out of range");
}

// Truncate inode (discard contents).
// Caller must hold ip->lock.
void
itrunc(struct inode *ip)
{
  int i, j, k;
  struct buf *bp;
  uint *a;

  for(i = 0; i < NDIRECT; i++){
    if(ip->addrs[i]){
      bfree(ip->dev, ip->addrs[i]);
      ip->addrs[i] = 0;
    }
  }

  if(ip->addrs[NDIRECT]){
    bp = bread(ip->dev, ip->addrs[NDIRECT]);
    a = (uint*)bp->data;
    for(j = 0; j < NINDIRECT; j++){
      if(a[j])
        bfree(ip->dev, a[j]);
    }
    brelse(bp);
    bfree(ip->dev, ip->addrs[NDIRECT]);
    ip->addrs[NDIRECT] = 0;
  }

  if(ip->addrs[NDIRECT + 1]){
    bp = bread(ip->dev, ip->addrs[NDIRECT + 1]);   // read the first-level block
    a = (uint*)bp->data;
    for(j = 0; j < NINDIRECT; j++){
      if(a[j]){                                    // a[j] is the address of a second-level block
        struct buf *bp_indir = bread(ip->dev, a[j]);   // bp is still in use, so a separate buffer is needed
        uint *a_indir = (uint*)bp_indir->data;
        for (k = 0; k < NINDIRECT; k++){           // free every data block listed in the second-level block
          if(a_indir[k])
            bfree(ip->dev, a_indir[k]);
        }
        brelse(bp_indir);
        bfree(ip->dev, a[j]);
      }
    }
    brelse(bp);
    bfree(ip->dev, ip->addrs[NDIRECT + 1]);
    ip->addrs[NDIRECT + 1] = 0;
  }

  ip->size = 0;
  iupdate(ip);
}
...

-

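Symbolic links: the key idea is to add a new file type, the symbolic link, next to the existing file types, and to expose it through a new system call

Core: modify sys_open so that it follows symbolic links repeatedly (up to a depth limit), and implement the sys_symlink system call on top of the existing create and writei mechanisms

Add the system call: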

user/usys.pl

...
entry("symlink");

user/user.h

// system calls
...
int symlink(char *, char *);
...

kernel/syscall.h

...
#define SYS_symlink 22

kernel/syscall.c

...
extern uint64 sys_symlink(void);

static uint64 (*syscalls[])(void) = {
...
[SYS_symlink] sys_symlink,
};

Add or modify the related constants:

kernel/stat.h

...
#define T_SYMLINK 4   // Symbolic link
...

kernel/param.h

...
#define MAXDEPTH 10   // maximum depth of symbolic links to follow

kernel/fcntl.h

...
#define O_NOFOLLOW 0x800

Implement sys_symlink, and modify sys_open so that it follows links:

kernel/sysfile.c

uint64
sys_open(void)
{
  char path[MAXPATH];
  int fd, omode;
  struct file *f;
  struct inode *ip;
  int n, depth = 0;   // depth counts how many symbolic links have been followed
  ...
  ...
  // Follow symbolic links in a loop unless O_NOFOLLOW is set.
  while(ip->type == T_SYMLINK && !(omode & O_NOFOLLOW)) {
    char target[MAXPATH] = {0};
    if (readi(ip, 0, (uint64)target, 0, MAXPATH) < 0) {   // read the target path stored in the link
      iunlockput(ip);
      end_op();
      return -1;
    }
    iunlockput(ip);
    if((ip = namei(target)) == 0){   // look up the target's inode
      end_op();
      return -1;
    }
    ilock(ip);
    depth++;
    if (depth > MAXDEPTH) {          // depth limit exceeded, e.g. because of a cycle
      iunlockput(ip);
      end_op();
      return -1;
    }
  }
  ...
  ...
}
...
uint64
sys_symlink(void)
{
  struct inode *ip;
  char target[MAXPATH], path[MAXPATH];

  begin_op();
  // If path already exists, create returns 0 for T_SYMLINK, so nothing is overwritten.
  if(argstr(0, target, MAXPATH) < 0 || argstr(1, path, MAXPATH) < 0 || (ip = create(path, T_SYMLINK, 0, 0)) == 0){
    end_op();
    return -1;
  }
  if(writei(ip, 0, (uint64)target, 0, MAXPATH) < 0) {   // store the target path as the link's data
    printf("sys_symlink: writei\n");
    iunlockput(ip);
    end_op();
    return -1;
  }
  iunlockput(ip);
  end_op();
  return 0;
}
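The depth-limited link following in sys_open can be modelled without a file system. The sketch below replaces inodes with a small name table; `struct entry`, `lookup` and `resolve` are hypothetical, and only MAXDEPTH is taken from the code above. It shows why the limit is needed: a cycle of links would otherwise never terminate.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAXDEPTH 10

struct entry {
    const char *name;
    const char *target;   /* NULL for a regular file, else the link target */
};

static const struct entry *lookup(const struct entry *tab, size_t n, const char *name)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(tab[i].name, name) == 0)
            return &tab[i];
    return NULL;
}

/* Follow links starting at name. Returns the name of the regular file
 * that is finally reached, or NULL if a name is missing or more than
 * MAXDEPTH links would have to be followed. */
const char *resolve(const struct entry *tab, size_t n, const char *name)
{
    assert(tab != NULL && name != NULL);
    const struct entry *e = lookup(tab, n, name);
    int depth = 0;
    while (e != NULL && e->target != NULL) {
        if (++depth > MAXDEPTH)
            return NULL;              /* too deep: most likely a cycle */
        e = lookup(tab, n, e->target);
    }
    return e != NULL ? e->name : NULL;
}
```

A chain such as l2 -> l1 -> file resolves to file, a dangling link fails at the lookup, and the pair a -> b, b -> a fails once the depth limit is hit.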

References

xv6 book, 2020 edition: https://pdos.csail.mit.edu/6.S081/2020/xv6/book-riscv-rev1.pdf

Notes on the xv6 book and code: https://zhuanlan.zhihu.com/p/353830216

xv6 book, Chinese translation: http://xv6.dgs.zone/tranlate_books/book-riscv-rev1/c8/s1.html

MIT 6.S081 operating systems course in 28 days: https://zhuanlan.zhihu.com/p/630222721

MIT 6.S081 operating system notes: https://juejin.cn/post/7022347989683273765