Machine Learning in Action study notes, Chapter 5: Logistic Regression

I. Related notes:

2. Andrew Ng Machine Learning notes (11): Large Scale Machine Learning

II. Python source code (without a regularization term):

    # coding:utf-8

    '''
    Created on Oct 27, 2010
    Logistic Regression Working Module
    @author: Peter
    '''
    from numpy import *

    def sigmoid(inX):
        return 1.0 / (1 + exp(-inX))

    def gradAscent(dataMatIn, classLabels):
        dataMatrix = mat(dataMatIn)  # convert to NumPy matrix
        labelMat = mat(classLabels).transpose()  # convert to NumPy matrix (column vector)
        m, n = shape(dataMatrix)
        alpha = 0.001
        maxCycles = 500
        weights = ones((n, 1))
        for k in range(maxCycles):  # heavy on matrix operations
            h = sigmoid(dataMatrix * weights)  # matrix mult
            error = (labelMat - h)  # vector subtraction
            weights = weights + alpha * dataMatrix.transpose() * error  # matrix mult
        return weights

    def stocGradAscent0(dataMatrix, classLabels, numIter=150):
        m, n = shape(dataMatrix)
        alpha = 0.01
        weights = ones(n)  # initialize to all ones
        for j in range(numIter):
            for i in range(m):  # one update per sample, in file order
                h = sigmoid(sum(dataMatrix[i] * weights))
                error = classLabels[i] - h
                weights = weights + alpha * error * dataMatrix[i]
        return weights

    def stocGradAscent1(dataMatrix, classLabels, numIter=150):
        m, n = shape(dataMatrix)
        weights = ones(n)  # initialize to all ones
        for j in range(numIter):
            dataIndex = list(range(m))  # list() so that del also works on Python 3
            for i in range(m):
                # alpha decreases with iteration, does not go to 0 because of the constant
                alpha = 4 / (1.0 + j + i) + 0.0001
                randIndex = int(random.uniform(0, len(dataIndex)))
                # randIndex is used directly as the row index (not dataIndex[randIndex]),
                # so the same row can be drawn more than once within a pass
                h = sigmoid(sum(dataMatrix[randIndex] * weights))
                error = classLabels[randIndex] - h
                weights = weights + alpha * error * dataMatrix[randIndex]
                del (dataIndex[randIndex])
        return weights

    def classifyVector(inX, weights):
        prob = sigmoid(sum(inX * weights))
        if prob > 0.5:
            return 1.0
        else:
            return 0.0

    def colicTest():
        frTrain = open('horseColicTraining.txt')
        frTest = open('horseColicTest.txt')
        trainingSet = []
        trainingLabels = []
        for line in frTrain.readlines():
            currLine = line.strip().split('\t')
            lineArr = []
            for i in range(21):  # columns 0..20 are features, column 21 is the label
                lineArr.append(float(currLine[i]))
            trainingSet.append(lineArr)
            trainingLabels.append(float(currLine[21]))
        trainWeights = stocGradAscent1(array(trainingSet), trainingLabels, 500)
        errorCount = 0
        numTestVec = 0.0
        for line in frTest.readlines():
            numTestVec += 1.0
            currLine = line.strip().split('\t')
            lineArr = []
            for i in range(21):
                lineArr.append(float(currLine[i]))
            if int(classifyVector(array(lineArr), trainWeights)) != int(currLine[21]):
                errorCount += 1
        errorRate = (float(errorCount) / numTestVec)
        print("the error rate of this test is: %f" % errorRate)
        return errorRate

    def multiTest():
        numTests = 10
        errorSum = 0.0
        for k in range(numTests):
            errorSum += colicTest()
        print("after %d iterations the average error rate is: %f" % (numTests, errorSum / float(numTests)))

    if __name__ == "__main__":
        multiTest()
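The heading above notes that this code carries no regularization term. For comparison, here is a minimal sketch of how an L2 penalty could enter the batch update. It assumes Python 3 and plain NumPy arrays instead of `mat`. The function name `grad_ascent_l2`, the parameter `lam`, and the synthetic data are illustrative assumptions, not part of the original code, and the bias weight is penalized along with the others for simplicity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_ascent_l2(X, y, lam=0.0, alpha=0.001, max_cycles=500):
    """Batch gradient ascent on the log-likelihood minus (lam / 2) * ||w||^2.

    With lam=0 the update has the same form as in gradAscent.
    `lam` is a hypothetical penalty strength, not a value from the notes.
    """
    m, n = X.shape
    weights = np.ones(n)  # same all-ones start as the original code
    for _ in range(max_cycles):
        error = y - sigmoid(X @ weights)
        # gradient of the penalized objective: X^T (y - h) - lam * w
        weights = weights + alpha * (X.T @ error - lam * weights)
    return weights

# Synthetic, linearly separable data (an assumption, for illustration only)
rng = np.random.default_rng(0)
features = rng.normal(size=(100, 2))
X = np.hstack([np.ones((100, 1)), features])  # first column is the bias term
y = (features[:, 0] + features[:, 1] > 0).astype(float)

w_plain = grad_ascent_l2(X, y, lam=0.0, max_cycles=2000)
w_l2 = grad_ascent_l2(X, y, lam=10.0, max_cycles=2000)
print("weight norm without penalty:", np.linalg.norm(w_plain))
print("weight norm with penalty:   ", np.linalg.norm(w_l2))
```

On separable data like this, the unpenalized weights keep growing with more cycles, while the penalty pulls them back toward zero; whether that would help on the horse colic data is not something this sketch shows.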

III. Performance comparison of Batch gradient descent, Stochastic gradient descent, and Mini-batch gradient descent

1. Batch gradient descent

    def gradAscent(dataMatIn, classLabels):
        dataMatrix = mat(dataMatIn)  # convert to NumPy matrix
        labelMat = mat(classLabels).transpose()  # convert to NumPy matrix
        m, n = shape(dataMatrix)
        alpha = 0.001
        maxCycles = 500
        weights = ones((n, 1))
        for k in range(maxCycles):  # heavy on matrix operations
            h = sigmoid(dataMatrix * weights)  # matrix mult
            error = (labelMat - h)  # vector subtraction
            weights = weights + alpha * dataMatrix.transpose() * error  # matrix mult
        return weights

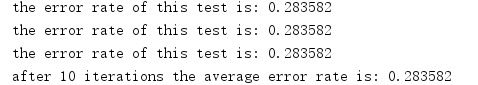

Result of the run:

Error rate: 28.4%
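The update `weights + alpha * dataMatrix.transpose() * error` moves the weights along X^T (y - h), which is the gradient of the log-likelihood, so the loop is gradient ascent on the log-likelihood (equivalent to gradient descent on its negative). The sketch below checks that identity numerically on small random data. It assumes Python 3 and NumPy; the helper names and the data are illustrative, not taken from the original code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(X, y, w):
    # sum over samples of y * log(h) + (1 - y) * log(1 - h)
    h = sigmoid(X @ w)
    return float(np.sum(y * np.log(h) + (1.0 - y) * np.log(1.0 - h)))

def analytic_gradient(X, y, w):
    # the direction used by gradAscent: X^T (y - h)
    return X.T @ (y - sigmoid(X @ w))

def numeric_gradient(X, y, w, eps=1e-6):
    # central finite differences, one coordinate at a time
    grad = np.zeros_like(w)
    for k in range(w.size):
        step = np.zeros_like(w)
        step[k] = eps
        grad[k] = (log_likelihood(X, y, w + step)
                   - log_likelihood(X, y, w - step)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = (rng.random(20) < 0.5).astype(float)
w = rng.normal(size=3)

max_gap = float(np.max(np.abs(analytic_gradient(X, y, w) - numeric_gradient(X, y, w))))
print("largest difference between the two gradients:", max_gap)
```

The two gradients should agree up to finite-difference error, which supports reading `error = labelMat - h` as the per-sample factor of the gradient.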

2. Stochastic gradient descent

    def stocGradAscent0(dataMatrix, classLabels, numIter=150):
        m, n = shape(dataMatrix)
        alpha = 0.01
        weights = ones(n)  # initialize to all ones
        for j in range(numIter):
            for i in range(m):
                h = sigmoid(sum(dataMatrix[i] * weights))
                error = classLabels[i] - h
                weights = weights + alpha * error * dataMatrix[i]
        return weights

With 150 iterations, the error rate is 46.3%.

With 500 iterations, the error rate is 32.8%.

With 800 iterations, the error rate is 38.8%.

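3. Mini-batch gradient descent (here stocGradAscent1, which updates on one randomly chosen sample at a time with a decaying step size)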

    def stocGradAscent1(dataMatrix, classLabels, numIter=150):
        m, n = shape(dataMatrix)
        weights = ones(n)  # initialize to all ones
        for j in range(numIter):
            dataIndex = list(range(m))  # list() so that del also works on Python 3
            for i in range(m):
                # alpha decreases with iteration, does not go to 0 because of the constant
                alpha = 4 / (1.0 + j + i) + 0.0001
                randIndex = int(random.uniform(0, len(dataIndex)))
                h = sigmoid(sum(dataMatrix[randIndex] * weights))
                error = classLabels[randIndex] - h
                weights = weights + alpha * error * dataMatrix[randIndex]
                del (dataIndex[randIndex])
        return weights

With 150 iterations, the error rate is 37.8%.

With 500 iterations, the error rate is 35.2%.

With 800 iterations, the error rate is 37.3%.
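Note that stocGradAscent1 updates the weights from one randomly chosen sample at a time. For comparison, here is a minimal sketch of a mini-batch variant in the usual sense, where each update averages the gradient over a small group of samples. It assumes Python 3 and NumPy. The function name `mini_batch_grad_ascent`, the parameters `batch_size`, `num_epochs`, and `seed`, the fixed `alpha`, and the synthetic data are all assumptions for illustration; none of them come from the book's code or from the measurements above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mini_batch_grad_ascent(X, y, batch_size=16, num_epochs=50, alpha=0.1, seed=0):
    """Gradient ascent on the log-likelihood, one mini-batch per update."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    weights = np.ones(n)  # same all-ones start as the original code
    for _ in range(num_epochs):
        order = rng.permutation(m)  # every sample is visited once per epoch
        for start in range(0, m, batch_size):
            batch = order[start:start + batch_size]
            error = y[batch] - sigmoid(X[batch] @ weights)
            # average over the batch so the step size does not scale with batch_size
            weights = weights + alpha * (X[batch].T @ error) / len(batch)
    return weights

# Synthetic, linearly separable data (an assumption, for illustration only)
rng = np.random.default_rng(1)
features = rng.normal(size=(200, 2))
X = np.hstack([np.ones((200, 1)), features])  # first column is the bias term
y = (features[:, 0] + features[:, 1] > 0).astype(float)

weights = mini_batch_grad_ascent(X, y)
predictions = (sigmoid(X @ weights) > 0.5).astype(float)
accuracy = float(np.mean(predictions == y))
print("training accuracy on the synthetic data:", accuracy)
```

Setting `batch_size=1` gives single-sample updates, and `batch_size=m` gives one full-batch update per epoch, so the three methods compared in this section sit on one scale.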

4. Summary:

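1. When the training set is small and has few features, Batch gradient descent gives the best result (28.4% error in the runs above). When that condition does not hold, Mini-batch gradient descent can be used instead, with a suitably chosen number of iterations.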

2. For Stochastic gradient descent and Mini-batch gradient descent, more iterations do not necessarily give a better result. I do not know why.
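One thing that can be checked for point 2, without claiming it is the explanation, is how much the test error moves between runs that differ only in the random sampling order; multiTest in the source averages ten runs for a similar reason. The sketch below measures that spread on synthetic data. It assumes Python 3 and NumPy. The helper names, the noisy synthetic data, and the use of a full random permutation per pass (instead of the original index handling) are assumptions for illustration, and its numbers say nothing about the horse colic results.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sgd_random_order(X, y, num_iter, seed):
    """Single-sample gradient ascent with the step-size schedule of stocGradAscent1."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    weights = np.ones(n)
    for j in range(num_iter):
        for i, idx in enumerate(rng.permutation(m)):
            alpha = 4 / (1.0 + j + i) + 0.0001
            error = y[idx] - sigmoid(X[idx] @ weights)
            weights = weights + alpha * error * X[idx]
    return weights

def error_rate(X, y, weights):
    predictions = (sigmoid(X @ weights) > 0.5).astype(float)
    return float(np.mean(predictions != y))

def make_data(rng, m):
    # noisy labels, so no weight vector classifies everything correctly
    features = rng.normal(size=(m, 3))
    noise = rng.normal(scale=1.5, size=m)
    labels = (features[:, 0] - features[:, 1] + noise > 0).astype(float)
    return np.hstack([np.ones((m, 1)), features]), labels

data_rng = np.random.default_rng(42)
X_train, y_train = make_data(data_rng, 300)
X_test, y_test = make_data(data_rng, 60)  # deliberately small test set

for num_iter in (10, 40, 80):
    rates = [error_rate(X_test, y_test, sgd_random_order(X_train, y_train, num_iter, seed))
             for seed in range(5)]
    print("%d passes: min %.3f, mean %.3f, max %.3f"
          % (num_iter, min(rates), float(np.mean(rates)), max(rates)))
```

If the spread across seeds at a fixed number of passes is comparable to the differences between iteration counts, a single run per setting cannot separate the two effects, and averaging several runs per setting would be a fairer comparison.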

Zhejiang Public Network Security Filing No. 33010602011771
