CDH-Hadoop_Hive_Impala single-node setup: fully offline installation

Environment and package downloads

CentOS 6.5

Version: CDH5 5.14.0 (cdh5.14.0-src.tar.gz)

Hadoop package download URL:

http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.14.0.tar.gz

Hive package download URL:

http://archive.cloudera.com/cdh5/cdh/5/hive-1.1.0-cdh5.14.0.tar.gz

Impala package download URL

References

https://docs.cloudera.com/documentation/enterprise/5-12-x/topics/cdh_qs_cdh5_pseudo.html#topic_3

https://docs.cloudera.com/documentation/index.html

1. Install the JDK

Upload the RPM package to the server, then install it:

rpm -ivh jdk-8u221-linux-x64.rpm

By default it installs under /usr/java, at /usr/java/jdk1.8.0_221-amd64.

Configure the environment variables:

vim /etc/profile

# Add the following:

JAVA_HOME=/usr/java/jdk1.8.0_221-amd64

JRE_HOME=/usr/java/jdk1.8.0_221-amd64/jre

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

CLASSPATH=:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME PATH CLASSPATH

[root@sunny conf]# java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)
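To script the version check above (for example when repeating the setup on another machine), here is a minimal sketch. It only assumes the usual first line of java -version output, such as java version "1.8.0_221"; java_version_from_output and is_java8 are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: confirm the JDK on PATH is Java 8 (the 8u221 JDK installed above).
# java -version writes to stderr, e.g.: java version "1.8.0_221"

# Hypothetical helper: print the quoted version string from java -version output on stdin.
java_version_from_output() {
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Hypothetical helper: succeed only if the version string is a 1.8.x release.
is_java8() {
  case "$1" in
    1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on the target host (requires java on PATH):
#   ver=$(java -version 2>&1 | java_version_from_output)
#   is_java8 "$ver" && echo "Java 8 OK: $ver" || echo "unexpected Java: $ver"
```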

2. Install Hadoop

2.1 Unpack Hadoop into the install directory /data/CDH

cd /data/CDH

tar -zvxf /data/install_pkg_CDH/hadoop-2.6.0-cdh5.14.0.tar.gz

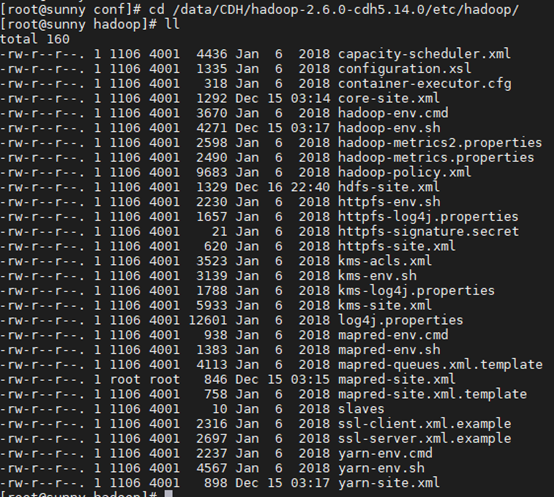

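Hadoop's core configuration files are in:

/data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/

2.2 Configure the Hadoop environment variables

vi /etc/profile

# Append at the end (the JAVA lines repeat step 1; add them only once):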

JAVA_HOME=/usr/java/jdk1.8.0_221-amd64

JRE_HOME=/usr/java/jdk1.8.0_221-amd64/jre

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

CLASSPATH=:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME PATH CLASSPATH

export HADOOP_HOME=/data/CDH/hadoop-2.6.0-cdh5.14.0

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

source /etc/profile # apply the changes
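After sourcing the profile, a quick way to confirm the variables are set and point at real directories is a small check like this one. It is a sketch; check_home_vars is a hypothetical helper, not part of Hadoop.

```shell
#!/bin/sh
# Sketch: check that each named variable is set and is an existing directory.

# Hypothetical helper. Usage: check_home_vars VAR_NAME...  (non-zero if any check fails)
check_home_vars() {
  local name value status=0
  for name in "$@"; do
    eval "value=\${$name:-}"   # value of the variable whose name is in $name
    if [ -z "$value" ]; then
      echo "MISSING: $name is not set" >&2
      status=1
    elif [ ! -d "$value" ]; then
      echo "MISSING: $name=$value is not a directory" >&2
      status=1
    else
      echo "OK: $name=$value"
    fi
  done
  return "$status"
}

# Usage on the target host:
#   source /etc/profile
#   check_home_vars JAVA_HOME HADOOP_HOME && hadoop version
```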

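2.3 Create a data directory under the Hadoop install directory (used by hadoop.tmp.dir in core-site.xml)

cd /data/CDH/hadoop-2.6.0-cdh5.14.0

mkdir data

2.4 Configure core-site.xml

vi /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/core-site.xml

# Add the following inside <configuration>: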

<property>

<name>fs.defaultFS</name>

<!-- Replace with your own server IP; keep the port as is -->

<value>hdfs://172.20.71.66:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<!-- Your Hadoop working directory (the data directory created in 2.3) -->

<value>/data/CDH/hadoop-2.6.0-cdh5.14.0/data</value>

</property>

<property>

<name>hadoop.native.lib</name>

<value>false</value>

<description>Should native hadoop libraries, if present, be used.

</description>

</property>
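The snippet above lists only the property elements; in the file they have to sit inside the configuration root element. A sketch that writes the whole file in one go, assuming the IP and data directory used in this guide (write_core_site is a hypothetical helper name):

```shell
#!/bin/sh
# Sketch: write a complete core-site.xml for this single-node setup.
# The <property> blocks only take effect inside <configuration>.

# Hypothetical helper. Usage: write_core_site OUTPUT_FILE HOST DATA_DIR
write_core_site() {
  local out=$1 host=$2 data_dir=$3
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${data_dir}</value>
  </property>
  <property>
    <name>hadoop.native.lib</name>
    <value>false</value>
  </property>
</configuration>
EOF
}

# Usage on the target host (back up the original first):
#   conf=/data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop
#   cp "$conf/core-site.xml" "$conf/core-site.xml.bak"
#   write_core_site "$conf/core-site.xml" 172.20.71.66 /data/CDH/hadoop-2.6.0-cdh5.14.0/data
```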

2.5 Configure hdfs-site.xml

vi /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hdfs-site.xml

# Add the following inside <configuration>:

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>172.20.71.66:50090</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>
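To double-check what actually ended up in a *-site.xml file, the sketch below prints one property's value. It assumes name and value elements sit on their own lines as in the snippets here; it is a plain text match, not an XML parser, and get_prop is a hypothetical name.

```shell
#!/bin/sh
# Sketch: print the <value> that follows a given <name> in a Hadoop *-site.xml.
# Line-based text matching only; not a real XML parser.

# Hypothetical helper. Usage: get_prop FILE PROPERTY_NAME
get_prop() {
  awk -v want="$2" '
    # Remember whether the most recent <name> is the one we want.
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n)
      found = (n == want)
    }
    # Print the first <value> after the wanted <name>, then stop.
    found && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$1"
}
```

For example, get_prop $HADOOP_HOME/etc/hadoop/hdfs-site.xml dfs.replication should print 1. Once HDFS is running, hdfs getconf -confKey dfs.replication reports the value Hadoop actually loaded.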

2.6 Configure mapred-site.xml

cd /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop

cp mapred-site.xml.template ./mapred-site.xml # copy the default template as mapred-site.xml

vi /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/mapred-site.xml

# Add the following inside <configuration>:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

2.7 Configure yarn-site.xml

vi /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/yarn-site.xml

# Add the following inside <configuration>:

<property>

<name>yarn.resourcemanager.hostname</name>

<value>172.20.71.66</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

2.8 Configure hadoop-env.sh

vi /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hadoop-env.sh

Change the line

export JAVA_HOME=${JAVA_HOME}

to:

export JAVA_HOME=/usr/java/jdk1.8.0_221-amd64
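The same edit can be scripted. A commonly cited reason for hard-coding the path here is that daemons started over SSH by start-all.sh may not see the JAVA_HOME exported in /etc/profile. A sketch (set_java_home is a hypothetical helper; it keeps a .bak copy of the file):

```shell
#!/bin/sh
# Sketch: set JAVA_HOME in hadoop-env.sh without opening an editor.

# Hypothetical helper. Usage: set_java_home ENV_FILE JDK_PATH
set_java_home() {
  local file=$1 jdk=$2
  if grep -q '^export JAVA_HOME=' "$file"; then
    # Rewrite the existing line in place, keeping a backup as FILE.bak.
    sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
  else
    # No such line yet: back up, then append one.
    cp "$file" "$file.bak"
    echo "export JAVA_HOME=$jdk" >> "$file"
  fi
}

# Usage on the target host:
#   set_java_home /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hadoop-env.sh /usr/java/jdk1.8.0_221-amd64
```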

2.9 Start Hadoop

Once everything is configured, switch to the sbin directory:

cd /data/CDH/hadoop-2.6.0-cdh5.14.0/sbin/

hadoop namenode -format # format HDFS (initializes the NameNode metadata)

If formatting fails with an error that the hostname does not match /etc/hosts:

Run hostname to see the host name, e.g. testuser.

Run vi /etc/hosts

and change

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

to

127.0.0.1 localhost localhost.localdomain testuser localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
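Before formatting again, you can check that the current host name really appears in /etc/hosts. A small sketch, assuming the standard "IP name alias..." line format (hosts_has_name is a hypothetical helper):

```shell
#!/bin/sh
# Sketch: succeed if NAME appears as a host name or alias in a hosts file.

# Hypothetical helper. Usage: hosts_has_name HOSTS_FILE NAME
hosts_has_name() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }                                       # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }   # fields 2..NF are names
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# Usage on the target host:
#   hosts_has_name /etc/hosts "$(hostname)" || echo "add $(hostname) to /etc/hosts"
```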

Format again: hadoop namenode -format

Once that succeeds, start all services:

./start-all.sh

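Along the way you will be prompted for the server's password several times (the scripts start the daemons over SSH); enter it when asked.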
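The password prompts can be avoided with key-based SSH to the local host, the usual setup for Hadoop's start scripts. A sketch, assuming you run Hadoop as the same user (root in this guide) and that an RSA key with an empty passphrase is acceptable on this machine; setup_local_ssh_key is a hypothetical helper:

```shell
#!/bin/sh
# Sketch: let this account SSH to itself without a password.

# Hypothetical helper. Usage: setup_local_ssh_key [SSH_DIR]   (default: ~/.ssh)
setup_local_ssh_key() {
  local dir=${1:-$HOME/.ssh}
  mkdir -p "$dir" && chmod 700 "$dir"
  # Generate an RSA key with an empty passphrase, only if none exists yet.
  [ -f "$dir/id_rsa" ] || ssh-keygen -q -t rsa -N '' -f "$dir/id_rsa"
  # Authorize the public key for logins to this same account (only once).
  touch "$dir/authorized_keys"
  grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" ||
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}

# Usage on the target host, then check that no password is requested:
#   setup_local_ssh_key
#   ssh localhost true && ssh 172.20.71.66 true
```

The first ssh to each name still asks you to confirm the host key once.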

Then run jps to see which services are running.

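If jps lists the five daemons NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, Hadoop started successfully!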
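Checking the jps listing can be scripted as well. A sketch that reads jps output on stdin and reports any of the five daemons that are missing (check_hadoop_daemons is a hypothetical name):

```shell
#!/bin/sh
# Sketch: verify that jps shows the five daemons of this pseudo-distributed setup.

# Hypothetical helper. Usage: jps | check_hadoop_daemons   (non-zero if any is missing)
check_hadoop_daemons() {
  local listing d missing=0
  listing=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; compare the class name as a whole field.
    if ! printf '%s\n' "$listing" | awk -v d="$d" '$2 == d { f = 1 } END { exit (f ? 0 : 1) }'; then
      echo "not running: $d"
      missing=1
    fi
  done
  return "$missing"
}
```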

2.10 Hadoop web UI

http://172.20.71.66:50070 # replace 172.20.71.66 with your server's IP

3. Install Hive

3.1 Unpack Hive into the install directory /data/CDH

cd /data/CDH

tar -zxvf /data/install_pkg_CDH/hive-1.1.0-cdh5.14.0.tar.gz

3.2 Configure environment variables

vim /etc/profile

# Add the following:

export HIVE_HOME=/data/CDH/hive-1.1.0-cdh5.14.0/

export PATH=$HIVE_HOME/bin:$PATH

export HIVE_CONF_DIR=$HIVE_HOME/conf

export HIVE_AUX_JARS_PATH=$HIVE_HOME/lib

source /etc/profile

3.3 Configure hive-env.sh

cd /data/CDH/hive-1.1.0-cdh5.14.0/conf

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

# Append the following at the end of the file:

export JAVA_HOME=/usr/java/jdk1.8.0_221-amd64

export HADOOP_HOME=/data/CDH/hadoop-2.6.0-cdh5.14.0

export HIVE_HOME=/data/CDH/hive-1.1.0-cdh5.14.0

export HIVE_CONF_DIR=/data/CDH/hive-1.1.0-cdh5.14.0/conf

export HIVE_AUX_JARS_PATH=/data/CDH/hive-1.1.0-cdh5.14.0/lib

3.4 Configure hive-site.xml

cd /data/CDH/hive-1.1.0-cdh5.14.0/conf

vi hive-site.xml

# Add the following (this is the complete file):

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:derby:;databaseName=metastore_db;create=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>APP</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>mine</value>

</property>

</configuration>

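3.5 Create HDFS directories (this seems to be optional and can be skipped)

cd /data/CDH/hive-1.1.0-cdh5.14.0

mkdir hive_hdfs

cd hive_hdfs

hdfs dfs -mkdir -p warehouse # requires Hadoop to be installed and running; relative HDFS paths resolve under your HDFS home directory (/user/root when run as root), not the local directory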

hdfs dfs -mkdir -p tmp

hdfs dfs -mkdir -p log

hdfs dfs -chmod -R 777 warehouse

hdfs dfs -chmod -R 777 tmp

hdfs dfs -chmod -R 777 log

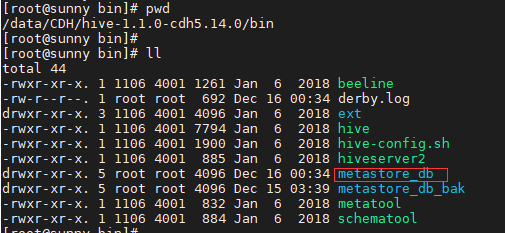

3.6 Initialize Hive (preferably from the bin directory of the Hive install)

cd /data/CDH/hive-1.1.0-cdh5.14.0/bin

schematool -dbType derby -initSchema

# To run schematool -dbType derby -initSchema again, first delete or rename the metastore_db directory it created.
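Because the JDBC URL in hive-site.xml uses the relative name metastore_db, embedded Derby creates that directory in whatever directory you start schematool or Hive from, which is why it helps to always run them from the same place. A sketch for moving an old metastore aside before re-initializing (reset_derby_metastore is a hypothetical helper; it renames rather than deletes):

```shell
#!/bin/sh
# Sketch: move an existing embedded-Derby metastore_db aside with a timestamp.

# Hypothetical helper. Usage: reset_derby_metastore [DIR]   (default: current directory)
reset_derby_metastore() {
  local dir=${1:-.} backup
  if [ -d "$dir/metastore_db" ]; then
    backup="$dir/metastore_db.bak.$(date +%Y%m%d%H%M%S)"
    mv "$dir/metastore_db" "$backup" && echo "moved to $backup"
  else
    echo "no metastore_db in $dir"
  fi
}

# Usage on the target host:
#   cd /data/CDH/hive-1.1.0-cdh5.14.0/bin
#   reset_derby_metastore . && ./schematool -dbType derby -initSchema
```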

3.7 Start Hive

hive --service metastore & # can be run from any directory

hive --service hiveserver2 & # can be run from any directory
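Both services start in the background, so it can take a while before they accept connections. A sketch that waits for a port to open, assuming the default ports (9083 for the metastore, 10000 for HiveServer2) and bash, since it relies on bash's /dev/tcp redirection; wait_for_port is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: wait until HOST:PORT accepts TCP connections (bash-only: /dev/tcp).

# Hypothetical helper. Usage: wait_for_port HOST PORT [TIMEOUT_SECONDS]
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} waited=0
  # Each probe opens (and immediately closes) a connection in a subshell.
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$host:$port is accepting connections"
}

# Usage on the target host (logging to files instead of the terminal):
#   nohup hive --service metastore   > /tmp/metastore.log   2>&1 &
#   wait_for_port 127.0.0.1 9083
#   nohup hive --service hiveserver2 > /tmp/hiveserver2.log 2>&1 &
#   wait_for_port 127.0.0.1 10000 120
```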

3.8 Use Hive

hive # can be run from any directory

3.9 Troubleshooting the Hive installation

3.9.1 java.lang.ClassNotFoundException: org.htrace.Trace

Download htrace-core.jar from the Maven repository and copy it into $HIVE_HOME/lib:

<!-- https://mvnrepository.com/artifact/org.htrace/htrace-core -->

<dependency>

<groupId>org.htrace</groupId>

<artifactId>htrace-core</artifactId>

<version>3.0</version>

</dependency>

cp /data/install_pkg_apache/htrace-core-3.0.jar /data/CDH/hive-1.1.0-cdh5.14.0/lib

cd /data/CDH/hive-1.1.0-cdh5.14.0/bin

3.9.2 [ERROR] Terminal initialization failed; falling back to unsupported java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

Fix: download jline-2.12.jar from the Maven repository and upload it to the server.

cd /data/CDH/hadoop-2.6.0-cdh5.14.0/share/hadoop/yarn/lib

mv jline-2.11.jar jline-2.11.jar_bak

cd /data/CDH/hive-1.1.0-cdh5.14.0/lib

cp jline-2.12.jar /data/CDH/hadoop-2.6.0-cdh5.14.0/share/hadoop/yarn/lib

Restart Hadoop:

cd /data/CDH/hadoop-2.6.0-cdh5.14.0/sbin

sh stop-all.sh

sh start-all.sh

Run again: schematool -dbType derby -initSchema

The error is still there!

Fix, take two:

vi /etc/profile

export HADOOP_USER_CLASSPATH_FIRST=true # then run source /etc/profile

Run again: schematool -dbType derby -initSchema (OK)
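This error typically means two jline versions are on the classpath: the old 0.9.x line, where jline.Terminal is a class, and jline 2.x, where it is an interface. HADOOP_USER_CLASSPATH_FIRST=true makes the hadoop launcher put HADOOP_CLASSPATH (where the hive script adds Hive's jars) ahead of Hadoop's own jars. To see which jline copies exist, here is a sketch (list_jars is a hypothetical helper):

```shell
#!/bin/sh
# Sketch: list jar files matching a name pattern under one or more directories.

# Hypothetical helper. Usage: list_jars PATTERN DIR...
list_jars() {
  local pattern=$1
  shift
  find "$@" -type f -name "$pattern" 2>/dev/null | sort
}

# Usage on the target host:
#   list_jars 'jline-*.jar' "$HADOOP_HOME" "$HIVE_HOME"
```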

Then start Hive:

./hive --service metastore &

./hive --service hiveserver2 &

3.9.3 java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

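Reference blog: https://www.58jb.com/html/89.html

In Hive versions before 2.0.0, two services, metastore and hiveserver2, must be started on a Hive master node before you can log in to the Hive command line (configure hive-site.xml first, then start them):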

hive --service metastore &

hive --service hiveserver2 &

3.10 Basic Hive test

hive # enter the Hive CLI

# run HQL statements

show databases;

create database test;

use test;

create table user_test(name string) row format delimited fields terminated by '\001';

insert into table user_test values('zs');

select * from user_test;

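4. Install Impala

4.1 Expand the disk (skip this if your server already has enough space)

A small detour first: this is a CentOS VM I built myself and it did not have enough disk space, so the disk had to be enlarged.

Reference blog: http://blog.itpub.net/117319/viewspace-2125079/

On Windows, open a cmd prompt. The VM disk image is:

C:\Users\kingdee\VirtualBox VMs\centos6\centos6.vdi

VBoxManage.exe modifymedium C:\Users\kingdee\VirtualBox VMs\centos6\centos6.vdi resize --20480 # this fails: the path contains spaces (and the option should be --resize 20480)

C:

cd Users\kingdee\VirtualBox VMs\centos6

F:\VirtualBox\VBoxManage.exe modifymedium centos6.vdi --resize 20480 # this works (the new size is in MB)

On CentOS: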

fdisk -l

fdisk /dev/sda

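n (new partition)

p (primary)

3 (partition number)

Enter (accept the default start)

+10G (size of the new partition)

w (write the partition table and exit)

Check the partitions (reboot so the kernel re-reads the partition table):

cat /proc/partitions

reboot # restart

mkfs -t ext3 /dev/sda3

After formatting, mount it on the target directory: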

mount /dev/sda3 /home # to unmount: umount /home
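A mount done by hand does not survive a reboot; to make it permanent it has to go into /etc/fstab. Note that mounting over /home hides whatever was in /home before. A sketch, assuming the /dev/sda3, /home and ext3 values above (add_fstab_entry is a hypothetical helper; it keeps a .bak copy and skips mount points that are already listed):

```shell
#!/bin/sh
# Sketch: append a mount to an fstab file unless the mount point is already there.

# Hypothetical helper. Usage: add_fstab_entry FSTAB DEVICE MOUNT_POINT FSTYPE
add_fstab_entry() {
  local fstab=$1 dev=$2 mnt=$3 fstype=$4
  # Skip if a non-comment line already mounts something at MOUNT_POINT.
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { f = 1 } END { exit (f ? 0 : 1) }' "$fstab"; then
    echo "$mnt already in $fstab"
    return 0
  fi
  cp "$fstab" "$fstab.bak"
  # Fields: device, mount point, type, options, dump, fsck order (2 = non-root).
  printf '%s\t%s\t%s\tdefaults\t0 2\n' "$dev" "$mnt" "$fstype" >> "$fstab"
}

# Usage on the target host, then verify without rebooting:
#   add_fstab_entry /etc/fstab /dev/sda3 /home ext3
#   umount /home && mount -a && df -h /home
```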

Impala will be installed under /home:

cd /home

mkdir -p data/CDH/impala_rpm

cd /home/data/CDH/impala_rpm

4.2 Install Impala (note: all install packages are placed in /home/data/CDH/impala_rpm_install_pkg)

cd /home/data/CDH/impala_rpm

rpm -ivh ../impala_rpm_install_pkg/impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

This fails with:

warning: ../impala_rpm_install_pkg/impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID e8f86acd: NOKEY

error: Failed dependencies:

bigtop-utils >= 0.7 is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hadoop is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hadoop-hdfs is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hadoop-yarn is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hadoop-mapreduce is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hbase is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hive >= 0.12.0+cdh5.1.0 is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

zookeeper is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

hadoop-libhdfs is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

avro-libs is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

parquet is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

sentry >= 1.3.0+cdh5.1.0 is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

sentry is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

/lib/lsb/init-functions is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64

libhdfs.so.0.0.0()(64bit) is needed by impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64
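When collecting packages for an offline install, it helps to turn this error into a plain list. A sketch that extracts the missing capabilities from rpm's "is needed by" lines (missing_deps is a hypothetical helper):

```shell
#!/bin/sh
# Sketch: reduce rpm's "Failed dependencies" output to a sorted list of what is missing.

# Hypothetical helper. Usage: rpm -ivh pkg.rpm 2>&1 | missing_deps
missing_deps() {
  # Lines look like "<capability> is needed by <package>"; keep the capability.
  sed -n 's/^[[:space:]]*\(.*\) is needed by .*/\1/p' | sort -u
}
```

To see a package's requirements without attempting the install, rpm -qpR package.rpm lists them directly.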

Hmm... bigtop-utils and sentry have to be installed first.

4.3 Install bigtop-utils and sentry

Download location for the bigtop-utils and sentry packages:

http://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/5.14.0/RPMS/noarch/


Run the following:

sudo rpm -ivh ../impala_rpm_install_pkg/bigtop-utils-0.7.0+cdh5.14.0+0-1.cdh5.14.0.p0.47.el7.noarch.rpm

sudo rpm -ivh ../impala_rpm_install_pkg/sentry-1.5.1+cdh5.14.0+432-1.cdh5.14.0.p0.47.el7.noarch.rpm --nodeps # --nodeps skips the dependency check

sudo rpm -ivh ../impala_rpm_install_pkg/impala-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm --nodeps

sudo rpm -ivh ../impala_rpm_install_pkg/impala-server-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

sudo rpm -ivh ../impala_rpm_install_pkg/impala-state-store-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

sudo rpm -ivh ../impala_rpm_install_pkg/impala-catalog-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

sudo rpm -ivh ../impala_rpm_install_pkg/impala-shell-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

When you get to impala-shell, python-setuptools has to be installed first.

4.4 Install python-setuptools

Download location for python-setuptools:

http://mirror.centos.org/altarch/7/os/aarch64/Packages/python-setuptools-0.9.8-7.el7.noarch.rpm

Run:

sudo rpm -ivh ../impala_rpm_install_pkg/python-setuptools-0.9.8-7.el7.noarch.rpm --nodeps

Then resume the impala-shell installation:

sudo rpm -ivh ../impala_rpm_install_pkg/impala-shell-2.11.0+cdh5.14.0+0-1.cdh5.14.0.p0.50.el6.x86_64.rpm

Done.

4.5 Configure bigtop-utils

vi /etc/default/bigtop-utils

export JAVA_HOME=/usr/java/jdk1.8.0_221-amd64

4.6 Set up the Impala configuration directory

cd /etc/impala/conf # copy the Hadoop and Hive configuration files into Impala's configuration directory

cp /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/core-site.xml ./

cp /data/CDH/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hdfs-site.xml ./

cp /data/CDH/hive-1.1.0-cdh5.14.0/conf/hive-site.xml ./

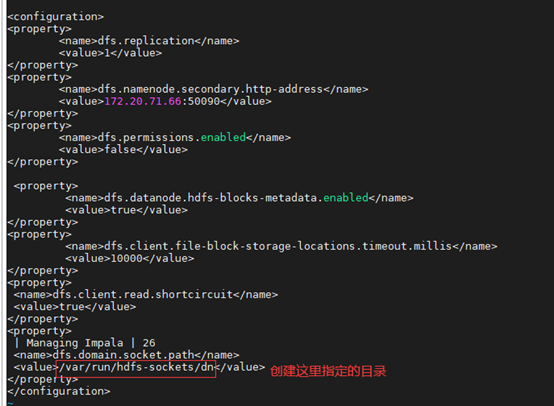

4.7 Configure hdfs-site.xml

vi hdfs-site.xml

# Add the following inside <configuration>:

<property>

<name>dfs.datanode.hdfs-blocks-metadata.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.client.file-block-storage-locations.timeout.millis</name>

<value>10000</value>

</property>

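4.8 Fix up the jar files

4.8.1 A quick primer on Linux symbolic links

Create a symlink:

ln -s [source file or directory] [link name]

For example, create a link named test in the current directory that points to the /var/www/test directory:

ln -s /var/www/test test

Delete a symlink:

This works the same as deleting an ordinary file, with rm:

rm -rf <link name> (do not add a trailing "/": with a trailing slash, rm follows the link and can delete the contents of the target directory instead of the link itself)

For example, delete test:

rm -rf test

Change a symlink:

ln -snf [new source file or directory] [link name]

This repoints the existing link to the new source.

For example, create a symlink:

ln -s /var/www/test /var/test

then point it at a new path:

ln -snf /var/www/test1 /var/test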
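The three operations can be tried safely in a scratch directory. A sketch (demo_symlinks is a hypothetical name) that creates a link, repoints it with ln -snf, and deletes only the link:

```shell
#!/bin/sh
# Sketch: create, repoint and delete a symlink inside a temporary directory.

# Hypothetical helper; cleans up after itself.
demo_symlinks() {
  local work
  work=$(mktemp -d)
  mkdir "$work/test" "$work/test1"
  touch "$work/test/keep.txt"

  ln -s "$work/test" "$work/link"       # create: link -> test
  echo "created:  $(readlink "$work/link")"

  # -n treats an existing link to a directory as a plain file, so -f replaces
  # the link itself instead of creating a new link inside the old target.
  ln -snf "$work/test1" "$work/link"    # modify: link -> test1
  echo "modified: $(readlink "$work/link")"

  rm "$work/link"                       # delete the link only (no trailing "/")
  [ -f "$work/test/keep.txt" ] && echo "target files untouched"

  rm -rf "$work"
}

demo_symlinks
```

The -n matters: without it, ln -sf on a link that points to a directory creates a new link inside that directory instead of replacing the link.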

4.8.2 Symlink the jars Impala needs

(The commands below can be copied as they are; just replace the version numbers, e.g. 2.6.0-cdh5.14.0 in my case, with the versions you installed.)

Hadoop jars

sudo rm -rf /usr/lib/impala/lib/hadoop-*.jar

sudo ln -s $HADOOP_HOME/share/hadoop/common/lib/hadoop-annotations-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-annotations.jar

sudo ln -s $HADOOP_HOME/share/hadoop/common/lib/hadoop-auth-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-auth.jar

sudo ln -s $HADOOP_HOME/share/hadoop/tools/lib/hadoop-aws-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-aws.jar

sudo ln -s $HADOOP_HOME/share/hadoop/common/hadoop-common-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-common.jar

sudo ln -s $HADOOP_HOME/share/hadoop/hdfs/hadoop-hdfs-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-hdfs.jar

sudo ln -s $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-mapreduce-client-common.jar

sudo ln -s $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-mapreduce-client-core.jar

sudo ln -s $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-mapreduce-client-jobclient.jar

sudo ln -s $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-mapreduce-client-shuffle.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-api-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-api.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-client-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-client.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-common.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-applicationhistoryservice.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-common.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-nodemanager.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-resourcemanager.jar

sudo ln -s $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-web-proxy.jar

sudo ln -s $HADOOP_HOME/share/hadoop/mapreduce1/hadoop-core-2.6.0-mr1-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-core.jar

sudo ln -s $HADOOP_HOME/share/hadoop/tools/lib/hadoop-azure-datalake-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-azure-datalake.jar

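# Re-create these links with the correct source jars (ln -snf overwrites the existing links; the hadoop-yarn-common and hadoop-yarn-server-common links above point at file names that do not exist):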

sudo ln -snf $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-common-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-common.jar

sudo ln -snf $HADOOP_HOME/share/hadoop/yarn/hadoop-yarn-server-common-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-yarn-server-common.jar

sudo ln -snf $HADOOP_HOME/share/hadoop/common/lib/avro-1.7.6-cdh5.14.0.jar /usr/lib/impala/lib/avro.jar

sudo ln -snf $HADOOP_HOME/share/hadoop/common/lib/zookeeper-3.4.5-cdh5.14.0.jar /usr/lib/impala/lib/zookeeper.jar
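Typing every source path by hand is error-prone, which is why two of the links above had to be redone. A sketch that searches a source tree for name-version.jar and links it as name.jar; link_jars is a hypothetical helper and the jar names in the usage line are only examples:

```shell
#!/bin/sh
# Sketch: link DST_DIR/<name>.jar to the first <name>-<version>.jar found under SRC_ROOT.

# Hypothetical helper. Usage: link_jars SRC_ROOT DST_DIR VERSION NAME...
link_jars() {
  local src_root=$1 dst_dir=$2 version=$3 status=0 name jar
  shift 3
  for name in "$@"; do
    # e.g. hadoop-hdfs-2.6.0-cdh5.14.0.jar anywhere under SRC_ROOT
    jar=$(find "$src_root" -type f -name "$name-$version.jar" | head -n 1)
    if [ -n "$jar" ]; then
      ln -snf "$jar" "$dst_dir/$name.jar"
    else
      echo "not found: $name-$version.jar" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage on the target host (as root, like the rest of this guide):
#   link_jars "$HADOOP_HOME/share/hadoop" /usr/lib/impala/lib 2.6.0-cdh5.14.0 \
#     hadoop-common hadoop-hdfs hadoop-yarn-common hadoop-yarn-server-common
```

Jars with a different version string, such as hadoop-core (2.6.0-mr1-cdh5.14.0) or the Hive jars (1.1.0-cdh5.14.0), need a separate call with their own version.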

Hive jars

sudo rm -rf /usr/lib/impala/lib/hive-*.jar

sudo ln -s $HIVE_HOME/lib/hive-ant-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-ant.jar

sudo ln -s $HIVE_HOME/lib/hive-beeline-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-beeline.jar

sudo ln -s $HIVE_HOME/lib/hive-common-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-common.jar

sudo ln -s $HIVE_HOME/lib/hive-exec-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-exec.jar

sudo ln -s $HIVE_HOME/lib/hive-hbase-handler-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-hbase-handler.jar

sudo ln -s $HIVE_HOME/lib/hive-metastore-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-metastore.jar

sudo ln -s $HIVE_HOME/lib/hive-serde-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-serde.jar

sudo ln -s $HIVE_HOME/lib/hive-service-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-service.jar

sudo ln -s $HIVE_HOME/lib/hive-shims-common-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-shims-common.jar

sudo ln -s $HIVE_HOME/lib/hive-shims-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-shims.jar

sudo ln -s $HIVE_HOME/lib/hive-shims-scheduler-1.1.0-cdh5.14.0.jar /usr/lib/impala/lib/hive-shims-scheduler.jar

sudo ln -snf $HIVE_HOME/lib/parquet-hadoop-bundle-1.5.0-cdh5.14.0.jar /usr/lib/impala/lib/parquet-hadoop-bundle.jar

Other jars

sudo ln -snf $HIVE_HOME/lib/hbase-annotations-1.2.0-cdh5.14.0.jar /usr/lib/impala/lib/hbase-annotations.jar

sudo ln -snf $HIVE_HOME/lib/hbase-common-1.2.0-cdh5.14.0.jar /usr/lib/impala/lib/hbase-common.jar

sudo ln -snf $HIVE_HOME/lib/hbase-protocol-1.2.0-cdh5.14.0.jar /usr/lib/impala/lib/hbase-protocol.jar

sudo ln -snf /data/CDH/impala-2.11.0-cdh5.14.0/thirdparty/hbase-1.2.0-cdh5.14.0/lib/hbase-client-1.2.0-cdh5.14.0.jar /usr/lib/impala/lib/hbase-client.jar

sudo ln -snf /data/CDH/impala-2.11.0-cdh5.14.0/thirdparty/hadoop-2.6.0-cdh5.14.0/lib/native/libhadoop.so /usr/lib/impala/lib/libhadoop.so

sudo ln -snf /data/CDH/impala-2.11.0-cdh5.14.0/thirdparty/hadoop-2.6.0-cdh5.14.0/lib/native/libhadoop.so.1.0.0 /usr/lib/impala/lib/libhadoop.so.1.0.0

sudo ln -snf /data/CDH/impala-2.11.0-cdh5.14.0/thirdparty/hadoop-2.6.0-cdh5.14.0/lib/native/libhdfs.so /usr/lib/impala/lib/libhdfs.so

sudo ln -snf /data/CDH/impala-2.11.0-cdh5.14.0/thirdparty/hadoop-2.6.0-cdh5.14.0/lib/native/libhdfs.so.0.0.0 /usr/lib/impala/lib/libhdfs.so.0.0.0

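Additional notes

If some jar links still do not work, search the server for the jar; if it is not there, download it from Maven, put it in a directory, and link to it from there.

For example, the hadoop-core.jar link turned out to be broken:

find / -name "hadoop-core*" # locate the jar, then create the link with ln -s <source> <link>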
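To find which links are broken in the first place, here is a sketch using GNU find's -xtype l, which matches symlinks whose target cannot be resolved (broken_links is a hypothetical helper):

```shell
#!/bin/sh
# Sketch: list dangling symlinks in a directory together with their targets.

# Hypothetical helper. Usage: broken_links [DIR]   (default: /usr/lib/impala/lib)
broken_links() {
  find "${1:-/usr/lib/impala/lib}" -maxdepth 1 -xtype l | while read -r link; do
    printf '%s -> %s\n' "$link" "$(readlink "$link")"
  done
}
```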

4.9 Start Impala

service impala-state-store start

service impala-catalog start

service impala-server start

Running service impala-state-store start fails:

[root@sunny conf]# service impala-state-store start

/etc/init.d/impala-state-store: line 34: /lib/lsb/init-functions: No such file or directory

/etc/init.d/impala-state-store: line 104: log_success_msg: command not found

redhat-lsb needs to be installed (it provides /lib/lsb/init-functions).

Download: http://rpmfind.net/linux/rpm2html/search.php?query=redhat-lsb-core(x86-64)

Choose redhat-lsb-core-4.1-27.el7.centos.1.x86_64.rpm and download it.

Once downloaded, run:

rpm -ivh redhat-lsb-core-4.1-27.el7.centos.1.x86_64.rpm

Then start Impala again:

service impala-state-store start

service impala-catalog start

service impala-server start # start impalad on every DataNode (here, this single host)

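Once all services are running, Impala is ready to use.

4.10 Use Impala

Enter Impala:

impala-shell # run from any directory; basic usage is the same as Hive

impalad web UI: http://172.20.71.66:25000 (default port)

statestored web UI: http://172.20.71.66:25010 (default port)

catalogd web UI: http://172.20.71.66:25020 (default port)

Impala log files: /var/log/impala

Impala configuration: /etc/default/impala

/etc/impala/conf (core-site.xml, hdfs-site.xml, hive-site.xml)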

4.11 Troubleshooting the Impala installation

4.11.1 FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

Fix:

Reference: http://blog.csdn.net/l1028386804/article/details/51566262

Go to the conf directory of your Hive install and find the following property in hive-site.xml (if you have not created hive-site.xml, it is defined in hive-default.xml.template):

<property>

<name>hive.metastore.schema.verification</name>

<value>true</value>

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manully migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

Change it to:

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manully migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

Then restart Hive:

hive --service metastore &

hive --service hiveserver2 &

4.11.2 Invalid short-circuit reads configuration:- Impala cannot read or execute the parent directory of dfs.domain.socket.path

Fix: create the directories for the socket path configured in /etc/impala/conf/hdfs-site.xml:

cd /var/run

mkdir hdfs-sockets

cd hdfs-sockets

mkdir dn

4.11.3 Impala INSERT fails: NoClassDefFoundError: org/apache/hadoop/fs/adl/AdlFileSystem

[localhost.localdomain:21000] > insert into user_test values('zs');

Query: insert into user_test values('zs')

Query submitted at: 2020-12-17 02:39:24 (Coordinator: http://sunny:25000)

ERROR: AnalysisException: Failed to load metadata for table: test.user_test. Running 'invalidate metadata test.user_test' may resolve this problem.

CAUSED BY: NoClassDefFoundError: org/apache/hadoop/fs/adl/AdlFileSystem

CAUSED BY: TableLoadingException: Failed to load metadata for table: test.user_test. Running 'invalidate metadata test.user_test' may resolve this problem.

CAUSED BY: NoClassDefFoundError: org/apache/hadoop/fs/adl/AdlFileSystem

Fix:

Impala does not reference hadoop-azure-datalake.jar; add the missing link:

sudo ln -s /data/CDH/hadoop-2.6.0-cdh5.14.0/share/hadoop/tools/lib/hadoop-azure-datalake-2.6.0-cdh5.14.0.jar /usr/lib/impala/lib/hadoop-azure-datalake.jar

Reference for the core-site.xml properties: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/core-default.xml

That completes the Hadoop, Hive and Impala installation. Done!

--===================== Addendum 2020-12-28 ================

ERROR: AnalysisException: Unable to INSERT into target table (Test.user_test) because Impala does not have WRITE access to at least one HDFS path: hdfs://172.20.71.66:9000/user/hive/warehouse/test.db/user_test

Fix:

vi /etc/selinux/config

SELINUX=disabled # takes effect after a reboot

vi /data/CDH/hive-1.1.0-cdh5.14.0/conf/hive-site.xml

Add:

<property>

<name>hive.metastore.warehouse.dir</name>

<!-- modify here -->

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

hadoop fs -chmod 777 /user/hive/warehouse/test.db/user_test

hive --service metastore &

hive --service hiveserver2 &

service impala-state-store restart

service impala-catalog restart

service impala-server restart

impala-shell

invalidate metadata;

impala-shell --auth_creds_ok_in_clear -l -i 172.20.71.66 -u APP

-- ============================================================================
