SpringBoot+Mybatis+Sharding-JDBC实现分库分表

项目里面一直用Sharding-JDBC,今天整理一下,就当温故而知新了,也是稳固而知新了。

一、整体介绍

- 项目采用的框架是SpringBoot+Mybatis+Sharding-JDBC,采用的是properties的形式;

- 分为两个数据库sharding_0,sharding_1。每个库三个表,t_user_00,t_user_01,t_user_02;

- 分库策略:age % 2 = 0的数据存储到sharding_0 ,为1的数据存储到sharding_1;

- 分表策略:user_id % 3 = 0的数据存储到t_user_00,为1的数据存储到t_user_01,为2的数据存储到t_user_02;

- Sharding-JDBC官网

二、数据库文件

分别在sharding_0,sharding_1两个数据库中执行下列脚本。

SET NAMES utf8mb4; SET FOREIGN_KEY_CHECKS = 0; -- ---------------------------- -- Table structure for t_user_00 -- ---------------------------- DROP TABLE IF EXISTS `t_user_00`; CREATE TABLE `t_user_00` ( `id` bigint(0) NOT NULL, `user_id` int(0) NOT NULL, `name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL, `age` int(0) NOT NULL, PRIMARY KEY (`id`) USING BTREE ) ENGINE = InnoDB AUTO_INCREMENT = 10 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic; -- ---------------------------- -- Table structure for t_user_01 -- ---------------------------- DROP TABLE IF EXISTS `t_user_01`; CREATE TABLE `t_user_01` ( `id` bigint(0) NOT NULL, `user_id` int(0) NOT NULL, `name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL, `age` int(0) NOT NULL, PRIMARY KEY (`id`) USING BTREE ) ENGINE = InnoDB AUTO_INCREMENT = 6 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic; -- ---------------------------- -- Table structure for t_user_02 -- ---------------------------- DROP TABLE IF EXISTS `t_user_02`; CREATE TABLE `t_user_02` ( `id` bigint(0) NOT NULL, `user_id` int(0) NOT NULL, `name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL, `age` int(0) NOT NULL, PRIMARY KEY (`id`) USING BTREE ) ENGINE = InnoDB AUTO_INCREMENT = 3 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic; SET FOREIGN_KEY_CHECKS = 1;

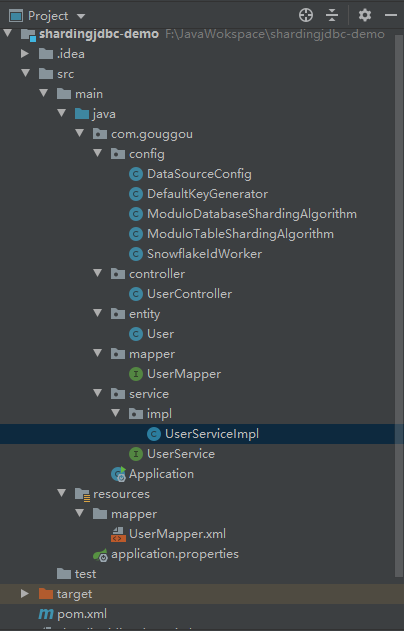

三、项目结构

四、pom.xml

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <parent> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-parent</artifactId> <version>2.1.13.RELEASE</version> <relativePath/> <!-- lookup parent from repository --> </parent> <groupId>com.gougou</groupId> <artifactId>shardingjdbc-demo</artifactId> <version>0.0.1-SNAPSHOT</version> <name>shardingjdbc-demo</name> <description>shardingjdbc-demo</description> <properties> <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding> <java.version>1.8</java.version> </properties> <dependencies> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency> <dependency> <groupId>org.projectlombok</groupId> <artifactId>lombok</artifactId> <optional>true</optional> </dependency> <dependency> <groupId>org.mybatis.spring.boot</groupId> <artifactId>mybatis-spring-boot-starter</artifactId> <version>1.3.2</version> </dependency> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> </dependency> <dependency> <groupId>com.dangdang</groupId> <artifactId>sharding-jdbc-core</artifactId> <version>1.5.4</version> </dependency> <!--Snowflake算法中使用-->

<dependency> <groupId>org.apache.commons</groupId> <artifactId>commons-lang3</artifactId> <version>3.8.1</version> </dependency> </dependencies> <build> <plugins> <plugin> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-maven-plugin</artifactId> </plugin> </plugins> </build> </project>

五、application.properties

spring.application.name=shardingjdbc-demo #mybatis配置 # mapper映射文件位置 #mybatis.mapper-locations=classpath:mapper/*.xml # 实体类所在的位置 mybatis.type-aliases-package=com.gouggou.shardingtable.entity #datasource spring.devtools.remote.restart.enabled=false #data source1 spring.datasource.db0.driverClassName=com.mysql.jdbc.Driver spring.datasource.db0.jdbcUrl=jdbc:mysql://127.0.0.1:3306/sharding_0?serverTimezone=UTC spring.datasource.db0.username=root spring.datasource.db0.password=root #data source2 spring.datasource.db1.driverClassName=com.mysql.jdbc.Driver spring.datasource.db1.jdbcUrl=jdbc:mysql://127.0.0.1:3306/sharding_1?serverTimezone=UTC spring.datasource.db1.username=root spring.datasource.db1.password=root

六、启动类Application

@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})

@MapperScan(basePackages = "com.gouggou.mapper")

@EnableTransactionManagement(proxyTargetClass = true) //开启事物管理功能

public class Application {

public static void main(String[] args) {

SpringApplication.run(Application.class, args);

}

}

七、分库分表策略配置

1、数据源配置

注意下面标红的部分,这是分库分表的部分逻辑体现。

package com.gouggou.config; import com.dangdang.ddframe.rdb.sharding.api.ShardingDataSourceFactory; import com.dangdang.ddframe.rdb.sharding.api.rule.BindingTableRule; import com.dangdang.ddframe.rdb.sharding.api.rule.DataSourceRule; import com.dangdang.ddframe.rdb.sharding.api.rule.ShardingRule; import com.dangdang.ddframe.rdb.sharding.api.rule.TableRule; import com.dangdang.ddframe.rdb.sharding.api.strategy.database.DatabaseShardingStrategy; import com.dangdang.ddframe.rdb.sharding.api.strategy.table.TableShardingStrategy; import org.apache.ibatis.session.SqlSessionFactory; import org.mybatis.spring.SqlSessionFactoryBean; import org.mybatis.spring.SqlSessionTemplate; import org.mybatis.spring.annotation.MapperScan; import org.springframework.beans.factory.annotation.Qualifier; import org.springframework.boot.context.properties.ConfigurationProperties; import org.springframework.boot.jdbc.DataSourceBuilder; import org.springframework.context.annotation.Bean; import org.springframework.context.annotation.Configuration; import org.springframework.context.annotation.Primary; import org.springframework.core.io.support.PathMatchingResourcePatternResolver; import org.springframework.jdbc.datasource.DataSourceTransactionManager; import javax.sql.DataSource; import java.sql.SQLException; import java.util.*; @Configuration @MapperScan(basePackages = "com.gouggou.mapper", sqlSessionTemplateRef = "test1SqlSessionTemplate") public class DataSourceConfig { /** * 主键生成器 * * @return */ @Bean public DefaultKeyGenerator defaultKeyGenerator() { return new DefaultKeyGenerator(); } /** * 配置数据源0 * * @return */ @Bean(name = "dataSource0") @ConfigurationProperties(prefix = "spring.datasource.db0") public DataSource dataSource0() { return DataSourceBuilder.create().build(); } /** * 配置数据源1 * * @return */ @Bean(name = "dataSource1") @ConfigurationProperties(prefix = "spring.datasource.db1") public DataSource dataSource1() { return DataSourceBuilder.create().build(); } /** * 配置数据源规则,即将多个数据源交给sharding-jdbc管理,并且可以设置默认的数据源, * 当表没有配置分库规则时会使用默认的数据源 * * @param dataSource0 * @param dataSource1 * @return */ @Bean public DataSourceRule dataSourceRule(@Qualifier("dataSource0") DataSource dataSource0, @Qualifier("dataSource1") DataSource dataSource1) { Map<String, DataSource> dataSourceMap = new HashMap<>(); dataSourceMap.put("dataSource0", dataSource0); dataSourceMap.put("dataSource1", dataSource1); //设置默认库,两个库以上时必须设置默认库。默认库的数据源名称必须是dataSourceMap的key之一 return new DataSourceRule(dataSourceMap, "dataSource0"); } /** * 配置数据源策略和表策略,具体策略需要自己实现 * * @param dataSourceRule * @return */ @Bean public ShardingRule shardingRule(DataSourceRule dataSourceRule) { //分表策略 TableRule userTableRule = TableRule.builder("t_user") .actualTables(Arrays.asList("t_user_00", "t_user_01", "t_user_02")) .tableShardingStrategy(new TableShardingStrategy("user_id", new ModuloTableShardingAlgorithm())) .dataSourceRule(dataSourceRule) .build(); //绑定表策略,在查询时会使用主表策略计算路由的数据源,因此需要约定绑定表策略的表的规则需要一致,可以一定程度提高效率 List<BindingTableRule> bindingTableRules = new ArrayList<BindingTableRule>(); bindingTableRules.add(new BindingTableRule(Arrays.asList(userTableRule))); return ShardingRule.builder() .dataSourceRule(dataSourceRule) .tableRules(Arrays.asList(userTableRule)) .bindingTableRules(bindingTableRules) .databaseShardingStrategy(new DatabaseShardingStrategy("age", new ModuloDatabaseShardingAlgorithm())) .tableShardingStrategy(new TableShardingStrategy("user_id", new ModuloTableShardingAlgorithm())) .build(); } /** * 创建sharding-jdbc的数据源DataSource,MybatisAutoConfiguration会使用此数据源 * * @param shardingRule * @return * @throws SQLException */ @Bean(name = "dataSource") public DataSource shardingDataSource(ShardingRule shardingRule) throws SQLException { return ShardingDataSourceFactory.createDataSource(shardingRule); } /** * 手动配置事务管理器 * * @param dataSource * @return */ @Bean public DataSourceTransactionManager transactitonManager(@Qualifier("dataSource") DataSource dataSource) { return new DataSourceTransactionManager(dataSource); } @Bean(name = "test1SqlSessionFactory") @Primary public SqlSessionFactory testSqlSessionFactory(@Qualifier("dataSource") DataSource dataSource) throws Exception { SqlSessionFactoryBean bean = new SqlSessionFactoryBean(); bean.setDataSource(dataSource); bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:mapper/*.xml")); return bean.getObject(); } @Bean(name = "test1SqlSessionTemplate") @Primary public SqlSessionTemplate testSqlSessionTemplate(@Qualifier("test1SqlSessionFactory") SqlSessionFactory sqlSessionFactory) throws Exception { return new SqlSessionTemplate(sqlSessionFactory); } }

2、分库策略配置

package com.gouggou.config; import com.dangdang.ddframe.rdb.sharding.api.ShardingValue; import com.dangdang.ddframe.rdb.sharding.api.strategy.database.SingleKeyDatabaseShardingAlgorithm; import com.google.common.collect.Range; import java.util.Collection; import java.util.LinkedHashSet; /** * 分库策略 */ public class ModuloDatabaseShardingAlgorithm implements SingleKeyDatabaseShardingAlgorithm<Integer> { /** * equals查询 * * @param databaseNames * @param shardingValue * @return */ @Override public String doEqualSharding(Collection<String> databaseNames, ShardingValue<Integer> shardingValue) { for (String each : databaseNames) { if (each.endsWith(Integer.parseInt(shardingValue.getValue().toString()) % 2 + "")) { return each; } } throw new IllegalArgumentException(); } /** * in查询 * * @param databaseNames * @param shardingValue * @return */ @Override public Collection<String> doInSharding(Collection<String> databaseNames, ShardingValue<Integer> shardingValue) { Collection<String> result = new LinkedHashSet<>(databaseNames.size()); for (Integer value : shardingValue.getValues()) { for (String tableName : databaseNames) { if (tableName.endsWith(value % 2 + "")) { result.add(tableName); } } } return result; } /** * between查询 * * @param databaseNames * @param shardingValue * @return */ @Override public Collection<String> doBetweenSharding(Collection<String> databaseNames, ShardingValue<Integer> shardingValue) { Collection<String> result = new LinkedHashSet<>(databaseNames.size()); Range<Integer> range = (Range<Integer>) shardingValue.getValueRange(); for (Integer i = range.lowerEndpoint(); i <= range.upperEndpoint(); i++) { for (String each : databaseNames) { if (each.endsWith(i % 2 + "")) { result.add(each); } } } return result; } }

3、分表策略配置

package com.gouggou.config; import com.dangdang.ddframe.rdb.sharding.api.ShardingValue; import com.dangdang.ddframe.rdb.sharding.api.strategy.table.SingleKeyTableShardingAlgorithm; import com.google.common.collect.Range; import java.util.Collection; import java.util.LinkedHashSet; /** * 分表策略 */ public class ModuloTableShardingAlgorithm implements SingleKeyTableShardingAlgorithm<Integer> { /** * equals查询 * * @param tableNames * @param shardingValue * @return */ @Override public String doEqualSharding(Collection<String> tableNames, ShardingValue<Integer> shardingValue) { for (String each : tableNames) { if (each.endsWith(shardingValue.getValue() % 3 + "")) { return each; } } throw new IllegalArgumentException(); } /** * in查询 * * @param tableNames * @param shardingValue * @return */ @Override public Collection<String> doInSharding(Collection<String> tableNames, ShardingValue<Integer> shardingValue) { Collection<String> result = new LinkedHashSet<>(tableNames.size()); for (Integer value : shardingValue.getValues()) { for (String tableName : tableNames) { if (tableName.endsWith(value % 3 + "")) { result.add(tableName); } } } return result; } /** * between查询 * * @param tableNames * @param shardingValue * @return */ @Override public Collection<String> doBetweenSharding(Collection<String> tableNames, ShardingValue<Integer> shardingValue) { Collection<String> result = new LinkedHashSet<>(tableNames.size()); Range<Integer> range = (Range<Integer>) shardingValue.getValueRange(); for (Integer i = range.lowerEndpoint(); i <= range.upperEndpoint(); i++) { for (String each : tableNames) { if (each.endsWith(i % 3 + "")) { result.add(each); } } } return result; } }

4、分布式主键生成策略

不一定非要使用Snowflake算法为主键,你也可以自己实现,然后实现KeyGenerator接口。

package com.gouggou.config; import lombok.extern.slf4j.Slf4j; import org.apache.commons.lang3.RandomUtils; import org.apache.commons.lang3.StringUtils; import org.apache.commons.lang3.SystemUtils; import java.net.Inet4Address; import java.net.UnknownHostException; /** * 分布式数据库主键生成策略 */ @Slf4j public class SnowflakeIdWorker { /** * 开始时间截 (2015-01-01) */ private final long twepoch = 1489111610226L; /** * 机器id所占的位数 */ private final long workerIdBits = 5L; /** * 数据标识id所占的位数 */ private final long dataCenterIdBits = 5L; /** * 支持的最大机器id,结果是31 (这个移位算法可以很快的计算出几位二进制数所能表示的最大十进制数) */ private final long maxWorkerId = -1L ^ (-1L << workerIdBits); /** * 支持的最大数据标识id,结果是31 */ private final long maxDataCenterId = -1L ^ (-1L << dataCenterIdBits); /** * 序列在id中占的位数 */ private final long sequenceBits = 12L; /** * 机器ID向左移12位 */ private final long workerIdShift = sequenceBits; /** * 数据标识id向左移17位(12+5) */ private final long dataCenterIdShift = sequenceBits + workerIdBits; /** * 时间截向左移22位(5+5+12) */ private final long timestampLeftShift = sequenceBits + workerIdBits + dataCenterIdBits; /** * 生成序列的掩码,这里为4095 (0b111111111111=0xfff=4095) */ private final long sequenceMask = -1L ^ (-1L << sequenceBits); /** * 工作机器ID(0~31) */ private long workerId; /** * 数据中心ID(0~31) */ private long dataCenterId; /** * 毫秒内序列(0~4095) */ private long sequence = 0L; /** * 上次生成ID的时间截 */ private long lastTimestamp = -1L; private static SnowflakeIdWorker idWorker; static { idWorker = new SnowflakeIdWorker(getWorkId(), getDataCenterId()); } //==============================Constructors===================================== /** * 构造函数 * * @param workerId 工作ID (0~31) * @param dataCenterId 数据中心ID (0~31) */ public SnowflakeIdWorker(long workerId, long dataCenterId) { if (workerId > maxWorkerId || workerId < 0) { throw new IllegalArgumentException(String.format("workerId can't be greater than %d or less than 0", maxWorkerId)); } if (dataCenterId > maxDataCenterId || dataCenterId < 0) { throw new IllegalArgumentException(String.format("dataCenterId can't be greater than %d or less than 0", maxDataCenterId)); } this.workerId = workerId; this.dataCenterId = dataCenterId; } // ==============================Methods========================================== /** * 获得下一个ID (该方法是线程安全的) * * @return SnowflakeId */ public synchronized long nextId() { long timestamp = timeGen(); //如果当前时间小于上一次ID生成的时间戳,说明系统时钟回退过这个时候应当抛出异常 if (timestamp < lastTimestamp) { throw new RuntimeException( String.format("Clock moved backwards. Refusing to generate id for %d milliseconds", lastTimestamp - timestamp)); } //如果是同一时间生成的,则进行毫秒内序列 if (lastTimestamp == timestamp) { sequence = (sequence + 1) & sequenceMask; //毫秒内序列溢出 if (sequence == 0) { //阻塞到下一个毫秒,获得新的时间戳 timestamp = tilNextMillis(lastTimestamp); } } //时间戳改变,毫秒内序列重置 else { sequence = 0L; } //上次生成ID的时间截 lastTimestamp = timestamp; //移位并通过或运算拼到一起组成64位的ID return ((timestamp - twepoch) << timestampLeftShift) | (dataCenterId << dataCenterIdShift) | (workerId << workerIdShift) | sequence; } /** * 阻塞到下一个毫秒,直到获得新的时间戳 * * @param lastTimestamp 上次生成ID的时间截 * @return 当前时间戳 */ protected long tilNextMillis(long lastTimestamp) { long timestamp = timeGen(); while (timestamp <= lastTimestamp) { timestamp = timeGen(); } return timestamp; } /** * 返回以毫秒为单位的当前时间 * * @return 当前时间(毫秒) */ protected long timeGen() { return System.currentTimeMillis(); } private static Long getWorkId() { try { String hostAddress = Inet4Address.getLocalHost().getHostAddress(); int[] ints = StringUtils.toCodePoints(hostAddress); int sums = 0; for (int b : ints) { sums += b; } return (long) (sums % 32); } catch (UnknownHostException e) { // 如果获取失败,则使用随机数备用 return RandomUtils.nextLong(0, 31); } } private static Long getDataCenterId() { int[] ints = StringUtils.toCodePoints(SystemUtils.getHostName()); int sums = 0; for (int i : ints) { sums += i; } return (long) (sums % 32); } /** * 静态工具类 * * @return */ public static synchronized Long generateId() { return idWorker.nextId(); } }

package com.gouggou.config; import com.dangdang.ddframe.rdb.sharding.keygen.KeyGenerator; public class DefaultKeyGenerator implements KeyGenerator { @Override public Number generateKey() { return SnowflakeIdWorker.generateId(); } }

八、controller

package com.gouggou.controller; import com.gouggou.config.DefaultKeyGenerator; import com.gouggou.entity.User; import com.gouggou.service.UserService; import org.springframework.beans.factory.annotation.Autowired; import org.springframework.web.bind.annotation.RequestMapping; import org.springframework.web.bind.annotation.RestController; import java.util.ArrayList; import java.util.Arrays; import java.util.List; import java.util.Random; @RequestMapping("user") @RestController public class UserController { @Autowired private UserService userService; @Autowired private DefaultKeyGenerator defaultKeyGenerator; @RequestMapping("save") public String save() { User user = null; for (int i = 0; i < 60; i++) { user = new User(); user.setId(defaultKeyGenerator.generateKey().longValue()); user.setUserId(new Random().nextInt(1000) + 1); user.setName("张三" + user.getUserId()); user.setAge(new Random().nextInt(80) + 1); userService.insert(user); } return "创建成功!"; } @RequestMapping("findById") public List<User> findById(Integer id){ List<Integer> list = new ArrayList<>(1); list.add(id); return userService.findByUserIds(list); } }

九、service

package com.gouggou.service; import com.gouggou.entity.User; import java.util.List; public interface UserService { Integer insert(User u); List<User> findByUserIds(List<Integer> userIds); }

package com.gouggou.service.impl; import com.gouggou.entity.User; import com.gouggou.mapper.UserMapper; import com.gouggou.service.UserService; import org.springframework.beans.factory.annotation.Autowired; import org.springframework.stereotype.Service; import org.springframework.transaction.annotation.Transactional; import java.util.List; @Service @Transactional public class UserServiceImpl implements UserService { @Autowired private UserMapper userMapper; @Override public Integer insert(User u) { return userMapper.insert(u); } @Override public List<User> findByUserIds(List<Integer> userIds) { return userMapper.findByUserIds(userIds); } }

十、entity

package com.gouggou.entity; import lombok.Data; import java.io.Serializable; @Data public class User implements Serializable { private static final long serialVersionUID = -5514139686858156155L; private Long id; private Integer userId; private String name; private Integer age; }

十一、mapper

package com.gouggou.mapper; import com.gouggou.entity.User; import org.springframework.stereotype.Repository; import java.util.List; @Repository public interface UserMapper { Integer insert(User u); List<User> findByUserIds(List<Integer> userIds); }

<?xml version="1.0" encoding="UTF-8" ?> <!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" > <mapper namespace="com.gouggou.mapper.UserMapper"> <resultMap id="resultMap" type="com.gouggou.entity.User"> <id column="id" property="id" jdbcType="INTEGER"/> <result column="user_id" property="userId" jdbcType="INTEGER"/> <result column="name" property="name" jdbcType="VARCHAR"/> <result column="age" property="age" jdbcType="INTEGER"/> </resultMap> <insert id="insert"> insert into t_user (id,user_id,name,age) values (#{id},#{userId},#{name},#{age}) </insert> <select id="findByUserIds" resultMap="resultMap"> select <include refid="columnsName"/> from t_user where user_id in ( <foreach collection="list" item="item" separator=","> #{item} </foreach> ) </select> <sql id="columnsName"> id,user_id,name,age </sql> </mapper>

十二、总结

1、我使用的分库分表依赖是com.dangdang.sharding-jdbc-core。但是后来当当把它捐给Apache,依赖变为org.apache.shardingsphere.sharding-jdbc-core,代码可能会有所不同,但是原理是相通的;

2、SpringBoot的配置文件有两种格式,一个是properties,一个是yml。这里采用的properties,yml格式的配置在探索中;

3、这里我从网上找的SnowFlake算法生成的id都是偶数,本来我打算使用id作为分库策略的,后来没办法就用了age做分库策略;

4、以后再补充读写分离的功能;

在全栈的道路上,积极向上、成熟稳重、谦虚好学、怀着炽热的心向前方的走得更远。