Enabling sharding on a collection in MongoDB

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5cc2dd241030c29eb9c33e2a")

}

shards:

{ "_id" : "rs01", "host" : "rs01/192.168.100.25:27001,192.168.100.26:27001", "state" : 1 }

{ "_id" : "rs02", "host" : "rs02/192.168.100.26:27002,192.168.101.25:27002", "state" : 1 }

{ "_id" : "rs03", "host" : "rs03/192.168.100.25:27003,192.168.101.25:27003", "state" : 1 }

active mongoses:

"3.6.12" : 3

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

rs01 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : rs01 Timestamp(1, 0)

{ "_id" : "test_db", "primary" : "rs03", "partitioned" : true }

test_db.test_tab

shard key: { "name" : 1 }

unique: false

balancing: true

chunks:

rs03 1

{ "name" : { "$minKey" : 1 } } -->> { "name" : { "$maxKey" : 1 } } on : rs03 Timestamp(1, 0)

mongos> db.handleResultMongo.insert({'name':'test'});

WriteResult({ "nInserted" : 1 })

mongos> show tables;

handleResultMongo

mongos> db.handleResultMongo.find()

{ "_id" : ObjectId("5cd5125795294c8733f3dad2"), "name" : "test" }

mongos> sh.enableSharding('scdataDB')

{

"ok" : 1,

"operationTime" : Timestamp(1557467898, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1557467898, 5),

"signature" : {

"hash" : BinData(0,"DPZYJwN+9VcAasQPMybjqKFbL3U="),

"keyId" : NumberLong("6684147943659798555")

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5cc2dd241030c29eb9c33e2a")

}

shards:

{ "_id" : "rs01", "host" : "rs01/192.168.100.25:27001,192.168.100.26:27001", "state" : 1 }

{ "_id" : "rs02", "host" : "rs02/192.168.100.26:27002,192.168.101.25:27002", "state" : 1 }

{ "_id" : "rs03", "host" : "rs03/192.168.100.25:27003,192.168.101.25:27003", "state" : 1 }

active mongoses:

"3.6.12" : 3

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

rs01 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : rs01 Timestamp(1, 0)

{ "_id" : "handleResultMongo", "primary" : "rs02", "partitioned" : true }

{ "_id" : "scdataDB", "primary" : "rs02", "partitioned" : true }

{ "_id" : "test_db", "primary" : "rs03", "partitioned" : true }

test_db.test_tab

shard key: { "name" : 1 }

unique: false

balancing: true

chunks:

rs03 1

{ "name" : { "$minKey" : 1 } } -->> { "name" : { "$maxKey" : 1 } } on : rs03 Timestamp(1, 0)

mongos> sh.shardCollection("scdataDB.handleResultMongo",{"_id":1})

{

"collectionsharded" : "scdataDB.handleResultMongo",

"collectionUUID" : UUID("94279e8c-e74b-4901-8e91-a61903901933"),

"ok" : 1,

"operationTime" : Timestamp(1557468137, 11),

"$clusterTime" : {

"clusterTime" : Timestamp(1557468137, 11),

"signature" : {

"hash" : BinData(0,"69UAIYlxWDxCsKBQ3IThOZasMmU="),

"keyId" : NumberLong("6684147943659798555")

}

}

}
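The sh.shardCollection call above shards the collection on a ranged { "_id" : 1 } key, and the stats() output that follows reports a single chunk on rs02. The sketch below is a simplified in-memory model of ranged chunk routing, not MongoDB's actual implementation. It uses plain numbers instead of ObjectId values, and the split points are made up for illustration. It shows why every document lands on one shard until the chunk is split and migrated.

```javascript
// Hypothetical model of ranged chunks; MIN and MAX stand in for $minKey and $maxKey.
var MIN = -Infinity;
var MAX = Infinity;

// Return the shard owning `value`: the chunk whose range satisfies min <= value < max.
function findShard(chunks, value) {
  for (var i = 0; i < chunks.length; i++) {
    if (value >= chunks[i].min && value < chunks[i].max) {
      return chunks[i].shard;
    }
  }
  return null; // no chunk covers the value (cannot happen if ranges span MIN..MAX)
}

// Right after sh.shardCollection: one chunk covering the whole key space.
var initialChunks = [{ min: MIN, max: MAX, shard: "rs02" }];

// After splits and migrations (split points 3000 and 7000 are invented here).
var splitChunks = [
  { min: MIN, max: 3000, shard: "rs01" },
  { min: 3000, max: 7000, shard: "rs02" },
  { min: 7000, max: MAX, shard: "rs03" }
];

var before = findShard(initialChunks, 42);  // "rs02": every key maps to the only chunk
var afterLow = findShard(splitChunks, 42);  // "rs01"
var afterHigh = findShard(splitChunks, 9999); // "rs03"
```

With a single chunk, the key value does not matter: all writes go to the shard that holds it.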

mongos> db.handleResultMongo.stats()

{

"sharded" : true,

"capped" : false,

"ns" : "scdataDB.handleResultMongo",

"count" : 1,

"size" : 37,

"storageSize" : 16384,

"totalIndexSize" : 16384,

"indexSizes" : {

"_id_" : 16384

},

"avgObjSize" : 37,

"nindexes" : 1,

"nchunks" : 1,

"shards" : {

"rs02" : {

"ns" : "scdataDB.handleResultMongo",

"size" : 37,

"count" : 1,

"avgObjSize" : 37,

"storageSize" : 16384,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

},

"creationString" : "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=true),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_image_max=0,memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type" : "file",

"uri" : "statistics:table:scdataDB/collection-17-752567444311372324",

"LSM" : {

"bloom filter false positives" : 0,

"bloom filter hits" : 0,

"bloom filter misses" : 0,

"bloom filter pages evicted from cache" : 0,

"bloom filter pages read into cache" : 0,

"bloom filters in the LSM tree" : 0,

"chunks in the LSM tree" : 0,

"highest merge generation in the LSM tree" : 0,

"queries that could have benefited from a Bloom filter that did not exist" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"total size of bloom filters" : 0

},

"block-manager" : {

"allocations requiring file extension" : 3,

"blocks allocated" : 3,

"blocks freed" : 0,

"checkpoint size" : 4096,

"file allocation unit size" : 4096,

"file bytes available for reuse" : 0,

"file magic number" : 120897,

"file major version number" : 1,

"file size in bytes" : 16384,

"minor version number" : 0

},

"btree" : {

"btree checkpoint generation" : 19914,

"column-store fixed-size leaf pages" : 0,

"column-store internal pages" : 0,

"column-store variable-size RLE encoded values" : 0,

"column-store variable-size deleted values" : 0,

"column-store variable-size leaf pages" : 0,

"fixed-record size" : 0,

"maximum internal page key size" : 368,

"maximum internal page size" : 4096,

"maximum leaf page key size" : 2867,

"maximum leaf page size" : 32768,

"maximum leaf page value size" : 67108864,

"maximum tree depth" : 3,

"number of key/value pairs" : 0,

"overflow pages" : 0,

"pages rewritten by compaction" : 0,

"row-store internal pages" : 0,

"row-store leaf pages" : 0

},

"cache" : {

"bytes currently in the cache" : 1142,

"bytes read into cache" : 0,

"bytes written from cache" : 131,

"checkpoint blocked page eviction" : 0,

"data source pages selected for eviction unable to be evicted" : 0,

"eviction walk passes of a file" : 0,

"eviction walk target pages histogram - 0-9" : 0,

"eviction walk target pages histogram - 10-31" : 0,

"eviction walk target pages histogram - 128 and higher" : 0,

"eviction walk target pages histogram - 32-63" : 0,

"eviction walk target pages histogram - 64-128" : 0,

"eviction walks abandoned" : 0,

"eviction walks gave up because they restarted their walk twice" : 0,

"eviction walks gave up because they saw too many pages and found no candidates" : 0,

"eviction walks gave up because they saw too many pages and found too few candidates" : 0,

"eviction walks reached end of tree" : 0,

"eviction walks started from root of tree" : 0,

"eviction walks started from saved location in tree" : 0,

"hazard pointer blocked page eviction" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"modified pages evicted" : 0,

"overflow pages read into cache" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring cache overflow records" : 0,

"pages read into cache" : 0,

"pages read into cache after truncate" : 1,

"pages read into cache after truncate in prepare state" : 0,

"pages read into cache requiring cache overflow entries" : 0,

"pages requested from the cache" : 2,

"pages seen by eviction walk" : 0,

"pages written from cache" : 2,

"pages written requiring in-memory restoration" : 0,

"tracked dirty bytes in the cache" : 0,

"unmodified pages evicted" : 0

},

"cache_walk" : {

"Average difference between current eviction generation when the page was last considered" : 0,

"Average on-disk page image size seen" : 0,

"Average time in cache for pages that have been visited by the eviction server" : 0,

"Average time in cache for pages that have not been visited by the eviction server" : 0,

"Clean pages currently in cache" : 0,

"Current eviction generation" : 0,

"Dirty pages currently in cache" : 0,

"Entries in the root page" : 0,

"Internal pages currently in cache" : 0,

"Leaf pages currently in cache" : 0,

"Maximum difference between current eviction generation when the page was last considered" : 0,

"Maximum page size seen" : 0,

"Minimum on-disk page image size seen" : 0,

"Number of pages never visited by eviction server" : 0,

"On-disk page image sizes smaller than a single allocation unit" : 0,

"Pages created in memory and never written" : 0,

"Pages currently queued for eviction" : 0,

"Pages that could not be queued for eviction" : 0,

"Refs skipped during cache traversal" : 0,

"Size of the root page" : 0,

"Total number of pages currently in cache" : 0

},

"compression" : {

"compressed pages read" : 0,

"compressed pages written" : 0,

"page written failed to compress" : 0,

"page written was too small to compress" : 2,

"raw compression call failed, additional data available" : 0,

"raw compression call failed, no additional data available" : 0,

"raw compression call succeeded" : 0

},

"cursor" : {

"bulk-loaded cursor-insert calls" : 0,

"close calls that result in cache" : 0,

"create calls" : 1,

"cursor operation restarted" : 0,

"cursor-insert key and value bytes inserted" : 38,

"cursor-remove key bytes removed" : 0,

"cursor-update value bytes updated" : 0,

"cursors reused from cache" : 1,

"insert calls" : 1,

"modify calls" : 0,

"next calls" : 2,

"open cursor count" : 0,

"prev calls" : 1,

"remove calls" : 0,

"reserve calls" : 0,

"reset calls" : 5,

"search calls" : 0,

"search near calls" : 0,

"truncate calls" : 0,

"update calls" : 0

},

"reconciliation" : {

"dictionary matches" : 0,

"fast-path pages deleted" : 0,

"internal page key bytes discarded using suffix compression" : 0,

"internal page multi-block writes" : 0,

"internal-page overflow keys" : 0,

"leaf page key bytes discarded using prefix compression" : 0,

"leaf page multi-block writes" : 0,

"leaf-page overflow keys" : 0,

"maximum blocks required for a page" : 1,

"overflow values written" : 0,

"page checksum matches" : 0,

"page reconciliation calls" : 2,

"page reconciliation calls for eviction" : 0,

"pages deleted" : 0

},

"session" : {

"object compaction" : 0

},

"transaction" : {

"update conflicts" : 0

}

},

"nindexes" : 1,

"totalIndexSize" : 16384,

"indexSizes" : {

"_id_" : 16384

},

"ok" : 1,

"operationTime" : Timestamp(1557468182, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(0, 0),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"$configServerState" : {

"opTime" : {

"ts" : Timestamp(1557468186, 1),

"t" : NumberLong(1)

}

},

"$clusterTime" : {

"clusterTime" : Timestamp(1557468186, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

},

"ok" : 1,

"operationTime" : Timestamp(1557468186, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1557468186, 1),

"signature" : {

"hash" : BinData(0,"mYqJKszTfPJ4f1LSGHc/7SCjhSM="),

"keyId" : NumberLong("6684147943659798555")

}

}

}

Check whether the collection is sharded

mongos> db.handleResultMongo.stats().sharded

true
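Besides the sharded flag, the stats() output shown earlier also carries nchunks and a shards sub-document. As a minimal sketch, the helper below (describeSharding is a hypothetical name, not a shell built-in) pulls those fields into one short summary. The sample object is a trimmed-down copy of the output above so the snippet can run outside the shell.

```javascript
// Summarize the sharding-related fields of a stats() result.
function describeSharding(stats) {
  var shardNames = stats.shards ? Object.keys(stats.shards) : [];
  return {
    sharded: stats.sharded === true,
    nchunks: stats.nchunks || 0,
    shards: shardNames
  };
}

// Trimmed-down copy of the db.handleResultMongo.stats() output shown above.
var sampleStats = {
  sharded: true,
  ns: "scdataDB.handleResultMongo",
  count: 1,
  nchunks: 1,
  shards: { rs02: { count: 1, size: 37 } }
};

var summary = describeSharding(sampleStats);
// summary.sharded is true, summary.nchunks is 1, summary.shards is ["rs02"]

// In the mongo shell, the same helper could be fed live data:
// describeSharding(db.handleResultMongo.stats())
```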

Insert test data

use scdataDB

for (var i = 1; i <= 10000; i++) {
  db.handleResultMongo.insert({ x: i, name: "MACLEAN", name1: "MACLEAN", name2: "MACLEAN", name3: "MACLEAN" })
}
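The loop above sends one insert per document. As an alternative sketch, the documents can be built first and sent in one insertMany call. buildTestDocs is a hypothetical helper name, and the guard on db is only there so the snippet also runs outside the mongo shell, where it builds the array and skips the insert.

```javascript
// Build n test documents with the same shape as in the loop above.
function buildTestDocs(n) {
  var docs = [];
  for (var i = 1; i <= n; i++) {
    docs.push({
      x: i,
      name: "MACLEAN",
      name1: "MACLEAN",
      name2: "MACLEAN",
      name3: "MACLEAN"
    });
  }
  return docs;
}

var testDocs = buildTestDocs(10000);

// Only runs inside the mongo shell, where the global `db` exists.
if (typeof db !== "undefined") {
  db.getSiblingDB("scdataDB").handleResultMongo.insertMany(testDocs);
}
```

Using getSiblingDB avoids depending on which database the shell session currently has selected.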

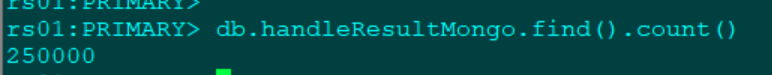

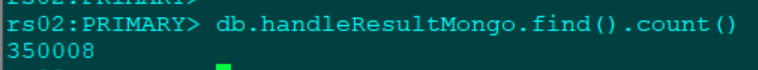

Check the data distribution on each shard

rs01

rs02

rs03
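The per-shard counts can also be read from mongos instead of connecting to each shard. The shell has a built-in db.collection.getShardDistribution() for this. The sketch below shows the same idea by hand, computing each shard's share from the shards sub-document of a stats() result. shardDistribution is a hypothetical helper, and the counts in the sample object are made up for illustration, not measured on this cluster.

```javascript
// Compute document count and percentage per shard from a stats() result.
function shardDistribution(stats) {
  var shards = stats.shards || {};
  var names = Object.keys(shards);
  var total = 0;
  for (var i = 0; i < names.length; i++) {
    total += shards[names[i]].count;
  }
  var result = {};
  for (var j = 0; j < names.length; j++) {
    var count = shards[names[j]].count;
    result[names[j]] = {
      count: count,
      // Percentage rounded to one decimal place; 0 when the collection is empty.
      percent: total === 0 ? 0 : Math.round((count * 1000) / total) / 10
    };
  }
  return result;
}

// Invented counts, only to exercise the helper.
var exampleStats = {
  shards: {
    rs01: { count: 2500 },
    rs02: { count: 5000 },
    rs03: { count: 2500 }
  }
};

var distribution = shardDistribution(exampleStats);
// distribution.rs02 is { count: 5000, percent: 50 }

// In the mongo shell: shardDistribution(db.handleResultMongo.stats())
```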


At first, the data had not been split across the shards:

Once sharding took effect, the output shows:

Drop the collection once testing is finished

db.handleResultMongo.drop()

Drop the test database

use test_db

db.dropDatabase()

Create accounts:

db.createUser({
  user: "datacenter_r",
  pwd: "******",
  roles: [{ role: "read", db: "scdataDB" }]
})

db.createUser({
  user: "datacenter_rw",
  pwd: "****",
  roles: [{ role: "readWrite", db: "scdataDB" }]
})
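db.createUser creates the user in whichever database the shell currently has selected, and that database becomes the user's authentication database. The sketch below assumes the accounts are meant to live in scdataDB, which the original does not state, and selects it explicitly with getSiblingDB. buildUserSpec is a hypothetical helper, and "<password>" is a placeholder to replace before running.

```javascript
// Build a createUser document granting one role on one database.
function buildUserSpec(user, pwd, role, dbName) {
  return {
    user: user,
    pwd: pwd,
    roles: [{ role: role, db: dbName }]
  };
}

var userSpecs = [
  buildUserSpec("datacenter_r", "<password>", "read", "scdataDB"),
  buildUserSpec("datacenter_rw", "<password>", "readWrite", "scdataDB")
];

// Only runs inside the mongo shell, where the global `db` exists.
if (typeof db !== "undefined") {
  // Assumption: the users should authenticate against scdataDB.
  var targetDb = db.getSiblingDB("scdataDB");
  userSpecs.forEach(function (spec) {
    targetDb.createUser(spec);
  });
}
```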

