[Installation Guide] A Step-by-Step Guide to Installing the TRex Traffic Generator on a VMware Virtual Machine (Ubuntu 18.04 @ Intel 82545EM)

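Preface

Update (2023-01-12): expanded the installation section (based on ESXi + TRex v2.87).

Since you already know about TRex and are searching for an installation tutorial, you presumably have some background (at least you know what you need), so this article keeps the introduction to TRex short.

This is mainly a record of one TRex installation (version v3.00). I have collected the problems I ran into along with how I approached them, and I hope it helps.

Introduction

TRex is a high-performance traffic tester open-sourced by Cisco that uses DPDK to send packets.

Its working principle, in brief:

- Build packet templates with scapy, or read packet templates from a pcap file;

- Send the packets with DPDK, rewriting only the fields specified as variable.

It thus combines the convenience of building streams in Python with the high performance of DPDK packet transmission.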
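To make the "template plus rewritten fields" idea concrete, here is a minimal sketch in plain Python. It is not how TRex or DPDK is implemented: the helper names (`build_template`, `rewrite_src`), the bare 20-byte IPv4 header without payload, and the addresses are all assumptions chosen for illustration. It builds one header template and then produces several packets by overwriting only the source address and the checksum.

```python
import socket
import struct

SRC_IP_OFFSET = 12    # byte offset of the source address in an IPv4 header
CHECKSUM_OFFSET = 10  # byte offset of the header checksum


def ipv4_checksum(header: bytes) -> int:
    """Ones'-complement checksum over a 20-byte IPv4 header."""
    total = sum(struct.unpack("!10H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_template(src: str, dst: str) -> bytearray:
    """Build a bare IPv4 header (hypothetical template, no payload)."""
    hdr = bytearray(struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0, 0, 64, 17, 0,
        socket.inet_aton(src), socket.inet_aton(dst)))
    struct.pack_into("!H", hdr, CHECKSUM_OFFSET, ipv4_checksum(bytes(hdr)))
    return hdr


def rewrite_src(template: bytearray, base: str, index: int) -> bytes:
    """Copy the template and rewrite only the variable field (source IP)."""
    pkt = bytearray(template)
    new_ip = (struct.unpack("!I", socket.inet_aton(base))[0] + index) & 0xFFFFFFFF
    struct.pack_into("!I", pkt, SRC_IP_OFFSET, new_ip)
    struct.pack_into("!H", pkt, CHECKSUM_OFFSET, 0)
    struct.pack_into("!H", pkt, CHECKSUM_OFFSET, ipv4_checksum(bytes(pkt)))
    return bytes(pkt)


template = build_template("16.0.0.1", "48.0.0.1")
packets = [rewrite_src(template, "16.0.0.1", i) for i in range(3)]
for p in packets:
    print(socket.inet_ntoa(p[12:16]))
```

Everything except the source address and checksum stays byte-for-byte identical to the template, which is why this style of generation is cheap per packet.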

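TRex installation

TRex runs on Linux, so there are only two kinds of installation environment: a physical machine or a virtual machine.

This article covers installing TRex in a virtual machine.

Virtual machine setups in turn fall into two cases:

- Local virtual machine: a VM built on a local VMware installation

- Remote server virtual machine: a remote VM built on VMware ESXi

The biggest difference between the two is in adding NICs: on VMware ESXi you need to use ifconfig so that the system recognizes the NICs; see [7].

The rest of the process is much the same. This article focuses on installing TRex on Ubuntu 18.04 in a local VMware VM.

If you run into problems installing on VMware ESXi, feel free to leave a comment.

Installing on a local virtual machine

For how to set up a local VMware VM (including switching package mirrors), search online or see [1].

I used VMware Workstation 16 Pro with the Ubuntu 18.04 desktop image.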

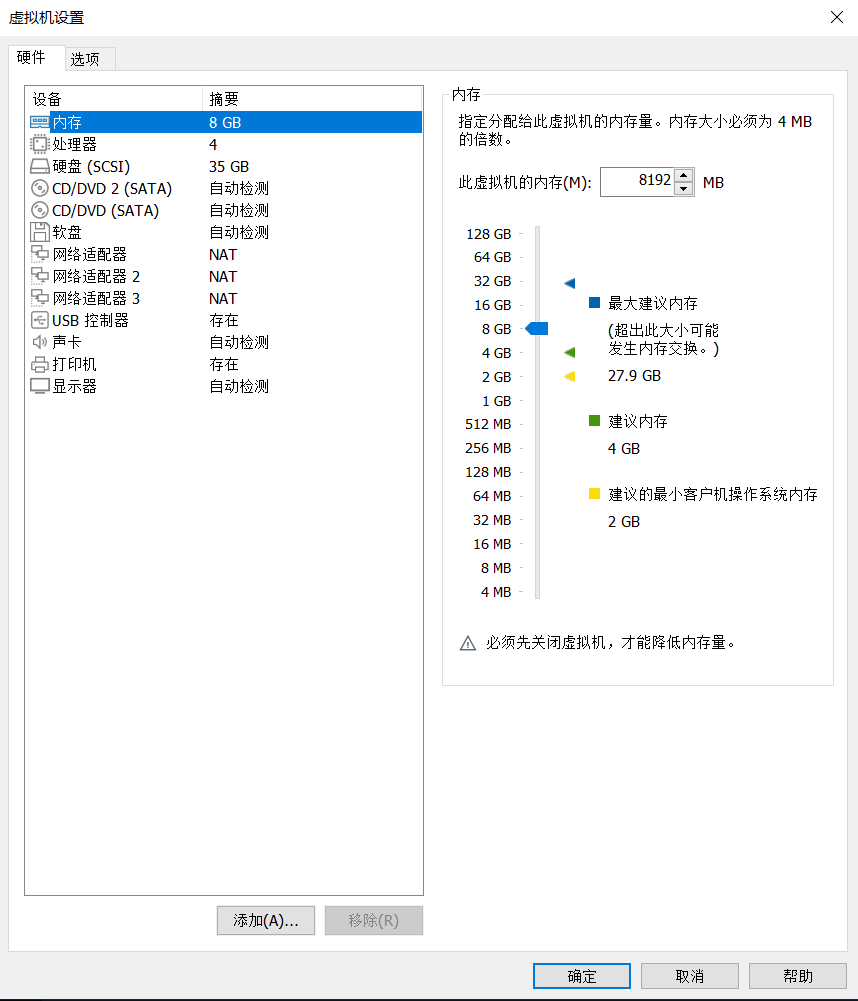

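Virtual machine configuration

Here is the VM configuration I used (if the image fails to load, see the table):

| Device | Summary |
| --- | --- |
| Memory | 8 GB |
| Processors | 4 |
| Hard disk (SCSI) | 35 GB |
| CD/DVD 2 (SATA) | Auto detect |
| CD/DVD (SATA) | Auto detect |
| Floppy | Auto detect |
| Network adapter | NAT |
| Network adapter 2 | NAT |
| Network adapter 3 | NAT |
| USB controller | Present |
| Sound card | Auto detect |
| Printer | Present |
| Display | Auto detect |

Note: the tests after installation need several NICs, so three network adapters are added here. One serves as the primary NIC for the Linux kernel; the other two are bound to the uio driver for testing.

NICs can be added to or removed from the VM at any time, as long as their mode stays NAT.

Once the VM is configured, I recommend connecting to it with VS Code, because later steps involve editing configuration files and this makes that easier. See: https://www.cnblogs.com/DAYceng/p/16867325.html

You can of course also work directly in the console that VMware provides.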

Installing the gcc environment

Install the gcc build environment:

root@ubuntu:#

sudo apt install build-essential

sudo apt install make

sudo apt-get install libnuma-dev

P.S. If this step fails, switch your apt mirror first.

Getting TRex

Create a folder in a directory of your choice to hold the installation files:

mkdir trex

cd trex

Download the latest TRex release and extract it:

root@ubuntu:/root/trex# wget --no-check-certificate https://trex-tgn.cisco.com/trex/release/latest

root@ubuntu:/root/trex# tar -zxvf latest

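Notes:

- Downloading the latest file is slow, even through a proxy. You can download it on your host from https://trex-tgn.cisco.com/trex/release/latest first and then copy it to the VM.

- If you do not want the latest version, pick a version to download at https://trex-tgn.cisco.com/trex/release

This gives the following directory contents: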

root@ubuntu:/root/trex# ls

latest v3.00

Enter the extracted folder and use the script to list the NICs currently available:

root@ubuntu:/root/trex# cd v3.00

root@ubuntu:/root/trex/v3.00#

root@ubuntu:/root/trex/v3.00#sudo ./dpdk_setup_ports.py -s

Network devices using DPDK-compatible driver

============================================

<none>

Network devices using kernel driver

===================================

0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper)' if=ens33 drv=e1000 unused=igb_uio,vfio-pci,uio_pci_generic *Active*

0000:02:06.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=e1000 unused=igb_uio,vfio-pci,uio_pci_generic

0000:02:07.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=e1000 unused=igb_uio,vfio-pci,uio_pci_generic

Other network devices

=====================

<none>

root@ubuntu:/root/trex/v3.00#

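If you added the NICs earlier, three NICs appear here (if not, you can still add them now). *Active* marks the NIC that the Linux kernel is currently using; the other two are not yet enabled (do not enable them for now). See [7] if needed.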

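Automatic installation with the script bundled with TRex

The folder extracted from the TRex release archive contains:

- The files TRex itself needs to run (support libraries, test scripts, and so on)

- A complete DPDK matching this TRex version

- The TRex Python API

So unless you have special requirements, running the automation script that TRex provides is enough to bind the existing ports.

Checking the current NIC ports

First determine which NIC ports are available. (The TRex version shown here is v2.87; the difference does not matter.)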

root@xxx:~/trex/v2.87# ./dpdk_setup_ports.py -s

Network devices using DPDK-compatible driver

============================================

<none>

Network devices using kernel driver

===================================

0000:03:00.0 'VMXNET3 Ethernet Controller' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic *Active*

0000:0b:00.0 'VMXNET3 Ethernet Controller' if=ens192 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic

0000:13:00.0 'VMXNET3 Ethernet Controller' if=ens224 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic

Other network devices

=====================

<none>

The NICs without *Active* are the ones available for use; note their PCI IDs.
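The rule above (any NIC line without *Active* is free to use) can be sketched as a small parser. This is only an illustrative helper, not part of TRex: the function name `free_pci_ids` and the regular expression are my own assumptions, and the sample text is copied from the listing above. Note that it simply skips *Active* lines, so ports already bound to a DPDK driver would be reported as well.

```python
import re

# Sample output copied from the `./dpdk_setup_ports.py -s` listing above.
SAMPLE = """\
Network devices using DPDK-compatible driver
============================================
<none>

Network devices using kernel driver
===================================
0000:03:00.0 'VMXNET3 Ethernet Controller' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic *Active*
0000:0b:00.0 'VMXNET3 Ethernet Controller' if=ens192 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic
0000:13:00.0 'VMXNET3 Ethernet Controller' if=ens224 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic
"""

# Optional 4-digit PCI domain, then bus:device.function.
PCI_LINE = re.compile(
    r"^(?:[0-9a-fA-F]{4}:)?([0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s")


def free_pci_ids(status_text):
    """Return short PCI IDs of NIC lines that are not marked *Active*."""
    ids = []
    for line in status_text.splitlines():
        match = PCI_LINE.match(line)
        if match and "*Active*" not in line:
            ids.append(match.group(1))
    return ids


print(free_pci_ids(SAMPLE))
```

The short form without the leading domain is the same form used in the interfaces list of the configuration file.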

Editing the configuration file

First copy the configuration file that TRex provides into the system directory:

sudo cp cfg/simple_cfg.yaml /etc/trex_cfg.yaml

sudo vim /etc/trex_cfg.yaml

Open the configuration file and fill in the NIC IDs found above:

- port_limit : 2

version : 2

#List of interfaces. Change to suit your setup. Use ./dpdk_setup_ports.py -s to see available options

interfaces : ["0b:00.0","13:00.0"]

port_info : # Port IPs. Change to suit your needs. In case of loopback, you can leave as is.

- ip : 1.1.1.1

default_gw : 2.2.2.2

- ip : 2.2.2.2

default_gw : 1.1.1.1

Save and exit.
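If you rebuild VMs often, the same file can be generated instead of edited by hand. The sketch below renders text in the layout shown above; `render_trex_cfg` is a hypothetical helper of mine, not a TRex tool, and it only covers the fields that appear in this article.

```python
def render_trex_cfg(pci_ids, port_info):
    """Render a minimal /etc/trex_cfg.yaml body.

    pci_ids   -- list of short PCI IDs, e.g. ["0b:00.0", "13:00.0"]
    port_info -- list of (ip, default_gw) tuples, one per port
    """
    if len(pci_ids) != len(port_info):
        raise ValueError("need one (ip, default_gw) pair per interface")
    quoted = ", ".join('"%s"' % pci for pci in pci_ids)
    lines = [
        "- port_limit : %d" % len(pci_ids),
        "  version : 2",
        "  interfaces : [%s]" % quoted,
        "  port_info :",
    ]
    for ip, gateway in port_info:
        lines.append("    - ip : %s" % ip)
        lines.append("      default_gw : %s" % gateway)
    return "\n".join(lines) + "\n"


cfg_text = render_trex_cfg(
    ["0b:00.0", "13:00.0"],
    [("1.1.1.1", "2.2.2.2"), ("2.2.2.2", "1.1.1.1")],
)
print(cfg_text)
```

Writing the result to /etc/trex_cfg.yaml still needs root privileges, so the sketch only prints it.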

Running the automatic setup script

root@xxx:~/trex/v2.87# ./dpdk_setup_ports.py

WARNING: tried to configure 2048 hugepages for socket 0, but result is: 177

Trying to bind to vfio-pci ...

Trying to compile and bind to igb_uio ...

ERROR: We don't have precompiled igb_uio.ko module for your kernel version.

Will try compiling automatically...

Success.

/usr/bin/python3 dpdk_nic_bind.py --bind=igb_uio 0000:0b:00.0 0000:13:00.0

The ports are bound/configured.

Checking the current port bindings

root@node-wazuh:~/trex/v2.87# ./dpdk_setup_ports.py -s

Network devices using DPDK-compatible driver

============================================

0000:0b:00.0 'VMXNET3 Ethernet Controller' drv=igb_uio unused=vmxnet3,vfio-pci,uio_pci_generic

0000:13:00.0 'VMXNET3 Ethernet Controller' drv=igb_uio unused=vmxnet3,vfio-pci,uio_pci_generic

Network devices using kernel driver

===================================

0000:03:00.0 'VMXNET3 Ethernet Controller' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci,uio_pci_generic *Active*

Other network devices

=====================

<none>

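Done. You can now go on to start TRex and test it.

Custom installation

DPDK is a core part of TRex, and most of the installation consists of binding NICs to DPDK.

A custom installation skips the DPDK bundled with TRex: you download the DPDK version you need and bind it yourself, which is much the same as installing DPDK standalone.

As the introduction mentioned, TRex can be roughly divided into two parts: one builds packets (based on scapy) and the other sends them (based on DPDK).

Installing the gcc environment

We start with the DPDK part. First install the gcc environment: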

root@ubuntu:/root/trex/v3.00#

sudo apt install build-essential

sudo apt install make

sudo apt-get install libnuma-dev

Downloading DPDK

root@ubuntu:/root/trex/v3.00# wget http://fast.dpdk.org/rel/dpdk-18.11.9.tar.xz

root@ubuntu:/root/trex/v3.00# tar xvJf dpdk-18.11.9.tar.xz

root@ubuntu:/root/trex/v3.00# cd dpdk-stable-18.11.9/

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9#

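Adding environment variables

Set the environment variables from inside the DPDK directory; otherwise the script will fail later when it builds the DPDK environment.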

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9# export RTE_SDK=`pwd`

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9# export DESTDIR=`pwd`

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9# export RTE_TARGET=x86_64-default-linuxapp-gcc

Loading the uio driver

# Load the uio driver

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9# modprobe uio

Installing DPDK with the script

Use the dpdk-setup.sh script to install:

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9# ./usertools/dpdk-setup.sh

------------------------------------------------------------------------------

RTE_SDK exported as /root/dpdk

------------------------------------------------------------------------------

----------------------------------------------------------

Step 1: Select the DPDK environment to build

----------------------------------------------------------

[1] arm64-armv8a-linuxapp-clang

[2] arm64-armv8a-linuxapp-gcc

[3] arm64-dpaa2-linuxapp-gcc

[4] arm64-dpaa-linuxapp-gcc

[5] arm64-stingray-linuxapp-gcc

[6] arm64-thunderx-linuxapp-gcc

[7] arm64-xgene1-linuxapp-gcc

[8] arm-armv7a-linuxapp-gcc

[9] i686-native-linuxapp-gcc

[10] i686-native-linuxapp-icc

[11] ppc_64-power8-linuxapp-gcc

[12] x86_64-native-bsdapp-clang

[13] x86_64-native-bsdapp-gcc

[14] x86_64-native-linuxapp-clang

[15] x86_64-native-linuxapp-gcc

[16] x86_64-native-linuxapp-icc

[17] x86_x32-native-linuxapp-gcc

----------------------------------------------------------

Step 2: Setup linuxapp environment

----------------------------------------------------------

[18] Insert IGB UIO module

[19] Insert VFIO module

[20] Insert KNI module

[21] Setup hugepage mappings for non-NUMA systems

[22] Setup hugepage mappings for NUMA systems

[23] Display current Ethernet/Crypto device settings

[24] Bind Ethernet/Crypto device to IGB UIO module

[25] Bind Ethernet/Crypto device to VFIO module

[26] Setup VFIO permissions

----------------------------------------------------------

Step 3: Run test application for linuxapp environment

----------------------------------------------------------

[27] Run test application ($RTE_TARGET/app/test)

[28] Run testpmd application in interactive mode ($RTE_TARGET/app/testpmd)

----------------------------------------------------------

Step 4: Other tools

----------------------------------------------------------

[29] List hugepage info from /proc/meminfo

----------------------------------------------------------

Step 5: Uninstall and system cleanup

----------------------------------------------------------

[30] Unbind devices from IGB UIO or VFIO driver

[31] Remove IGB UIO module

[32] Remove VFIO module

[33] Remove KNI module

[34] Remove hugepage mappings

[35] Exit Script

Option:

Step 1

Choose the build that matches your VM's environment. For example, my VM is a 64-bit Intel environment, so I chose [15] x86_64-native-linuxapp-gcc.

...

Installation in /root/dpdk/ complete

------------------------------------------------------------------------------

RTE_TARGET exported as x86_64-native-linuxapp-gcc

------------------------------------------------------------------------------

Press enter to continue ...

Note: if the output instead looks like the following (with the error "Installation cannot run with T defined and DESTDIR undefined"):

INSTALL-APP dpdk-test-eventdev

INSTALL-MAP dpdk-test-eventdev.map

Build complete [x86_64-native-linuxapp-gcc]

Installation cannot run with T defined and DESTDIR undefined

------------------------------------------------------------------------------

RTE_TARGET exported as x86_64-native-linuxapp-gcc

--------------------------------------------

Press enter to continue ...

In that case, set the environment variables described earlier (RTE_SDK, DESTDIR, RTE_TARGET) and run [15] again.
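A small pre-flight check can catch missing variables before you launch the script. This is a sketch of my own, not part of DPDK: the function name `missing_dpdk_vars` is hypothetical, and the list of required names is taken from the three exports shown in this article.

```python
import os

# The three variables exported earlier in this article.
REQUIRED_VARS = ("RTE_SDK", "RTE_TARGET", "DESTDIR")


def missing_dpdk_vars(env):
    """Return the required variable names that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_dpdk_vars(os.environ)
    if missing:
        print("Set these before running dpdk-setup.sh:", ", ".join(missing))
    else:
        print("All DPDK build variables are set.")
```

Run it in the same shell session in which you exported the variables, since exports do not carry over to a new terminal.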

Step 2

- Select [18] to load the igb_uio module

Unloading any existing DPDK UIO module

Loading DPDK UIO module

Press enter to continue ...

- Select [19] to load the VFIO module

Unloading any existing VFIO module

Loading VFIO module

chmod /dev/vfio

OK

Press enter to continue ...

- Select [20] to load the KNI module

Unloading any existing DPDK KNI module

Loading DPDK KNI module

Press enter to continue ...

- Select [21] to create hugepages

Option: 21

Removing currently reserved hugepages

Unmounting /mnt/huge and removing directory

Input the number of 2048kB hugepages

Example: to have 128MB of hugepages available in a 2MB huge page system,

enter '64' to reserve 64 * 2MB pages

Number of pages: 1024

Reserving hugepages

Creating /mnt/huge and mounting as hugetlbfs

Press enter to continue ...

- Select [24] to bind the PCI NICs

Option: 24

Network devices using kernel driver

===================================

0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens33 drv=e1000 unused=igb_uio,vfio-pci *Active*

0000:02:06.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens38 drv=e1000 unused=igb_uio,vfio-pci

0000:02:07.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens39 drv=e1000 unused=igb_uio,vfio-pci

No 'Crypto' devices detected

============================

No 'Eventdev' devices detected

==============================

No 'Mempool' devices detected

=============================

No 'Compress' devices detected

==============================

Enter PCI address of device to bind to IGB UIO driver: 02:06.0

OK

Press enter to continue ...

======================================================================

======================================================================

Option: 24

Network devices using DPDK-compatible driver

============================================

0000:02:06.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' drv=igb_uio unused=e1000,vfio-pci

Network devices using kernel driver

===================================

0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens33 drv=e1000 unused=igb_uio,vfio-pci *Active*

0000:02:07.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens39 drv=e1000 unused=igb_uio,vfio-pci

No 'Crypto' devices detected

============================

No 'Eventdev' devices detected

==============================

No 'Mempool' devices detected

=============================

No 'Compress' devices detected

==============================

Enter PCI address of device to bind to IGB UIO driver: 02:07.0

OK

Press enter to continue ...

A PCI NIC that shows drv=igb_uio has been bound successfully.

Step 3

Test by selecting [27] and [28]:

Option: 27

Enter hex bitmask of cores to execute test app on

Example: to execute app on cores 0 to 7, enter 0xff

bitmask: 0x3

Launching app

sudo: x86_64-default-linuxapp-gcc/app/test: command not found

Press enter to continue ...

Option: 28

Enter hex bitmask of cores to execute testpmd app on

Example: to execute app on cores 0 to 7, enter 0xff

bitmask: 0x3

Launching app

sudo: x86_64-default-linuxapp-gcc/app/testpmd: command not found

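Both tests may fail with a "command not found" error.

For [27] the error can be ignored. If [28] fails this way, exit the script (option [35]), find testpmd under /[your DPDK directory]/x86_64-native-linuxapp-gcc/app, and run it directly. Note that the failing path in the error starts with x86_64-default-linuxapp-gcc, the RTE_TARGET value exported earlier, while the build output is under x86_64-native-linuxapp-gcc. If all goes well, you will see output like the following: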

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9/x86_64-native-linuxapp-gcc/app# ./testpmd

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: No free hugepages reported in hugepages-1048576kB

EAL: Probing VFIO support...

EAL: VFIO support initialized

EAL: PCI device 0000:02:01.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:02:06.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:02:07.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

testpmd: create a new mbuf pool <mbuf_pool_socket_0>: n=171456, size=2176, socket=0

testpmd: preferred mempool ops selected: ring_mp_mc

Configuring Port 0 (socket 0)

Port 0: 00:0C:29:AE:BF:4D

Configuring Port 1 (socket 0)

Port 1: 00:0C:29:AE:BF:43

Checking link statuses...

Done

No commandline core given, start packet forwarding

io packet forwarding - ports=2 - cores=1 - streams=2 - NUMA support enabled, MP allocation mode: native

Logical Core 1 (socket 0) forwards packets on 2 streams:

RX P=0/Q=0 (socket 0) -> TX P=1/Q=0 (socket 0) peer=02:00:00:00:00:01

RX P=1/Q=0 (socket 0) -> TX P=0/Q=0 (socket 0) peer=02:00:00:00:00:00

io packet forwarding packets/burst=32

nb forwarding cores=1 - nb forwarding ports=2

port 0: RX queue number: 1 Tx queue number: 1

Rx offloads=0x0 Tx offloads=0x0

RX queue: 0

RX desc=256 - RX free threshold=0

RX threshold registers: pthresh=0 hthresh=0 wthresh=0

RX Offloads=0x0

TX queue: 0

TX desc=256 - TX free threshold=0

TX threshold registers: pthresh=0 hthresh=0 wthresh=0

TX offloads=0x0 - TX RS bit threshold=0

port 1: RX queue number: 1 Tx queue number: 1

Rx offloads=0x0 Tx offloads=0x0

RX queue: 0

RX desc=256 - RX free threshold=0

RX threshold registers: pthresh=0 hthresh=0 wthresh=0

RX Offloads=0x0

TX queue: 0

TX desc=256 - TX free threshold=0

TX threshold registers: pthresh=0 hthresh=0 wthresh=0

TX offloads=0x0 - TX RS bit threshold=0

Press enter to exit

Telling cores to stop...

Waiting for lcores to finish...

---------------------- Forward statistics for port 0 ----------------------

RX-packets: 44 RX-dropped: 0 RX-total: 44

TX-packets: 34 TX-dropped: 0 TX-total: 34

----------------------------------------------------------------------------

---------------------- Forward statistics for port 1 ----------------------

RX-packets: 59 RX-dropped: 0 RX-total: 59

TX-packets: 24 TX-dropped: 0 TX-total: 24

----------------------------------------------------------------------------

+++++++++++++++ Accumulated forward statistics for all ports+++++++++++++++

RX-packets: 103 RX-dropped: 0 RX-total: 103

TX-packets: 58 TX-dropped: 0 TX-total: 58

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Done.

Stopping port 0...

Stopping ports...

Done

Stopping port 1...

Stopping ports...

Done

Shutting down port 0...

Closing ports...

Done

Shutting down port 1...

Closing ports...

Done

Bye...
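Options [27] and [28] above ask for a hex bitmask of cores, where bit n stands for core n (so 0x3 means cores 0 and 1, and 0xff means cores 0 to 7, as the script's own prompt says). The conversion can be sketched as below; the function names are my own and are not part of DPDK.

```python
def cores_to_bitmask(cores):
    """Turn an iterable of core indices into a hex bitmask string."""
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError("core index must be non-negative")
        mask |= 1 << core  # set bit number `core`
    return hex(mask)


def bitmask_to_cores(mask):
    """Turn a hex bitmask string such as '0x3' into a list of core indices."""
    value = int(mask, 16)
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


print(cores_to_bitmask([0, 1]))
print(cores_to_bitmask(range(8)))
print(bitmask_to_cores("0x3"))
```

On the 4-processor VM used here, any mask up to 0xf refers to cores that actually exist.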

Possible problems when running testpmd

Hugepage capacity

When running the tests or testpmd, you may hit the following problem:

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9/x86_64-native-linuxapp-gcc/app# ./testpmd

EAL: Detected 8 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: No free hugepages reported in hugepages-2048kB

EAL: No free hugepages reported in hugepages-2048kB

EAL: FATAL: Cannot get hugepage information.

EAL: Cannot get hugepage information.

PANIC in main():

Cannot init EAL

5: [./testpmd(_start+0x29) [0x498829]]

4: [/lib/x86_64-linux-gnu/libc.so.6(__libc_start_main+0xf0) [0x7f8a0fee0830]]

3: [./testpmd(main+0xc48) [0x48f528]]

2: [./testpmd(__rte_panic+0xbb) [0x47eb09]]

1: [./testpmd(rte_dump_stack+0x2b) [0x5c8a1b]]

Aborted (core dumped)

This means there are not enough hugepages. First check the system's memory status:

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9/x86_64-native-linuxapp-gcc/app# cat /proc/meminfo | grep Huge

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

FileHugePages: 0 kB

HugePages_Total: 1024

HugePages_Free: 775

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 2097152 kB

If there are not enough, adjust the count as needed:

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9/x86_64-native-linuxapp-gcc/build/kernel/linux# echo 2048 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

root@ubuntu:/root/trex/v3.00/dpdk-stable-18.11.9/x86_64-native-linuxapp-gcc/build/kernel/linux# cat /proc/meminfo | grep Huge

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

FileHugePages: 0 kB

HugePages_Total: 1448

HugePages_Free: 1199

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 2965504 kB
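The numbers in this output are related by simple arithmetic: HugePages_Total × Hugepagesize gives the Hugetlb figure (1448 × 2048 kB = 2965504 kB). Note also that the listing shows 1448 pages even though 2048 were requested, so it is worth reading the value back after writing it. The sketch below parses such text and does the multiplication; the function names are hypothetical and the sample is copied from the listing above.

```python
SAMPLE = """\
AnonHugePages: 0 kB
ShmemHugePages: 0 kB
FileHugePages: 0 kB
HugePages_Total: 1448
HugePages_Free: 1199
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 2048 kB
Hugetlb: 2965504 kB
"""

WANTED_PREFIXES = ("HugePages_", "Hugepagesize", "Hugetlb")


def parse_hugepages(meminfo_text):
    """Extract the hugepage counters from /proc/meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if key.strip().startswith(WANTED_PREFIXES) and parts:
            fields[key.strip()] = int(parts[0])
    return fields


def hugepage_summary(meminfo_text):
    """Return (total_kB, free_kB) computed from page counts and page size."""
    f = parse_hugepages(meminfo_text)
    page_kb = f["Hugepagesize"]
    return f["HugePages_Total"] * page_kb, f["HugePages_Free"] * page_kb


total_kb, free_kb = hugepage_summary(SAMPLE)
print(total_kb, free_kb)
```

On a live system you could pass the contents of /proc/meminfo instead of the sample string.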

Running test [28] from the script now produces the following output:

Option: 28

Enter hex bitmask of cores to execute testpmd app on

Example: to execute app on cores 0 to 7, enter 0xff

bitmask: 0x3

Launching app

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: No free hugepages reported in hugepages-1048576kB

EAL: Probing VFIO support...

EAL: VFIO support initialized

EAL: PCI device 0000:02:01.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:02:06.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:02:07.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

Interactive-mode selected

testpmd: create a new mbuf pool <mbuf_pool_socket_0>: n=155456, size=2176, socket=0

testpmd: preferred mempool ops selected: ring_mp_mc

Configuring Port 0 (socket 0)

Port 0: 00:0C:29:AE:BF:4D

Configuring Port 1 (socket 0)

Port 1: 00:0C:29:AE:BF:43

Checking link statuses...

Done

testpmd>

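This is normal, including the message "EAL: No free hugepages reported in hugepages-1048576kB".

After that, running testpmd from /[your DPDK directory]/x86_64-native-linuxapp-gcc/app gives results consistent with those shown in this article.

82545EM virtual NIC issue

If the NIC is an 82545EM (the model the VMware guest sees in this setup, as the listings above show), DPDK's support for it inside the VM is problematic.

The error is "EAL: Error reading from file descriptor 33: Input/output error".

See the analysis in [5] for details.

To bypass the compatibility check that fails for the 82545EM, we need to modify the source file of the igb_uio kernel module, igb_uio.c.

The file is located at /[your DPDK directory]/kernel/linux/igb_uio/igb_uio.c

Open it with VS Code (or vim).

Locate

if (pci_intx_mask_supported(udev->pdev)), at around line 260,

and change it to if (pci_intx_mask_supported(udev->pdev) || 1), as follows: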

#endif

/* falls through - to INTX (condition below is modified) */

case RTE_INTR_MODE_LEGACY:

if (pci_intx_mask_supported(udev->pdev)|| 1) {

dev_dbg(&udev->pdev->dev, "using INTX");

udev->info.irq_flags = IRQF_SHARED | IRQF_NO_THREAD;

udev->info.irq = udev->pdev->irq;

udev->mode = RTE_INTR_MODE_LEGACY;

break;

}

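Then rebuild. Load uio:

modprobe uio

and rerun option [15] in the script to rebuild and install the environment.

That resolves the problem.

Starting TRex

Edit the configuration file (needed if you did not do this during installation, for example if you used the custom installation):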

sudo cp cfg/simple_cfg.yaml /etc/trex_cfg.yaml

sudo vim /etc/trex_cfg.yaml

- port_limit : 2

version : 2

#List of interfaces. Change to suit your setup. Use ./dpdk_setup_ports.py -s to see available options

interfaces : ["02:06.0","02:07.0"] # add the NICs bound to the uio driver earlier

port_info : # Port IPs. Change to suit your needs. In case of loopback, you can leave as is.

- ip : 1.1.1.1

default_gw : 2.2.2.2

- ip : 2.2.2.2

default_gw : 1.1.1.1

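Open two terminals and run the following commands, one for the TRex server (stateless by default) and one for the console.

Starting in ASTF mode

root@ubuntu:sudo ./t-rex-64 -i --astf -f /path/to/astf/file.py -m 1000 -k 10 -l 1000 -d 60

Starting in STL mode

root@ubuntu:sudo ./t-rex-64 -i --stl

Default startup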

root@ubuntu:/root/trex/v3.00# sudo ./t-rex-64 -i

root@ubuntu:/root/trex/v3.00# sudo ./trex-console

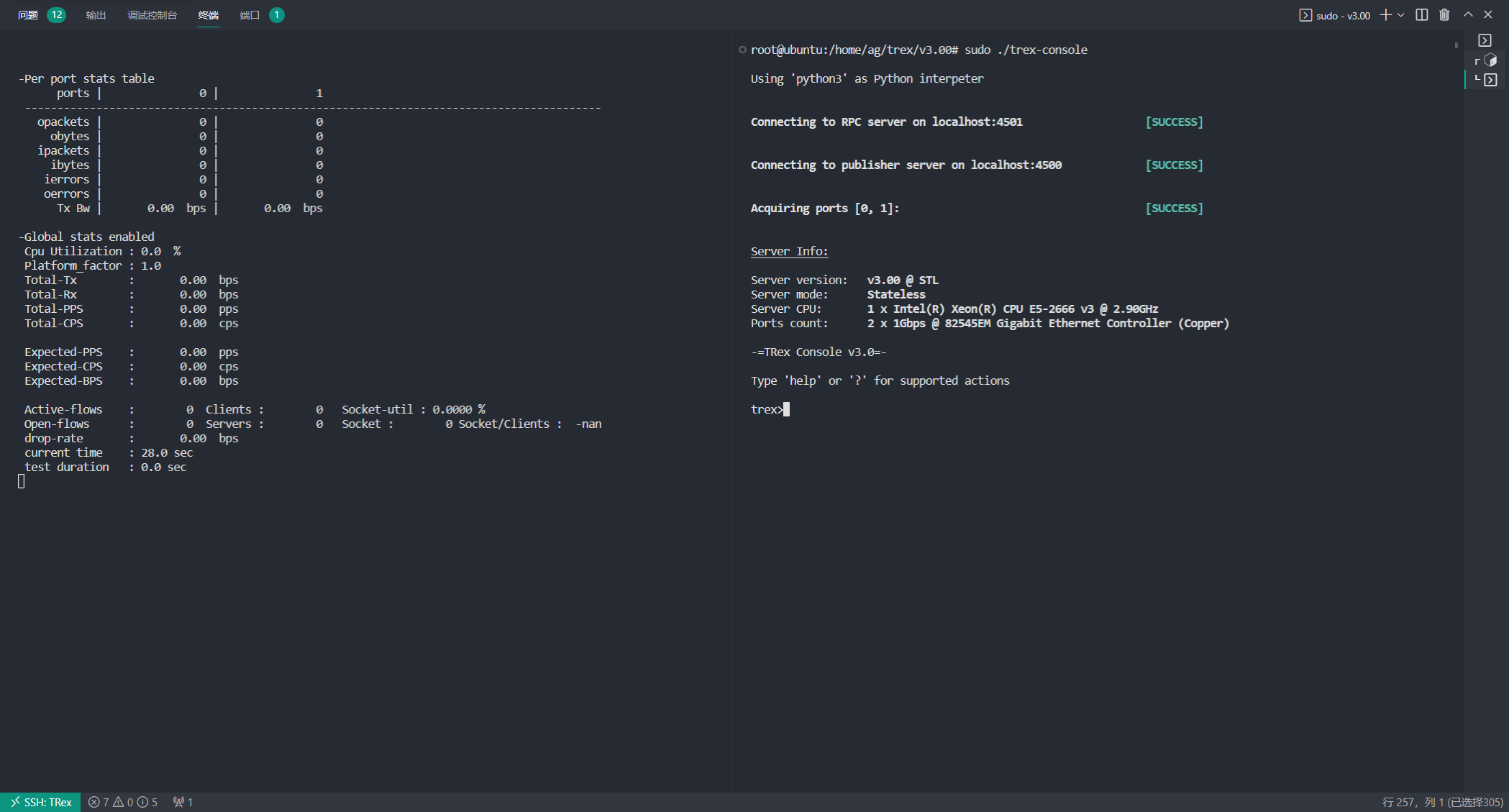

The result looks like this (if the image fails to load, see the code block):

## Server startup

-Per port stats table

ports | 0 | 1

-----------------------------------------------------------------------------------------

opackets | 0 | 0

obytes | 0 | 0

ipackets | 0 | 0

ibytes | 0 | 0

ierrors | 0 | 0

oerrors | 0 | 0

Tx Bw | 0.00 bps | 0.00 bps

-Global stats enabled

Cpu Utilization : 0.0 %

Platform_factor : 1.0

Total-Tx : 0.00 bps

Total-Rx : 0.00 bps

Total-PPS : 0.00 pps

Total-CPS : 0.00 cps

Expected-PPS : 0.00 pps

Expected-CPS : 0.00 cps

Expected-BPS : 0.00 bps

Active-flows : 0 Clients : 0 Socket-util : 0.0000 %

Open-flows : 0 Servers : 0 Socket : 0 Socket/Clients : -nan

drop-rate : 0.00 bps

current time : 63.5 sec

test duration : 0.0 sec

*** TRex is shutting down - cause: 'CTRL + C detected'

All cores stopped !!

Killing Scapy server... Scapy server is killed

root@ubuntu:/root/trex/v3.00#

## Console startup

Using 'python3' as Python interpeter

Connecting to RPC server on localhost:4501 [SUCCESS]

Connecting to publisher server on localhost:4500 [SUCCESS]

Acquiring ports [0, 1]: [SUCCESS]

Server Info:

Server version: v3.00 @ STL

Server mode: Stateless

Server CPU: 1 x Intel(R) Xeon(R) CPU E5-2666 v3 @ 2.90GHz

Ports count: 2 x 1Gbps @ 82545EM Gigabit Ethernet Controller (Copper)

-=TRex Console v3.0=-

Type 'help' or '?' for supported actions

trex>quit

Shutting down RPC client

root@ubuntu:/home/ag/trex/v3.00#

TRex throughput test

Start the TRex server and the client (sudo is required; otherwise the rate falls short of the target):

sudo ./t-rex-64 --no-ofed-check -i -c 24 # start with 24 cores

sudo ./trex-console

Send traffic

start -f stl/udp_1pkt_pcap.py -m 100mbps -p 0 --force

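Both port 0 and port 1 can be used to send traffic, as shown in the figure.

About the packet loss rate

According to this issue: https://github.com/cisco-system-traffic-generator/trex-core/issues/765

our packet loss rate is high because we only sent traffic and did not receive any.

Running TRex with a simple HTTP profile (ASTF)

To send simple HTTP traffic, the following profile defines one HTTP template: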

from trex.astf.api import *


class Prof1():

    def get_profile(self):
        # ip generator
        ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"],
                                 distribution="seq")
        ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"],
                                 distribution="seq")
        # tuple-generator ranges for the client side and the server side
        ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),
                           dist_client=ip_gen_c,
                           dist_server=ip_gen_s)

        # list of templates, each with a relative CPS (connections per second)
        return ASTFProfile(default_ip_gen=ip_gen,
                           cap_list=[ASTFCapInfo(
                               file="../avl/delay_10_http_browsing_0.pcap",
                               cps=1)
                           ])


def register():
    return Prof1()
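To get a feel for the two ip_range values in the profile, the sketch below counts the addresses in each range using only the standard library. It says nothing about how TRex actually allocates addresses from the pools (for example whether every address is used); `pool_size` is a hypothetical helper for the arithmetic only.

```python
import ipaddress


def pool_size(first, last):
    """Number of IPv4 addresses in the inclusive range [first, last]."""
    start = int(ipaddress.IPv4Address(first))
    end = int(ipaddress.IPv4Address(last))
    if end < start:
        raise ValueError("range end is before range start")
    return end - start + 1


client_pool = pool_size("16.0.0.0", "16.0.0.255")
server_pool = pool_size("48.0.0.0", "48.0.255.255")
print(client_pool, server_pool)
```

So the profile above spans 256 client-side addresses and 65536 server-side addresses.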

Running TRex interactively with this profile (v2.47 and later)

Start ASTF in interactive mode

sudo ./t-rex-64 -i --astf

Start the console

./trex-console -s [server-ip]

From the console, enter

trex>start -f astf/http_simple.py -m 1000 -d 1000 -l 1000

trex>tui

trex>[press] t/l for astf statistics and latency

trex>stop

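That completes the TRex installation.

This only amounts to a "hello world", though; for more on usage, see [0].

Thanks to the various communities for sharing their material. The references are listed below.

References and notes

[0] TRex official documentation: https://trex-tgn.cisco.com/trex/doc/trex_manual.html#_hardware_recommendations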

[1]https://www.cnblogs.com/hanyanling/p/13364204.html

[2]http://www.isimble.com/2018/11/15/dpdk-setup/

[3]https://blog.51cto.com/feishujun/5573292

[4]https://dev.to/dannypsnl/dpdk-eal-input-output-error-1kn4

[5]https://blog.csdn.net/Longyu_wlz/article/details/121443906

[6]https://blog.csdn.net/yb890102/article/details/127587910

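[7] Note: a NIC that has not been brought up does not appear in the output of ifconfig. Use ip addr to find the NIC first, then activate it; the NIC then shows as *Active*.

# Activate the NIC

ifconfig [NIC name] up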

sudo vim /home/ag/trex/v3.00/stl/simple_3st.py
